Compute-storage separation is an important feature of cloud-native architectures. Computing is typically CPU-intensive, while storage is typically I/O-intensive, so the two have different hardware requirements. In the traditional hybrid architecture, where compute and storage share the same machines, a single machine type has to balance CPU capacity against storage capacity, which sacrifices flexibility and increases costs. When compute and storage are separated, we can configure compute and storage models independently, which gives greater flexibility and reduces costs.

Compute-storage separation is a new hardware architecture. However, existing systems were designed for the hybrid architecture, so they must be adapted to take full advantage of the separation architecture; otherwise, problems may occur. For example, many systems assume that the local disk is large enough, but the local disk of a compute node is small. As another example, the locality optimizations of some systems do not apply in a separation architecture, and Spark Shuffle is a typical case. The following figure shows the shuffle process:

Each Mapper sorts all of its shuffle data by partitionId and writes it to a single local data file. At the same time, it saves an index file that records the offset and length of each partition. Each reduce task then pulls its partition of the shuffle data from all map nodes. Shuffle data at the TB scale is common in big data scenarios, which requires large local disks and therefore conflicts with the compute-storage separation architecture. It is therefore necessary to redesign the traditional shuffle process and offload shuffle data to the storage nodes.
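To make the data-file-plus-index layout concrete, here is a minimal in-memory sketch of sort-based map output. The function names and the byte layout are illustrative assumptions, not Spark's actual file format; the point is only that a single sorted data file plus one (offset, length) entry per partition lets a reducer read exactly its own slice.

```python
from typing import List, Tuple


def write_map_output(records: List[Tuple[int, bytes]],
                     num_partitions: int) -> Tuple[bytes, List[Tuple[int, int]]]:
    """Sort records by partitionId and lay them out in one data 'file'.

    Returns the data bytes plus an index holding one (offset, length) entry
    per partition, including empty partitions (hypothetical sketch).
    """
    ordered = sorted(records, key=lambda r: r[0])  # stable sort by partitionId
    data = bytearray()
    index: List[Tuple[int, int]] = []
    pos = 0
    for pid in range(num_partitions):
        start = len(data)
        # After sorting, all records of this partition are contiguous.
        while pos < len(ordered) and ordered[pos][0] == pid:
            data.extend(ordered[pos][1])
            pos += 1
        index.append((start, len(data) - start))
    return bytes(data), index


def read_partition(data: bytes, index: List[Tuple[int, int]], pid: int) -> bytes:
    """What one reduce task fetches from one Mapper: a single slice of the data file."""
    offset, length = index[pid]
    return data[offset:offset + length]


records = [(2, b"cc"), (0, b"a"), (2, b"d"), (1, b"bb")]
data, index = write_map_output(records, num_partitions=3)
print(index)                           # [(0, 1), (1, 2), (3, 3)]
print(read_partition(data, index, 2))  # b'ccd'
```

Because every Mapper produces such a file, each reducer has to contact every map node, which is where the local-disk and small-request problems come from.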

Even in the traditional hybrid architecture, the current shuffle implementation has major defects: it generates a large number of random reads and writes, and many network transfers that each carry only a small amount of data. Consider a stage with 1,000 Mappers and 2,000 Reducers in which each Mapper writes 128 MB of shuffle data. Each Reducer then fetches only about 64 KB (128 MB / 2,000) from each Mapper, which is a very poor access pattern: each disk I/O request randomly reads only 64 KB, and each network request transmits only 64 KB. This causes many stability and performance problems, so shuffle needs to be refactored even in a hybrid architecture.
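The numbers in this example can be checked with a few lines of arithmetic. The sketch assumes 1 MB = 2^20 bytes and that each Mapper's output is spread evenly across all Reducers; real partitions are rarely perfectly uniform.

```python
MB = 1024 * 1024  # assumption: 1 MB = 2**20 bytes


def shuffle_fanout(num_mappers: int, num_reducers: int, bytes_per_mapper: int):
    """Return (bytes per map-to-reduce block, total number of block fetches)."""
    block_bytes = bytes_per_mapper / num_reducers  # one Mapper's output split across all Reducers
    fetches = num_mappers * num_reducers           # every Reducer fetches one block from every Mapper
    return block_bytes, fetches


block_bytes, fetches = shuffle_fanout(1000, 2000, 128 * MB)
print(f"{block_bytes / 1024:.1f} KiB per block, {fetches:,} fetches")
# 65.5 KiB per block, 2,000,000 fetches
```

So the stage performs two million small random reads and two million small network requests, each carrying roughly 64 KB.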

E-MapReduce Remote Shuffle Service (ESS) from Alibaba Cloud can solve the above problems. ESS is an extension provided by E-MapReduce (EMR) to solve the shuffle stability and performance problems under the compute-storage separation and hybrid architectures.

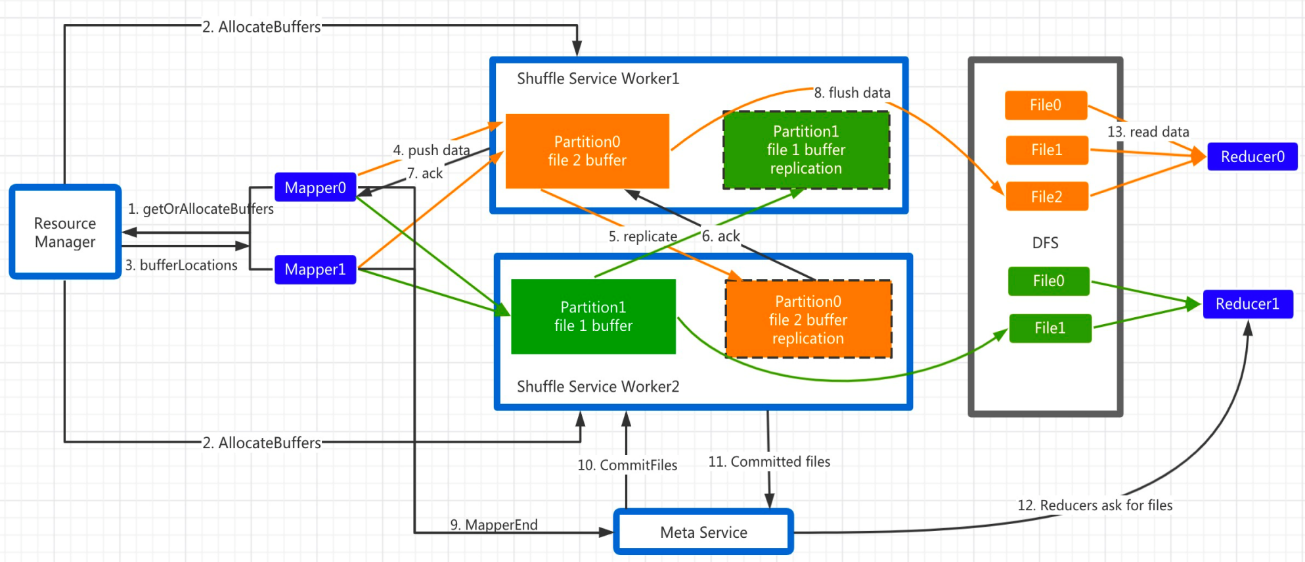

ESS has three main roles: Master, Worker, and Client. The Master and Worker constitute the server. The Client integrates into Spark in a non-intrusive manner. The Master is responsible for resource allocation and state management. The Worker processes and stores shuffle data. Lastly, the Client caches and pushes shuffle data. The following figure shows the overall process of ESS (ResourceManager and MetaService are the components of the Master):

ESS uses a push-style shuffle. Each Mapper maintains a cache that is divided by partition, and shuffle data is written to this cache first. A PushData request is triggered when the cache of a partition is full.
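A minimal sketch of the map-side, per-partition cache follows. The class name, the byte-count capacity, and the `push` callback (a stand-in for sending a PushData request) are hypothetical; flushing leftover data when the map task ends is also an assumption, since the text above only describes the cache-full trigger.

```python
from collections import defaultdict
from typing import Callable, Dict


class PartitionedPushBuffer:
    """Hypothetical map-side cache divided by partition."""

    def __init__(self, capacity: int, push: Callable[[int, bytes], None]) -> None:
        self.capacity = capacity  # bytes per partition before a push is triggered
        self.push = push          # stand-in for sending a PushData request
        self.buffers: Dict[int, bytearray] = defaultdict(bytearray)

    def write(self, partition_id: int, record: bytes) -> None:
        buf = self.buffers[partition_id]
        buf.extend(record)
        if len(buf) >= self.capacity:  # this partition's cache is full
            self.push(partition_id, bytes(buf))
            buf.clear()

    def flush_all(self) -> None:
        # Assumption: leftover data is pushed when the map task finishes.
        for partition_id, buf in self.buffers.items():
            if buf:
                self.push(partition_id, bytes(buf))
                buf.clear()


pushed = []
buffer = PartitionedPushBuffer(4, lambda pid, data: pushed.append((pid, data)))
buffer.write(0, b"ab")
buffer.write(1, b"x")
buffer.write(0, b"cd")  # partition 0 reaches 4 bytes -> PushData
buffer.flush_all()
print(pushed)           # [(0, b'abcd'), (1, b'x')]
```

Batching per partition is what turns many tiny writes into fewer, larger pushes.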

Before triggering PushData, the Client checks whether it already has the location information for the partition locally; the location specifies the target Worker address of each partition. If the location does not exist, the Client sends a getOrAllocateBuffers request to the Master. On receiving this request, the Master checks whether buffers have already been allocated. If not, it selects a pair of Workers (a primary and a secondary replica) based on current resource usage and sends the AllocateBuffers instruction to both. On receiving the instruction, each Worker records the metadata and allocates memory for caching. After receiving the acks from the Workers, the Master returns the location of the primary copy to the Client.
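The lookup-then-allocate flow can be sketched as follows. `ToyMaster`, `ToyClient`, and the method names are hypothetical (only getOrAllocateBuffers and AllocateBuffers come from the text), and a seeded random pair of Workers stands in for the resource-aware selection ESS performs.

```python
import random
from typing import Dict, List, Tuple


class ToyMaster:
    def __init__(self, workers: List[str], seed: int = 0) -> None:
        self.workers = workers
        self.rng = random.Random(seed)
        # (shuffleId, partitionId) -> (primary, secondary)
        self.locations: Dict[Tuple[int, int], Tuple[str, str]] = {}

    def get_or_allocate_buffers(self, shuffle_id: int, partition_id: int) -> str:
        key = (shuffle_id, partition_id)
        if key not in self.locations:
            # ESS chooses based on current resources; a random pair is a stand-in.
            primary, secondary = self.rng.sample(self.workers, 2)
            # Here the Master would send AllocateBuffers to both Workers and wait for acks.
            self.locations[key] = (primary, secondary)
        return self.locations[key][0]  # only the primary location goes back to the Client


class ToyClient:
    def __init__(self, master: ToyMaster) -> None:
        self.master = master
        self.local_locations: Dict[Tuple[int, int], str] = {}
        self.master_calls = 0

    def location_for(self, shuffle_id: int, partition_id: int) -> str:
        key = (shuffle_id, partition_id)
        if key not in self.local_locations:  # no local location yet: ask the Master
            self.master_calls += 1
            self.local_locations[key] = self.master.get_or_allocate_buffers(shuffle_id, partition_id)
        return self.local_locations[key]


master = ToyMaster(["w1", "w2", "w3"])
client = ToyClient(master)
first = client.location_for(0, 5)
second = client.location_for(0, 5)  # served from the Client's local cache
print(first == second, client.master_calls)  # True 1
```

Caching locations on the Client keeps the Master off the hot path once a partition has been allocated.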

The Client then starts pushing data to the primary copy. On receiving a request, the primary Worker caches the data in local memory and forwards the request to the secondary copy in a pipelined manner. The secondary sends an ack to the primary as soon as it has received the complete data, and the primary then immediately acks the Client.
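The ack ordering can be illustrated with a synchronous sketch. This is a simplification under stated assumptions: `ReplicaWorker` is hypothetical, and direct method calls replace the network pipeline that ESS actually uses between the primary and secondary.

```python
from typing import List, Optional


class ReplicaWorker:
    def __init__(self, name: str, log: List[str], peer: Optional["ReplicaWorker"] = None) -> None:
        self.name = name
        self.log = log    # shared event log, used only to show ack ordering
        self.peer = peer  # the secondary copy, or None if this is the secondary
        self.memory: List[bytes] = []

    def push_data(self, data: bytes) -> bool:
        self.memory.append(data)  # cache in local memory
        if self.peer is not None and not self.peer.push_data(data):
            return False          # secondary failed: no ack to the Client
        self.log.append(f"{self.name} ack")
        return True


log: List[str] = []
secondary = ReplicaWorker("secondary", log)
primary = ReplicaWorker("primary", log, peer=secondary)
ok = primary.push_data(b"batch-0")
print(ok, log)  # True ['secondary ack', 'primary ack']
```

Because the primary acks only after the secondary has acked, an ack received by the Client implies that both replicas hold the data in memory.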

To avoid blocking PushData requests, the Worker first puts each incoming PushData request into a queue, which is processed asynchronously by a dedicated thread pool. The data is then copied into the pre-allocated buffer of the partition it belongs to, and a flush is triggered when the buffer is full. ESS supports multiple storage backends, including DFS and Local. If the backend is DFS, only one of the primary and secondary copies is flushed, and fault tolerance of the flushed data relies on the DFS's own replicas. If the backend is Local, both the primary and secondary copies are flushed.
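Here is a minimal sketch of the Worker side, assuming a single background thread in place of the dedicated thread pool and an in-memory list in place of files. `ShuffleWorker` and its fields are hypothetical, and choosing the primary as the copy that flushes in DFS mode is an assumption, since the text only says that one of the two copies is flushed.

```python
import queue
import threading
from collections import defaultdict
from typing import Dict, List, Tuple


class ShuffleWorker:
    """Hypothetical Worker: PushData is queued, then copied into per-partition buffers."""

    def __init__(self, is_primary: bool, backend: str, buffer_size: int) -> None:
        self.is_primary = is_primary
        self.backend = backend  # "DFS" or "Local"
        self.buffer_size = buffer_size
        self.buffers: Dict[int, bytearray] = defaultdict(bytearray)
        self.flushed: List[Tuple[int, bytes]] = []  # stands in for files on storage
        self.requests: "queue.Queue" = queue.Queue()
        # Simplification: one daemon thread instead of a thread pool.
        threading.Thread(target=self._run, daemon=True).start()

    def push_data(self, partition_id: int, data: bytes) -> None:
        # Enqueue and return immediately so the request path is not blocked.
        self.requests.put((partition_id, data))

    def _should_flush(self) -> bool:
        # DFS: only one copy (assumed: the primary) is flushed. Local: both copies are.
        return self.backend == "Local" or self.is_primary

    def _run(self) -> None:
        while True:
            partition_id, data = self.requests.get()
            buf = self.buffers[partition_id]
            buf.extend(data)
            if len(buf) >= self.buffer_size:  # buffer full -> flush
                if self._should_flush():
                    self.flushed.append((partition_id, bytes(buf)))
                buf.clear()
            self.requests.task_done()


worker = ShuffleWorker(is_primary=True, backend="DFS", buffer_size=4)
worker.push_data(0, b"ab")
worker.push_data(0, b"cd")
worker.requests.join()  # wait until the background thread has drained the queue
print(worker.flushed)   # [(0, b'abcd')]
```

Decoupling the network path from the buffer copy and flush is what keeps a slow disk from stalling PushData acks.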

Once all Mappers have finished, the Master triggers the StageEnd event and sends CommitFiles requests to all Workers. On receiving these requests, the Workers flush the data remaining in the stage's buffers to the storage layer, close the files, and release the buffers. After receiving all acks, the Master records the list of files for each partition. If any CommitFiles request fails, the Master marks the stage as DataLost.
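The StageEnd handling on the Master can be sketched as a loop over Workers. The `commit_files` callback is a hypothetical stand-in for the CommitFiles RPC: it returns a mapping from partition ID to file paths, or raises on failure. Requests are sent sequentially here for simplicity.

```python
from typing import Callable, Dict, List, Tuple


def handle_stage_end(workers: List[str],
                     commit_files: Callable[[str], Dict[int, List[str]]]
                     ) -> Tuple[str, Dict[int, List[str]]]:
    """Send CommitFiles to every Worker and merge the per-partition file lists."""
    partition_files: Dict[int, List[str]] = {}
    for worker in workers:
        try:
            result = commit_files(worker)
        except Exception:  # any failed CommitFiles marks the stage as DataLost
            return "DataLost", {}
        for pid, files in result.items():
            partition_files.setdefault(pid, []).extend(files)
    return "OK", partition_files


def commit_ok(worker: str) -> Dict[int, List[str]]:
    return {0: [f"{worker}/part-0"], 1: [f"{worker}/part-1"]}


print(handle_stage_end(["w1", "w2"], commit_ok))
# ('OK', {0: ['w1/part-0', 'w2/part-0'], 1: ['w1/part-1', 'w2/part-1']})
```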

In the Reduce stage, each reduce task first asks the Master for the file list of its partition. If the return code is DataLost, the stage is recomputed or the job is aborted. Otherwise, the reduce task reads the data directly from the files.
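The reduce-side read path reduces to a short function. `get_file_list` (the request to the Master) and `read_file` (a storage read) are hypothetical callbacks, and `DataLostError` is an illustrative exception that the caller would translate into stage recomputation or job abortion.

```python
from typing import Callable, List, Tuple


class DataLostError(Exception):
    """The Master reported DataLost; the caller recomputes the stage or aborts the job."""


def read_reduce_partition(get_file_list: Callable[[int], Tuple[str, List[str]]],
                          read_file: Callable[[str], bytes],
                          partition_id: int) -> bytes:
    status, files = get_file_list(partition_id)  # ask the Master for the file list
    if status == "DataLost":
        raise DataLostError(f"partition {partition_id} lost")
    # Normal case: read the partition's files directly from storage.
    return b"".join(read_file(path) for path in files)


storage = {"w1/part-0": b"ab", "w2/part-0": b"c"}
print(read_reduce_partition(lambda p: ("OK", ["w1/part-0", "w2/part-0"]),
                            storage.__getitem__, 0))  # b'abc'
```

Since each partition's data has already been merged into a few files, the reducer issues a handful of sequential reads instead of one small fetch per Mapper.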

The key points of the ESS design are:

1) Push-style shuffle removes the dependency on local storage, which suits the compute-storage separation architecture.

2) Data is aggregated by reduce partition, which avoids random reads and writes of small files and network requests that carry small amounts of data.

3) The dual-copy mechanism improves system stability.

Besides the dual-copy mechanism and DataLost detection, ESS takes multiple measures to ensure fault tolerance.

When the number of PushData failures (caused, for example, by a Worker crash or a busy network or CPU) exceeds MaxRetry, the Client sends a Revive request to the Master to obtain a new location for the partition. Subsequent pushes to that partition then use the new location.
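The retry-then-Revive logic on the Client might look like the sketch below. The `push` and `revive` callbacks are hypothetical stand-ins for the PushData and Revive requests, and the `max_revives` bound is an added assumption so the sketch cannot loop forever; the text does not say how ESS limits repeated Revives.

```python
from typing import Callable


class PushFailedError(Exception):
    """Raised when pushes keep failing even after the allowed number of Revives."""


def push_with_revive(push: Callable[[str, bytes], bool],
                     revive: Callable[[str], str],
                     location: str, data: bytes,
                     max_retry: int, max_revives: int = 3) -> str:
    """Push data; after more than max_retry failures, Revive to a new location."""
    failures = 0
    revives = 0
    while True:
        if push(location, data):
            return location  # the location that accepted the data
        failures += 1
        if failures > max_retry:  # retries exhausted for this location
            if revives == max_revives:
                raise PushFailedError(f"giving up after {revives} revives")
            location = revive(location)  # ask the Master for a new location
            revives += 1
            failures = 0


attempts = []


def push(location: str, data: bytes) -> bool:
    attempts.append(location)
    return location == "w2"  # pretend w1 is down


print(push_with_revive(push, lambda old: "w2", "w1", b"batch", max_retry=2))  # w2
print(attempts)  # ['w1', 'w1', 'w1', 'w2']
```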

If the Revive was caused by the Client rather than by a Worker failure, data of the same partition ends up distributed across different Workers. The meta component of the Master handles this case correctly.

If WorkerLost occurs, a large number of PushData requests fail at the same time, so the Master receives many Revive requests for the same partition. To avoid allocating too many locations to one partition, the Master processes only one of these Revive requests and places the others in a pending queue. After processing that request, it returns the same location to all pending requests.
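One way to get "process one Revive, answer the rest with the same location" is a single-flight pattern, sketched below. `ReviveCoordinator` is hypothetical, and keying deduplication on (partition, failed location) is an assumption chosen so that a later, unrelated failure of the new location can still trigger a fresh Revive; error handling for a failing allocation is omitted.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Tuple


class ReviveCoordinator:
    def __init__(self, allocate: Callable[[int], str]) -> None:
        self.allocate = allocate  # picks a new location for a partition
        self.lock = threading.Lock()
        self.in_flight: Dict[Tuple[int, str], threading.Event] = {}
        self.results: Dict[Tuple[int, str], str] = {}

    def revive(self, partition_id: int, failed_location: str) -> str:
        key = (partition_id, failed_location)
        with self.lock:
            if key in self.results:  # already revived: reuse the answer
                return self.results[key]
            event = self.in_flight.get(key)
            is_leader = event is None
            if is_leader:            # first request actually processes the Revive
                event = threading.Event()
                self.in_flight[key] = event
        if is_leader:
            new_location = self.allocate(partition_id)
            with self.lock:
                self.results[key] = new_location
                del self.in_flight[key]
            event.set()              # release the pending requests
            return new_location
        event.wait()                 # pending requests wait for the leader
        with self.lock:
            return self.results[key]


calls = []


def allocate(pid: int) -> str:
    calls.append(pid)
    time.sleep(0.05)  # simulate allocation work so requests overlap
    return "w9"


coordinator = ReviveCoordinator(allocate)
with ThreadPoolExecutor(max_workers=8) as pool:
    locations = list(pool.map(lambda _: coordinator.revive(3, "w1"), range(8)))
print(set(locations), calls)  # {'w9'} [3]
```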

When WorkerLost occurs, the Master also sends a CommitFiles request to the lost Worker's peer to commit the replica data that the peer holds, and then clears the buffers on that peer. If this CommitFiles request fails, the stage is marked as DataLost. If it succeeds, subsequent PushData requests obtain new locations through the Revive mechanism.

Speculative tasks and task recomputation can produce redundant data. To handle this, each PushData data slice encodes its mapId, attemptId, and batchId, and the Master records the attemptId that was successfully committed for each map task. On the read side, data from other attempts is filtered out by attemptId, and duplicate data within the same attempt is filtered out by batchId.
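The read-side filtering can be sketched as follows. The `Batch` record and the `committed` mapping (mapId to the committed attemptId, as recorded by the Master) are illustrative data structures, not the ESS wire format.

```python
from typing import Dict, Iterable, List, NamedTuple


class Batch(NamedTuple):
    map_id: int
    attempt_id: int
    batch_id: int
    payload: bytes


def filter_batches(batches: Iterable[Batch], committed: Dict[int, int]) -> List[bytes]:
    """Keep batches from each map task's committed attempt, dropping repeated batchIds."""
    seen = set()
    out: List[bytes] = []
    for b in batches:
        if committed.get(b.map_id) != b.attempt_id:
            continue  # data from a speculative or failed attempt
        key = (b.map_id, b.attempt_id, b.batch_id)
        if key in seen:
            continue  # duplicate batch within the committed attempt
        seen.add(key)
        out.append(b.payload)
    return out


batches = [
    Batch(0, 0, 0, b"a"),
    Batch(0, 1, 0, b"X"),  # speculative attempt 1 of map 0, not committed
    Batch(0, 0, 0, b"a"),  # same batch pushed twice
    Batch(0, 0, 1, b"b"),
    Batch(1, 2, 0, b"c"),
]
print(filter_batches(batches, committed={0: 0, 1: 2}))  # [b'a', b'b', b'c']
```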

In DFS mode, a ReadPartition failure directly causes stage recomputation or job abortion. In Local mode, a ReadPartition failure triggers a read from the peer location; only if both the primary and secondary copies fail is the stage recomputed or the job aborted.
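The two read-failure policies can be condensed into one function. `read` is a hypothetical storage read that raises `OSError` on failure, and `StageRecomputeRequired` is an illustrative signal for "recompute the stage or abort the job".

```python
from typing import Callable, Optional


class StageRecomputeRequired(Exception):
    """Signals that the stage must be recomputed (or the job aborted)."""


def read_with_failover(read: Callable[[str], bytes], backend: str,
                       primary: str, secondary: Optional[str]) -> bytes:
    try:
        return read(primary)
    except OSError as err:
        # DFS mode has no peer fallback on read.
        if backend == "DFS" or secondary is None:
            raise StageRecomputeRequired(str(err)) from err
    # Local mode: fall back to the peer copy.
    try:
        return read(secondary)
    except OSError as err:
        raise StageRecomputeRequired(str(err)) from err


def read(location: str) -> bytes:
    if location == "bad":
        raise OSError(f"cannot read {location}")
    return location.encode()


print(read_with_failover(read, "Local", "bad", "w2"))  # b'w2'
```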

ESS currently supports two storage backends: DFS and Local.

ESS integrates with Spark without modifying Spark code. Users only need to add the Shuffle Client jar to the classpath of the driver and executors and add the following configuration to switch the shuffle process to ESS:

spark.shuffle.manager=org.apache.spark.shuffle.ess.EssShuffleManager

Monitoring and alerts:

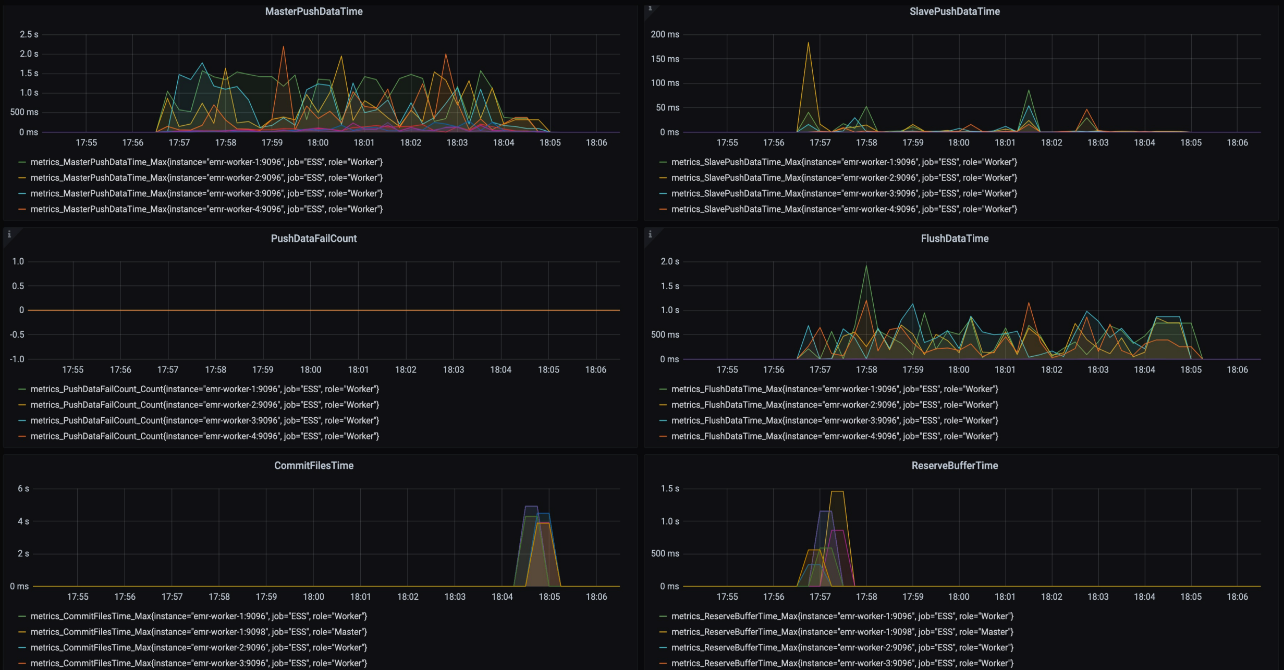

Alibaba Cloud provides detailed monitoring and alerting for the ESS server, and also connects the ESS server to Prometheus and Grafana, as shown below:

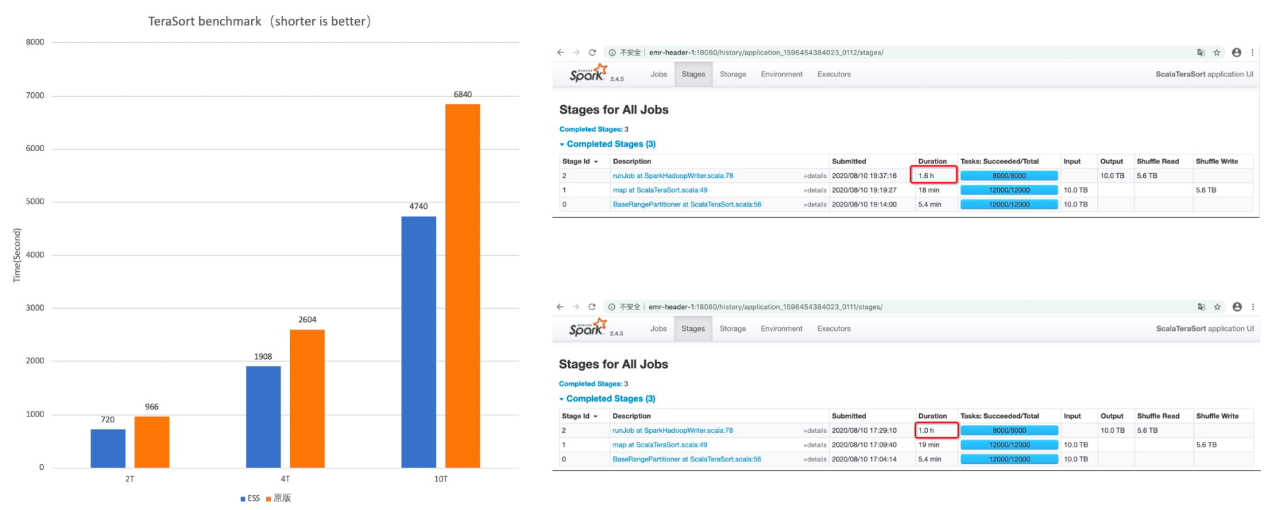

The performance figures of TeraSort are as follows (for 2 TB, 4 TB, and 10 TB data):

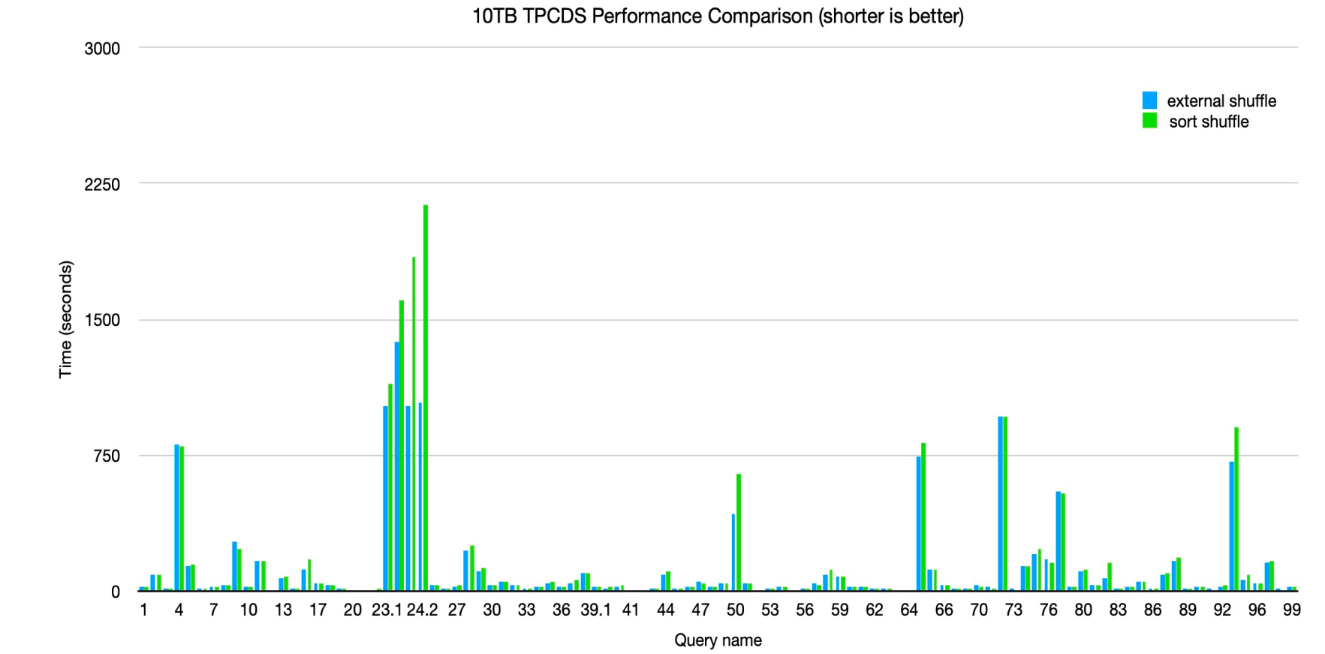

The performance figures of 10 TB TPC-DS are as follows:

In the future, Alibaba Cloud will continue to develop ESS in directions such as productization, extreme performance, and resource pooling.
