By Tianyi Wang (Architect of StarRocks Solutions) and Kang Zhou (Team OLAP of Alibaba Cloud Open-Source Big Data)

The rapid development of the Internet industry is causing the types of businesses and enterprises to increase and the amount of data to grow. The data kanban service with the Apache Hadoop ecosystem as the core can only implement offline services. In some areas, the ability to process data in real-time has become one of the bottlenecks limiting enterprise data realization. Building a fast-paced data kanban for data analysis has become an inevitable choice.

Real-time data warehouses have three well-known watersheds. The first one is building from scratch. The emergence of Apache Storm breaks the single computing method of MapReduce, enabling businesses to process T+0 data. The second watershed is from emergence to completeness. The emergence of Lambda and Kappa architecture has taken offline data warehouses one step towards real-time data warehouses. The evolution of Lambda architecture to Kappa architecture closely integrates the offline data warehouse model and the real-time data warehouse model. The third watershed is from complex to simple. Implementing the Flink technology stack has simplified the real-time data warehouse architecture, which is recognized as the best solution for stream-batch integration.

The real-time data warehouse implemented by using Flink as a real-time computing engine transfers some complex computing to the OLAP analysis engine, making the analysis requirements at the application layer flexible. However, the exclusion of data warehouse changing its data cannot be altered. The next-generation real-time data warehouse platform needs to provide better performance and needs complete functions to match different services.

As a platform-wide MPP architecture, StarRocks provides various methods of performance optimization and flexible modeling. The ultimate performance experience can be obtained on a variety of models (such as pre-aggregation, wide tables, and star/snowflake). Integrating StarRocks and Flink to build an open-source real-time data warehouse allows users to synchronize data in seconds and analyze and query quickly. Scenarios (such as real-time and frequent updates) can be supported better with the StarRocks primary key model.

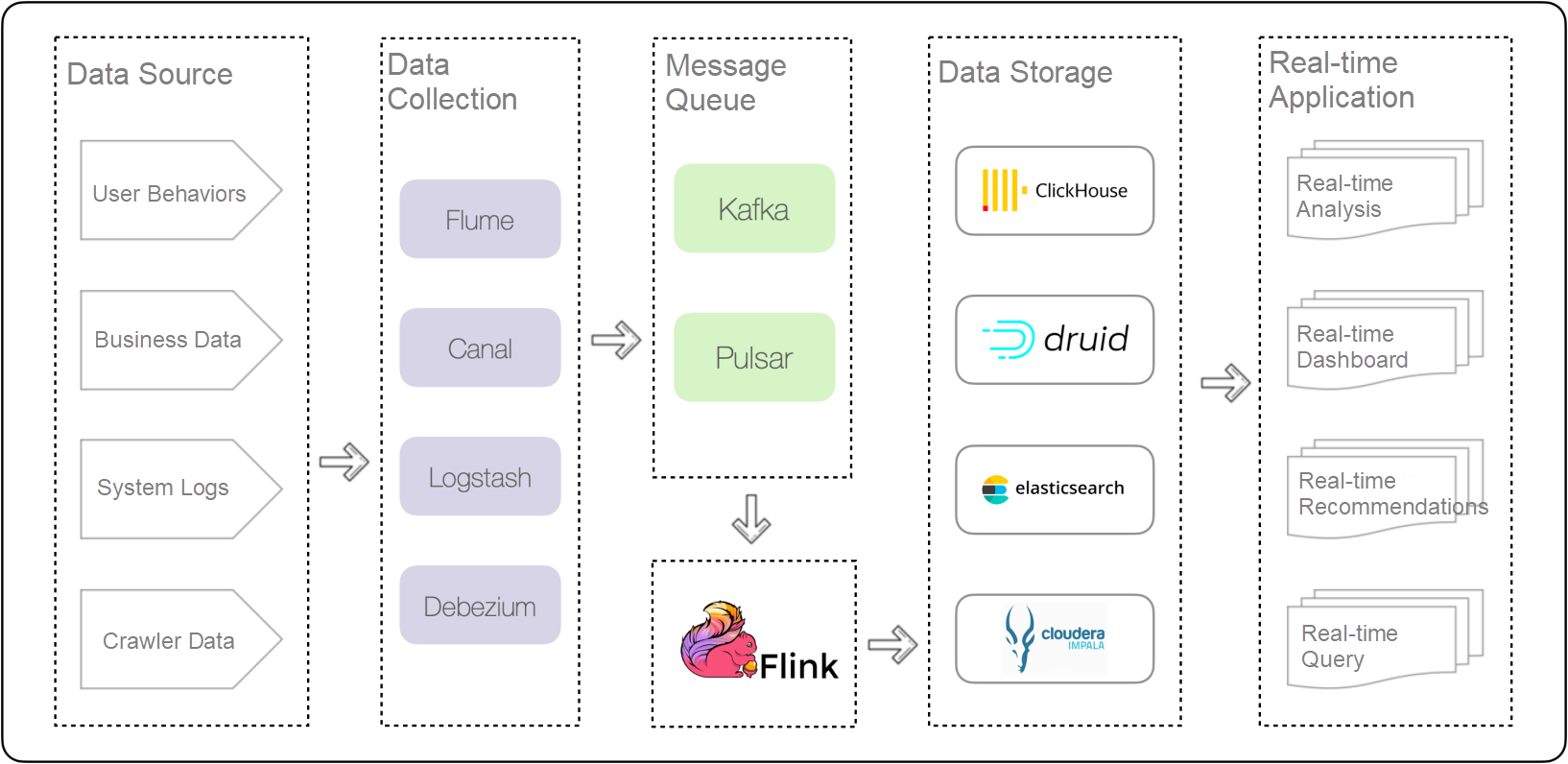

In the original solution of building and implementing data ware houses based on Flink, different collection tools (such as Flume, Canal, and Logstash) are required due to the diversity of data sources. We use different analysis engines for different businesses. For example, for a fixed report business, fact and dimension tables can be flattened into wide tables in advance with known query statements, making full use of the powerful single-table query capability of ClickHouse. Apache Druid can be used for highly concurrent query requests to withstand the concurrency pressure caused by the centralized use of a large number of users during peak hours. The stacking of technology stacks can meet business requirements but can also make the analysis layer bloated, increasing the cost of development and O&M.

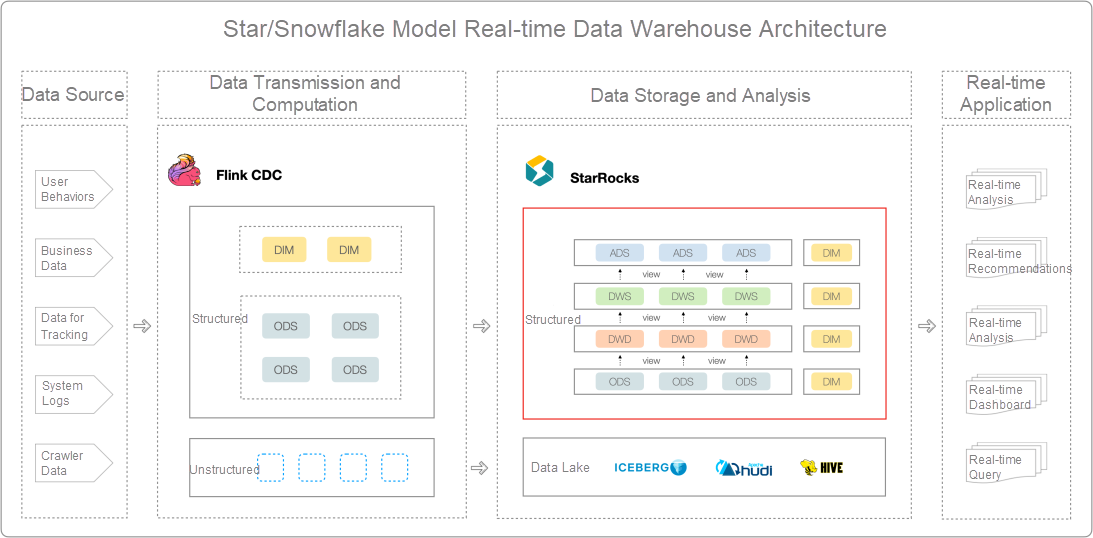

In general, the StarRocks x Flink-based open-source real-time data warehouse ecosystem is built with five layers:

Fast data ingestion and short latency matter the most for the real-time data warehouse system built with Flink as the computing engine. In such an architecture, data is collected from the data sources to the OLAP analysis system through the tool, message queue, and real-time computing layer. Long links bring great risks to development and O&M. Blocking any module will affect real-time performance. Meanwhile, we choose different storage engines to adapt to different businesses at the data storage layer. We are facing many challenges from the data links above. We need to do more exploration in terms of timeliness, functionality, and maintainability. Thus, we can summarize that many aspects need to be optimized.

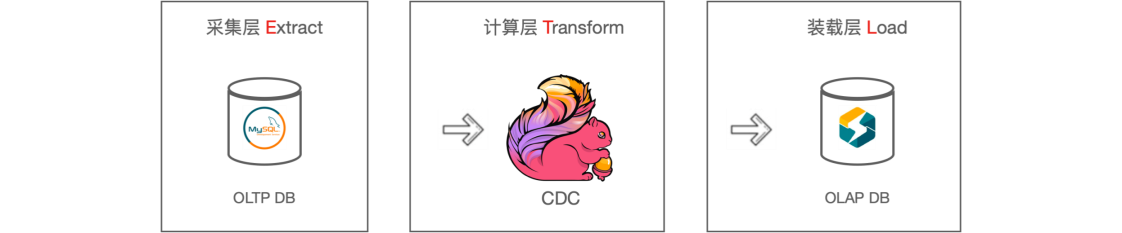

Flink CDC is a component developed by the Flink community that integrates data collection, conversion, and loading. It can directly read full or incremental data from data sources (such as MySQL, PostgreSQL, and Oracle) and write them to downstream OLAP data storage systems. With Flink CDC, upstream data changes can be simply and efficiently captured and synchronized to downstream OLAP data warehouses.

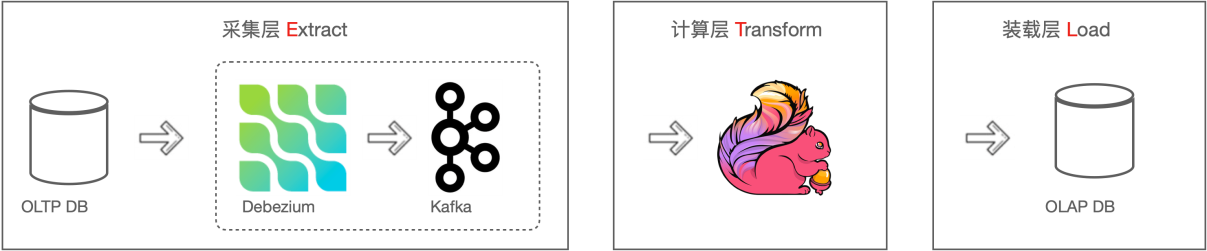

Data collection tools are indispensable in the construction of traditional real-time data warehouses. Due to the inconsistency of upstream data sources, we may access different synchronization and collection tools at the data collection layer. For example, when collecting data from Oracle, we usually choose GoldenGate, but we may choose Canal or Debezium for MySQL. Some collection tools support full data synchronization, and some support incremental data synchronization. After the data passes through the collection layer, it is transmitted to message queues (such as Kafka) where the incremental data is consumed by Flink. Then, the data is written to the downstream OLAP data warehouse or data lake.

In business development, upstream data sources, message middleware, Flink, and downstream analytical data warehouses are maintained in different departments. When business changes or faults are debugging, multiple departments may be required to work together, increasing the difficulty of business development and testing. Replace the data collection components and message queues in the preceding figure with Flink CDC. The collection components and message queues in the dashed box are merged into the computing layer Flink, which simplifies analysis links and reduces maintenance costs. At the same time, fewer components mean fewer failures and transmission bottlenecks. Thus, data effectiveness will be improved.

With Flink CDC, components in the data trace become fewer, the architecture becomes clear and simple, and maintenance becomes convenient. As in the preceding example, Flink CDC is used to pull incremental data from MySQL. Flink SQL is used to create a MySQL CDC table of facts and dimensions, and a widening operation is performed in Flink to write the results to downstream StarRocks. The whole process of grabbing, converting, and loading can be completed through a Flink CDC job.

We divide synchronization into two stages in the traditional data synchronization framework:

We can choose the multi-thread import mode during full data synchronization to speed up the import. When full data synchronization is performed under the multi-thread model, after data is split, data synchronization is completed by starting multiple concurrent tasks. Multiple concurrent services may not belong to the same read transaction and have a certain time interval. Therefore, data consistency cannot be strictly guaranteed. We have two solutions to ensure the consistency of data and balance from the perspective of engineering and technical implementation.

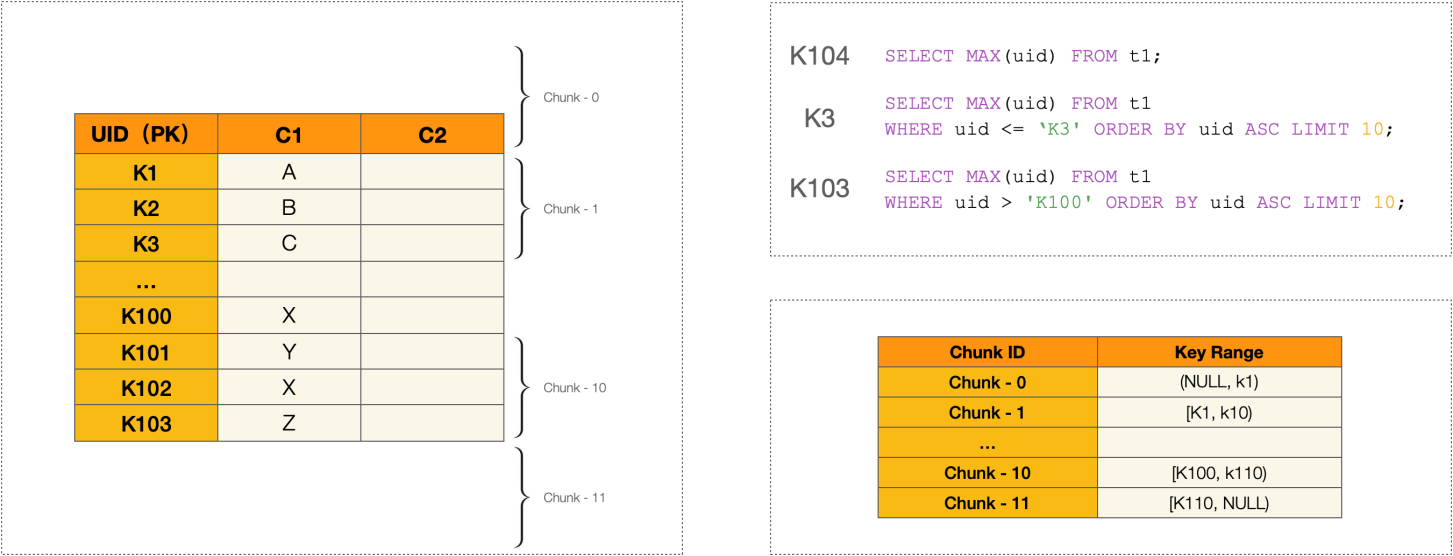

With Flink CDC, full and incremental data can be synchronized. Debezium is used as an underlying collection tool in Flink CDC version 1.x. Databases or tables need to be locked to ensure data consistency during full data reading. Flink 2.0 introduces the Chunk splitting algorithm to solve this problem by ensuring lock-free data reading. The splitting algorithm of Chunk is similar to the principle of database and table sharding. Data sharding is performed using the primary key of a table.

After chunk data is sharded, each chunk is only responsible for the data within its primary key range with the condition of ensuring read consistency of each chunk. This is the basic principle of the lock-free algorithm.

StarRocks is a fast and full-scenario MPP enterprise-class data warehouse product with the capability of horizontal online expansion and high availability at the financial level. It is compatible with the MySQL protocol and MySQL ecosystem and provides important features (such as a comprehensive vectorization engine and federated query of various data sources). As an analytical data warehouse with MPP architecture, StarRocks can support PB-level data volume and has flexible modeling methods. It can build a fast and unified analysis layer data storage system through optimization methods (such as materialized views, bitmap indexes, and sparse indexes).

StarRocks launched its primary key model in version 1.19. Compared with the updated model, the primary key model can support real-time and frequent update scenarios better. The primary key model requires a table to have a unique primary key (the primary key in a traditional data warehouse). It supports updating and deleting rows in a table by primary key.

Variable data is often undesirable in OLAP data warehouses. We use batch updates to handle large amounts of data changes in traditional data warehouses. We have two ways to deal with data changes.

We can delete the original partition of most OLAP data warehouse products by manipulating partitions and replacing them with new partitions, so the change operations on a large amount of data are realized. Generally speaking, there are three steps:

Swapping partitions to achieve large-scale data changes is a heavy operation and is suitable for low-frequency batch data updates. Due to changes in table definitions, developers cannot independently complete data changes through this solution.

Some OLAP data warehouses provide Merge on Read-based data change models. For example, ClickHouse provides the MergeTree engine, which can complete asynchronous updates but fails to synchronize data in real-time. After we specify the FINAL keyword, ClickHouse merges the results before they are returned, which allows us to synchronize data updates in quasi-real-time. However, due to the high cost of the FINAL operation, it is not enough to support the frequent dimension update requirements brought by real-time data warehouses. Even in low-frequency update scenarios, the ClickHouse Merge Tree solution cannot be copied to other storage systems.

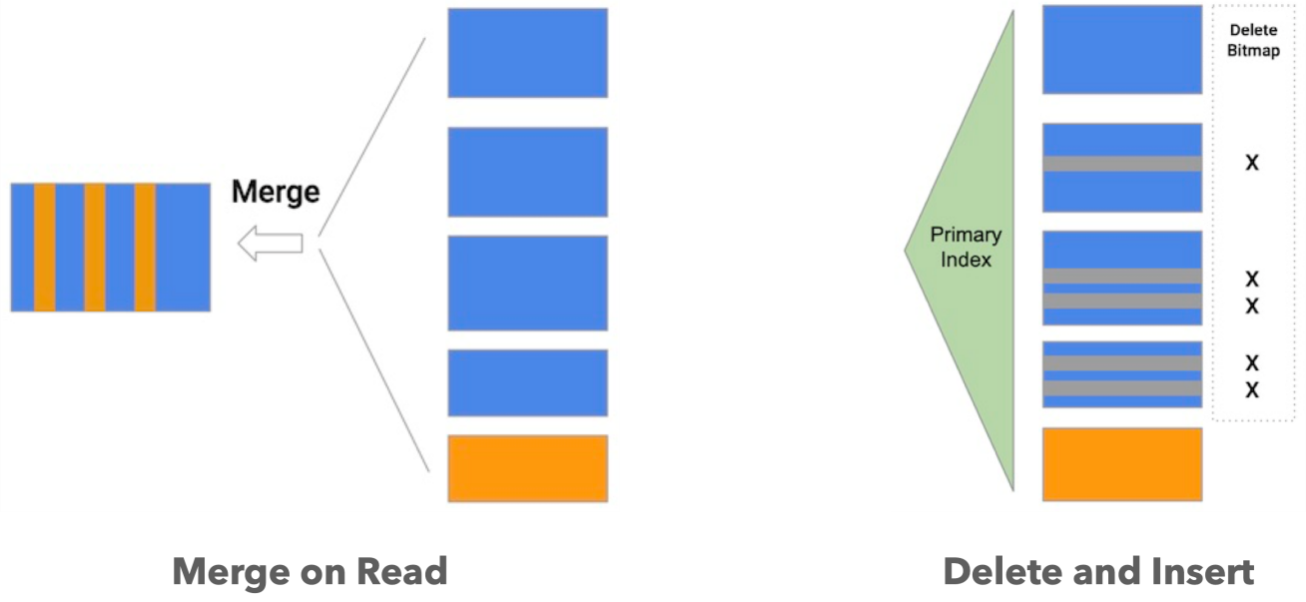

StarRocks provides an updated model (Unique Key model) similar to ClickHouse Merge Tree, which updates data by the Merge on Read model. In the updated model, StarRocks assigns a version number to each batch of imported data. One primary key may have multiple versions that merge during the query and return the record of the latest version.

Merge on Read mode is simple and efficient in writing but consumes a large number of resources in version merging during reading. Due to the merge operator, predicates cannot be pushed down, and indexes cannot be used, seriously affecting the performance of queries. StarRocks provides a primary key model based on the Delete and Insert mode, which avoids the problem that operators cannot be pushed down due to version merging. The primary key model is suitable for scenarios where data needs to be updated in real-time. It can solve row-level update operations and support the TPS of million traffic. It is suitable for scenarios where MySQL or other business libraries are synchronized to StarRocks.

We selected some queries for comparison in the TPCH standard test set. Compared with the Unique Key model based on the Merge on Read, the performance of the Delete and Insert mode-based primary key model is improved.

Eliminating duplicate data is a common challenge in real business. The deletion of deduplication helps reduce the capacity consumed by storage in a data warehouse. In some specific scenarios, duplicate data is unacceptable. For example, duplicate operations are performed according to user IDs in business scenarios (such as customer group selection and precision marketing) to avoid repeatedly pushing marketing information. In traditional offline computing, the distinct function can be used to complete deduplication. In real-time computing, deduplication is an incremental and long-term process, and we can deduplicate by adding MapState logic in Flink. However, in most cases, MapStat can only ensure data deduplication within a certain time window. It is difficult to deduplicate incremental data with historical data in the OLAP database. As the time window increases, deduplication in Flink occupies a large number of memory resources and makes the computing layer bloated.

The primary key model requires a table to have a unique primary key and rows in the table can be updated and deleted based on the primary key. The uniqueness of the primary key is highly matched with the requirements of deduplication. The primary key model has completed deduplication during data import, avoiding the resource consumption caused by manual deduplication. We can select an appropriate deduplication column as the primary key by dismantling the business logic and completing the requirement of data deduplication according to the unique primary key in the Delete and Insert way during data import. Compared with the implementation of deduplication in Flink, the StarRocks primary key model can save a large number of hardware resources, which is simpler to operate. Also, the deduplication of incremental and historical data can be performed.

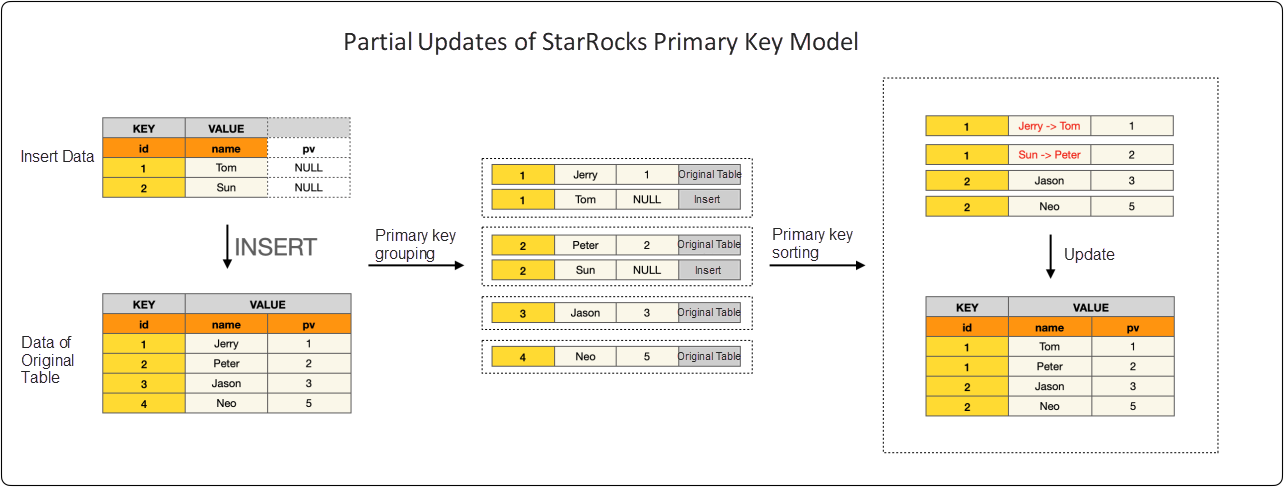

In fixed report business scenarios, data is typically cleaned in Flink based on fixed queries and then flattened into wide tables. The excellent multi-dimensional analysis performance of wide tables is used to speed up the queries and simplify the data models used by analysts. However, due to the attribute that a wide table needs to be pre-aggregated, when dimension data changes, the wide table needs to be rerun to update the data. The primary key model of StarRocks can be applied to data change scenarios. The function of updating some columns meets the requirements of various businesses to partially update different fields in a wide table.

There are dozens, hundreds, or thousands of columns in the wide table model. This poses a challenge to the primary key model that updates data by UPSERT. We need to obtain all information about the changing rows before we can complete the data update of the wide table. This leads to the fact that the change will be accompanied by the previous table read. The changed data row needs to be pulled from StarRocks, and the inserted statements need to be spelled out to complete the data update. This puts query pressure on StarRocks. The partial update simplifies the UPSERT. After enabling the partial_update parameter, we can only modify some of the specified columns based on the primary key, and the original value column remains unchanged.

In the following example, we can consume data in Kafka by the Routine Load import. In the properties, we need to set "partial_update" = "true", specify that the partial column update mode is enabled, and specify the column names to be updated, namely, COLUMN(id, name):

CREATE ROUTINE LOAD routine_load_patical_update_demo on example_table COLUMNS (id, name), COLUMNS TERMINATED BY ',' PROPERTIES ( "partial_update" = "true" ) FROM KAFKA ( "kafka_broker_list" = "broker1:9092,broker2:9092,broker3:9092", "kafka_topic" = "my_topic", "kafka_partitions" = "0,1,2,3", "kafka_offsets" = "101.0.0.200" ); Flink CDC solves the problem of lengthy data links, while StarRocks provides a solution of extreme performance and integrated data storage at the OLAP analysis layer to match different business scenarios. The real-time data warehouse platform built by the integration of StarRocks and Flink CDC can reduce development and O&M costs.

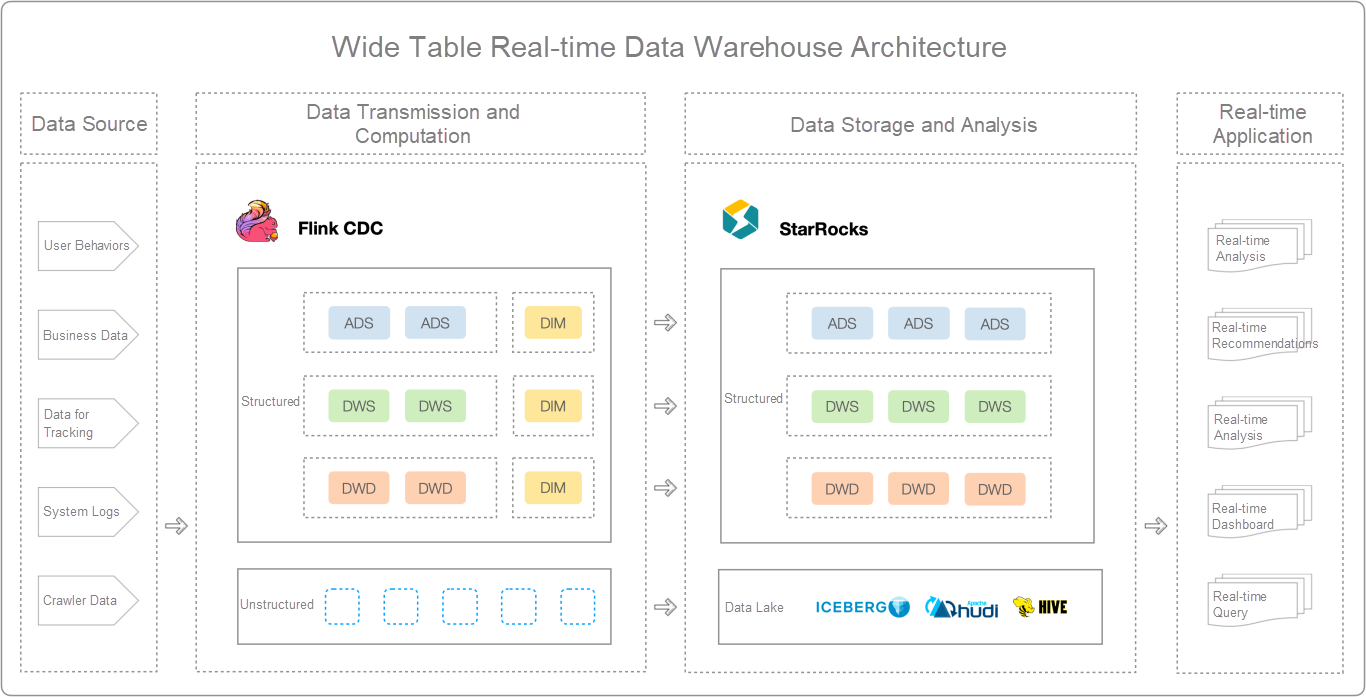

With the joint solution of StarRocks and Flink CDC, we can plan real-time data warehouses into a four-tier structure.

In scenarios where ETL is not complex, we can implement most ETL operations in Flink. The business model is simple in some scenarios. Data of facts and dimensions are flattened into a wide table with the multi-stream join capability of Flink. DWD, DWS, and ADS layer models are divided in Flink. Unstructured data can also be incrementally written to Iceberg, Hudi, or Hive. Complete the integrated architecture of the lake house using the appearance function of StarRocks.

When complex business logic is introduced in the ETL process, it may occupy a large number of memory resources at the Flink computing layer. The mode of the wide table cannot cope with multi-dimensional analysis scenarios where queries are not fixed. We can choose to replace the wide table model with the star model. Then, the cleaning and modeling of data are completed in StarRocks.

Analysts often explore data from different dimensions in some complex business scenarios (such as in self-service BI reports and operation analysis scenarios). The randomness and flexibility of queries require the OLAP analysis engine to have good support for performance and many modeling methods to satisfy users to pull metrics and dimensions, drill down, roll up, and associate queries on the page at near random.

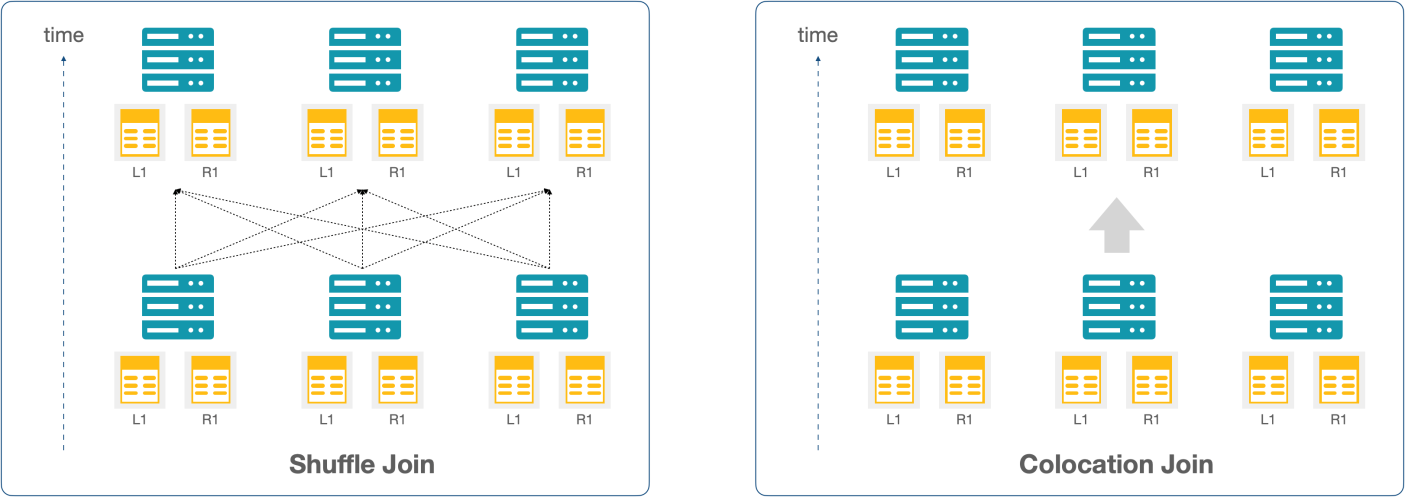

StarRocks can use a more flexible star model instead of a wide table. StarRocks provides different join methods to enhance the real-time association of multiple tables, such as Boardcast Join, Shuffle Join, Bucket Join, Replica Shuffle Join, and Colocation Join. CBO selects the type of join reorder and join based on the table statistics. It provides multiple optimization methods (such as predicate pushdown, limit pushdown, and materialization delay) to accelerate queries associated with multiple tables.

Based on the real-time join capability of StarRocks, we can post ETL operations to StarRocks and complete data modeling in StarRocks through the real-time join. We can also support data changes with the Primary Key model and create slowly changing dimensions in StarRocks to implement dimension data changes.

The real-time data warehouse built by the star/snowflake model allows us to post the modeling operation of Flink at the computing layer into the StarRocks engine. In Flink, we only need to clean data at the ODS layer. Dimension and fact tables are synchronized to StarRocks through Flink CDC. In StarRocks, fact and dimension data are implemented at the ODS layer and perform aggregation by the aggregation model or materialized views. We can quickly calculate query results and ensure the business data is highly homologous and consistent at different model layers with the real-time multi-table association capability of StarRocks and multiple optimization methods (such as intelligent CBO optimizer, sparse index, and vectorization engine).

In real life, the attributes of dimensions are not static and will change slowly over time. The star model can store fact and dimension tables independently and decouple dimension data from wide tables to deal with the problem of slowly changing dimensions through the primary key model of StarRocks. Generally speaking, we have three solutions to deal with the problem of slowly changing dimensions:

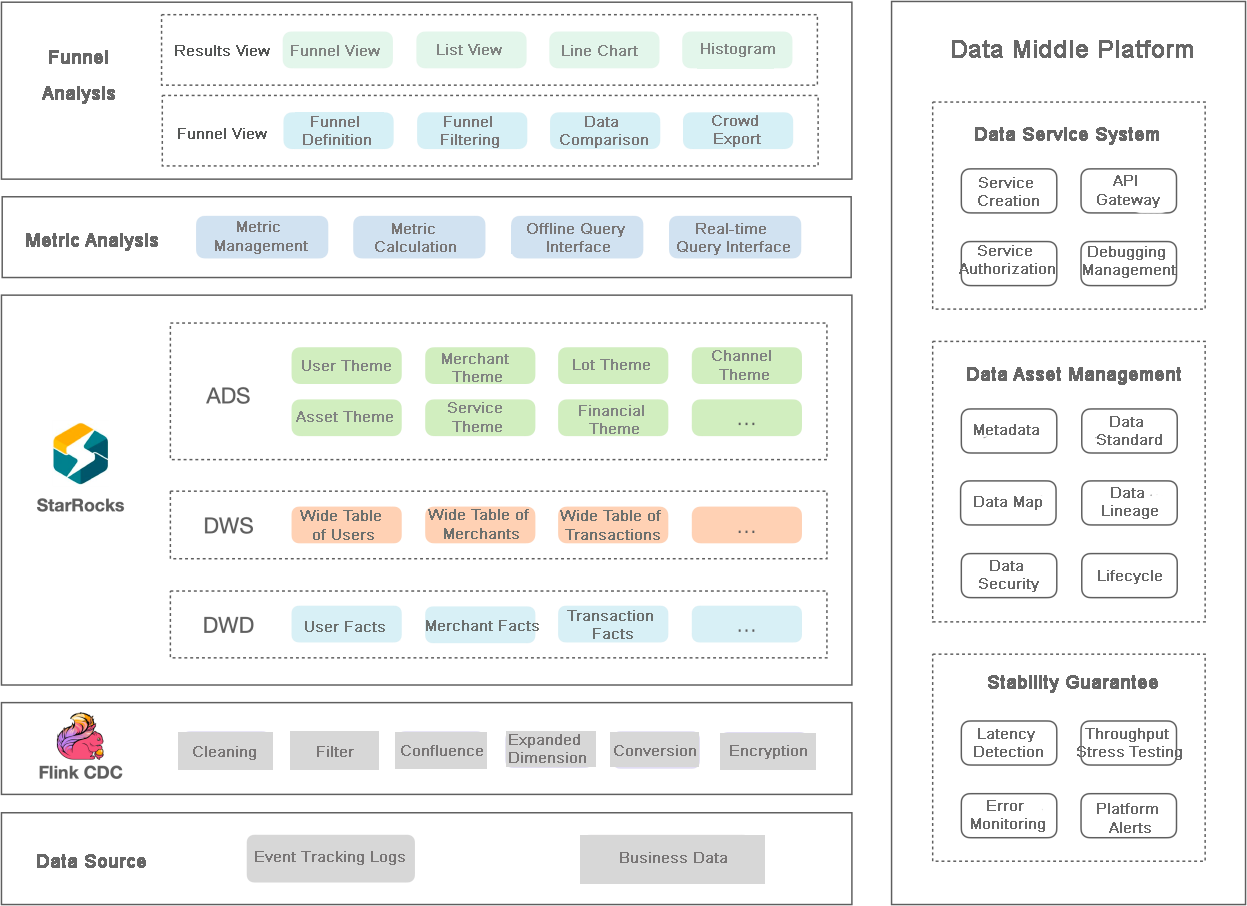

StarRocks and Flink CDC has significantly simplified the complexity of data links on a well-known e-commerce platform. Users can build a real-time data kanban platform through StarRocks to implement multi-dimensional data filtering, flexible funnel analysis, and flexible analysis of different dimension movements.

ClickHouse was initially selected as the storage engine for the data analysis layer in the e-commerce data kanban platform. However, as the business grows, ClickHouse cannot effectively support it in some scenarios, which are mainly reflected in the following aspects:

The e-commerce platform has re-made technical selections to solve the problems above. After continuous comparison and stress testing, StarRocks was finally selected as the data storage engine for the analysis layer.

The real-time kanban business can be divided into five parts:

Recently, Alibaba Cloud E-MapReduce (EMR) cooperated with the StarRocks community to launch the first StarRocks cloud product. We can select Flink and StarRocks with corresponding specifications on EMR.

An excellent product should not be separated from community ecology. You are welcome to participate in the joint construction of StarRocks and Flink community and test the end-to-end real-time data warehouse link scheme StarRocks Primary Key x Flink CDC.

62 posts | 7 followers

FollowApache Flink Community - May 30, 2024

Apache Flink Community - July 5, 2024

Apache Flink Community - August 14, 2025

Apache Flink Community - March 7, 2025

Apache Flink Community - May 28, 2024

Apache Flink Community - July 18, 2024

62 posts | 7 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn MoreMore Posts by Alibaba EMR