By Hongrui, product expert on Alibaba Cloud E-MapReduce

Contents:

• Background of StarRocks

• Important Features and Business Scenarios of StarRocks 3.x

• Build a New Data Lakehouse Paradigm Based on StarRocks

• Product Capabilities of E-MapReduce Serverless StarRocks

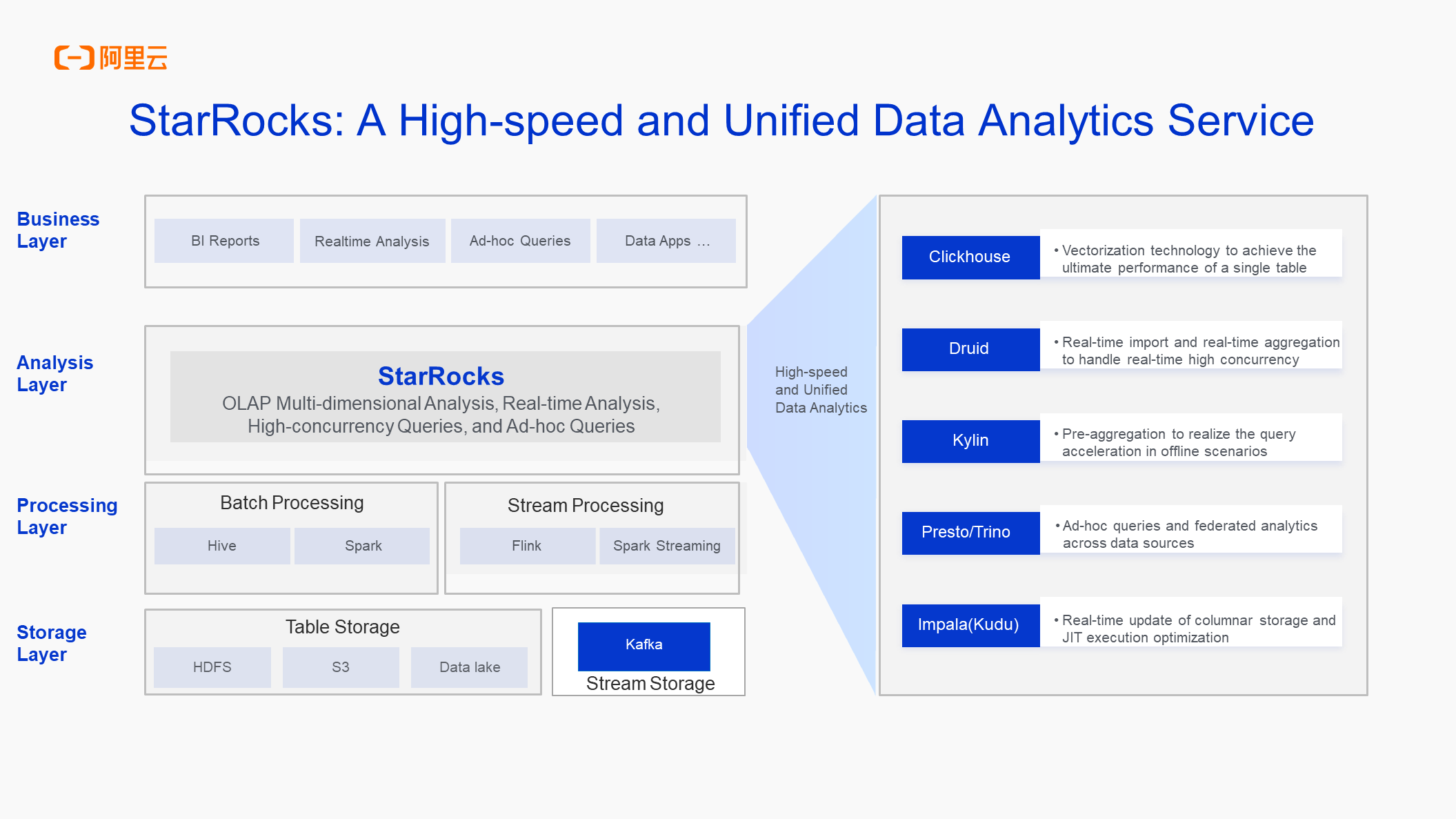

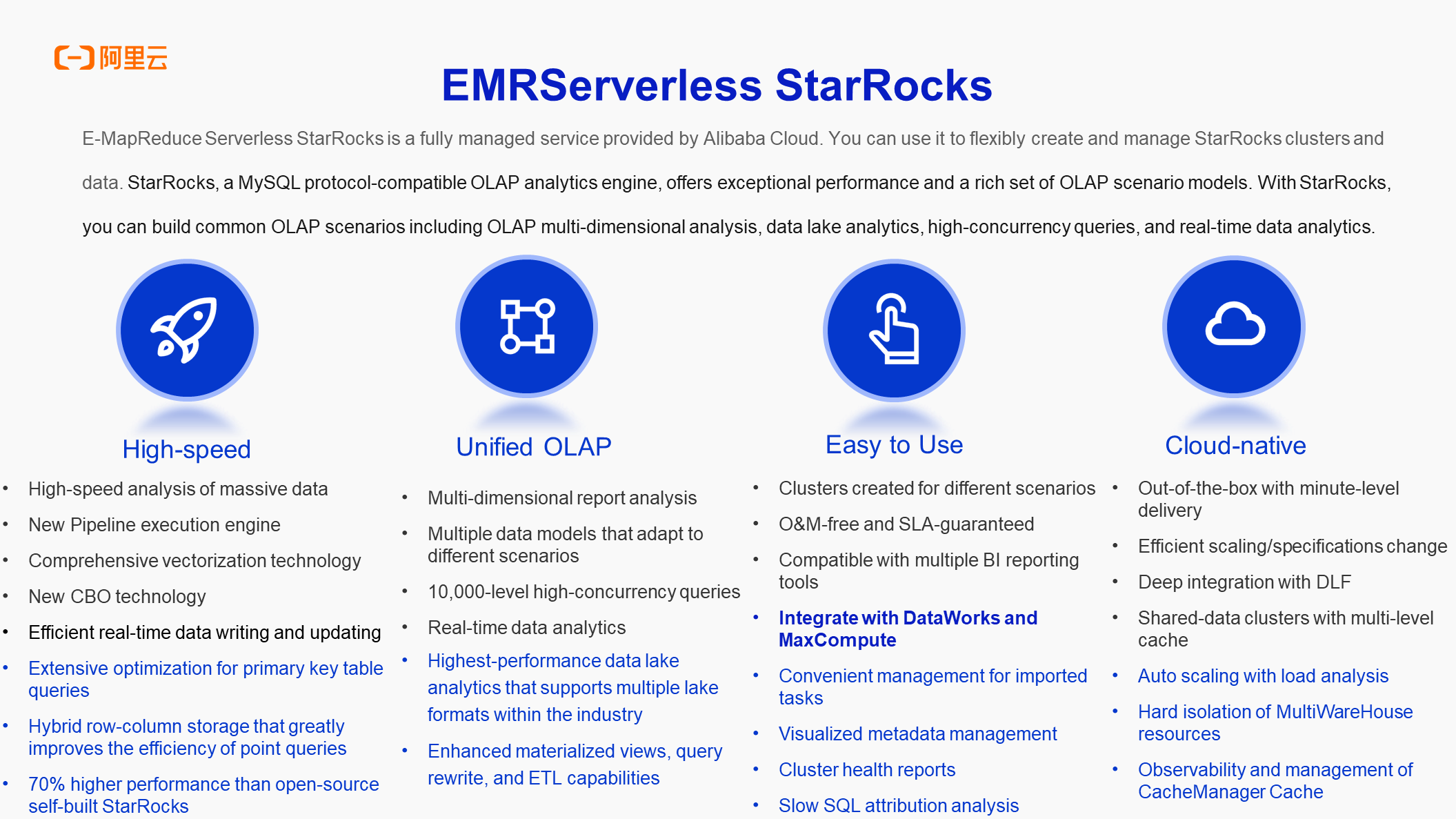

StarRocks has two defining characteristics: high-speed and unified.

1. High-speed means that StarRocks delivers extreme performance and greatly improves query efficiency in OLAP scenarios. Since version 1.0, StarRocks has aimed at ultimate speed, leveraging a cost-based optimizer (CBO) and vectorization to build a comprehensive high-speed computation framework.

2. Unified starts with StarRocks 2.0 and contains the following two aspects:

In StarRocks 3.x and later versions, the storage layer can use HDFS storage or object storage such as OSS. Data can also be written into StarRocks after batch processing or stream processing for accelerated analytics at the upper layer.

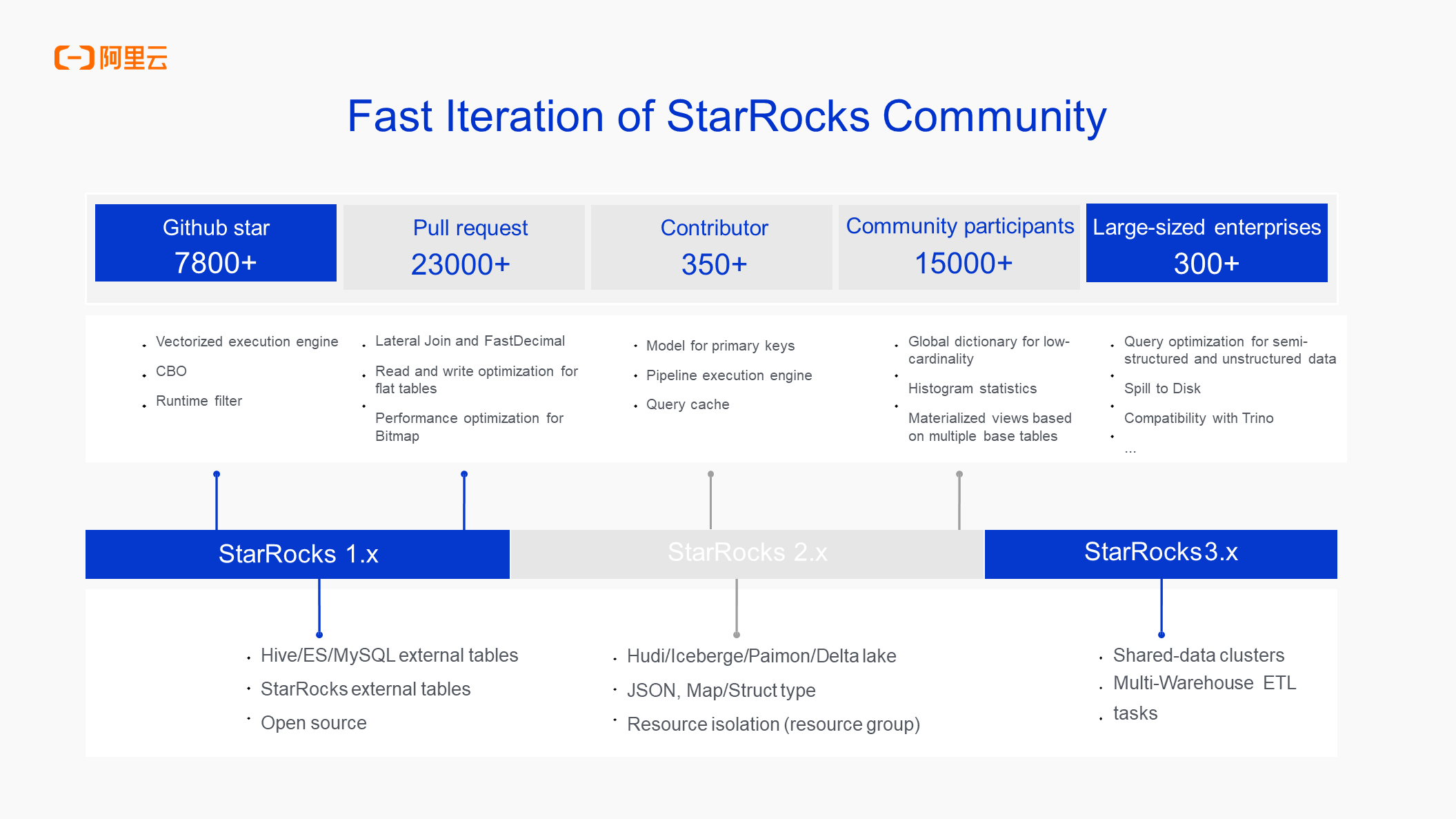

StarRocks is backed by an engaged community, and Alibaba Cloud has been closely collaborating with StarRocks for nearly three years.

StarRocks 1.0 introduced the high-speed feature, enabling high-performance queries for traditional OLAP. StarRocks 2.0 began to support a wider range of scenarios and ecosystems, such as data lake analytics, and started to integrate more data types, including JSON and Map/Struct. Additionally, as it took on more scenarios, it began to support soft resource isolation through resource groups, providing an initial solution for resource isolation across multiple scenarios.

In 2023, StarRocks officially released version 3.x, which introduced a core shared-data architecture. This technical advancement has laid a solid foundation for the expansion of StarRocks application scenarios. With this optimization, StarRocks can still maintain high performance while flexibly adapting to diverse workloads, such as data pre-processing, data lake analytics, data warehouse modeling, and data Lakehouse modeling. This is referred to as the new StarRocks data Lakehouse paradigm. The data Lakehouse fusion advocated by StarRocks 3.x is a strategic upgrade aimed at modern data management needs. It decouples and flexibly configures storage and computing resources without performance reduction. Therefore, it can provide a comprehensive solution that not only has the flexibility of a data lake but also has the efficiency of data warehouse analytics. It is an important milestone in the evolution of the data analytics architecture.

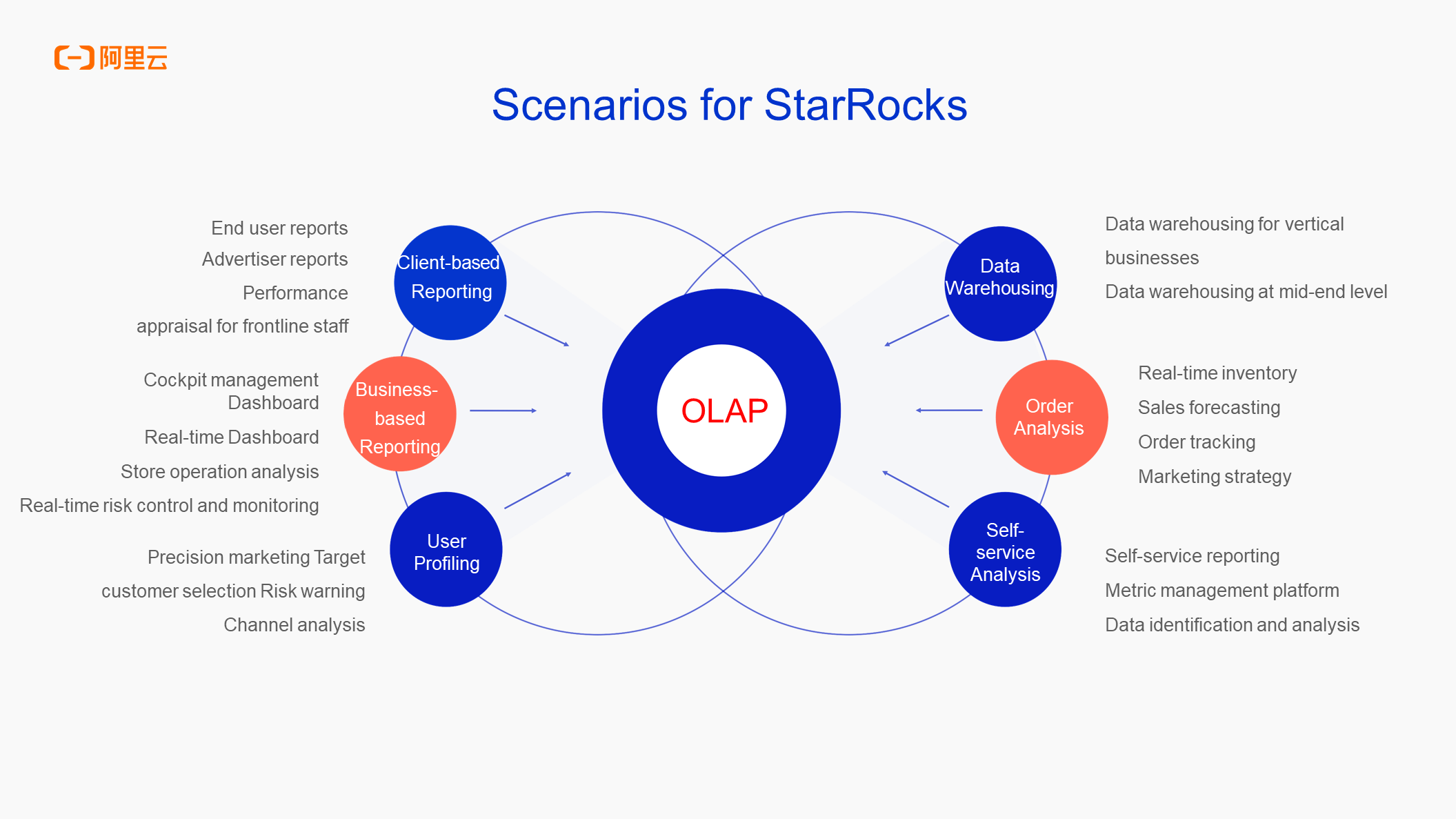

StarRocks is widely applicable to the following six scenarios:

First, it excels in report analysis for daily work, particularly in meeting high real-time report analysis needs, and serves multiple roles including customers, operations teams, and managers.

Second, it supports real-time data writing and querying, which is suitable for Dashboard displays and dynamic monitoring systems.

Third, it builds user profiles to aid in precision marketing and segments markets through tag filtering to deepen channel analysis.

Fourth, it is suitable for business scenarios that need real-time writes and queries, such as order analysis, sales forecasting, and order tracking, as well as other sales-related scenarios, common ad-hoc queries, and self-service reporting platforms. For example, you can use Alibaba Cloud Quick BI or DataV together with the exceptional performance of StarRocks to create reports.

Fifth, the metric management platform can also use StarRocks to accelerate queries of underlying data.

Sixth, StarRocks 3.x begins to support ETL scenarios such as data warehouses. When the overall business volume is large, a small vertical business may use StarRocks as its data warehouse. Such a new business changes frequently and requires a rapid response from StarRocks. Unlike with traditional data warehouses, there is no need to establish a complete standard layering, and data can be processed quickly to respond to real-time business changes.

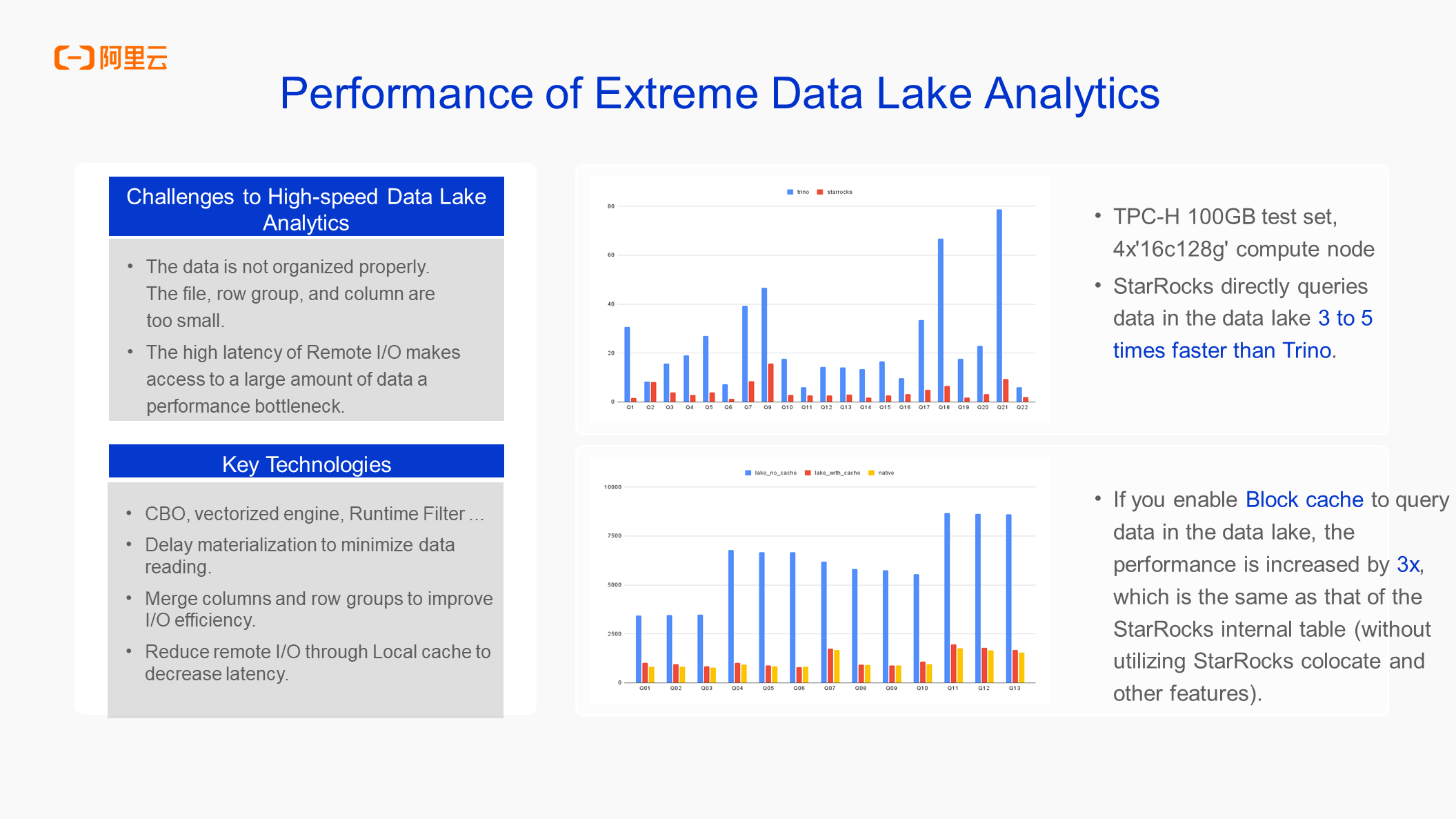

In addition, from the perspective of business scenarios, data lake analytics is also involved. Strictly speaking, all six scenarios mentioned above, except for data warehouses, are related to data lake analytics. In the past, Trino might have been used for this kind of analytics. However, in StarRocks 3.x and later versions, you can use StarRocks to query and analyze data lakes, which provides an overall performance improvement of 3 to 5 times.

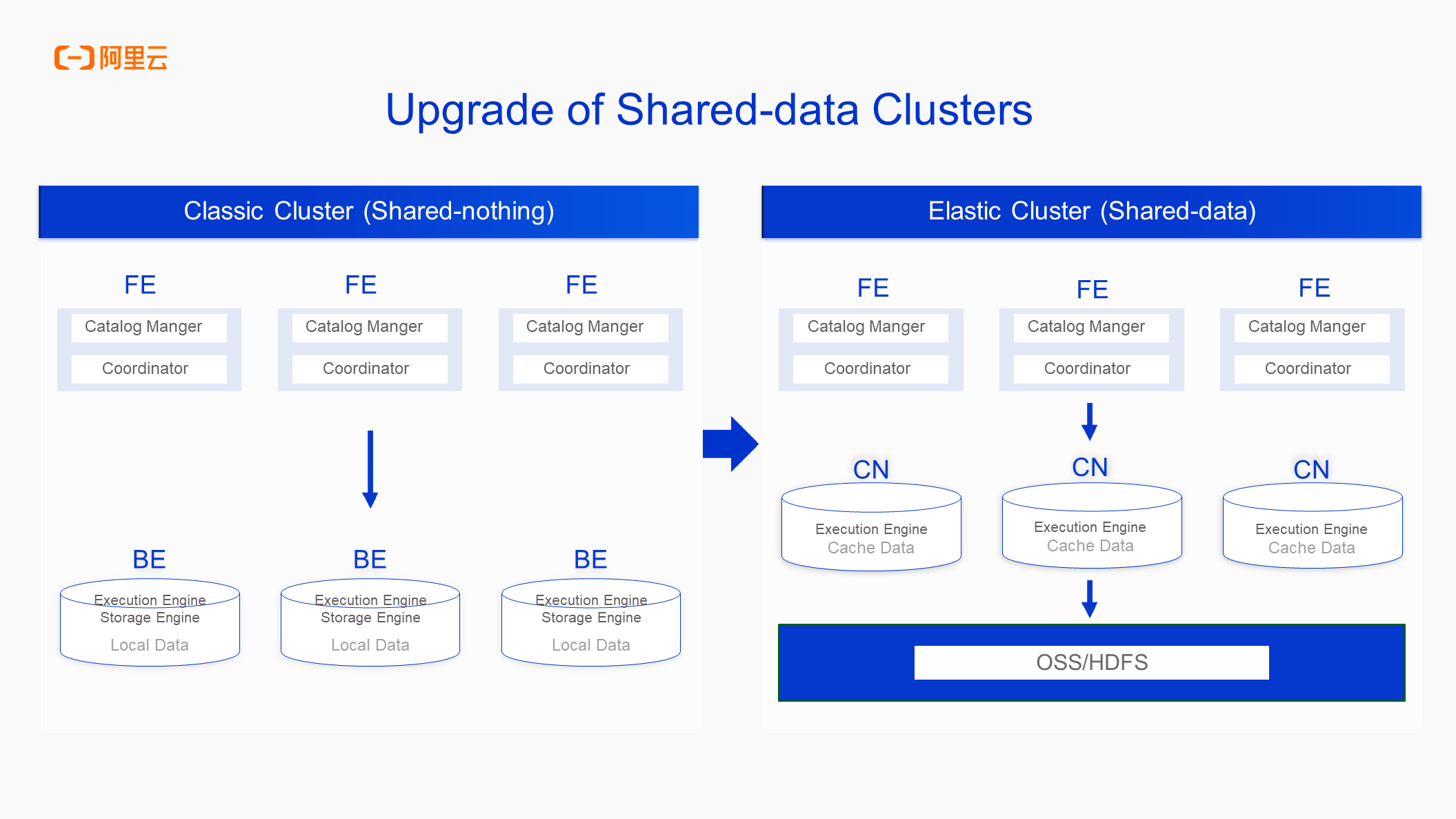

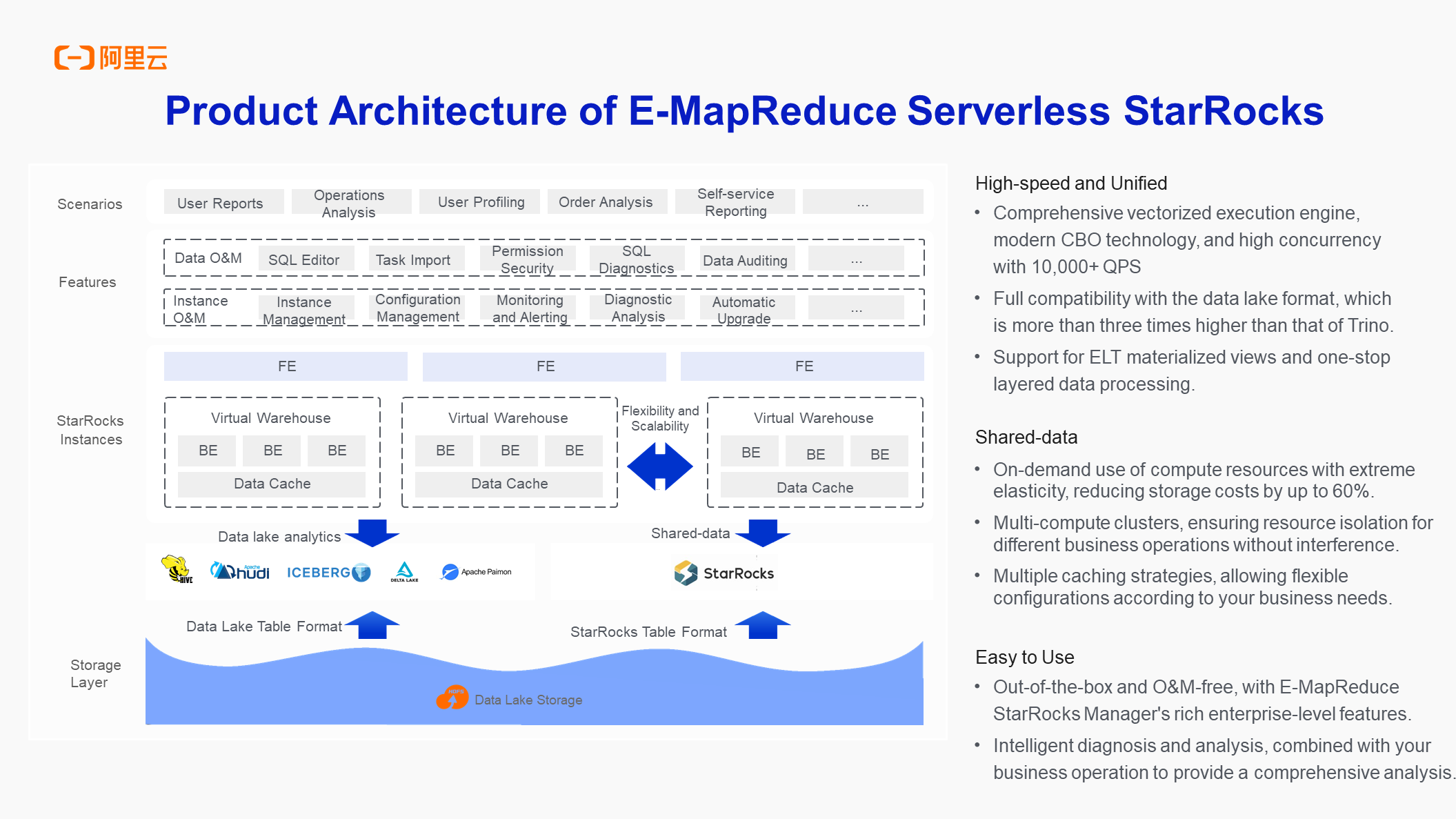

In earlier versions of 2.x and 1.x (the left side of the preceding figure), the system consists of frontend nodes (FE) and backend nodes (BE). The backend nodes are responsible for both executing data computing tasks and managing local data storage to ensure high data availability by maintaining three replicas of each data piece.

StarRocks 3.0 has significantly upgraded the architecture and separated the functions of backend nodes. The data storage layer is externalized to systems such as OSS or HDFS. Alibaba Cloud users tend to choose OSS for its high efficiency and low storage costs. This adjustment lets compute nodes (CNs) focus on data processing and makes them stateless, which enhances the flexibility and scalability of the system. CNs can still use cached data to accelerate query responses, which is particularly important for the highly real-time OLAP scenarios that StarRocks specializes in. At the same time, frontend nodes remain compatible with previous versions. The following content further explores the enhancements and optimizations of Warehouse capabilities with shared-data clusters.

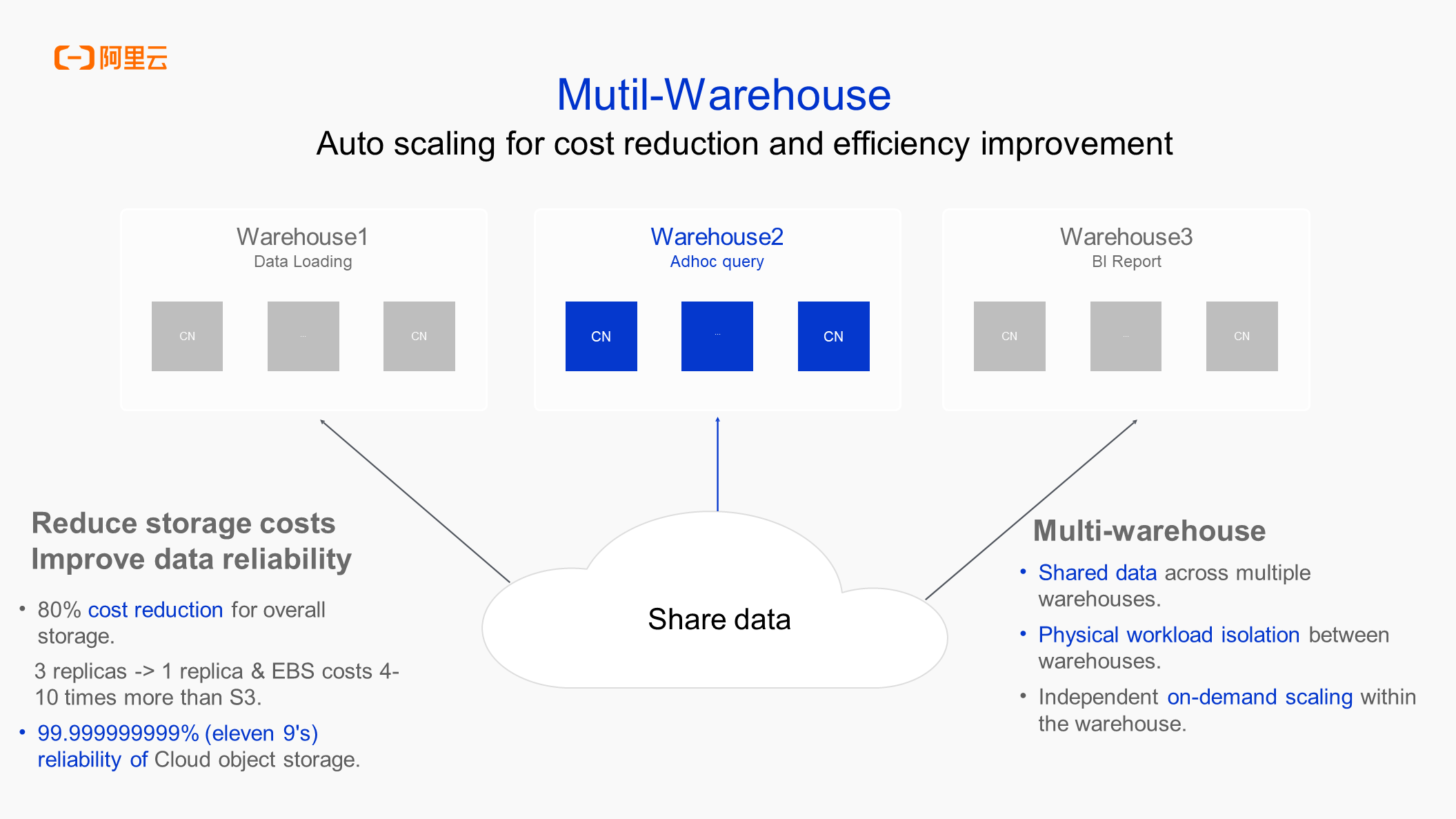

Before adopting the shared-data architecture, storage costs accounted for 30% to 40% of the total expense (computing plus storage), especially for large data volumes. However, in StarRocks 3.0 and later versions, by reducing from three replicas to a single replica and using OSS storage, storage costs can be reduced by 70% to 80%. Although adding cache disks incurs some costs, they are negligible compared to the previous multi-replica storage expenses. In short, switching to OSS single-replica storage with a local cache significantly reduces storage costs.
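
As a rough worked example of this arithmetic, the sketch below compares three replicas on cloud disks with a single replica on object storage plus a local cache. The data volume, unit prices, and cache fraction are hypothetical placeholders chosen for illustration, not Alibaba Cloud list prices.

```python
def monthly_storage_cost(data_tb, replicas, price_per_tb):
    """Cost of keeping `replicas` full copies of `data_tb` terabytes."""
    return data_tb * replicas * price_per_tb


def shared_data_cost(data_tb, oss_price_per_tb, cache_fraction, disk_price_per_tb):
    """Single replica on object storage plus a local cache holding part of the data."""
    remote = monthly_storage_cost(data_tb, 1, oss_price_per_tb)
    cache = data_tb * cache_fraction * disk_price_per_tb
    return remote + cache


# Hypothetical inputs, for illustration only.
DATA_TB = 100
DISK_PRICE = 50.0      # assumed price per TB-month of cloud disk
OSS_PRICE = 20.0       # assumed price per TB-month of object storage
CACHE_FRACTION = 0.2   # assumed share of the data kept in the local cache

shared_nothing = monthly_storage_cost(DATA_TB, 3, DISK_PRICE)
shared_data = shared_data_cost(DATA_TB, OSS_PRICE, CACHE_FRACTION, DISK_PRICE)
saving = 1 - shared_data / shared_nothing
print(f"shared-nothing: {shared_nothing:.0f}, shared-data: {shared_data:.0f}, saving: {saving:.0%}")
```

With these assumed numbers the cache adds to the bill, but the total still lands in the reduction range quoted above. Real savings depend on actual prices and on how much data needs to be cached.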

In terms of computing cost control, StarRocks integrates warehouse management and elastic analytics functions. Particularly when using E-MapReduce Serverless StarRocks, it can fully leverage the newly introduced elasticity. This ability adjusts the number of compute nodes based on time or workload, that is, increasing nodes during peak times to handle high concurrency demands and reducing nodes during off-peak times to save resources. This effectively optimizes costs and achieves a highly efficient and economical on-demand billing operation model.
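
The workload-based part of this behavior can be sketched as a simple rule that maps current load to a node count. This is only an illustration of the idea, not the product's scaling algorithm; `ScalingRule`, its fields, and the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScalingRule:
    """Hypothetical rule: bounds on node count plus a per-node concurrency target."""
    min_nodes: int
    max_nodes: int
    queries_per_node: int


def target_nodes(rule, concurrent_queries):
    """Return the node count needed for the load, clamped to the rule's bounds."""
    # Ceiling division without floats.
    needed = -(-concurrent_queries // rule.queries_per_node)
    return max(rule.min_nodes, min(rule.max_nodes, needed))


# Assumed values: scale between 1 and 8 nodes, about 50 concurrent queries per node.
rule = ScalingRule(min_nodes=1, max_nodes=8, queries_per_node=50)
for load in (0, 120, 1000):
    print(load, target_nodes(rule, load))
```

A time-based rule would follow the same shape, with the hour of day replacing the measured load as the input.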

In terms of reliability, StarRocks relies on the highly available architecture of OSS to ensure data security. At the computing level, the stateless CN design supports elastic scaling, enabling on-demand resource activation, and further enhancing the stability and efficiency of the system.

Resource isolation is a major improvement of StarRocks. Especially with shared-data clusters, the compute nodes can implement more refined management. Compute nodes are grouped into independent resource units, and each group belongs to a specific warehouse, which avoids resource contention, as shown in the preceding figure. This means that import jobs, ad-hoc queries, and fixed reports can be assigned to different warehouses, achieving physical isolation. Each warehouse can operate independently, scale elastically, and automatically adjust resources for tasks such as ad-hoc queries in cases of fluctuating traffic, thereby reducing unnecessary computing overhead. This design, aimed at high-speed and unified features pursued by StarRocks, effectively addresses the potentially critical issue of resource contention and ensures that data imports, query operations, and other business processes do not interfere with each other. Through the multi-warehouse mechanism within the shared-data framework, it achieves efficient and orderly resource scheduling and utilization, further solidifying its advantageous position in the high-performance data analytics field.

In terms of performance, when queries miss the cache in a shared-data scenario, performance is lower than in a shared-nothing scenario, at a ratio of approximately 1:3. However, if the local cache is used effectively, even though keeping a cached copy of the data slightly increases storage costs, the overall expenses are still significantly lower than those of a shared-nothing solution. More importantly, when queries fully hit the local cache, their performance can rival that of a shared-nothing architecture. Therefore, shared-data clusters reduce costs without compromising performance for cached data, and they also enhance resource isolation and elastic scalability, which further optimizes overall system efficiency and cost-effectiveness.
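
To see how the cache hit ratio shapes the outcome, here is a minimal model. It assumes that a cache hit costs the same as a shared-nothing query and that a miss costs about three times as much, which is one reading of the 1:3 ratio above; both are simplifying assumptions rather than measured values.

```python
def relative_query_time(hit_ratio, miss_penalty=3.0):
    """Expected query time relative to shared-nothing (1.0 means parity).

    Assumes a hit costs 1.0 and a miss costs `miss_penalty`; both values
    are illustrative assumptions.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * 1.0 + (1.0 - hit_ratio) * miss_penalty


for ratio in (0.0, 0.5, 0.9, 1.0):
    print(f"hit ratio {ratio:.0%}: {relative_query_time(ratio):.2f}x")
```

Under this model, most of the gap closes once the hot data fits in the local cache, which is why cache sizing matters more than raw object storage speed.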

The key benefit of shared-data clusters is the significant reduction in storage costs, which opens up new possibilities for StarRocks to process larger data sets. With the establishment of the new data Lakehouse paradigm in StarRocks 3.x and later versions, its application scope extends beyond the traditional OLAP field to more diverse computing and analytics scenarios, showing greater flexibility and extensibility.

The Multi-Warehouse design ensures physical isolation of hardware resources, covering key areas such as CPU, memory, network, and disk I/O, and provides dedicated resource pools for different workloads. Combined with the auto scaling capability of resources, it effectively controls computing costs. This capability is not yet published, and the official version of E-MapReduce Serverless StarRocks is scheduled for release in June 2024.

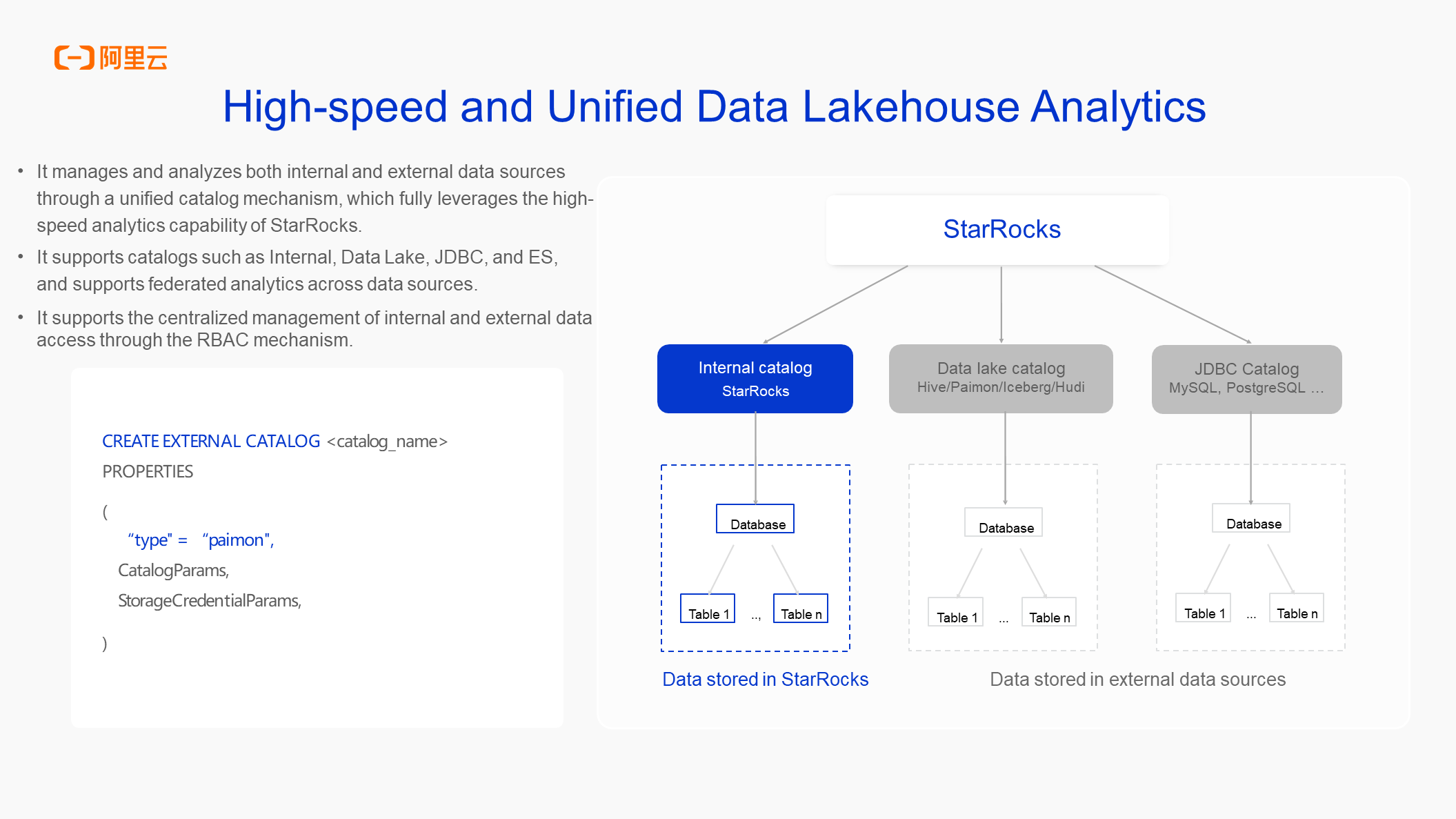

StarRocks 2.5 has introduced data Lakehouse analytics capabilities including the ability to read Hive tables, HDFS tables, or tables related to lake formats such as Iceberg and Paimon. In StarRocks 3.x and later versions, Hive Catalogs are unified to enhance the user experience and scenario support in data Lakehouse usage.

First, it can manage and analyze data in the external tables at the same time by using a unified Catalog. In the past, we created Catalogs for Hive and Paimon respectively. In StarRocks 3.x and later versions, users can manage Hive, Paimon, and tables related to lake format through one Catalog, which greatly reduces the difficulty of maintaining external Catalogs and helps users to analyze lakes at any time.

Second, StarRocks 3.x begins to support write capability on the lake. Data processed and calculated by StarRocks can be written to the lake again.

In addition, a new RBAC permission mechanism is provided in StarRocks 3.x and later versions. The mechanism in StarRocks 2.5 was simpler but harder to work with, while StarRocks 3.x offers a more standardized permission mechanism applicable to both external and internal tables. This unifies permissions and security across external and internal tables and makes them more convenient to manage. External tables do not need to be managed by Ranger. The 3.x version also provides Ranger-related capabilities to manage external and internal tables; although this is not yet widely used, the capability is available.

StarRocks 3.x and later versions have seen significant improvements in the usage, management, and expansion of new scenarios for the Catalog, as well as further performance enhancements. In data lake scenarios, such as querying data on HDFS or OSS, StarRocks performs 3 to 5 times faster than Trino. The key technology behind this is comprehensive vectorization (vectorization of all underlying operators), combined with techniques such as the CBO and delayed materialization, which ensures extreme query performance in data lake analytics scenarios.

StarRocks excels at data lake analytics on E-MapReduce data lake products, a feature that Alibaba Cloud co-developed with the community in early versions. As a result, StarRocks delivers a large improvement in data lake analytics performance. At the same time, StarRocks uses fewer resources than Trino or Presto to achieve the same performance in data lake analytics, saving about one third of the resources. Therefore, more customers are switching their data lake analytics engines from traditional options such as Impala, Trino, and Presto to StarRocks to gain in both performance and cost.

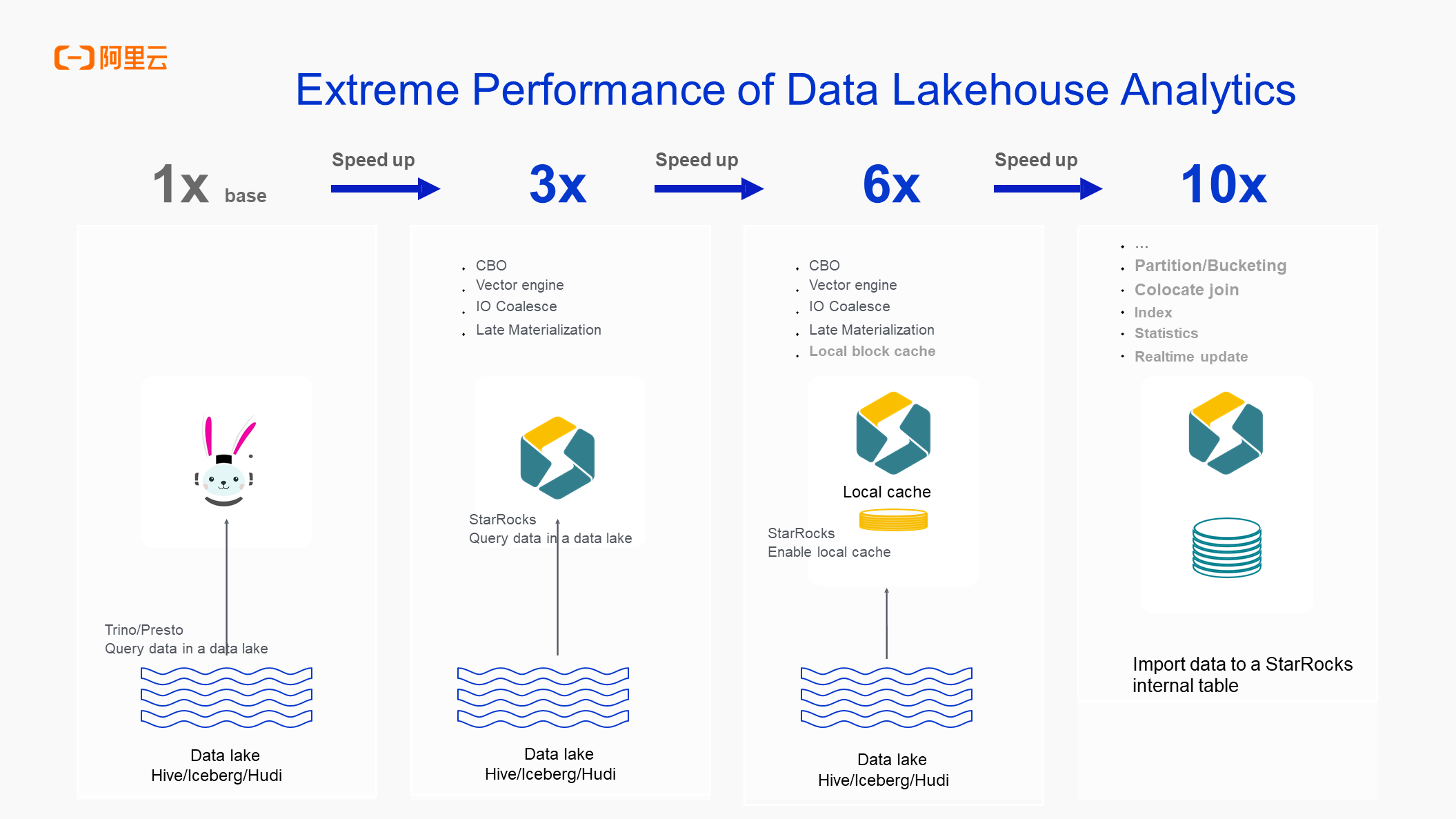

In addition, pay attention to the Block cache in the preceding figure. If the 3 to 5 times performance improvement in data lake analytics is not enough, you can also use the Block cache for better performance. If you enable the Block cache to query data in the data lake, performance increases by 3x, which matches that of StarRocks internal tables.

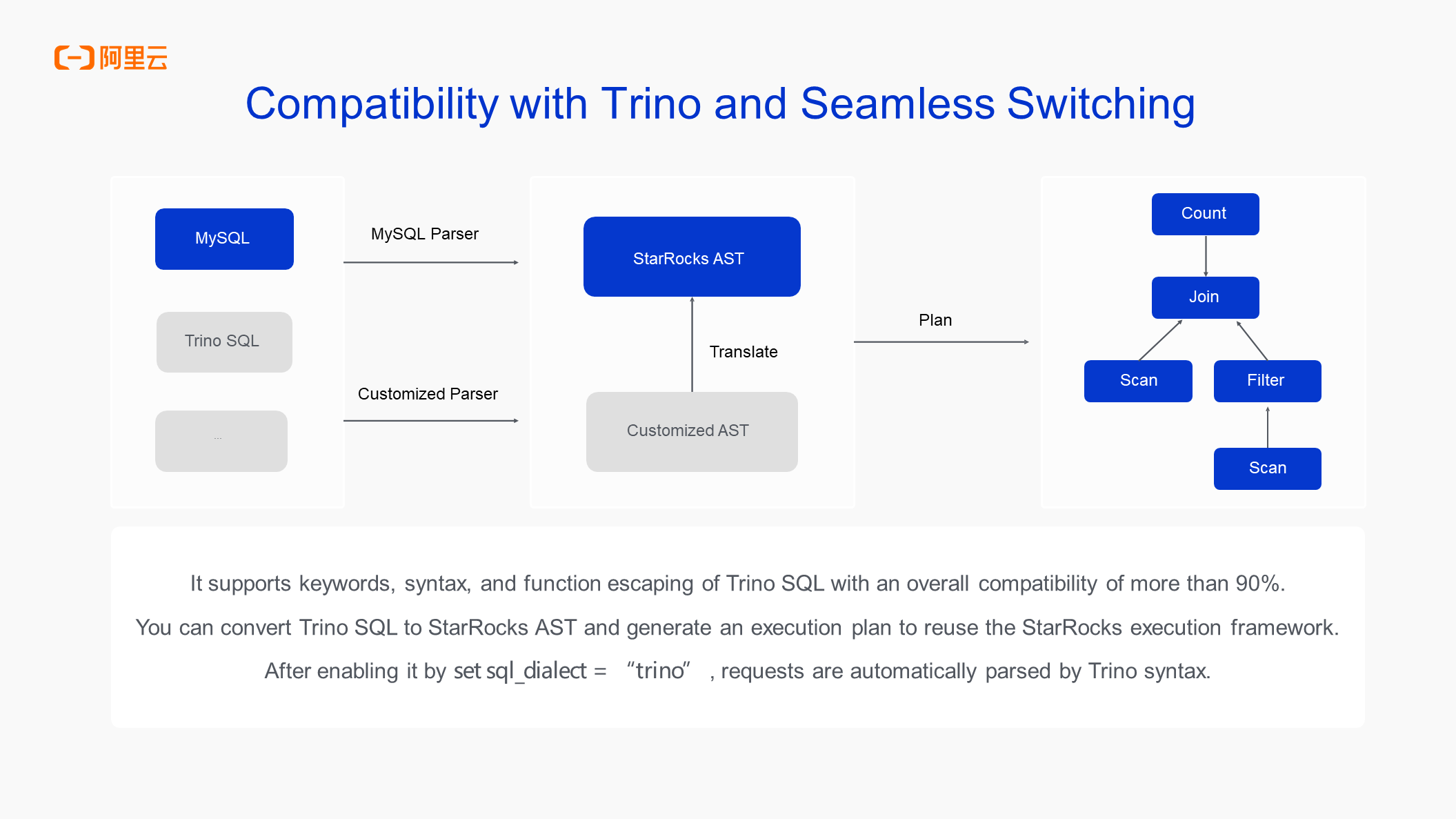

The Trino migration scenario has been mentioned above. Previously, users often used engines like Trino or Presto for data lake analytics. A feature introduced in StarRocks 3.x is the Trino gateway, or Trino SQL translation. After you enable it with the statement set sql_dialect = "trino", requests are automatically parsed with Trino syntax, achieving over 90% compatibility (except for some specific functions). It is compatible with common functions and SQL syntax. Therefore, once you enable it, you can smoothly migrate the original Trino-related business to StarRocks 3.x.
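
As a minimal sketch of what a migrated client session might send, the helper below prefixes existing Trino-syntax queries with the session switch. StarRocks speaks the MySQL protocol, so any MySQL client can submit these statements; the helper name, catalog, and table are hypothetical.

```python
def trino_session_statements(queries):
    """Prefix Trino-syntax queries with the session-level dialect switch."""
    statements = ['SET sql_dialect = "trino";']
    # Normalize each query so it ends with exactly one semicolon.
    statements.extend(q.rstrip().rstrip(";") + ";" for q in queries)
    return statements


# Hypothetical query carried over from a Trino workload; names are placeholders.
legacy_queries = [
    "SELECT order_status, count(*) FROM hive_catalog.sales.orders GROUP BY order_status",
]
for stmt in trino_session_statements(legacy_queries):
    print(stmt)
```

Because the setting is applied per session, it needs to be sent on each new connection before the Trino-syntax queries.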

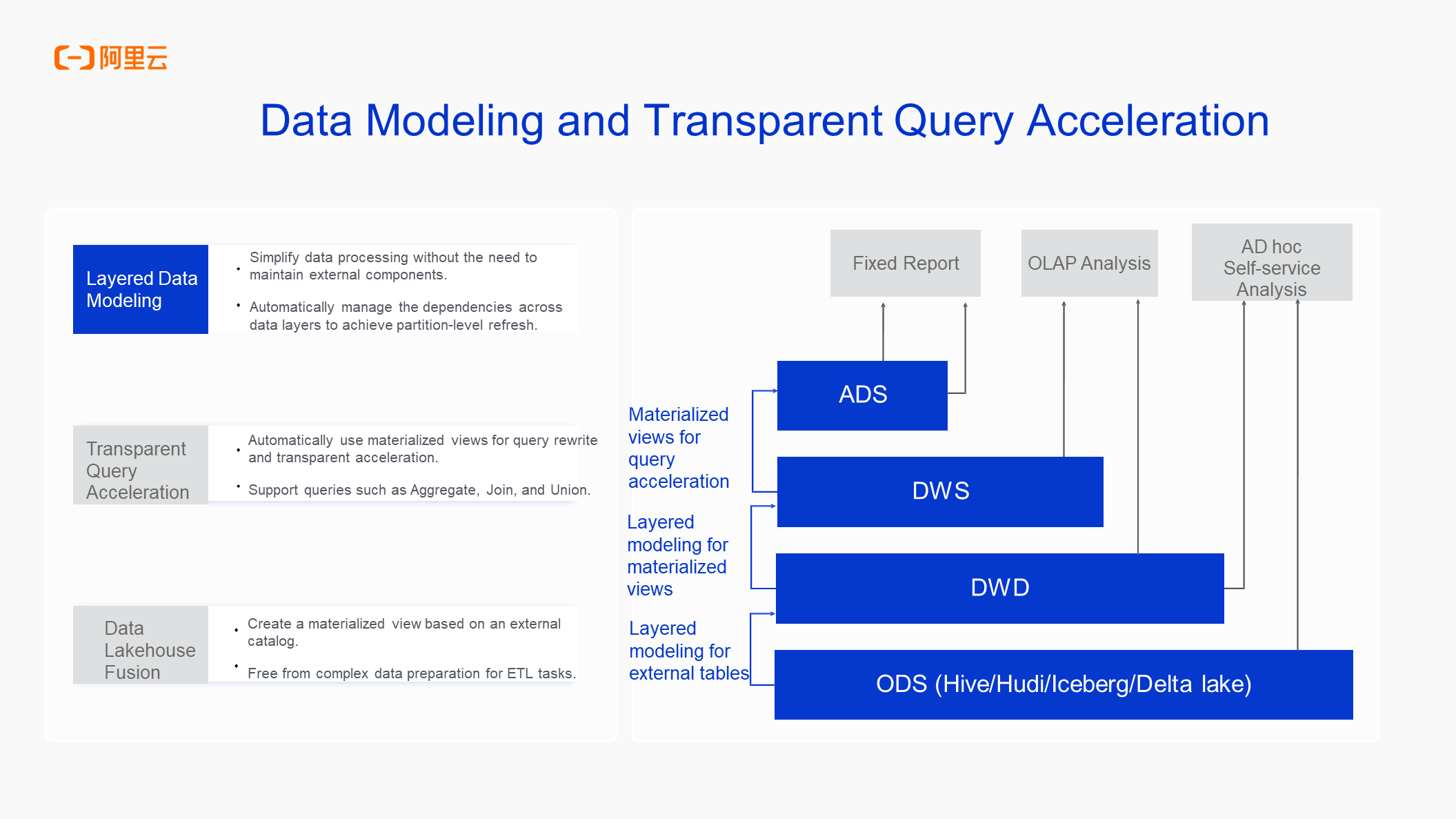

Data warehouse modeling is strongly related to the new data Lakehouse paradigm. StarRocks can manage modern materialized views. Materialized views have been developed since version 2.5. However, the stability, performance, and capabilities of materialized views have been greatly improved since version 3.x, such as transparent acceleration.

Transparent acceleration refers to the pre-creation of materialized views. When the original business involves selecting from table A and joining table B, the pre-existing materialized view can significantly improve the performance of such select and join operations for customers. However, business users are unaware of this. This transparent acceleration capability is recommended in both data lake analytics and traditional OLAP scenarios since its materialized view capability can improve query performance. This feature is also closely integrated with the original analytics scenario.

Since 3.x, the StarRocks community has emphasized the new paradigm of data Lakehouse. The traditional data warehouse is divided into four layers: ADS, DWS, DWD, and ODS. However, in the new data Lakehouse paradigm of 3.x, we recommend that you do not layer it too finely. This is because, in scenarios with large amounts of data, StarRocks can directly query underlying ODS- and DWD-related tables. It can also meet the analytics needs of daily ad-hoc queries or scenarios with lower performance requirements for report queries. In such cases, it allows for the direct analysis of raw data without the need for pre-processing and enables adjustments based on actual business needs.

StarRocks 3.x and later versions support a materialized view management mechanism. With capabilities such as scheduled refreshes, materialized views can provide functionality similar to ETL jobs, so complex ETL and scheduling management tasks can be avoided. We do not recommend this approach where, as in traditional data warehouses, many layers produce many tasks and time-based scheduling is very complex. However, if you want to perform simple processing with a small number of layers, you can use materialized views with refresh capabilities to simplify complex ETL pipelines. StarRocks 3.x also supports loading data directly from the lake into internal tables. This can be achieved by creating materialized views on external catalog tables, which turns external data into internal tables for faster queries. This also covers data warehouse layering. Assuming the lake is the ODS layer, the ODS data can be written into internal tables through materialized views to form the DWD layer, and then further processed into warehouse layers, which can simply be divided into two layers. In short, the feature behind data modeling in the data warehouse scenario described above is the materialized view.
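
The lake-to-internal-table step can be sketched as a small helper that builds the DDL for an asynchronous materialized view with a periodic refresh. The statement follows the general shape of StarRocks asynchronous materialized views, but treat it as an assumption and check it against the documentation for your version; the helper, catalog, table, and column names are hypothetical.

```python
def scheduled_mv_ddl(mv_name, source_table, columns, bucket_key, interval_hours):
    """Build DDL for an async materialized view refreshed every `interval_hours` hours."""
    if bucket_key not in columns:
        raise ValueError("bucket_key must be one of the selected columns")
    column_list = ", ".join(columns)
    return (
        f"CREATE MATERIALIZED VIEW {mv_name}\n"
        f"DISTRIBUTED BY HASH({bucket_key})\n"
        f"REFRESH ASYNC EVERY (INTERVAL {interval_hours} HOUR)\n"
        f"AS SELECT {column_list} FROM {source_table};"
    )


# Hypothetical example: ODS data in the lake feeding a DWD-layer internal table.
ddl = scheduled_mv_ddl(
    mv_name="dwd_orders_mv",
    source_table="lake_catalog.ods.orders",
    columns=["order_id", "user_id", "amount"],
    bucket_key="order_id",
    interval_hours=2,
)
print(ddl)
```

Here the refresh interval plays the role that an external scheduler would play in a traditional ETL pipeline.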

The new data Lakehouse paradigm described by StarRocks refers to its ability to connect both lakes and warehouses, facilitating the fusion of these two systems through StarRocks. If there is a large lake or warehouse, vertical businesses can use StarRocks to quickly bridge these two types of systems. Or for small businesses, such as business data warehouses with a volume of less than 100 TB, you can even use StarRocks to build a new data Lakehouse system.

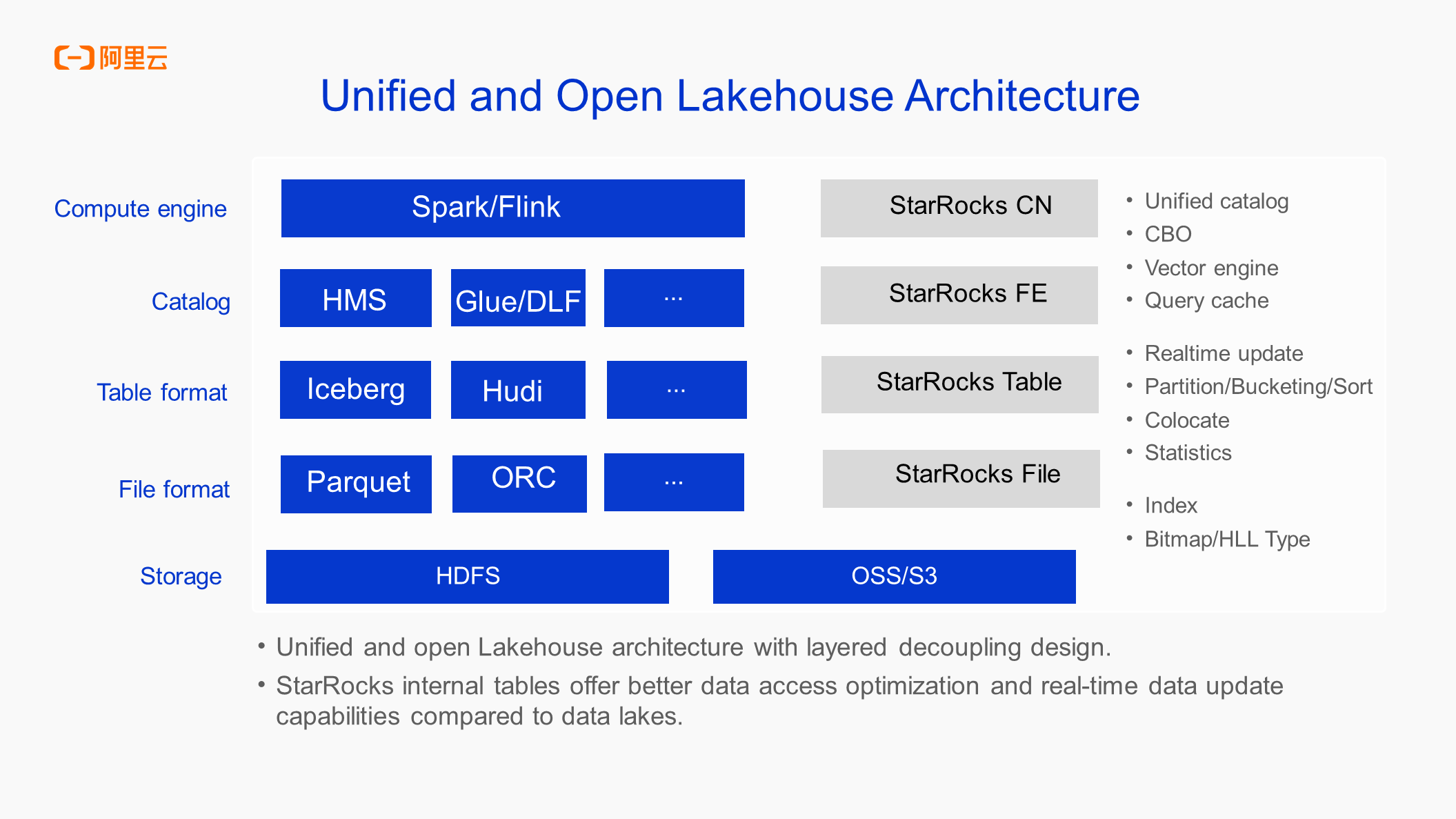

It is similar to the open-source Lakehouse architecture, as shown in the following figure:

Compute nodes are similar to open-source Spark and Flink and are responsible for overall data computing.

FE nodes are responsible for metadata and query plans, and are similar to DLF of Alibaba Cloud, Glue of AWS, or open-source HMS.

StarRocks internal tables have their own table format and file format, which remain internal and have not yet been opened up. These two can also be compared with their counterparts in open-source communities.

At the storage layer, you can use OSS or HDFS to build storage media for the entire lake.

StarRocks 3.x has launched the data Lakehouse, whose technical architecture is fully aligned with the open-source Lakehouse architecture. This process achieves complete decoupling between services, allowing for more flexible and on-demand resource allocation at each layer.

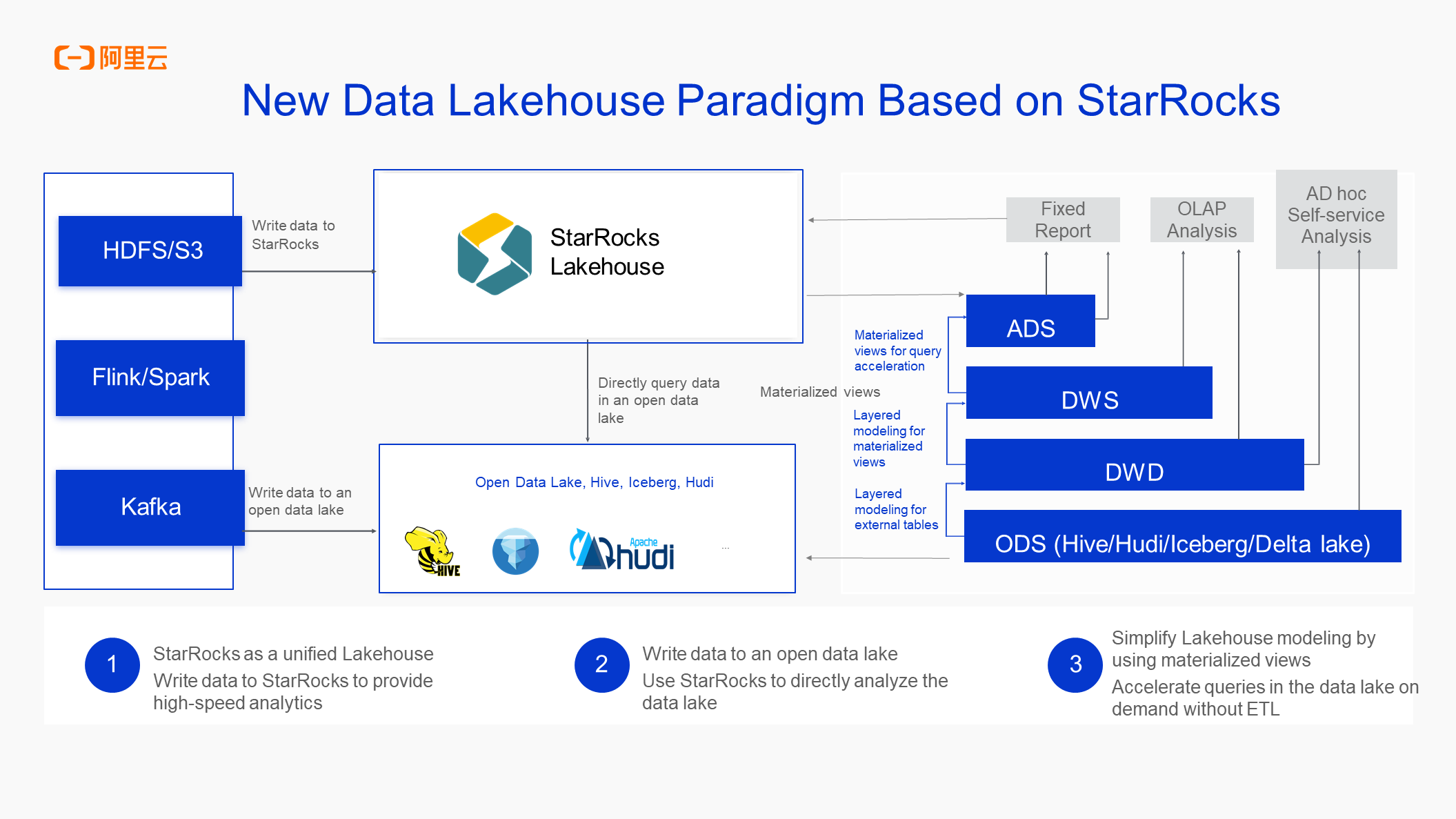

First, we can write data into StarRocks in real-time (including updates), directly use materialized views for pre-processing, and provide them to business systems for direct queries.

Second, we can write data to the lake in real-time (using the lake format). Data in the lake can be directly processed through materialized views and then written to StarRocks. You can also use StarRocks to process the data and write it back to the lake (this is a new feature and is still being iterated on).

Third, in offline scenarios, you can also use Spark or other data integration tools (DataWorks) to write data from the lake to StarRocks.

All these processes can be connected at present. Therefore, you can basically use StarRocks as the core computing engine of data Lakehouse to help users quickly analyze and process data.

The materialized view mechanism greatly simplifies the data Lakehouse modeling process, saves cumbersome ETL steps, and directly facilitates real-time analytics and efficient query of data lakes. StarRocks, with its powerful acceleration capabilities, can significantly enhance data processing and analytics speed in both data lake environments and traditional data warehouse scenarios. The new data Lakehouse paradigm seamlessly integrates data lakes and data warehouses, enabling direct data reading from lakes and building efficient data warehouses within StarRocks. This provides users with a one-stop, high-performance data Lakehouse fusion solution.

Whether analyzing lakes or warehouses (users may use open-source tools or Spark to build warehouses on HDFS, or store lakes in OSS), users previously used open-source engines like Trino/Impala for data analytics.

In StarRocks 3.x and later versions, many customers have migrated this part of their business to StarRocks, as expected, due to its significant benefits. The preceding figure shows the details. If you use Presto to query data, the performance is doubled. If you use external tables to query data, the performance is improved by three times. If you add a layer of local cache, the performance is improved by six times. If you want faster performance, you can write data from external tables to StarRocks internal tables, and the performance is improved by ten times. This performance improvement is an average value, and the improvement for a specific scenario will be greater than this value. This is the business scenario where StarRocks currently excels and shows significant performance improvement: the extreme performance of data Lakehouse analytics.

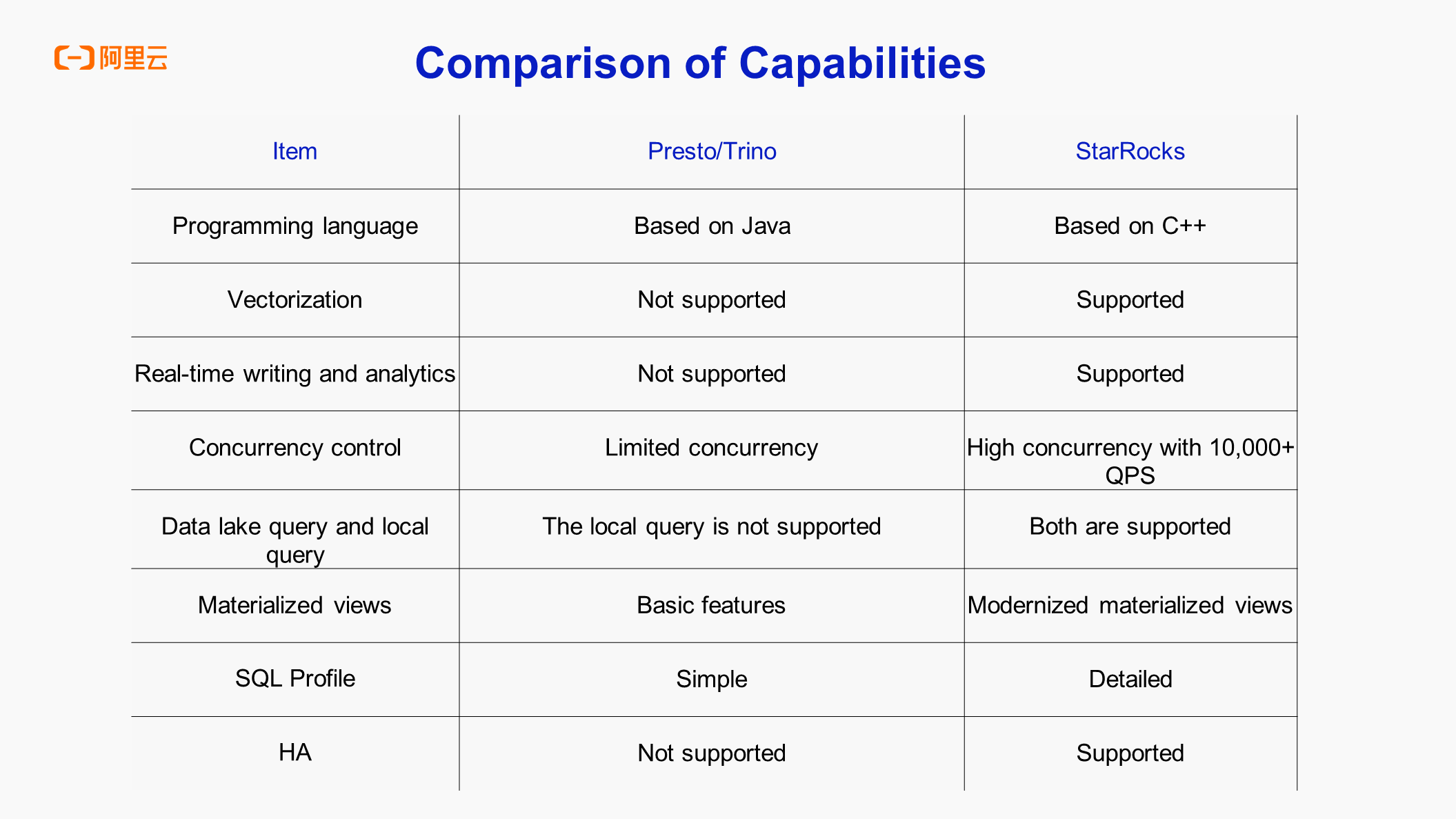

Compared with Presto/Trino, there are some obvious differences.

Therefore, users migrate their businesses from Presto and Trino to StarRocks to perform analytics in the lake. This is because in terms of capability, performance, as well as costs & benefits, StarRocks far surpasses Presto and Trino.

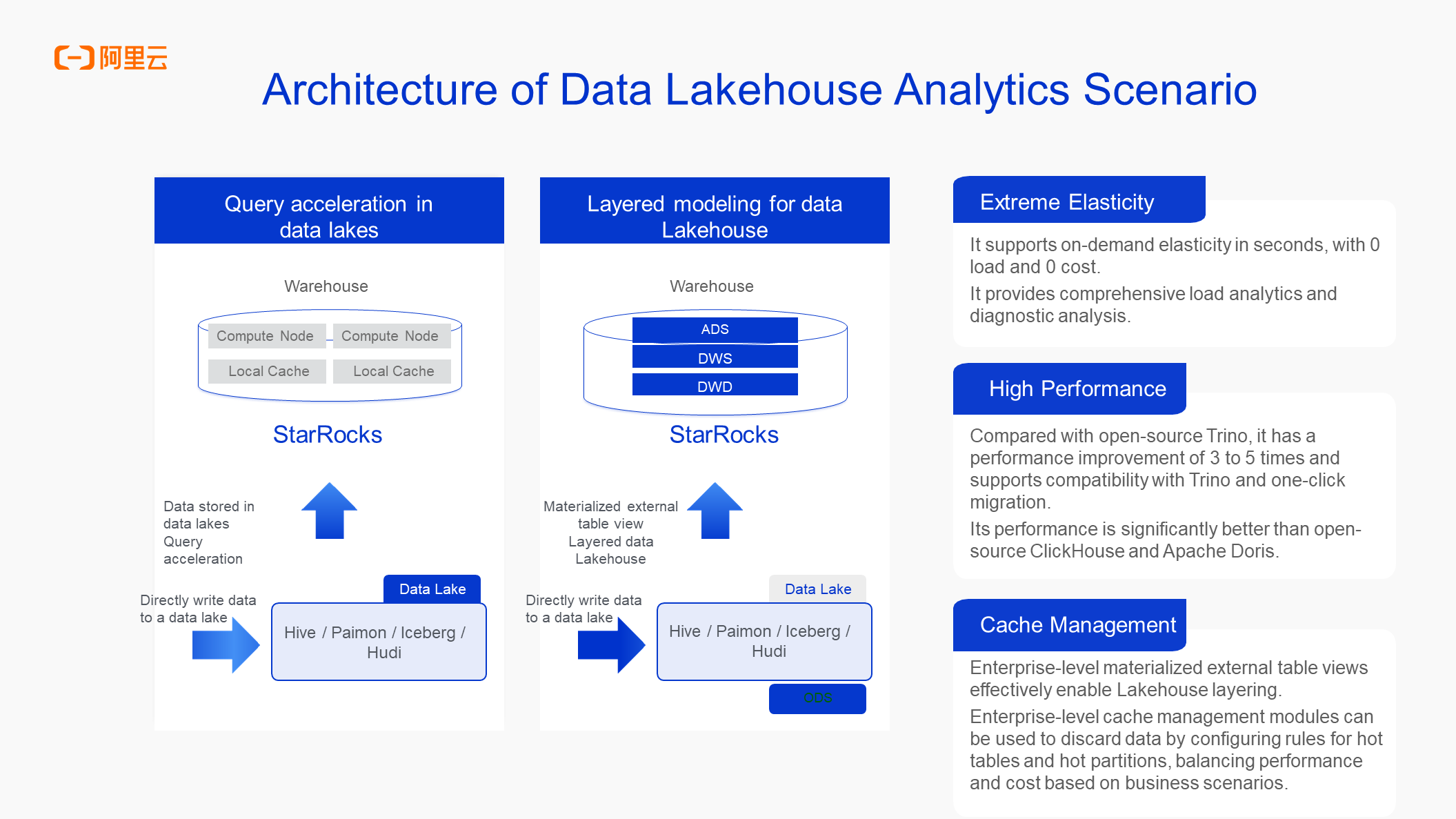

Looking at the overall architecture of the new data Lakehouse paradigm, there are two ways to use StarRocks in data Lakehouse analytics.

The first, as shown on the left side of the figure, is to use StarRocks for query acceleration in the data lake. In this approach, external tables are used to write data to the lake, and then StarRocks is used directly for reporting or analytics. This process is equivalent to using StarRocks to accelerate queries. Compared with Spark, the performance can be improved by about five times. Therefore, query acceleration in the data lake adopts direct querying.

The second, as shown on the right side of the figure, involves indirect querying. In this approach, data is first written to the lake, then processed by using materialized views, written into StarRocks internal tables, and finally queried. This query method improves the performance by about 10 times. In other words, the data lake and StarRocks are used to build and write data to the internal table which is then used for layered modeling of data warehousing.

If you use the elastic capabilities provided by E-MapReduce Serverless StarRocks, you can also achieve zero computing cost at zero load. In daily use, you can define elastic computing rules to dynamically allocate appropriate computing resources in real time based on actual usage. Comprehensive load diagnostics and SQL-level diagnostic capabilities will also be provided.

The ability to manage cache will be explained in subsequent sections, specifically focusing on the optimizations StarRocks has made in caching to assist users with external table queries and queries with shared-data clusters. The effectiveness of cache management directly impacts the final query performance, making it crucial in data Lakehouse analytics scenarios.

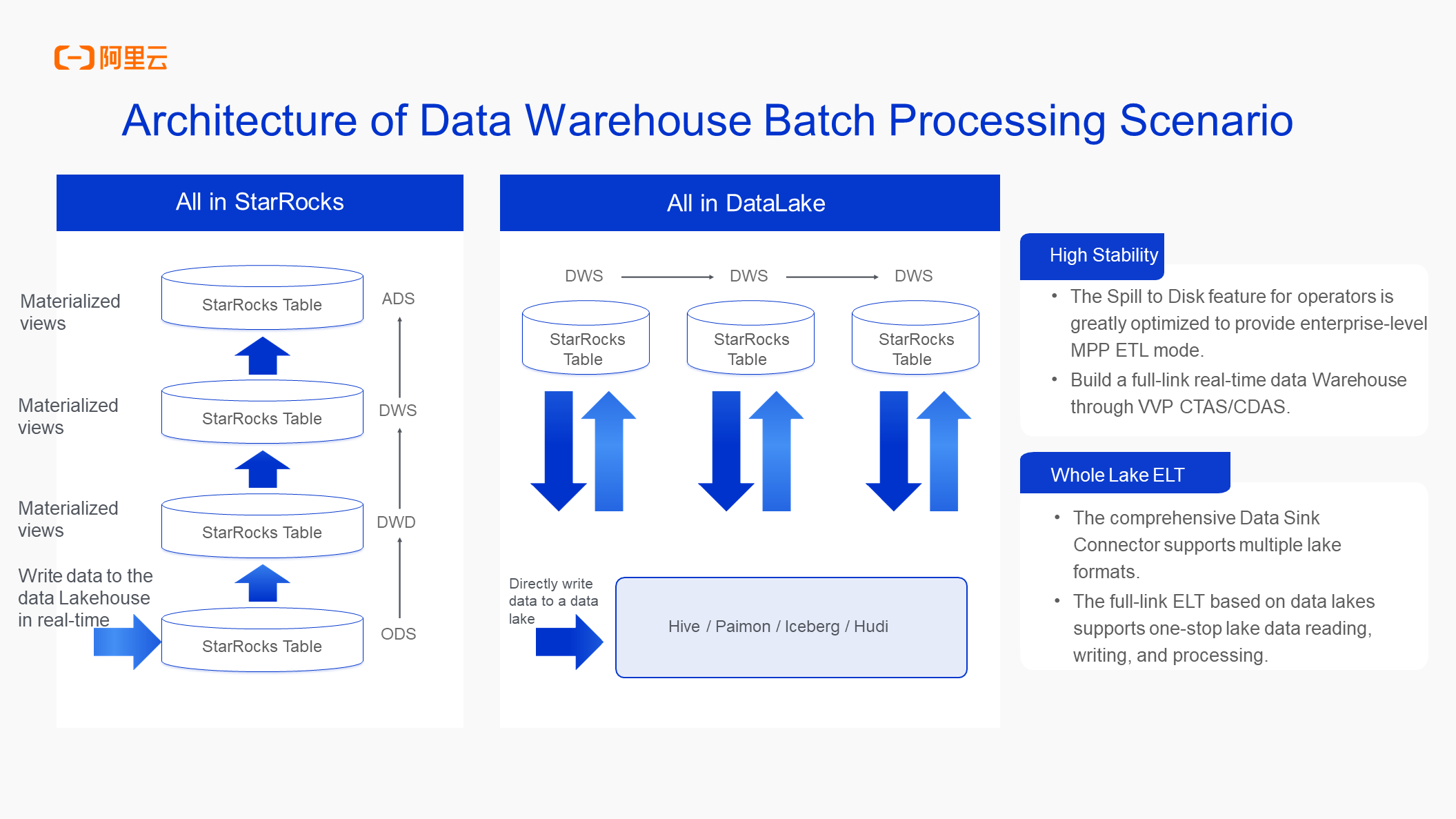

There are two main scenarios for building a data warehouse by using StarRocks.

One is All in StarRocks, where data is written directly into StarRocks internal tables from the source, and then upper-level layering and scheduling are performed through materialized views. The other is All in DataLake, as shown on the right side of the figure. This process is still incomplete, but it may become the more common scenario as development continues. This method first writes data to the lake, and then processes it through StarRocks materialized views, or through external INSERT statements and scheduling tools. After processing the data with StarRocks, you write it back to the lake in a format such as Paimon or Iceberg. Currently, the community supports writing back to Iceberg; Paimon and other lake formats will be supported in subsequent releases.

From version 3.x, StarRocks supports the Spill to Disk feature for operators, ensuring the stability of the entire ETL process.

All in StarRocks can be integrated with Flink VVP products to achieve CTAS/CDAS scenarios and enable full database synchronization from MySQL. It can also be used in real-time data integration scenarios with DataWorks for real-time writing, providing a smoother experience.

E-MapReduce Serverless StarRocks has been under development for nearly three years. Having evolved from a semi-managed to a fully managed service, it now offers several important features. It covers the scenarios of the open-source version, including OLAP multi-dimensional analysis, ad-hoc analysis, and data lake analysis. You can use it for high-concurrency point queries, real-time writes and queries, real-time data warehouses, simple small data warehouses, and data warehouses built on the lake.

The fully managed service has been optimized for high speed, for example in the QPS of primary key tables and point queries, and provides some performance improvements, mainly in shared-data scenarios. In addition, under default resource allocation and configuration, StarRocks outperforms open-source Doris by 2 to 3 times. This is also the reason why EMR chose StarRocks as its fully managed OLAP engine.

From a unified perspective, StarRocks is also optimized. This part focuses more on the content related to the lake format, such as the integration with Paimon, which makes the performance and capabilities of the lake format better. It also includes query rewrite and materialized views. Query rewrite can help users improve query performance and reduce O&M difficulties during data analytics.

Additionally, Alibaba Cloud has integrated usability and cloud-native features at the platform level. For example, deep integration with DataWorks allows data to be written to StarRocks in real-time by using DataWorks, which supports both offline batch writing and full database synchronization into StarRocks, thereby enabling data warehousing or lake integration. You can also use DataWorks to schedule small-scale data warehousing in StarRocks. Such integration can be directly available to users, without the need to build their own. The E-MapReduce StarRocks also provides import visualization, metadata visualization, permission visualization, data auditing, and out-of-the-box capabilities to reduce user O&M and usage costs. It also provides health diagnosis and analysis, such as cluster health reports, to help users better manage and use clusters. In addition, it supports SQL attribution analysis.

In terms of cloud-native capabilities, out-of-the-box availability, minute-level delivery, and scaling performance are fully guaranteed. It also supports Multi-Warehouse and elastic scaling (to be launched later). Cache management provides observability, with manageability planned for the future, to help users better manage cache-related content in shared-data and data lake analytics scenarios.

The fully managed service is O&M-free and SLA-guaranteed. This feature is fully managed by Alibaba Cloud.

As shown in the preceding figure, the underlying layer of E-MapReduce StarRocks can use OSS or cloud disk storage. Regardless of the storage method, you can use open-source lake formats or StarRocks internal tables. You can then use StarRocks for the corresponding analytics and combine it with Warehouse for resource isolation and resource elasticity. E-MapReduce Serverless StarRocks also offers various product capabilities, such as an out-of-the-box SQL Editor, import tasks, permission management, diagnostics and auditing, as well as basic instance management, configuration management, and monitoring and alerting. Additionally, it addresses a major pain point of StarRocks, upgrades: fully automatic upgrades can be performed with a single click, ensuring seamless upgrades.

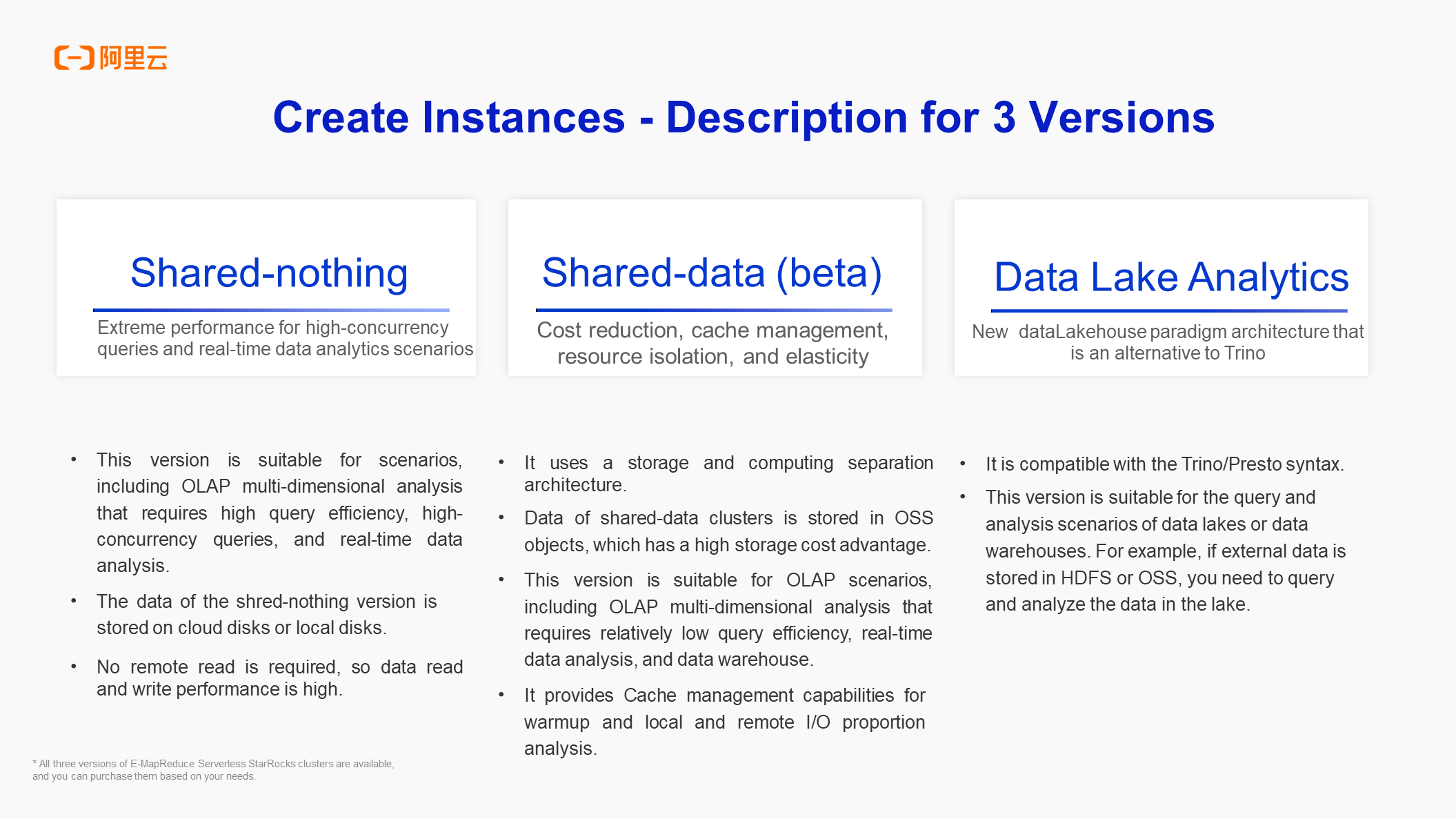

In actual use of E-MapReduce Serverless StarRocks, there may be certain challenges, as it has three versions. These three versions include shared-nothing, shared-data, and data lake analytics, which are suitable for different scenarios.

The shared-nothing version is suitable for OLAP scenarios that require high query performance, such as consumer-facing (to-C) scenarios that require millisecond-level responses. However, elasticity and Warehouse resource isolation are unavailable in this version. Its advantage lies in its extreme performance.

The shared-data version is still in the beta stage, but its capabilities are essentially stable. It adopts the StarRocks 3.x architecture. If you want to build a simple small data warehouse or produce internal reports (where response times of seconds or longer are acceptable), you can use the shared-data version. It provides elasticity, Warehouse, and cache management for local and remote I/O analysis reports.

The data lake analytics version can be used in Trino/Presto scenarios and can only use external table-related capabilities. However, its management capabilities are generally consistent with the shared-data version, including support for elasticity and Multi-Warehouse.

Therefore, you need to choose a version based on your business when creating a StarRocks cluster.
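
The selection logic described above can be summarized as a small decision helper. This is a simplification for illustration only; the function and parameter names are hypothetical, and a real choice should also weigh cost, data volume, and the release status of each version.

```python
def choose_edition(needs_millisecond_latency, uses_internal_tables):
    """Map coarse requirements to one of the three versions described above."""
    if needs_millisecond_latency:
        # Extreme performance, but no elasticity or Warehouse isolation.
        return "shared-nothing"
    if uses_internal_tables:
        # Small data warehouses and internal reports with second-level latency.
        return "shared-data"
    # External tables only, for example when replacing Trino or Presto.
    return "data lake analytics"


print(choose_edition(needs_millisecond_latency=True, uses_internal_tables=True))
print(choose_edition(needs_millisecond_latency=False, uses_internal_tables=True))
print(choose_edition(needs_millisecond_latency=False, uses_internal_tables=False))
```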

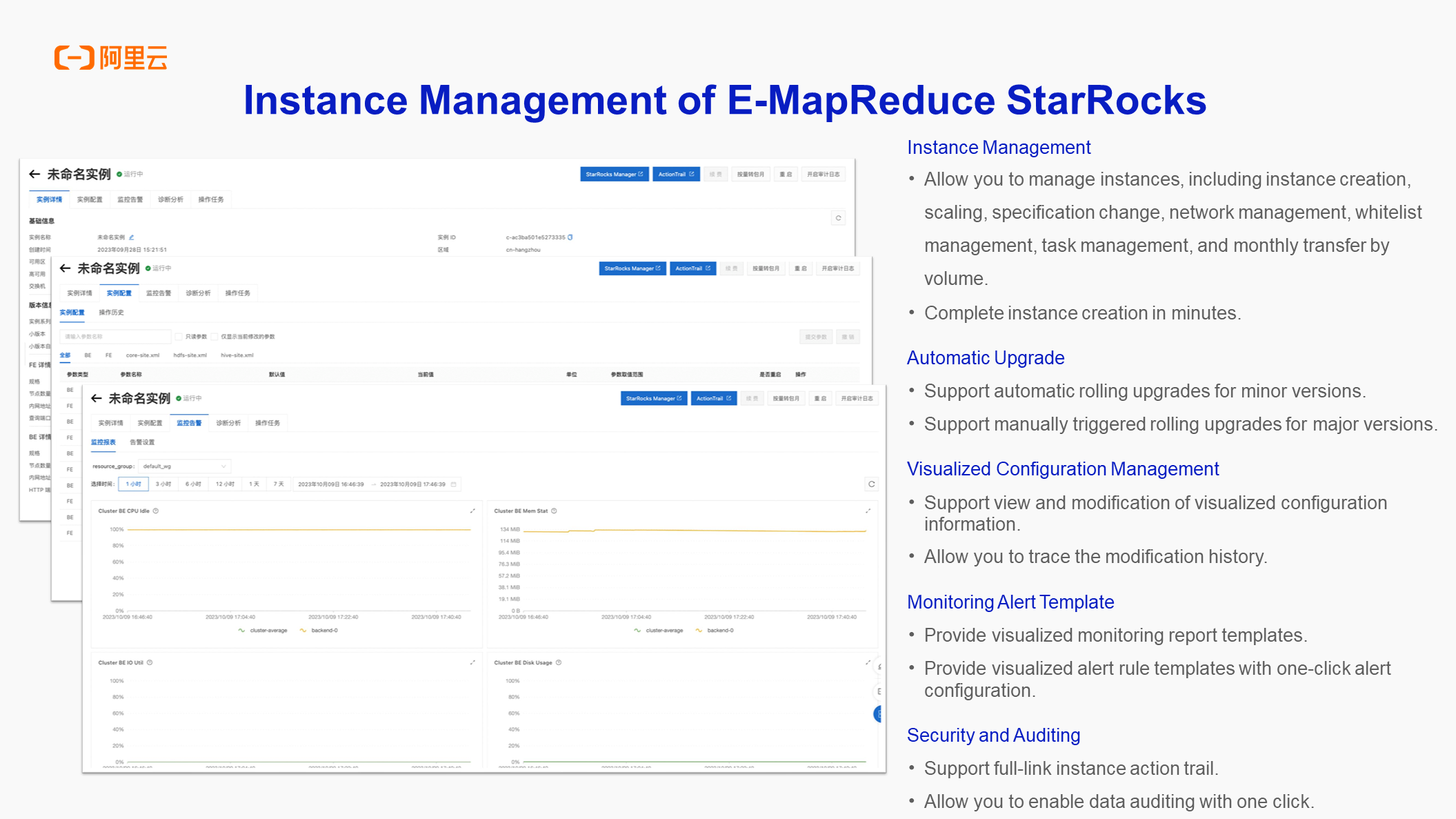

Basic instance management is available directly on the console.

It includes instance scaling configurations, cloud disk scaling configurations, blacklists and whitelists, public network access, and automatic upgrades. Additionally, the console offers visual configuration management, monitoring and alerting (with related chart alert templates), and auditing (console operation auditing and data auditing, which can be queried in the StarRocks audit log table after you enable audit logs with one click).

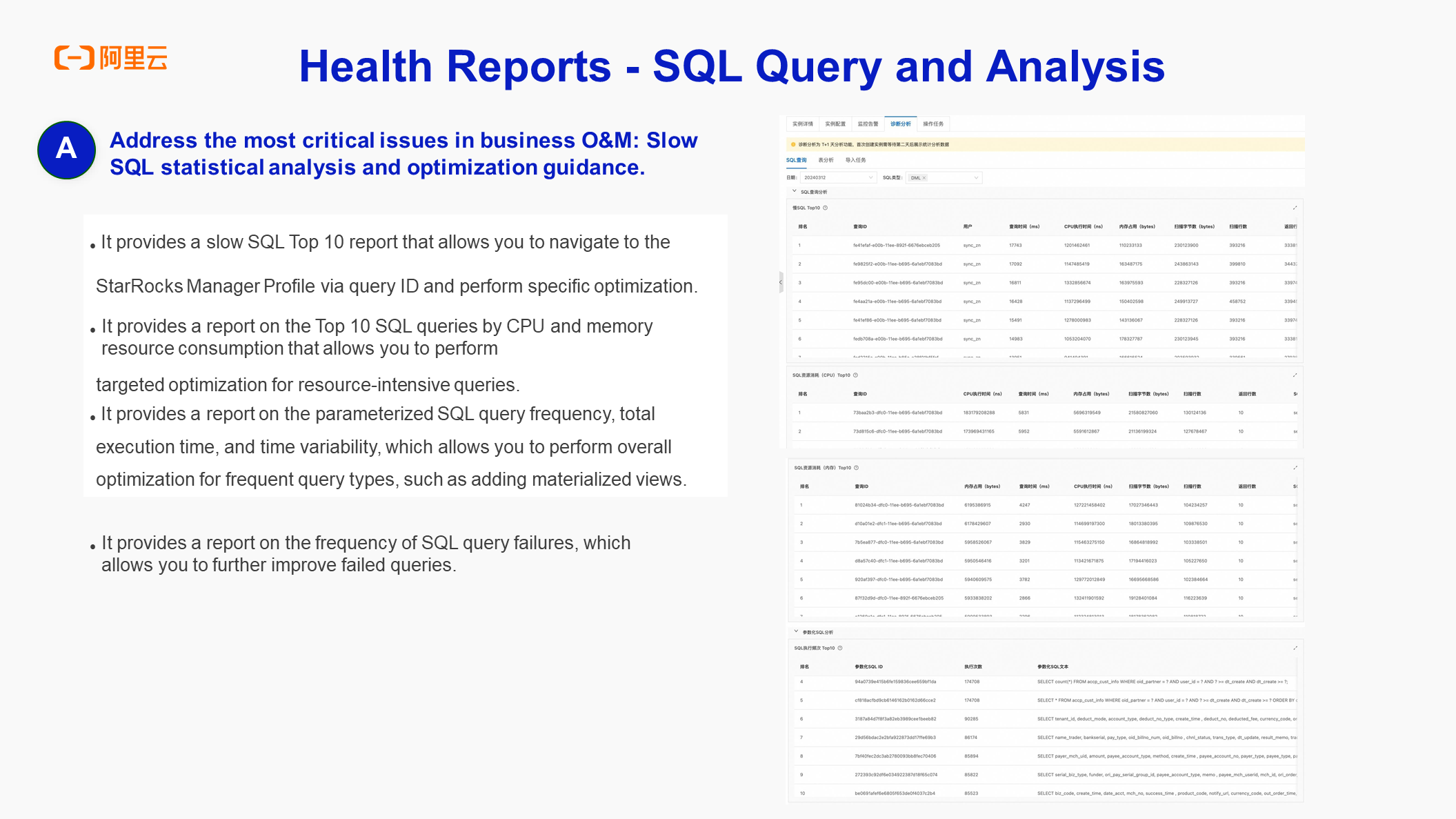

With SQL-related health reports, you can troubleshoot slow SQL statements or SQL statements that occupy a large amount of resources.

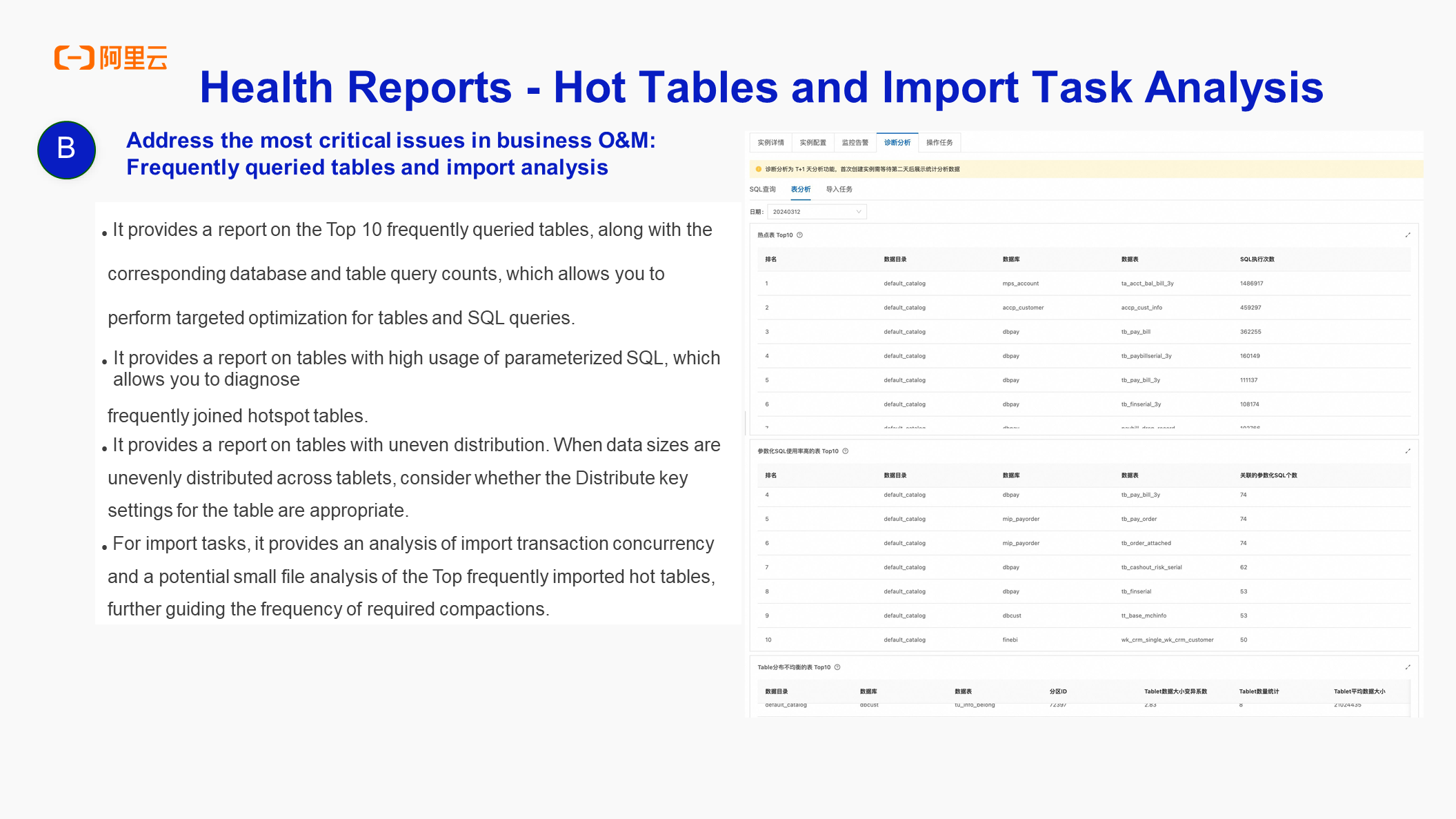

The hot table and import task analysis will examine frequently used tables or those with many small files, import tasks that consume significant resources, or data that do not meet expectations. These analyses can be found in the health report to assist you in instance governance or data governance.

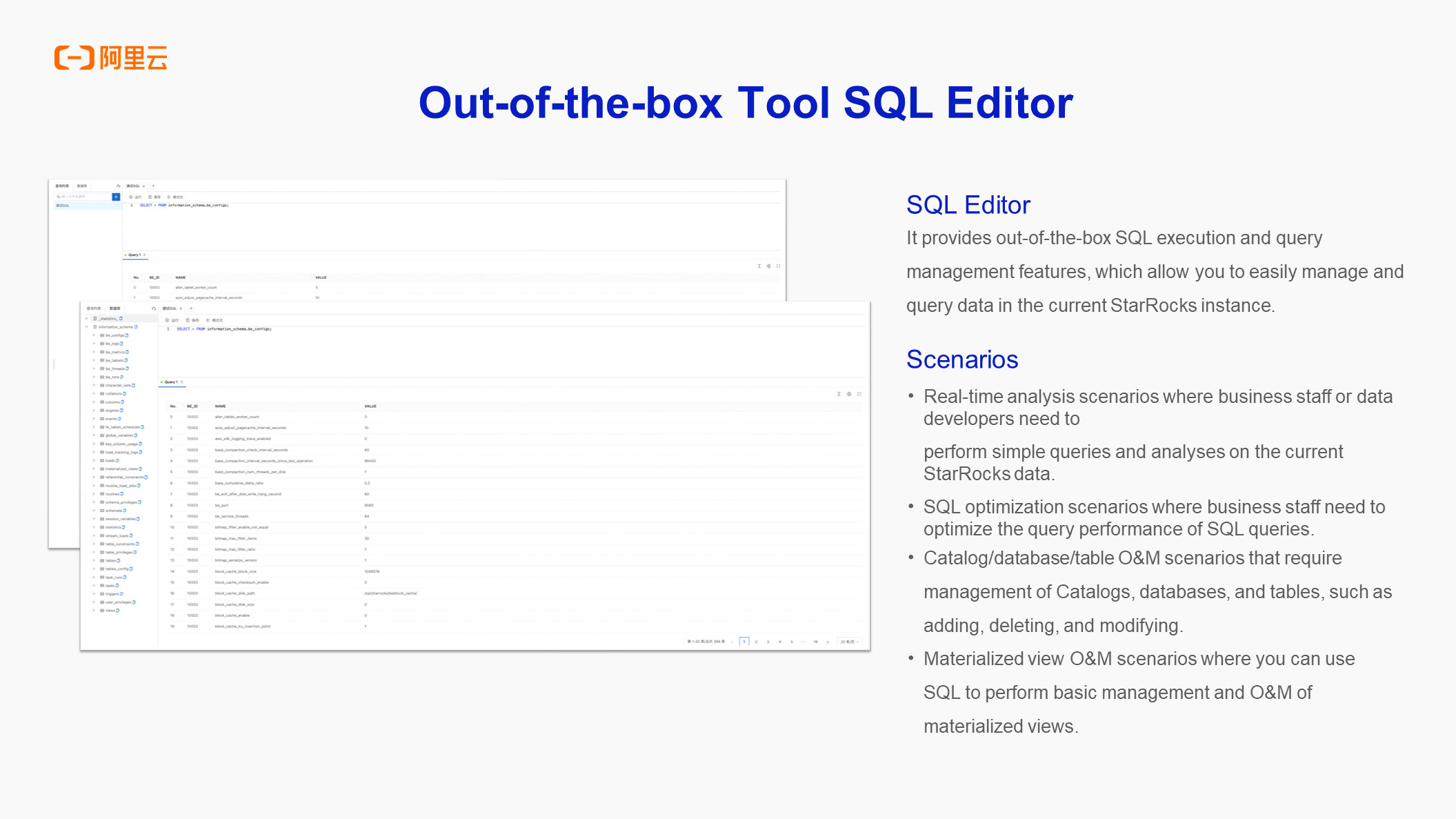

In the E-MapReduce StarRocks Manager, you can see the SQL Editor immediately after connecting to the instance upon creation. This makes it convenient to write SQL, perform ad-hoc queries, or develop and debug. SQL Editor can solve these daily O&M scenarios.

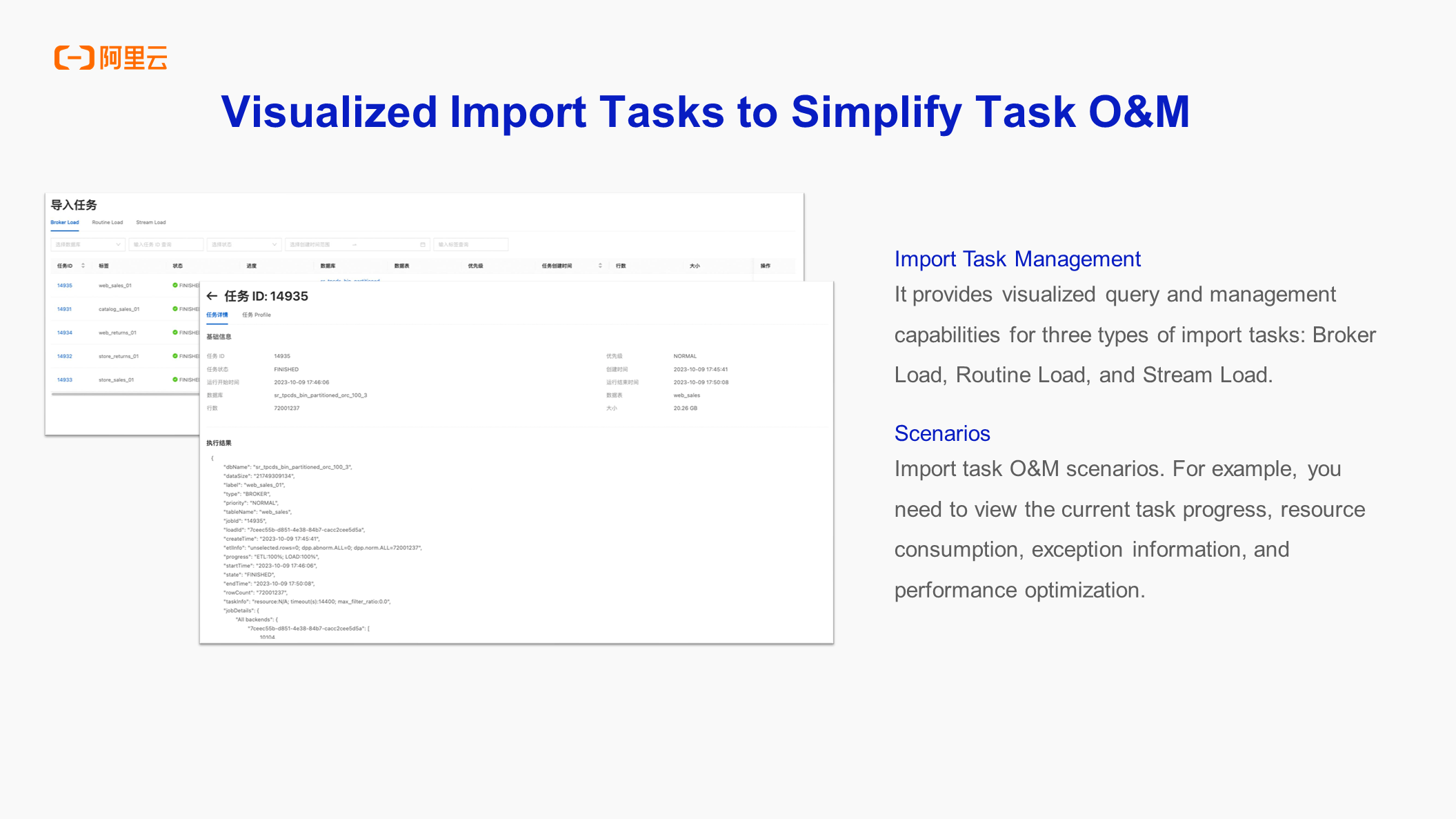

With visualized import tasks, all information about import tasks, whether Broker Load, Routine Load, or Stream Load, can be queried in the import tasks of the E-MapReduce StarRocks Manager, along with error information.

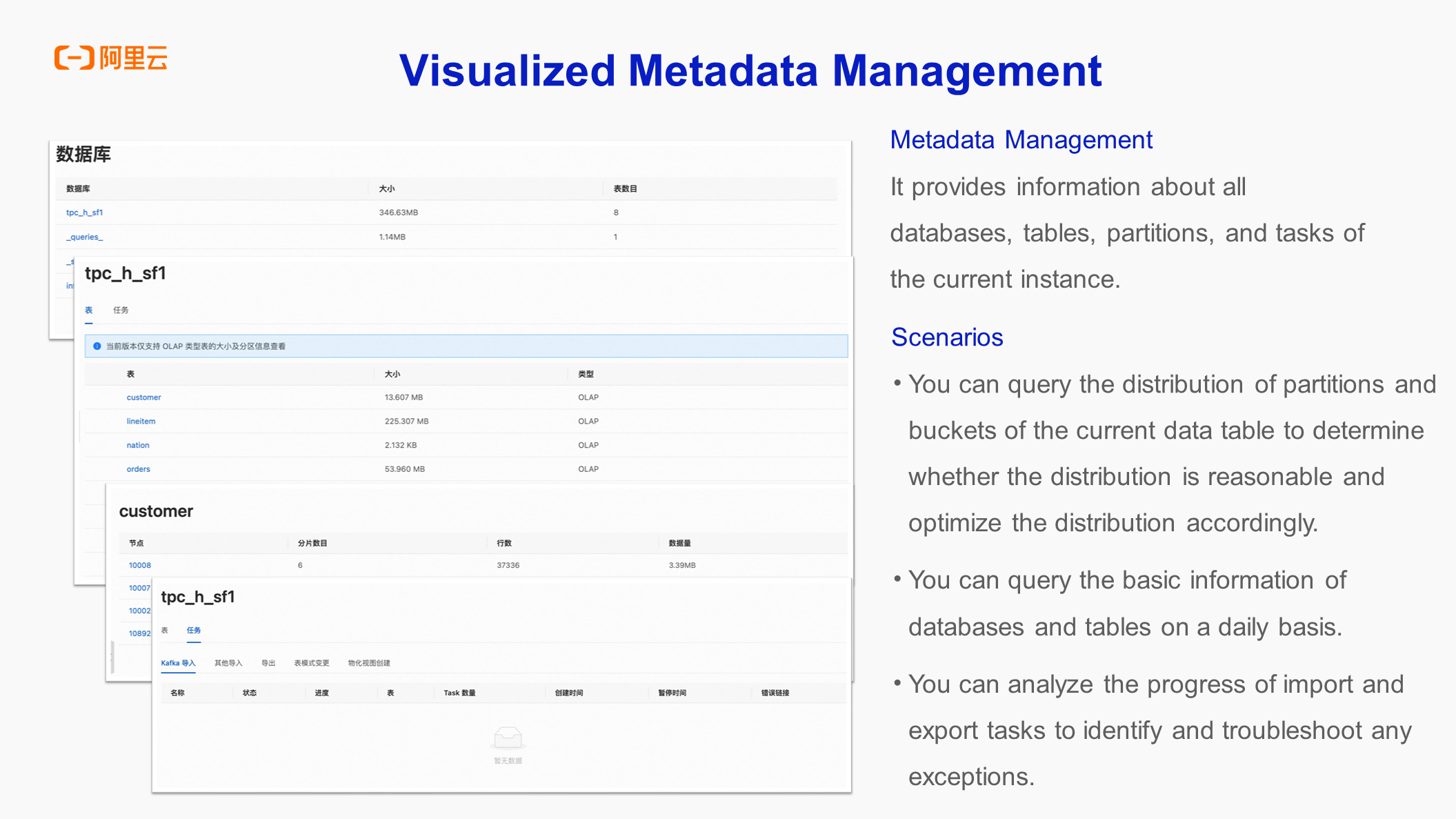

You can also see information about the metadata in the E-MapReduce StarRocks Manager, including the distribution of caches as well as partitions and buckets of storage.

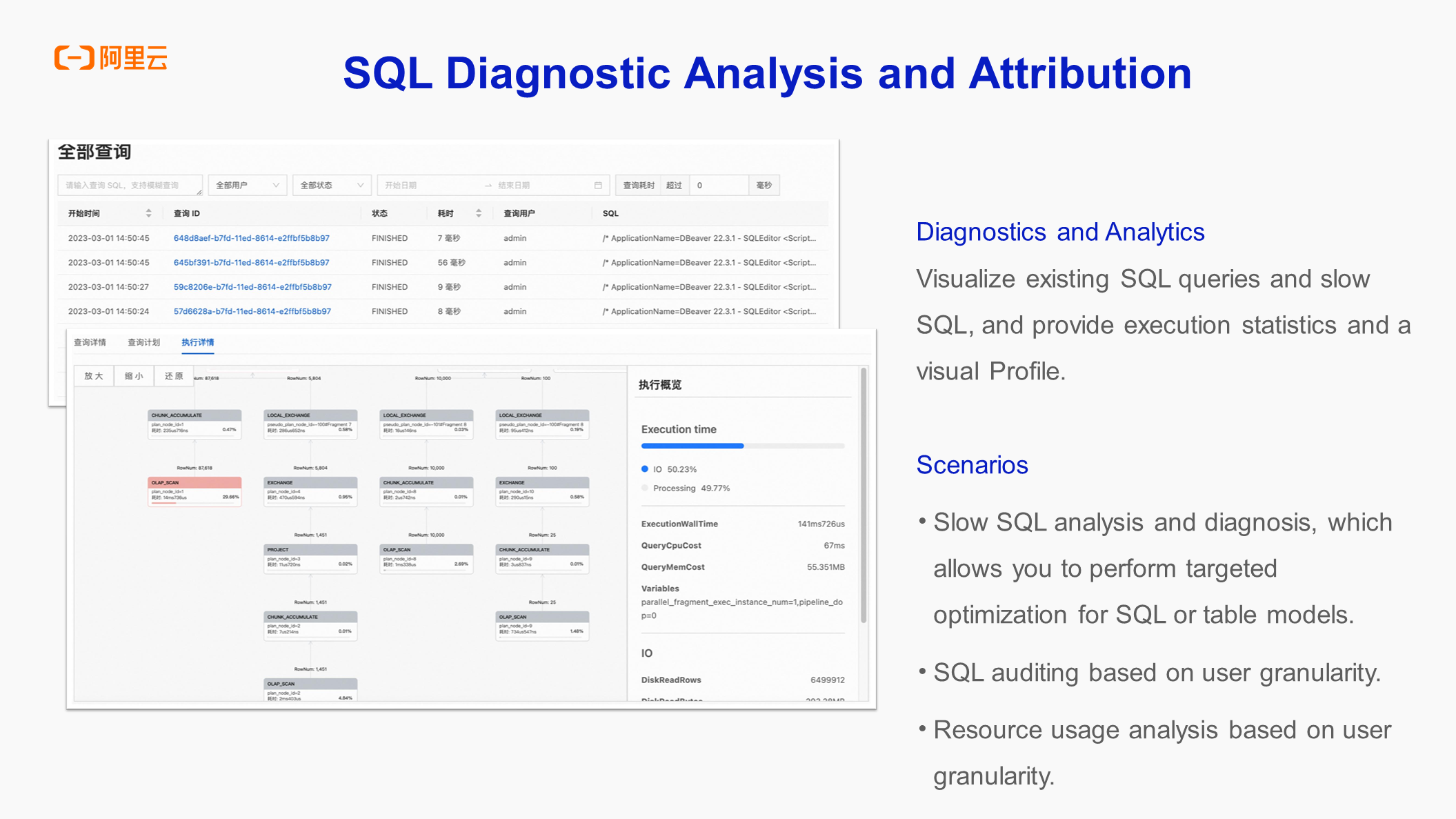

The SQL profile is provided, which allows you to query slow SQL statements, as well as the causes and processing methods of slow queries in a visualized manner.

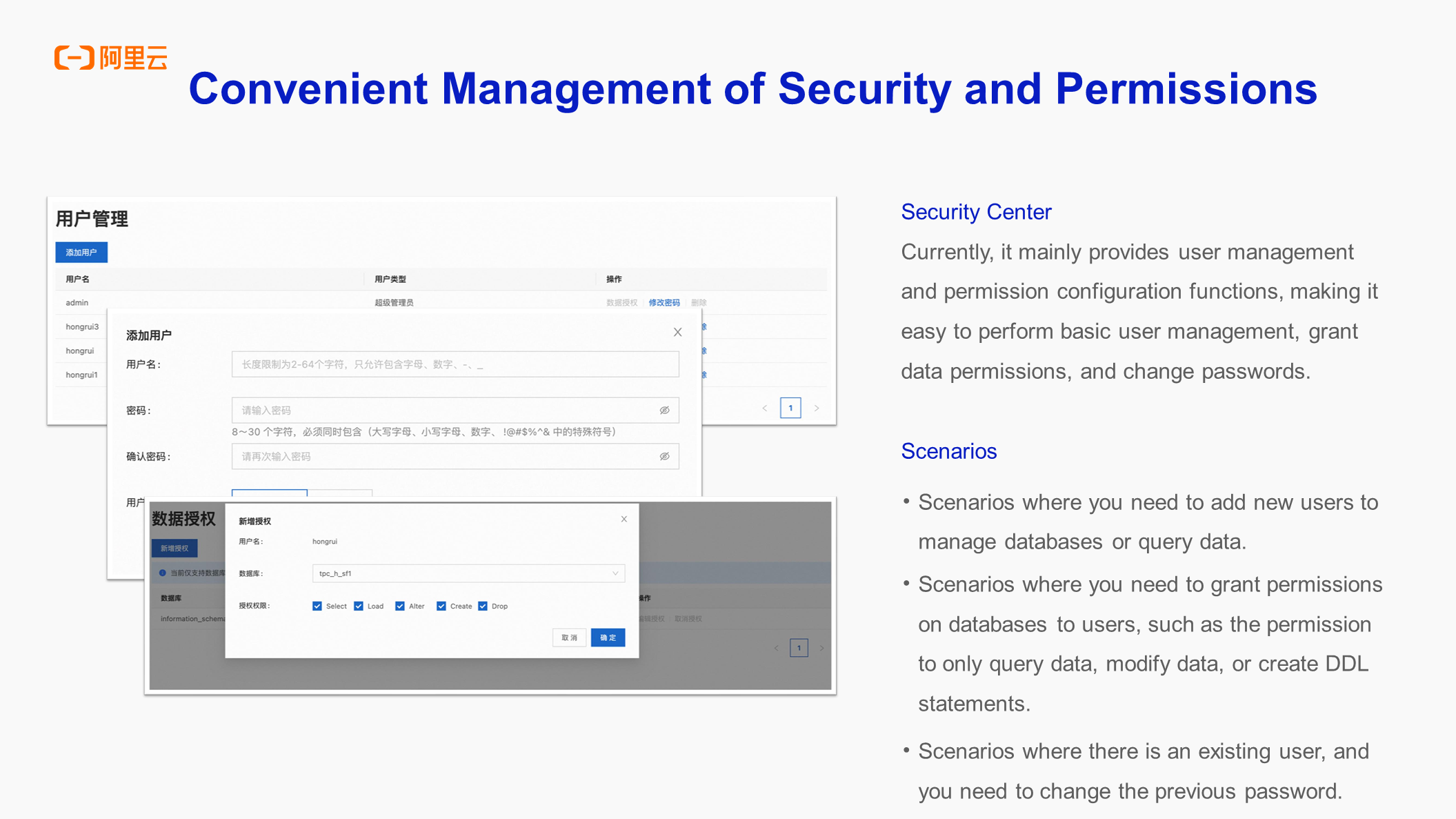

Visualized management capabilities for permissions are provided. You can also use open-source commands to perform O&M.
