By Meng Shuo, Alibaba Cloud Intelligence Product Expert

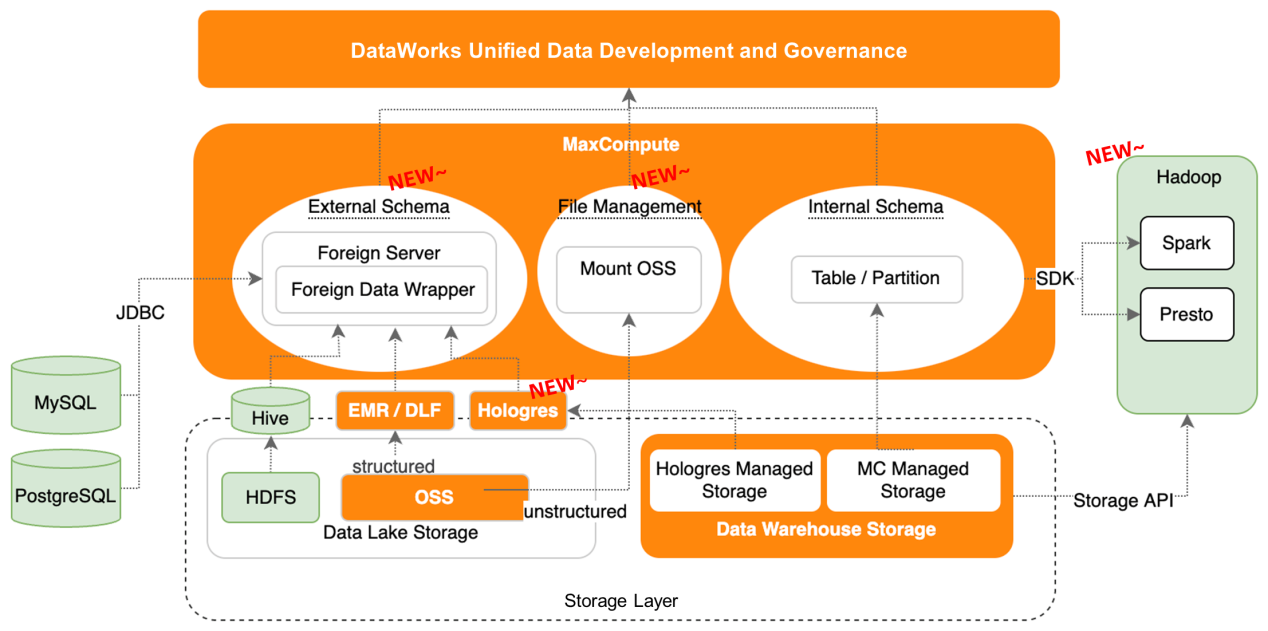

The Lakehouse architecture of the MaxCompute-based cloud data warehouse was upgraded recently. If you are familiar with MaxCompute, you may know that it used a two-tier structure: you first create a Project and then create tables and resources in that Project. Traditional databases have a schema layer between the database and the table, and some customers migrating from a database to MaxCompute need that layer. With this architecture upgrade, MaxCompute moves to a three-tier model: Project → schema → Table.
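The three-tier naming model can be illustrated with a small Python sketch. It is not MaxCompute code: the `resolve` helper, the `TableRef` type, the `"default"` schema name, and the rule that a two-part name is read as `project.table` are all assumptions made for illustration.

```python
from typing import NamedTuple

# Assumption: placeholder name for the schema used when none is given.
DEFAULT_SCHEMA = "default"


class TableRef(NamedTuple):
    """A fully qualified table reference: Project -> schema -> Table."""
    project: str
    schema: str
    table: str


def resolve(name: str, current_project: str) -> TableRef:
    """Resolve a dotted name against the three-tier hierarchy (hypothetical rules)."""
    parts = name.split(".")
    if any(not part for part in parts):
        raise ValueError(f"empty name component in {name!r}")
    if len(parts) == 1:
        # Bare table name: current project, default schema.
        return TableRef(current_project, DEFAULT_SCHEMA, parts[0])
    if len(parts) == 2:
        # Assumed legacy two-tier form: project.table.
        return TableRef(parts[0], DEFAULT_SCHEMA, parts[1])
    if len(parts) == 3:
        # Three-tier form: project.schema.table.
        return TableRef(parts[0], parts[1], parts[2])
    raise ValueError(f"too many name components in {name!r}")
```

For example, `resolve("sales.crm.orders", "sales")` yields a reference with project `sales`, schema `crm`, and table `orders`, while older two-part names keep resolving through the default schema.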

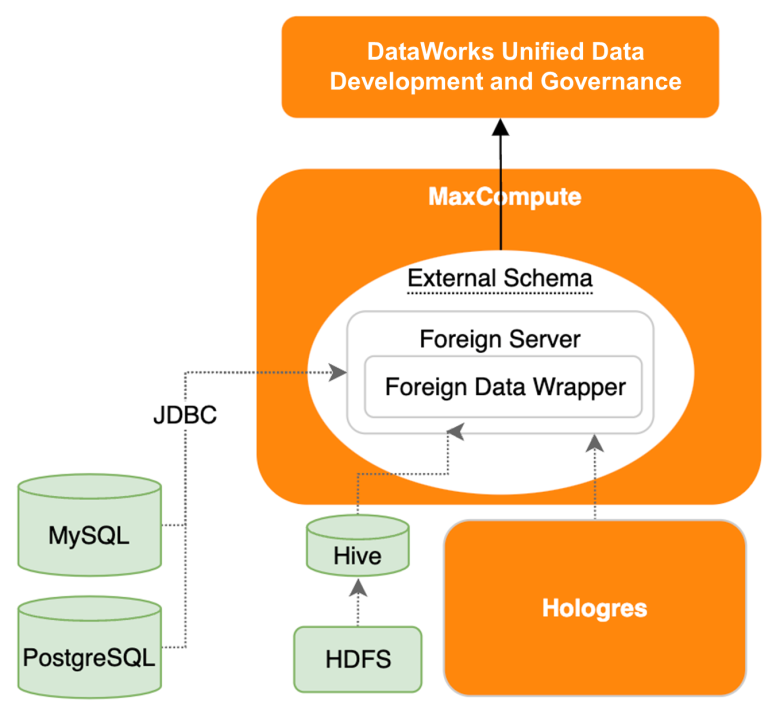

MaxCompute serves as the warehouse in the Lakehouse architecture and uses external schemas to connect to external data sources. An external data source is represented as a Foreign Server, which holds the connection information of that data source in the Foreign Data Wrapper. The earliest supported data source was Hive in the Hadoop ecosystem, where the Hive Metastore is used to read and write HDFS data. MaxCompute can also connect to Data Lake Formation (DLF), Alibaba Cloud's data lake product. DLF scans OSS files to provide unified metadata management and permission management. Through a Foreign Server, MaxCompute connects to DLF metadata and processes file structures in OSS. This way, you can connect to both OSS data lakes on the cloud and HDFS data lakes in the open-source Hadoop ecosystem. MaxCompute also supports data warehouses and databases in the Alibaba Cloud ecosystem, such as Hologres, ApsaraDB RDS, and AnalyticDB. External databases are connected through the JDBC protocol, while internal ecosystem products such as Hologres can be read directly at the storage layer, which is faster than JDBC.
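The relationship between external schemas, Foreign Servers, and access paths described above can be modeled roughly as follows. This is a conceptual sketch only: the class names, the `source_type` strings, and the lookup table are hypothetical and do not reflect the MaxCompute API.

```python
from dataclasses import dataclass
from enum import Enum


class AccessPath(Enum):
    HIVE_METASTORE = "hive_metastore"  # HDFS data lake, via the Hive Metastore
    DLF = "dlf"                        # OSS data lake, via DLF metadata
    DIRECT_STORAGE = "direct_storage"  # internal products such as Hologres
    JDBC = "jdbc"                      # external databases


@dataclass(frozen=True)
class ForeignServer:
    """Hypothetical stand-in for a Foreign Server definition."""
    name: str
    source_type: str


@dataclass(frozen=True)
class ExternalSchema:
    """Hypothetical external schema that points at one Foreign Server."""
    name: str
    server: ForeignServer


# Assumption: mapping taken from the prose above; anything else falls back to JDBC.
_ACCESS_BY_SOURCE = {
    "hive": AccessPath.HIVE_METASTORE,
    "oss": AccessPath.DLF,
    "hologres": AccessPath.DIRECT_STORAGE,
}


def access_path(schema: ExternalSchema) -> AccessPath:
    """Pick the access path for an external schema based on its source type."""
    return _ACCESS_BY_SOURCE.get(schema.server.source_type.lower(), AccessPath.JDBC)
```

A schema backed by a `hologres` server resolves to `AccessPath.DIRECT_STORAGE`, while one backed by a `mysql` server falls back to `AccessPath.JDBC`.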

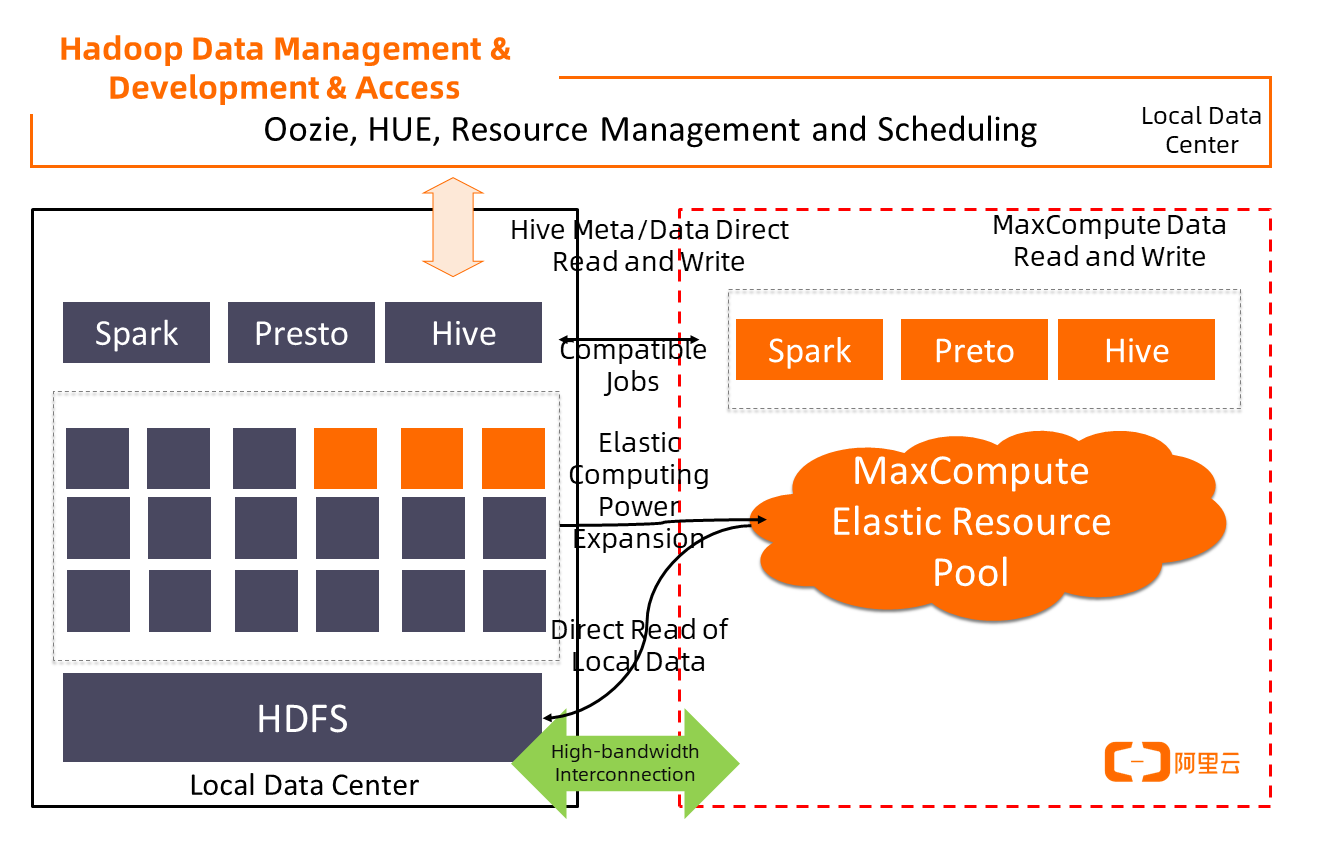

For unstructured data in OSS, you can mount an OSS path so that the data is promoted to objects managed by MaxCompute. You can then use the MaxCompute permission system to authorize access to the objects under the mounted OSS path, and use engines such as MaxCompute Spark ML and MaxCompute AI to process the files in OSS. If a traditional Hadoop data platform wants to expand its computing power on the cloud without migrating entirely, it can call cloud computing power through the SDK from Hadoop. It can also import data from the cloud, so that the cloud acts as an extension of the on-premises Hadoop cluster's computing power.

In summary, internal storage connects Alibaba Cloud Hologres and MaxCompute (both use the Pangu file system and the traditional method). At the same time, a schema layer is added between Project and Table to connect to the entire database ecosystem. For data lake storage, HDFS can be mapped to a MaxCompute external schema through the Hive Metastore, and OSS can be mapped to a Foreign Server and Foreign Data Wrapper through Data Lake Formation (DLF). This maps external sources to schemas inside MaxCompute and supports Alibaba Cloud Hologres and external database ecosystems. On-premises Hadoop clusters can also call on the computing power of MaxCompute as an expansion of their own. The complex underlying structure can be managed using DataWorks, a unified data development and governance platform.

We can summarize three points:

MaxCompute external schemas can map to MySQL and Hologres databases and schemas. Direct storage reads against Hologres improve read and write efficiency. You do not need to migrate data from ApsaraDB RDS to MaxCompute. Instead, you can map the external data source and run federated queries in MaxCompute across the local data warehouse and external data sources.

MaxCompute uses a mounted OSS path to bring structured files and unstructured image, audio, and video files stored in OSS under the data warehouse permission system. OSS itself performs coarse-grained permission management on files. Once OSS data is promoted to an object in MaxCompute, MaxCompute ACLs provide fine-grained control over whether each user can access particular files in OSS. You can then use the Spark engine in MaxCompute or Platform of Artificial Intelligence to process data in structured, semi-structured, and unstructured file formats.

When an IDC cluster or an on-premises Hadoop cluster needs to scale out, or when a line of business iterates quickly and you want fast trial and error, you can place the required computing power in the Serverless cloud data warehouse service without adjusting cluster resources. This enables rapid business iteration and seamless expansion of existing resources.
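The second point, fine-grained ACL control over files under a mounted OSS path, can be pictured with the sketch below. It only illustrates per-user, per-path checks. The `MountedOssAcl` class, its methods, and the glob-style patterns are hypothetical and are not how MaxCompute implements ACLs.

```python
from collections import defaultdict
from fnmatch import fnmatchcase


class MountedOssAcl:
    """Hypothetical per-user read grants on paths under a mounted OSS location."""

    def __init__(self) -> None:
        # user -> set of glob-style path patterns the user may read
        self._grants = defaultdict(set)

    def grant(self, user: str, pattern: str) -> None:
        """Allow `user` to read every path matching `pattern`."""
        self._grants[user].add(pattern)

    def revoke(self, user: str, pattern: str) -> None:
        """Remove a previously granted pattern, if present."""
        self._grants[user].discard(pattern)

    def can_read(self, user: str, path: str) -> bool:
        """Return True if any pattern granted to `user` matches `path`."""
        return any(fnmatchcase(path, p) for p in self._grants.get(user, ()))
```

With `acl.grant("alice", "images/*")`, `alice` can read `images/cat.png` but not `audio/call.wav`, and a user with no grants cannot read anything.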

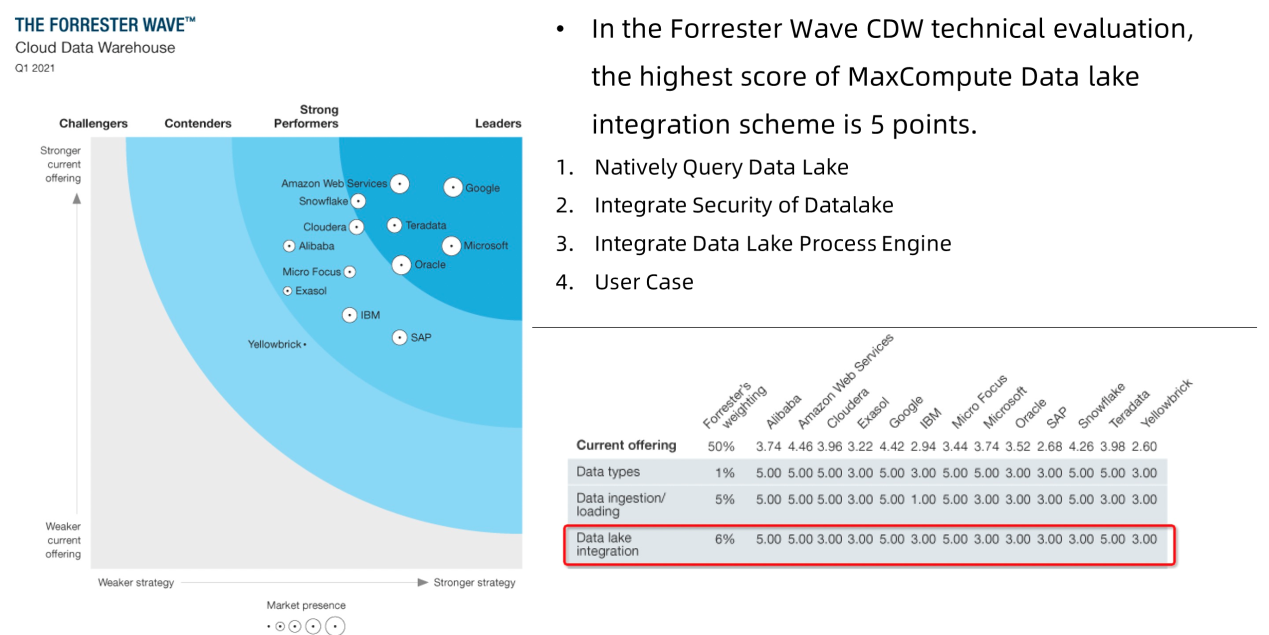

The Forrester Wave CDW technology evaluation is mainly carried out from four aspects.

Based on the evaluation of these four aspects, the combination of MaxCompute and DataWorks achieved the highest score in the data lake integration solution.

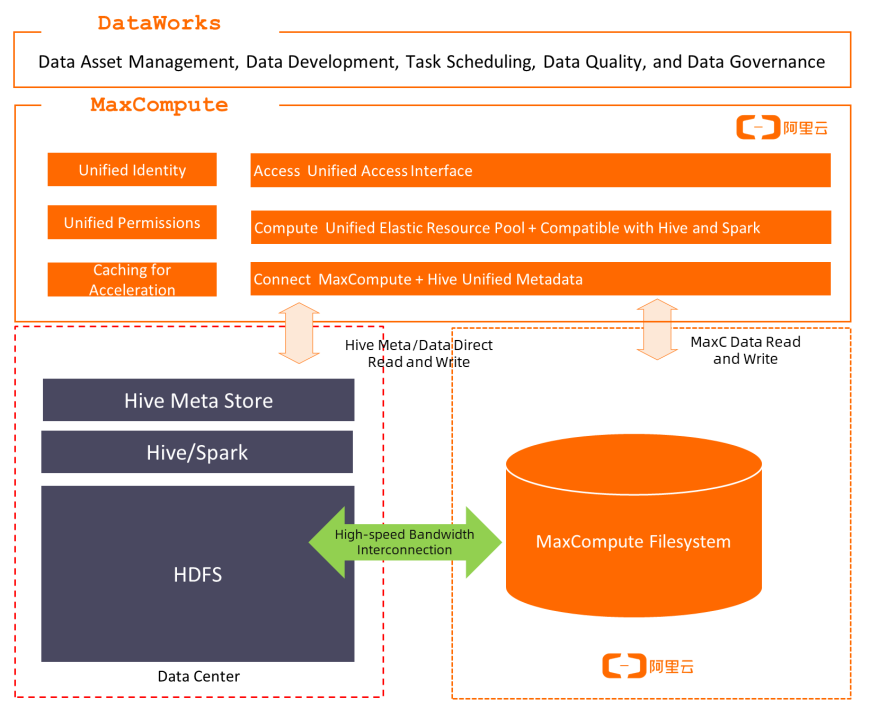

Customers do not want to migrate all of their data to the cloud. They want to keep some data under their own control while using the cloud for part of their computing needs. Previously, data was imported and exported through ETL every day and then processed with MaxCompute, a large-scale distributed engine on the cloud. Running that many ETL jobs every day consumes a lot of time and labor. With the Lakehouse, the underlying layer links metadata between the cloud and the on-premises environment, so the cloud directly consumes on-premises data and returns the data over a high-speed network interconnection.

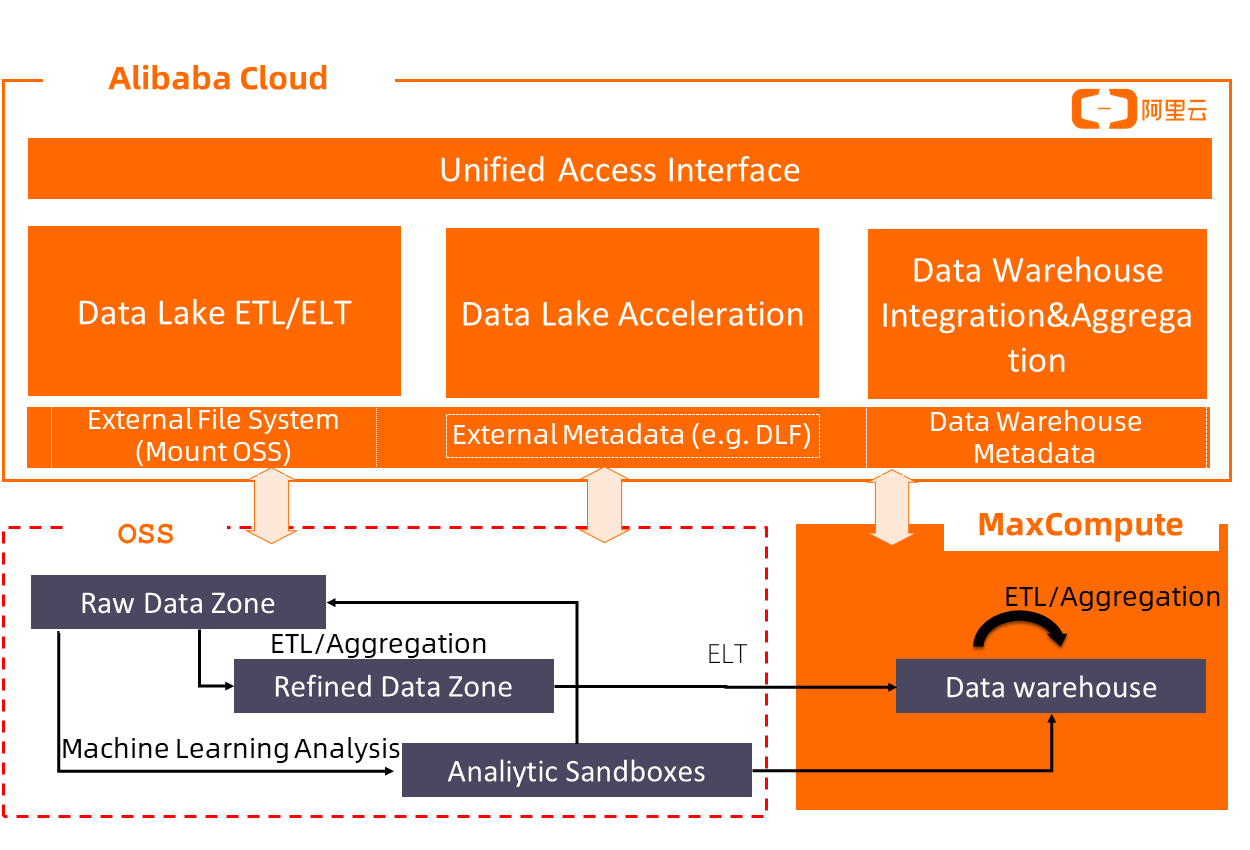

Data storage on the cloud has become a de facto data lake, containing offline, real-time, structured, semi-structured, and unstructured data. DLF, an external metadata management service, scans files on OSS to manage OSS data in a unified way. Data that can be schematized is exposed as databases and tables, and data that cannot is managed by file location. You can use DLF to manage metadata and connect data warehouses and engines, including Spark and Presto on Alibaba Cloud EMR. This achieves data sharing, unified metadata, and a flexible multi-engine architecture.

The cloud engine MaxCompute can serve as an elastic resource pool for an on-premises data platform. Overall data development and governance remain on the on-premises Hadoop platform, and MaxCompute is used as a resource pool under Hadoop scheduling. Jobs are initiated from the on-premises Hadoop cluster, read and write MaxCompute resources on the cloud, and use MaxCompute's computing power, with data transmitted between the cloud and the on-premises environment.

You can use MaxCompute to run federated queries across multiple sources. Through the unified development interface, you can join databases and tables in the MaxCompute data warehouse with external databases and tables mapped to MaxCompute.
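As a loose analogy for joining a local table with an externally mapped one, the sketch below uses Python's built-in `sqlite3` module and SQLite's `ATTACH DATABASE`. It is not MaxCompute syntax. The `ext` schema name, the table names, and the sample rows are made up for illustration, with `main` standing in for the local warehouse and `ext` for an external schema.

```python
import sqlite3


def federated_totals():
    """Join a 'local' table with a table in an attached 'external' database."""
    conn = sqlite3.connect(":memory:")
    try:
        # Attach a second, separate in-memory database under the name `ext`.
        conn.execute("ATTACH DATABASE ':memory:' AS ext")
        conn.execute("CREATE TABLE main.orders (customer_id INTEGER, amount REAL)")
        conn.execute("CREATE TABLE ext.customers (id INTEGER, name TEXT)")
        conn.executemany(
            "INSERT INTO main.orders VALUES (?, ?)",
            [(1, 10.0), (1, 5.0), (2, 7.5)],
        )
        conn.executemany(
            "INSERT INTO ext.customers VALUES (?, ?)",
            [(1, "alice"), (2, "bob")],
        )
        # One query spans both databases through schema-qualified names.
        return conn.execute(
            """
            SELECT c.name, SUM(o.amount)
            FROM main.orders AS o
            JOIN ext.customers AS c ON c.id = o.customer_id
            GROUP BY c.name
            ORDER BY c.name
            """
        ).fetchall()
    finally:
        conn.close()
```

The point of the analogy is that the query addresses both sources by qualified name in one statement, so no data has to be copied into the local database first.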
