By Yukui

As data processing business scenarios become increasingly complex, the demands on big data processing platform infrastructure are also rising. In addition to large-scale data lake storage, these demands include efficient batch processing of massive data and may also involve low-latency near real-time links. This article introduces how the new integrated offline and near real-time architecture based on MaxCompute supports these comprehensive business scenarios. It also presents an integrated data storage and computing solution (Transaction Table 2.0) for near real-time storage and processing of full and incremental data.

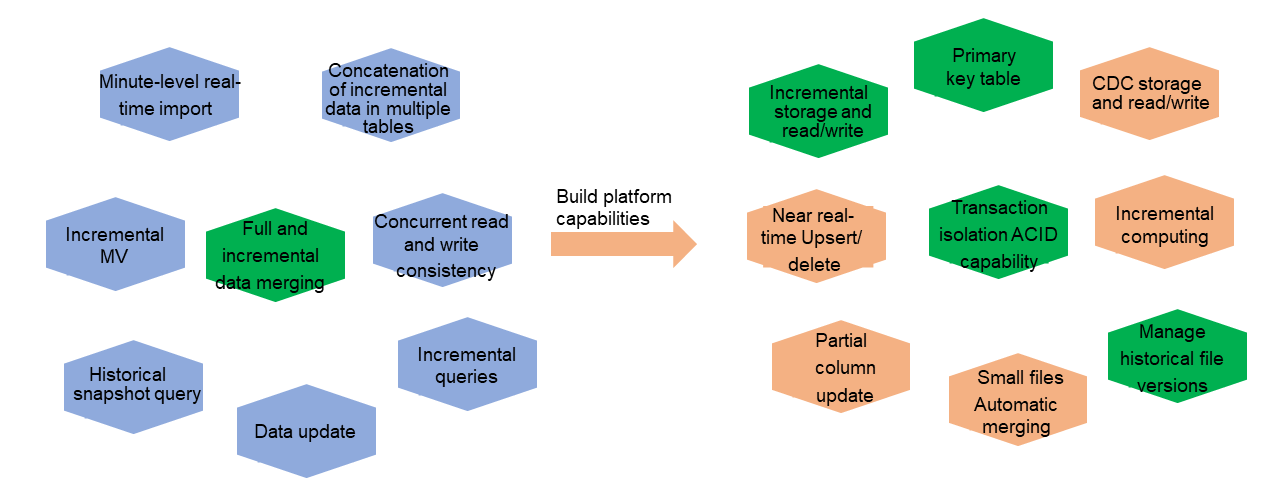

In typical data processing business scenarios, MaxCompute can directly meet the requirements for single-scenario full batch processing of large-scale data with low timeliness demands. However, as MaxCompute supports increasingly diverse businesses in terms of scale and usage scenarios, it not only handles large-scale offline batch processing effectively but also faces growing demands for near real-time and incremental processing. The following figure shows some of these business scenarios.

For example, the near real-time data import link requires the platform engine to support transaction isolation and automatic merging of small files. Similarly, the full and incremental data merge link relies on incremental data storage, read/write, and primary key capabilities. Previously, MaxCompute lacked the capabilities of the new architecture, forcing users to rely on the three solutions shown in the following figure to support these complex business scenarios. However, some pain points persisted, regardless of whether a single engine or federated multiple engines were used.

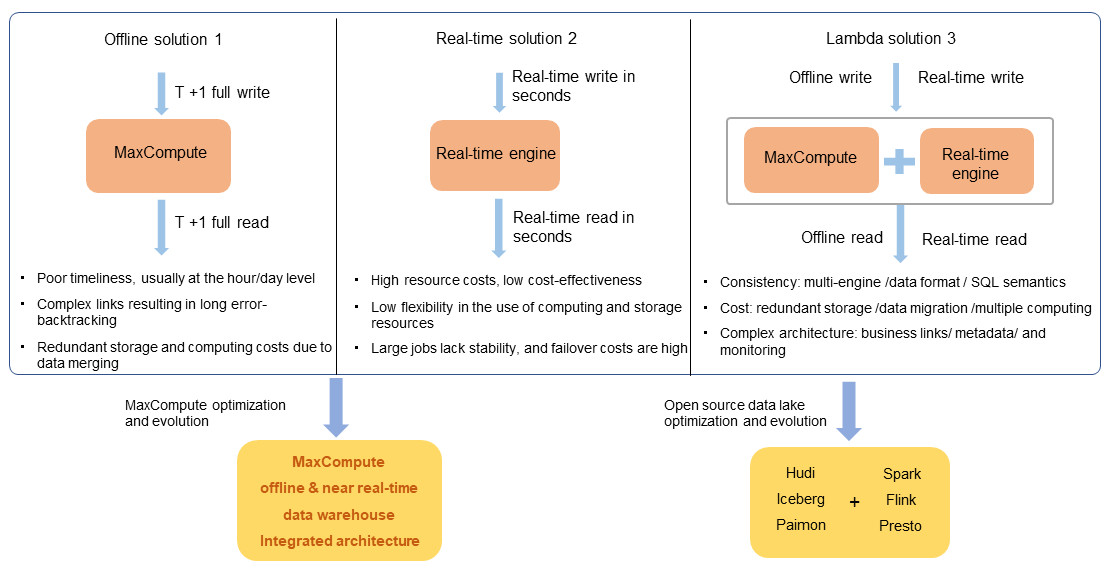

Solution 1: Use only the offline batch processing solution of MaxCompute. Near real-time or incremental processing links usually have to be converted into T+1 batch processing links. This increases the complexity of the business logic and results in poor timeliness. The storage cost may also be high.

Solution 2: Only a single real-time engine is used. The resource cost is high, the cost performance is low, and there are some bottlenecks in the stability and flexibility of large-scale data batch-processing links.

Solution 3: Use the typical Lambda architecture. MaxCompute links are used for full batch processing, and real-time engine links are used for incremental processing with high timeliness requirements. However, this architecture also has some well-known inherent defects, such as data inconsistency caused by multiple sets of processing and storage engines, additional costs caused by redundant storage and computing of multiple copies of data, a complex architecture, and a long development cycle.

These solutions restrict each other in terms of cost, ease of use, low latency, and high throughput, making it difficult to achieve good results at the same time. This also drives the need for MaxCompute to develop a new architecture that can not only meet the needs of these business scenarios but also provide lower costs and a better user experience.

In recent years, the big data open source ecosystem has formed typical solutions to address these issues. The most popular open source data processing engines, such as Spark, Flink, and Trino, have deeply integrated with open source data lakes like Hudi, Delta Lake, Iceberg, and Paimon. This integration has enabled an open and unified computing engine and data storage approach, providing solutions to a series of problems caused by Lambda architecture. Meanwhile, MaxCompute has developed and designed an integrated offline and near real-time data warehouse architecture based on its offline batch computing engine architecture over the past year. While maintaining the cost-effective advantages of batch processing, MaxCompute meets business requirements for minute-level incremental data read, write, and processing. Additionally, MaxCompute provides practical functions like Upsert and Time Travel to expand business scenarios, effectively reducing data computing, storage, and migration costs and enhancing the user experience.

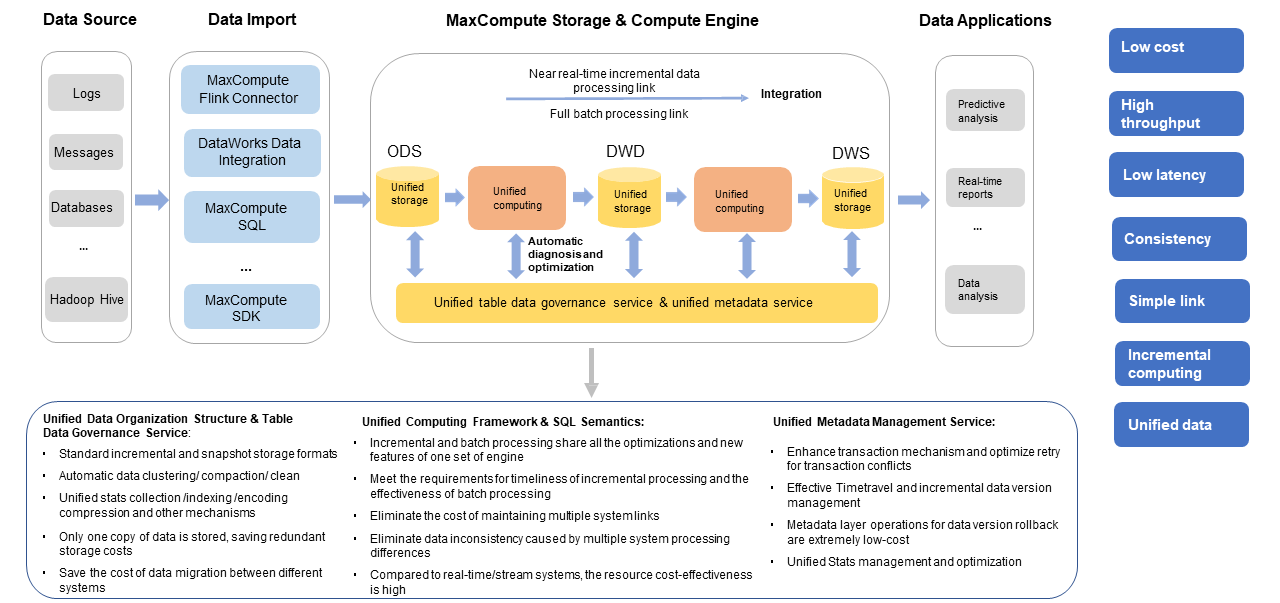

The preceding figure shows the new business architecture of MaxCompute, which efficiently supports comprehensive business scenarios. Data integration tools import a wide range of data sources into MaxCompute tables in near real-time incremental or batch mode. The table data management service of the storage engine automatically optimizes the data storage structure to manage small files. The unified computing engine supports near real-time incremental and large-scale offline batch analysis and processing links. The unified metadata service supports transaction mechanisms and massive file metadata management. The new unified architecture brings significant advantages, effectively solving problems of redundant computing, storage, and low timeliness caused by pure offline systems processing incremental data. It also avoids the high resource consumption cost of real-time systems, eliminates the inconsistency problem of multiple systems in Lambda architecture, and reduces the cost of redundant multiple storage and data migration between systems. In short, the new integrated architecture meets the computing and storage optimization of incremental processing links and minute-level timeliness while ensuring the overall efficiency of batch processing and effectively saving resource usage costs.

Currently, the new architecture supports some core capabilities, including primary key tables, Upsert real-time write, time travel query, incremental query, SQL DML operation, and automatic table data governance optimization. For more information on architecture principles and related operation instructions, please refer to Architecture Principles and User Operations.

This section focuses on how the new architecture supports some typical business links and the resulting optimization effects.

This section describes how to create a table and the key table attributes. It also describes how to set table attribute values based on business scenarios and how the storage engine automatically optimizes table data in the background.

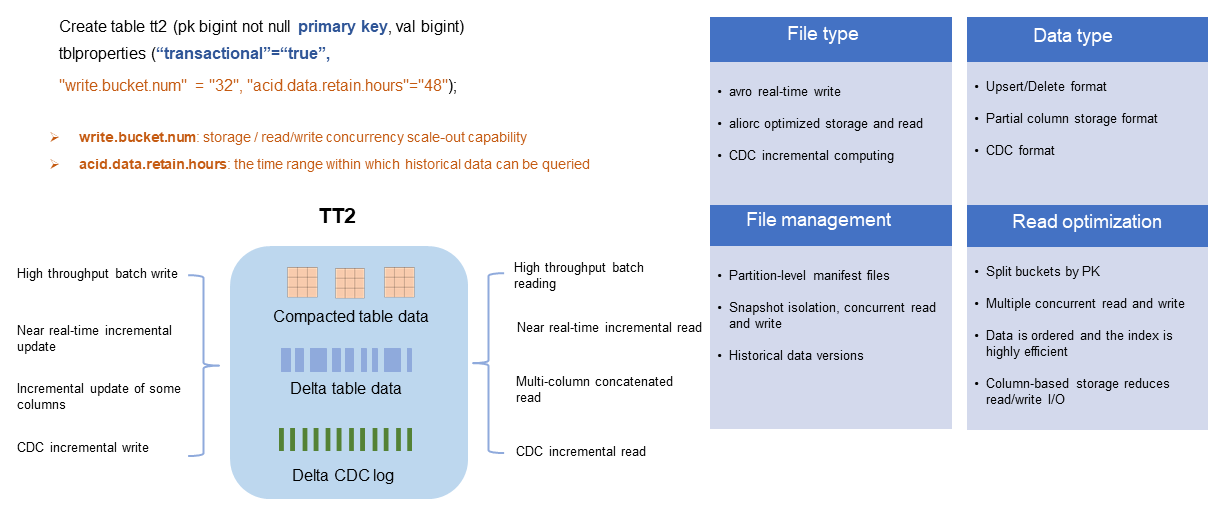

First of all, the new integrated architecture needs a unified table format that can store data in different formats and support data reads and writes in different business scenarios. This format is called Transaction Table 2.0, or TT2 for short. It supports all the features of existing batch processing links as well as new links such as the near real-time incremental link.

For more information about the syntax used to create a table, see the official website. Example:

create table tt2 (pk bigint not null primary key, val string) tblproperties ("transactional"="true");

create table par_tt2 (pk bigint not null primary key, val string)

partitioned by (pt string) tblproperties ("transactional"="true");

To create a TT2 table, you only need to set the primary key (PK) and set the table attribute transactional to true. The primary key ensures the uniqueness of data rows, and the transactional attribute enables the atomicity, consistency, isolation, and durability (ACID) transaction mechanism, which provides snapshot isolation for reads and writes.

For more information about how to configure the attributes, see the official website.

Example:

create table tt2 (pk bigint not null primary key, val string)

tblproperties ("transactional"="true", "write.bucket.num" = "32", "acid.data.retain.hours"="48");

The write.bucket.num attribute is very important. It indicates the number of buckets for each partition or for a non-partition table. The default value is 16. All written records are stored in buckets based on the PK value, and records with the same PK value are stored in the same bucket. For a non-partition table, this value cannot be modified. For a partition table, it can be modified, but the new value takes effect only for new partitions.

The concurrency of data writes and queries can be scaled horizontally based on the number of buckets. Each concurrent task processes at least one bucket of data. However, a larger number of buckets is not always better. Each data file belongs to only one bucket, so the more buckets there are, the more small files are likely to be generated, which may increase storage costs and pressure and reduce read efficiency. Therefore, you must evaluate the overall number of buckets based on the data write throughput, latency, total data size, number of partitions, and read latency.

In addition, storing data in buckets improves the performance of point query scenarios. If the filter condition of a query statement is a specific PK value, bucket pruning and data file pruning can be performed efficiently during the query, greatly reducing the amount of data read.

• For a non-partition table, if the data volume is less than 1 GB, we recommend setting the number of buckets to 4-16. If the total data volume is more than 1 GB, we recommend using 128 MB-256 MB as the data size of each bucket. To increase query concurrency, you can further reduce the data size of each bucket. If the total data volume is more than 1 TB, we recommend using 500 MB-1 GB as the data size of each bucket. However, the maximum number of buckets that can currently be set is 4,096. For larger data volumes, the amount of data in a single bucket therefore has to grow, which affects query efficiency. In the future, the platform will consider whether to raise this limit.

• For partition tables, the set number of buckets is based on each partition, and the number of buckets per partition may be different. For more information about how to set the number of buckets in each partition, see the non-partition table suggestions above. For tables with a massive number of partitions, where each partition contains a relatively small amount of data, typically less than several tens of megabytes, it is recommended to keep the number of buckets per partition as low as possible, ideally one or two, to avoid generating too many small files.
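
The sizing guidance above can be turned into a rough calculator. The following is a minimal sketch, not part of MaxCompute: the helper name `suggest_bucket_count` is hypothetical, binary units are assumed, and the default per-bucket targets (256 MB above 1 GB, 512 MB above 1 TB) are simply values picked from inside the recommended ranges.

```python
import math
from typing import Optional

MB = 1024 ** 2
GB = 1024 ** 3
TB = 1024 ** 4
MAX_BUCKETS = 4096  # current upper limit mentioned in the text


def suggest_bucket_count(total_bytes: int,
                         target_bucket_bytes: Optional[int] = None) -> int:
    """Return a rough bucket count for one table or one partition."""
    if total_bytes < GB:
        # Below 1 GB the text recommends 4-16 buckets; this sketch picks the low end.
        return 4
    if target_bucket_bytes is None:
        # Assumed defaults taken from the recommended ranges:
        # 128-256 MB per bucket above 1 GB, 500 MB-1 GB per bucket above 1 TB.
        target_bucket_bytes = 512 * MB if total_bytes > TB else 256 * MB
    buckets = math.ceil(total_bytes / target_bucket_bytes)
    # Beyond the limit, each bucket simply has to hold more data.
    return min(buckets, MAX_BUCKETS)


if __name__ == "__main__":
    for size in (500 * MB, 64 * GB, 2 * TB, 10 * TB):
        print(size, suggest_bucket_count(size))
```

Passing a smaller `target_bucket_bytes` models the case where higher query concurrency matters more than file count. Write throughput, partition count, and latency still need to be weighed separately.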

The acid.data.retain.hours attribute is also important. It represents the range of historical data that can be read by time travel queries. The default value is 1 day, and the maximum supported value is 7 days.

We recommend that you set a reasonable time period based on your business requirements. The longer the time period is, the more historical data will be saved, the more storage costs will be incurred, and the query efficiency will be affected to some extent. If you do not need time travel to query historical data, we recommend that you set this attribute value to 0, which means that the time travel function is disabled. This can effectively save the storage cost of historical data.

TT2 supports full Schema Evolution operations, including adding and deleting columns. When you query historical data in time travel, the data is read based on the schema of the historical data. In addition, PK columns cannot be modified.

For more information about the DDL syntax, see the official website.

Example:

alter table tt2 add columns (val2 string);

alter table tt2 drop columns val;

One of the typical scenarios of TT2 is minute-level near real-time incremental data import. As a result, the number of small incremental files may grow quickly, especially if the number of buckets is large. This may lead to problems such as high storage access pressure, high costs, low data read/write I/O efficiency, and slow file metadata analysis. If a large amount of data is in the Update/Delete format, the data also contains many redundant intermediate-state records. This further increases storage and computing costs and reduces query efficiency.

To this end, the backend storage engine supports a reasonable and efficient table data service to automatically manage and optimize stored data, reduce storage and computing costs, and improve analysis and processing performance.

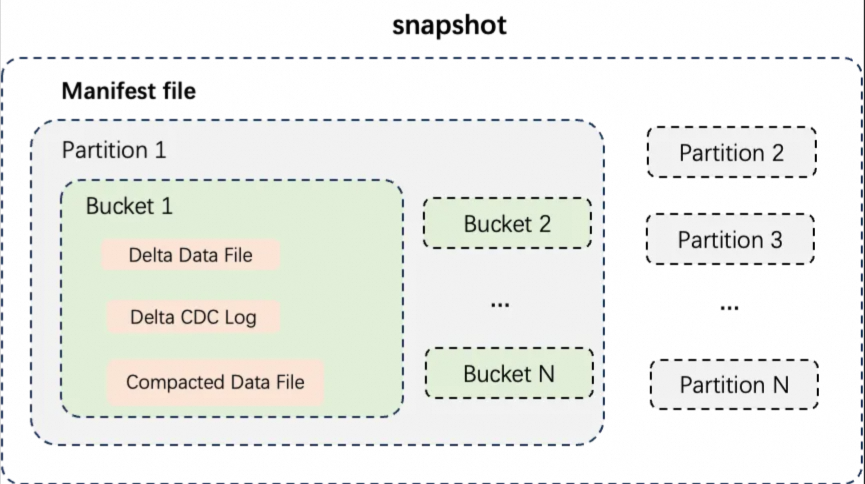

In the preceding figure, the data structure of the partition table is shown. First, the data files are physically isolated by partition, and the data in different partitions are under different directories. The data in each partition is divided into buckets based on the number of buckets, and the data files in each bucket are stored separately. The data files in each bucket are mainly divided into three types:

• Delta Data File: The incremental data files that are generated after the data of each transaction is written or small files are merged. The intermediate historical data of all rows is stored in the Delta data files to meet the requirements for near real-time read and write of incremental data.

• Compacted Data File: The data files generated by running Compact on Delta files. They eliminate the intermediate historical states of data records: only one row is retained for each PK value, and the data is compressed and stored in columnar format to support efficient queries.

• Delta CDC Log: Incremental logs that are stored in the CDC format based on time series (not available).

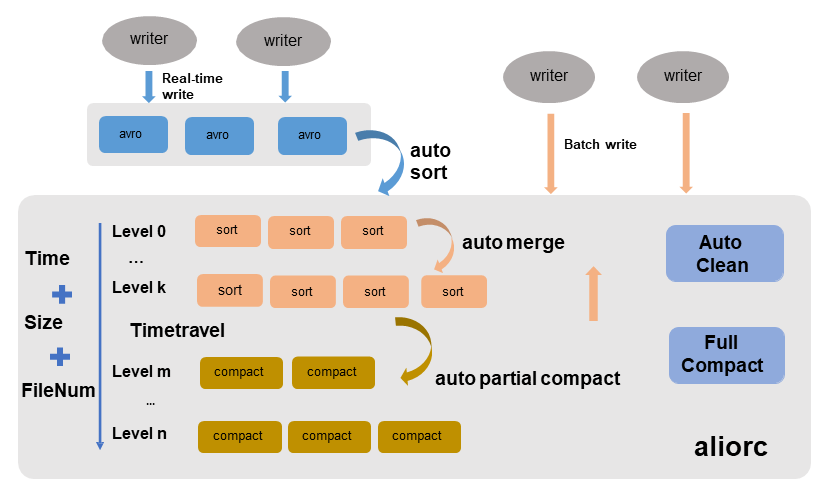

As shown in the preceding figure, the table data service of TT2 is mainly divided into four types: Auto Sort, Auto Merge, Auto Partial Compact, and Auto Clean. You do not need to actively configure them. The storage engine background service automatically collects data from various dimensions and automatically executes the configured policies.

• Auto Sort: automatically converts real-time row-store Avro files into AliORC column-store files, saving storage costs and improving read efficiency.

• Auto Merge: automatically merges small files and solves various problems caused by the growth in the number of small files. The main strategy is to periodically perform a comprehensive analysis based on multiple dimensions such as data file size, number of files, and write timing, and to merge files level by level. However, it does not eliminate the intermediate historical state of any record, so historical data remains available for time travel queries.

• Auto Partial Compact: automatically merges files and eliminates the historical status of records, reduces additional storage costs caused by excessive update/delete records, and improves read efficiency. The main strategy is to periodically perform a comprehensive analysis based on multiple dimensions such as incremental data size, write timing, and time travel time to perform a compact operation. This operation compacts only the historical records that exceed the query time range of time travel.

• Auto Clean: automatically cleans up invalid files to save storage costs. After the Auto Sort, Auto Merge, and Auto Partial Compact operations are executed, new data files are generated. Therefore, the old data files are useless and will be automatically deleted immediately, saving storage costs in a timely manner.
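
The difference between Auto Merge and a compact operation can be illustrated with a small model. This is only a sketch under stated assumptions: the record layout `(commit_version, pk, value)`, the use of `None` to mark a delete, and the function names `auto_merge` and `compact` are all hypothetical, and the real Auto Partial Compact additionally restricts itself to records older than the time travel range.

```python
from typing import Dict, List, Optional, Tuple

# A record is (commit_version, pk, value); value None marks a delete.
Record = Tuple[int, int, Optional[str]]


def auto_merge(files: List[List[Record]]) -> List[Record]:
    """Combine small files into one while keeping every intermediate state."""
    merged = [rec for f in files for rec in f]
    return sorted(merged, key=lambda r: (r[1], r[0]))


def compact(files: List[List[Record]]) -> List[Record]:
    """Keep only the latest state per primary key and drop deleted keys."""
    latest: Dict[int, Record] = {}
    for f in files:
        for rec in f:
            version, pk, _ = rec
            if pk not in latest or version > latest[pk][0]:
                latest[pk] = rec
    live = [rec for rec in latest.values() if rec[2] is not None]
    return sorted(live, key=lambda r: r[1])


if __name__ == "__main__":
    d1 = [(1, 1, "a"), (1, 2, "a")]
    d2 = [(2, 2, "b"), (2, 3, "c")]
    print(auto_merge([d1, d2]))  # four records, history of pk=2 preserved
    print(compact([d1, d2]))     # three records, one per pk
```

The merged output still contains both states of `pk=2`, which is what time travel needs, whereas the compacted output keeps a single row per key.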

If you have high requirements for query performance, you can also manually perform the major compact operation on the full data. All historical states are removed from the data in each bucket, and a new AliORC column-store data file is generated for efficient queries. However, this incurs additional execution and storage costs, so perform the operation only when it is really needed.

For more information about the syntax, see the official website.

Example:

set odps.merge.task.mode=service;

alter table tt2 compact major;

This section describes some typical business practices in write scenarios.

The offline architecture of MaxCompute generally imports incremental data to a new table or a new partition at the hourly or daily level and then configures the corresponding offline ETL processing link to perform the Join Merge operation on the incremental data and the existing table data to generate the latest full data. This offline link has a long latency and consumes certain computing and storage costs.

The Upsert real-time import link of the new architecture can generally keep the latency from data write to query visibility within 5-10 minutes, meeting near real-time business requirements at the minute level. It does not require complex ETL links to merge full and incremental data, which saves the corresponding computing and storage costs.

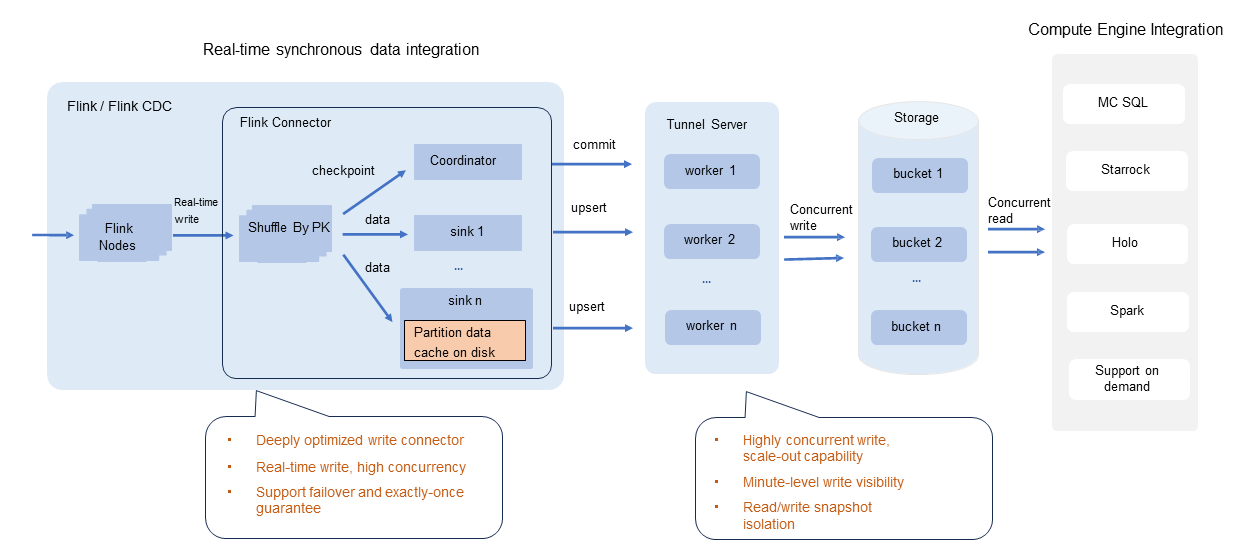

In actual business data processing scenarios, a variety of data sources are involved, such as databases, log systems, and other MQ systems. To make it easier to write data into TT2, MaxCompute has developed an open source Flink Connector that is designed and developed specifically for scenarios such as high concurrency, fault tolerance, and transaction submission. This tool meets the requirements of low latency and high accuracy and integrates well with the Flink ecosystem. For details, please refer to the Product Description on the official website.

The preceding figure shows the overall write process. The following key points can be summarized:

• Basically, most engines or tools that can integrate the Flink ecosystem can write data into TT2 tables in real time through Flink tasks and the MaxCompute Flink connector.

• Write concurrency can be scaled out to achieve low latency and meet high throughput requirements. The write traffic throughput is related to parameters such as the parallelism of the Flink sink and the number of TT2 buckets. You can configure the write traffic throughput based on your business scenario. Note that for scenarios where the number of TT2 buckets is configured as an integral multiple of the Flink sink concurrency, the system has been efficiently optimized to ensure optimal write performance.

• The system supports minute-level data visibility and provides read-write snapshot isolation.

• The checkpoint mechanism of Flink is used to handle fault tolerance scenarios and ensure exactly-once semantics.

• You can write data to thousands of partitions at the same time. This meets the requirements for concurrently writing data to many partitions.

• The maximum throughput of a single bucket can be evaluated based on the processing capacity of 1 MB/s. The throughput may be affected by different environments and configurations. If you are sensitive to write latency and require stable throughput, you can apply for Exclusive Data Transmission Service resources. However, this feature incurs an additional charge. If shared public data transmission service resource groups are used by default, stable write throughput may not be guaranteed and the amount of available resources is also limited when there is severe resource competition.
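
As a back-of-the-envelope aid for the points above, the following sketch estimates how many buckets a target write rate needs and rounds that number up to an integral multiple of the Flink sink parallelism. The helper names are hypothetical, and the 1 MB/s per-bucket figure is only the rough evaluation value quoted above, so actual throughput may differ by environment and configuration.

```python
import math

PER_BUCKET_MB_PER_S = 1.0  # rough per-bucket evaluation figure from the text


def min_buckets_for_throughput(target_mb_per_s: float) -> int:
    """Smallest bucket count whose combined rough capacity covers the target."""
    return max(1, math.ceil(target_mb_per_s / PER_BUCKET_MB_PER_S))


def align_to_sink_parallelism(buckets: int, sink_parallelism: int) -> int:
    """Round the bucket count up to an integral multiple of the sink parallelism."""
    return math.ceil(buckets / sink_parallelism) * sink_parallelism


if __name__ == "__main__":
    needed = min_buckets_for_throughput(20)       # about 20 MB/s of upserts
    print(align_to_sink_parallelism(needed, 8))   # bucket count for 8 sink subtasks
```

The result is only a starting point: the small-file and storage considerations described for write.bucket.num still apply.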

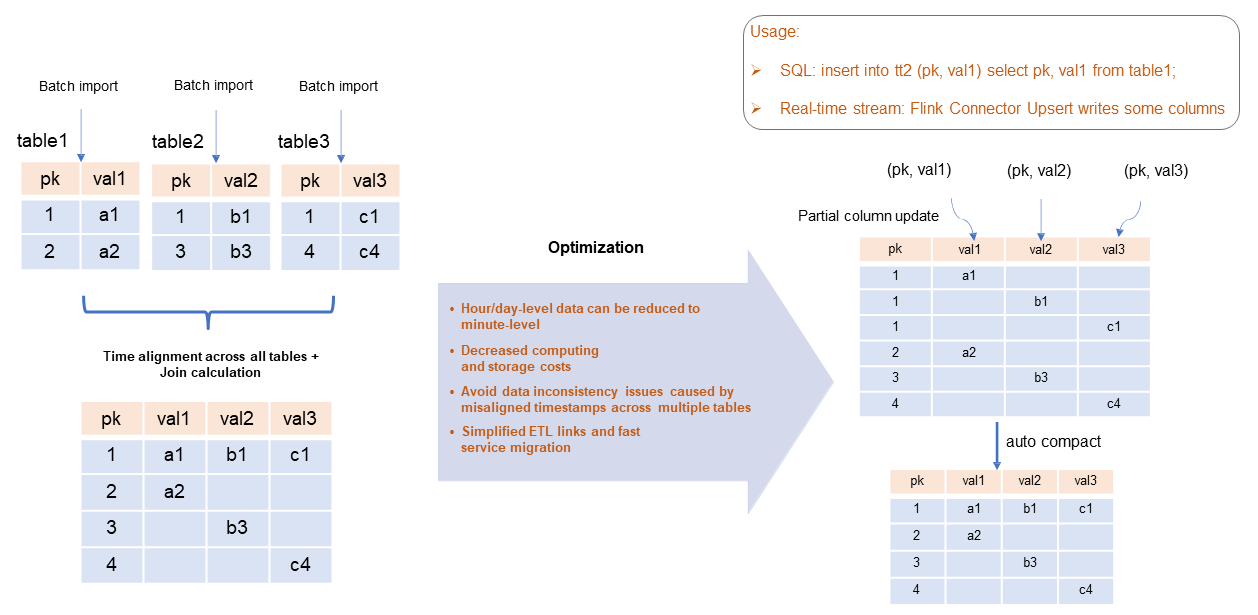

This link can be used to optimize the scenario where data columns of multiple incremental tables are concatenated into one large and wide table, which is similar to the business scenario of multi-stream join.

The left side of the preceding figure shows how the offline ETL link of MaxCompute processes such scenarios. Multiple incremental tables are aligned at a fixed time, usually at the hour/day level, and then a join task is triggered to concatenate the data columns of all tables into a large wide table. If there is existing data, an ETL link similar to upsert is also required. Therefore, the overall ETL link has a long latency and a complex process, and it consumes computing and storage resources. It is also prone to situations where the data of the different tables cannot be aligned.

The right side shows how TT2 tables support partial column updates. You only need to incrementally write the data columns of each table to the large wide TT2 table in real time. Rows with the same PK value are automatically concatenated into one row by the background Compact service of the TT2 table or at query time. This link basically solves the problems encountered by offline links. The latency is reduced from the hour/day level to the minute level. Moreover, the link is simple, requires almost zero ETL, and can significantly reduce computing and storage costs.

Currently, you can use the following two methods to update some columns. The feature is still in canary release and has not been released to the official website (it is expected to be released to the public cloud within two months).

• Use SQL Insert to perform incremental write of some columns:

create table tt2 (pk bigint not null primary key, val1 string, val2 string, val3 string) tblproperties ("transactional"="true");

insert into tt2 (pk, val1) select pk, val1 from table1;

insert into tt2 (pk, val2) select pk, val2 from table2;

insert into tt2 (pk, val3) select pk, val3 from table3;

• Use the Flink connector to write data to some columns in real time.
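
Conceptually, partial column updates behave like folding several narrow writes into one wide row per PK. The following is a minimal sketch of that idea only, not of the engine's implementation. The function name `merge_partial_rows` and the dictionary-based row layout are hypothetical.

```python
from typing import Dict, List, Optional

Row = Dict[str, Optional[str]]


def merge_partial_rows(columns: List[str], writes: List[dict]) -> Dict[int, Row]:
    """Fold partial-column writes, in commit order, into one row per pk."""
    table: Dict[int, Row] = {}
    for write in writes:
        # A pk seen for the first time starts with all value columns unset.
        row = table.setdefault(write["pk"], {col: None for col in columns})
        for col, value in write.items():
            if col != "pk":
                row[col] = value  # only the columns present in this write change
    return table


if __name__ == "__main__":
    writes = [
        {"pk": 1, "val1": "a"},   # from table1
        {"pk": 1, "val2": "b"},   # from table2
        {"pk": 1, "val3": "c"},   # from table3
    ]
    print(merge_partial_rows(["val1", "val2", "val3"], writes))
```

Columns that no source has written yet stay empty, which mirrors a wide table that fills in gradually as each incremental stream arrives.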

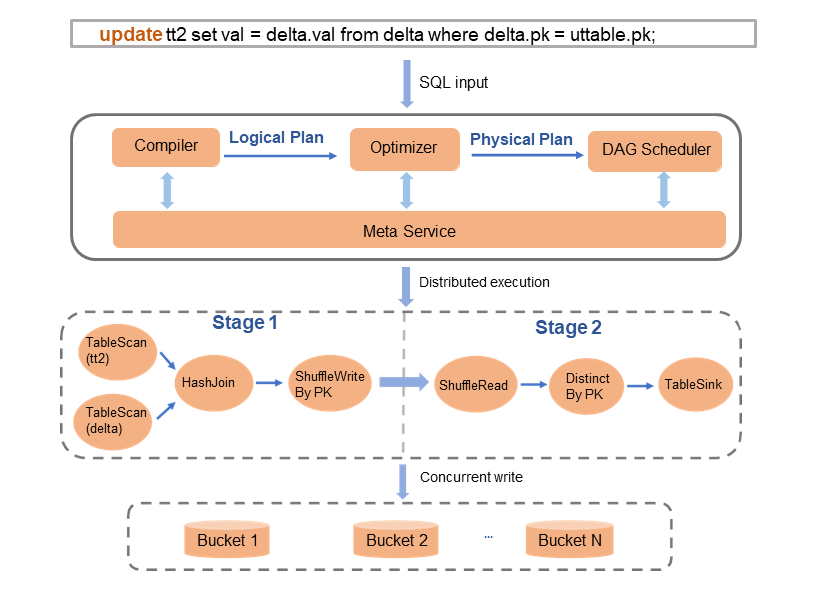

To facilitate operations on TT2 tables, the MaxCompute computing engine supports the full set of SQL DQL syntax for data queries and DML syntax for data operations. This ensures a high availability of offline links and a good user experience. The kernel modules of the SQL engine, including Compiler, Optimizer, and Runtime, are specially adapted and developed to support related functions and optimizations, including parsing of specific syntax, plan optimization of specific operators, deduplication logic for PK columns, and runtime upsert concurrent write.

After data processing is complete, Meta Service detects transaction conflicts and updates data object metadata atomically to ensure read/write isolation and transactional consistency.

For more information about the SQL DML syntax, see the Official documentation. The INSERT, UPDATE, DELETE, and MERGE INTO statements and examples are provided.

For the Upsert batch write capability, the records of a TT2 table are automatically merged based on the PK value by the background service or at query time. Therefore, in the Insert + Update scenario, you do not need to use the complex Update/Merge Into syntax. You can simply use Insert Into to insert new data. This is simple to use and can save some read I/O and computing resources.

This section describes some business practices in typical query scenarios.

Based on TT2, the computing engine can efficiently support the typical business scenarios of Time travel query, that is, query data of historical versions. It can be used to trace the historical status of business data or to restore the historical status data for data correction when data errors occur.

For more information about the syntax, see the official website.

Example:

-- Query historical data as of a specified timestamp.

select * from tt2 timestamp as of '2024-04-01 01:00:00';

-- Query the data as it was 5 minutes (300 seconds) ago.

select * from tt2 timestamp as of current_timestamp() - 300;

-- Query the historical data that has been written up to the second-to-last commit.

select * from tt2 timestamp as of get_latest_timestamp('tt2', 2);

The time range of historical data that can be queried is configured through the table attribute acid.data.retain.hours. The configuration policy was introduced earlier. For more information, see the official website.

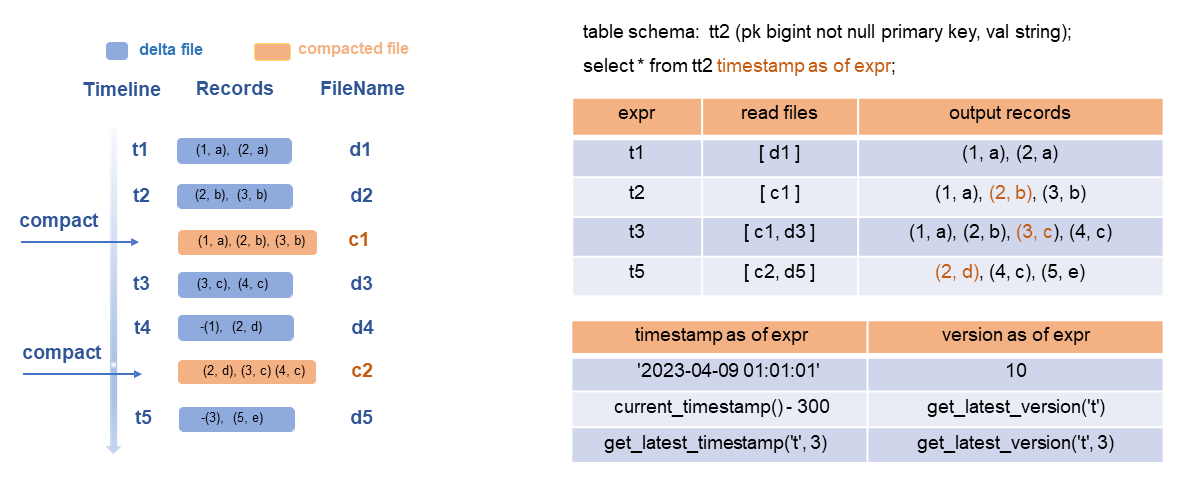

After receiving a time travel query from the user, the SQL engine first resolves the historical data version to be queried from the Meta service. It then selects the Compacted files and Delta files to be read, merges them, and outputs the result. The use of Compacted files can significantly enhance read efficiency.

Further description of the query details according to the example in the preceding figure:

• The TT2 Schema in the figure contains a pk column and a val column. The figure on the left shows the data change process. The t1-t5 represents the time version of five transactions. Five data write operations were performed respectively to generate five Delta files. The Compact operations were performed at t2 and t4 respectively to generate two Compacted Files: c1 and c2. It can be seen that c1 has eliminated the intermediate state history (2,a) and only saves the latest state records (2,b).

• If you query the historical data at t1, you only need to read the Delta file (d1) for output. If you query the historical data at t2, you only need to read the Compacted file (c1) and 3 records are output. If you query the historical table at t3, the merged output of the Compacted file (c1) and Delta file (d3) is included. You can extend that idea to queries at other times. The Compacted file can be used to accelerate queries, but heavy Compact operations need to be triggered. You need to trigger major compact operations based on your business scenarios, or the backend system can automatically trigger compact operations.

• A time travel query can specify the transaction version in two ways: as a time version or as an ID version. In addition to constants and common functions, the SQL syntax provides two dedicated functions: get_latest_timestamp and get_latest_version. The second parameter indicates which of the most recent commits to use (for example, 2 means the second-to-last commit), which makes it convenient to obtain the internal data version of MaxCompute for accurate queries.
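
The file selection described in the example above can be sketched as follows. This is an illustrative model only: the times t1-t5 are represented by the integers 1-5, the file lists mirror the figure (Delta files d1-d5, Compacted files c1 at t2 and c2 at t4), and the function name `files_for_time_travel` is hypothetical.

```python
from typing import List, Optional, Tuple

# (file name, commit time); integers 1..5 stand in for t1..t5.
DELTA_FILES: List[Tuple[str, int]] = [
    ("d1", 1), ("d2", 2), ("d3", 3), ("d4", 4), ("d5", 5),
]
COMPACTED_FILES: List[Tuple[str, int]] = [("c1", 2), ("c2", 4)]


def files_for_time_travel(as_of: int) -> List[str]:
    """Pick the files a query as of version `as_of` would need to read."""
    # Use the newest Compacted file that is not newer than the requested version.
    base: Optional[Tuple[str, int]] = None
    for name, commit_time in COMPACTED_FILES:
        if commit_time <= as_of and (base is None or commit_time > base[1]):
            base = (name, commit_time)
    base_time = base[1] if base else 0
    # Add only the Delta files written after that Compacted file.
    deltas = [name for name, t in DELTA_FILES if base_time < t <= as_of]
    return ([base[0]] if base else []) + deltas


if __name__ == "__main__":
    for t in range(1, 6):
        print(f"t{t}:", files_for_time_travel(t))
```

Starting from the newest usable Compacted file is what keeps the number of Delta files to merge small, which is why compaction speeds up these queries.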

TT2 tables support incremental write and storage. One of the most important ideas is to support incremental queries and the incremental computing link. To this end, a new SQL incremental query syntax is specially designed and developed to support the near real-time incremental processing link. Users can flexibly build incremental data warehouse business links by using incremental query statements. Recently, we have been planning to develop and support incremental materialized views to further lower the use threshold, improve user experience, and reduce user costs.

Two types of incremental query syntax are supported:

• You can specify a timestamp or version to query incremental data. For more information, see the official website.

Example:

-- Query the incremental data written in the ten minutes between 2024-04-01 01:00:00 and 01:10:00.

select * from tt2 timestamp between '2024-04-01 01:00:00' and '2024-04-01 01:10:00';

-- Query the incremental data written between 10 minutes ago and 5 minutes ago.

select * from tt2 timestamp between current_timestamp() - 601 and current_timestamp() - 300;

-- Query the incremental data of the last commit.

select * from tt2 timestamp between get_latest_timestamp('tt2', 2) and get_latest_timestamp('tt2');

• The engine automatically manages the data version to query incremental data. You do not need to manually specify the query version, which is ideal for periodic incremental computing links (this feature is in canary release; availability is subject to the official website).

Example:

-- Bind a stream object to the TT2 table.

create stream tt2_stream on table tt2;

insert into tt2 values (1, 'a'), (2, 'b');

-- Automatically query the two newly added records (1, 'a'), (2, 'b'), and update the next query version to the latest data version.

insert overwrite dest select * from tt2_stream;

insert into tt2 values (3, 'c'), (4, 'd');

-- Automatically query the two new records (3, 'c'), (4, 'd').

insert overwrite dest select * from tt2_stream;
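
The behavior of a stream object in this example can be modeled as a cursor over an append-only log. This is a conceptual sketch only: the class name `TableStream` and the list-based table are hypothetical, and how MaxCompute actually stores and advances the stream version is not described in this article.

```python
from typing import List, Tuple

Row = Tuple[int, str]


class TableStream:
    """Remembers how far into an append-only commit log a consumer has read."""

    def __init__(self, table: List[Row]) -> None:
        self._table = table
        self._next_offset = 0

    def read_new(self) -> List[Row]:
        """Return rows added since the previous read and advance the cursor."""
        new_rows = self._table[self._next_offset:]
        self._next_offset = len(self._table)
        return new_rows


if __name__ == "__main__":
    tt2: List[Row] = []
    stream = TableStream(tt2)
    tt2.extend([(1, "a"), (2, "b")])
    print(stream.read_new())
    tt2.extend([(3, "c"), (4, "d")])
    print(stream.read_new())
```

Because the cursor advances on every read, a periodic job can simply rerun the same statement and receive only the data written since its previous run.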

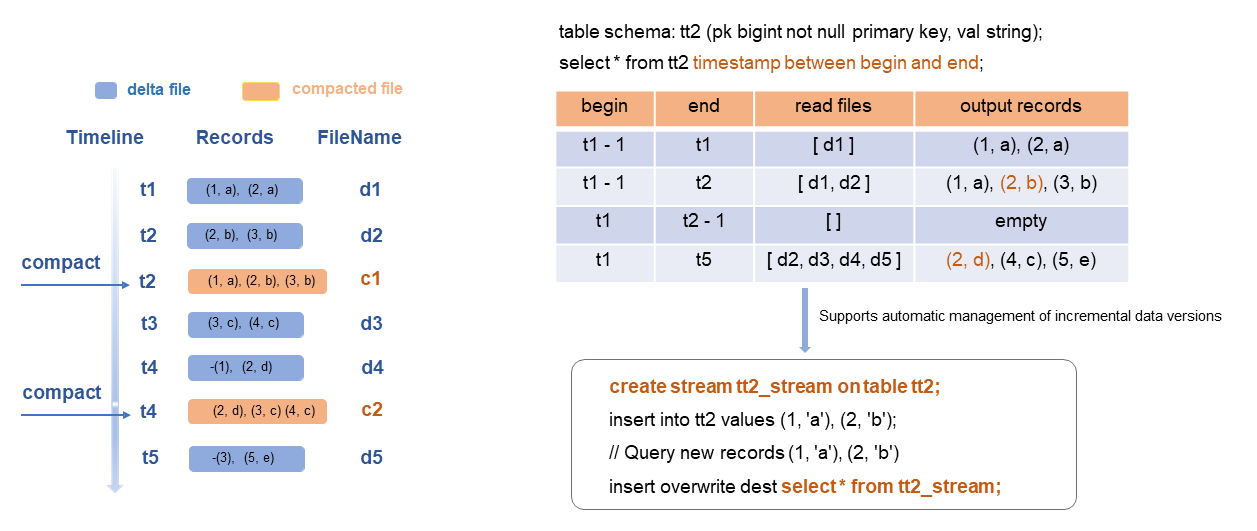

Upon receiving an incremental query from the user, the SQL engine first resolves the historical incremental data versions from the Meta service. It then selects the list of Delta files to be read, merges them, and outputs the result.

Further description of the query details according to the example in the preceding figure:

• The table tt2 Schema in the figure contains a pk column and a val column. The figure on the left shows the data change process. The t1-t5 represents the time versions of five transactions. Five data write operations are performed respectively to generate five Delta files. The Compact operations are performed at t2 and t4 respectively to generate two Compacted files: c1 and c2.

• In a specific query example, if the begin is t1-1 and the end is t1, only the Delta file (d1) corresponding to t1 is read for output. If the end is t2, two Delta files (d1, d2) are read. If the begin is t1 and the end is t2-1, that is, the query time range is (t1, t2), an empty result is returned because no incremental data was written in this time period.

• The data (c1, c2) generated by the Compact/Merge service will not be repeatedly output as new data.
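
The selection rule in this example amounts to reading the Delta files committed in a left-open, right-closed interval and never treating Compacted files as new data. A minimal sketch, assuming integer commit times (t1 = 10, t2 = 20, and so on) and a hypothetical function name `files_for_incremental_query`:

```python
from typing import List, Tuple

# (file name, commit time); 10, 20, ... stand in for t1, t2, ...
DELTA_FILES: List[Tuple[str, int]] = [
    ("d1", 10), ("d2", 20), ("d3", 30), ("d4", 40), ("d5", 50),
]
# Compacted files exist at t2 and t4 but are deliberately ignored here,
# because they only rewrite data that the Delta files already delivered.
COMPACTED_FILES: List[Tuple[str, int]] = [("c1", 20), ("c2", 40)]


def files_for_incremental_query(begin: int, end: int) -> List[str]:
    """Return the Delta files committed in the interval (begin, end]."""
    return [name for name, t in DELTA_FILES if begin < t <= end]


if __name__ == "__main__":
    t1, t2 = 10, 20
    print(files_for_incremental_query(t1 - 1, t1))
    print(files_for_incremental_query(t1 - 1, t2))
    print(files_for_incremental_query(t1, t2 - 1))
```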

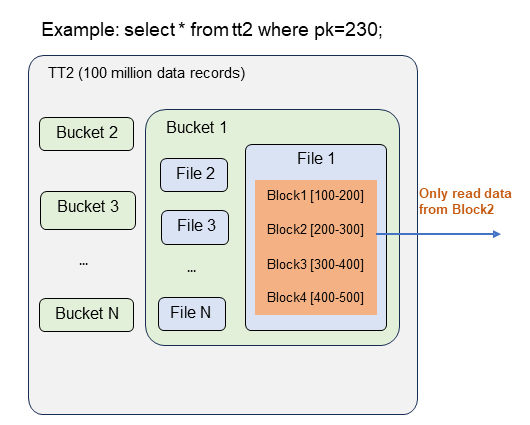

As mentioned above, the data distribution and indexes of the TT2 table are basically built based on the PK column values. Therefore, if you perform a point query on the TT2 table and specify the PK value for filtering, the amount of data to be read and the reading time will be greatly reduced, and the resource consumption may also be reduced by hundreds of times. For example, the total number of data records in the TT2 table is 100 million, and the number of data records actually read from the data file after filtering may be only 10,000.

The main DataSkipping optimizations include:

• Bucket pruning is performed first. Only one bucket that contains the specified PK value is read.

• Data files are pruned within the bucket. Only the file that contains the specified key value is read.

• Block pruning is performed in the file, and filtering is performed based on the range of primary key values of blocks. Only the block that contains the specified key value is read.

Point queries use the regular SQL query syntax. A simple example:

select * from tt2 where pk = 1;
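
The three pruning levels can be sketched as nested filters. This is an illustrative model, not the engine's code: the classes `Block` and `DataFile`, the function `point_lookup`, and the use of `pk % num_buckets` as the bucketing function are all assumptions, since the article does not specify the actual hash function or file layout.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Block:
    pks: List[int]  # primary keys stored in this block, sorted ascending

    @property
    def pk_range(self) -> Tuple[int, int]:
        return self.pks[0], self.pks[-1]


@dataclass
class DataFile:
    bucket: int
    blocks: List[Block]  # blocks are ordered by primary key

    @property
    def pk_range(self) -> Tuple[int, int]:
        return self.blocks[0].pk_range[0], self.blocks[-1].pk_range[1]


def bucket_of(pk: int, num_buckets: int) -> int:
    # Stand-in for the real bucketing function, which is not specified here.
    return pk % num_buckets


def point_lookup(files: List[DataFile], pk: int, num_buckets: int) -> Tuple[bool, int]:
    """Return (found, blocks_scanned) for a filter on one primary key value."""
    target_bucket = bucket_of(pk, num_buckets)
    found = False
    blocks_scanned = 0
    for data_file in files:
        if data_file.bucket != target_bucket:   # 1. bucket pruning
            continue
        low, high = data_file.pk_range
        if not low <= pk <= high:               # 2. data file pruning
            continue
        for block in data_file.blocks:
            low, high = block.pk_range
            if not low <= pk <= high:           # 3. block pruning
                continue
            blocks_scanned += 1
            found = found or pk in block.pks
    return found, blocks_scanned
```

With four buckets and several files per bucket, a lookup typically touches a single block, which is the effect behind the large reduction in data read described above.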

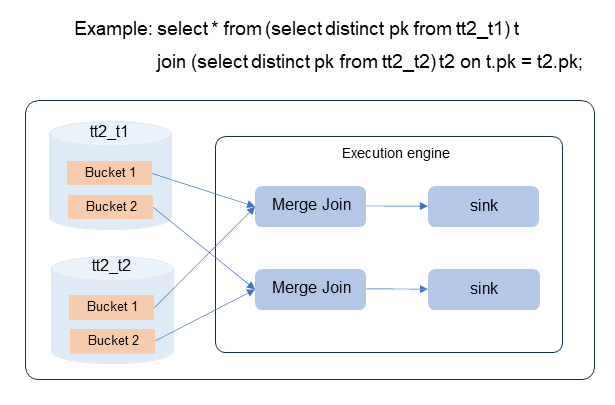

TT2 table data is distributed in buckets based on the PK value, and the data queried from buckets has a Unique attribute and sort order. Therefore, SQL Optimizer can use these attributes to perform a large number of optimizations.

For example, in the SQL statement shown in the following figure, the number of buckets for tt2_t1 and tt2_t2 is the same. The SQL optimizer can optimize the following operations:

• The PK column's inherent Unique attribute in a Distinct operation allows for the elimination of the deduplication operator.

• The Join on key and PK columns are the same, so Bucket Local Join can be used directly to eliminate the resource-consuming Shuffle process.

• The data read from each bucket is ordered. Therefore, you can directly use the MergeJoin algorithm to eliminate the preceding Sort operator.

These eliminated operators are extremely resource-consuming, so these optimizations can more than double overall performance.
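
To see why these operators become unnecessary, consider a per-bucket merge join over keys that are already unique and sorted. The sketch below is illustrative only: the function names are hypothetical, and it assumes both tables use the same number of buckets and the same bucketing function, as in the example above.

```python
from typing import List


def merge_join_sorted_unique(left: List[int], right: List[int]) -> List[int]:
    """Join two ascending, duplicate-free key lists without sorting or deduplicating."""
    i = j = 0
    matches: List[int] = []
    while i < len(left) and j < len(right):
        if left[i] == right[j]:
            matches.append(left[i])
            i += 1
            j += 1
        elif left[i] < right[j]:
            i += 1
        else:
            j += 1
    return matches


def bucket_local_join(left_buckets: List[List[int]],
                      right_buckets: List[List[int]]) -> List[List[int]]:
    """Join bucket i of one table only with bucket i of the other (no shuffle)."""
    return [merge_join_sorted_unique(l, r) for l, r in zip(left_buckets, right_buckets)]


if __name__ == "__main__":
    t1_buckets = [[0, 4, 8], [1, 5, 9]]
    t2_buckets = [[4, 8, 12], [3, 5]]
    print(bucket_local_join(t1_buckets, t2_buckets))
```

Each bucket pair is processed independently in a single linear pass, so no data has to move between buckets and no separate Distinct or Sort step is needed.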

These optimizations apply to regular SQL query syntax. A simple example:

select * from (select distinct pk from tt2_t1) t

join (select distinct pk from tt2_t2) t2 on t.pk = t2.pk;

Currently, databases and big data processing engines each have their own data processing scenarios. In some complex business scenarios, OLTP, OLAP, and offline analysis engines all need to analyze and process the same data, so data has to flow between engines. Synchronizing the change records of a single table or an entire database to MaxCompute in real time for analysis and processing is a typical business link.

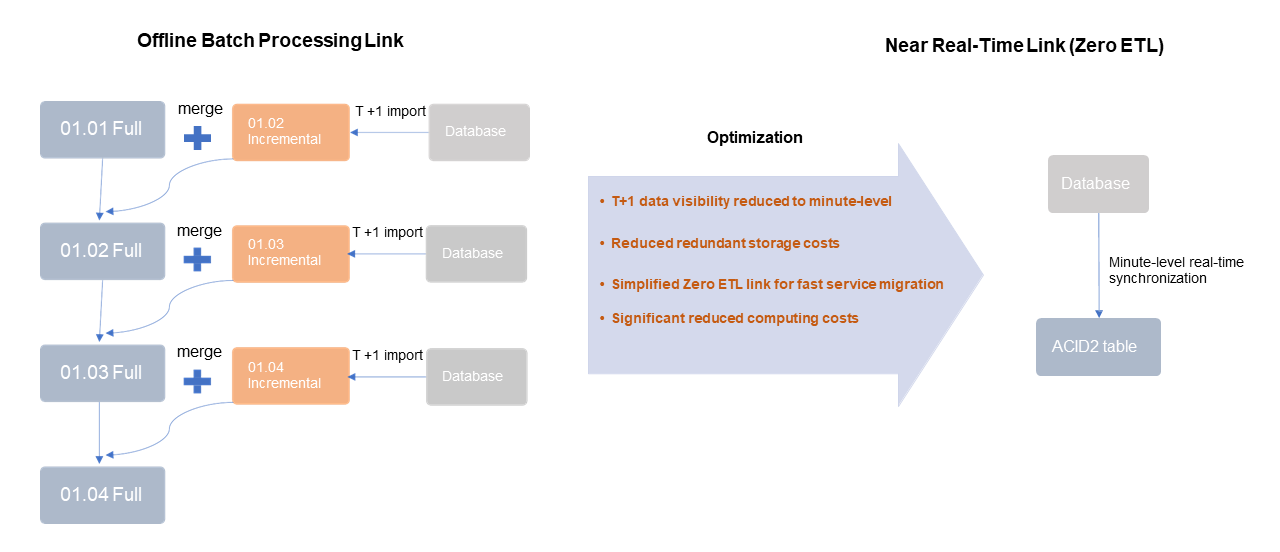

As shown in the preceding figure, the process on the left is a typical ETL processing link that was previously supported by MaxCompute. The change records of the database are read on an hourly or daily basis and written to a temporary incremental table in MaxCompute. Then, the temporary table and the existing full table are joined and merged to generate new full data. This link is complex and has a long latency. It also incurs additional computing and storage costs.

The process on the right uses the new architecture to support this scenario. You can directly read the Upsert change records of the database in real time at the minute level and write them to the TT2 table. The link is very simple, and the data visibility latency is reduced to the minute level. Only one TT2 table is required, and the computing and storage costs are minimized.

Currently, MaxCompute integrates two ways to support this link:

• You can configure a real-time full and incremental synchronization task for an entire database or a single table in DataWorks Data Integration.

The new MaxCompute integrated offline and near real-time data warehouse architecture aims to cover some common features of near real-time data lakes, such as Hudi and Iceberg. Additionally, this self-developed architecture has many unique highlights in terms of low cost, functionality, performance, stability, and integration:

• MaxCompute supports near real-time and incremental links at a low cost, offering high cost-effectiveness.

• With a unified design for storage, metadata, and compute engines, MaxCompute achieves in-depth and efficient integration of the engines, resulting in low storage costs, efficient data file management, and high query efficiency. Furthermore, many optimization rules for MaxCompute batch queries can be reused by time travel and incremental queries.

• MaxCompute supports a full set of common SQL syntax, making it very user-friendly.

• The platform provides a highly customized and optimized data import tool that supports many complex business scenarios with high performance.

• Seamlessly integrating with MaxCompute's existing business scenarios reduces migration, storage, and computation costs.

• Intelligent and automated management and optimization of table data ensure better read and write stability and performance, while automatically optimizing storage efficiency and costs.

• Because MaxCompute is a fully managed platform, users get an out-of-the-box experience without additional access costs, simply by creating a TT2 table.

• Because the architecture is self-developed, MaxCompute allows users to manage data development according to their business requirements.
