By Kong Liang (Lianyi), Product Expert of Alibaba Cloud Intelligence

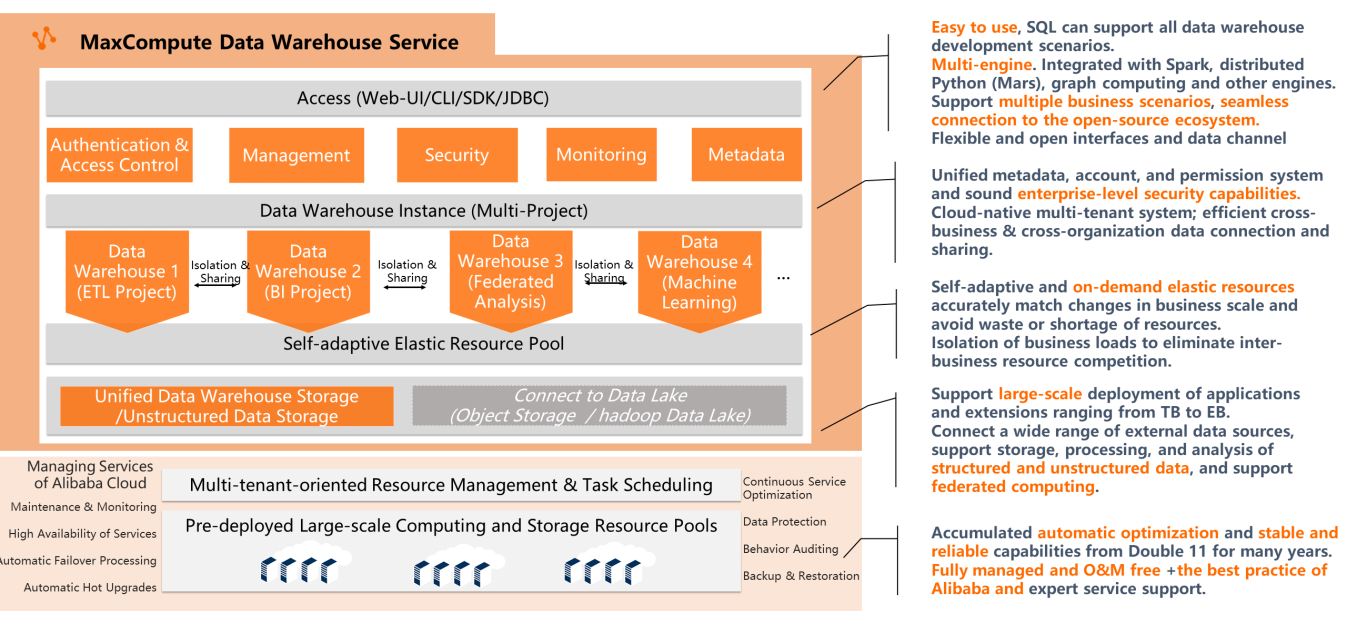

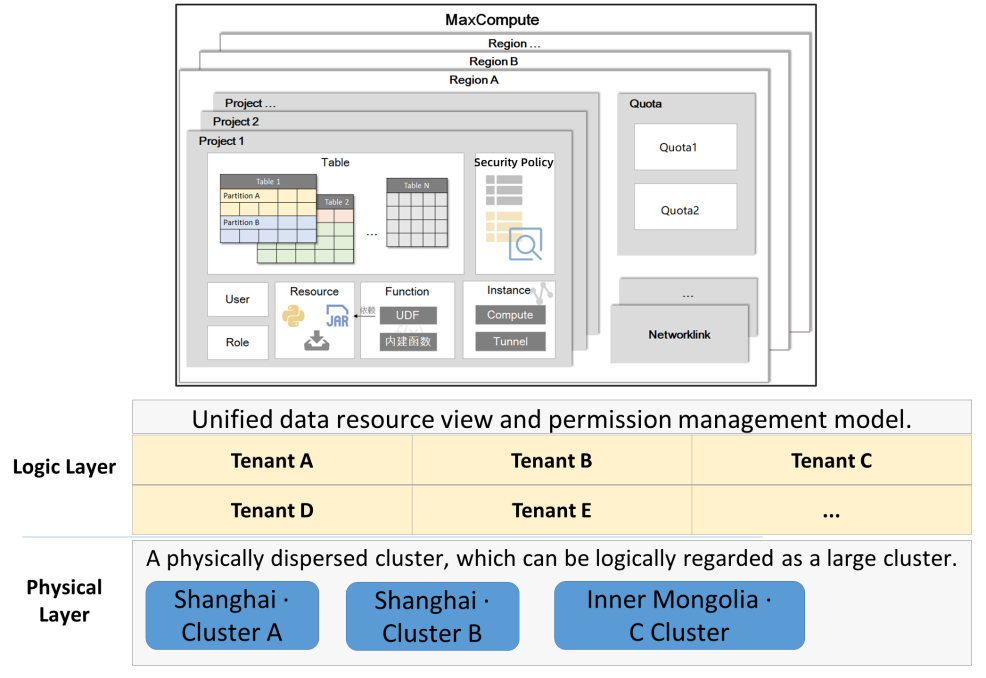

MaxCompute is a multi-functional, low-cost, high-performance, highly reliable, and easy-to-use data warehouse and a big data platform that supports all data lake capabilities. It supports ultra-large-scale, serverless, and comprehensive multi-tenant capabilities. It has built-in enterprise-level security capabilities and management functions to support data protection, secure sharing, and open data/ecosystem. It can meet the needs of multiple business scenarios (such as data warehouse/BI, unstructured data processing and analysis of data lakes, and machine learning).

Alibaba Cloud MaxCompute provides fully managed, out-of-the-box services. Users only need to focus on their business and resources, so the PaaS platform behaves like SaaS. MaxCompute is a cloud-native multi-tenant platform that gives users a low Total Cost of Ownership (TCO) at low resource cost. Data can be easily shared between tenants without interfacing between multiple Hadoop instances. In terms of access and use, it is easy to use, flexible, and supports multiple engines. Many MaxCompute users say that the reason they stay is not that their business cannot be migrated, but that no other option is better or more economical. In terms of data warehouse management, MaxCompute provides unified metadata, a unified account and permission system, and complete enterprise-level security capabilities. In terms of resource usage, self-adaptive, on-demand elastic resources avoid both waste and shortage, which saves costs while meeting demand. Isolation of business loads eliminates resource competition between businesses. In terms of scale and data storage, it supports large-scale deployment of applications and expansion from the TB level to the EB level. It connects to a wide range of external data sources and supports storage and processing of structured and unstructured data as well as federated computing.

MaxCompute inherits the automatic optimization, stability, and reliability that Alibaba has built up over many years of Double 11. No commercial Hadoop product offers this. Its first-mover advantage and Alibaba's continuous business practice have made the product mature and stable.

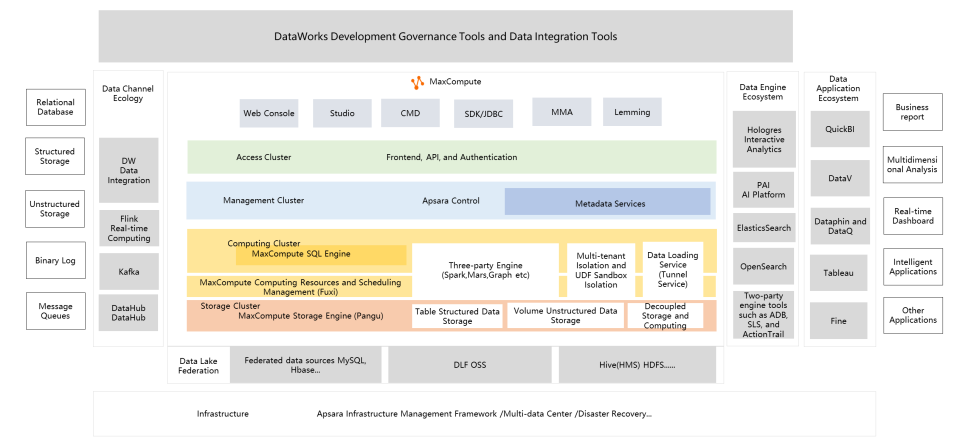

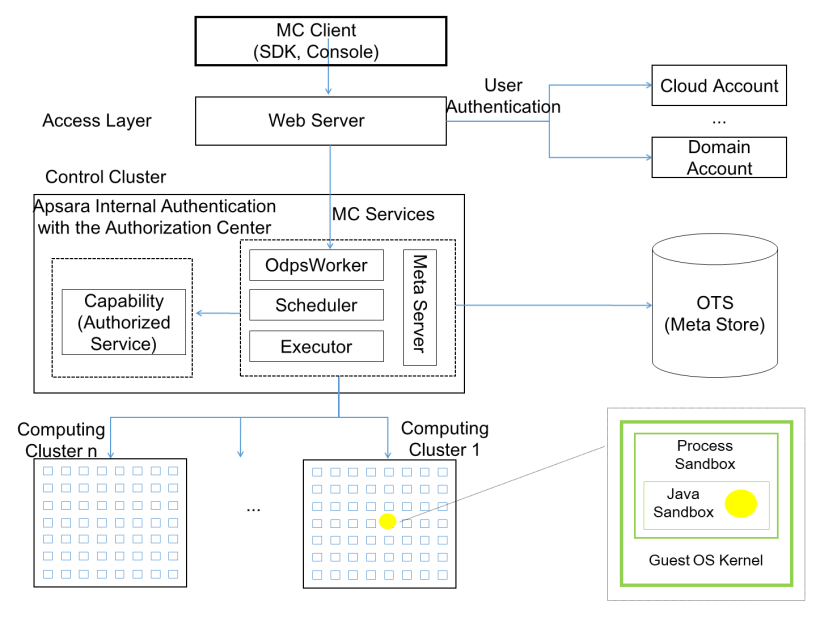

MaxCompute is a data warehouse based on big data technology. It uses Pangu (the proprietary distributed storage engine), Fuxi (the distributed resource management scheduler), and a distributed high-performance SQL engine. These are the counterparts of open-source HDFS, YARN, and Hive + Spark SQL, but their capabilities surpass the open-source versions. MaxCompute storage includes the databases and tables required by the schema-on-write mode of a data warehouse and the recently released volume for unstructured storage. MaxCompute uses an architecture that separates storage and computing to reduce costs and customer TCO in large-scale scenarios. MaxCompute provides a sandbox runtime environment so that users' UDFs and business code can run securely and flexibly in a multi-tenant environment, which removes the trouble and limitations of users managing private code outside the data platform.

MaxCompute uses the tunnel service to converge data warehousing channels and only exposes tunnel endpoints, which makes data warehousing secure. The tunnel also checks the file format and collects metadata for subsequent read and write optimization. As a result, performance improves by nearly an order of magnitude compared with Hive at minimal cost. This is the advantage of the data warehousing mode. MaxCompute also provides multiple connection methods (such as the web console, IDE studio, CMD, and SDK). The MMA migration tool helps users quickly migrate to MaxCompute. Lemming provides data collection, computing, and cloud-edge collaborative computing at the edge.

MaxCompute connects to the object storage of the OSS data lake and uses DLF to obtain the metadata of the lake to build a lakehouse. Here, MaxCompute is the warehouse and OSS is the lake. MaxCompute can also connect to the customer's Hadoop system, automatically obtain the metadata in the Hive Metastore (HMS), and map the Hive database to an external project of the MaxCompute project. The data in the warehouse can then be directly joined and computed with Hive and HDFS data without creating external tables. Here, MaxCompute is the warehouse and Hadoop is the lake.

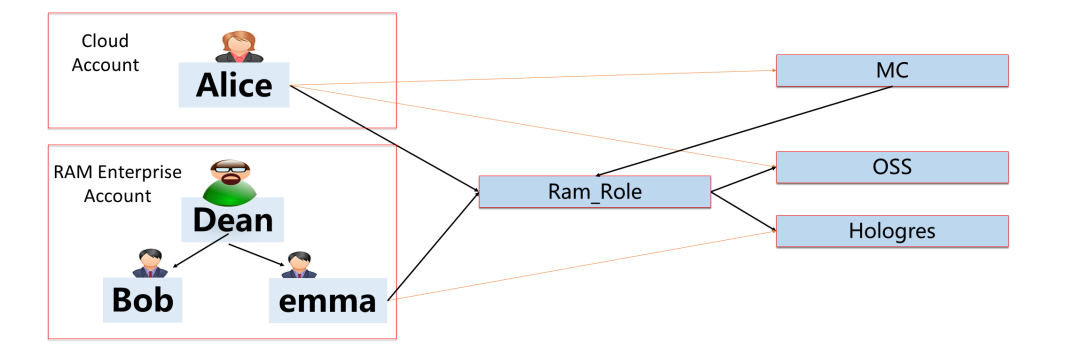

The second-party and third-party ecosystems around MaxCompute form a complete data pipeline and big data solution. MaxCompute can use DataWorks data integration to load data in batches. It can also connect directly to message queues or streaming data (such as Flink, Kafka, and Datahub) to store data in real time. Hologres integrates seamlessly with MaxCompute, with mutual permission recognition and direct reads from Pangu. Based on the data warehouse model, Hologres provides high-concurrency, low-latency interactive analytics. DataWorks, the golden partner of MaxCompute, is a development and governance tool developed together with MaxCompute. The capabilities and advantages of MaxCompute are best used together with DataWorks.

MaxCompute supports second-party engines (such as PAI, ES, OS, ADB, and SLS) for machine learning, retrieval, data mart analysis, and log processing. It also supports reports, dashboards, and big-screen applications (such as QuickBI and DataV). The data middle platform governance tools Dataphin and DataQ turn many years of MaxCompute best practices into products for customers. In addition, third-party ecosystem tools (such as Tableau and Fine) have been mutually recognized with MaxCompute, giving users more choices.

Data security can be considered from four aspects. The interpretation of MaxCompute's data security capabilities that follows maps to these questions and shows how MaxCompute addresses enterprise data security issues.

| What | Where | Who | Whether |
| --- | --- | --- | --- |
| What data are there? | Where is the data? | Who can use the data? | Is it abused? |
| What users are there? | Where can I access data? | Who used the data? | Is there a risk of leakage? |
| What permissions are there? | Where can the data be downloaded? | | Is there a risk of loss? |

Let's start with the core data security functions of MaxCompute, grouped by data abuse prevention, data leakage prevention, and data loss prevention.

Data abuse prevention includes fine-grained permission management (ACL/Policy/Role) and hierarchical management of Label Security.

Data leakage prevention includes authentication, tenant isolation, project space protection, and network isolation.

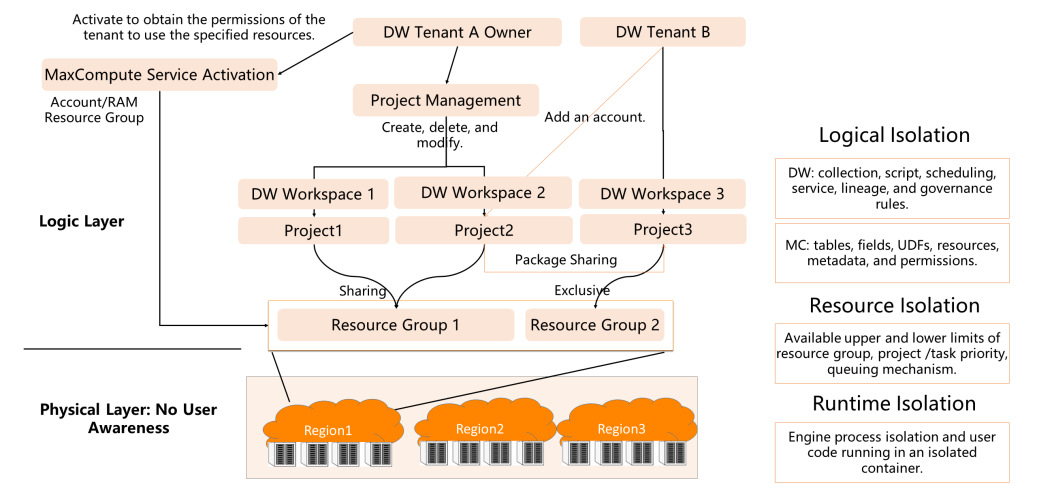

Users need to register an Alibaba Cloud master account in the current cloud system before they can apply to activate MaxCompute and create projects.

MaxCompute has two billing methods: pay-as-you-go (pay afterward according to the amount of shared resources you use) and subscription (pay in advance for exclusive resources). When you activate a MaxCompute project, you also need to activate a DataWorks workspace. DataWorks can be understood as a comprehensive development and governance tool that includes script development, scheduling, and data services. A MaxCompute project includes tables, fields, UDFs, resources, metadata, etc. A DataWorks workspace can be bound to two MaxCompute projects, one for the development environment and one for the production environment. The two projects are isolated to prevent the leakage of key sensitive data in the production environment.

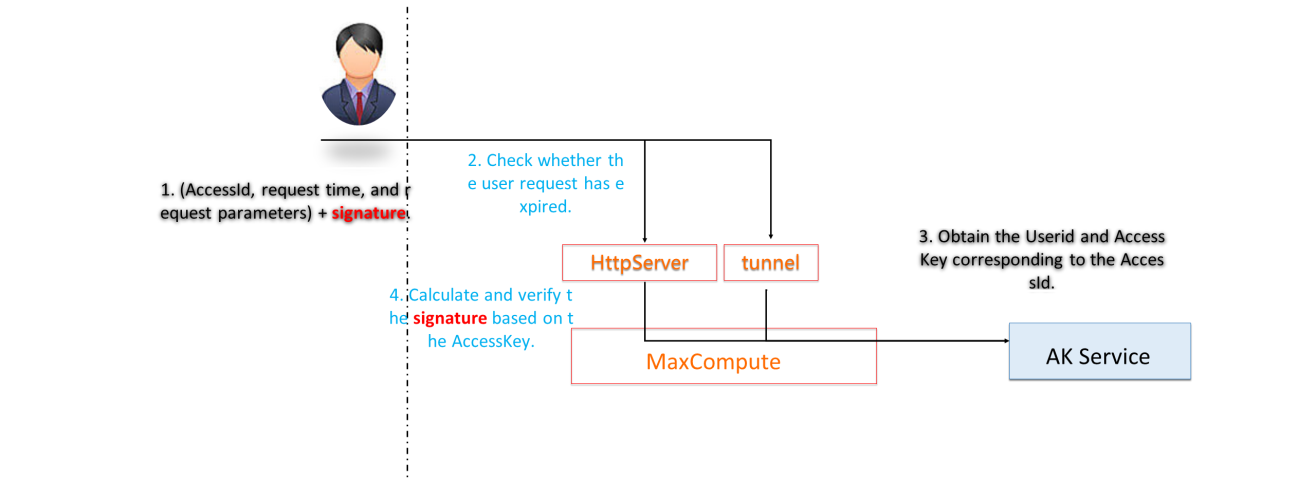

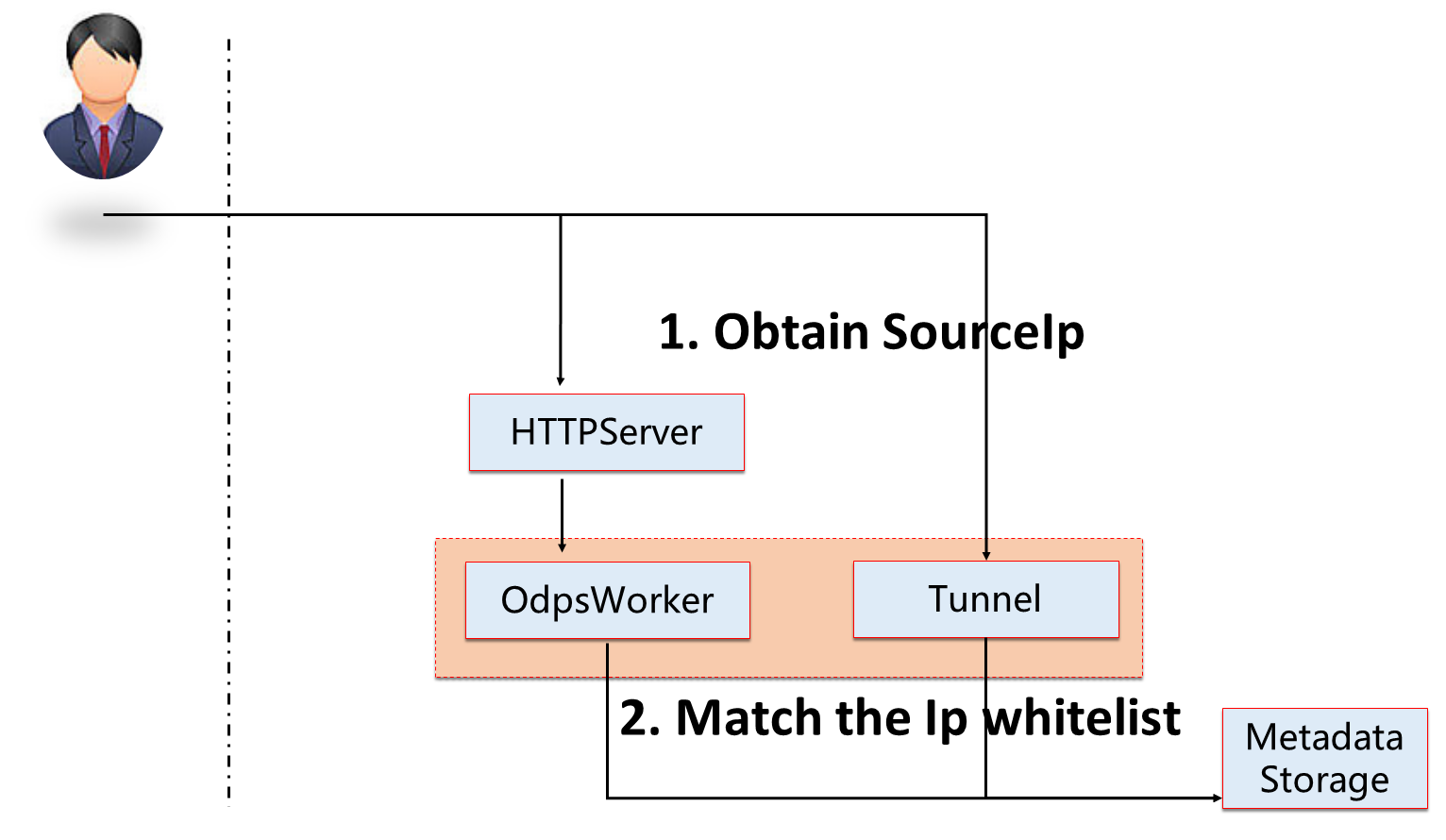

MaxCompute access authentication performs the following steps in order. Identity authentication verifies who is making the request. The request source check (IP whitelist) verifies whether network isolation is configured for the request source. The project status check verifies whether security settings (such as project protection) are enabled. Finally, the permission management rules of the MaxCompute project (LabelSecurity, Role, Policy, and ACL) are checked. I will cover the security mechanisms of MaxCompute in this order and describe the permission system in detail in the permission management section.

When a request occurs, the user sends the AccessId, request time, request parameters, and signature to the MaxCompute frontend in a fixed format. The MaxCompute frontend contains the HttpServer and the tunnel (the data upload and download channel). This process checks whether the user request has expired. MaxCompute then takes the AccessKey (AK) information in the request and compares it with the AK information held by the AK service. If the two are consistent, the user request is valid. All access to MaxCompute data and computing resources must be authenticated. User authentication verifies the identity of the request sender and checks whether the message has been tampered with in transit. Alibaba Cloud account authentication uses a message signature mechanism to guarantee the integrity and authenticity of messages during transmission.
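To make the signature check above more concrete, here is a minimal sketch of a message signature mechanism. It is not MaxCompute's actual protocol: the canonical string layout, the HMAC-SHA256 algorithm, the 15-minute validity window, and the names `AK_SERVICE`, `sign`, and `verify` are all assumptions chosen for illustration.

```python
import hashlib
import hmac
import time

# Hypothetical stand-in for the AK service: AccessId -> secret key.
AK_SERVICE = {"demo-access-id": b"demo-secret"}
MAX_SKEW_SECONDS = 15 * 60  # assumed validity window, not a documented value


def sign(secret: bytes, access_id: str, request_time: int, params: dict) -> str:
    """Build a canonical string from the request and sign it with HMAC-SHA256."""
    canonical = "\n".join(
        [access_id, str(request_time)]
        + [f"{key}={params[key]}" for key in sorted(params)]
    )
    return hmac.new(secret, canonical.encode("utf-8"), hashlib.sha256).hexdigest()


def verify(access_id, request_time, params, signature, now=None) -> bool:
    """Reject expired requests, unknown AccessIds, and mismatched signatures."""
    now = time.time() if now is None else now
    if abs(now - request_time) > MAX_SKEW_SECONDS:
        return False  # the request has expired
    secret = AK_SERVICE.get(access_id)
    if secret is None:
        return False  # unknown AccessId
    expected = sign(secret, access_id, request_time, params)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature)
```

Because the signature covers the request time and parameters, changing either one in transit invalidates the request, which is how a signature can guarantee both authenticity and integrity.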

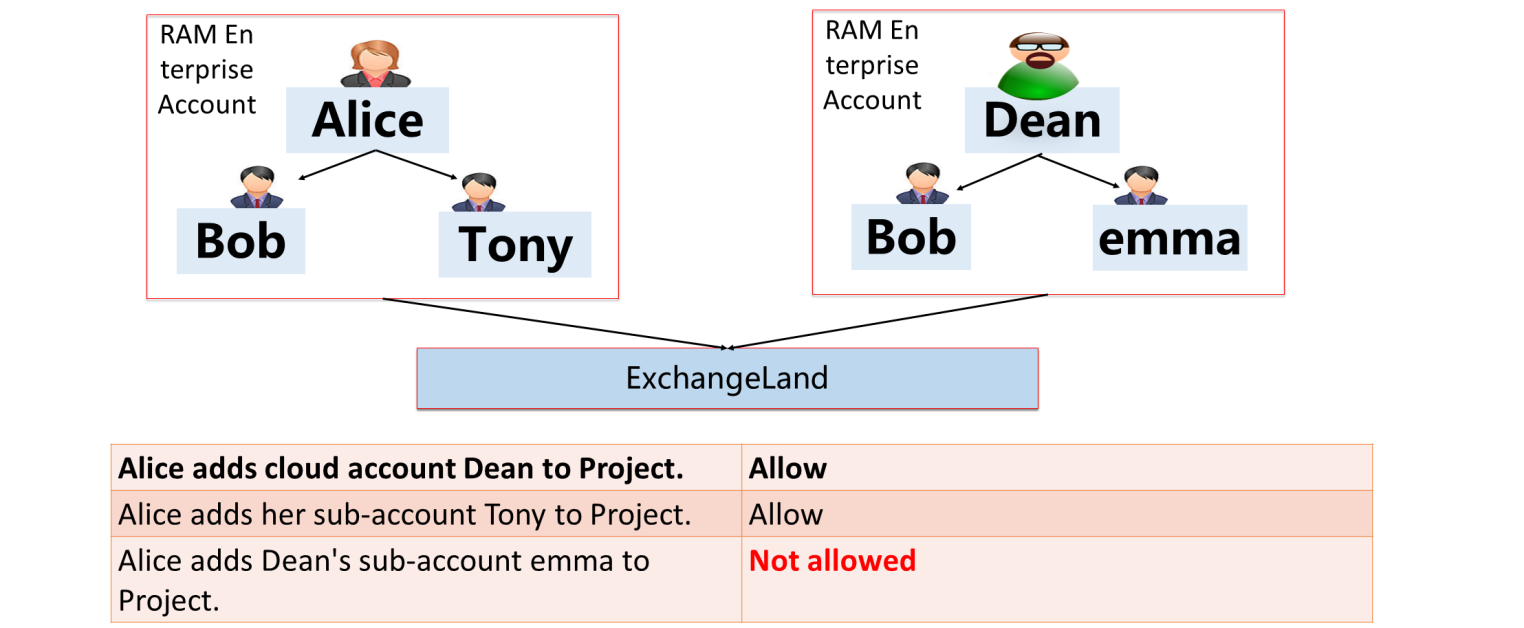

A primary account can add a RAM account under the current primary account to a MaxCompute project. It can also add another primary account to a MaxCompute project. However, a RAM account under another primary account cannot be added to a MaxCompute project.

| Role Category | Role Name | Role Description |
| --- | --- | --- |
| Account Level (Tenant) | Super_Administrator | A built-in management role of MaxCompute. Its operation permissions on MaxCompute are equivalent to those of an Alibaba Cloud account, except for creating a project, deleting a project, or activating a service. |
| Account Level (Tenant) | Admin | A built-in management role of MaxCompute. Used to manage permissions on all objects and network connections (Networklink). |
| Project Level | Project Owner | The owner of the project. After a user creates a MaxCompute project, the user is the owner of the project and has all permissions on the project. No one except the project owner can access objects in this project unless authorized by the project owner. |
| Project Level | Super_Administrator | A built-in management role of MaxCompute. This role has operation and management permissions on all resources in the project. Please see Manage Role Permissions at the Project Level for more information about permissions. Project owners or users with the Super_Administrator role can assign the Super_Administrator role to other users. |
| Project Level | Admin | A built-in management role of MaxCompute. This role has operation permissions on all resources in a project and some basic management permissions. Please see Manage Role Permissions at the Project Level for more information about permissions. The project owner can assign the Admin role to other users. A user assigned the Admin role cannot assign the role to other users, configure security policies for the project, modify the authentication models of the project, or modify the corresponding permissions. |
| Project Level | Custom Role | A role that is not built into MaxCompute and needs to be defined by the user. |

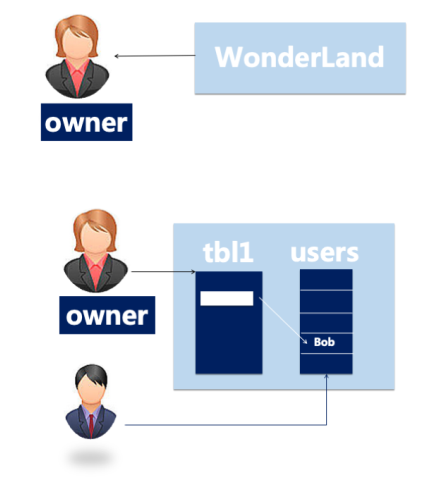

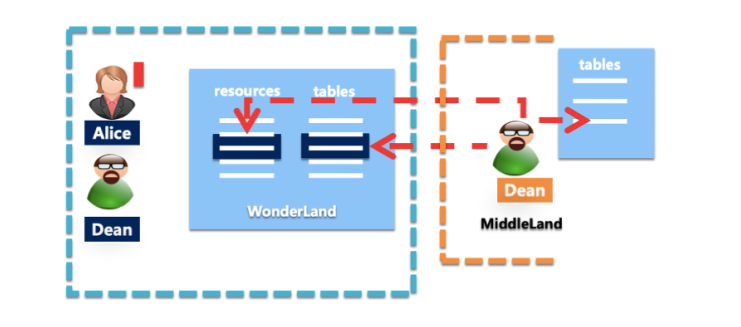

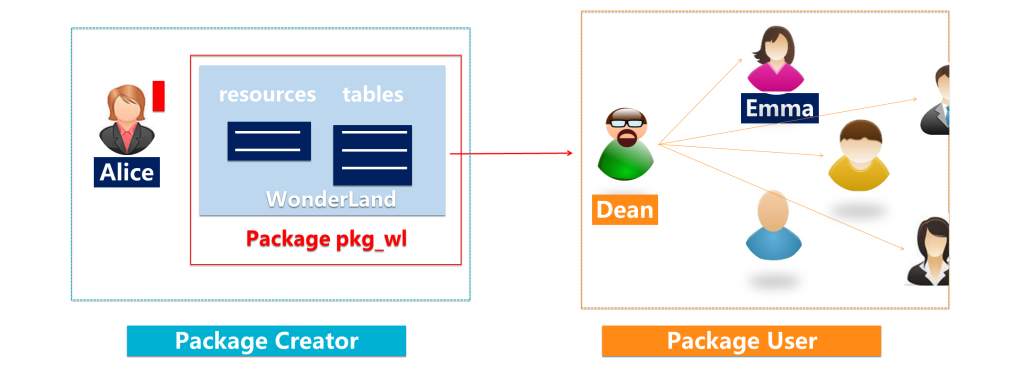

Alice creates a project named WonderLand and becomes the Owner.

No one else can access WonderLand without Alice's authorization.

Alice wants to authorize Bob to access some objects in WonderLand:

First, Bob needs to have a valid Alibaba Cloud account or a RAM sub-account under Alice's account.

Then, Alice will add Bob's account to the project.

Finally, Alice grants Bob permissions on some objects.

If Alice wants to prohibit Bob from accessing the project, she can remove his account from the project.

Although Bob has been removed from the project, the permissions granted to him remain in the project.

Next time Alice adds Bob to the same project, the original permission will be activated unless Bob's permissions are completely cleared.
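The Alice and Bob story can be sketched as a toy model. This is only an illustration of the behavior described above (membership gates access, and grants survive removal until they are cleared). The `Project` class and its method names are hypothetical and are not MaxCompute APIs.

```python
class Project:
    """Toy model of project membership; not the MaxCompute implementation."""

    def __init__(self, name, owner):
        self.name = name
        self.owner = owner
        self.members = {owner}
        self.grants = {}  # user -> set of (action, object) pairs

    def add_user(self, user):
        self.members.add(user)

    def remove_user(self, user):
        # Removing a user only ends membership; recorded grants are kept.
        self.members.discard(user)

    def grant(self, user, action, obj):
        if user not in self.members:
            raise ValueError(f"{user} must be added to {self.name} first")
        self.grants.setdefault(user, set()).add((action, obj))

    def purge_grants(self, user):
        # Explicitly clear everything that was ever granted to the user.
        self.grants.pop(user, None)

    def can(self, user, action, obj):
        if user == self.owner:
            return True  # the owner has all permissions on the project
        return user in self.members and (action, obj) in self.grants.get(user, set())
```

In this model, removing Bob and adding him back restores his old access, which is why permissions should be cleared explicitly when a user leaves for good.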

When a request arrives, MaxCompute performs a project-level check of the request IP against the whitelist stored in the MaxCompute metadata. If the IP matches, access is allowed. The whitelist can contain fixed IPs, masks (CIDR blocks), or IP segments. Look at the following example.

Scope of action: project space

Whitelist format: 101.132.236.134, 100.116.0.0/16, 101.132.236.134-101.132.236.144

Configure the whitelist: adminConsole; setproject odps.security.ip.whitelist=101.132.236.134,100.116.0.0/16

Close the whitelist: Clear the whitelist *
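The three whitelist formats above can be matched with Python's standard `ipaddress` module. The following is a minimal sketch of the matching logic only. The function names `parse_whitelist` and `is_allowed` are hypothetical, and treating an empty whitelist as "check disabled" is an assumption based on the "clear the whitelist" note above.

```python
import ipaddress


def parse_whitelist(spec: str):
    """Turn a comma-separated whitelist into (first, last) address pairs."""
    ranges = []
    for entry in (part.strip() for part in spec.split(",")):
        if not entry:
            continue
        if "-" in entry:  # IP segment such as a.b.c.d-e.f.g.h
            start, end = (ipaddress.ip_address(x.strip()) for x in entry.split("-", 1))
        elif "/" in entry:  # mask (CIDR block)
            network = ipaddress.ip_network(entry, strict=False)
            start, end = network[0], network[-1]
        else:  # single fixed IP
            start = end = ipaddress.ip_address(entry)
        ranges.append((start, end))
    return ranges


def is_allowed(client_ip: str, spec: str) -> bool:
    """An empty whitelist means the check is disabled, so every IP passes."""
    ranges = parse_whitelist(spec)
    if not ranges:
        return True
    address = ipaddress.ip_address(client_ip)
    return any(start <= address <= end for start, end in ranges)
```

For example, with the whitelist from the text, `is_allowed("100.116.200.7", ...)` passes because the address falls inside `100.116.0.0/16`, while `101.132.236.145` is rejected because it lies just outside the IP segment.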

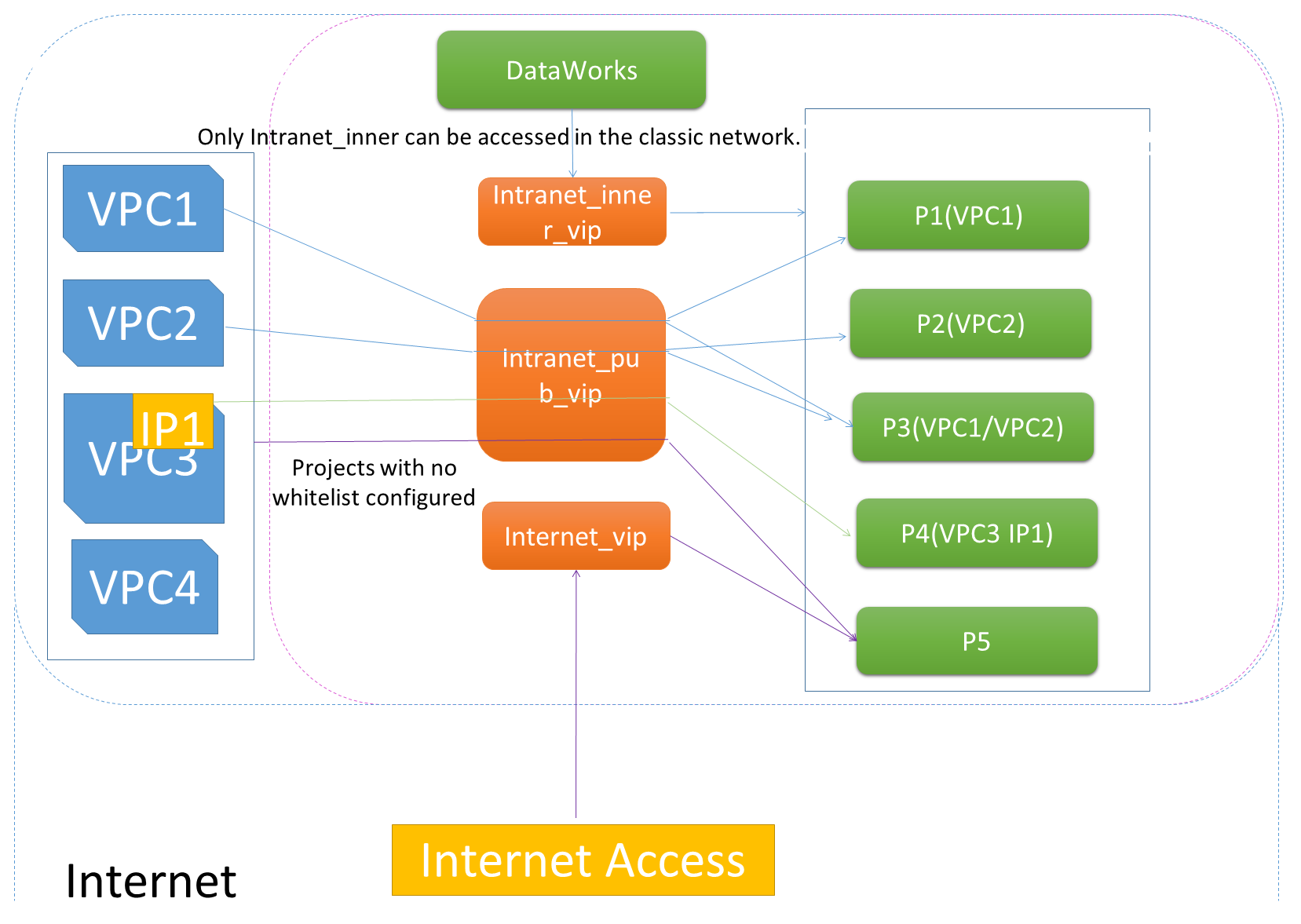

As a massive data processing platform developed by Alibaba Cloud, MaxCompute needs to meet the requirements of security isolation specifications. MaxCompute supports virtual private clouds (VPCs), which allow you to isolate data. Limits are imposed when you use MaxCompute in VPCs. Currently, MaxCompute supports VPC:

The following figure shows a specific example. In the following figure, the green part is the classic network, the blue part is the user's VPC network, and the red part is the public cloud access.

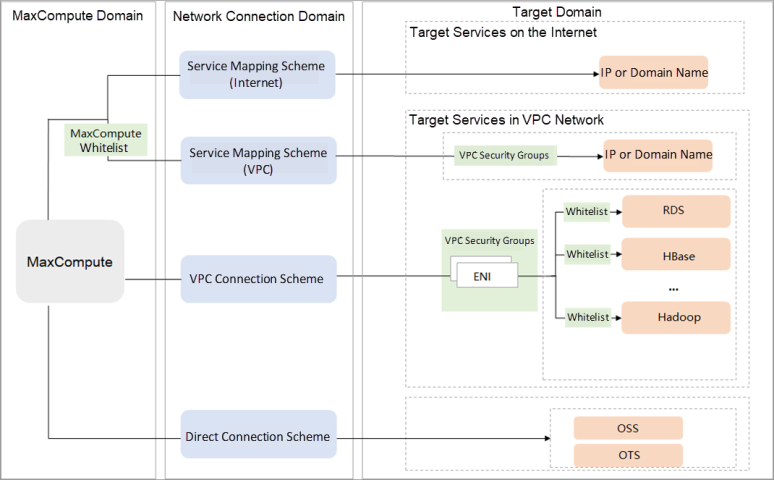

You can use this scheme if you want to access a public IP address or a public endpoint by calling a UDF or using an external table. If you want to use this scheme, you must submit a ticket. If no security limits are imposed on the destination IP address or endpoint, you can access the IP address or endpoint after the application is approved.

You can use this scheme if you want to access an IP address or endpoint in a VPC by calling a UDF or using an external table when MaxCompute is connected to the VPC. You only need to add the CIDR blocks of the region where your MaxCompute project resides to the security group of the VPC and add the VPC in which the destination IP address or endpoint resides to your MaxCompute project. After you grant mutual access between MaxCompute and the VPC, MaxCompute can access the destination IP address or endpoint in the VPC.

You can use this scheme if you want to access an ApsaraDB RDS instance, an ApsaraDB for HBase cluster, or a Hadoop cluster that resides in a VPC by using an external table, calling a UDF, or using the data lakehouse solution. If you want to use this scheme, you must perform authorization and configure security groups in the VPC console. You must also establish a network connection between MaxCompute and the VPC and configure security groups for services (such as an ApsaraDB RDS instance, an ApsaraDB for the HBase cluster, or a Hadoop cluster in the MaxCompute console) to establish a network connection between MaxCompute and the destination service.

You can use this scheme if you want to access Alibaba Cloud OSS or OTS (Tablestore) by calling a UDF or using an external table. If you want to establish a network connection between MaxCompute and OSS or OTS services, you do not need to apply for activating the VPC service.

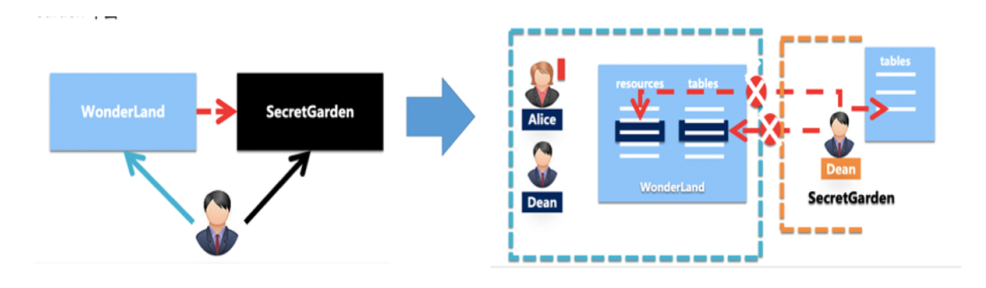

If there are projects (WonderLand and SecretGarden), the following risks may cause data to flow out.

When project protection mode is enabled (that is, the ProjectProtection rule is set), data can only flow in, not out.

set ProjectProtection = true

Once this is set, the four operations above become invalid since they all violate the ProjectProtection rule.

When project protection is enabled but some tables still need to be allowed to flow out, there are two schemes.

Scheme 1: Attach an exception policy while setting Project Protection:

set ProjectProtection = true with exception

Scheme 2: If two related projects are set to TrustedProject, the data flow will not be considered a violation:

add trustedproject = SecretGarden
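The decision logic behind project protection and its two escape hatches can be sketched as follows. This is a simplified model under stated assumptions: the `ProtectedProject` class, its fields, and the reduction of an exception policy to a set of (user, table) pairs are all hypothetical and do not reflect the real exception policy format.

```python
from dataclasses import dataclass, field


@dataclass
class ProtectedProject:
    name: str
    protection: bool = False
    trusted: set = field(default_factory=set)     # names of trusted projects
    exceptions: set = field(default_factory=set)  # (user, table) pairs allowed out

    def may_flow_out(self, user: str, table: str, target_project: str) -> bool:
        """Decide whether `table` may leave this project for `target_project`."""
        if target_project == self.name:
            return True  # data stays inside the project
        if not self.protection:
            return True  # ProjectProtection is off
        if target_project in self.trusted:
            return True  # scheme 2: the target is a trusted project
        return (user, table) in self.exceptions  # scheme 1: exception policy
```

With protection on, a flow from WonderLand to SecretGarden is rejected until SecretGarden is trusted or the specific user and table are covered by an exception.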

Security Features:

Protected MaxCompute projects allow data to flow in and prohibit data from flowing out without explicit authorization.

Scenarios:

Prevent highly sensitive data (such as corporate employee salaries) from being arbitrarily exported to unprotected projects.

Implementation Logic:

Set the MaxCompute project where highly sensitive data is located to the strong protection mode so the data can only be accessed in the currently protected project. Even if users obtain data access permission, they cannot read the data from outside the project or export it. New data generated after processing or masking can flow out of the protected project after explicit authorization.

Security Features:

A protected MaxCompute project performs multi-factor verification on data access. Over 20 dimensions are verified, including data outbound destinations (IP addresses and target project names) and restricted data access time intervals. Even if the password of the user account is leaked, the data cannot be taken at will.

Scenarios:

Data exchange in guarantee (escrow) mode

Scenario Description:

Two units want to use each other's sensitive data, but they are unwilling and not allowed to give the data directly to each other. What should they do?

Implementation Logic: Both parties import data into the protected project (a black box), complete the integration and processing of both sides' data inside the protected project, and generate insensitive result data. After explicit authorization, the result data can be exported from the protected project and returned to both parties. As such, both sides use each other's data but cannot take it away.

The sequence of permission checks for MaxCompute is listed below:

LabelSecurity label check → Policy check (a DENY returns directly) → ACL check (permissions of bound roles and of the user are superimposed) → cross-project package permission check

Let's talk about the permission-related functions of MaxCompute in this order.
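The check order listed above can be pictured as a short decision chain. The following sketch only encodes the ordering from the text. How the four results combine (for example, that a policy Allow is sufficient on its own) is an assumption, and `check_access` is a hypothetical helper, not a MaxCompute function.

```python
def check_access(label_ok, policy_effect, acl_allows, package_allows):
    """Walk the four checks in the listed order and report the first decision.

    policy_effect is "Deny", "Allow", or None when no policy rule matches.
    Returns a (granted, deciding_stage) tuple.
    """
    if not label_ok:
        return (False, "label")    # LabelSecurity rejects the request
    if policy_effect == "Deny":
        return (False, "policy")   # an explicit DENY returns immediately
    if policy_effect == "Allow":
        return (True, "policy")
    if acl_allows:
        return (True, "acl")       # user grants combined with role grants
    if package_allows:
        return (True, "package")   # cross-project package permission
    return (False, "no-grant")
```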

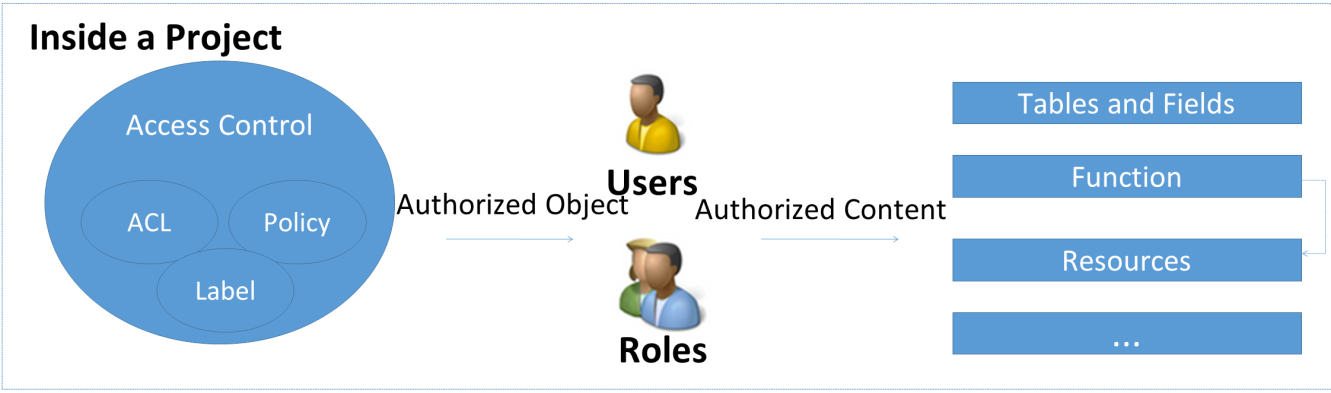

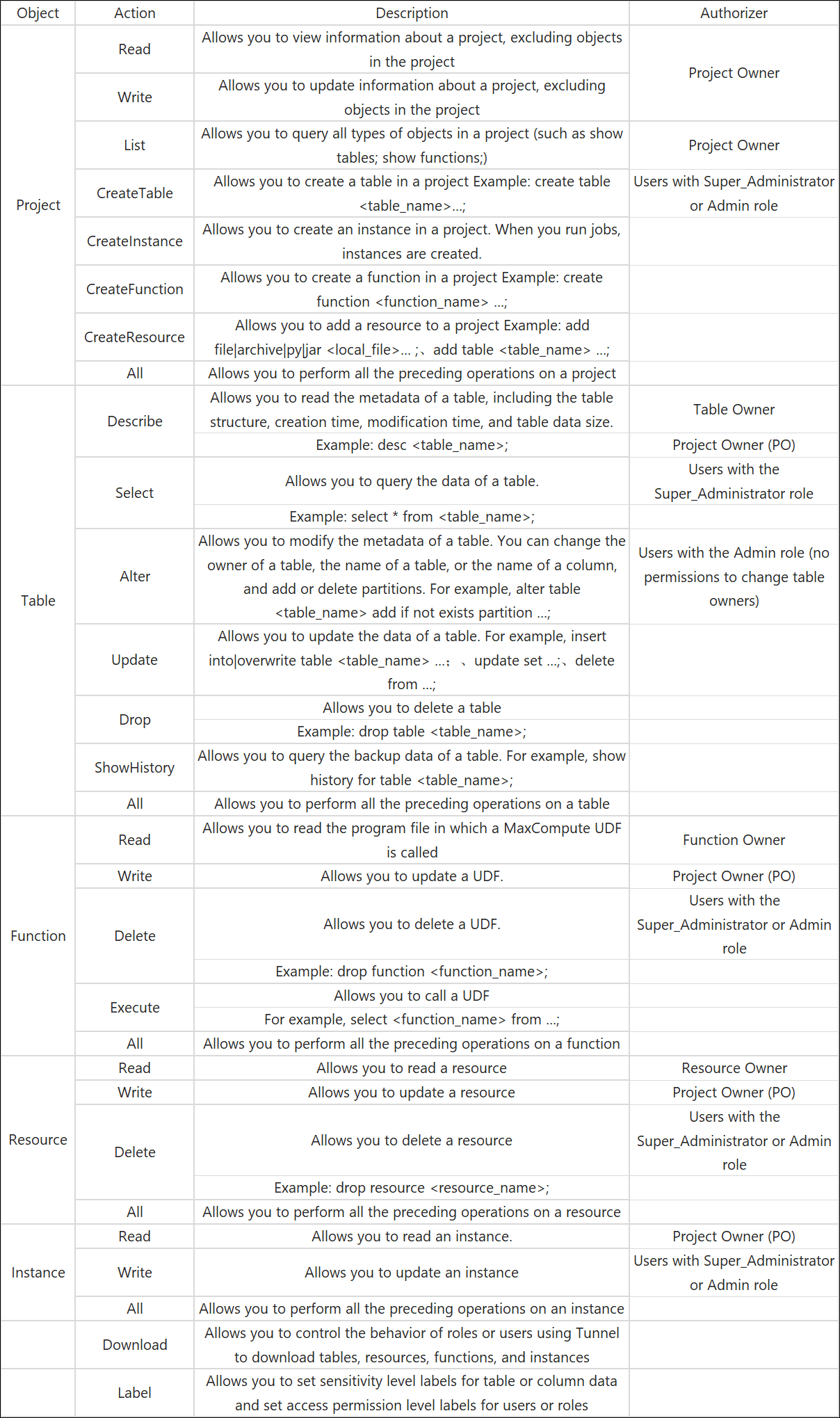

Authorization requires three parts: subject (the authorized person), object (an object or behavior in the MaxCompute project), and operation (which depends on the object type). During authorization, you add users to the project and assign them one or more roles so that they inherit all permissions of those roles.

Objects that can be authorized include tables (which can be authorized down to the field level), functions, and resources.

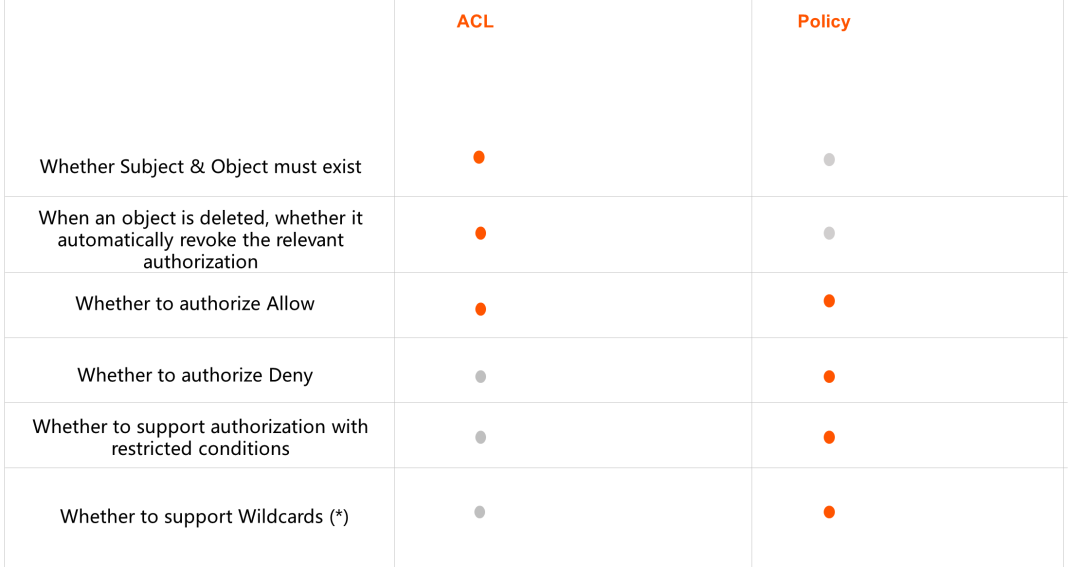

There are three access control methods: ACL (Access Control List), Policy, and Label.

Authorization Object:

Single user and role

Scenario Description:

When the tenant Owner decides to authorize user B, the Owner first adds user B to the tenant. Only users added to a tenant can be authorized. After user B joins the tenant, user B can be assigned one or more roles (or no roles) and automatically inherits all the permissions of those roles. When a user leaves the project, the tenant Owner needs to remove the user from the tenant. Once removed from a tenant, the user no longer has any permission to access the resources of this tenant.

The ACL-based access control method is implemented based on a whitelist mechanism, which allows specified actions on an object for a user or role. The ACL-based access control method is easy to use and helps implement precise access control.

Subject: The authorized person who must exist in the project.

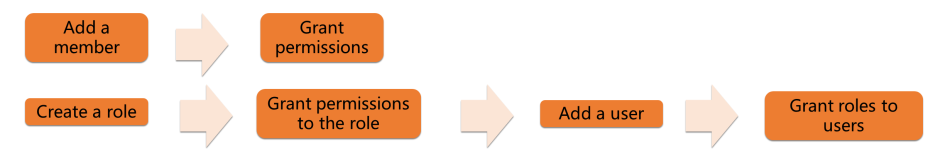

Therefore, there are two ways to add and authorize users in a project: add users and authorize them directly, or create and authorize roles, add users, and then grant the roles to the users.

Examples

grant CreateTable, CreateInstance, List on project myprj to user Alice - Add user permissions

grant worker to aliyun$abc@aliyun.com - Add a user to a role

revoke CreateTable on project myprj from user Alice - Delete user permissions
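The whitelist nature of ACL authorization can be illustrated with a small in-memory model that mirrors the grant and revoke examples above. This is a sketch for intuition only. The `Acl` class and its methods are hypothetical and say nothing about how MaxCompute stores or evaluates ACLs.

```python
from collections import defaultdict


class Acl:
    """Whitelist-style ACL: anything not explicitly granted is denied."""

    def __init__(self):
        self.user_grants = defaultdict(set)  # user -> {(action, object)}
        self.role_grants = defaultdict(set)  # role -> {(action, object)}
        self.user_roles = defaultdict(set)   # user -> {role}

    def grant(self, actions, obj, *, user=None, role=None):
        target = self.user_grants[user] if user else self.role_grants[role]
        target.update((action, obj) for action in actions)

    def revoke(self, actions, obj, *, user=None, role=None):
        target = self.user_grants[user] if user else self.role_grants[role]
        target.difference_update((action, obj) for action in actions)

    def grant_role(self, role, user):
        self.user_roles[user].add(role)

    def allowed(self, user, action, obj):
        effective = set(self.user_grants[user])
        for role in self.user_roles[user]:
            effective |= self.role_grants[role]  # users inherit role permissions
        return (action, obj) in effective
```

Granting to a role once and then attaching users to it is usually easier to maintain than granting the same actions to each user individually.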

Permission Description

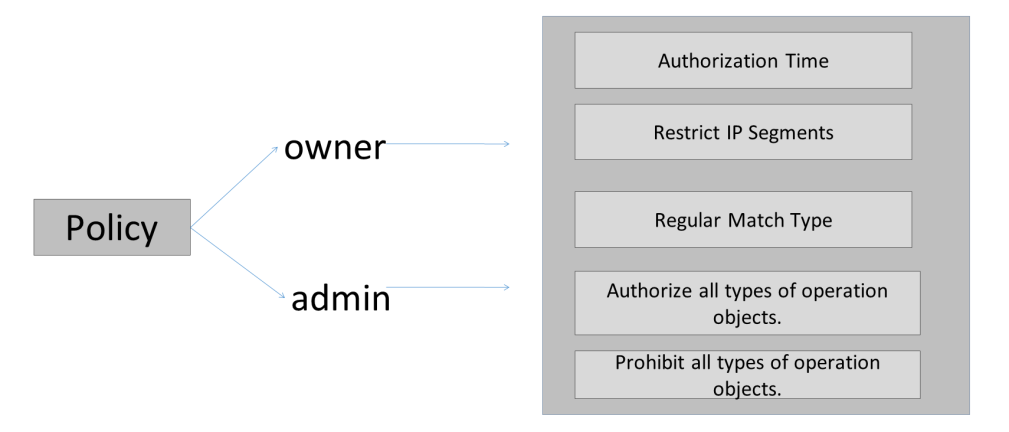

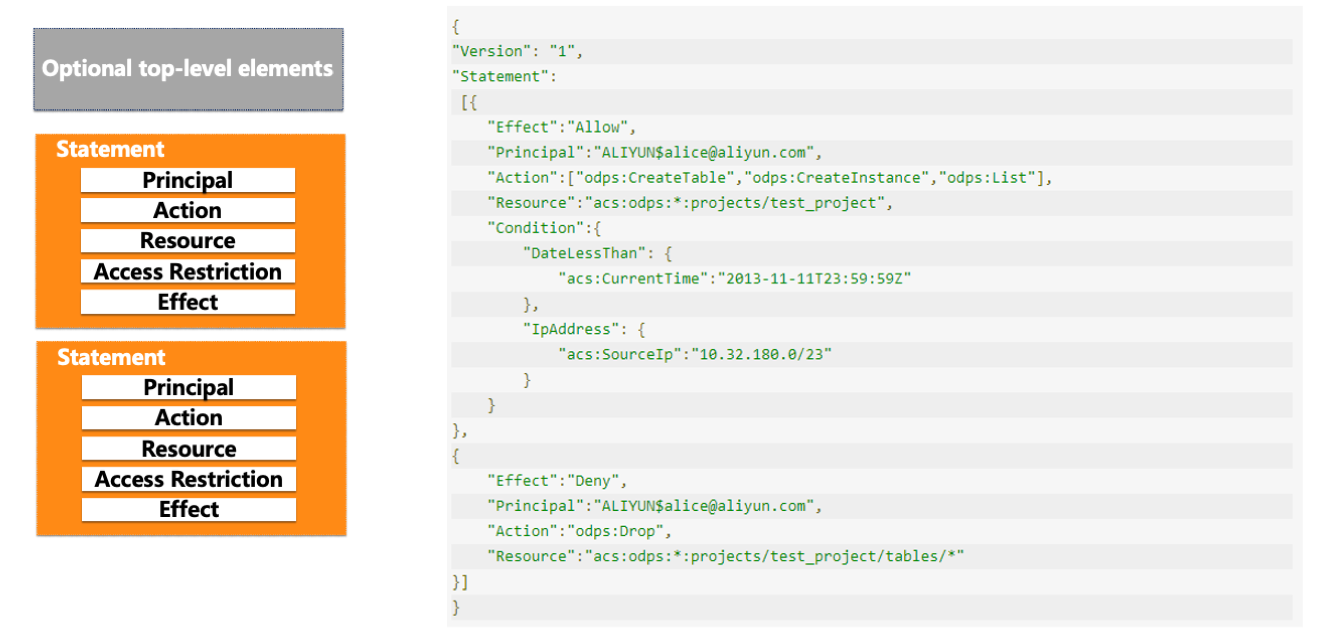

Policy Structure and Examples

If a user has been assigned a built-in role and the operation permissions of the user need to be managed in a more refined manner, the ACL permission control solution cannot be used to resolve such authorization issues. As such, you can use the Policy permission control scheme to add a role, allow or prohibit the role from manipulating objects in a project, and bind the role to a user to implement fine-grained control over user permissions.

The policy supports the following syntax to define rules for subjects, behaviors, objects, conditions, and effects, such as limiting access time and access IP addresses.

The first two permission control methods (ACL and Policy) belong to Discretionary Access Control (DAC).
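To show how a policy differs from an ACL (it can deny as well as allow, and can carry conditions such as the access IP), here is a minimal evaluator. The rule layout below is hypothetical and is not the MaxCompute policy syntax. The names `RULES`, `matches`, and `evaluate` are assumptions made for this sketch.

```python
from fnmatch import fnmatchcase
from ipaddress import ip_address, ip_network

# Hypothetical rule layout, loosely modeled on common cloud policy documents.
RULES = [
    {"effect": "Allow", "role": "analyst", "actions": ["Select", "Describe"],
     "resource": "projects/myprj/tables/*", "source_ip": "100.116.0.0/16"},
    {"effect": "Deny", "role": "analyst", "actions": ["Select"],
     "resource": "projects/myprj/tables/salary*"},
]


def matches(rule, role, action, resource, client_ip):
    """A rule applies when role, action, resource pattern, and condition all match."""
    if rule["role"] != role or action not in rule["actions"]:
        return False
    if not fnmatchcase(resource, rule["resource"]):
        return False
    cidr = rule.get("source_ip")  # optional condition on the caller's IP
    return cidr is None or ip_address(client_ip) in ip_network(cidr)


def evaluate(rules, role, action, resource, client_ip):
    """Return True only if some rule allows the request and no rule denies it."""
    hits = [r["effect"] for r in rules if matches(r, role, action, resource, client_ip)]
    if "Deny" in hits:
        return False  # an explicit Deny always wins
    return "Allow" in hits
```

Here the analyst role can read ordinary tables from the allowed network, but the Deny rule still blocks any table whose name starts with `salary`, even though the broader Allow rule also matches it.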

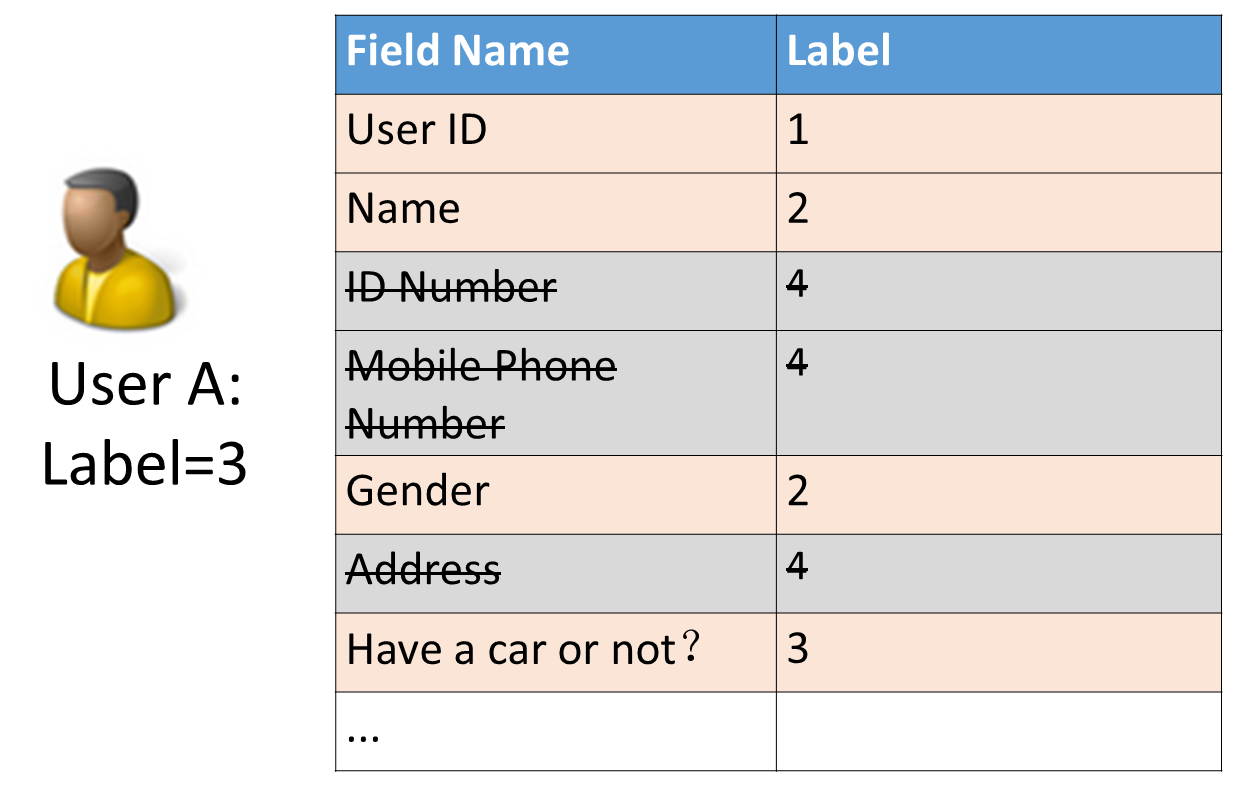

LabelSecurity is a mandatory access control (MAC) at the project level. It is introduced to allow project administrators to flexibly control user access to sensitive data at the column level.

The mandatory access control is independent of the discretionary access control (DAC). For a better understanding, the relationship between MAC and DAC can be compared with the following example.

Let's imagine there is a country (analogy to a project). Citizens of this country must apply for a driver's license first (analogy to apply for SELECT permission) if they want to drive (analogy to read data). These are within the scope of DAC considerations.

Since the country's traffic accident rate has been high, the country has added a new law: prohibit drunk driving. Since then, everyone that wants to drive must not drink alcohol in addition to having a driver's license. This ban on drunk driving is equivalent to a ban on reading highly sensitive data. This falls into the category of MAC considerations.

LabelSecurity applies the following default security policies based on the sensitivity levels of data and users:

For example, the label of user A below is level 3. The user has the select permission on this table and would normally be able to query all data in it. However, after LabelSecurity is enabled, data at level 4 cannot be accessed.

Security Features

Users are not allowed to read data with a sensitivity level higher than the user level (No-ReadUp) unless there is an authorization.

Scenario

Restrict read access to certain sensitive columns of a table by all non-Admin users.

Implementation Logic

If the Admin user is set to the highest level and the non-Admin user is set to a certain general level (for example, Label=2), no one except the Admin user can access the data with Label>2.

Security Features

Users are not allowed to write data whose sensitivity level is not higher than the user level (No-WriteDown).

Scenario

Restrict users that have been granted access to sensitive data from wanton dissemination and replication of sensitive data.

Scenario Description

John obtained access to sensitive data at level 3 due to business needs. However, the administrator worries that John may write the level 3 data into columns with sensitivity level 2, which would let Jack, who can only access data at sensitivity level 2, read it.

Implementation Logic

Set the security policy to No-WriteDown to prevent John from writing data to columns lower than his level.
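The two default LabelSecurity rules reduce to simple level comparisons. The sketch below follows the scenarios in this section and reads No-WriteDown as "no writes into columns below the user's level", as in the John example. The function names are hypothetical, and real label handling has more options than shown here.

```python
def can_read(user_level: int, data_level: int, explicit_grant: bool = False) -> bool:
    """No-ReadUp: reading above the user's level needs explicit authorization."""
    return data_level <= user_level or explicit_grant


def can_write(user_level: int, column_level: int) -> bool:
    """No-WriteDown (as sketched here): block writes into lower-level columns."""
    return column_level >= user_level


def visible_columns(user_level: int, column_levels: dict) -> list:
    """Columns a user with SELECT permission can still read under LabelSecurity."""
    return [name for name, level in column_levels.items() if can_read(user_level, level)]
```

For a level 3 user, `visible_columns(3, {"id": 0, "name": 2, "salary": 4})` keeps `id` and `name` and drops `salary`, which matches the level 4 example above.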

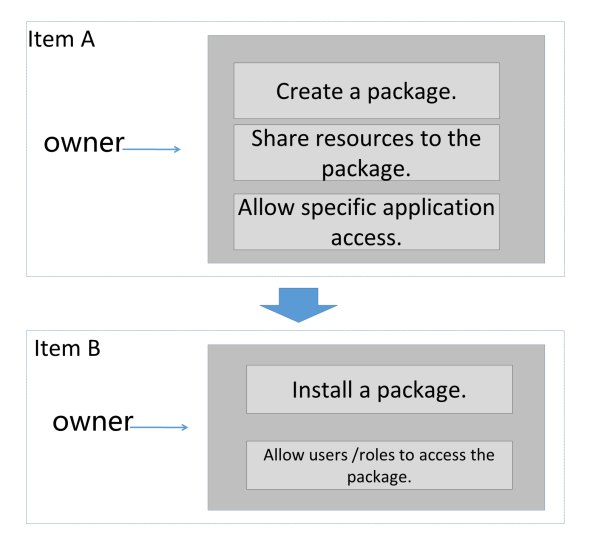

A package is used to share data and resources across projects. You can use a package to implement cross-project user authorization:

Manage a package

Install a package

Manage resources in a package

Authorize the resources of the package to the local user role

Manage the authorized project of the package

Data providers control shared data when they create a package, add resources to it, and authorize the projects that can install it. Data consumers manage data usage and permissions when they install a package and grant the package resources to local user roles.
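The provider and consumer sides of package sharing can be sketched as two small classes. This is a toy model of the workflow described above, not the MaxCompute package commands. `Package`, `ConsumerProject`, and their methods are hypothetical names.

```python
class Package:
    """Provider side: a named bundle of shared permissions."""

    def __init__(self, name, provider):
        self.name = name
        self.provider = provider
        self.resources = set()         # (action, object) pairs shared by the provider
        self.allowed_projects = set()  # projects permitted to install the package


class ConsumerProject:
    """Consumer side: installs packages and maps them to local roles."""

    def __init__(self, name):
        self.name = name
        self.installed = {}      # package name -> Package
        self.role_packages = {}  # local role -> set of package names

    def install(self, package):
        if self.name not in package.allowed_projects:
            raise PermissionError(f"{self.name} is not allowed to install {package.name}")
        self.installed[package.name] = package

    def grant_package(self, package_name, role):
        if package_name not in self.installed:
            raise KeyError(f"{package_name} is not installed")
        self.role_packages.setdefault(role, set()).add(package_name)

    def role_can(self, role, action, obj):
        return any(
            (action, obj) in self.installed[name].resources
            for name in self.role_packages.get(role, ())
        )
```

The provider decides what is shared and with which projects, while the consumer decides which local roles may actually use the shared resources.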

Let's look at other platform-level support capabilities of MaxCompute to ensure data security.

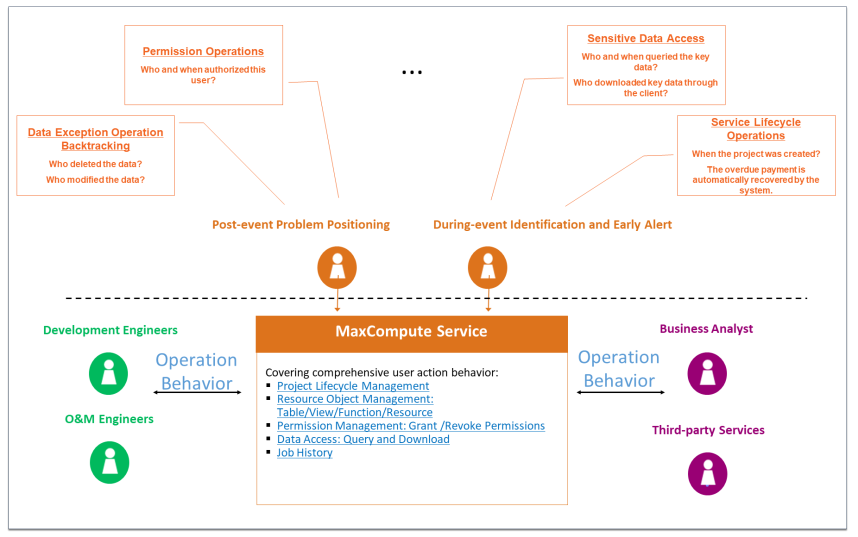

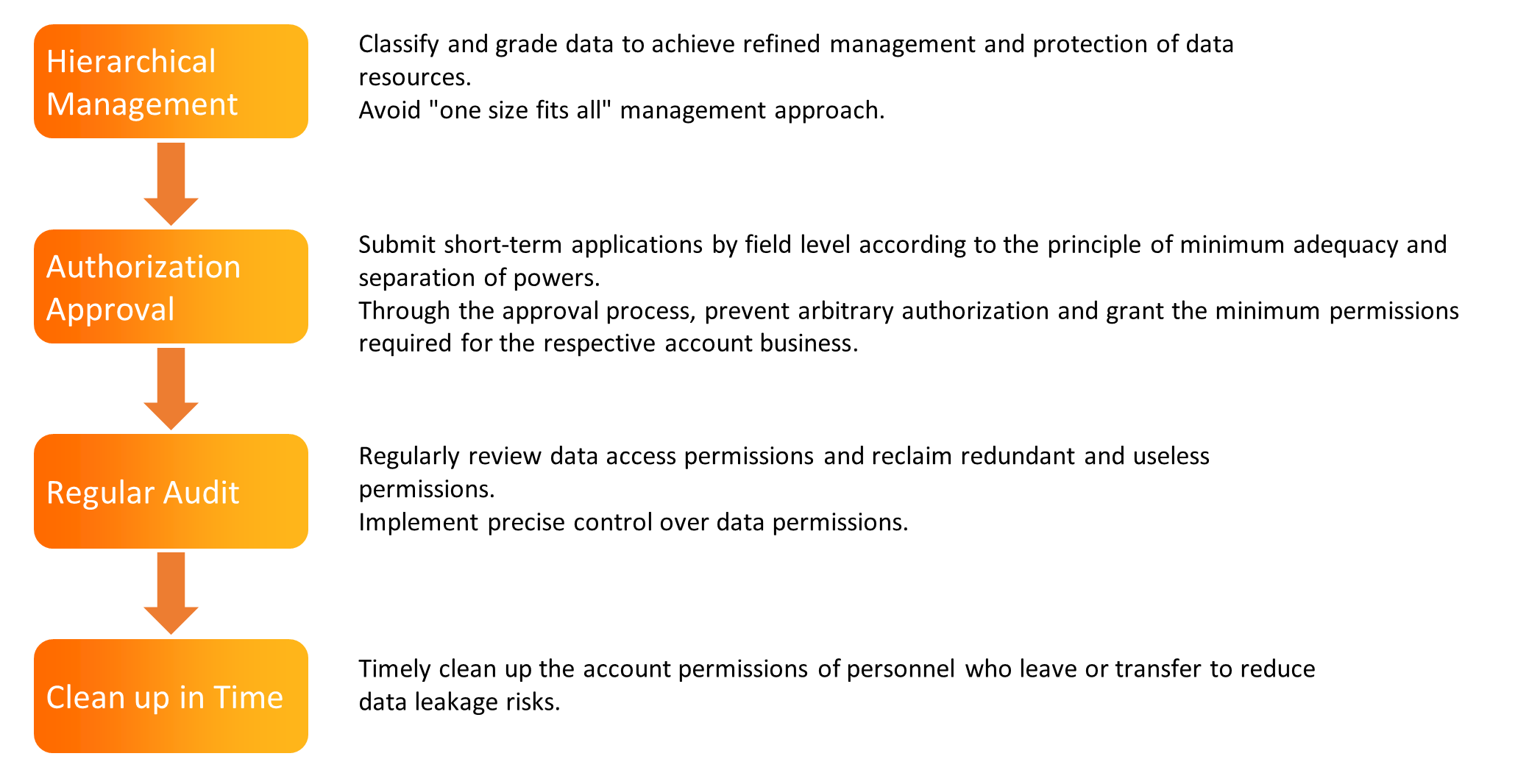

Data abuse prevention includes regular audits.

Data leakage prevention includes sandbox isolation and storage/transmission encryption.

Data loss prevention includes backup and disaster recovery.

All computing in MaxCompute is running in limited sandboxes, which are multi-layered, from KVM to Kernel. The system sandbox is used with the authentication management mechanism to ensure data security to avoid server failures caused by malicious or careless internal personnel. The user's business processes are managed on the MaxCompute engine side for execution, so the permissions are isolated. However, user-submitted UDF/MR programs may:

MaxCompute is different from other multi-tenant systems. Other systems require user code to be implemented in an external environment and then interfaced with the platform, so users need to implement, debug, and connect their code in other environments and ensure concurrency and security themselves. MaxCompute's fully managed mode allows your private code to run in a sandbox environment. MaxCompute takes care of concurrency and security isolation, so it is easy to use and leaves users completely free to implement user-defined logic.

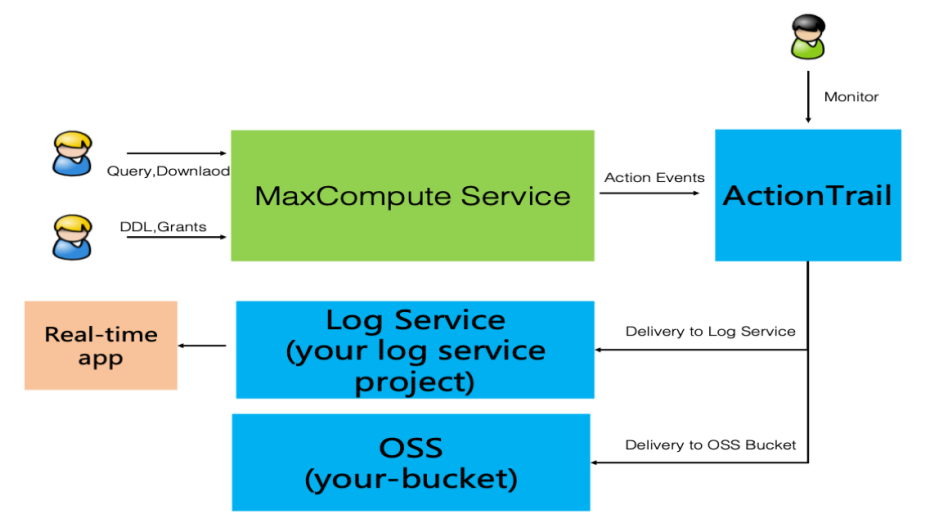

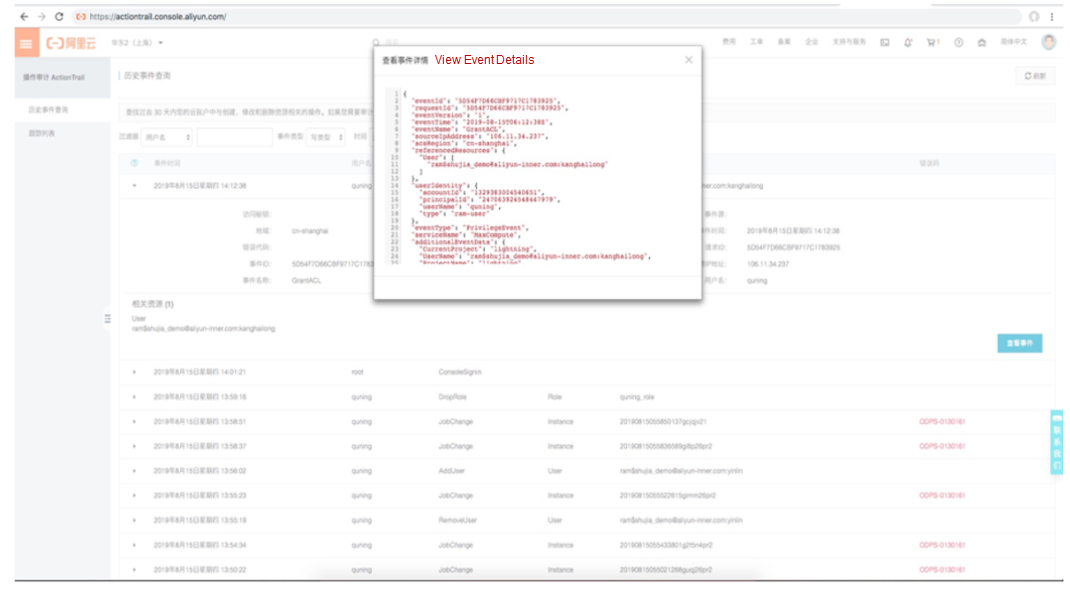

MaxCompute provides accurate and fine-grained data access operation records that are stored for a long time. The MaxCompute platform depends on a large number of functional service modules, which we can call the underlying service stack. MaxCompute collects all operation records across the service stack, from table-level and column-level data access logs in the upper layer to data operation logs on the underlying distributed file system. Each data access request processed on the underlying distributed file system can be traced back to the user and the job in the MaxCompute project that initiated it. Logs cover operations (such as task, tunnel, and endpoint access) and information (such as authorization, label, and package). Please see Meta Warehouse or information_schema for more information.

Record user operations in a MaxCompute project:

Access the Alibaba Cloud Action Trail service: view retrieval + shipping (logs /OSS):

Meet customer requirements (such as real-time audit and problem backtracking analysis):

Transmission Encryption: MaxCompute uses a RESTful interface. Server access uses HTTPS, which secures task calls and data transmission.

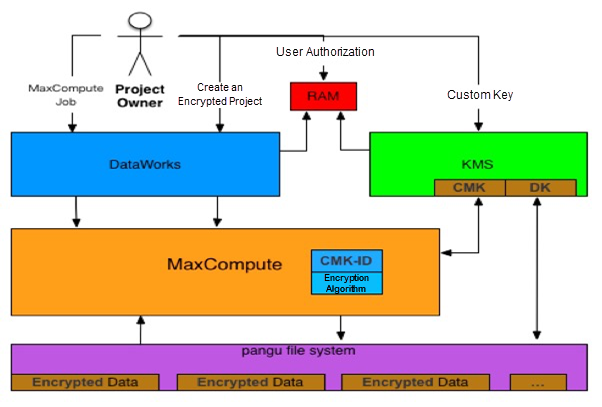

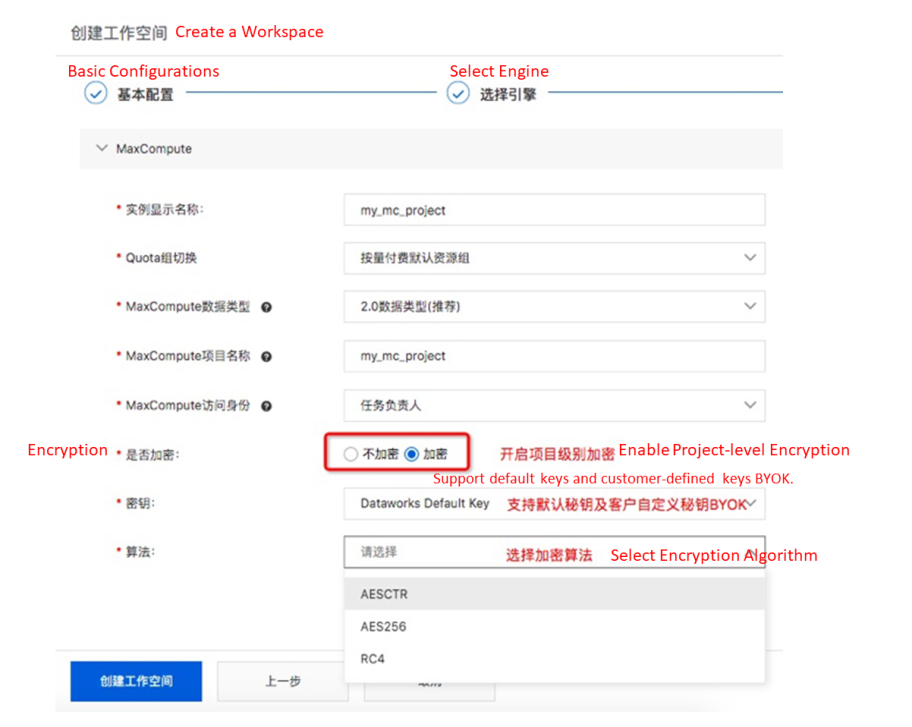

Storage Encryption: MaxCompute supports TDE for transparent encryption and anti-copy. Storage encryption supports MC-managed keys, KMS-based BYOK (user-defined keys), and mainstream encryption algorithms (such as AES256). Apsara Stack supports SM4.

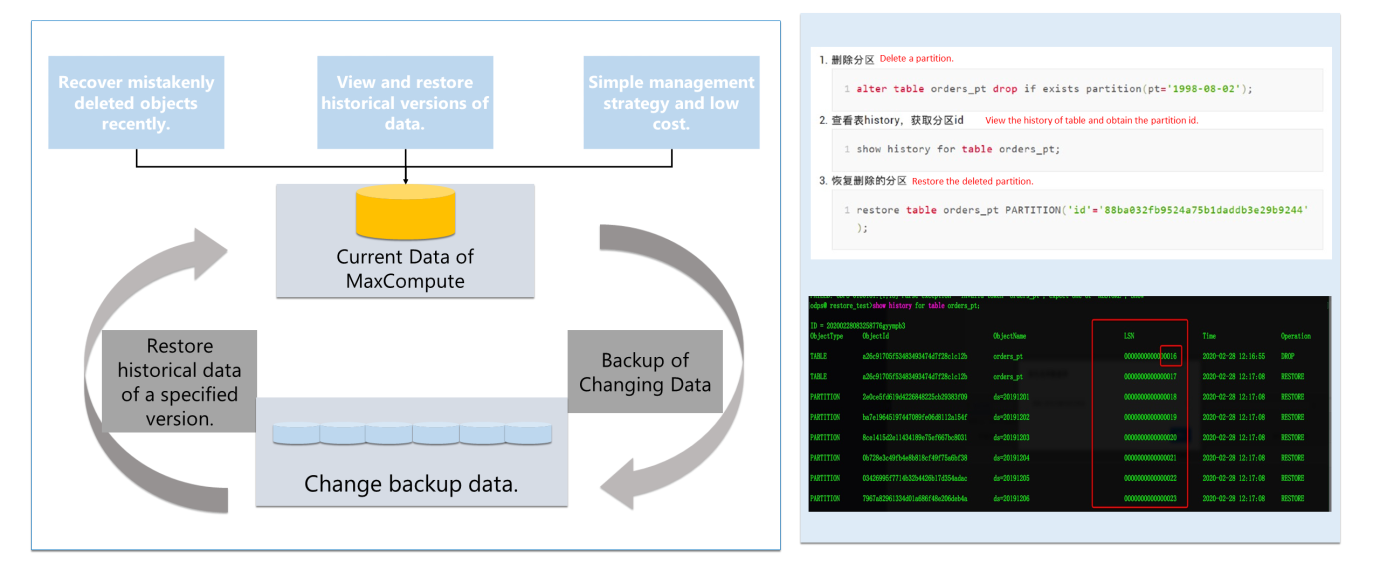

The continuous backup feature of MaxCompute does not require manual backup operations. It automatically records the data change history generated by each DDL or DML operation. You can restore data to a specific historical version when needed. The system automatically backs up historical versions of data (such as data before being deleted or modified) and retains them for a certain period. You can quickly restore the data within the retention period to avoid data loss due to misoperations. This feature does not rely on external storage. The data retention period available for all MaxCompute projects is 24 hours by default, and backup storage within that period is free of charge. When the project administrator sets the backup retention period to more than one day, MaxCompute bills backup data retained beyond one day on a pay-as-you-go basis.
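The idea of restoring to a historical version within a retention period can be sketched with a small version history. This is a conceptual model only. The `VersionedTable` class is hypothetical, it keeps full snapshots for simplicity, and it does not reflect how MaxCompute stores or restores backup data.

```python
from datetime import datetime, timedelta


class VersionedTable:
    """Keeps a snapshot per change so an earlier state can be restored."""

    def __init__(self, retention=timedelta(days=1)):  # 24 hours by default
        self.retention = retention
        self.history = []  # (timestamp, rows) pairs, oldest first

    def write(self, when: datetime, rows):
        # Every change appends a new version instead of overwriting the old one.
        self.history.append((when, list(rows)))

    def restore(self, as_of: datetime, now: datetime):
        """Return the rows as they were at `as_of`, if still within retention."""
        if now - as_of > self.retention:
            raise ValueError("requested point is outside the retention period")
        candidates = [rows for ts, rows in self.history if ts <= as_of]
        if not candidates:
            raise ValueError("no version exists at that time")
        return list(candidates[-1])
```

A longer `retention` value widens the window in which a mistaken delete or overwrite can be undone, at the cost of keeping more historical data.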

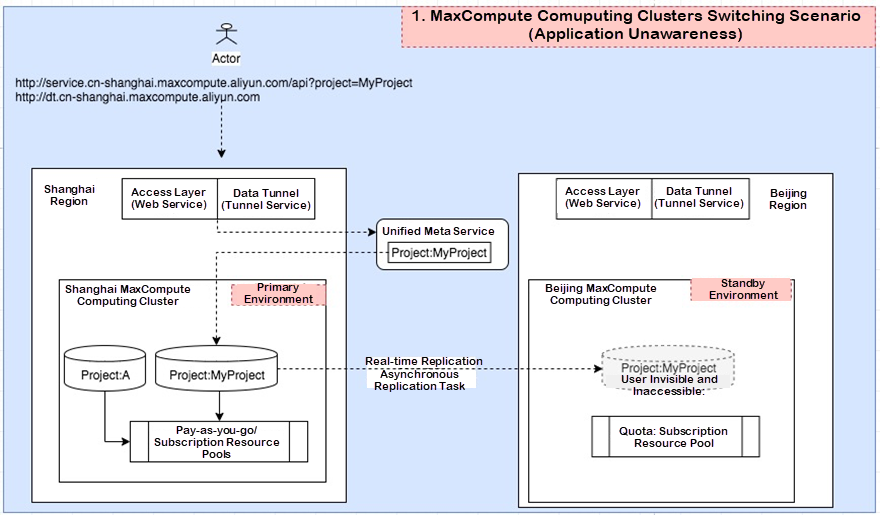

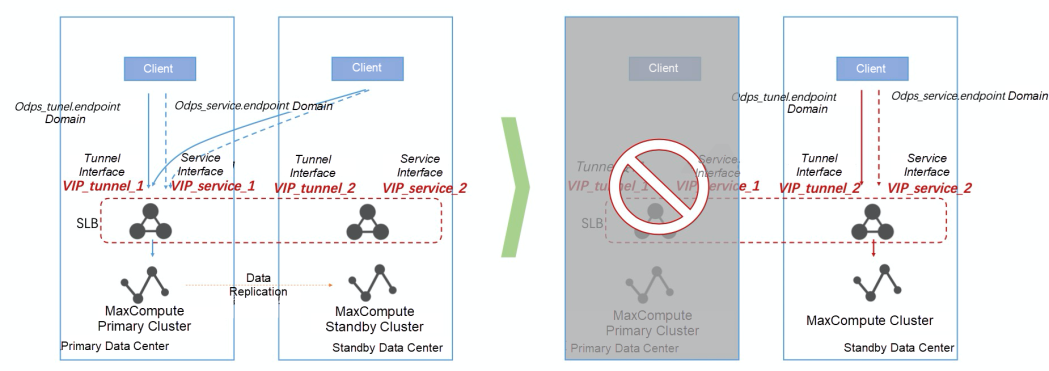

(1) We recommend scaling out the secondary cluster to the primary cluster.

(2) Create a non-disaster recovery single cluster project in addition to the reserved resources of the secondary cluster for disaster recovery. This type of project cannot be changed to disaster recovery in the future.

Finally, look at the data security capabilities brought by the combination of DataWorks and MaxCompute from the perspective of application security.

Data abuse prevention includes security centers and authorization and approval. Data leakage prevention includes data masking.

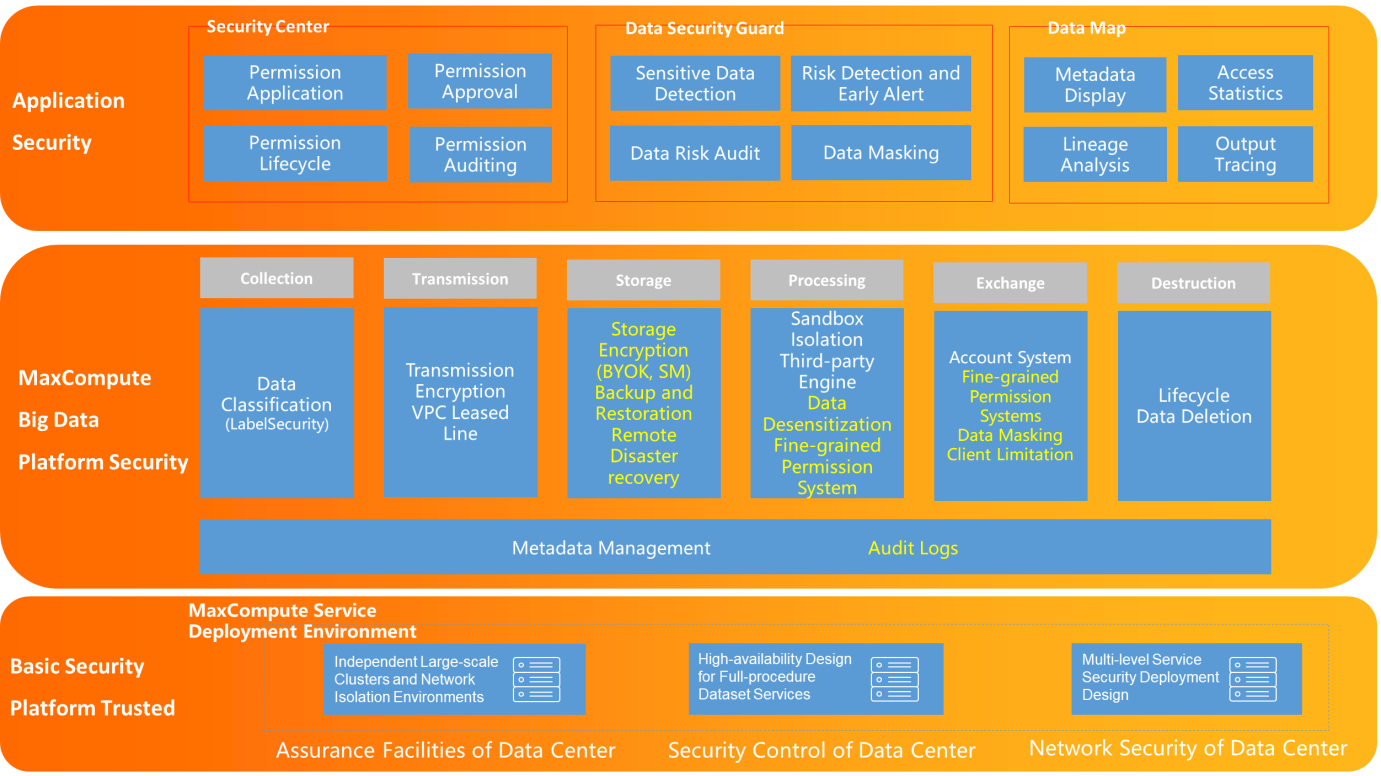

The big data platform security architecture includes basic security platform trust, MaxCompute big data platform security, and application security.

This article focuses on the security capabilities of the MaxCompute big data platform, including metadata management, audit logs, and the collection, transmission, storage, processing, exchange, and destruction of data in MaxCompute applications to ensure user security.

In addition to engine security capabilities, applications are required to implement security capabilities on the business side, including permission applications, sensitive data identification, and access statistics.

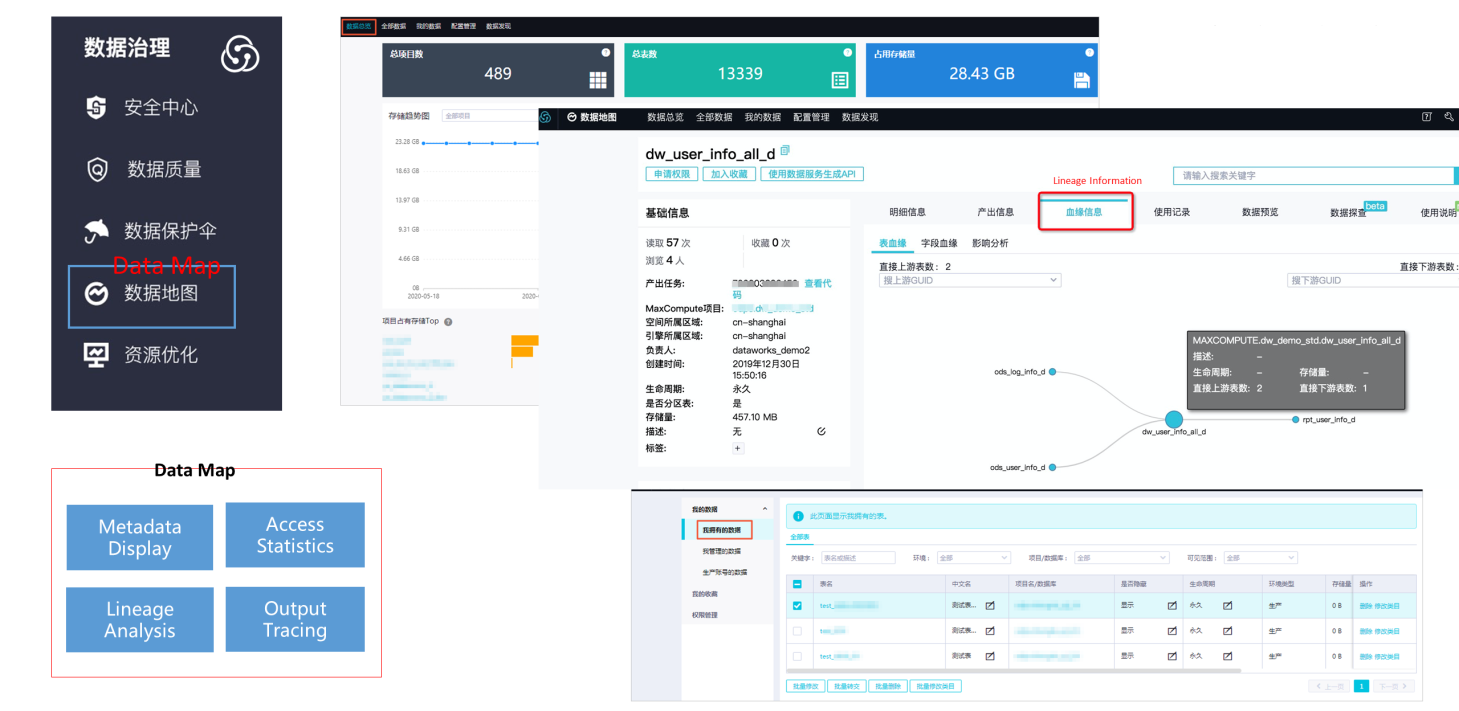

You can use Data Map to manage the metadata and data assets of your business. For example, you can use Data Map to search for data globally, view the details about the metadata, preview data, view data lineage, and manage data categories. Data Map can help you search for, understand, and use data.

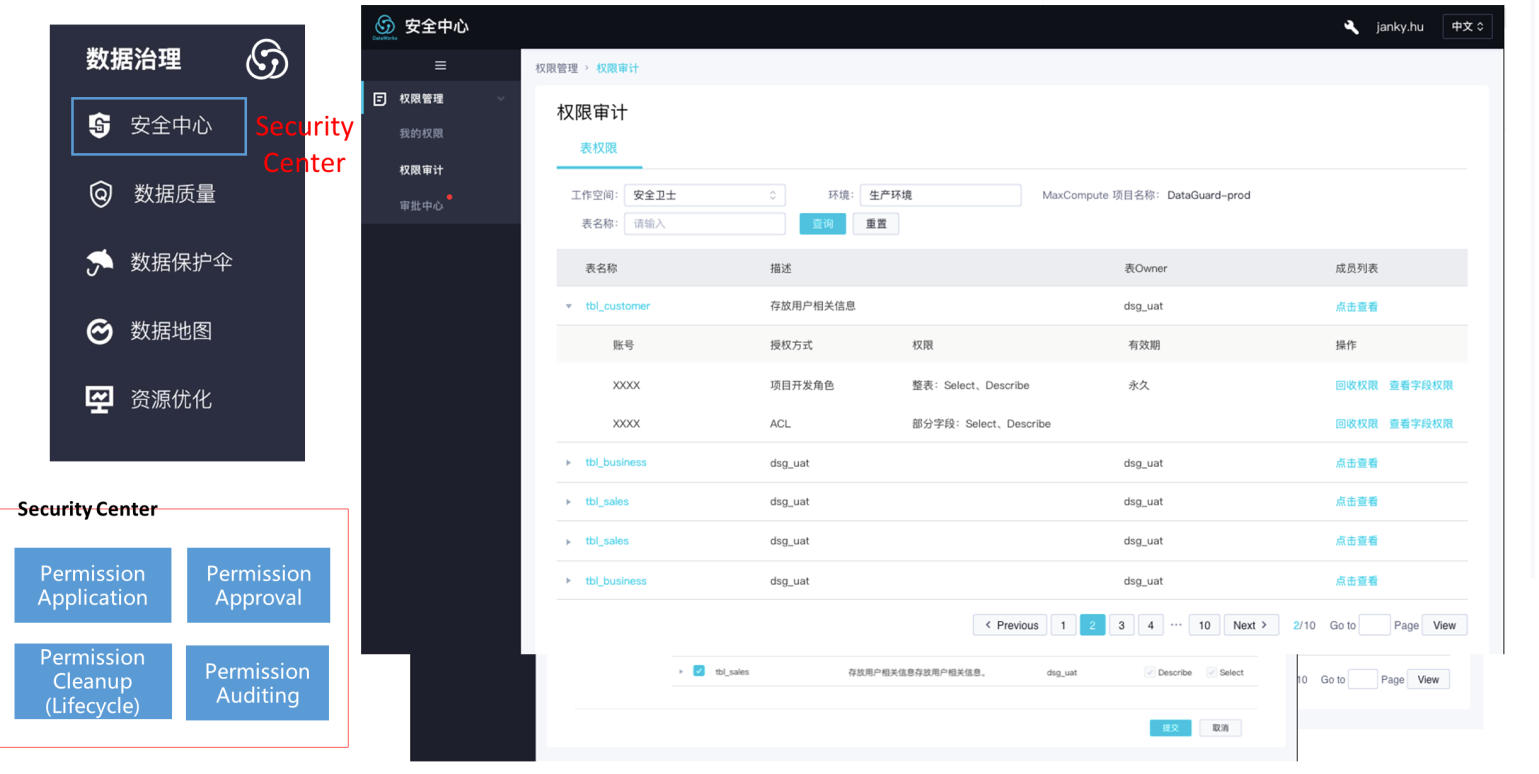

The DataWorks security center can quickly build the data content, personal privacy, and other related security capabilities of the platform to meet various security requirements of enterprises for high-risk scenarios (such as auditing). You can directly use the function without additional configuration.

The Security Center provides refined functions (such as data permission application, permission approval, and permission audit) to realize minimum permission control. At the same time, it is convenient to view the progress of each permission approval process and follow up on the process timely.

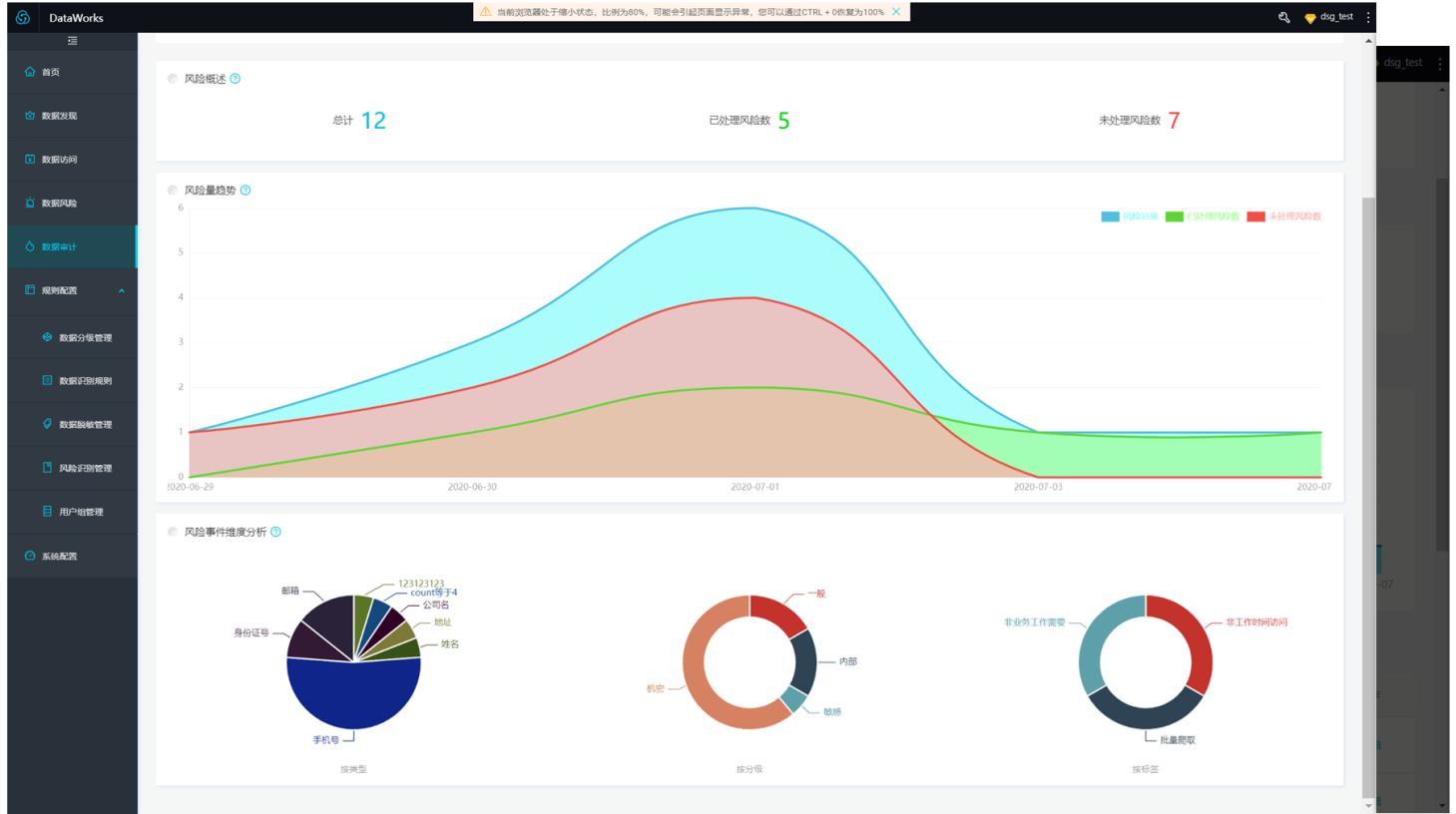

Security Center uses features (such as data classification, sensitive data identification, data access auditing, and data source tracking) to identify data with security risks at the earliest opportunity when it processes a workflow. This ensures the security and reliability of data.

The Security Center provides features (such as platform security diagnosis and data usage diagnosis). It also provides best practices for various security diagnosis scenarios based on security specifications. These features ensure your business is run effectively in a secure environment.

Data Security Guard is a product that ensures data security. It can be used to identify and mask sensitive data, add watermarks to data, manage data permissions, identify and audit data risks, and trace leak sources.

According to the information above, we can answer the previous four questions:

What data are there? → Understand and clean up data timely.

What users are there? → Authentication, tenant isolation, and project space protection.

What permissions are there? → Fine-grained permission management, ACL/Policy/Role.

Where is the data? → Authentication, tenant isolation, and project space protection.

Where can I access data? → Sandbox isolation

Where can the data be downloaded? → Network isolation

Who can use the data? → Security center, authorization, and approval

Who used the data? → Regular audit, metadata, and logs

Is it abused? → Label Security hierarchical management

Is there a risk of leakage? → Data masking

Is there a risk of loss? → Backup and recovery, disaster recovery, and storage/transmission encryption
