In its simplest sense, load balancing refers to distributing a work task (also known as a "load") across multiple operating units so that they run efficiently. These operating units are often FTP servers, web servers, core enterprise application servers, and other main task servers. Load balancing provides a transparent, inexpensive, and effective way to expand the bandwidth of existing servers and network devices without introducing more devices. By building upon the original network structure, it can also strengthen network data processing capabilities, increase throughput, and improve network availability and flexibility.

The rise of a technology depends a lot on its development prospects. Let's look back at the history of load balancing, which should also reflect the development of networking. First, we need to find out where load balancing came from and why it was developed.

In 1996, several students from Washington University founded the F5 Company, and a network load balancing enterprise was born. Why did enterprises such as F5 choose network load balancing as their starting point?

The opportunity for network load balancing lies in the expansion of network applications and the growth of network traffic, so it depends heavily on the development of the network. However, when the Internet bubble burst in 2000, all network load balancing manufacturers faced huge challenges. Major manufacturers shifted their business focus from the Internet to large enterprises, specifically telecommunications, banks, and the federal government. At this time, load balancing focused on Layer 4 network load balancing technology.

Despite the bubble bursting, many companies in the global market were able to recover by adapting to the new economy. Network applications, including online news and online food ordering, grew rapidly. Building on the previous round of infrastructure construction, these companies began to pay attention to value-added services and data services. In addition, the customer economy shaped during the Internet bubble era began to show real economic benefits. Various value-added services (such as online banking and the SMS value-added services of telecom operators) flourished during this period and produced huge economic benefits. Traffic bottlenecks in all kinds of network applications followed shortly.

At that time, typical Internet businesses (such as online games) grew rapidly, which led to network traffic bottlenecks. These bottlenecks could not be solved simply by upgrading traditional routing and switching devices, but load balancers could solve them.

Load balancer manufacturers (including F5 and NetScaler) developed rapidly during that time and provided various solutions to improve the access efficiency of key services (such as network access, synchronous data center access, telecommuting, and application firewalls).

Similarly, in China, online applications, especially e-commerce and live-streaming applications, boomed in the early 2000s. During that time, Chinese domestic video websites began to appear one after another, and streaming media was flooded with visits, which required higher network traffic processing capabilities. The pressure on Layer 4 load balancing switches increased, and conventional load balancing technology could not keep up with such rapid growth in network application traffic. The need for an application-oriented solution became obvious, and the demand for integrating multiple technical means became stronger.

This eventually led to the development of Layer 7 load balancing, also known as "application switching." Layer 7 load balancing is a system solution based completely on network applications, and it associates key applications with basic network devices. By combining Layer 7 load balancing with the traditional Layer 4 solution, workloads can now be managed more efficiently. Furthermore, many modern advanced load balancing solutions offer a comprehensive delivery platform that integrates load balancing, TCP optimization management, link management, SSL VPN, compression optimization, intelligent Network Address Translation, advanced routing, intelligent port mirroring, and other technical capabilities. This innovation has opened up new avenues for businesses to explore, providing them with a reliable and cost-efficient solution to cope with diversified business needs.
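To make the difference between the two layers concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any particular product works: the `Connection` type, the function names, and the pool names are all hypothetical. The Layer 4 function sees only the connection tuple, while the Layer 7 function inspects the HTTP host and path and picks the longest matching path prefix.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """Hypothetical connection tuple, the only input a Layer 4 balancer sees."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str = "tcp"


def pick_layer4(conn, backends):
    """Layer 4: hash the connection tuple to choose a backend server."""
    key = f"{conn.src_ip}:{conn.src_port}->{conn.dst_ip}:{conn.dst_port}/{conn.protocol}"
    digest = hashlib.sha256(key.encode()).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


def pick_layer7(host, path, routes, default_pool):
    """Layer 7: choose a pool by HTTP host and longest matching path prefix.

    `routes` maps (host, path_prefix) to a pool name. Matching is a plain
    string-prefix check, which is a simplification made for this sketch.
    """
    best_prefix, best_pool = None, default_pool
    for (rule_host, prefix), pool in routes.items():
        if rule_host == host and path.startswith(prefix):
            if best_prefix is None or len(prefix) > len(best_prefix):
                best_prefix, best_pool = prefix, pool
    return best_pool
```

With routes for `("shop.example.com", "/")` and `("shop.example.com", "/api")`, a request for `/api/orders` goes to the second pool because its prefix is longer. The Layer 4 function cannot make that distinction, since both requests may arrive on connections to the same IP address and port.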

Cloud-native development is in full swing, and embracing cloud-native has become the consensus of the industry. This trend has also inspired innovation in load balancing technology, such as the development of Alibaba Cloud's Application Load Balancer (ALB) service. ALB is deeply integrated with cloud-native services (such as ACK/ASK) to support cloud-native scenarios. Now, Alibaba Cloud has taken a step further by launching ALB Ingress – a cloud-native Ingress Gateway.

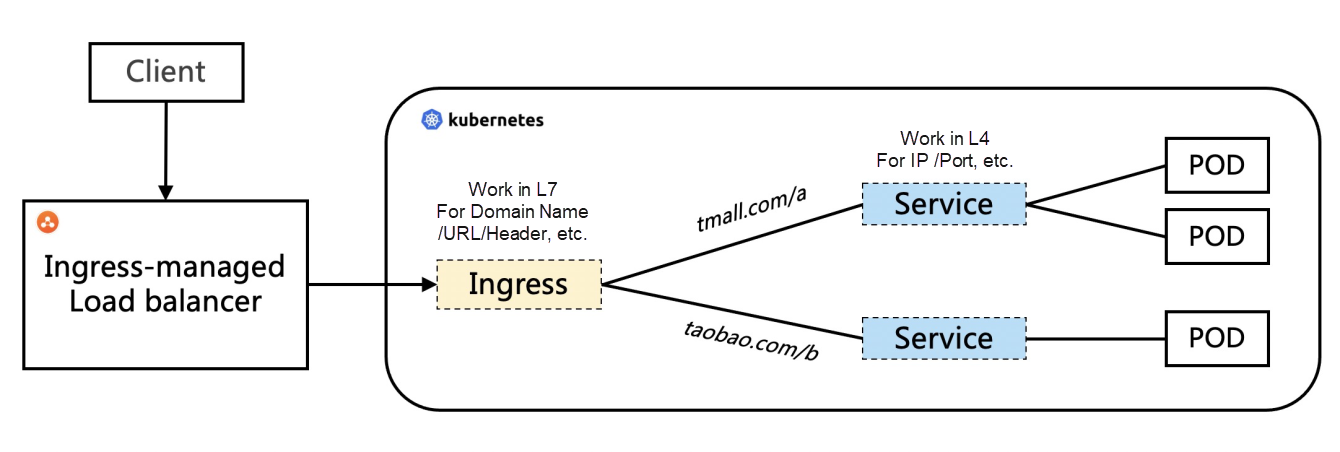

Kubernetes clusters can use Ingress to route traffic from outside the cluster to Services within the cluster, which implements Layer 7 load balancing. Ingress works at Layer 7 and routes requests to Services.

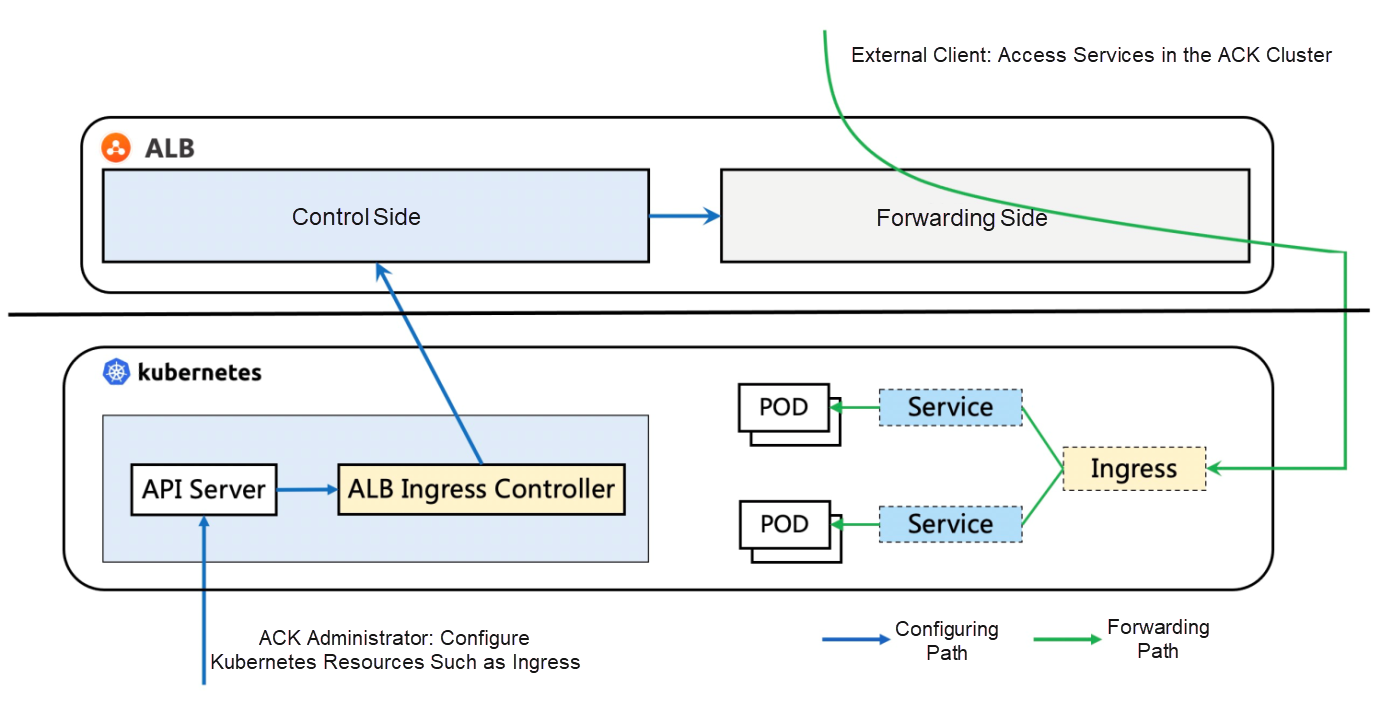

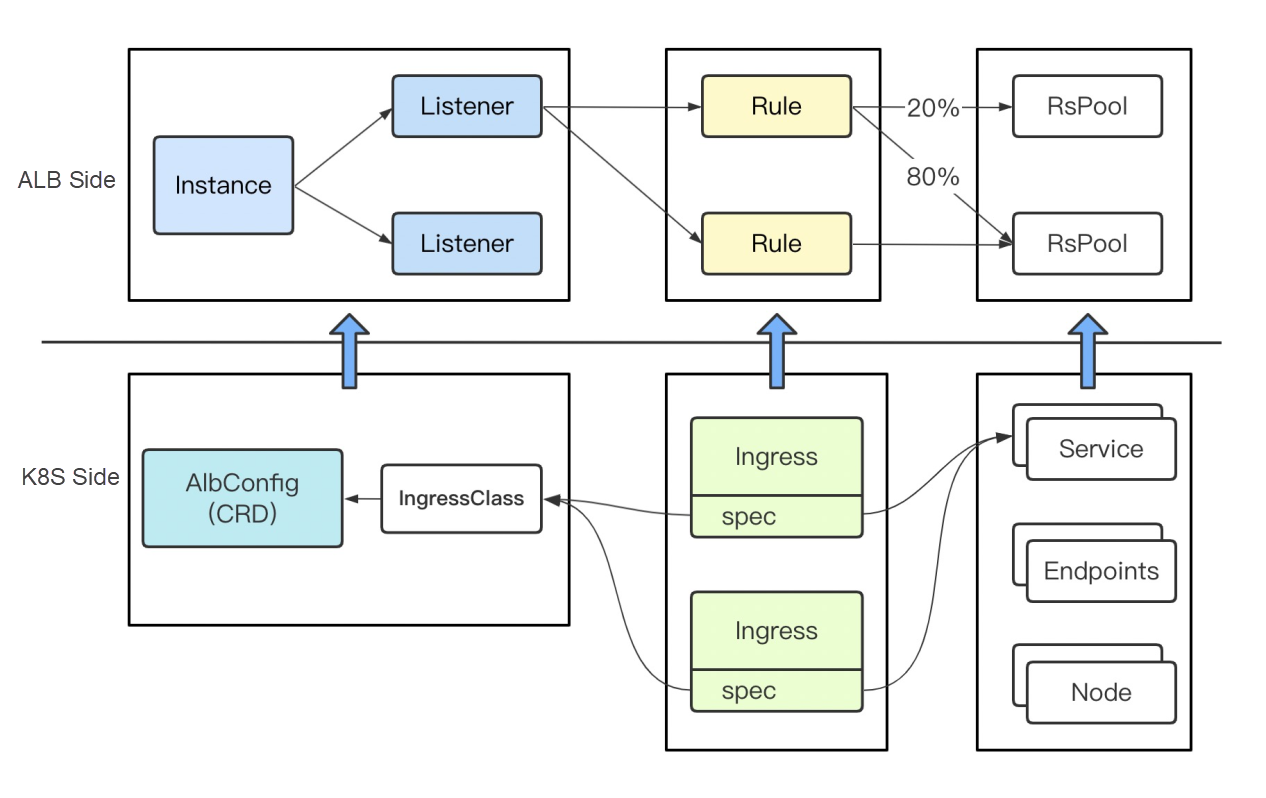

Kubernetes products such as Alibaba Cloud ACK and ASK can use ALB, the cloud-native, application-oriented load balancing product, to route traffic from outside the cluster to Services inside the cluster and implement Layer 7 load balancing. At the same time, the ALB Ingress Controller is deployed in the Kubernetes cluster to monitor changes to resources (such as AlbConfig, Ingress, and Service) in the API server and dynamically convert them into the configurations required by ALB.
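The watch-and-convert behavior described above can be sketched roughly as follows. This is a simplified illustration, not the actual ALB Ingress Controller: the `ForwardingRule` type, the server group naming scheme, and the `reconcile` helper are assumptions made for this example. Only the input shape follows the standard Kubernetes `networking.k8s.io/v1` Ingress layout.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class ForwardingRule:
    """Hypothetical load balancer rule derived from one Ingress path."""
    host: str
    path: str
    server_group: str


def server_group_name(namespace, service, port):
    # Assumed naming scheme, used for illustration only.
    return f"{namespace}-{service}-{port}"


def convert_ingress(ingress):
    """Translate an Ingress object (as a dict) into forwarding rules."""
    namespace = ingress["metadata"].get("namespace", "default")
    rules = []
    for rule in ingress["spec"].get("rules", []):
        host = rule.get("host", "*")
        for item in rule.get("http", {}).get("paths", []):
            service = item["backend"]["service"]
            group = server_group_name(
                namespace, service["name"], service["port"]["number"]
            )
            rules.append(ForwardingRule(host, item.get("path", "/"), group))
    return rules


def reconcile(desired, current):
    """Return (to_add, to_remove) so that `current` converges to `desired`."""
    desired_set, current_set = set(desired), set(current)
    return sorted(desired_set - current_set), sorted(current_set - desired_set)
```

A controller built along these lines would call `convert_ingress` whenever a watched resource changes, then apply only the difference returned by `reconcile`. Applying only the difference is one way to update routing without tearing down rules that have not changed.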

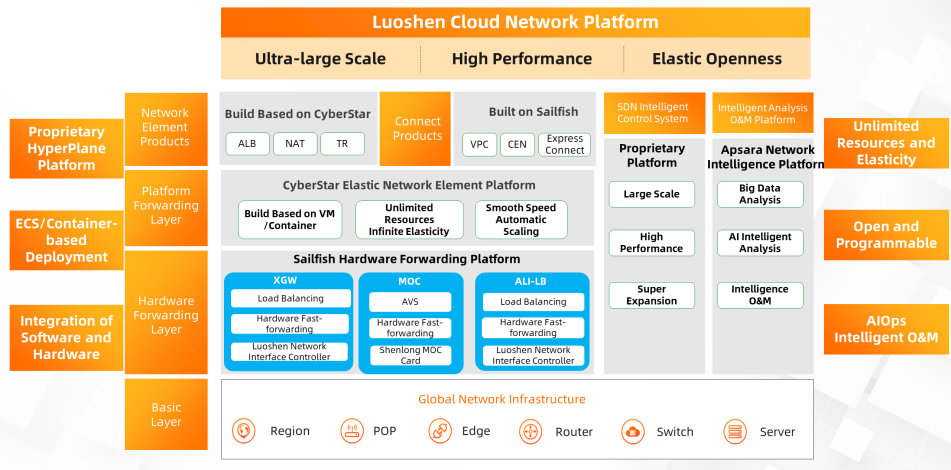

ALB is based on the Luoshen Cloud Network Platform. It is open and programmable, and it features ultra-large scale and ultra-high performance. A single instance supports 1 million QPS through multi-level loading and multi-level scheduling. Software-hardware integration and hardware encryption cards give it very high forwarding performance. Automatic elasticity makes operation and maintenance easier and supports an SLA of up to 99.995%, and the custom forwarding platform provides rich advanced routing features.

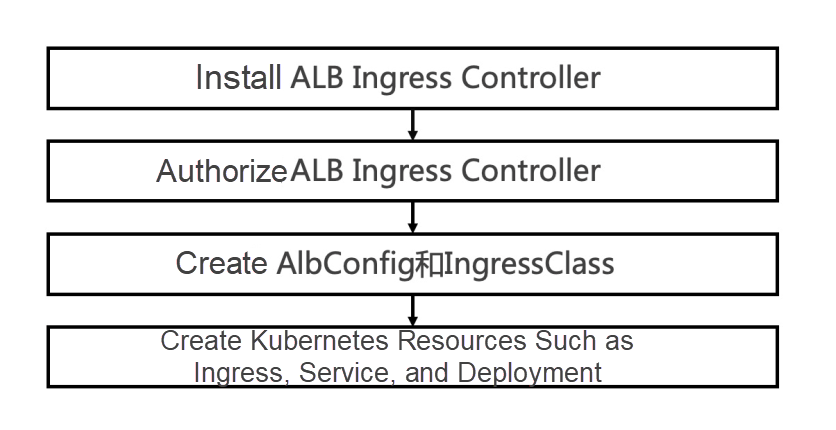

ALB Ingress is deeply integrated with cloud-native services to ensure ease of use while providing various features. You can use the ALB Ingress gateway by performing the following operations in ACK/ASK:

ALB Ingress is compatible with the native features of Kubernetes. On top of that, it provides many advanced features through the AlbConfig CRD and Ingress annotations (see the official documentation for details). You can use the AlbConfig CRD to configure ALB instances and listeners in Kubernetes, and Ingress annotations to configure ALB forwarding rules and server groups. The following is the mapping of resources in ALB and Kubernetes:

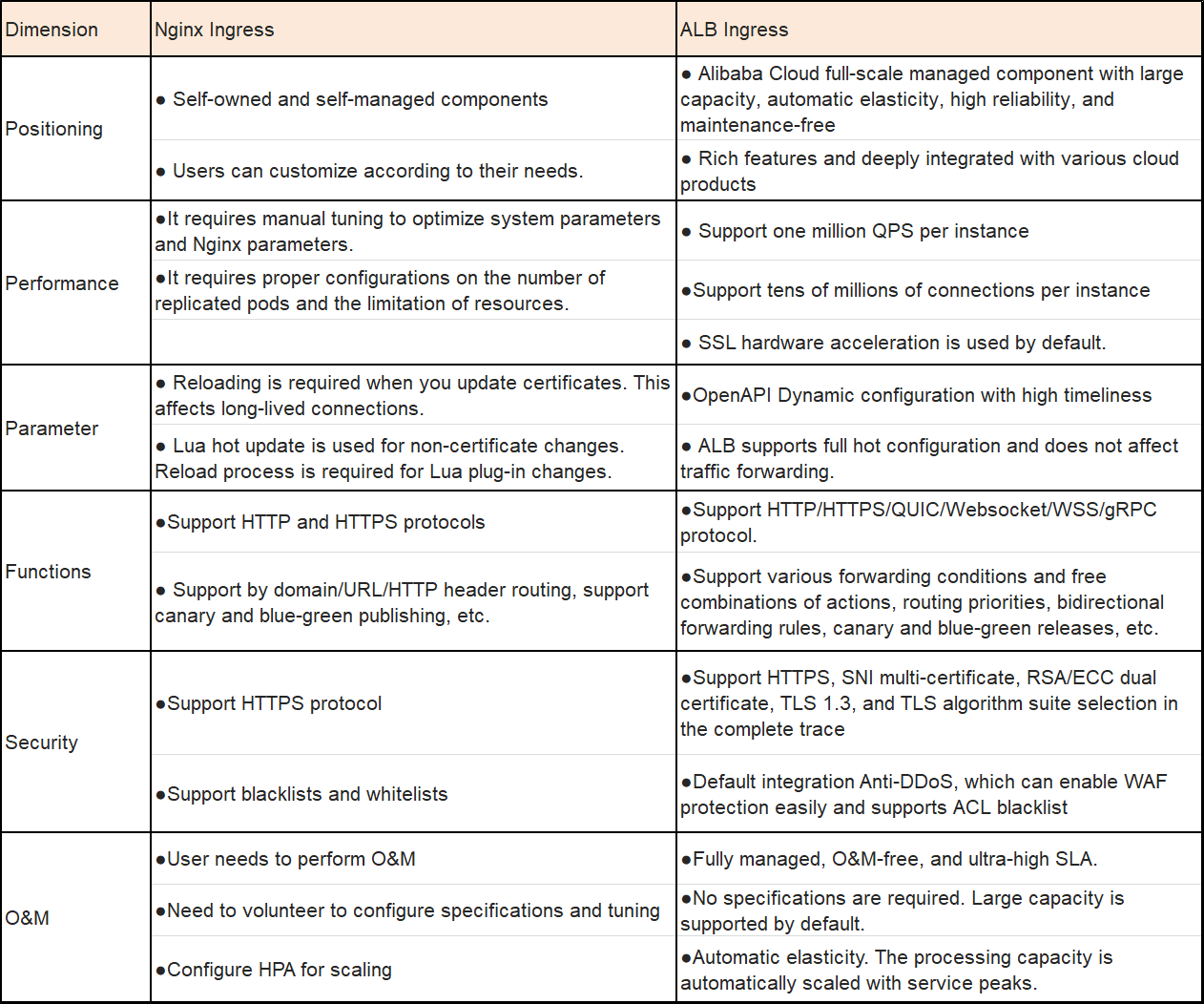

Both Nginx Ingress and ALB Ingress are supported in Kubernetes products such as Alibaba Cloud ACK and ASK. Nginx Ingress must be operated and maintained by users, and it is generally used where users have strong requirements for gateway customization. ALB Ingress is fully managed. It features large capacity, automatic elasticity, and high reliability, requires no O&M, and provides powerful Ingress traffic management capabilities. Let's look at the differences between them from the following dimensions:

ALB Ingress has clear advantages over Nginx Ingress in some scenarios:

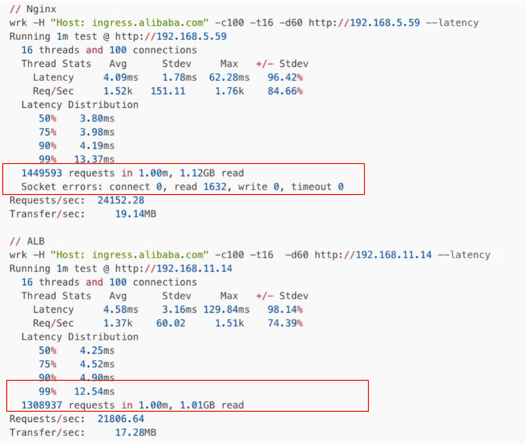

Long Connection Scenarios: Long connections suit businesses with frequent interactions, such as IoT, Internet finance, and online games. When you make a configuration change, Nginx Ingress must reload the Nginx process, which disconnects long connections. This is unacceptable for some applications. The following is a comparison test between Nginx Ingress and ALB Ingress in long connection scenarios. You can see that Nginx has read/write failures, and ALB has no exceptions.

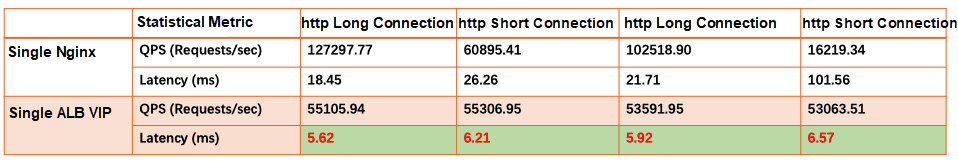

High QPS Scenarios: Internet services often see high QPS, for example, during planned large-scale promotions or unexpected hot events. The following is the test data when a single Nginx instance and a single ALB VIP are pushed to their limits. On the one hand, the processing latency of ALB is lower than that of Nginx. On the other hand, Nginx does not use a hardware accelerator card when handling short-lived HTTPS connections, so single-machine performance is low and more machines are needed under high QPS. Although the QPS of a single VIP in ALB is limited to around 50,000, ALB supports automatic elasticity: when QPS is high, more VIPs are added automatically, and one ALB instance can support millions of QPS.
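As a rough back-of-the-envelope check of those numbers, the sketch below estimates how many VIPs a given QPS target would need. It assumes that capacity scales linearly with the number of VIPs, which the test data above does not state, and the function name and default are illustrative only.

```python
QPS_PER_VIP = 50_000  # approximate per-VIP limit quoted in the text


def vips_needed(target_qps, qps_per_vip=QPS_PER_VIP):
    """Estimate the VIP count for a target QPS, assuming linear scaling."""
    if target_qps < 0 or qps_per_vip <= 0:
        raise ValueError("target_qps must be >= 0 and qps_per_vip must be > 0")
    # Integer ceiling division, with a floor of one VIP per instance.
    return max(1, (target_qps + qps_per_vip - 1) // qps_per_vip)
```

Under this assumption, reaching 1,000,000 QPS takes about 1,000,000 / 50,000 = 20 VIPs, which is consistent with the claim that one instance reaches millions of QPS by adding VIPs automatically.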

High-Concurrency Connection Scenarios: IoT businesses often feature high-concurrency connections due to a large number of terminals. ALB Ingress is based on the Luoshen Cloud Network Platform and supports the convergence of user sessions. A single ALB instance supports tens of millions of connections. Nginx Ingress requires users to handle O&M themselves, and a single device supports a limited number of sessions. Even if network-enhanced virtual machines are used, capacity risks still exist, and a large number of devices need to be maintained.

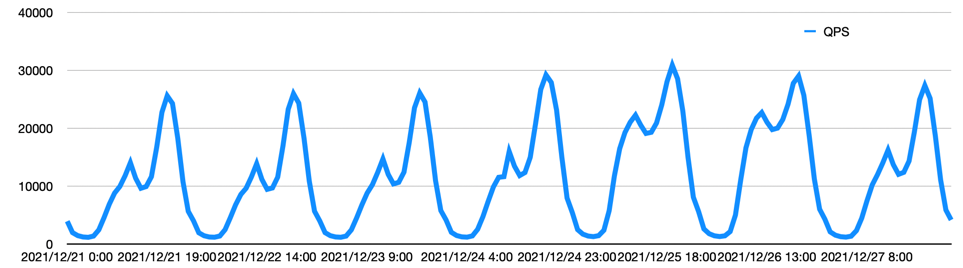

Business Peaks and Valleys: ALB has cost advantages, especially for businesses with peaks and valleys, such as e-commerce and games. Since ALB is billed on a pay-as-you-go basis, fewer LCUs are consumed during troughs. Since automatic elasticity is supported, users do not need to model their business traffic. With Nginx, however, there are often idle costs during troughs, and users need to adjust the number and specifications of machines according to business traffic. In addition, for disaster recovery, Nginx usually needs resource buffers, which incur additional costs.
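The cost argument can be illustrated with a small sketch. It uses abstract capacity units rather than real LCU prices, and the traffic profile is hypothetical. The function names are ours, and the model (pay-as-you-go follows demand hour by hour, fixed provisioning is sized for the peak plus a buffer) is a simplifying assumption, not ALB's billing formula.

```python
def elastic_capacity_hours(hourly_demand):
    """Pay-as-you-go: capacity consumed follows demand hour by hour."""
    return sum(hourly_demand)


def fixed_capacity_hours(hourly_demand, buffer_ratio=0.0):
    """Self-managed: capacity is sized for the peak plus a recovery buffer."""
    peak = max(hourly_demand)
    return peak * (1 + buffer_ratio) * len(hourly_demand)


def idle_fraction(hourly_demand, buffer_ratio=0.0):
    """Share of fixed capacity that is paid for but never used."""
    fixed = fixed_capacity_hours(hourly_demand, buffer_ratio)
    return 1 - elastic_capacity_hours(hourly_demand) / fixed
```

For a hypothetical day with 20 quiet hours at 10 units and 4 peak hours at 100 units, demand totals 600 unit-hours while peak-sized provisioning pays for 2,400, so about 75% of the fixed capacity sits idle. Adding a 25% disaster recovery buffer raises that to about 80%.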

ALB Ingress is a cloud-native Ingress gateway provided by Alibaba Cloud. It applies to the following Kubernetes environments:

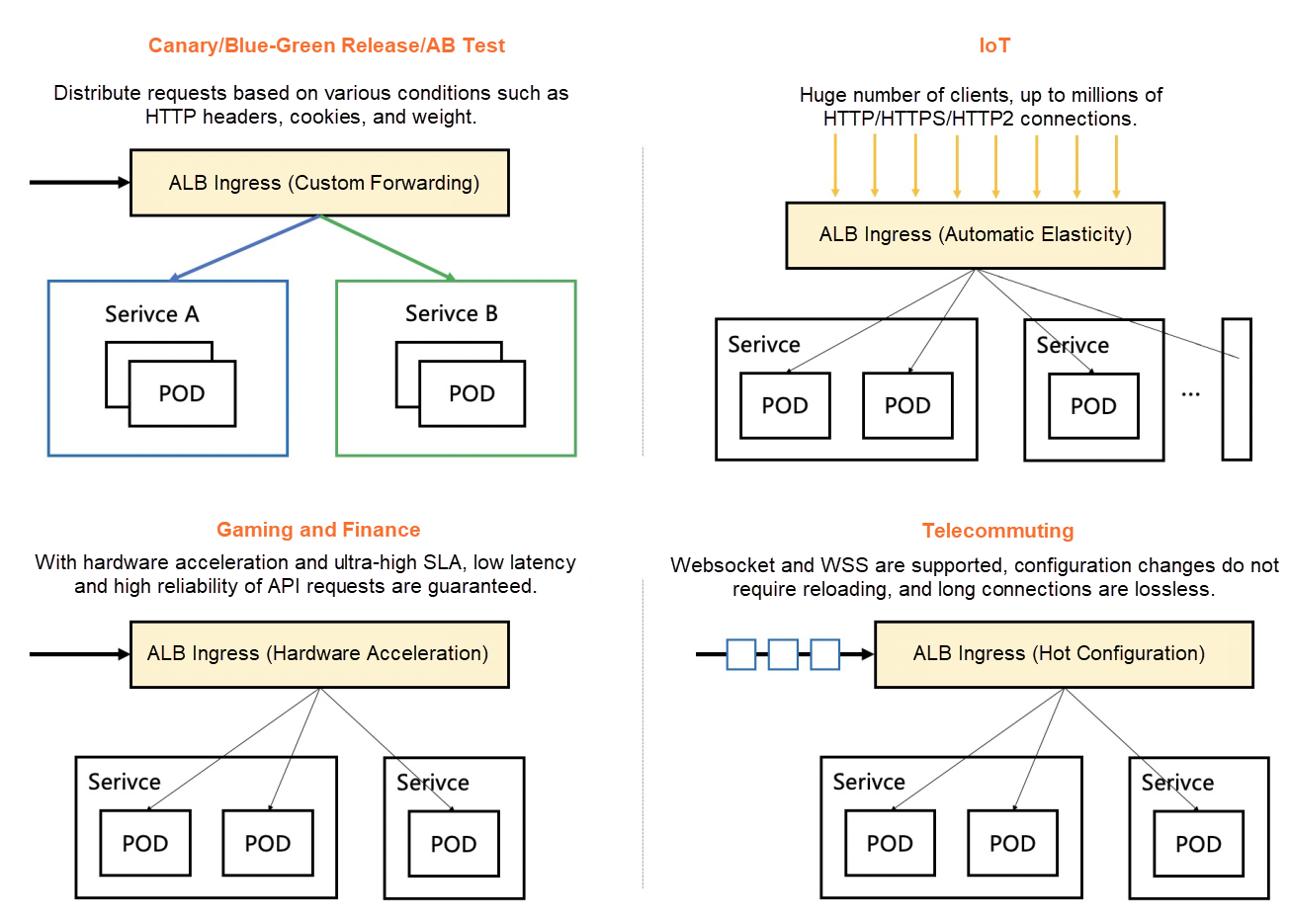

ALB Ingress has attracted customers from multiple industries since its release due to its ultra-large capacity, automatic elasticity, rich features, high reliability, and O&M-free operation. It covers various business scenarios. The following are several common application scenarios:

ALB Ingress is a cloud-native Ingress gateway launched by Alibaba Cloud and based on the Luoshen Cloud Network Platform. It offers large capacity, automatic elasticity, rich features, maintenance-free operation, and high reliability, and it has clear advantages over Nginx Ingress in specific scenarios. It applies to Alibaba Cloud ACK/ASK and self-built Kubernetes environments and covers various business scenarios.
