By Yanxun

Virtual threads are Java threads implemented by the JDK at runtime rather than by the operating system. The primary difference between virtual threads and traditional threads (also known as platform threads) is that a single Java process can easily run a very large number of active virtual threads, even millions. This capacity is what makes them powerful: by letting a server handle more requests concurrently, virtual threads allow server applications written in the thread-per-request model to run more efficiently, achieving higher throughput and wasting less hardware.

Recently, while working on a personal project, I tried developing with JDK21 to explore the principles and implementation of virtual threads. Given my limited technical expertise, I welcome exchanges and discussions on this topic.

Virtual threads were introduced to reduce the effort required for writing, maintaining, and observing high-throughput concurrent applications.

If the response time of an interface provided by the application is constant, its throughput is proportional to the number of requests the application can process at the same time (that is, the number of concurrent requests). Suppose an interface takes 50 milliseconds to respond and the application can handle 10 requests concurrently; the throughput is then 200 requests per second (1 s / 50 ms × 10). If the application's concurrency increases to 100, the throughput can reach 2,000 requests per second. Clearly, increasing the number of threads available to handle requests concurrently significantly improves the throughput of the application. However, platform threads in Java are expensive resources: each one consumes 1 MB of stack memory by default, which limits the number of platform threads that can run within the JVM. The operating system also limits the maximum number of threads it can support, so the number of kernel threads cannot be increased indefinitely. The following figure shows the maximum number of threads supported by the system:

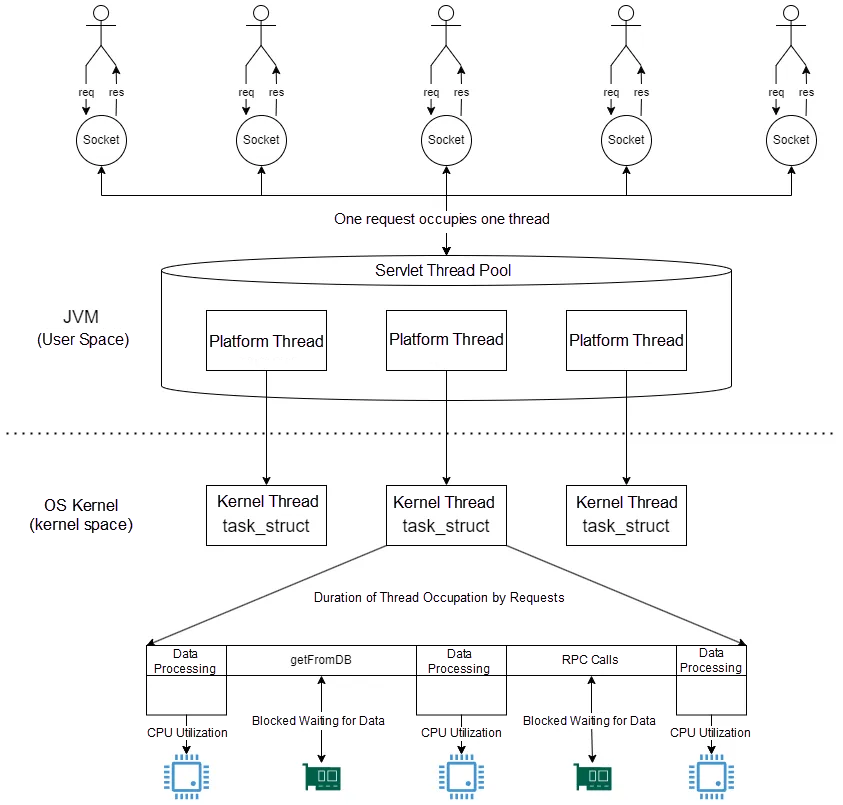
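The throughput estimate given before the figure can be sketched as a small helper. The class and method names below are illustrative and do not come from any library; the sketch assumes, as the text does, that every request takes the same fixed time.

```java
// Illustrative helper (hypothetical name): throughput under a constant response time.
final class ThroughputEstimate {

    // requests per second = concurrent requests * (1000 ms / response time in ms)
    static double requestsPerSecond(int concurrentRequests, double responseTimeMillis) {
        return concurrentRequests * (1000.0 / responseTimeMillis);
    }

    public static void main(String[] args) {
        System.out.println(requestsPerSecond(10, 50));   // prints 200.0
        System.out.println(requestsPerSecond(100, 50));  // prints 2000.0
    }
}
```

With the response time fixed, the only way to raise throughput in this model is to raise the number of requests in flight, which is why the cost of a thread matters so much.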

In most JVM implementations, Java threads map one-to-one with operating system threads (as shown in the figure below). If we use a thread-per-request model (commonly seen in servers like Tomcat and Jetty), meaning creating a thread for each request to process, we will quickly hit the upper limit of operating system threads.

If the requests are I/O-intensive, most threads will spend their time blocked waiting for I/O operations to complete, leading to a situation where thread resources are exhausted while CPU utilization remains low. Therefore, if a platform thread is dedicated to user requests, for applications with high concurrent users, it becomes very easy for the thread pool to be filled up, causing subsequent requests to become blocked.

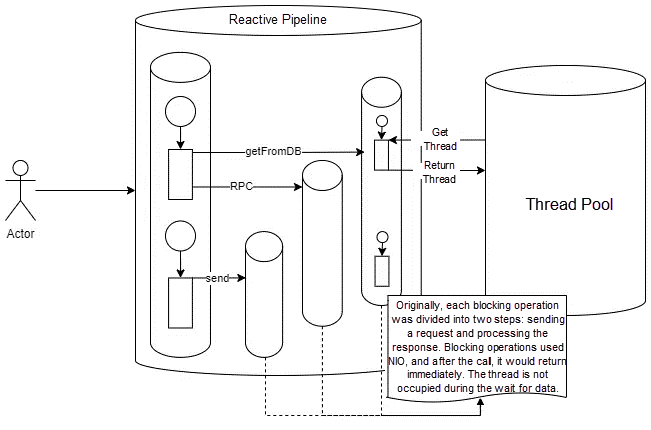

Some developers who want to fully utilize hardware have abandoned the thread-per-request model and adopted reactive programming. This means that the code for processing requests does not run on a single thread from start to finish but instead returns its thread to the pool while waiting for I/O operations to complete, allowing the thread to serve other requests. This fine-grained thread sharing, where code stays on a thread only while it is executing computations, not while waiting for I/O, allows for a large number of concurrent operations without occupying threads for extended periods.

However, while this approach eliminates the limitations on throughput imposed by the scarcity of operating system threads, it significantly increases the cost of understanding and debugging the program. It uses a set of separate I/O methods that do not wait for I/O operations to complete but instead signal completion to a callback later. Developers must break their request processing logic into small stages and then combine them into a sequential pipeline. In reactive programming, each stage of a request may execute on different threads, and each thread runs stages of different requests in an interleaved manner. This is a complex method, where creating reactive channels, debugging, and understanding their execution flow is rather difficult, let alone troubleshooting when exceptions occur.

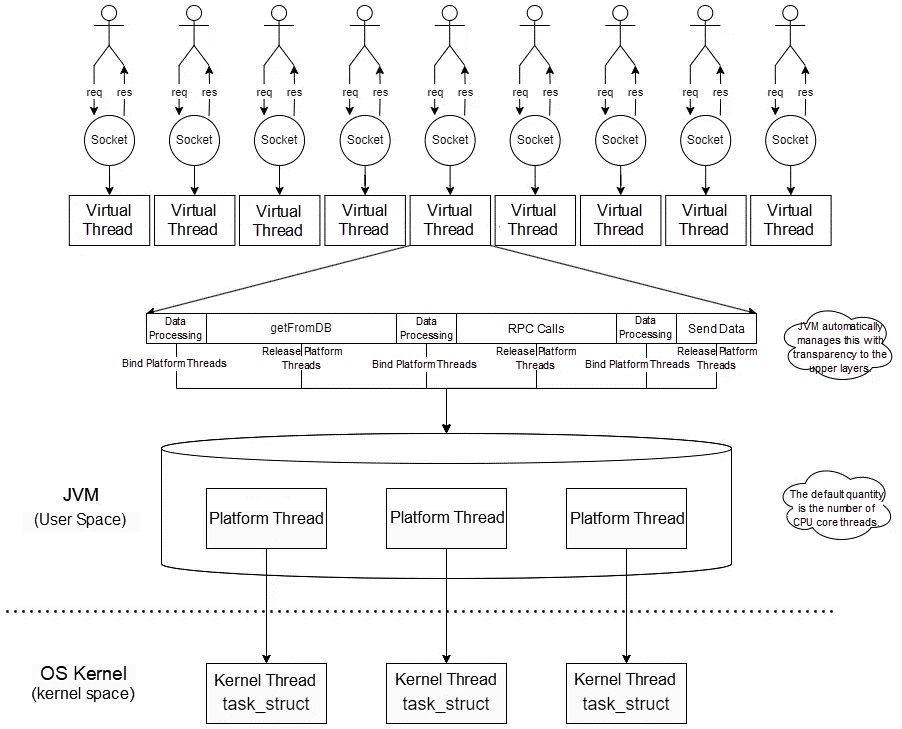

The introduction of virtual threads addresses these issues. The Java runtime implements virtual threads in a way that breaks the one-to-one correspondence between Java threads and operating system threads. Just as the operating system gives the illusion of ample memory by mapping a large virtual address space onto a limited amount of physical RAM, the Java runtime gives the illusion of abundant threads by mapping a large number of virtual threads onto a small number of operating system threads.

Platform threads (java.lang.Thread) are instances implemented in the traditional way. They act as thin wrappers around operating system threads, mapping one-to-one with system threads, whereas virtual threads are instances not bound to specific operating system threads. "Thread-per-request" application code can run in a virtual thread throughout the entire duration of the request, but virtual threads only use operating system threads when performing computations on the CPU. Virtual threads offer the same scalability as asynchronous styles, but their implementation is transparent and does not require additional understanding or development effort. When code running in a virtual thread performs blocking I/O operations, it automatically suspends the virtual thread at runtime until it can be resumed later. For Java developers, virtual threads are just threads that are cheap to create and nearly infinitely abundant. Hardware utilization approaches optimal levels, allowing for high concurrency and thus high throughput, while the application remains in harmony with the multi-threaded design of the Java platform and its tools.

Like platform threads, virtual threads are instances of java.lang.Thread, but are not bound to specific operating system threads. Virtual threads still run code on operating system threads. The difference is that when the code running on a virtual thread calls a blocking I/O operation, Java suspends it at runtime until it can resume. The associated operating system thread is then free to perform actions for other virtual threads.

The implementation of virtual threads is similar to that of virtual memory. To simulate a large amount of memory, the operating system maps a large virtual address space to a limited amount of RAM. Similarly, to simulate a large number of threads, Java maps a large number of virtual threads to a small number of operating system threads at runtime.

Unlike platform threads, virtual threads typically have shallow call stacks, often performing as little as a single HTTP client call or a single JDBC query. Although virtual threads support thread-local variables and inheritable thread-local variables, we should carefully consider their usage since a single JVM might support millions of virtual threads.

Virtual threads are suitable for running tasks that are mostly blocked, such as I/O-intensive operations, rather than long-running CPU-intensive operations. This is because virtual threads are not faster threads; they do not run code any faster than platform threads. They are designed to provide scalability (higher throughput) rather than speed (lower latency).
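As a rough illustration of tasks that are mostly blocked, the sketch below runs each task on its own virtual thread and uses Thread.sleep as a stand-in for blocking I/O. The class name, task count, and sleep time are assumptions chosen for the example; it requires JDK 21 or later.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

// Illustrative sketch (hypothetical name): many mostly-blocked tasks, one virtual thread each.
final class ManyBlockedTasks {

    // Runs taskCount tasks, each on its own virtual thread, and returns how many finished.
    static int runBlockingTasks(int taskCount, Duration blockTime) {
        AtomicInteger completed = new AtomicInteger();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < taskCount; i++) {
                executor.submit(() -> {
                    try {
                        Thread.sleep(blockTime); // stands in for a blocking I/O call
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        return;
                    }
                    completed.incrementAndGet();
                });
            }
        } // close() waits for all submitted tasks to finish
        return completed.get();
    }

    public static void main(String[] args) {
        long start = System.currentTimeMillis();
        int done = runBlockingTasks(10_000, Duration.ofMillis(100));
        System.out.println(done + " tasks finished in " + (System.currentTimeMillis() - start) + " ms");
    }
}
```

Because the tasks spend nearly all their time blocked, the total run time should stay close to the sleep time of a single task rather than growing with the number of tasks. A CPU-bound task body would not show this effect.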

final class VirtualThread extends BaseVirtualThread {
    private static final ForkJoinPool DEFAULT_SCHEDULER = createDefaultScheduler();

    private final Executor scheduler;
    private final Continuation cont;
    private final Runnable runContinuation;
    private volatile Thread carrierThread;

    VirtualThread(Executor scheduler, String name, int characteristics, Runnable task) {
        super(name, characteristics, /*bound*/ false);
        Objects.requireNonNull(task);

        // choose scheduler if not specified
        if (scheduler == null) {
            Thread parent = Thread.currentThread();
            if (parent instanceof VirtualThread vparent) {
                scheduler = vparent.scheduler;
            } else {
                scheduler = DEFAULT_SCHEDULER;
            }
        }

        this.scheduler = scheduler;
        this.cont = new VThreadContinuation(this, task);
        this.runContinuation = this::runContinuation;
    }

    private static ForkJoinPool createDefaultScheduler() {
        ForkJoinWorkerThreadFactory factory = pool -> {
            PrivilegedAction<ForkJoinWorkerThread> pa = () -> new CarrierThread(pool);
            return AccessController.doPrivileged(pa);
        };
        PrivilegedAction<ForkJoinPool> pa = () -> {
            int parallelism, maxPoolSize, minRunnable;
            String parallelismValue = System.getProperty("jdk.virtualThreadScheduler.parallelism");
            String maxPoolSizeValue = System.getProperty("jdk.virtualThreadScheduler.maxPoolSize");
            String minRunnableValue = System.getProperty("jdk.virtualThreadScheduler.minRunnable");
            if (parallelismValue != null) {
                parallelism = Integer.parseInt(parallelismValue);
            } else {
                parallelism = Runtime.getRuntime().availableProcessors();
            }
            if (maxPoolSizeValue != null) {
                maxPoolSize = Integer.parseInt(maxPoolSizeValue);
                parallelism = Integer.min(parallelism, maxPoolSize);
            } else {
                maxPoolSize = Integer.max(parallelism, 256);
            }
            if (minRunnableValue != null) {
                minRunnable = Integer.parseInt(minRunnableValue);
            } else {
                minRunnable = Integer.max(parallelism / 2, 1);
            }
            Thread.UncaughtExceptionHandler handler = (t, e) -> { };
            boolean asyncMode = true; // FIFO
            return new ForkJoinPool(parallelism, factory, handler, asyncMode,
                    0, maxPoolSize, minRunnable, pool -> true, 30, SECONDS);
        };
        return AccessController.doPrivileged(pa);
    }

    private void runContinuation() {
        // the carrier must be a platform thread
        if (Thread.currentThread().isVirtual()) {
            throw new WrongThreadException();
        }

        // set state to RUNNING
        int initialState = state();
        if (initialState == STARTED || initialState == UNPARKED || initialState == YIELDED) {
            // newly started or continue after parking/blocking/Thread.yield
            if (!compareAndSetState(initialState, RUNNING)) {
                return;
            }
            // consume parking permit when continuing after parking
            if (initialState == UNPARKED) {
                setParkPermit(false);
            }
        } else {
            // not runnable
            return;
        }

        mount();
        try {
            cont.run();
        } finally {
            unmount();
            if (cont.isDone()) {
                afterDone();
            } else {
                afterYield();
            }
        }
    }
}

There are several core objects involved in virtual threads:

• Continuation: A wrapper for the actual user task. A virtual thread wraps its task in a Continuation instance. When the task needs to block, the virtual thread calls the yield operation of the Continuation instance to suspend it.

• Scheduler: Submits tasks to a pool of platform threads for execution. Virtual threads share a default scheduler called DEFAULT_SCHEDULER, which is an instance of ForkJoinPool. Its parallelism defaults to the number of available processors and can be configured using jdk.virtualThreadScheduler.parallelism. Its maximum pool size defaults to the larger of the parallelism and 256 and can be configured using jdk.virtualThreadScheduler.maxPoolSize.

• carrier: The carrier thread (a Thread object) is the platform thread responsible for executing the task of the virtual thread.

• runContinuation: A Runnable that the scheduler executes on a carrier thread. It mounts the virtual thread on the current carrier before the task runs or resumes, and unmounts it when the task yields or completes.

A deeper analysis of the source code for the specific workflow of virtual threads may be covered in a future discussion.
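To make the scheduler's sizing rules concrete, the following sketch reproduces only the arithmetic of createDefaultScheduler() from the excerpt above. SchedulerDefaults and Sizes are hypothetical names introduced for this illustration; the sketch does not create a scheduler and is not part of the JDK.

```java
// Illustrative sketch (hypothetical names): the default sizing logic of the virtual thread scheduler.
final class SchedulerDefaults {

    record Sizes(int parallelism, int maxPoolSize, int minRunnable) {}

    // Each String argument is the raw value of the corresponding
    // jdk.virtualThreadScheduler.* system property, or null when it is not set.
    static Sizes compute(String parallelismValue, String maxPoolSizeValue,
                         String minRunnableValue, int availableProcessors) {
        int parallelism = (parallelismValue != null)
                ? Integer.parseInt(parallelismValue)
                : availableProcessors;

        int maxPoolSize;
        if (maxPoolSizeValue != null) {
            maxPoolSize = Integer.parseInt(maxPoolSizeValue);
            parallelism = Math.min(parallelism, maxPoolSize); // parallelism never exceeds the maximum
        } else {
            maxPoolSize = Math.max(parallelism, 256);
        }

        int minRunnable = (minRunnableValue != null)
                ? Integer.parseInt(minRunnableValue)
                : Math.max(parallelism / 2, 1);

        return new Sizes(parallelism, maxPoolSize, minRunnable);
    }

    public static void main(String[] args) {
        int cpus = Runtime.getRuntime().availableProcessors();
        System.out.println(compute(
                System.getProperty("jdk.virtualThreadScheduler.parallelism"),
                System.getProperty("jdk.virtualThreadScheduler.maxPoolSize"),
                System.getProperty("jdk.virtualThreadScheduler.minRunnable"),
                cpus));
    }
}
```

On a machine with 20 logical processors and no properties set, this yields a parallelism of 20, a maximum pool size of 256, and a minRunnable of 10.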

// name(String prefix, long start): prefix is the thread name prefix, start is the initial counter value
Thread.Builder.OfVirtual virtualThreadBuilder = Thread.ofVirtual().name("worker-", 0);
Thread worker0 = virtualThreadBuilder.start(this::doSomethings);
worker0.join();
System.out.print("finish worker-0 running");
Thread worker1 = virtualThreadBuilder.start(this::doSomethings);
worker1.join();
System.out.print("finish worker-1 running");

Calling the Thread.ofVirtual() method creates a Thread.Builder instance that is used to create virtual threads.
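A self-contained variant of the builder snippet is sketched below. It records each worker's name and whether it is virtual, to show how the name counter advances. BuilderNamingDemo is a hypothetical class name, and the task body is a placeholder for real work.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Illustrative sketch (hypothetical name): naming virtual threads with Thread.Builder.
final class BuilderNamingDemo {

    // Starts two virtual threads from one builder and returns "<name>:<isVirtual>" for each.
    static List<String> startTwoWorkers() {
        List<String> seen = new CopyOnWriteArrayList<>();
        Runnable task = () -> {
            Thread current = Thread.currentThread();
            seen.add(current.getName() + ":" + current.isVirtual());
        };
        // The counter starts at 0 and increases by one for every thread the builder creates.
        Thread.Builder.OfVirtual builder = Thread.ofVirtual().name("worker-", 0);
        try {
            Thread first = builder.start(task);
            first.join();
            Thread second = builder.start(task);
            second.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(startTwoWorkers()); // [worker-0:true, worker-1:true]
    }
}
```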

try (ExecutorService executorService = Executors.newVirtualThreadPerTaskExecutor()) {
    Future<?> submit = executorService.submit(this::doSomethings);
    submit.get();
    System.out.print("finish running");
}

Since virtual threads are inexpensive and plentiful, they should never be pooled; instead, a new virtual thread should be created for each application task. Executors.newVirtualThreadPerTaskExecutor() returns an executor that places no limit on the number of threads. It is not a typical thread pool designed for reusing threads; it creates a new virtual thread for each submitted task.

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

public class Server {
    public static void main(String[] args) {
        // Thread-safe set: many virtual threads add to it concurrently
        Set<String> platformSet = ConcurrentHashMap.newKeySet();

        // After 10 seconds, print how many distinct platform (carrier) threads were seen
        new Thread(() -> {
            try {
                Thread.sleep(10000);
                System.out.println(platformSet.size());
            } catch (InterruptedException e) {
                throw new RuntimeException(e);
            }
        }).start();

        try (ServerSocket serverSocket = new ServerSocket(9999)) {
            Thread.Builder.OfVirtual clientThreadBuilder = Thread.ofVirtual().name("client", 1);
            while (true) {
                Socket clientSocket = serverSocket.accept();
                // One virtual thread per connection
                clientThreadBuilder.start(() -> {
                    // toString() of a running virtual thread ends with "@<carrier thread name>"
                    String platformName = Thread.currentThread().toString().split("@")[1];
                    platformSet.add(platformName);
                    try (
                        BufferedReader in = new BufferedReader(new InputStreamReader(clientSocket.getInputStream()));
                        PrintWriter out = new PrintWriter(clientSocket.getOutputStream(), true);
                    ) {
                        String inputLine;
                        // Echo every received line back to the client
                        while ((inputLine = in.readLine()) != null) {
                            System.out.println(inputLine + "(from:" + Thread.currentThread() + ")");
                            out.println(inputLine);
                        }
                    } catch (IOException e) {
                        System.err.println(e.getMessage());
                    }
                });
            }
        } catch (IOException e) {
            System.err.println("Exception caught when trying to listen on port 9999");
            System.err.println(e.getMessage());
        }
    }
}

The server listens for client connections and creates a virtual thread for each incoming connection. While it runs, each virtual thread adds the name of its underlying platform thread to the Set. A separate thread sleeps for 10 seconds and then prints the size of the Set, which shows how many platform threads are actually being used by these virtual threads.

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.net.Socket;
import java.net.UnknownHostException;

public class Client {
    public static void main(String[] args) throws InterruptedException {
        Thread.Builder.OfVirtual builder = Thread.ofVirtual().name("client", 1);
        // Open 100,000 connections, each from its own virtual thread
        for (int i = 0; i < 100000; i++) {
            builder.start(() -> {
                try (
                    Socket serverSocket = new Socket("localhost", 9999);
                    BufferedReader in = new BufferedReader(new InputStreamReader(serverSocket.getInputStream()));
                    PrintWriter out = new PrintWriter(serverSocket.getOutputStream(), true);
                ) {
                    out.println("hello");
                    String inputLine;
                    // Print everything the server echoes back
                    while ((inputLine = in.readLine()) != null) {
                        System.out.println(inputLine);
                    }
                } catch (UnknownHostException e) {
                    System.err.println("Don't know about localhost");
                } catch (IOException e) {
                    System.err.println("Couldn't get I/O for the connection to localhost");
                }
            });
        }
        // Keep the main thread alive so the program does not end immediately
        Thread.sleep(1000000000);
    }
}

The client creates 100,000 connections to the server and sends a message on each. The main thread sleeps for an extended period to prevent the program from ending immediately.

The server ultimately uses 19 platform threads (related to the number of CPU cores) to handle the 100,000 client connections.
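The same observation can be reproduced without sockets. The sketch below is a hypothetical variant of the experiment: it starts many virtual threads that block briefly and collects the carrier names they were seen on. Like the server above, it parses Thread.toString(), whose format is an implementation detail rather than a documented API, so treat the result as indicative only.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Illustrative sketch (hypothetical name): counting the carriers behind many virtual threads.
final class CarrierCountDemo {

    // Starts taskCount virtual threads that each block briefly and returns the
    // names of the distinct carrier threads they were observed on.
    static Set<String> observeCarriers(int taskCount) {
        Set<String> carriers = ConcurrentHashMap.newKeySet();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < taskCount; i++) {
                executor.submit(() -> {
                    recordCarrier(carriers);
                    try {
                        Thread.sleep(50); // blocking lets the virtual thread leave its carrier
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        return;
                    }
                    recordCarrier(carriers); // may now be running on a different carrier
                });
            }
        }
        return carriers;
    }

    // Relies on the "...@<carrier name>" suffix of Thread.toString() for a running
    // virtual thread; this suffix is not guaranteed by the API.
    private static void recordCarrier(Set<String> carriers) {
        String description = Thread.currentThread().toString();
        int at = description.lastIndexOf('@');
        if (at >= 0) {
            carriers.add(description.substring(at + 1));
        }
    }

    public static void main(String[] args) {
        Set<String> carriers = observeCarriers(10_000);
        System.out.println("virtual threads: 10000, carrier threads: " + carriers.size());
    }
}
```

The carrier count depends on the machine and on the scheduler settings described earlier, so no fixed number is expected here.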

When a platform thread is running, it is scheduled by the operating system. In contrast, when a virtual thread is running, it is scheduled by Java at runtime. When Java schedules a virtual thread at runtime, it attaches this virtual thread to a platform thread, after which the operating system kernel handles the scheduling. The platform thread to which the virtual thread is attached is referred to as the carrier. When a virtual thread is blocked, it is detached from the carrier, leaving the carrier idle. Java can then schedule another virtual thread to attach to the carrier at runtime. This process is invisible to the kernel threads, avoiding the overhead of switching between kernel and user modes that occurs when platform threads encounter blocks, and making full use of CPU computing performance to increase the throughput of applications.

When a virtual thread is pinned to a carrier, it remains attached even when blocked. Virtual threads get pinned in the following scenarios:

• The virtual thread runs code inside a synchronized block or method.

• The virtual thread runs a native method or a foreign function.

Pinning will not cause the application to malfunction but may hinder its scalability. To avoid frequent and prolonged pinning, you can try revising synchronized blocks and methods that are executed frequently, and protecting potentially long-running I/O operations with java.util.concurrent.locks.ReentrantLock instead of synchronized.

Since virtual threads are implementations of java.lang.Thread and follow the same rules specified for java.lang.Thread since Java SE 1.0, developers do not need to learn new concepts to use them. However, the inability to generate a very large number of platform threads (the only available thread implementation in Java for many years) gave rise to practices designed to cope with their high cost. These practices can be counterproductive when applied to virtual threads and must be abandoned.

Virtual threads can significantly improve the throughput (not latency) of servers written in a thread-per-request style. In this style, the server dedicates a thread to handle each incoming request for its entire duration.

Blocking a platform thread is costly because it occupies a system thread (a relatively scarce resource) without doing much useful work. In the past, we might have used asynchronous and non-blocking approaches to implement certain features. However, since there can be many virtual threads, the cost of blocking them is low. Therefore, we should write code in a straightforward synchronous style and use blocking I/O APIs.

For example, the following code, written in a non-blocking and asynchronous style, would not gain much benefit from virtual threads:

CompletableFuture.supplyAsync(info::getUrl, pool)
    .thenCompose(url -> getBodyAsync(url, HttpResponse.BodyHandlers.ofString()))
    .thenApply(info::findImage)
    .thenCompose(url -> getBodyAsync(url, HttpResponse.BodyHandlers.ofByteArray()))
    .thenApply(info::setImageData)
    .thenAccept(this::process)
    .exceptionally(t -> { t.printStackTrace(); return null; });

Code written in a synchronous style using simple blocking I/O will benefit greatly:

try {
    String page = getBody(info.getUrl(), HttpResponse.BodyHandlers.ofString());
    String imageUrl = info.findImage(page);
    byte[] data = getBody(imageUrl, HttpResponse.BodyHandlers.ofByteArray());
    info.setImageData(data);
    process(info);
} catch (Exception ex) {
    ex.printStackTrace();
}

Such code is also easier to debug and profile, or observe with thread dumps. The more of the stack that is written in this style, the better virtual threads will be for both performance and observability. Programs or frameworks written in other styles that do not dedicate a thread to each task might not see much benefit from virtual threads. Avoid mixing synchronous, blocking code with asynchronous frameworks.

Although virtual threads exhibit the same behavior as platform threads, they should not represent the same programmatic concept. Platform threads are scarce and therefore a valuable resource. Valuable resources need to be managed, and the most common method of managing platform threads is through thread pools. The next question that arises is, how many threads should the pool contain?

However, virtual threads are plentiful, so each thread should not represent a shared and pooled resource but rather a task. Threads transition from managed resources to application domain objects. The question of how many virtual threads we should have becomes clear, much like deciding how many strings to use for storing a set of usernames in memory: the number of virtual threads always equals the number of concurrent tasks in the application.

To represent each application task as a thread, do not use a shared thread pool executor as shown in the following example:

Future<ResultA> f1 = sharedThreadPoolExecutor.submit(task1);
Future<ResultB> f2 = sharedThreadPoolExecutor.submit(task2);
// ... use futures

Instead, use the following approach:

try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
    Future<ResultA> f1 = executor.submit(task1);
    Future<ResultB> f2 = executor.submit(task2);
    // ... use futures
}

The code still uses the ExecutorService, but the instance returned by Executors.newVirtualThreadPerTaskExecutor() does not reuse virtual threads. Instead, it creates a new virtual thread for each submitted task.

void handle(Request request, Response response) {
    var url1 = ...
    var url2 = ...

    try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
        var future1 = executor.submit(() -> fetchURL(url1));
        var future2 = executor.submit(() -> fetchURL(url2));
        response.send(future1.get() + future2.get());
    } catch (ExecutionException | InterruptedException e) {
        response.fail(e);
    }
}

String fetchURL(URL url) throws IOException {
    try (var in = url.openStream()) {
        return new String(in.readAllBytes(), StandardCharsets.UTF_8);
    }
}

Additionally, the ExecutorService itself is lightweight, and you can create a new one just as you would any simple object. There is no need to keep the object around and use the same instance repeatedly; instead, create a new one whenever needed.

You should create a new virtual thread as shown above, even for small and short-lived concurrent tasks. As a rule of thumb, if your application never has 10,000 or more virtual threads, it is unlikely to benefit from virtual threads. Either its load is too light to require better throughput, or it does not represent enough of its tasks as virtual threads.

Sometimes it is necessary to limit the concurrency of an operation. For example, some external services might not be able to handle more than ten concurrent requests. With platform threads, you can limit concurrency by setting the size of the thread pool. When using virtual threads, if you want to limit the concurrency of access to certain services, you should use the Semaphore class, which is specifically designed for this purpose. The following example demonstrates the usage of this class:

Semaphore sem = new Semaphore(10);

// ......

Executors.newVirtualThreadPerTaskExecutor().submit(() -> {
    try {
        // Before executing the task, acquire a permit (decrement the semaphore by 1), leaving one fewer slot for concurrent executions.
        // If no permits remain, block until another thread finishes and releases one.
        sem.acquire();
    } catch (InterruptedException e) {
        // No permit was acquired, so there is nothing to release
        throw new RuntimeException(e);
    }
    try {
        doSomething();
    } finally {
        // After completing the task, release the permit (increment the semaphore by 1).
        sem.release();
    }
});

Virtual threads support thread-local variables, just like platform threads. Typically, thread-local variables are used to associate some context-specific information with the currently executing code, such as the current transaction and user ID. For virtual threads, this use of thread-local variables is perfectly reasonable. Another use of thread-local variables is to cache reusable objects: the objects are stored in thread-local variables so that multiple tasks running on the same thread over time can reuse them, reducing the number of instantiations and the number of instances in memory.

This second use goes against the design of virtual threads. Such caching is useful only when multiple tasks share and reuse a thread (and with it the expensive objects cached in its thread-locals), as is the case when platform threads are pooled. When running in a thread pool, many tasks might be executed, but since the pool contains only a few threads, the object is instantiated only a few times (once per pool thread) and then cached and reused. However, virtual threads are never pooled and are not reused by unrelated tasks. Since each task has its own virtual thread, every task that accesses the cached variable triggers a new instantiation. Moreover, given that there could be a large number of virtual threads running concurrently, the expensive objects might consume considerable memory. These outcomes are exactly opposite to what thread-local caching aims to achieve.
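The effect can be observed by counting how often a thread-local initializer runs. In the sketch below, ThreadLocalCacheDemo is a hypothetical name, and a plain Object with a counter stands in for an expensive cached object.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

// Illustrative sketch (hypothetical name): thread-local caching on pooled vs. virtual threads.
final class ThreadLocalCacheDemo {

    // Runs taskCount tasks on the given executor; each task reads a thread-local "cache".
    // Returns how many times the cached object had to be created. Closes the executor.
    static int countInstantiations(ExecutorService executor, int taskCount) {
        AtomicInteger created = new AtomicInteger();
        ThreadLocal<Object> cache = ThreadLocal.withInitial(() -> {
            created.incrementAndGet(); // stands in for an expensive constructor
            return new Object();
        });
        try (executor) {
            for (int i = 0; i < taskCount; i++) {
                executor.submit(() -> { cache.get(); });
            }
        } // close() waits for all submitted tasks to finish
        return created.get();
    }

    public static void main(String[] args) {
        int pooled = countInstantiations(Executors.newFixedThreadPool(4), 1_000);
        int virtual = countInstantiations(Executors.newVirtualThreadPerTaskExecutor(), 1_000);
        System.out.println("fixed pool of 4 threads: " + pooled + " instantiations");
        System.out.println("virtual thread per task: " + virtual + " instantiations");
    }
}
```

With the fixed pool, the object is created at most once per pool thread. With one virtual thread per task, it is created once per task, so the cache never produces a hit.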

A current limitation of the virtual thread implementation is that performing a blocking operation inside a synchronized block or method causes the JDK's virtual thread scheduler to block a precious operating system thread, which is the pinning described earlier. Performing blocking operations outside these blocks or methods does not cause pinning. If the blocking operations are both lengthy and frequent, pinning can negatively impact server throughput. Synchronized blocks or methods that protect short-lived operations, such as in-memory operations, or that are executed infrequently should have no adverse effect.

If there are places where pinning is both long-lasting and frequent, replace synchronized with ReentrantLock in those specific locations (there is no need to replace synchronized in places where the protection time is short or operations are infrequent). Below is an example of a synchronized block that is used for a long time and frequently.

synchronized(lockObj) {
    frequentIO();
}

Replace with the following implementation:

lock.lock();
try {
    frequentIO();
} finally {
    lock.unlock();
}

JDK: OpenJDK21.0.4

Physical machine: Win11 & i5-14600KF (14 cores and 20 threads)

import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class PerformanceTest {
    private static final int REQUEST_NUM = 10000;

    public static void main(String[] args) {
        long vir = 0, p1 = 0, p2 = 0, p3 = 0, p4 = 0;
        // Run every configuration three times and average the durations
        for (int i = 0; i < 3; i++) {
            vir += testVirtualThread();
            p1 += testPlatformThread(200);
            p2 += testPlatformThread(500);
            p3 += testPlatformThread(800);
            p4 += testPlatformThread(1000);
            System.out.println("--------------");
        }
        System.out.println("Average duration of virtual threads:" + vir / 3 + "ms");
        System.out.println("Average duration of platform threads [200]:" + p1 / 3 + "ms");
        System.out.println("Average duration of platform threads [500]:" + p2 / 3 + "ms");
        System.out.println("Average duration of platform threads [800]:" + p3 / 3 + "ms");
        System.out.println("Average duration of platform threads [1000]:" + p4 / 3 + "ms");
    }

    private static long testVirtualThread() {
        long startTime = System.currentTimeMillis();
        ExecutorService executorService = Executors.newVirtualThreadPerTaskExecutor();
        for (int i = 0; i < REQUEST_NUM; i++) {
            executorService.submit(PerformanceTest::handleRequest);
        }
        // close() waits for all submitted tasks to finish
        executorService.close();
        long useTime = System.currentTimeMillis() - startTime;
        System.out.println("Virtual thread duration:" + useTime + "ms");
        return useTime;
    }

    private static long testPlatformThread(int poolSize) {
        long startTime = System.currentTimeMillis();
        ExecutorService executorService = Executors.newFixedThreadPool(poolSize);
        for (int i = 0; i < REQUEST_NUM; i++) {
            executorService.submit(PerformanceTest::handleRequest);
        }
        // close() waits for all submitted tasks to finish
        executorService.close();
        long useTime = System.currentTimeMillis() - startTime;
        System.out.printf("Platform thread [%d] duration:%dms\n", poolSize, useTime);
        return useTime;
    }

    // Simulates a request that blocks for 300 ms
    private static void handleRequest() {
        try {
            Thread.sleep(300);
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
    }
}

Test results:

Virtual thread duration: 654ms

Platform thread [200] duration: 15,551ms

Platform thread [500] duration: 6,241ms

Platform thread [800] duration: 4,069ms

Platform thread [1000] duration: 3,137ms

--------------

Virtual thread duration: 331ms

Platform thread [200] duration: 15,544ms

Platform thread [500] duration: 6,227ms

Platform thread [800] duration: 4,047ms

Platform thread [1000] duration: 3,126ms

--------------

Virtual thread duration: 326ms

Platform thread [200] duration: 15,552ms

Platform thread [500] duration: 6,228ms

Platform thread [800] duration: 4,054ms

Platform thread [1000] duration: 3,151ms

--------------

Average duration of virtual threads: 437ms

Average duration of platform threads [200]: 15,549ms

Average duration of platform threads [500]: 6,232ms

Average duration of platform threads [800]: 4,056ms

Average duration of platform threads [1000]: 3,138ms

Virtual threads can be created without limit, so all 10,000 requests run concurrently. Platform threads, by contrast, are constrained by the size of the thread pool, so the 10,000 requests cannot be processed simultaneously: subsequent requests have to wait until earlier requests finish and release their threads, resulting in significantly longer durations than with virtual threads.
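The platform thread results line up with a simple back-of-the-envelope model: with a fixed pool, requests are served in waves of at most poolSize, and each wave takes one blocking period. The helper below is a hypothetical sketch of that model; it ignores thread creation and scheduling overhead, so it gives a lower bound rather than a prediction.

```java
// Illustrative sketch (hypothetical name): lower bound on total duration with a fixed-size pool.
final class PoolDurationEstimate {

    // Lower bound on wall-clock time when each request blocks for blockMillis
    // and at most poolSize requests can be in flight at once.
    static long estimateMillis(int requests, int poolSize, long blockMillis) {
        long waves = (requests + poolSize - 1) / poolSize; // ceiling division
        return waves * blockMillis;
    }

    public static void main(String[] args) {
        System.out.println(estimateMillis(10_000, 200, 300));   // prints 15000
        System.out.println(estimateMillis(10_000, 500, 300));   // prints 6000
        System.out.println(estimateMillis(10_000, 800, 300));   // prints 3900
        System.out.println(estimateMillis(10_000, 1_000, 300)); // prints 3000
    }
}
```

These bounds sit slightly below the measured averages of 15,549 ms, 6,232 ms, 4,056 ms, and 3,138 ms. With one virtual thread per request there is effectively a single wave, which matches the measured durations of a little over 300 ms.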

springboot-web version (Tomcat/10.1.19): 3.2.3 / springboot-webflux version (Netty): 3.2.3

Write a simple test program, using Thread.sleep to simulate a 300ms blocking and using JMeter to simulate concurrent requests from 3,000 users.

Web version program:

import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class TestController {
    @GetMapping("get")
    public String get() {
        try {
            // System.out.println(Thread.currentThread());
            Thread.sleep(300); // simulate 300 ms of blocking work
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        return "ok";
    }
}

Control the number of threads and whether to enable virtual threads through the application.yaml configuration file:

server:
  tomcat:
    threads:
      max: 200
spring:
  threads:
    virtual:
      enabled: false # specifies whether to enable virtual threads

WebFlux version program:

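// Assumes static imports of RouterFunctions.route, RequestPredicates.GET,
// ServerResponse.ok and BodyInserters.fromPublisher
@Configuration
public class TestWebClient {
    @Bean
    public RouterFunction<ServerResponse> routes() {
        return route(
            GET("/get"),
            request -> ok()
                .contentType(MediaType.APPLICATION_JSON)
                // emit "ok" after a non-blocking 300 ms delay
                .body(fromPublisher(Mono.just("ok").delayElement(Duration.ofMillis(300)), String.class))
        );
    }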

}

| Type | Number of Used Platform Threads | Throughput (req/s) | Average Response Time (ms) | 90% (ms) | 95% (ms) | 99% (ms) |
|---|---|---|---|---|---|---|
| Virtual Thread | 20 | 5217 | 316 | 311 | 344 | 354 |
| Platform Thread | 200 | 624.5 | 2660 | 4407 | 4782 | 4801 |
| Platform Thread | 512 | 1564.1 | 984 | 1683 | 1693 | 1787 |
| Platform Thread | 800 | 2340 | 661 | 1067 | 1070 | 1075 |
| WebFlux | | 5281.3 | 310 | 374 | 321 | 325 |

It can be observed that the throughput with virtual threads and WebFlux reactive programming far exceeds that of using regular thread pools, and the throughput with virtual threads is not inferior to that of WebFlux. Virtual threads do not require complex reactive programming. As a result, high throughput can be achieved simply by configuring the use of virtual threads.

In summary, the introduction of Java virtual threads represents a revolution in modern concurrent programming models. It not only simplifies the complexity of concurrent programming but also significantly enhances the concurrency handling capability and resource utilization of applications. This provides new ideas and tools for building high-performance and scalable server-side applications. As the technology matures and becomes more widely adopted, virtual threads are likely to become a standard practice in Java concurrent programming.

