By Taosu

In this series on technical interview questions, today's topic is the JVM: the fundamentals you are expected to know in interviews. Because of its length, the article is divided into two parts. The first part covers JVM memory areas, the class loading process, and garbage collection (GC). The second part focuses on troubleshooting problems in production.

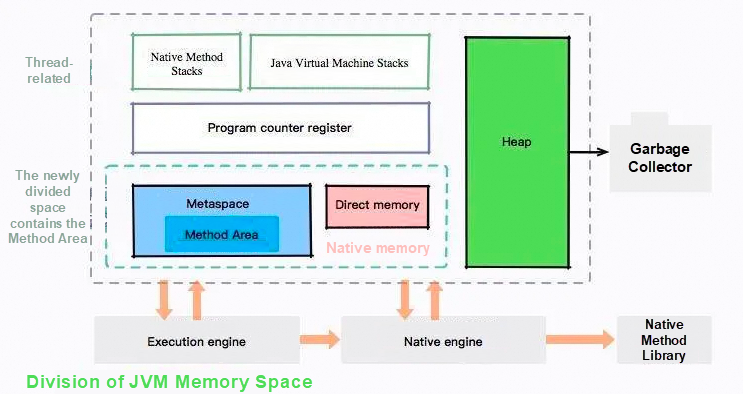

The JVM runtime data areas are the heap, the method area (Metaspace), the Java virtual machine stacks, the native method stacks, and the program counter (PC) register.

Object instances and arrays are allocated on the heap, a memory area shared by all threads. The heap is also the main area managed by garbage collection (GC). When escape analysis (EA) is enabled, the JIT compiler can avoid heap allocation for some objects that do not escape their method through scalar replacement: the object's fields are kept in local variables or registers instead.

The heap is divided into the Young Generation and the Old Generation. The Young Generation is further divided into the Eden space and two Survivor spaces (Survivor1 and Survivor2).

In HotSpot before JDK 8, the method area was implemented as the permanent generation (PermGen), which stores class information, constants, static variables, and other data loaded by the JVM. Since JDK 8, PermGen has been removed, and the method area is implemented by Metaspace, which uses native memory instead of the heap.

When an application has too many Java classes, for example when Spring and other frameworks generate many classes through dynamic proxies, and the space they occupy exceeds the configured limit (-XX:MaxMetaspaceSize), an OutOfMemoryError (OOM) occurs in Metaspace.
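To see these areas in a running JVM, a program can list its memory pools through the standard java.lang.management API. This is a small sketch; pool names depend on the JVM and the collector (with G1 on HotSpot they are typically "G1 Eden Space", "G1 Survivor Space", and "G1 Old Gen", plus non-heap pools such as "Metaspace"), so the code does not assume any particular name.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

class MemoryPoolsDemo {
    /** One line per memory pool: name, HEAP or NON_HEAP, current usage, and maximum (-1 = undefined). */
    static List<String> describePools() {
        List<String> lines = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage(); // null if the pool is no longer valid
            if (usage == null) {
                continue;
            }
            lines.add(pool.getName() + " [" + pool.getType() + "] used=" + usage.getUsed()
                    + " max=" + usage.getMax());
        }
        return lines;
    }

    /** Counts pools of one type: heap areas (Eden, Survivor, Old) or non-heap areas (such as Metaspace). */
    static long countPools(MemoryType type) {
        return ManagementFactory.getMemoryPoolMXBeans().stream()
                .filter(pool -> pool.getType() == type)
                .count();
    }

    public static void main(String[] args) {
        describePools().forEach(System.out::println);
    }
}
```

When -XX:MaxMetaspaceSize is set, the max reported for the Metaspace pool should reflect that limit; without it, the max is typically undefined (-1).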

Java virtual machine stacks are private to each thread and have the same lifecycle as the thread. Each stack holds a sequence of stack frames: a new frame is pushed every time a method is invoked and popped when the method returns. A stack frame stores the local variable table, operand stack, dynamic linking information, and return address. The JVM specification defines two exceptions for this area: if a thread requests a stack depth greater than the JVM allows, a StackOverflowError is thrown; if the stack can be expanded dynamically but cannot obtain enough memory, an OutOfMemoryError is thrown.

• Local variable table: stores method parameters and the local variables defined in the method. Its basic unit of storage is the variable slot.

• Operand stack: the working area that bytecode instructions push values onto and pop values from while a method executes. Its maximum depth is determined at compile time. The operand stack is empty when a method starts executing, and bytecode instructions push and pop values as the method runs.

• Dynamic linking: the bytecode file contains many symbolic references. Some of them are converted into direct references during the resolution phase of class loading or on first use, which is called static resolution. The others are converted into direct references at runtime, which is called dynamic linking.

• Return address: records where execution should continue in the calling method (the address of a bytecode instruction) after the current method returns.
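The StackOverflowError case can be reproduced with unbounded recursion. The sketch below counts how many frames fit before the error; the number depends on the frame size and on the thread stack size (-Xss), so treat it as illustrative only.

```java
class StackDepthDemo {
    private static int depth = 0;

    // Each call pushes a new stack frame; there is no base case, so the stack eventually overflows.
    private static void recurse() {
        depth++;
        recurse();
    }

    /** Recurses until StackOverflowError and returns the number of frames pushed. */
    static int measureMaxDepth() {
        depth = 0;
        try {
            recurse();
        } catch (StackOverflowError e) {
            // Thrown when the requested stack depth exceeds what this thread's stack allows.
        }
        return depth;
    }

    public static void main(String[] args) {
        System.out.println("Stack overflowed after about " + measureMaxDepth() + " frames");
    }
}
```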

Just In Time Compiler (JIT Compiler):

During runtime, the JVM compiles hot code into native machine code for the local platform and applies various levels of optimization, such as lock coarsening, to make the hot code run faster.

Native method stacks are similar to Java virtual machine stacks, except that Java virtual machine stacks serve Java methods while native method stacks serve native methods. In the HotSpot virtual machine, the two are merged into one. Native method stacks can likewise throw StackOverflowError and OutOfMemoryError.

The PC register records the address of the bytecode instruction that the current thread is executing (its value is undefined while a native method runs). It is a small memory area private to each thread. Because the CPU switches between threads, each thread needs its own PC so that it can resume at the correct instruction when it is scheduled again.

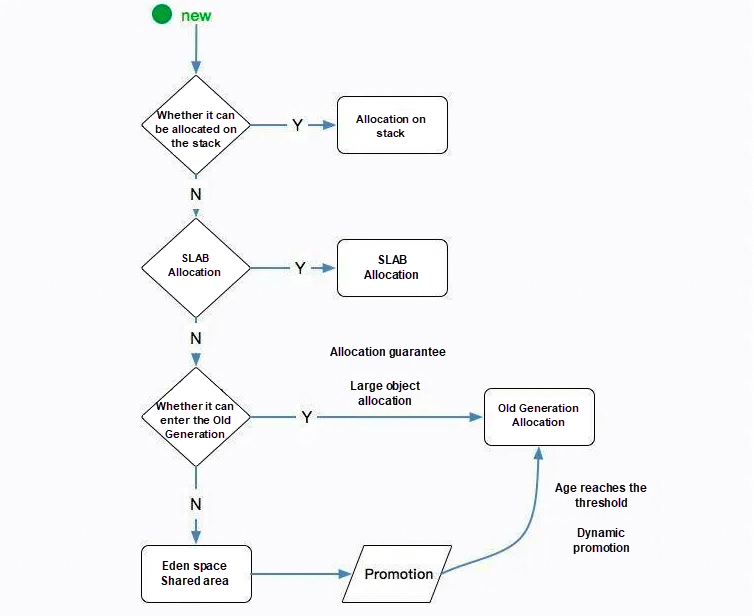

• Objects are allocated in the Eden space first. If Eden does not have enough memory for an allocation, the JVM performs a Minor GC. Surviving objects are copied into the empty Survivor space; if that Survivor space does not have enough room, they go directly into the Old Generation.

• Large objects that require a lot of contiguous memory space directly enter the Old Generation. The purpose of this is to avoid a large number of memory copies between the Eden space and the two Survivor spaces (the Young Generation uses a copying algorithm to collect memory).

• Long-lived objects enter the Old Generation. The JVM keeps an age counter for each object. When an object survives a Minor GC and is moved into a Survivor space, its age is set to 1, and each further Minor GC it survives increases its age by 1. When the age reaches the threshold (15 by default), the object is promoted to the Old Generation.

(Dynamic object age determination: the JVM adds up the sizes of Survivor objects starting from the youngest age. If the accumulated size exceeds half of the Survivor space, the age reached at that point becomes the new threshold, and objects whose age is greater than or equal to this threshold go directly into the Old Generation.)

• Each time a Minor GC is performed or a large object enters the Old Generation directly, the JVM calculates the space required. If it is larger than the remaining space of the Old Generation, a Full GC is performed.

Object creation steps: check class loading, allocate memory, initialize memory to zero values, set up the object header, and execute the `<init>` method.

When JVM encounters the new instruction, it checks whether the symbolic reference of this class can be located in the constant pool and whether the class represented by this symbolic reference has been loaded, resolved, and initialized. If not, the corresponding class loading process must be executed first.

After the class loading check, the JVM allocates memory for the new object in one of two ways: "pointer bumping" or "free list". Which one is used depends on whether the free memory in the Java heap is regular (contiguous), and that in turn depends on whether the garbage collector compacts memory.
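Pointer bumping can be sketched with a simulated contiguous region: allocation only moves a "top" pointer forward by the object size. This is a single-threaded illustration under simplified assumptions (sizes in bytes, no alignment, no TLABs or CAS); the free-list approach, which would instead search a list of free blocks, is not shown.

```java
class BumpPointerAllocator {
    private final int capacity; // size of the simulated contiguous region, in bytes
    private int top = 0;        // the "pointer": everything below it is in use

    BumpPointerAllocator(int capacity) {
        this.capacity = capacity;
    }

    /** Returns the start offset of the new object, or -1 if the region is full (a real JVM would GC here). */
    int allocate(int size) {
        if (size <= 0) {
            throw new IllegalArgumentException("size must be positive");
        }
        if (capacity - top < size) {
            return -1;
        }
        int start = top;
        top += size; // "bump" the pointer past the new object
        return start;
    }

    int used() {
        return top;
    }

    public static void main(String[] args) {
        BumpPointerAllocator eden = new BumpPointerAllocator(64);
        System.out.println(eden.allocate(16)); // 0
        System.out.println(eden.allocate(32)); // 16
        System.out.println(eden.allocate(32)); // -1: only 16 bytes left
    }
}
```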

After memory allocation, the JVM initializes the allocated memory to zero values. This ensures that the object's instance fields can be used in Java code without being explicitly assigned, and that the program reads the zero value of each field's data type.
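The effect of zeroing can be seen from Java: instance fields that are never assigned read as the zero value of their type. (Local variables are different; the compiler requires them to be assigned before use.)

```java
class ZeroValueDemo {
    int count;      // 0
    long total;     // 0L
    double ratio;   // 0.0
    boolean active; // false
    char grade;     // the null character, code point 0
    String name;    // null

    public static void main(String[] args) {
        ZeroValueDemo d = new ZeroValueDemo(); // no field is assigned anywhere
        System.out.println(d.count + " " + d.total + " " + d.ratio + " " + d.active
                + " " + (int) d.grade + " " + d.name); // 0 0 0.0 false 0 null
    }
}
```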

Next, the JVM performs the necessary setup for the object: which class it is an instance of, how to find the class metadata, the object's hash code, its GC generational age, and so on. This information is stored in the object header. Depending on the JVM's current state, such as whether biased locking is enabled, the object header is set up differently.

From the JVM's perspective, the new object now exists. From the Java program's perspective, however, the `<init>` method has not yet run and all fields are still zero. Therefore, generally speaking (except for circular dependence), the new instruction is followed by execution of the `<init>` method, and only then is a truly usable object created.

Strong reference: the ordinary reference relationship between objects. An object that is strongly reachable is never reclaimed.

Soft reference: used for objects that are useful but not essential. Soft-referenced objects are reclaimed only when memory is insufficient; if memory is still insufficient after they are reclaimed, an OOM exception is thrown.

Weak reference: weaker than a soft reference, with a shorter lifecycle. When the JVM performs GC, objects reachable only through weak references are reclaimed regardless of whether memory is sufficient.

Phantom reference: the weakest kind of reference, which cannot be used to access the object at all and is rarely used in practice. It mainly serves to track when an object has been reclaimed by GC.
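The four reference kinds map to classes in java.lang.ref. The sketch below only relies on what is guaranteed while the referent is still strongly reachable; whether a weakly referenced object is cleared right after System.gc() depends on the JVM, because System.gc() is only a request.

```java
import java.lang.ref.PhantomReference;
import java.lang.ref.ReferenceQueue;
import java.lang.ref.SoftReference;
import java.lang.ref.WeakReference;

class ReferenceKindsDemo {
    /** What get() returns for each reference kind while the referent is still strongly reachable. */
    static Object[] lookups(Object referent) {
        SoftReference<Object> soft = new SoftReference<>(referent);
        WeakReference<Object> weak = new WeakReference<>(referent);
        PhantomReference<Object> phantom = new PhantomReference<>(referent, new ReferenceQueue<>());
        return new Object[] {soft.get(), weak.get(), phantom.get()}; // phantom.get() is always null
    }

    public static void main(String[] args) {
        Object strong = new Object(); // a strong reference keeps the object alive
        Object[] r = lookups(strong);
        System.out.println((r[0] == strong) + " " + (r[1] == strong) + " " + r[2]); // true true null

        WeakReference<Object> weak = new WeakReference<>(new Object()); // only weakly reachable
        System.gc(); // a request, not a guarantee
        System.out.println("weak after GC request: " + weak.get()); // usually null, not guaranteed
    }
}
```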

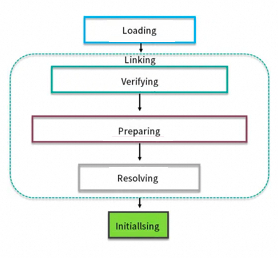

Class loading process: loading, verification, preparation, resolution, and initialization.

Preparation: the phase that formally allocates memory for class variables (static variables) and sets their initial values, which are the "zero" values of their types rather than the values assigned in code.

Resolution: the JVM replaces symbolic references in the constant pool with direct references.

String constant pool: located on the heap (since JDK 7); it holds the interned string literals that come from the static constant pools of class files.

Runtime constant pool: part of the method area, which is implemented by Metaspace since JDK 8.

Initialization: the last step of class loading, in which the JVM executes the class initializer `<clinit>` (static variable assignments and static blocks). This is where execution of the Java code defined in the class really begins.
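The difference between preparation and initialization can be observed from Java. In this sketch (class and field names are hypothetical), timeout is 0 after preparation and only becomes 30 when `<clinit>` runs on first active use; reading a compile-time constant such as VERSION does not trigger initialization, because javac inlines constant values.

```java
import java.util.ArrayList;
import java.util.List;

class ClassInitDemo {
    static final List<String> events = new ArrayList<>();

    static class Config {
        static final int VERSION = 1; // compile-time constant: javac inlines it at the use site
        static int timeout = 30;      // 0 after preparation, 30 once <clinit> has run
        static {
            events.add("Config initialized");
        }
    }

    public static void main(String[] args) {
        System.out.println(Config.VERSION + " " + events); // 1 []  (Config not initialized yet)
        System.out.println(Config.timeout + " " + events); // 30 [Config initialized]
    }
}
```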

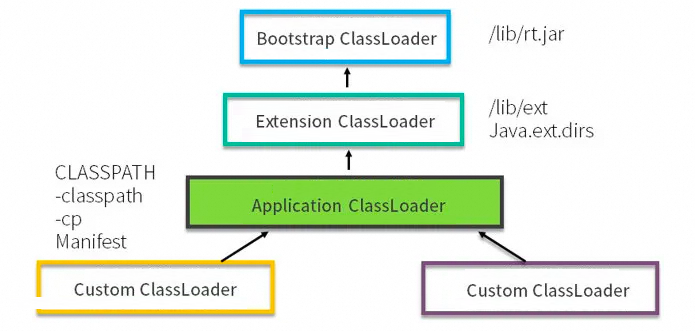

Every class is loaded by a class loader. By default, class loaders cooperate using the parent delegation model. When a class is requested, the loader first checks whether the class has already been loaded and, if so, returns it directly. Otherwise, it delegates the request to the loadClass() method of its parent class loader, so every request eventually reaches the top-level BootstrapClassLoader. Only when the parent cannot handle the request does the child load the class itself. When the parent class loader is null, the BootstrapClassLoader is used as the parent.
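The delegation steps described above (check whether the class is already loaded, ask the parent, then load it yourself) can be written out explicitly in a ClassLoader subclass. This is a simplified sketch that mirrors the structure of ClassLoader.loadClass rather than a copy of the JDK code; the logging and the class name com.example.Missing are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class DelegationDemo {
    /** A loader that follows the parent delegation steps explicitly and records what it did. */
    static class LoggingLoader extends ClassLoader {
        final List<String> log = new ArrayList<>();

        LoggingLoader(ClassLoader parent) {
            super(parent);
        }

        @Override
        protected Class<?> loadClass(String name, boolean resolve) throws ClassNotFoundException {
            synchronized (getClassLoadingLock(name)) {
                Class<?> c = findLoadedClass(name);      // 1. already loaded by this loader?
                if (c == null) {
                    try {
                        log.add("delegate:" + name);
                        c = getParent().loadClass(name); // 2. ask the parent first
                    } catch (ClassNotFoundException e) {
                        log.add("self:" + name);
                        c = findClass(name);             // 3. parent failed: try this loader (default throws)
                    }
                }
                if (resolve) {
                    resolveClass(c);
                }
                return c;
            }
        }
    }

    /** Loads a class through the given loader; returns null if no loader in the chain can find it. */
    static Class<?> tryLoad(LoggingLoader loader, String name) {
        try {
            return loader.loadClass(name);
        } catch (ClassNotFoundException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        // Walk the loader chain of this application class; the chain ends where the parent is null (bootstrap).
        for (ClassLoader cl = DelegationDemo.class.getClassLoader(); cl != null; cl = cl.getParent()) {
            System.out.println(cl);
        }
        System.out.println("String's loader: " + String.class.getClassLoader()); // null

        LoggingLoader loader = new LoggingLoader(ClassLoader.getSystemClassLoader());
        System.out.println(tryLoad(loader, "java.lang.String") == String.class); // true
        System.out.println(tryLoad(loader, "com.example.Missing"));              // null
        System.out.println(loader.log);
    }
}
```

On JDK 9 and later the printed chain typically shows AppClassLoader followed by PlatformClassLoader (ExtClassLoader on JDK 8), and String.class.getClassLoader() returns null because core classes are loaded by the BootstrapClassLoader.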

This mechanism ensures that core JDK classes are loaded first. It keeps Java programs running stably, avoids loading the same class repeatedly, and ensures that the core Java API cannot be tampered with. Without the parent delegation model, if each class loader loaded classes by itself, problems would arise: for example, if we wrote our own class called java.lang.Object, the running program would contain multiple different Object classes.

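The parent delegation model can be broken in several ways:

• Define a custom class loader and override the loadClass method.

• Tomcat loads the class files in each web application's own directory itself instead of passing the requests to the parent class loader.

• For Java SPI (for example, JDBC drivers), the interfaces are loaded by the BootstrapClassLoader, which sits at the top of the hierarchy and cannot see classes on the application classpath. It therefore loads the driver implementations through the thread context class loader, usually the AppClassLoader, which reverses the direction of the parent delegation model.

To sum up, Tomcat's WebAppClassLoader deliberately breaks the JVM parent delegation model: it bypasses AppClassLoader and first tries ExtClassLoader, so that core Java classes cannot be overridden, before loading classes from the web application itself.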

Reference counting: add a reference counter to each object. The counter is incremented each time a reference to the object is created and decremented each time a reference is removed. An object whose counter is zero can no longer be used.

Advantages: simple to implement and efficient to evaluate.

Disadvantages: it cannot easily handle circular references between objects, so mainstream JVMs have abandoned it.
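The circular-reference problem can be shown with a small simulation. The sketch models objects and counters explicitly (RcObject and the method names are illustrative, and this is not how HotSpot manages memory): after two objects point to each other and all outside references are dropped, both counters stay at 1, so neither is ever freed.

```java
import java.util.ArrayList;
import java.util.List;

class RefCountDemo {
    /** A simulated object with a reference counter. */
    static class RcObject {
        final String name;
        int refCount = 0;
        final List<RcObject> fields = new ArrayList<>();

        RcObject(String name) {
            this.name = name;
        }

        /** Simulates this.field = target: the target gains one reference. */
        void pointTo(RcObject target) {
            fields.add(target);
            target.refCount++;
        }
    }

    static boolean reclaimable(RcObject o) {
        return o.refCount == 0;
    }

    /** Builds a <-> b, then drops the two "local variable" references to them. */
    static RcObject[] buildUnreachableCycle() {
        RcObject a = new RcObject("a");
        RcObject b = new RcObject("b");
        a.refCount++;  // local variable a -> a
        b.refCount++;  // local variable b -> b
        a.pointTo(b);
        b.pointTo(a);  // the cycle
        a.refCount--;  // local variable a goes out of scope
        b.refCount--;  // local variable b goes out of scope
        return new RcObject[] {a, b}; // returned only so the result can be inspected
    }

    public static void main(String[] args) {
        RcObject[] pair = buildUnreachableCycle();
        // Prints "1 1 false": unreachable from the program, yet never freed by reference counting.
        System.out.println(pair[0].refCount + " " + pair[1].refCount + " " + reclaimable(pair[0]));
    }
}
```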

Reachability analysis: starting from a set of root objects called GC Roots (references related to active threads, references in Java virtual machine stack frames, static variable references, and JNI references), the collector searches downward along references. The path followed by the search is called a reference chain. When no reference chain connects an object to the GC Roots, the object is unreachable and can no longer be used.
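Reachability analysis is essentially a graph traversal from the GC Roots. A minimal sketch over a hypothetical object graph, with objects as string names and references as adjacency lists: c and d reference each other, but because no root reaches them they are not marked, which is exactly the circular case that reference counting cannot handle.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class ReachabilityDemo {
    /** Returns every object reachable from the roots; anything not returned is garbage. */
    static Set<String> mark(Map<String, List<String>> references, List<String> roots) {
        Set<String> marked = new HashSet<>();
        Deque<String> pending = new ArrayDeque<>(roots);
        while (!pending.isEmpty()) {
            String obj = pending.pop();
            if (marked.add(obj)) { // first visit: follow the object's references
                pending.addAll(references.getOrDefault(obj, List.of()));
            }
        }
        return marked;
    }

    /** Hypothetical heap: a -> b, and c <-> d form a cycle that no root points to. */
    static Map<String, List<String>> sampleHeap() {
        return Map.of(
                "a", List.of("b"),
                "b", List.of(),
                "c", List.of("d"),
                "d", List.of("c"));
    }

    public static void main(String[] args) {
        Set<String> live = mark(sampleHeap(), List.of("a"));
        System.out.println("live: " + live); // a and b (set order may vary)
    }
}
```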

The finalize() method of an object is called before the object is reclaimed. An unreachable object goes through two marking passes. In the first pass, objects with no reference chain to the GC Roots are marked. In the second pass, the JVM checks whether each marked object overrides finalize() and whether it has already been called. If not, the object is directly judged reclaimable. Otherwise, it is placed in a queue, and a low-priority Finalizer thread created by the JVM executes its finalize() method. A second, smaller-scale marking is then carried out, and objects that are still marked are actually reclaimed.

GC Algorithms: copying, mark-sweep, mark-compact, and generational collection

Copying (Young):

Divide the memory into two blocks of equal size and use only one of them at a time. When that block is full, copy the surviving objects to the other block and then clear the used block all at once. Each collection thus reclaims an entire half of the memory.

Advantages: simple to implement, efficient to run, and free of fragmentation.

Disadvantages: only half of the memory is usable. If many objects survive, the copying work grows and the efficiency of the algorithm drops sharply.
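A common way to implement copying is Cheney's algorithm: copy the roots, then scan the to-space in order and copy whatever each copied object references, recording a forwarding entry so that every object is copied only once. The sketch below simulates this with list indices standing in for addresses (the Obj type and sample data are hypothetical); real collectors store forwarding pointers in the object header.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class CheneyCopyDemo {
    /** A simulated object: a name plus the indices of the objects it references (in its current space). */
    static final class Obj {
        final String name;
        final List<Integer> refs;

        Obj(String name, List<Integer> refs) {
            this.name = name;
            this.refs = refs;
        }
    }

    /** Copies everything reachable from the roots into a fresh to-space; unreached objects are left behind. */
    static List<Obj> collect(List<Obj> fromSpace, List<Integer> roots) {
        List<Obj> toSpace = new ArrayList<>();
        Map<Integer, Integer> forwarding = new HashMap<>(); // from-space index -> to-space index
        for (int root : roots) {
            forward(root, fromSpace, toSpace, forwarding);
        }
        // Scan the to-space in order; objects not yet scanned still hold from-space indices.
        for (int scan = 0; scan < toSpace.size(); scan++) {
            Obj obj = toSpace.get(scan);
            List<Integer> newRefs = new ArrayList<>();
            for (int ref : obj.refs) {
                newRefs.add(forward(ref, fromSpace, toSpace, forwarding));
            }
            toSpace.set(scan, new Obj(obj.name, newRefs)); // references now point into to-space
        }
        return toSpace;
    }

    /** Copies an object the first time it is reached; later visits reuse its forwarding address. */
    private static int forward(int from, List<Obj> fromSpace, List<Obj> toSpace, Map<Integer, Integer> forwarding) {
        Integer to = forwarding.get(from);
        if (to == null) {
            to = toSpace.size();
            toSpace.add(fromSpace.get(from));
            forwarding.put(from, to);
        }
        return to;
    }

    static List<Obj> sampleFromSpace() {
        return List.of(
                new Obj("a", List.of(2)),    // 0: root, references b
                new Obj("dead", List.of(0)), // 1: references a, but nothing references it
                new Obj("b", List.of(0)),    // 2: references a back
                new Obj("c", List.of()));    // 3: unreachable
    }

    public static void main(String[] args) {
        for (Obj o : collect(sampleFromSpace(), List.of(0))) {
            System.out.println(o.name + " -> " + o.refs); // a -> [1], then b -> [0]
        }
    }
}
```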

Mark-sweep (CMS):

Mark all objects that need to be reclaimed, and reclaim all marked objects after the marking is completed.

Disadvantages: low efficiency; it leaves a large number of discontinuous fragments; and (in CMS) space must be reserved during allocation for floating garbage.

Mark-compact (Old):

Marking works the same way as in the "mark-sweep" algorithm. Then all surviving objects are moved toward one end, and the memory beyond that boundary is cleared directly. This avoids producing a large number of discontinuous fragments.
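The compaction step can be sketched on a simulated heap of slots: live objects slide toward the start in their original order, and everything past the new boundary becomes one contiguous free area, ready for pointer-bumping allocation. This simplified sketch assumes marking has already produced the live set and omits updating references to moved objects, which a real mark-compact collector must also do.

```java
import java.util.Arrays;
import java.util.Set;

class SlidingCompactDemo {
    /** Slides live objects toward index 0, keeping their order; returns the new top (first free slot). */
    static int compact(String[] heap, Set<String> live) {
        int dest = 0;
        for (int i = 0; i < heap.length; i++) {
            if (heap[i] != null && live.contains(heap[i])) {
                heap[dest++] = heap[i]; // move the live object down to the next free slot
            }
        }
        Arrays.fill(heap, dest, heap.length, null); // everything past the boundary is now one free block
        return dest;
    }

    public static void main(String[] args) {
        String[] heap = {"a", "x", null, "b", "y", "c"}; // x and y are garbage, null is an existing hole
        int top = compact(heap, Set.of("a", "b", "c"));
        System.out.println(Arrays.toString(heap) + " top=" + top); // [a, b, c, null, null, null] top=3
    }
}
```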

Generational Collection:

Select the appropriate GC algorithm according to the characteristics of each generation.

The Young Generation uses the copying algorithm. Most objects in the Young Generation die at each GC, so few survivors need to be copied. The Young Generation is generally divided into a larger Eden space and two smaller Survivor spaces (From space and To space). Eden and one Survivor space are in use at a time; during collection, the surviving objects in both are copied to the other Survivor space.
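In HotSpot, the size ratio between Eden and each Survivor space is controlled by -XX:SurvivorRatio (default 8, meaning Eden:Survivor:Survivor = 8:1:1). The arithmetic, as a simplified sketch that ignores the alignment and adaptive sizing real collectors apply:

```java
class YoungGenSizing {
    /** Splits a Young Generation of youngBytes into {Eden, Survivor 0, Survivor 1} using ratio:1:1. */
    static long[] split(long youngBytes, int survivorRatio) {
        long survivor = youngBytes / (survivorRatio + 2);
        long eden = youngBytes - 2 * survivor;
        return new long[] {eden, survivor, survivor};
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024;
        long[] parts = split(100 * mb, 8);
        System.out.println(parts[0] / mb + " MB Eden, " + parts[1] / mb + " MB per Survivor"); // 80 MB Eden, 10 MB per Survivor
    }
}
```

Because one Survivor space is always kept empty, with the default ratio only 90% of the Young Generation (Eden plus one Survivor) holds objects at any time.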

The surviving rate of objects in the Old Generation is relatively high, and there is no extra space for allocation guarantees, so we must choose the "mark-sweep" or "mark-compact" algorithm for GC.

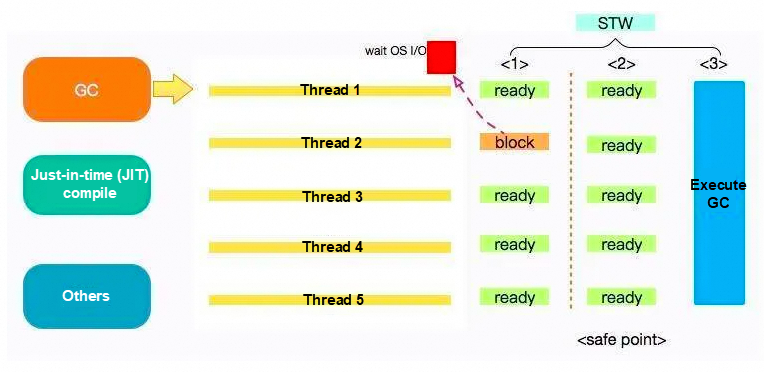

Safepoint: user threads must be paused before certain GC work can start, and they can only pause at safepoints, points in the code where the references they hold are known. Once all user threads are stopped at safepoints, the JVM can be considered safe and the state of the entire heap stable. If a thread cannot reach a safepoint for a long time, the whole JVM waits for it, which lengthens the overall GC pause.

Minor GC: collects the Young Generation and occurs when the Young Generation runs out of space.

Major GC: collects the Old Generation and is often accompanied by a Minor GC.

Full GC: collects the entire heap, both the Young and Old Generations, as well as the method area.

In most cases, objects are allocated in the Eden space of the Young Generation. When the Eden space is insufficient, Minor GC is initiated.

Large objects are objects that require a large amount of contiguous memory, such as long strings and large arrays. They are allocated directly in the Old Generation to avoid a large number of memory copies between the Eden space and the Survivor spaces.

JVM defines an age counter for each object. After the object is born in the Eden space, if it passes through a Minor GC, it will enter the Survivor space. At the same time, the age of the object becomes 1, and when it increases to a certain threshold (the threshold is 15 by default), it will enter the Old Generation.

To adapt better to the memory behavior of different programs, the JVM does not always require an object's age to reach the threshold before the object enters the Old Generation. If the total size of Survivor objects, accumulated from the youngest age up to some age N, is greater than half of the Survivor space, objects whose age is greater than or equal to N go directly into the Old Generation.
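The dynamic age rule can be written as a short loop. This sketch is a simplified model of HotSpot's behavior, assuming an age table with one entry per age 0 to 15, -XX:TargetSurvivorRatio (default 50, the "half" above), and -XX:MaxTenuringThreshold (default 15); the method and parameter names are illustrative.

```java
class TenuringThreshold {
    /**
     * Simplified model of the dynamic age check.
     * bytesByAge[age] = total size of Survivor objects of that age; length 16 covers ages 0..15.
     */
    static int computeThreshold(long[] bytesByAge, long survivorCapacity,
                                int targetSurvivorRatio, int maxTenuringThreshold) {
        long desired = survivorCapacity * targetSurvivorRatio / 100; // "half" when the ratio is 50
        long total = 0;
        int age = 1;
        while (age < bytesByAge.length) {
            total += bytesByAge[age]; // accumulate from the youngest age upward
            if (total > desired) {
                break;                // this age pushes occupancy past the target
            }
            age++;
        }
        // Objects whose age is >= the result are promoted to the Old Generation.
        return Math.min(age, maxTenuringThreshold);
    }

    public static void main(String[] args) {
        long[] bytesByAge = new long[16];
        bytesByAge[1] = 20;
        bytesByAge[2] = 15;
        bytesByAge[3] = 25;
        bytesByAge[4] = 10;
        System.out.println(computeThreshold(bytesByAge, 100, 50, 15)); // 3
    }
}
```

With ages 1 to 4 holding 20, 15, 25, and 10 units of a 100-unit Survivor space, the running total first exceeds 50 at age 3, so objects aged 3 or more are promoted even though the configured maximum is 15.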

Before a Minor GC, the JVM checks whether the largest contiguous free space in the Old Generation can hold all objects in the Young Generation. If it can, the Minor GC is guaranteed to be safe. If not, it checks whether that space can hold the average size of objects promoted by previous Minor GCs: if so, a Minor GC is attempted; otherwise, a Full GC is performed.
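The check can be summarized as a small decision function. This is a simplified model (names are illustrative) of the rule HotSpot has used since JDK 6 Update 24; even when a Minor GC is attempted, a promotion failure during it still falls back to a Full GC.

```java
class PromotionGuarantee {
    enum Decision { MINOR_GC, FULL_GC }

    /** Simplified model of the check made before a Minor GC (all sizes in bytes). */
    static Decision beforeMinorGc(long oldMaxContiguousFree, long youngUsed, long avgPromoted) {
        if (oldMaxContiguousFree >= youngUsed) {
            return Decision.MINOR_GC; // even if every young object survives, the Old Generation can take it
        }
        if (oldMaxContiguousFree >= avgPromoted) {
            return Decision.MINOR_GC; // a calculated risk based on past promotions
        }
        return Decision.FULL_GC;
    }

    public static void main(String[] args) {
        System.out.println(beforeMinorGc(100, 50, 10)); // MINOR_GC
        System.out.println(beforeMinorGc(30, 50, 20));  // MINOR_GC
        System.out.println(beforeMinorGc(10, 50, 20));  // FULL_GC
    }
}
```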

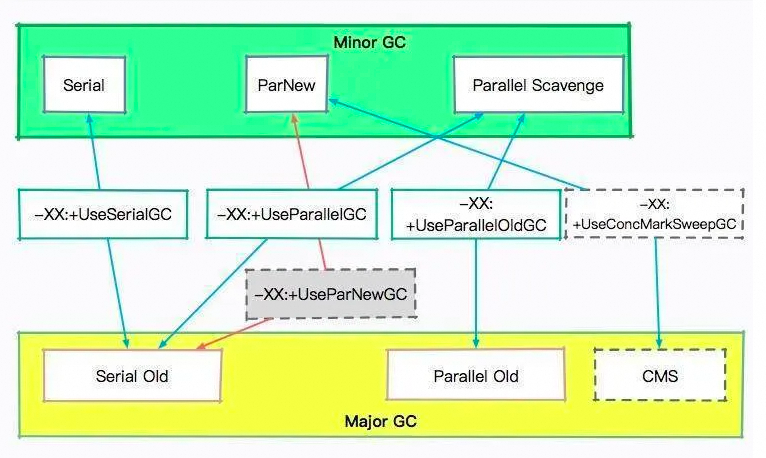

Serial is a single-threaded collector: it not only uses a single CPU or thread to do GC work, but must also stop all other working threads during GC until it finishes. It is suitable as the garbage collector for client-side applications.

ParNew garbage collector is actually a multi-threaded version of the Serial collector. It also uses a copying algorithm and behaves exactly the same as the Serial collector except for using multiple threads for GC. The ParNew garbage collector also stops all other working threads during GC.

The Parallel Scavenge collector focuses on throughput (efficient use of CPU). Garbage collectors such as CMS focus more on the pause time of user threads to improve the user experience. High throughput can make the most efficient use of CPU time, and complete computing tasks of the program as soon as possible. It is mainly suitable for background computing tasks without too many interactions.

Serial Old is the Old Generation version of the Serial collector. It is also a single-threaded collector and uses a mark-compact algorithm. There are two main uses:

• Used with the Parallel Scavenge collector (for the Young Generation) in JDK 5 and earlier, before Parallel Old was available.

• Used as the fallback for the CMS collector in the Old Generation when a Concurrent Mode Failure occurs.

Parallel Old is the Old Generation version of the Parallel Scavenge collector. It is a multi-threaded collector and uses a "mark-compact" algorithm.

CMS collector is a kind of Old-Generation garbage collector. The main goal is to obtain the shortest GC pause time. Different from other Old Generation collectors that use a mark-compact algorithm, it uses a multi-threaded mark-sweep algorithm. The shortest GC pause time can improve the user experience for highly interactive programs. The CMS working mechanism is more complex than that of other garbage collectors. The whole process is divided into the following four stages:

Initial mark: it just marks the objects that GC Roots can directly associate with, which is very fast, STW (Stop The World).

Concurrent mark: traces reference chains from the GC Roots and runs alongside user threads without stopping them.

Remark: it is to correct the marking record of those objects that have changed due to the continuous running of the user program during concurrent marking, STW.

Concurrent sweep: it sweeps the objects that are unreachable for GC Roots, which works with user threads without the need to stop working threads.

Because the GC threads run concurrently with the user threads during the most time-consuming phases, concurrent mark and concurrent sweep, CMS memory reclamation as a whole can be regarded as running concurrently with the user threads.

Advantages: concurrent collection and low pauses

Disadvantages: sensitive to CPU resources, unable to handle floating garbage, and its "mark-sweep" algorithm leaves a large amount of space fragmentation

G1 (Garbage-First) is a server-oriented garbage collector mainly for machines with multiple processors and large memory. It meets GC pause time targets with high probability while also delivering high throughput. Its two most prominent improvements over the CMS collector are:

[1] As a whole it is based on the mark-compact algorithm (and locally on copying between regions), so it does not produce memory fragments.

[2] Pause times are predictable: a target pause time can be specified, achieving low-pause GC without sacrificing throughput.

The G1 collector avoids collecting the entire heap at once. It divides the heap into multiple independent regions of a fixed size, tracks how much garbage each region contains, and maintains a priority list in the background. Within the allowed collection time, it reclaims first the regions with the most garbage (hence the name Garbage-First). Dividing the space into regions and collecting them by priority lets G1 achieve the highest possible GC efficiency within a limited time.

• Initial mark: a single thread marks the objects directly associated with GC Roots, STW.

• Concurrent mark: a marking thread runs concurrently with user threads and performs the reachability analysis. This phase takes a long time.

• Final mark: multiple marking threads run in parallel, STW.

• Filter and reclaim: multiple threads reclaim abandoned objects in parallel, STW.
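To confirm which collector a JVM is actually using, for example after starting it with -XX:+UseSerialGC, -XX:+UseParallelGC, or -XX:+UseG1GC, a program can list its GarbageCollectorMXBeans. A sketch; the bean names are JVM-specific (HotSpot's G1 reports names such as "G1 Young Generation" and "G1 Old Generation"), so the code only prints them.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

class ActiveCollectors {
    /** Names of the collectors this JVM is running, typically one for each generation. */
    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // Count and accumulated time (ms) since JVM start; -1 means the value is undefined.
            System.out.println(gc.getName() + ": " + gc.getCollectionCount() + " collections, "
                    + gc.getCollectionTime() + " ms");
        }
    }
}
```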

ZGC: colored pointers speed up the marking process.

Read (load) barriers: let the collector work concurrently with the application, avoiding the STW pauses this would otherwise require.

• Support for TB-level heap memory (up to 4 TB; up to 16 TB since JDK 13)

• GC pauses of no more than 10 ms

• Throughput reduction of no more than 15% compared with G1

• First, memory sizing: I set an upper limit for each memory area, such as Metaspace, to avoid overflow problems.

• I usually set the heap to 2/3 of the operating system's memory, and prefer G1 for heaps larger than 8 GB.

• Then I make preliminary JVM optimizations, such as adjusting the ratio between the Young Generation and the Old Generation according to how fast objects are promoted to the Old Generation.

• Next, I optimize based on system capacity, access latency, and throughput; our services are highly concurrent and sensitive to STW pauses.

• I find bottlenecks by recording detailed GC logs and analyzing them with tools such as GCeasy.

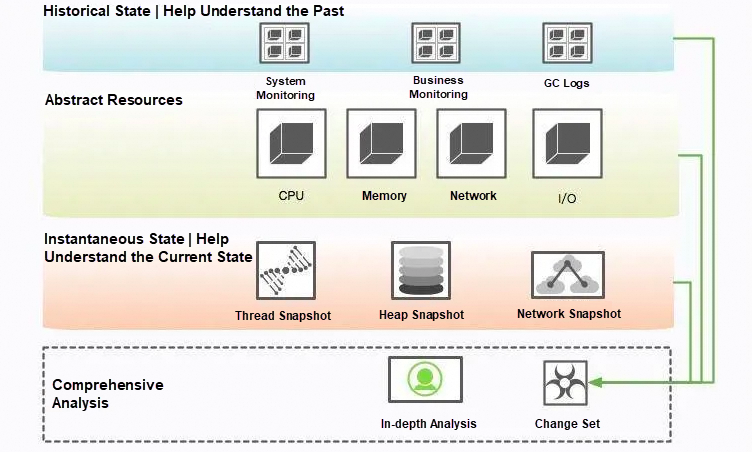

Use the JVM state of the process to locate and solve the problem, then make the corresponding optimizations.

Common commands: jps, jinfo, jstat, jstack, and jmap

jps: lists Java processes and related information

jps -l: outputs the full name of the main class or the path of the JAR file

jps -m: outputs the arguments passed to the main method

jps -v: outputs the JVM parameters

jinfo: views the JVM parameters

jinfo 11666

jinfo -flags 11666

Use it to check parameters such as Xmx, Xms, Xmn, and MetaspaceSize.

jstat: views the runtime state of the JVM, including memory state and GC

jstat [option] LVMID [interval] [count]

LVMID is the process ID, interval is the printing interval (in milliseconds), and count is the number of times to print (by default, printing continues indefinitely). Options:

• -gc: statistics on the behavior of the GC heap

• -gccapacity: capacity of each generation (Young, Old, and Perm or Metaspace) and of their corresponding spaces

• -gcutil: summary of GC statistics

• -gcnew: statistics on Young Generation behavior

• -gcold: statistics on Old Generation (and Metaspace) behavior

jstack: views JVM thread snapshots. It can be used to locate the causes of threads that stall for a long time, such as deadlocks and infinite loops.

jstack [-l] <pid> (connects to the running process). Options:

• -F: forcibly outputs the thread stacks when jstack <pid> does not respond

• -m: outputs both Java and native frames (mixed mode)

• -l: additionally displays lock information

jmap: views memory information (used with jhat)

jmap [option] <pid> (connects to the running process). Options:

• -heap: prints a summary of the Java heap

• -dump:<dump-options>: generates a Java heap dump file

JDK 8: supports lambda expressions and stream operations on collections, and improves HashMap performance.

JDK 9: adds a new overload of the iterate method in the Stream API that lets you specify when the iteration ends:

IntStream.iterate(1, i -> i < 100, i -> i + 1).forEach(System.out::println);

JDK 9 also makes G1 the default garbage collector.

JDK 10: improves G1's worst-case latency by making its Full GC parallel.

JDK 11: adds local-variable syntax (var) for lambda parameters, and introduces ZGC, an experimental concurrent collector supporting heaps of up to 4 TB.

JDK 12: introduces Shenandoah GC (experimental), which evacuates objects concurrently with application threads so that its pause times are independent of heap size.

JDK 13: ZGC can return unused heap memory to the operating system, and its maximum heap size grows to 16 TB.

JDK 14: removes the CMS garbage collector and deprecates the combination of the Parallel Scavenge and Serial Old collectors.

It also makes the ZGC garbage collector available on macOS and Windows.
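Among the JDK 11 changes, var for lambda parameters is easy to try; it mainly matters when the parameters need annotations, since the types are still inferred. A minimal example, requiring JDK 11 or later:

```java
import java.util.function.BiFunction;

class VarLambdaDemo {
    // JDK 11 (JEP 323): lambda parameters may be declared with var; their types are still inferred.
    static final BiFunction<Integer, Integer, Integer> ADD = (var a, var b) -> a + b;

    public static void main(String[] args) {
        System.out.println(ADD.apply(2, 3)); // 5
    }
}
```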

When an instance has a problem, decide according to the situation whether to restart it right away. If CPU or memory usage rises or an OOM exception appears in the log, follow these steps: isolation, preserving the scene, and troubleshooting.

Isolation: remove the machine from the request list, for example by setting its weight in nginx to zero.

Preserving the scene: record the CPU and system memory state. Historical state can reveal a trend, and collecting it generally relies on the monitoring system.

ss -antp > $DUMP_DIR/ss.dump 2>&1

We use ss instead of netstat because netstat runs very slowly when there are a large number of network connections.

Later, you can count connections by state to troubleshoot excessive TIME_WAIT, CLOSE_WAIT, or other connection states, which is very useful.

netstat -s > $DUMP_DIR/netstat-s.dump 2>&1

This outputs statistics for each protocol, which is very useful for understanding the state of the whole network at that moment.

sar -n DEV 1 2 > $DUMP_DIR/sar-traffic.dump 2>&1

In some very high-throughput modules, such as Redis and Kafka, the network interface cards are often saturated, which shows up as very slow network communication.

lsof -p $PID > $DUMP_DIR/lsof-$PID.dump

This shows, from the process's perspective, which files are open and how resources are used, including every network connection and every open file handle. You can also easily see which servers the process is connected to and which resources it uses. The command is slow when there are many resources, so please wait patiently.

mpstat > $DUMP_DIR/mpstat.dump 2>&1

vmstat 1 3 > $DUMP_DIR/vmstat.dump 2>&1

sar -P ALL > $DUMP_DIR/sar-cpu.dump 2>&1

uptime > $DUMP_DIR/uptime.dump 2>&1

These commands record the CPU usage and load of the system for later troubleshooting.

iostat -x > $DUMP_DIR/iostat.dump 2>&1

I/O resources are generally fine on compute-oriented service nodes, but problems sometimes occur, such as excessive log output or disk failures. This command outputs basic performance information for each disk to help troubleshoot I/O problems. The GC log disk problem introduced in Lesson 8 can also be found with this command.

free -h > $DUMP_DIR/free.dump 2>&1

free shows an overview of the operating system's memory, which is a very important point in troubleshooting. For example, swap usage affects GC, and SLAB space can take memory away from the JVM.

ps -ef > $DUMP_DIR/ps.dump 2>&1

dmesg > $DUMP_DIR/dmesg.dump 2>&1

sysctl -a > $DUMP_DIR/sysctl.dump 2>&1

dmesg often holds the last clue left by a service that died quietly. ps is the most frequently used of these commands. Because kernel configuration parameters can affect the system and the JVM, we also save a copy of them with sysctl -a.

${JDK_BIN}jinfo $PID > $DUMP_DIR/jinfo.dump 2>&1

This outputs basic information about the Java process, including system properties and parameter settings, so you can check whether a JVM problem is caused by an incorrect configuration.

${JDK_BIN}jstat -gcutil $PID > $DUMP_DIR/jstat-gcutil.dump 2>&1

${JDK_BIN}jstat -gccapacity $PID > $DUMP_DIR/jstat-gccapacity.dump 2>&1

jstat outputs the current GC information. Usually this is enough to find a clue; if not, use jmap for further analysis.

${JDK_BIN}jmap $PID > $DUMP_DIR/jmap.dump 2>&1

${JDK_BIN}jmap -heap $PID > $DUMP_DIR/jmap-heap.dump 2>&1

${JDK_BIN}jmap -histo $PID > $DUMP_DIR/jmap-histo.dump 2>&1

${JDK_BIN}jmap -dump:format=b,file=$DUMP_DIR/heap.bin $PID > /dev/null 2>&1

jmap obtains memory information about the current Java process. Of the four commands above, the fourth (the heap dump) is the most useful, but the first three give a preliminary overview of the system, because the file generated by the fourth is usually very large and must be downloaded and imported into a tool such as MAT for in-depth analysis. This is a necessary step when analyzing memory leaks.

${JDK_BIN}jstack $PID > $DUMP_DIR/jstack.dump 2>&1

jstack captures the thread stacks at that moment. It is usually run several times, but a single snapshot is taken here. This information is very useful for reconstructing what the threads in the Java process were doing.
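For comparison, a Java process can take a jstack-like snapshot of itself, including deadlock detection, through ThreadMXBean, which can help when attaching external tools is not possible. A minimal sketch (ProcessHandle requires JDK 9+); note that ThreadInfo.toString() truncates each stack trace, so this is not a full replacement for jstack.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

class InProcessThreadDump {
    /** A jstack-like snapshot of all live threads, including locked monitors and synchronizers. */
    static String dump() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        StringBuilder out = new StringBuilder("pid " + ProcessHandle.current().pid() + "\n");
        for (ThreadInfo info : threads.dumpAllThreads(true, true)) {
            out.append(info); // name, state, locks, and a truncated stack trace
        }
        return out.toString();
    }

    /** IDs of threads deadlocked on monitors or ownable synchronizers; empty if there are none. */
    static long[] deadlockedThreadIds() {
        long[] ids = ManagementFactory.getThreadMXBean().findDeadlockedThreads();
        return ids == null ? new long[0] : ids;
    }

    public static void main(String[] args) {
        System.out.println(dump());
        System.out.println("deadlocked threads: " + deadlockedThreadIds().length);
    }
}
```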

top -Hp $PID -b -n 1 -c > $DUMP_DIR/top-$PID.dump 2>&1

For more detail, use top to get the CPU usage of every thread in the process, so you can see where resources are being consumed.

kill -3 $PID

Sometimes jstack cannot run, for example because the Java process is almost unresponsive. In that case, send signal 3 (SIGQUIT) to the process with kill -3. The JVM then prints a jstack-style thread dump to its standard output, which is usually redirected to a log file, as an alternative to jstack.

gcore -o $DUMP_DIR/core $PID

When jmap cannot be executed, there is also a substitute: gcore from the GDB package generates a core file, from which a heap dump can be produced with the following command:

${JDK_BIN}jhsdb jmap --exe ${JDK}java --core $DUMP_DIR/core --binaryheap

A note on jmap: since JDK 9, some of its functionality (such as -heap) has moved to jhsdb, which can be used as follows:

jhsdb jmap --heap --pid 37340

jhsdb jmap --pid 37288

jhsdb jmap --histo --pid 37340

jhsdb jmap --binaryheap --pid 37340

Generally, memory overflow shows up as Old Generation occupancy that keeps increasing and does not drop significantly even after several rounds of GC. For example, with GC Roots in ThreadLocal, the root cause of the memory leak is that these objects are never cut off from the GC Roots; tools can show this connection.
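The ThreadLocal case usually arises with thread pools: a pooled thread lives a long time and is a GC Root, and its ThreadLocalMap keeps each value reachable until it is removed. A sketch of the usual remedy, calling remove() in a finally block (the BUFFER variable and the request handler are hypothetical):

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class ThreadLocalCleanupDemo {
    // Hypothetical per-request buffer stored in a ThreadLocal.
    private static final ThreadLocal<byte[]> BUFFER = new ThreadLocal<>();

    static int handleRequest(int size) {
        BUFFER.set(new byte[size]);
        try {
            return BUFFER.get().length; // stands in for real work with the buffer
        } finally {
            BUFFER.remove();            // without this, the pooled thread keeps the array reachable
        }
    }

    /** Runs one request on a pooled thread, then checks on that same thread that nothing was left behind. */
    static boolean leavesNothingBehind() {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            pool.submit(() -> handleRequest(1024)).get();
            return pool.submit(() -> BUFFER.get() == null).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(leavesNothingBehind()); // true
    }
}
```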

A reporting system frequently ran out of memory, and requests were frequently rejected during peak usage. Because most of its users were administrators, the problems were quickly reported to the R&D team.

In this business scenario, some result sets lack certain fields, so the code loops over the result sets and fills in the data by calling other services' interfaces through HttpClient. Guava is used for caching within the JVM, but response times were still very long.

Initial troubleshooting showed that the JVM had too few resources. Each report calculation by interface A involves hundreds of megabytes of memory that stays resident for a long time, and some calculations also demand a lot of CPU and other resources. However, only 3 GB of memory was allocated to the JVM, so when many people accessed these interfaces, memory ran out and OOM occurred. In this case, the only option was to upgrade the machine. Upgrading to 4 cores and 8 GB (4C8G) and allocating 6 GB to the JVM solved the OOM problem, but it was followed by frequent GCs with extremely long durations, averaging more than 5 seconds.

Further, unlike a high-concurrency system, a reporting system keeps its objects alive much longer, so the problem cannot be solved just by enlarging the Young Generation. Moreover, enlarging the Young Generation shrinks the Old Generation, and because of CMS fragmentation and floating garbage, even less space would be available. Although the service could meet the current demand, some uncertain risks remained.

First, we found that the program holds a lot of cached data and static statistics. After analyzing the object age distribution printed in the GC logs, we set MaxTenuringThreshold to 3 (a special configuration for this special scenario) to reduce the number of Minor GCs. This makes these objects move to the Old Generation quickly instead of staying in the Young Generation for a long time.
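Whether such flags actually took effect can be checked with jinfo -flag <name> <pid>, or from inside the process. The sketch below uses HotSpotDiagnosticMXBean, which is HotSpot-specific; flags such as CMSScavengeBeforeRemark exist only on JDKs that still include CMS (up to JDK 13), so querying them on newer JDKs throws IllegalArgumentException.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import com.sun.management.VMOption;
import java.lang.management.ManagementFactory;

class VmFlagReader {
    private static final HotSpotDiagnosticMXBean HOTSPOT =
            ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);

    /** Current value of a HotSpot flag; throws IllegalArgumentException if this JVM has no such flag. */
    static String value(String flag) {
        return HOTSPOT.getVMOption(flag).getValue();
    }

    /** Where the value came from, e.g. DEFAULT, VM_CREATION (command line), or ERGONOMIC. */
    static VMOption.Origin origin(String flag) {
        return HOTSPOT.getVMOption(flag).getOrigin();
    }

    public static void main(String[] args) {
        for (String flag : new String[] {"MaxTenuringThreshold", "ParallelRefProcEnabled", "MaxGCPauseMillis"}) {
            System.out.println(flag + " = " + value(flag) + " (" + origin(flag) + ")");
        }
    }
}
```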

Second, because our GC pauses were long, we also enabled CMSScavengeBeforeRemark, which runs a Minor GC to clean up the Young Generation before the CMS remark phase. Combined with the previous parameter, this works fairly well. On the one hand, objects are promoted to the Old Generation quickly. On the other hand, few objects remain in the Young Generation, so the remark phase, which has to scan the Young Generation, takes only a small share of the whole Major GC.

Third, the cache creates a large number of weak references. In one 10-second GC, for example, the GC log showed that 4.5 seconds were spent processing weak refs. Adding the ParallelRefProcEnabled parameter makes the JVM process references in parallel, which speeds up this phase and shortens the pause.
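These flags only help if the JVM actually picked them up, so it can be useful to check their effective values at runtime. The sketch below assumes a HotSpot-based JDK 9 or later and uses the `com.sun.management.HotSpotDiagnosticMXBean` API. The class name `GcFlagReport` is hypothetical. Flags such as `CMSScavengeBeforeRemark` exist only on JDKs that still include CMS, so the code reports unknown flags instead of failing.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import com.sun.management.VMOption;
import java.lang.management.ManagementFactory;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Prints the effective values of the GC flags discussed above (HotSpot JVMs only). */
final class GcFlagReport {

    // Flags from the tuning steps. CMSScavengeBeforeRemark only exists on JDKs that
    // still ship CMS, so it may be reported as unsupported.
    static final List<String> FLAGS = List.of(
            "MaxTenuringThreshold", "CMSScavengeBeforeRemark", "ParallelRefProcEnabled");

    /** Returns flag name -> "value (origin)", or "not supported by this JVM". */
    static Map<String, String> readFlags(List<String> names) {
        HotSpotDiagnosticMXBean hotspot =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        Map<String, String> result = new LinkedHashMap<>();
        for (String name : names) {
            try {
                VMOption option = hotspot.getVMOption(name);
                // Origin tells whether the value came from the command line, ergonomics, etc.
                result.put(name, option.getValue() + " (" + option.getOrigin() + ")");
            } catch (IllegalArgumentException unknownFlag) {
                result.put(name, "not supported by this JVM");
            }
        }
        return result;
    }

    public static void main(String[] args) {
        readFlags(FLAGS).forEach((name, value) -> System.out.println(name + " = " + value));
    }
}
```

For example, starting the application with `-XX:MaxTenuringThreshold=3 -XX:+ParallelRefProcEnabled` should report those values with the origin `VM_CREATION`.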

These optimizations helped, but the improvement was not dramatic. After evaluating the situation during peak periods, we decided to upgrade the hardware again by switching to an 8-core, 16 GB machine. However, this introduced another problem.

A more powerful machine brings higher service throughput. jstat monitoring showed that the allocation rate of the Young Generation rose significantly, and Minor GC duration became unpredictable, sometimes exceeding 1 second. Requests piling up during these pauses made the consequences even more serious.

The cause was the significantly larger heap, which takes longer to collect. To get shorter pauses, we switched to the G1 garbage collector and set its pause-time target to 200 ms. G1 is a very good garbage collector: it suits applications with large heaps and simplifies tuning. With just three main parameters, the initial heap size, the maximum heap size, and the maximum tolerated GC pause time (-Xms, -Xmx, and -XX:MaxGCPauseMillis), you can get relatively good performance. After the change, GC runs more often, but pauses are short and the application runs smoothly.
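To see whether a collector stays near a target such as the 200 ms set through `-XX:MaxGCPauseMillis=200`, the standard `GarbageCollectorMXBean` API exposes per-collector counts and accumulated times. This is only a rough sketch: `getCollectionTime()` reports accumulated elapsed collection time, which is not strictly the same as stop-the-world pause time (depending on the collector and JDK version it may include concurrent work), and an average hides outliers. `GcPauseCheck` is a hypothetical name, and collector names such as `G1 Young Generation` vary by JVM.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.LinkedHashMap;
import java.util.Map;

/** Approximates the average time per collection for each collector in this JVM. */
final class GcPauseCheck {

    // Mirrors the 200 ms target set with -XX:MaxGCPauseMillis=200 in the text.
    static final double TARGET_MILLIS = 200.0;

    /** Collector name -> average ms per collection; collectors that never ran are skipped. */
    static Map<String, Double> averageMillisPerCollection() {
        Map<String, Double> averages = new LinkedHashMap<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long count = gc.getCollectionCount();     // -1 if undefined
            long timeMillis = gc.getCollectionTime(); // accumulated elapsed time, -1 if undefined
            if (count > 0 && timeMillis >= 0) {
                averages.put(gc.getName(), (double) timeMillis / count);
            }
        }
        return averages;
    }

    public static void main(String[] args) {
        averageMillisPerCollection().forEach((name, avg) -> System.out.printf(
                "%-25s avg %.1f ms %s%n", name, avg, avg > TARGET_MILLIS ? "(over target)" : ""));
    }
}
```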

So far, the system only barely handled the existing workload. Then leadership required the report system to support 10 to 100 times business growth over the next two years while remaining available. Yet the system went down under even light stress testing, so how could it cope with 10 to 100 times the load? Even if the hardware can be scaled dynamically, there is always a limit.

Analyzing heap snapshots with MAT revealed many places where the code itself could be optimized. For those objects that take up a lot of memory:

Each step makes better use of the JVM. After this series of optimizations, the machine's performance under the same stress-test load improved several times over.

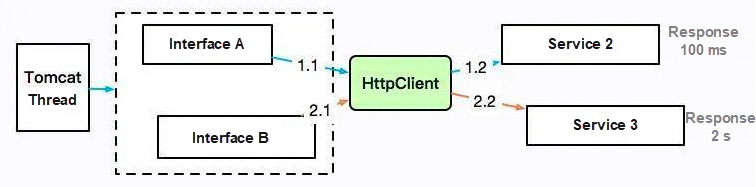

Some data has to be fetched and filled in through HttpClient. The service that provides this data may respond very slowly, which can block the whole service.

Interface A accesses service 2 through HttpClient and gets a response after 100 ms. Interface B accesses service 3, which takes 2 seconds. HttpClient itself limits the maximum number of connections, so if service 3 does not return, HttpClient's connections will all be used up. In short, a single slow interface can make the whole service unavailable.

If you print the thread stacks with jstack at this point, you will find that most threads are blocked on interface A rather than on the slower interface B. This is very confusing at first, but after analysis we concluded that because interface A is faster, more of its requests had come in when the problem occurred, and they were all blocked and printed at the same time.
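The starvation effect described above can be reproduced without any network calls. In this simulation, which models the mechanism rather than HttpClient itself, a `Semaphore` stands in for HttpClient's maximum-connections limit, slow calls hold their connections until the hung downstream service "responds", and fast calls give up after 50 ms. The class and method names are hypothetical, and `maxConnections` is assumed to be at least 1.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Fast and slow calls sharing one "connection pool" (a Semaphore, as an assumption). */
final class SharedPoolStarvationDemo {

    /** Returns how many fast calls could not get a connection within 50 ms. */
    static int starvedFastCalls(int maxConnections, int slowCalls, int fastCalls) {
        Semaphore connections = new Semaphore(maxConnections);
        CountDownLatch slowCallsHolding = new CountDownLatch(Math.min(slowCalls, maxConnections));
        CountDownLatch downstreamResponds = new CountDownLatch(1); // the slow service finally answers
        ExecutorService workers = Executors.newFixedThreadPool(slowCalls + fastCalls);
        AtomicInteger starved = new AtomicInteger();
        try {
            for (int i = 0; i < slowCalls; i++) {
                workers.execute(() -> {
                    try {
                        connections.acquire();          // the slow call takes a connection...
                        try {
                            slowCallsHolding.countDown();
                            downstreamResponds.await(); // ...and holds it while the service hangs
                        } finally {
                            connections.release();
                        }
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            slowCallsHolding.await(); // make sure slow calls grabbed their connections first
            List<Callable<Void>> fast = new ArrayList<>();
            for (int i = 0; i < fastCalls; i++) {
                fast.add(() -> {
                    if (connections.tryAcquire(50, TimeUnit.MILLISECONDS)) {
                        connections.release();     // the fast service answers immediately
                    } else {
                        starved.incrementAndGet(); // blocked even though its own service is healthy
                    }
                    return null;
                });
            }
            workers.invokeAll(fast); // waits until every fast call has finished
            return starved.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            downstreamResponds.countDown();
            workers.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("starved fast calls: " + starvedFastCalls(2, 2, 10));
    }
}
```

With both connections held by slow calls, every fast call in `starvedFastCalls(2, 2, 5)` fails to get a connection, which matches the jstack picture of mostly fast requests being stuck.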

To verify this guess, I built a demo project with two interfaces that share the same HttpClient. The fast interface accesses Baidu and returns quickly. The slow interface accesses Google, which, for well-known reasons, blocks until it times out after about 10 s. Use ab (ApacheBench) to stress test both interfaces, then dump the thread stacks with jstack: first find the process ID with the jps command, then redirect the output to a file (see the 10271.jstack file).

Filtering on the nio keyword shows the Tomcat worker threads. There are 200 of them, which matches the default maxThreads value of the Tomcat embedded in Spring Boot. Most of these threads are in the BLOCKED state, meaning they are stuck waiting for resources. Counting with grep fast | wc -l shows that 150 of the 200 threads are blocked in the fast interface.
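The grep fast | wc -l step can also be done in Java, which is handy when the check needs to be scripted. This minimal sketch assumes the usual jstack text layout (thread entries separated by blank lines, each with a `java.lang.Thread.State:` line) and counts entries that are in a given state and contain a keyword anywhere in the thread name or stack. `JstackStateCounter` is a hypothetical name.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;

/** Counts jstack thread entries by state and keyword, like `grep fast | wc -l`. */
final class JstackStateCounter {

    /**
     * jstack separates thread entries with blank lines. Each entry has a quoted header
     * line, a "java.lang.Thread.State: ..." line, and the stack frames.
     */
    static long count(String jstackOutput, String state, String keyword) {
        return Arrays.stream(jstackOutput.split("\\R\\s*\\R"))     // one element per entry
                .filter(entry -> entry.contains("java.lang.Thread.State: " + state))
                .filter(entry -> entry.contains(keyword))           // thread name or any frame
                .count();
    }

    public static void main(String[] args) throws IOException {
        // e.g. java JstackStateCounter.java 10271.jstack BLOCKED fast
        String dump = Files.readString(Path.of(args[0]));
        System.out.println(count(dump, args[1], args[2]));
    }
}
```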

Once the cause is found, it can be addressed in several ways.

(1) Competition between fast and slow calls for connection resources can be resolved with thread pool throttling or circuit breaking.

(2) Slow calls are not always slow, so monitoring must be added to catch them when they are.

(3) You can control the execution order of threads by using CountDownLatch.
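One way to apply fix (1) is thread pool isolation, often called the bulkhead pattern: each downstream service gets its own small, bounded thread pool, and calls are rejected immediately when that pool is saturated. The sketch below uses only `java.util.concurrent`. The pool sizes and the `PerServiceBulkhead` class are illustrative assumptions, not the configuration used in the case above, and a `CountDownLatch` (as in point (3)) simulates a service that hangs until released.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Bulkhead sketch: a hanging service can exhaust only its own small pool. */
final class PerServiceBulkhead {

    static ThreadPoolExecutor boundedPool(int threads, int queueCapacity) {
        return new ThreadPoolExecutor(threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueCapacity),
                new ThreadPoolExecutor.AbortPolicy()); // reject instead of piling up requests
    }

    /** Submits a call; returns false when the service's pool is saturated (fail fast). */
    static boolean trySubmit(ExecutorService pool, Runnable call) {
        try {
            pool.execute(call);
            return true;
        } catch (RejectedExecutionException saturated) {
            return false; // a real service would return a fallback or trip a circuit breaker
        }
    }

    public static void main(String[] args) {
        ThreadPoolExecutor slowServicePool = boundedPool(1, 1);
        ThreadPoolExecutor fastServicePool = boundedPool(4, 16);
        CountDownLatch slowServiceHangs = new CountDownLatch(1);
        Runnable hangingCall = () -> {
            try {
                slowServiceHangs.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        System.out.println(trySubmit(slowServicePool, hangingCall)); // true: running
        System.out.println(trySubmit(slowServicePool, hangingCall)); // true: queued
        System.out.println(trySubmit(slowServicePool, hangingCall)); // false: rejected
        System.out.println(trySubmit(fastServicePool, () -> { }));   // true: unaffected
        slowServiceHangs.countDown();
        slowServicePool.shutdown();
        fastServicePool.shutdown();
    }
}
```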

One instance of a service stuttered frequently. Because the service handled fairly high concurrency, every extra second of pause delayed tens of thousands of user requests.

We compared its CPU, memory, network, and I/O usage with the other instances of the same service. The differences were small, so at one point we suspected a hardware problem with the machine.

Next, we compared GC logs and found that both Minor GC and Major GC took much longer on this node than on the other instances.

Looking more closely, we found that whenever GC occurred, the si and so (swap-in and swap-out) columns of vmstat spiked sharply, which did not happen on the other instances.

The free command confirmed that a very high proportion of the SWAP partition was in use. But what was causing it?

For a more detailed view of operating system memory, the /proc/meminfo file shows the size of each logical memory area, and up to 40 memory metrics can be obtained by reading files in the /proc directory. The slabtop command showed an anomaly: usage of dentry (the directory entry cache) was very high.
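The swap and slab figures used in this investigation come from /proc/meminfo, which is plain text in the form `Name:   value kB`. As a Linux-only sketch (the class name `SwapUsage` is hypothetical), the parser below reads that file and derives used swap as SwapTotal minus SwapFree, the same quantity free shows in its Swap row, along with the Slab total.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.HashMap;
import java.util.Map;

/** Reads swap and slab usage from /proc/meminfo (Linux only). */
final class SwapUsage {

    /** Parses lines such as "SwapTotal:  2097148 kB" into name -> value (kB for most fields). */
    static Map<String, Long> parseMeminfo(String meminfo) {
        Map<String, Long> values = new HashMap<>();
        for (String line : meminfo.split("\\R")) {
            String[] parts = line.trim().split("\\s+");
            if (parts.length >= 2 && parts[0].endsWith(":")) {
                try {
                    String name = parts[0].substring(0, parts[0].length() - 1);
                    values.put(name, Long.parseLong(parts[1]));
                } catch (NumberFormatException ignored) {
                    // skip lines whose value is not a number
                }
            }
        }
        return values;
    }

    /** Swap in use, in kB: SwapTotal - SwapFree. */
    static long swapUsedKb(Map<String, Long> meminfo) {
        return meminfo.getOrDefault("SwapTotal", 0L) - meminfo.getOrDefault("SwapFree", 0L);
    }

    public static void main(String[] args) throws IOException {
        Map<String, Long> meminfo = parseMeminfo(Files.readString(Path.of("/proc/meminfo")));
        System.out.printf("swap used: %d kB, slab: %d kB%n",
                swapUsedKb(meminfo), meminfo.getOrDefault("Slab", 0L));
    }
}
```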

The problem was finally traced to a command that an O&M engineer ran regularly when deleting logs:

```shell
find / | grep "xxx.log"
```

He wanted to find the xxx.log file to be deleted and see which server it was on. However, these old servers had a huge number of files, and scanning all of them filled the slab area with cached file information. Because SWAP was enabled on the server, when physical memory filled up the operating system did not release this cache right away and swapped out process memory instead, so every GC had to read data back from the hard disk.

The solution was to disable the SWAP partition.

Swap is a common cause of performance problems in many scenarios, so disabling it is recommended. Under high concurrency, SWAP has a significant drawback: the process keeps running, but GC pauses become intolerably long.

I once encountered an issue in production. After restarting the service, I ran the jstat command and noticed that the Old Generation kept growing. I exported a heap dump with the jmap command and analyzed it with MAT. Tracing the GC Roots revealed a very large HashMap that a colleague had originally intended as a cache. Unfortunately, it was an unbounded cache with no timeout or LRU policy. Furthermore, my colleague had not overridden the hashCode and equals methods of the key class, so entries could never be looked up again, and heap usage kept increasing. Switching the cache to Guava's Cache configured with weak references finally resolved the issue.
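Besides jmap, a heap dump can be triggered from inside the application through HotSpot's `HotSpotDiagnosticMXBean.dumpHeap(String, boolean)` method, which is useful when shell access to the machine is limited. This sketch assumes a HotSpot JDK 11 or later, and `HeapDumper` is a hypothetical name. The target file must not already exist, and recent JDKs also require the `.hprof` suffix. The resulting file can be opened in MAT just like a jmap dump.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Path;

/** Writes a heap dump from inside the JVM, similar to `jmap -dump:live,format=b,file=...`. */
final class HeapDumper {

    /**
     * @param file     target path; must not exist yet and (on recent JDKs) must end in ".hprof"
     * @param liveOnly true to dump only objects that are still reachable
     */
    static void dump(Path file, boolean liveOnly) {
        HotSpotDiagnosticMXBean hotspot =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        try {
            hotspot.dumpHeap(file.toString(), liveOnly);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        dump(Path.of(args.length > 0 ? args[0] : "heap.hprof"), true);
    }
}
```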

In another case, a file-processing application did not call the close method in a finally block after reading or writing files, so whenever an exception occurred, the file handle leaked. Because files were processed frequently, this led to a serious memory leak.

A memory overflow is a result, and a memory leak is one of its causes. Memory overflows can also be caused by insufficient memory or misconfiguration. A memory leak comes from programming mistakes: objects that are no longer used stay connected to GC Roots, so they can never be reclaimed.

For example, a team uses a HashMap as a cache without any timeout or LRU policy, so more and more data accumulates in the map, resulting in a memory leak.
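A minimal way to bound such a cache using only the JDK is a `LinkedHashMap` in access order with `removeEldestEntry` overridden, which gives LRU eviction. This sketch (the `LruCache` class is hypothetical) only bounds the size: it has no expiry and is not thread-safe, so production code would more likely use a cache library such as Guava's `CacheBuilder` with `maximumSize` and `expireAfterWrite`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** A bounded cache that evicts the least-recently-used entry once it is full. */
final class LruCache<K, V> extends LinkedHashMap<K, V> {

    private final int maxEntries;

    LruCache(int maxEntries) {
        super(16, 0.75f, true); // accessOrder = true: get() moves an entry to the tail
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxEntries; // checked after each put; drops the head (LRU) entry
    }

    public static void main(String[] args) {
        LruCache<String, Integer> cache = new LruCache<>(2);
        cache.put("a", 1);
        cache.put("b", 2);
        cache.get("a");                       // "a" becomes the most recently used
        cache.put("c", 3);                    // evicts "b", the least recently used
        System.out.println(cache.keySet());   // prints [a, c]
    }
}
```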

Here is another common memory leak, also involving HashMap. Because the Key class does not override the hashCode and equals methods, an entry put into the HashMap can never be retrieved with a new key, even one with the same title; the entries are lost to the rest of the program while the map still holds them. The following code therefore prints null:

```java
// Leak example: Key does not override equals() or hashCode()
import java.util.HashMap;
import java.util.Map;

public class HashMapLeakDemo {
    public static class Key {
        String title;

        public Key(String title) {
            this.title = title;
        }
        // No equals()/hashCode(): HashMap falls back to object identity.
    }

    public static void main(String[] args) {
        Map<Key, Integer> map = new HashMap<>();
        map.put(new Key("1"), 1);
        map.put(new Key("2"), 2);
        map.put(new Key("3"), 2);
        // new Key("2") is a different object, so the lookup misses.
        Integer integer = map.get(new Key("2"));
        System.out.println(integer); // prints null
    }
}
```

Even when equals and hashCode are provided, be very careful, and avoid using custom objects as keys where possible.
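For comparison, here is a sketch of a Key class that overrides both methods, so a new `Key` with the same title finds the existing entry, and putting it again replaces the value instead of adding another entry. The class name `HashMapFixedDemo` is hypothetical, and the `title` field is made `final` because a key whose hash changes after insertion cannot be found again either.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

final class HashMapFixedDemo {

    /** Immutable key with value-based equality, so keys with equal titles match. */
    static final class Key {
        private final String title;

        Key(String title) {
            this.title = title;
        }

        @Override
        public boolean equals(Object o) {
            if (this == o) return true;
            if (!(o instanceof Key)) return false;
            return Objects.equals(title, ((Key) o).title);
        }

        @Override
        public int hashCode() {
            return Objects.hashCode(title); // must agree with equals()
        }
    }

    public static void main(String[] args) {
        Map<Key, Integer> map = new HashMap<>();
        map.put(new Key("1"), 1);
        map.put(new Key("2"), 2);
        map.put(new Key("3"), 2);
        System.out.println(map.get(new Key("2"))); // prints 2
        map.put(new Key("2"), 20);                 // replaces the entry instead of adding one
        System.out.println(map.size());            // prints 3
    }
}
```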

Another example is the file-processing application: after reading or writing some files, the close method is not placed in a finally block, so whenever an exception occurs, the file handle leaks. Because files are processed frequently, this causes a serious memory leak.
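Since Java 7, the usual way to guarantee that a file handle is released is try-with-resources, which closes the resource whether the block completes normally or throws, which is exactly what the missing finally block should have done. A minimal sketch with a hypothetical `SafeFileReader.countLines` helper:

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

/** Reads a file with try-with-resources so the handle is closed even on exceptions. */
final class SafeFileReader {

    static long countLines(Path file) {
        // The reader is closed automatically when the block exits, normally or via an
        // exception, which is equivalent to calling close() in a finally block.
        try (BufferedReader reader = Files.newBufferedReader(file)) {
            long lines = 0;
            while (reader.readLine() != null) {
                lines++;
            }
            return lines;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```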

In one online application, CPU usage on a single node soared after it had been running for a while. When CPU usage soars, the usual suspects are business logic doing too much computation or an infinite loop (such as the famous infinite loop caused by concurrent access to a HashMap). In the end, however, it turned out to be a GC problem.

(1) Use the top command to find the process that uses the most CPU and record its pid. Press SHIFT + P to sort by CPU usage.

```shell
top
```

(2) Run top again with the -H parameter to view the threads of that process, and record the ID of the thread that uses the most CPU.

```shell
top -Hp $pid
```

(3) Use printf to convert the decimal tid to hexadecimal.

```shell
printf %x $tid
```

(4) Use the jstack command to dump the thread stacks of the Java process.

```shell
jstack $pid > $pid.log
```

(5) Use less to open the generated file and search for the hexadecimal tid you just converted to find the context of the problem thread.

```shell
less $pid.log
```

We searched the jstack log for the keyword DEAD and found the IDs of several threads with the highest CPU usage.
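Steps (3) and (5) can be combined in a small Java helper: convert the decimal tid reported by top -Hp to hexadecimal and look for the matching `nid=0x...` in the jstack output. This assumes the format of JDK 8-era HotSpot jstack, which prints the native thread ID in hexadecimal as `nid=0x...`; newer JDK releases may print it differently, so check your own dump first. `NativeThreadLookup` is a hypothetical name.

```java
/** Java equivalent of `printf %x $tid` followed by searching the jstack file. */
final class NativeThreadLookup {

    /** jstack prints the OS thread ID as "nid=0x<hex>" in each thread header line. */
    static String nidOf(long tid) {
        return "nid=0x" + Long.toHexString(tid);
    }

    /** Returns the header line of the thread with the given OS thread ID, or null. */
    static String findHeader(String jstackOutput, long tid) {
        String nid = nidOf(tid);
        for (String line : jstackOutput.split("\\R")) {
            // Header lines start with the quoted thread name. Matching "nid=0x2b03 " (or the
            // end of the line) avoids also matching a longer ID such as nid=0x2b031.
            if (line.startsWith("\"") && (line.contains(nid + " ") || line.endsWith(nid))) {
                return line;
            }
        }
        return null;
    }
}
```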

The root cause turned out to be a full heap that had not yet triggered OOM. The GC therefore kept trying to reclaim memory but freed very little each time, which drove CPU usage up and made the application appear unresponsive. To find out what was filling the heap, we needed to dump the memory and analyze it with tools such as MAT.
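A heap that stays nearly full right after each collection is the signature of this situation, and it can be watched from inside the JVM through `MemoryPoolMXBean.getCollectionUsage()`, which reports each pool's usage after the most recent GC. The sketch below (hypothetical class `PostGcOccupancy`) prints that occupancy for each heap pool. Pool names depend on the collector, pools whose maximum is undefined are skipped, and any alerting threshold would be an assumption to tune per application.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;
import java.util.LinkedHashMap;
import java.util.Map;

/** Reports how full each heap pool still is right after its most recent GC. */
final class PostGcOccupancy {

    /** Pool name -> used/max after the last GC, for heap pools with a defined maximum. */
    static Map<String, Double> occupancyAfterLastGc() {
        Map<String, Double> ratios = new LinkedHashMap<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() != MemoryType.HEAP) continue;
            MemoryUsage afterGc = pool.getCollectionUsage();        // null if not supported
            if (afterGc == null || afterGc.getMax() <= 0) continue; // max is -1 when undefined
            ratios.put(pool.getName(), (double) afterGc.getUsed() / afterGc.getMax());
        }
        return ratios;
    }

    public static void main(String[] args) {
        // A pool that stays close to 100% after every GC means GC runs but reclaims little.
        occupancyAfterLastGc().forEach((pool, ratio) ->
                System.out.printf("%-20s %.0f%% used after last GC%n", pool, ratio * 100));
    }
}
```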

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
