By Taosu

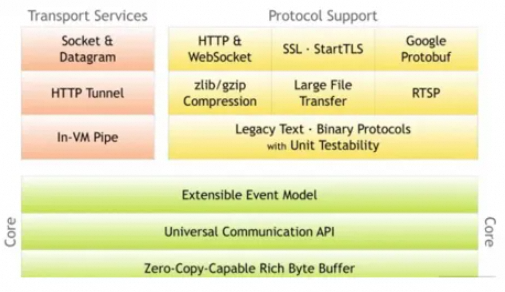

The core layer is the essence of Netty. It provides the generic abstraction and implementation of underlying network communication, including an extensible event model, a universal communication API, and a rich byte buffer that supports zero copy.

The protocol support layer basically covers the codec implementation of mainstream protocols, such as HTTP, Protobuf, WebSocket, and binary. In addition, Netty also supports custom application layer protocols. Netty's extensive protocol support reduces user development costs. Based on Netty, we can quickly develop services such as HTTP and WebSocket.

The transport services layer defines and implements network transport capabilities. It supports Socket, HTTP tunnel, and In-VM pipe. Netty abstracts and encapsulates data transmission services such as TCP and UDP, so users can focus on business logic instead of the details of underlying data transmission.

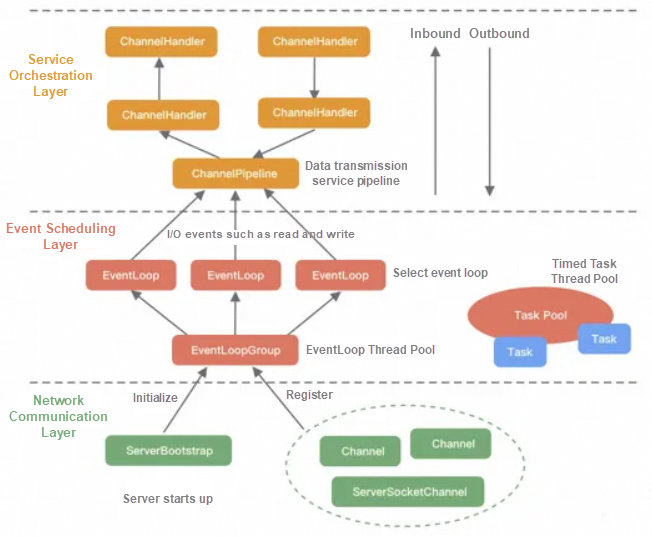

The network communication layer performs network I/O operations. It supports multiple network protocols and I/O models for connection operations. After network data is read into the kernel buffer, various network events are triggered. These network events are distributed to the event scheduling layer for processing.

The core components of the network communication layer include Bootstrap, ServerBootstrap, and Channel.

Bootstrap is responsible for starting and initializing a Netty client program and connecting it to the server. It ties together the other core components of Netty.

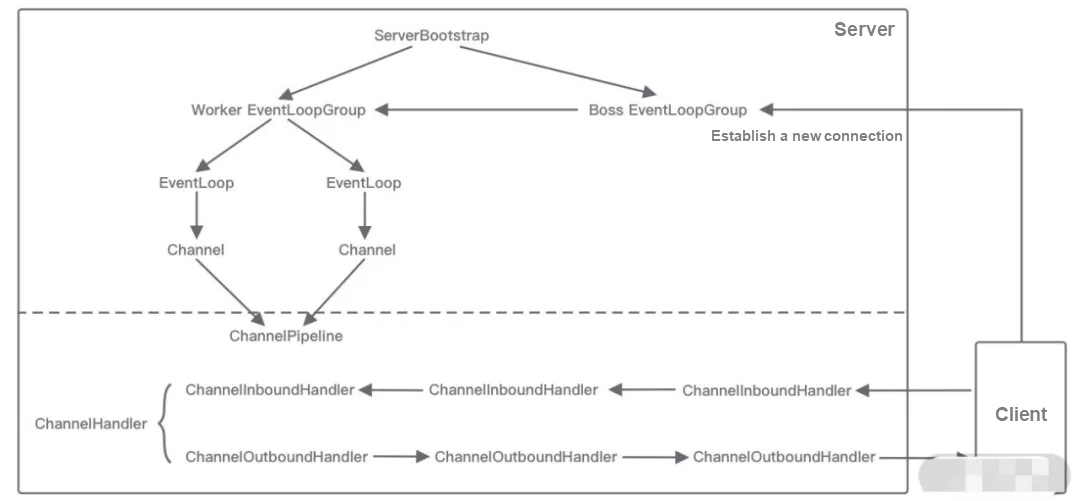

ServerBootstrap is used to start a server and bind it to a local port. It is usually configured with two EventLoopGroups, Boss and Worker.

Netty's Channel provides a higher-level abstraction on top of NIO, with operations such as register, bind, connect, read, write, and flush.

The event scheduling layer is responsible for aggregating various types of events through the Reactor thread model and integrating various events (such as I/O events, signal events, and scheduled events) through the Selector main loop thread. The actual business processing logic is completed by the relevant handlers in the service orchestration layer.

The core components of the event scheduling layer include EventLoopGroup and EventLoop.

EventLoop handles all I/O events within the channel lifecycle, such as accept, connect, read, and write events.

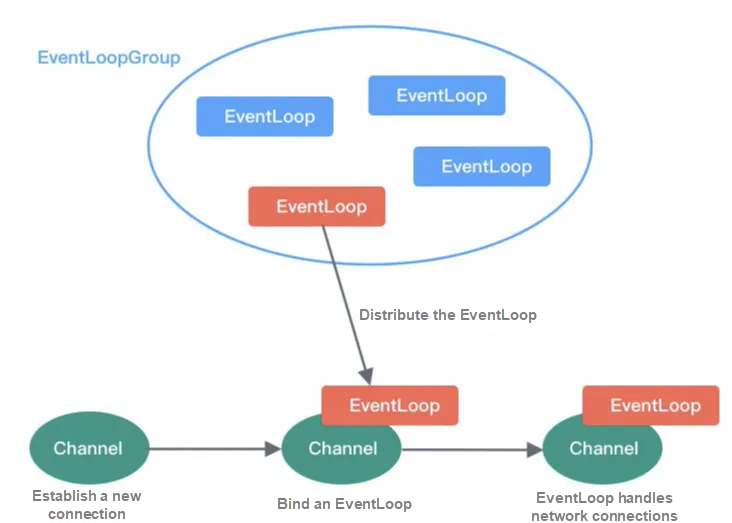

① An EventLoopGroup contains one or more EventLoops.

② An EventLoop is bound to a single thread at any given time, and each EventLoop can handle multiple channels.

③ A channel can be bound to and unbound from EventLoops multiple times within its lifecycle.

EventLoopGroup is the core processing engine of Netty. It is essentially a thread pool that receives I/O requests and allocates threads to process them. Depending on how the EventLoopGroups are configured, Netty supports all three Reactor thread models:

Single-thread model: The EventLoopGroup contains only one EventLoop. Boss and Worker use the same EventLoopGroup.

Multi-thread model: The EventLoopGroup contains multiple EventLoops. Boss and Worker use the same EventLoopGroup.

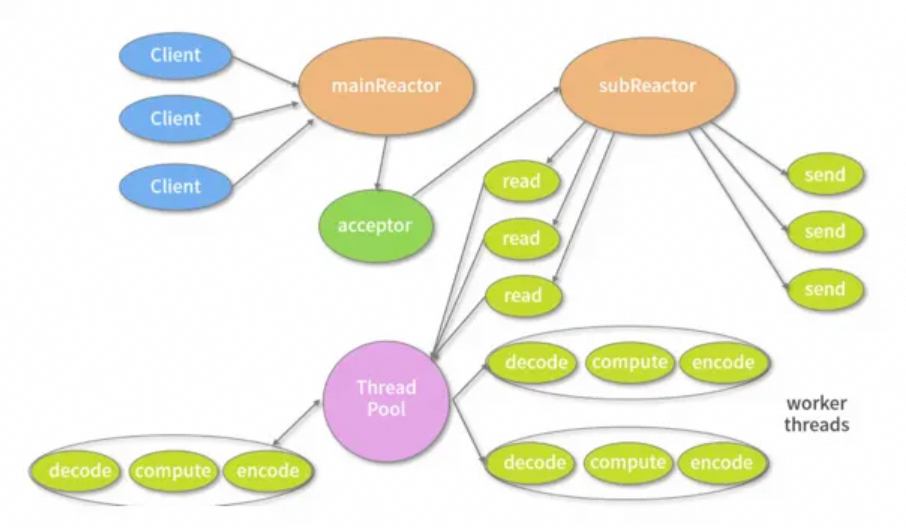

Primary-secondary multi-thread model: each EventLoopGroup contains multiple EventLoops. The Boss is the main Reactor and the Worker is the sub Reactor, and they use different EventLoopGroups. The main Reactor accepts new connections, creates a channel for each one, and registers that channel with the sub Reactor.

The service orchestration layer is responsible for assembling various services. It is the core processing chain of Netty to implement dynamic orchestration and orderly propagation of network events.

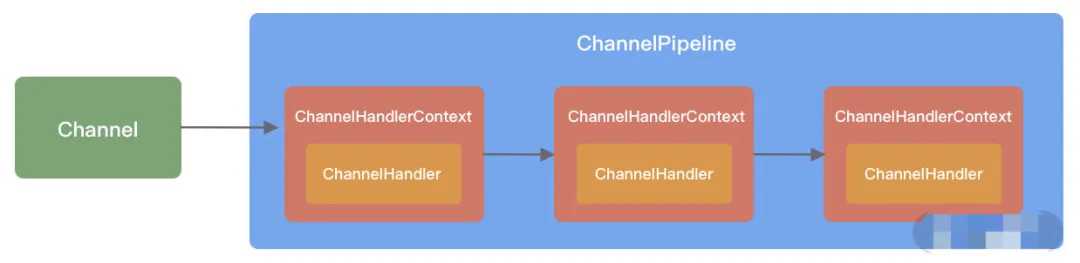

The core components of the service orchestration layer include ChannelPipeline, ChannelHandler, and ChannelHandlerContext.

ChannelPipeline is the core orchestration component of Netty and is responsible for assembling the various ChannelHandlers. Internally, ChannelPipeline links the ChannelHandlers together in a doubly linked list. When an I/O read or write event is triggered, the pipeline calls the handlers in sequence to intercept and process the channel data.
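To make the doubly linked list concrete, the following JDK-only sketch models it directly. MiniPipeline and its methods are hypothetical names, not Netty's API: each node stands in for a ChannelHandlerContext, inbound events walk from head to tail, and outbound events walk from tail to head, which is the propagation order Netty uses.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of a pipeline; not Netty's ChannelPipeline API.
final class MiniPipeline {
    // A node plays the role of a ChannelHandlerContext: it wraps one handler and links to its neighbours.
    static final class Node {
        final String name;
        final boolean inbound;   // true: reacts to inbound events; false: reacts to outbound events
        Node prev, next;
        Node(String name, boolean inbound) { this.name = name; this.inbound = inbound; }
    }

    private final Node head = new Node("head", false);   // sentinel, never traced
    private final Node tail = new Node("tail", false);   // sentinel, never traced
    final List<String> trace = new ArrayList<>();        // records which handler saw which event

    MiniPipeline() {
        head.next = tail;
        tail.prev = head;
    }

    // Like addLast(): link a new node just before the tail sentinel.
    MiniPipeline addLast(String name, boolean inbound) {
        Node n = new Node(name, inbound);
        n.prev = tail.prev;
        n.next = tail;
        tail.prev.next = n;
        tail.prev = n;
        return this;
    }

    // Inbound events (e.g. a read) travel head -> tail and only visit inbound handlers.
    void fireRead(String msg) {
        for (Node n = head.next; n != tail; n = n.next) {
            if (n.inbound) trace.add(n.name + " read " + msg);
        }
    }

    // Outbound events (e.g. a write) travel tail -> head and only visit outbound handlers.
    void write(String msg) {
        for (Node n = tail.prev; n != head; n = n.prev) {
            if (!n.inbound) trace.add(n.name + " write " + msg);
        }
    }
}
```

With a decoder, an encoder, and a business handler added in that order, a read visits the decoder and then the business handler, while a write visits only the encoder.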

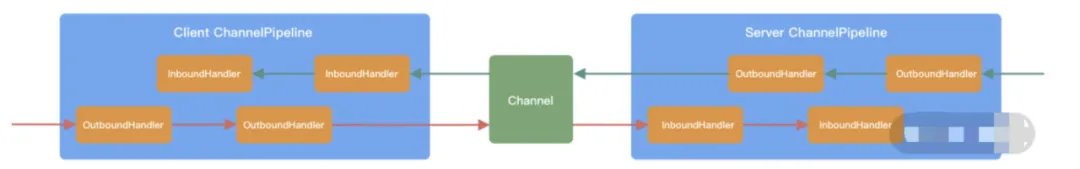

Both the client and the server have their own ChannelPipeline. A complete request between the client and the server goes through client outbound (the encoder encodes the request data), server inbound (the decoder decodes the received data and the business logic runs), and server outbound (the encoder encodes the response).

ChannelHandler completes data encoding, decoding, and processing.

ChannelHandlerContext stores the context of a handler. Through it, a handler knows how it is associated with its pipeline, and handlers can interact with one another. ChannelHandlerContext covers all events within the handler lifecycle, such as connect, bind, read, flush, write, and close. It also abstracts the logic that is common to all handlers.

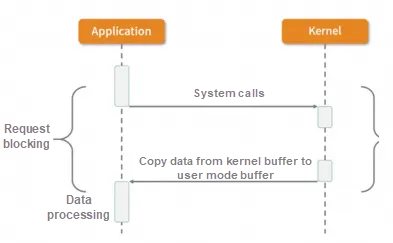

Blocking I/O (BIO): the application process initiates an I/O request to the kernel, and the calling thread waits until the kernel returns the result. Because each complete I/O request blocks its thread, BIO can serve concurrent requests only with a multi-thread model in which each request corresponds to one thread. However, threads are limited and precious resources, and creating too many of them increases the overhead of thread switching.

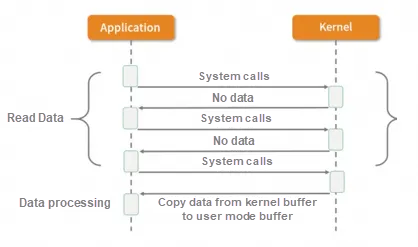

Non-blocking I/O (NIO): after the application process initiates an I/O request to the kernel, it no longer waits synchronously for the result. The call returns immediately, and the application obtains the result by polling. Although NIO greatly improves performance compared with BIO, the large number of system calls made while polling causes heavy context-switching overhead. Therefore, NIO on its own is not very efficient, and as concurrency grows, much of that polling becomes wasted work.

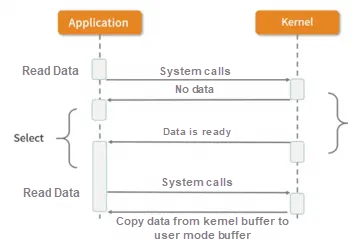

I/O multiplexing enables one thread to process multiple I/O handles. "Multi" refers to multiple network connections, and "plexing" refers to reusing one or a few fixed threads to serve all of those sockets. select, poll, and epoll are concrete implementations of I/O multiplexing. With a single select call, a thread can obtain the data status of multiple connections in kernel mode. select is only responsible for waiting, and recvfrom is only responsible for copying the data. Unlike BIO, where a thread blocks on a single file descriptor, one blocking call can listen on many file descriptors at once, which makes this a very efficient I/O model.
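In Java, this model is exposed through java.nio.channels.Selector (on Linux the default implementation is built on epoll). The sketch below is a self-contained illustration, not a server: it assumes in-process Pipe channels are an acceptable stand-in for sockets so that it runs without a network, and the channel names are arbitrary. One thread registers several channels with one Selector, waits, and then copies data only from the channels that are ready.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.TimeUnit;

final class MultiplexDemo {
    // One thread and one Selector watch several channels; returns "name:payload" for each message read.
    static List<String> run(int channelCount) {
        List<Pipe> pipes = new ArrayList<>();
        List<String> received = new ArrayList<>();
        try (Selector selector = Selector.open()) {
            for (int i = 0; i < channelCount; i++) {
                Pipe pipe = Pipe.open();
                pipes.add(pipe);
                pipe.source().configureBlocking(false);            // only non-blocking channels can be registered
                pipe.source().register(selector, SelectionKey.OP_READ, "ch" + i);
            }
            int expected = 0;
            for (int i = 0; i < channelCount; i += 2) {            // data arrives on even-numbered channels only
                pipes.get(i).sink().write(ByteBuffer.wrap(("msg" + i).getBytes(StandardCharsets.UTF_8)));
                expected++;
            }
            ByteBuffer buf = ByteBuffer.allocate(64);
            long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);  // safety net against hanging
            while (received.size() < expected && System.nanoTime() < deadline) {
                selector.select(100);                              // the waiting step, like select/epoll_wait
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();                                   // selected keys must be removed by hand
                    buf.clear();
                    ((Pipe.SourceChannel) key.channel()).read(buf); // the copying step, like recvfrom
                    buf.flip();
                    received.add(key.attachment() + ":" + StandardCharsets.UTF_8.decode(buf));
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            for (Pipe p : pipes) {
                try {
                    p.sink().close();
                    p.source().close();
                } catch (IOException ignored) {
                    // best-effort cleanup
                }
            }
        }
        Collections.sort(received);
        return received;
    }
}
```

With four channels, only ch0 and ch2 receive data, and the single thread reads exactly those two without touching the idle channels.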

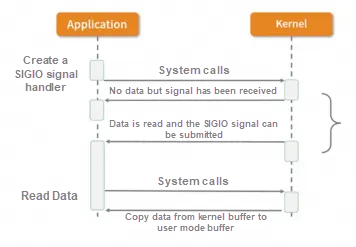

Signal-driven I/O (SIGIO):

In the signal-driven I/O model, the application process tells the kernel: "When the datagram is ready, send me a signal." When the SIGIO signal is caught, the process's signal handler is called to obtain the datagram.

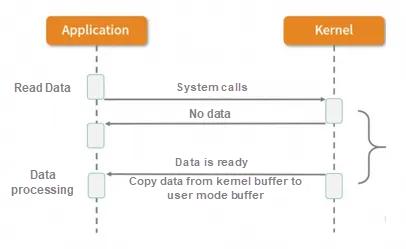

Asynchronous I/O (AIO): when the application calls aio_read, the call returns immediately and control goes back to the application process, which continues with other work without blocking. When the datagram is ready, the kernel copies it to the application and then notifies the application through the handler specified in the aio_read call.

Netty's I/O model is based on NIO, and underneath it relies on the NIO Selector (the multiplexer). With the epoll model, only one thread is needed to poll the Selector. When data is ready, an event dispatcher distributes the read and write events to the corresponding event handlers. There are two design patterns for the event dispatcher: Reactor and Proactor. Reactor uses synchronous I/O, and Proactor uses asynchronous I/O.

Reactor is relatively simple to implement and suits scenarios where processing is short, because time-consuming operations can easily block it. Proactor has higher performance, but its implementation logic is very complex, which makes it suitable for servers such as image or video stream analysis. Currently, mainstream event-driven models are still implemented on top of select or epoll.

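TCP is a stream-oriented transport protocol with no message boundaries, which is why packet unpacking (one message split across several packets) and sticking (several messages arriving together) occur.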

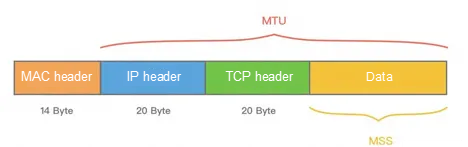

The MTU (Maximum Transmission Unit) is the maximum size of data the link layer transmits at a time. On Ethernet, the MTU is typically 1,500 bytes.

The MSS (Maximum Segment Size) is the maximum amount of data the transport layer (TCP) sends in a single segment, excluding the TCP header.

As the figure above shows, the MSS plus the TCP header and the IP header must fit within the MTU. If the data to be sent is larger than the MSS, TCP splits it into multiple segments. This is unpacking.
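As a worked example, assuming IPv4 and TCP headers of 20 bytes each (no options) and an Ethernet MTU of 1,500 bytes, a hypothetical 4,000-byte message is split as follows:

$$
\text{MSS} = \text{MTU} - \text{IP header} - \text{TCP header} = 1500 - 20 - 20 = 1460\ \text{bytes}
$$

$$
\left\lceil \frac{4000}{1460} \right\rceil = 3\ \text{segments: } 1460 + 1460 + 1080 = 4000\ \text{bytes}
$$

The receiver may therefore see the message arrive in three pieces, and it is the protocol's job to reassemble them.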

The Nagle algorithm can be understood as batch sending, an optimization idea we often use in everyday programming. While previously sent data has not yet been acknowledged, new small writes accumulate in a buffer; they are sent as one packet once the acknowledgment arrives or the buffer reaches a certain size. To minimize transmission latency, Netty disables the Nagle algorithm by default (TCP_NODELAY is enabled).

During the communication between the client and the server, the size of the data that the server reads each time is uncertain. Boundaries need to be determined:

Netty Common Encoder Types

• MessageToByteEncoder: encodes an object into a byte stream.

• MessageToMessageEncoder: encodes one message type into another message type.

Netty Common Decoder Types

• ByteToMessageDecoder/ReplayingDecoder: decodes a byte stream into a message object.

• MessageToMessageDecoder: decodes one message type into another message type.

Codecs can be divided into primary decoders and secondary decoders. Primary decoders solve the TCP unpacking and sticking problems and split the byte stream into frames according to the protocol. If the framed bytes then need to be converted into an object model, a secondary decoder is used. Encoding works the same way in reverse.

Netty Custom Protocol Content

| Field | Length |
| --- | --- |
| Magic number | 2 bytes |
| Protocol version number | 1 byte |
| Serialization algorithm | 1 byte |
| Message type | 1 byte |
| Status | 1 byte |
| Reserved field | 4 bytes |
| Data length | 4 bytes |
| Data content | Variable |

How do I determine whether a complete message exists in ByteBuf? The most common method is to read the message length field dataLength. If the number of readable bytes in the ByteBuf is less than dataLength, the ByteBuf does not yet contain a complete packet.
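The length check can be sketched with plain java.nio.ByteBuffer. This is a hypothetical decoder for the layout in the table, not Netty code: the magic value 0xCAFE is an assumption, and mark()/reset() play the role of ByteBuf's markReaderIndex()/resetReaderIndex(). Because the length field starts at byte offset 10 (2 + 1 + 1 + 1 + 1 + 4) and is 4 bytes long, Netty's built-in LengthFieldBasedFrameDecoder could be configured with those two values to do the same framing.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

final class ProtocolFrameDecoder {
    // 2 (magic) + 1 (version) + 1 (serialization) + 1 (type) + 1 (status) + 4 (reserved) + 4 (length)
    static final int HEADER_LENGTH = 14;
    static final short MAGIC = (short) 0xCAFE;       // hypothetical magic number

    // Decodes every complete frame in 'in' (read mode) and returns the bodies. An incomplete
    // trailing frame is left unconsumed so the caller can append more bytes and call again.
    static List<byte[]> decode(ByteBuffer in) {
        List<byte[]> bodies = new ArrayList<>();
        while (in.remaining() >= HEADER_LENGTH) {    // not even a full header yet: wait for more data
            in.mark();                               // like ByteBuf.markReaderIndex()
            if (in.getShort() != MAGIC) throw new IllegalStateException("bad magic number");
            in.get();                                // protocol version
            in.get();                                // serialization algorithm
            in.get();                                // message type
            in.get();                                // status
            in.getInt();                             // reserved field
            int dataLength = in.getInt();
            if (in.remaining() < dataLength) {       // half a packet: rewind, like resetReaderIndex()
                in.reset();
                break;
            }
            byte[] body = new byte[dataLength];
            in.get(body);
            bodies.add(body);
        }
        return bodies;
    }

    // Builds one frame with arbitrary header values, for exercising the decoder.
    static ByteBuffer encode(byte[] body) {
        ByteBuffer out = ByteBuffer.allocate(HEADER_LENGTH + body.length);
        out.putShort(MAGIC).put((byte) 1).put((byte) 1).put((byte) 1).put((byte) 0)
           .putInt(0).putInt(body.length).put(body);
        out.flip();
        return out;
    }
}
```

If one complete frame plus part of a second frame arrive together (sticking and unpacking at once), the first call returns one body and leaves the partial frame unread; once the remaining bytes arrive, the next call returns the second body.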

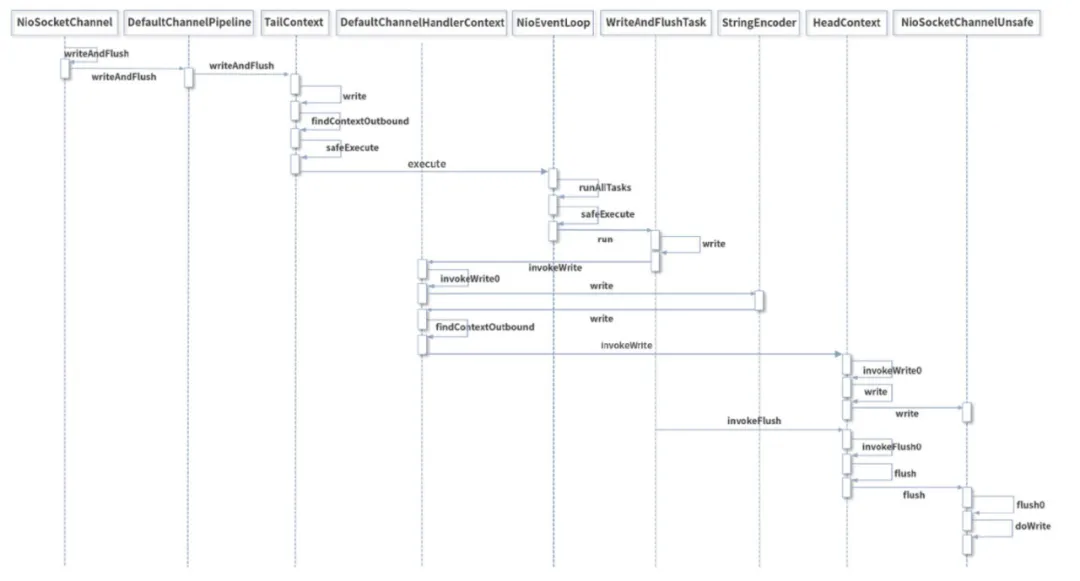

① writeAndFlush is an outbound operation. Events are propagated from the Tail node of the pipeline to the Head node which plays an important role in both the write and flush processes.

② The write method does not write data to the Socket buffer. It only writes to ChannelOutboundBuffer, which is internally implemented as a singly linked list.

③ The flush method finally writes the data to the Socket buffer.
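The split between write and flush can be illustrated with a small JDK-only model. MiniOutboundBuffer is a hypothetical class: Netty's real ChannelOutboundBuffer also tracks flushed versus unflushed entries, write-buffer water marks, and a promise per message, all of which are omitted here. The sketch assumes a blocking channel, so flush() simply loops until every byte is written.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.WritableByteChannel;

// Hypothetical, simplified stand-in for ChannelOutboundBuffer: write() only queues, flush() writes out.
final class MiniOutboundBuffer {
    private static final class Entry {          // node of a singly linked list
        final ByteBuffer msg;
        Entry next;
        Entry(ByteBuffer msg) { this.msg = msg; }
    }

    private Entry head, tail;
    private long pendingBytes;

    // write(): append to the linked list; nothing reaches the channel yet.
    void write(ByteBuffer msg) {
        Entry e = new Entry(msg);
        if (tail == null) head = e; else tail.next = e;
        tail = e;
        pendingBytes += msg.remaining();
    }

    long pendingBytes() { return pendingBytes; }

    // flush(): drain the queued entries, in order, into the channel (the "socket buffer").
    // Assumes a blocking channel; a non-blocking socket may accept only part of the data per call.
    void flush(WritableByteChannel channel) {
        try {
            while (head != null) {
                while (head.msg.hasRemaining()) pendingBytes -= channel.write(head.msg);
                head = head.next;
            }
            tail = null;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```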

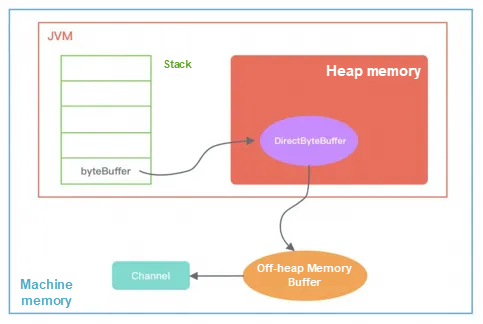

In Java, objects are allocated in the heap, and JVM memory generally refers to heap memory. Heap memory is fully managed by the JVM, which has its own garbage collection (GC) algorithms, so users do not need to care how the memory of objects is reclaimed. Off-heap memory is the counterpart of heap memory: of all the memory on the machine, everything outside the JVM heap is off-heap memory. Off-heap memory is not managed by the JVM but directly by the operating system. Using off-heap memory has several advantages:

The DirectByteBuffer object stored in the heap is small: it only holds attributes of the off-heap memory, such as its address and size, and it creates a corresponding Cleaner object. Off-heap memory allocated through ByteBuffer does not need to be reclaimed manually; the JVM reclaims it automatically. When the DirectByteBuffer object in the heap is reclaimed by GC, its Cleaner releases the corresponding off-heap memory.

As the DirectByteBuffer constructor shows, the logic that actually allocates off-heap memory is unsafe.allocateMemory(size). Unsafe is a very unsafe class used for sensitive operations such as memory access, allocation, and modification, and it can bypass the restrictions of the JVM. Unsafe was not designed for application developers. Although it grants control over underlying resources, it cannot guarantee safety, so use it with care (ordinary code cannot call Unsafe.getUnsafe() directly, but an Unsafe instance can be obtained through reflection). Netty depends on Unsafe because it needs to interact closely with the underlying Socket and memory, and Unsafe improves Netty's performance.

Because DirectByteBuffer objects that have reached the old generation are only cleaned up when an Old GC or Full GC runs, if no such GC occurs for a long time, the off-heap memory stays occupied and is not released even when it is no longer used, which can easily exhaust the machine's physical memory. -XX:MaxDirectMemorySize specifies the maximum size of off-heap memory. When an allocation would exceed this limit, a GC is triggered, and if the memory still cannot be released, an OutOfMemoryError is thrown.

When off-heap memory is initialized, the object references look as shown in the following figure. first is a static field in the Cleaner class that heads the Cleaner linked list, and each Cleaner object is added to this list when it is created. The DirectByteBuffer object contains the address and size of the off-heap memory and a reference to its Cleaner object. The ReferenceQueue stores the Cleaner objects that are waiting to be reclaimed.

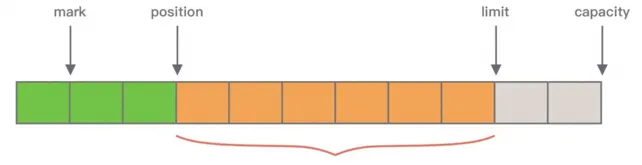

JDK NIO ByteBuffer

• mark: records a position (for example, one that has already been read) so that you can easily return to it.

• position: the index of the next byte to be read or written.

• limit: in read mode, the end of the valid data in the buffer; in write mode, how far data can be written.

• capacity: the total capacity fixed when the buffer is allocated.

First, the length allocated by ByteBuffer is fixed and cannot grow dynamically. Capacity must be checked before each write, and expanding it means allocating a new buffer and migrating the existing data.

Second, ByteBuffer tracks the current operable position only through position. Because reads and writes share this pointer, you must frequently call methods such as flip and rewind to switch between reading and writing.
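Both drawbacks are easy to see in code. This JDK-only sketch (ensureWritable and writeThenRead are hypothetical helper names, and doubling is an assumed growth policy) grows a buffer by hand and uses flip() to switch from writing to reading.

```java
import java.nio.ByteBuffer;

final class ByteBufferDemo {
    // ByteBuffer cannot grow, so making room means allocating a larger buffer and migrating the data.
    static ByteBuffer ensureWritable(ByteBuffer buf, int extra) {
        if (buf.remaining() >= extra) return buf;
        ByteBuffer bigger = ByteBuffer.allocate(Math.max(buf.capacity() * 2, buf.position() + extra));
        buf.flip();              // switch the old buffer to read mode so its data can be copied out
        bigger.put(buf);         // migrate existing data; bigger stays in write mode afterwards
        return bigger;
    }

    // Writes 6 bytes into a 4-byte buffer (forcing an expansion), then flips and reads them back.
    static byte[] writeThenRead() {
        ByteBuffer buf = ByteBuffer.allocate(4);
        buf.put((byte) 1).put((byte) 2).put((byte) 3);   // write mode: position = 3, limit = capacity = 4
        buf = ensureWritable(buf, 3);                     // 3 more bytes do not fit: grow to 8 and copy
        buf.put((byte) 4).put((byte) 5).put((byte) 6);   // position = 6
        buf.flip();                                       // read mode: limit = 6, position = 0
        byte[] out = new byte[buf.remaining()];
        buf.get(out);                                     // reading advances the same position pointer
        return out;
    }
}
```

Forgetting either flip() call would make the copy or the read start at the wrong position, which is exactly the bookkeeping ByteBuf's separate indices remove.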

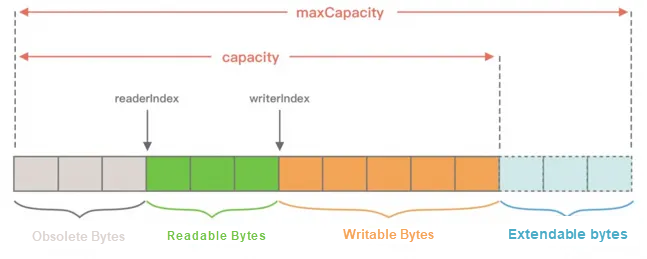

ByteBuf in Netty

• Obsolete bytes: bytes that have already been read and are now discarded.

• Readable bytes: the bytes in the ByteBuf that can still be read, calculated as writerIndex - readerIndex. If readerIndex equals writerIndex, the ByteBuf has nothing to read.

• Writable bytes: the free area, from writerIndex up to capacity, into which new data is written. If a write would push writerIndex beyond capacity, the capacity of the ByteBuf is insufficient and it must be expanded.

• Extendable bytes: how many more bytes the ByteBuf can grow by, up to maxCapacity. Writing data to a ByteBuf beyond maxCapacity causes an error.
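For contrast, here is a hypothetical minimal buffer with ByteBuf-style indices. TwoIndexBuffer is not Netty's ByteBuf, and its doubling growth policy is an assumption; it only shows why separate readerIndex and writerIndex remove the need for flip(), how the buffer expands up to maxCapacity, and how discarding read bytes reclaims the obsolete area (ByteBuf offers discardReadBytes() for the same purpose).

```java
import java.util.Arrays;

// Hypothetical minimal buffer showing ByteBuf-style indices; not Netty's ByteBuf.
final class TwoIndexBuffer {
    private byte[] data;
    private int readerIndex;   // next byte to read
    private int writerIndex;   // next byte to write
    private final int maxCapacity;

    TwoIndexBuffer(int initialCapacity, int maxCapacity) {
        this.data = new byte[initialCapacity];
        this.maxCapacity = maxCapacity;
    }

    int capacity() { return data.length; }
    int readableBytes() { return writerIndex - readerIndex; }

    // Writes never move readerIndex, so no flip() is needed between writing and reading.
    TwoIndexBuffer writeByte(int value) {
        if (writerIndex == data.length) {                       // writable bytes exhausted
            if (data.length >= maxCapacity) throw new IndexOutOfBoundsException("exceeds maxCapacity " + maxCapacity);
            int newCapacity = Math.min(Math.max(data.length * 2, 1), maxCapacity);  // assumed doubling policy
            data = Arrays.copyOf(data, newCapacity);            // grow into the extendable area
        }
        data[writerIndex++] = (byte) value;
        return this;
    }

    byte readByte() {
        if (readerIndex == writerIndex) throw new IndexOutOfBoundsException("no readable bytes");
        return data[readerIndex++];
    }

    // Like ByteBuf.discardReadBytes(): move the readable bytes to the front, reclaiming obsolete bytes.
    void discardReadBytes() {
        System.arraycopy(data, readerIndex, data, 0, readableBytes());
        writerIndex -= readerIndex;
        readerIndex = 0;
    }
}
```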

When the reference count of a ByteBuf is 0, the ByteBuf can be put into the object pool to avoid repeated creation each time a ByteBuf is used.

JVM does not know how the reference count of Netty is implemented. When a ByteBuf object is unreachable, it will be reclaimed by GC. However, if the reference count of ByteBuf is not 0 at this time, the object will not be released or put into the object pool, resulting in a memory leak. Netty samples and analyzes the allocated ByteBufs to check whether the ByteBufs are unreachable and the reference count is greater than 0. Netty then determines the location of the memory leak and outputs it to the log. You can use the LEAK keyword in the log to find the specific object of the memory leak.
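Reference counting and pooling can be sketched together. PooledBuf is hypothetical and single-threaded, loosely modelled on the contract of Netty's ReferenceCounted interface, where release() returns true once the count drops to 0. A buffer that becomes unreachable while its count is still above 0 never reaches the pool, which is the kind of leak the detector reports.

```java
import java.util.ArrayDeque;

// Hypothetical, single-threaded pooled buffer; not Netty's PooledByteBuf.
final class PooledBuf {
    private static final ArrayDeque<PooledBuf> POOL = new ArrayDeque<>();

    final byte[] memory = new byte[256];
    private int refCnt;

    // Reuse a pooled object if one is available instead of creating a new one.
    static PooledBuf allocate() {
        PooledBuf buf = POOL.poll();
        if (buf == null) buf = new PooledBuf();
        buf.refCnt = 1;
        return buf;
    }

    static int pooledCount() { return POOL.size(); }

    int refCnt() { return refCnt; }

    PooledBuf retain() {
        if (refCnt <= 0) throw new IllegalStateException("already released");
        refCnt++;
        return this;
    }

    // Returns true when this call dropped the count to 0 and the buffer went back to the pool.
    boolean release() {
        if (refCnt <= 0) throw new IllegalStateException("already released");
        if (--refCnt > 0) return false;
        POOL.push(this);
        return true;
    }
}
```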

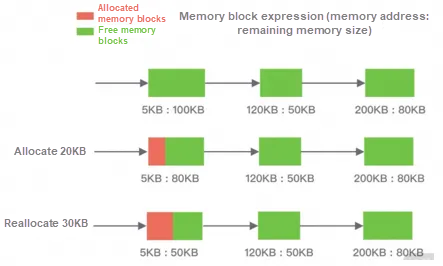

To reduce internal and external fragments during allocation, common memory allocation algorithms include Dynamic Memory Allocation, Buddy Algorithm, and Slab Algorithm.

For the first fit algorithm, the free partition chain links the free partitions in a doubly linked list in increasing order of address. The allocator takes the first free partition in the chain that is large enough, carves the requested block out of it, and keeps the remaining free space in the chain.

The next fit algorithm does not restart the search from the head of the linked list each time; it continues from where the previous search stopped. This improves search efficiency but leads to more fragments.

For the best fit algorithm, the free partition chain links the free partitions in a doubly linked list in increasing order of size. Each search starts from the head of the chain, so the first partition that fits is also the smallest one that fits.
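First fit and best fit differ only in which partition they pick, so one hypothetical sketch can show both. FreeListAllocator keeps a single address-ordered list; for best fit it scans for the smallest partition that fits instead of maintaining a second size-ordered chain, which selects the same partition. Freed blocks are not merged with their neighbours in this sketch, and next fit would only add a saved starting position for the scan.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical free-list allocator; each free partition is {start, size}, kept in address order.
final class FreeListAllocator {
    private final List<int[]> partitions = new ArrayList<>();
    private final boolean bestFit;

    FreeListAllocator(int totalSize, boolean bestFit) {
        this.bestFit = bestFit;
        partitions.add(new int[] {0, totalSize});
    }

    // Returns the start address of the allocated block, or -1 if no partition is large enough.
    int allocate(int size) {
        int chosen = -1;
        for (int i = 0; i < partitions.size(); i++) {
            int[] p = partitions.get(i);
            if (p[1] < size) continue;
            if (!bestFit) { chosen = i; break; }                              // first fit: first one that fits
            if (chosen < 0 || p[1] < partitions.get(chosen)[1]) chosen = i;   // best fit: smallest one that fits
        }
        if (chosen < 0) return -1;
        int[] p = partitions.get(chosen);
        int start = p[0];
        p[0] += size;                                  // the remainder stays in the free list
        p[1] -= size;
        if (p[1] == 0) partitions.remove(chosen);
        return start;
    }

    // Returns a block to the list in address order (no merging with neighbours in this sketch).
    void free(int start, int size) {
        int i = 0;
        while (i < partitions.size() && partitions.get(i)[0] < start) i++;
        partitions.add(i, new int[] {start, size});
    }
}
```

With free holes of 30 units at address 10 and 20 units at address 50, a 15-unit request lands at address 10 under first fit and at address 50 under best fit.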

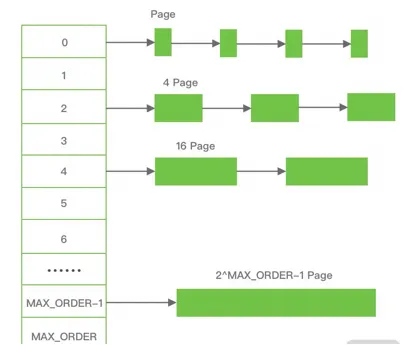

Buddy Algorithm (Less External Fragments but More Internal Fragments)

It is a classic memory allocation algorithm based on the segregated-fit idea. It divides physical memory into blocks whose sizes are powers of 2 and satisfies each request on demand with a power-of-2 block.
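The following hypothetical sketch shows the buddy mechanics on an abstract range of 2^maxOrder units: a request is rounded up to a power of 2, larger blocks are split in half until the size fits, and on release a block is merged with its buddy (whose offset differs in exactly one bit, found with XOR) whenever that buddy is also free. Callers pass the size back to free(); a real allocator would record it.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;

// Hypothetical buddy allocator over 2^maxOrder abstract units; free lists hold block offsets per order.
final class BuddyAllocator {
    private final int maxOrder;
    private final List<TreeSet<Integer>> freeLists = new ArrayList<>();

    BuddyAllocator(int maxOrder) {
        this.maxOrder = maxOrder;
        for (int k = 0; k <= maxOrder; k++) freeLists.add(new TreeSet<>());
        freeLists.get(maxOrder).add(0);                  // start with one free block covering everything
    }

    // Smallest order k with 2^k >= size, i.e. the size rounded up to a power of two.
    static int orderFor(int size) {
        int k = 0;
        while ((1 << k) < size) k++;
        return k;
    }

    // Returns the offset of the allocated block, or -1 if no free block is large enough.
    int allocate(int size) {
        int want = orderFor(size);
        int k = want;
        while (k <= maxOrder && freeLists.get(k).isEmpty()) k++;   // smallest free block that fits
        if (k > maxOrder) return -1;
        int offset = freeLists.get(k).pollFirst();
        while (k > want) {                               // split: keep the lower half, free the upper buddy
            k--;
            freeLists.get(k).add(offset + (1 << k));
        }
        return offset;
    }

    // Frees a block and merges it with its buddy for as long as the buddy is also free.
    void free(int offset, int size) {
        int k = orderFor(size);
        while (k < maxOrder) {
            int buddy = offset ^ (1 << k);               // buddies differ only in bit k of the offset
            if (!freeLists.get(k).remove(buddy)) break;  // buddy in use: stop merging
            offset = Math.min(offset, buddy);            // the merged block starts at the lower offset
            k++;
        }
        freeLists.get(k).add(offset);
    }

    int freeBlockCount(int order) {
        return freeLists.get(order).size();
    }
}
```

Requesting 3 units returns a 4-unit block: the unused unit is the internal fragmentation mentioned in the heading, while merging buddies on release keeps external fragmentation low.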

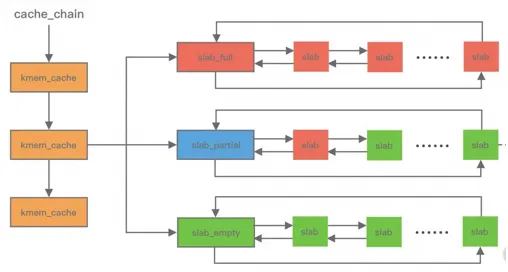

Based on the buddy algorithm, the slab algorithm is specially optimized for small memory scenarios and uses a memory pool scheme to solve the internal fragment problem.

In the slab algorithm, slab sets of different sizes are maintained, and the memory is divided into slots of the same size. The memory blocks are not merged again. At the same time, the bitmap is used to record the usage of each slot.

kmem_cache contains three slab linked lists: slabs_full (fully allocated), slabs_partial (partially allocated), and slabs_empty (completely free). These three lists are responsible for memory allocation and release. The slab algorithm manages memory in terms of objects, grouping objects of the same type into one category. When memory is allocated, the corresponding memory units are carved from a slab in these lists, and a slab moves between lists as its usage changes. For example, when the last free object in a slab is allocated, the slab moves from slabs_partial to slabs_full. When an object in a full slab is released, the slab moves from slabs_full to slabs_partial. If all objects are released, the slab moves from slabs_partial to slabs_empty. When memory is released, the slab algorithm does not discard the object but keeps it in the cache, so the next allocation of that object type directly reuses the recently released memory block.
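A hypothetical sketch of one kmem_cache-like object cache follows. SlabCache is not kernel code: every slab has a fixed number of equally sized slots tracked by a java.util.BitSet bitmap, slabs move between the full, partial, and empty lists as described above, and empty slabs are kept for reuse rather than returned.

```java
import java.util.ArrayDeque;
import java.util.BitSet;

// Hypothetical slab cache for one object size; not the Linux implementation.
final class SlabCache {
    static final class Slab {
        final BitSet used;                       // bitmap: bit i set = slot i is allocated
        final int slots;
        Slab(int slots) { this.slots = slots; this.used = new BitSet(slots); }
        int inUse() { return used.cardinality(); }
    }

    static final class Handle {                  // what allocate() hands out: a slab plus a slot index
        final Slab slab;
        final int slot;
        Handle(Slab slab, int slot) { this.slab = slab; this.slot = slot; }
    }

    private final int slotsPerSlab;
    private final ArrayDeque<Slab> full = new ArrayDeque<>();
    private final ArrayDeque<Slab> partial = new ArrayDeque<>();
    private final ArrayDeque<Slab> empty = new ArrayDeque<>();

    SlabCache(int slotsPerSlab) { this.slotsPerSlab = slotsPerSlab; }

    Handle allocate() {
        Slab s = partial.peekFirst();
        if (s == null) {                         // no partial slab: reuse an empty one, or create a new slab
            s = empty.pollFirst();
            if (s == null) s = new Slab(slotsPerSlab);
            partial.addFirst(s);
        }
        int slot = s.used.nextClearBit(0);       // first free slot according to the bitmap
        s.used.set(slot);
        if (s.inUse() == s.slots) {              // last slot taken: partial -> full
            partial.remove(s);
            full.addFirst(s);
        }
        return new Handle(s, slot);
    }

    void free(Handle h) {
        Slab s = h.slab;
        if (s.inUse() == s.slots) {              // slab was full: full -> partial
            full.remove(s);
            partial.addFirst(s);
        }
        s.used.clear(h.slot);
        if (s.inUse() == 0) {                    // every object released: partial -> empty (kept cached)
            partial.remove(s);
            empty.addFirst(s);
        }
    }

    // Sizes of {full, partial, empty}.
    int[] listSizes() { return new int[] {full.size(), partial.size(), empty.size()}; }
}
```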

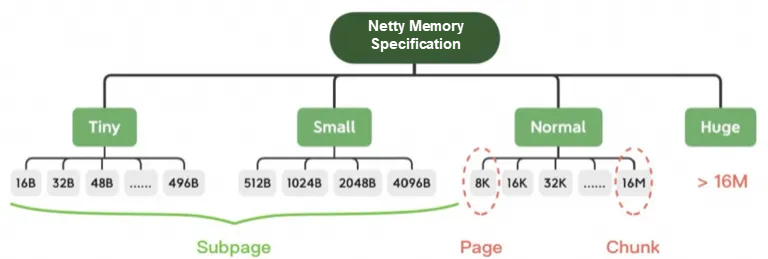

• Memory is managed by a certain number of arenas, and threads are evenly distributed among arenas.

• Each arena contains a bin array, and each bin manages memory blocks of a different size class.

• Each arena is divided into several chunks, each chunk contains several runs, and each run consists of consecutive pages. A run is the actual unit operated on during memory allocation.

• Each run is divided into a number of regions. In small memory allocation scenarios, a region is the memory handed to the user.

• Each tcache corresponds to an arena, and the tcache contains multiple types of bins.

Memory management: arena. Memory is managed by a certain number of arenas. Each user thread uses round-robin polling to select an available arena for memory allocation.

Hierarchical management: bin. The memory size managed by each bin increases by category. The allocation of small and medium memory in jemalloc is performed based on the slab algorithm, which produces different types of memory blocks.

Page collection: chunk. The chunk manages the memory by page. Each chunk can be used to apply for small memory multiple times, but it can only be allocated once in the scenario of large memory allocation.

The actual allocation unit: run. The specific size of the run structure is determined by different bins. For example, a run corresponding to an 8-byte bin has only one page. You can select an 8-byte block from the run structure for allocation.

The run is divided into regions. Each run is divided into several regions with equal length, and each memory allocation is also distributed according to regions.

tcache is a private cache for each thread. tcache obtains memory from the arena in batches, and each allocation first searches the tcache, which avoids lock contention during memory allocation. Only when that fails is memory allocated from a run.

In small scenarios, if the requested size is no larger than the largest small size class (bin), the memory is preferentially allocated from the thread's tcache. First, check whether the corresponding tbin has a cached memory block. If it does, the allocation succeeds. Otherwise, find the arena corresponding to the tbin, allocate regions from the bin in the arena, and store them in the avail array of the tbin. Finally, take an address from the avail array for the allocation. When memory is released, the reclaimed block is also cached.

Memory allocation in large scenarios is similar to that in small scenarios. If the requested size is larger than the largest small size class but not larger than the largest block that tcache can cache, it is still allocated through tcache. The difference is that a chunk and the corresponding run are allocated in this case, and the memory is taken from that chunk. Release is also similar to the small scenario: freed blocks are cached in the tbin of the tcache. When the requested size is larger than the largest block tcache can cache but not larger than the chunk size, tcache is not used, and the memory is allocated directly from a chunk.

In huge scenarios, if the requested size is larger than the chunk size, the memory is allocated directly with mmap and reclaimed with munmap.
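The tcache fast path can be illustrated with a hypothetical Java sketch. TcacheDemo is not jemalloc: a JDK ThreadLocal holds each thread's cache of fixed-size blocks, a synchronized block stands in for the arena lock, and the batch size of 4 is an arbitrary assumption. The point is that the lock is taken only when the thread's own cache is empty.

```java
import java.util.ArrayDeque;

// Hypothetical illustration of a per-thread cache in front of a locked, shared arena; not jemalloc.
final class TcacheDemo {
    static final int BLOCK_SIZE = 64;
    static final int BATCH = 4;                 // blocks fetched from the arena per refill (assumed value)
    private static final Object ARENA_LOCK = new Object();
    private static int arenaRefills;            // how many times the arena lock was taken

    // Each thread gets its own cache, so the fast path needs no lock.
    private static final ThreadLocal<ArrayDeque<byte[]>> TCACHE = ThreadLocal.withInitial(ArrayDeque::new);

    static byte[] allocate() {
        ArrayDeque<byte[]> cache = TCACHE.get();
        if (cache.isEmpty()) {
            synchronized (ARENA_LOCK) {         // slow path: refill a batch from the shared arena
                arenaRefills++;
                for (int i = 0; i < BATCH; i++) cache.push(new byte[BLOCK_SIZE]);
            }
        }
        return cache.pop();                     // fast path: served from the thread's own cache
    }

    // Freed blocks go back to the thread's cache, ready for its next allocation.
    static void free(byte[] block) {
        TCACHE.get().push(block);
    }

    static int arenaRefills() {
        synchronized (ARENA_LOCK) { return arenaRefills; }
    }
}
```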

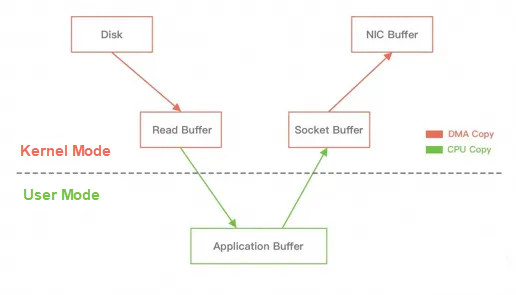

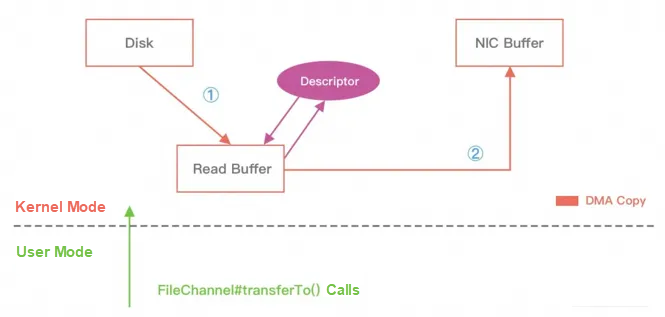

Linux provides the sendfile() system call, which transfers data from one file descriptor to another inside the kernel, thereby implementing zero copy.

Java also uses zero-copy technology, through the transferTo() method of the NIO FileChannel class, which can transfer data directly from a FileChannel to another channel.

In addition to functional encapsulation at the operating system level, the zero-copy technology in Netty is more optimized for data operations in the user mode, which is mainly reflected in the following five aspects:

• Off-heap memory avoids copying data from JVM heap memory into off-heap memory before it is written to the socket.

• CompositeByteBuf class allows you to combine multiple buffer objects into one logical object, which avoids combining several buffers into one large buffer through traditional memory copy.

• You can use Unpooled.wrappedBuffer to wrap a byte array into a ByteBuf object and no memory copy is generated during the wrapping process.

• ByteBuf.slice() splits a ByteBuf object into multiple ByteBuf objects. No memory copy occurs during the split, and the slices share the same underlying storage.

• Netty uses FileRegion to encapsulate the transferTo() method so that it can directly transfer the data of the file buffer to the target channel to avoid data copying between the kernel buffer and the user-mode buffer.
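On the JDK side, the call that FileRegion builds on can be used directly. This sketch (ZeroCopyDemo.copy is a hypothetical helper) copies a file with FileChannel.transferTo. Whether the OS really avoids user-space copies depends on the platform and the target channel (file-to-socket transfers are the classic sendfile case); where it cannot, the JDK falls back to an ordinary copy. transferTo may move fewer bytes than requested, so the call is looped.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class ZeroCopyDemo {
    // Copies src to dst with FileChannel.transferTo, letting the OS move the bytes where it can
    // instead of reading them into a user-space buffer and writing them back out.
    static long copy(Path src, Path dst) {
        try (FileChannel in = FileChannel.open(src, StandardOpenOption.READ);
             FileChannel out = FileChannel.open(dst, StandardOpenOption.WRITE,
                     StandardOpenOption.CREATE, StandardOpenOption.TRUNCATE_EXISTING)) {
            long position = 0;
            long size = in.size();
            while (position < size) {            // transferTo may move fewer bytes than requested
                position += in.transferTo(position, size - position, out);
            }
            return position;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```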

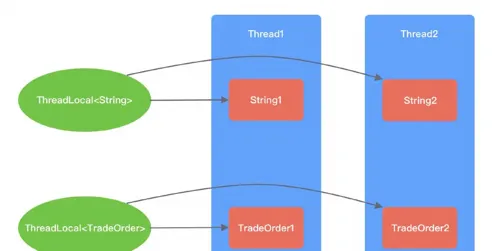

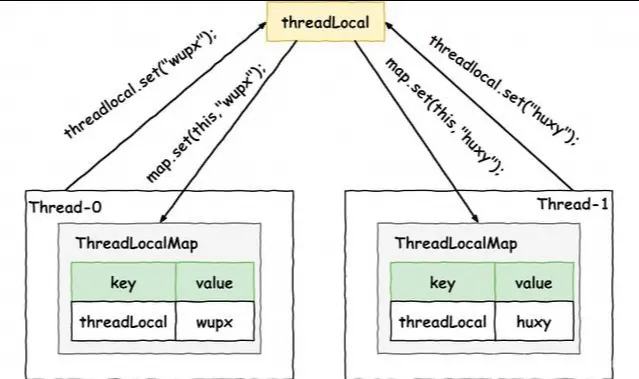

ThreadLocal can be understood as a thread-local variable. ThreadLocal creates a copy of the variable for each thread, and each copy can be accessed only by its own thread. Threads are isolated from each other and the variable is not shared among them, so when one thread modifies its copy, other threads are not affected.

Since each thread has its own independent copy when accessing a ThreadLocal variable, an obvious solution is to maintain a Map inside ThreadLocal that records the mapping between threads and instances. The Map must be updated whenever threads are added or destroyed, and because these updates can happen concurrently, the Map must be thread-safe. However, in high-concurrency scenarios, concurrent Map modifications require locking, which inevitably reduces performance.

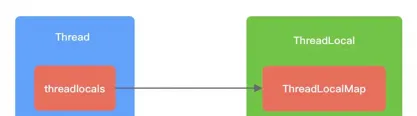

To avoid locking, the JDK takes the opposite approach: starting from Thread, each Thread maintains its own Map that records the mapping between ThreadLocal objects and their values. Because a thread's Map is accessed only by that thread, no locking is needed.

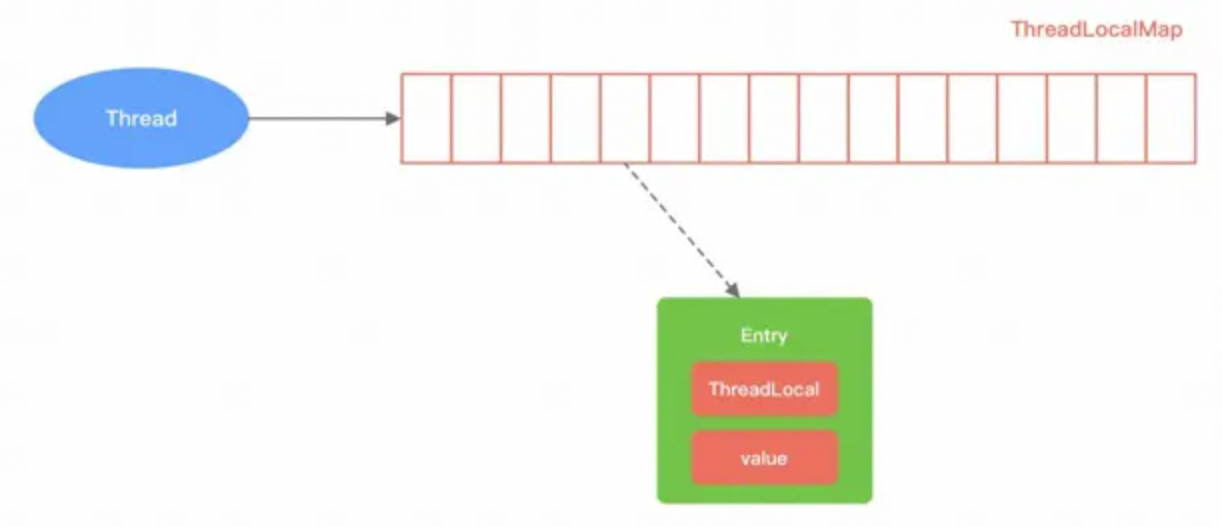

ThreadLocalMap is a hash table implemented by using linear probing. The bottom layer uses arrays to store data and the magic number 0x61c88647 to make the hash more balanced. At initialization, the ThreadLocalMap is an Entry array with a length of 16. Different from HashMap, the key of Entry is the ThreadLocal object itself, and the value is the value that the user needs to store.
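The even spread produced by 0x61c88647 is easy to check. Assuming, for illustration, that the hash counter starts at 0 (in the JDK it is a single static counter shared by all ThreadLocal instances, so real indices are offset), the i-th ThreadLocal lands at slot (i * 0x61c88647) & (length - 1). Because the increment is odd, the first 16 hashes cover all 16 slots of the initial table without a single collision.

```java
final class ThreadLocalHashDemo {
    static final int HASH_INCREMENT = 0x61c88647;   // increment used by java.lang.ThreadLocal

    // Slot of the i-th hash code in a table whose length is a power of two (int overflow is intended).
    static int slot(int i, int tableLength) {
        return (i * HASH_INCREMENT) & (tableLength - 1);
    }

    static int[] slots(int count, int tableLength) {
        int[] result = new int[count];
        for (int i = 0; i < count; i++) result[i] = slot(i, tableLength);
        return result;
    }
}
```

For a table of length 16 the sequence starts 0, 7, 14, 5, ... When a collision does happen, ThreadLocalMap probes linearly: it tries the next index and wraps around to 0 at the end of the array.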

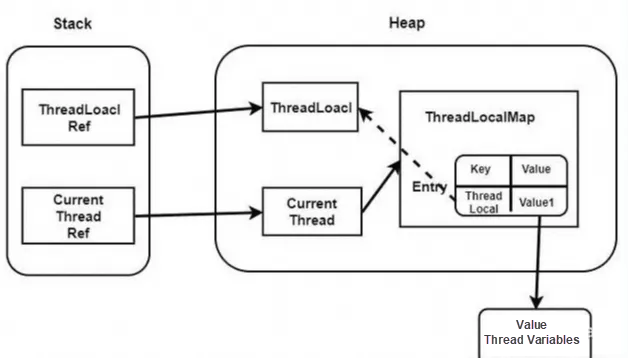

Entry extends the weak reference class WeakReference: the key of Entry is a weak reference, while the value is a strong reference. During GC, an object that is reachable only through weak references is reclaimed regardless of whether memory is sufficient. Why is the key of Entry designed as a weak reference? If the keys were strong references, then when a ThreadLocal is no longer used, the ThreadLocalMap would still hold a strong reference to it, so GC could not reclaim it, resulting in a memory leak.

Even with weak keys, when a ThreadLocal is no longer used (the business logic is complete, but the thread lives on because it is reused) and has been reclaimed by GC, the Entry in ThreadLocalMap is left with a null key. Its value is still strongly referenced and cannot be released until the thread is destroyed. So how can ThreadLocalMap memory leaks be avoided? ThreadLocal already provides some protection: when ThreadLocal.set() or get() runs, it may clear stale entries whose key is null in the ThreadLocalMap so that their values can be reclaimed by GC. In addition, call remove() to delete the Entry as soon as a ThreadLocal is no longer needed in a thread, and do so in a finally block so that the cleanup also happens when an exception is thrown. In Netty, FastThreadLocal makes it easy to prevent such memory leaks.
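The cleanup advice is easiest to see with a reused pool thread. In this sketch (ThreadLocalCleanup and its CONTEXT variable are hypothetical), a single-thread executor runs one task that sets a ThreadLocal and then a second, unrelated task that reads it. Without remove() in a finally block, the second task sees the stale value left behind by the first.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class ThreadLocalCleanup {
    // Hypothetical per-request context kept in a ThreadLocal.
    static final ThreadLocal<String> CONTEXT = new ThreadLocal<>();

    // Runs two tasks on the same pooled thread and returns what the second one sees.
    static String valueSeenByNextTask(boolean cleanUp) throws Exception {
        ExecutorService pool = Executors.newSingleThreadExecutor(); // one thread, reused like a pool worker
        try {
            pool.submit(() -> {
                CONTEXT.set("user-42");
                try {
                    // ... business logic that might throw ...
                } finally {
                    if (cleanUp) CONTEXT.remove();  // removes the Entry even if an exception is thrown
                }
            }).get();
            Callable<String> readContext = CONTEXT::get;     // a later, unrelated task on the same thread
            return pool.submit(readContext).get();
        } finally {
            pool.shutdown();
        }
    }
}
```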

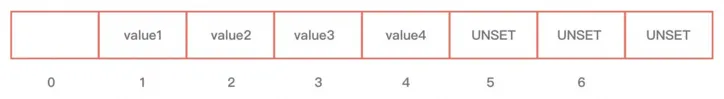

FastThreadLocal uses a plain Object array instead of an Entry array. Index 0 of the array stores a Set<FastThreadLocal<?>>, and from index 1 onward the values are stored directly rather than as ThreadLocal key-value pairs. The set() method also adds two extra behaviors. Compared with the JDK ThreadLocal, FastThreadLocal has the following advantages:

• Efficient lookup. FastThreadLocal locates its data directly by array index, in O(1) time. The JDK's native ThreadLocal uses a hash table that is prone to hash conflicts when it holds a lot of data, and linear probing must keep searching forward to resolve them, which is inefficient. Expansion is also simpler and more efficient in FastThreadLocal: it rounds the index up to the next power of 2 as the new capacity and copies the original data into a new array, whereas ThreadLocal, being hash-based, needs a round of rehashing after expansion.

• Better safety. Improper use of the JDK's native ThreadLocal may cause memory leaks that are only resolved when the thread is destroyed. In thread pool scenarios, ThreadLocal can prevent leaks only by probing for stale entries along the way, which has some overhead. FastThreadLocal, in contrast, provides remove() for actively clearing objects, and for thread pool scenarios Netty wraps tasks in FastThreadLocalRunnable: after a task runs, FastThreadLocal.removeAll() is called to clean up all FastThreadLocal objects in the Set.
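The indexed-array idea can be sketched without Netty. IndexedThreadLocal is a hypothetical, simplified class, not FastThreadLocal: every variable receives a fixed index from a global counter starting at 1 (index 0 is left for the bookkeeping Set, which this sketch omits), values live in a per-thread Object[], and growth rounds up to the next power of 2 and copies with no rehashing. A JDK ThreadLocal stands in for the field in which Netty keeps the array on its own FastThreadLocalThread, and removeAll() here simply drops the whole array.

```java
import java.util.Arrays;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical, simplified illustration of FastThreadLocal's indexed storage; not Netty's implementation.
final class IndexedThreadLocal<T> {
    private static final AtomicInteger NEXT_INDEX = new AtomicInteger(1); // index 0 reserved (unused here)
    private static final Object UNSET = new Object();
    // Stand-in for the per-thread array Netty keeps in FastThreadLocalThread.
    private static final ThreadLocal<Object[]> SLOTS = ThreadLocal.withInitial(() -> unsetFrom(new Object[8], 0));

    private final int index = NEXT_INDEX.getAndIncrement(); // fixed for the variable's whole life

    @SuppressWarnings("unchecked")
    T get() {
        Object[] slots = SLOTS.get();
        Object v = index < slots.length ? slots[index] : UNSET;   // O(1): plain array access, no hashing
        return v == UNSET ? null : (T) v;
    }

    void set(T value) {
        Object[] slots = SLOTS.get();
        if (index >= slots.length) {
            // Grow to the next power of two above index and copy; no rehash is needed.
            int newLength = Integer.highestOneBit(index) << 1;
            slots = unsetFrom(Arrays.copyOf(slots, newLength), slots.length);
            SLOTS.set(slots);
        }
        slots[index] = value;
    }

    // Simplified removeAll(): drops the current thread's whole array at once.
    static void removeAll() {
        SLOTS.remove();
    }

    private static Object[] unsetFrom(Object[] array, int from) {
        Arrays.fill(array, from, array.length, UNSET);
        return array;
    }
}
```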

Scheduled tasks come in forms such as generating monthly statistics reports, settling scores daily, and pushing emails on a schedule.

Three key methods for scheduled tasks: Schedule (add a task), Cancel (cancel a task), and Run (execute expired tasks).

The JDK comes with three timers: Timer, DelayQueue, and ScheduledThreadPoolExecutor.

Timer is backed by a min-heap queue with the task of the nearest deadline at the top of the heap, so the task taken out is always the one that should run first. The Run operation is O(1), while both Schedule and Cancel are O(log n). No matter how many tasks are added to the queue, a single asynchronous thread, TimerThread, processes them. TimerThread keeps polling the tasks in TaskQueue. If the deadline of the task at the top of the heap has been reached, the task is executed. If the task is periodic, its next deadline is recalculated after execution and the task is put back into the min-heap. If the task runs only once, it is removed from TaskQueue after execution.

DelayQueue is a blocking queue backed by a PriorityQueue, from which an object can be taken only after its delay has expired. Each object in a DelayQueue must implement the Delayed interface and its compareTo and getDelay methods. DelayQueue provides put() to add objects and the blocking take() to remove them. Objects added to a DelayQueue are ordered by their compareTo() method. The getDelay() method calculates the remaining delay, and an object can be taken out of the DelayQueue only when getDelay() <= 0.

In daily development, the most common use of DelayQueue is to implement a retry mechanism. For example, after an interface call fails or the request times out, you can put the request object into a DelayQueue, have an asynchronous thread retrieve it with take(), and retry. If the request fails again, put it back into the DelayQueue. You can set a maximum number of retries and use exponential backoff to set each object's deadline, such as 2s, 4s, 8s, 16s, and so on. The time complexity of DelayQueue is basically the same as that of Timer.
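The retry pattern can be sketched with the JDK classes named above. RetryTask and RetryDemo are hypothetical: the remote call is simulated by a counter, and with a base delay of 1 s the backoff produces the 2 s, 4 s, 8 s, ... schedule described in the text. The delay unit here is milliseconds so that a trial run finishes quickly.

```java
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

// Hypothetical retry task that becomes available only after its delay expires.
final class RetryTask implements Delayed {
    final String request;
    final int attempt;
    private final long deadlineNanos;

    RetryTask(String request, int attempt, long delayMillis) {
        this.request = request;
        this.attempt = attempt;
        this.deadlineNanos = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(delayMillis);
    }

    @Override
    public long getDelay(TimeUnit unit) {          // take() hands out the task only once this is <= 0
        return unit.convert(deadlineNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
    }

    @Override
    public int compareTo(Delayed other) {          // earliest deadline at the head of the queue
        return Long.compare(getDelay(TimeUnit.NANOSECONDS), other.getDelay(TimeUnit.NANOSECONDS));
    }
}

final class RetryDemo {
    // Simulates a call that fails 'failures' times; returns how many calls were made.
    static int run(int failures, int maxRetries, long baseDelayMillis) throws InterruptedException {
        DelayQueue<RetryTask> queue = new DelayQueue<>();
        queue.put(new RetryTask("req-1", 0, 0));           // first attempt runs immediately
        int calls = 0;
        while (true) {
            RetryTask task = queue.take();                  // blocks until the head's delay has expired
            calls++;
            boolean success = calls > failures;             // stand-in for the real remote call
            if (success || task.attempt >= maxRetries) return calls;
            int next = task.attempt + 1;
            // Exponential backoff: base * 2^next, e.g. 2 s, 4 s, 8 s, ... for a 1 s base.
            queue.put(new RetryTask(task.request, next, baseDelayMillis << next));
        }
    }
}
```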

Timer schedules tasks based on absolute system time, so changes to the system clock can cause tasks to run at the wrong time.

If an exception occurs in the execution of a TimerTask, the Timer will not catch it, which will cause the thread to terminate and other tasks will never be executed.

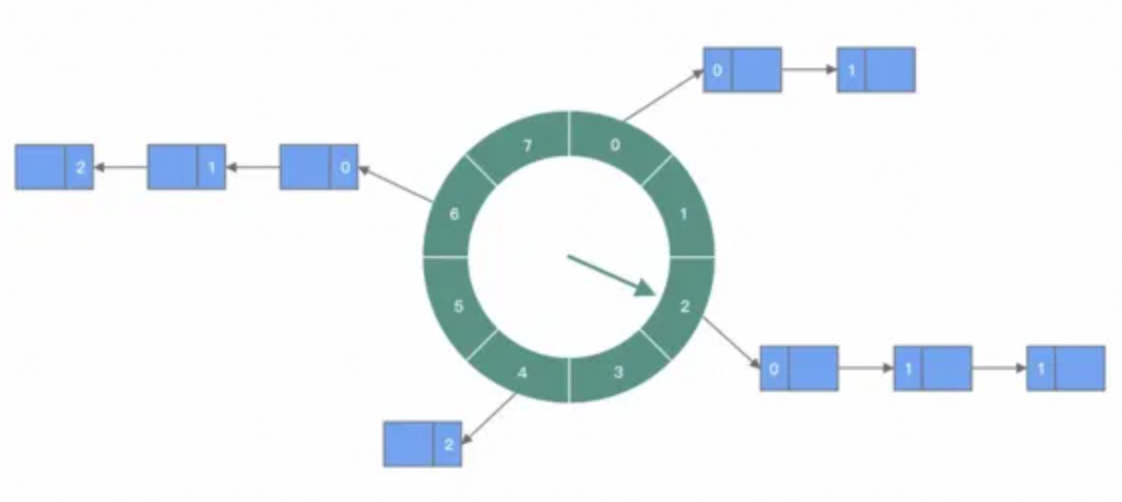

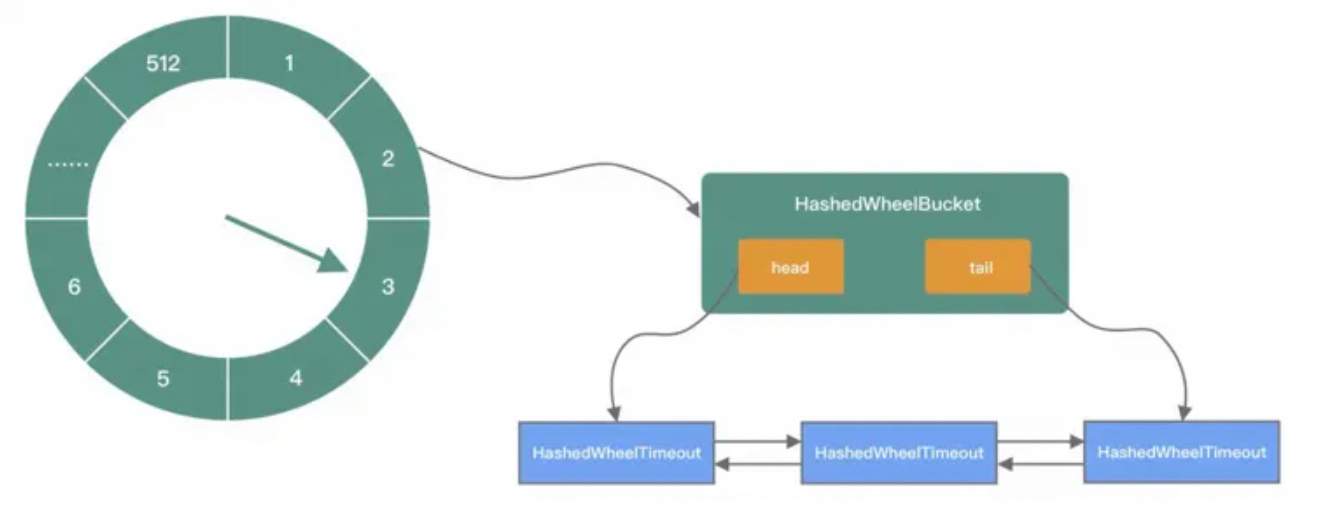

The wheel takes the task's expiration time modulo the number of slots and distributes the task to the slot given by the remainder. Each task also records a round value that decides whether it runs on the current pass of the wheel. Each time the pointer reaches a slot, the tasks in it are traversed: tasks whose round value is 0 are executed, and the others have their round value decremented. In this way, both adding and executing tasks take approximately O(1) time. If conflicts are heavy, you can increase the array length or use a hierarchical time wheel.
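A single-level wheel with round counters can be sketched in plain Java. SimpleTimeWheel is hypothetical and is advanced manually by tick() rather than by a worker thread; its per-task round counter corresponds to what Netty's HashedWheelTimeout calls remainingRounds. The wheel size must be a power of two so that `& mask` can replace the modulo, as Netty does with its mask field.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedList;
import java.util.List;

// Hypothetical single-level hashed time wheel, advanced manually one tick at a time (no worker thread).
final class SimpleTimeWheel {
    private static final class Task {
        final String name;
        long remainingRounds;            // full revolutions still to wait before firing
        Task(String name, long remainingRounds) { this.name = name; this.remainingRounds = remainingRounds; }
    }

    private final List<LinkedList<Task>> slots = new ArrayList<>();
    private final int mask;              // wheel size is a power of two, so "& mask" replaces "% size"
    private long currentTick;

    SimpleTimeWheel(int ticksPerWheel) {
        if (Integer.bitCount(ticksPerWheel) != 1) throw new IllegalArgumentException("power of two required");
        for (int i = 0; i < ticksPerWheel; i++) slots.add(new LinkedList<>());
        mask = ticksPerWheel - 1;
    }

    // O(1) insertion: slot = deadline mod wheel size, rounds = full turns to wait first.
    void schedule(String name, long delayTicks) {
        long deadline = currentTick + Math.max(delayTicks, 1);   // at least the next tick
        int slot = (int) (deadline & mask);
        long rounds = (deadline - currentTick - 1) / slots.size();
        slots.get(slot).add(new Task(name, rounds));
    }

    // Advances the wheel by one tick and returns the names of the tasks that expired.
    List<String> tick() {
        currentTick++;
        List<String> expired = new ArrayList<>();
        Iterator<Task> it = slots.get((int) (currentTick & mask)).iterator();
        while (it.hasNext()) {
            Task t = it.next();
            if (t.remainingRounds == 0) {
                expired.add(t.name);
                it.remove();
            } else {
                t.remainingRounds--;     // not this revolution: wait for the next pass
            }
        }
        return expired;
    }
}
```

On an 8-slot wheel, tasks with delays of 3 and 11 ticks share slot 3: the first fires at tick 3, while the second only has its round count decremented there and fires on the next pass, at tick 11.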

```java
public HashedWheelTimer(
        ThreadFactory threadFactory, // Thread factory; only one worker thread is created.
        long tickDuration,           // Duration of one tick: the time the pointer takes to move to the next slot.
        TimeUnit unit,               // Time unit of tickDuration; one tick lasts tickDuration * unit.
        int ticksPerWheel,           // Number of slots on the time wheel; the default is 512.
        boolean leakDetection,       // Whether to enable memory leak detection.
        long maxPendingTimeouts) {   // Maximum number of tasks allowed to wait.
    // Other code omitted.
    wheel = createWheel(ticksPerWheel);             // Create the circular array that backs the time wheel.
    mask = wheel.length - 1;                        // Mask used for fast modulo.
    long duration = unit.toNanos(tickDuration);     // Convert the tick duration to nanoseconds.
    workerThread = threadFactory.newThread(worker); // Create the worker thread.
    // Track this timer for leak detection if enabled, or if the worker is not a daemon thread.
    leak = leakDetection || !workerThread.isDaemon() ? leakDetector.track(this) : null;
    // If the number of pending tasks exceeds this threshold, an exception is thrown.
    this.maxPendingTimeouts = maxPendingTimeouts;
}
```
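The constructor derives `mask = wheel.length - 1` so that a tick can be mapped to a slot with `tick & mask` instead of `tick % wheel.length`. That substitution is only valid when the length is a power of two, which is why Netty's `createWheel()` rounds `ticksPerWheel` up to one. The helper names below are our own; this is a sketch of the arithmetic, not Netty's code.

```java
class WheelMask {

    /** Rounds n up to the next power of two (500 -> 512, 512 -> 512). */
    static int roundUpToPowerOfTwo(int n) {
        if (n <= 0 || n > (1 << 30)) {
            throw new IllegalArgumentException("out of range: " + n);
        }
        int p = 1;
        while (p < n) {
            p <<= 1;
        }
        return p;
    }

    /** For a power-of-two length and a non-negative tick, equals tick % wheelLength. */
    static int slotOf(long tick, int wheelLength) {
        int mask = wheelLength - 1;
        return (int) (tick & mask);
    }

    public static void main(String[] args) {
        int length = roundUpToPowerOfTwo(500);
        System.out.println(length + " " + slotOf(1000, length) + " " + (1000 % length)); // 512 488 488
    }
}
```

Asking for 500 slots therefore yields 512, and a tick of 1000 lands in slot 488 whichever way the slot is computed.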

The time wheel in Netty advances at a fixed interval, tickDuration. Even when no task expires for a long time, the wheel keeps ticking, which wastes CPU. In addition, if task expiration times span a very wide range (for example, task A is due in 1 second and task B in 6 hours), almost every tick between them finds nothing to execute. This is known as the empty-advance problem.
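To put numbers on the example, assume Netty's default tick of 100 ms and 512 slots (both are assumptions about how the timer was constructed) and suppose A and B are the only two tasks:

$$
\begin{aligned}
\text{ticks until B fires} &= \frac{6 \times 3600 \times 1000\ \text{ms}}{100\ \text{ms per tick}} = 216\,000 \\
\text{full turns of the wheel} &= \frac{216\,000}{512} \approx 421.9 \\
\text{ticks that run a task} &= 2 \qquad (\text{A at tick } 1000/100 = 10,\ \text{B at tick } 216\,000)
\end{aligned}
$$

The worker thread wakes up 216,000 times, and the remaining 215,998 ticks are empty advances.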

To solve this empty-advance problem, Kafka drives its time wheel with the JDK's DelayQueue. The DelayQueue holds the time wheel's buckets (the slots that contain tasks), ordered by expiration time, so the bucket that expires first sits at the head of the queue. A Kafka thread takes bucket task lists from the DelayQueue; until the head bucket expires, the thread simply stays blocked instead of ticking. Although insertion into and removal from a DelayQueue are relatively slow (O(log n)), this is a deliberate trade-off: the DelayQueue stores only buckets, not individual tasks, and the number of buckets is small. Compared with the cost of empty advances, the benefits outweigh the drawbacks.
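The idea can be sketched as follows. This single-threaded sketch (all names hypothetical) keeps only the part the paragraph describes: tasks are grouped into buckets by expiration time rounded down to the tick, a bucket is put into the DelayQueue only when its first task arrives, and the driving thread blocks in `take()` until the earliest bucket expires. A `HashMap` stands in for the wheel's slot array, and the layering between wheels is omitted.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

class BucketDelayQueueTimer {

    private final long originNanos = System.nanoTime();
    private final long tickMs;
    private final DelayQueue<Bucket> queue = new DelayQueue<>();
    private final Map<Long, Bucket> buckets = new HashMap<>(); // bucket start time -> bucket
    private int pending = 0;
    int wakeups = 0; // how many times the driving thread woke up

    BucketDelayQueueTimer(long tickMs) {
        this.tickMs = tickMs;
    }

    long nowMs() {
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - originNanos);
    }

    /** One bucket: all tasks whose deadline falls in [expirationMs, expirationMs + tickMs). */
    final class Bucket implements Delayed {
        final long expirationMs;
        final List<String> tasks = new ArrayList<>();

        Bucket(long expirationMs) {
            this.expirationMs = expirationMs;
        }

        @Override
        public long getDelay(TimeUnit unit) {
            return unit.convert(expirationMs - nowMs(), TimeUnit.MILLISECONDS);
        }

        @Override
        public int compareTo(Delayed o) {
            return Long.compare(expirationMs, ((Bucket) o).expirationMs); // queue holds only Buckets
        }
    }

    /** Adds a task; only a bucket's first task puts the bucket into the DelayQueue. */
    void add(String task, long delayMs) {
        long expiration = (nowMs() + delayMs) / tickMs * tickMs; // round down to the bucket start
        Bucket bucket = buckets.get(expiration);
        if (bucket == null) {
            bucket = new Bucket(expiration);
            buckets.put(expiration, bucket);
            queue.put(bucket);
        }
        bucket.tasks.add(task);
        pending++;
    }

    /** Blocks until every task has fired; returns task names in firing order. */
    List<String> runAll() {
        List<String> fired = new ArrayList<>();
        try {
            while (pending > 0) {
                Bucket due = queue.take(); // sleeps until the earliest bucket expires: no empty ticks
                wakeups++;
                buckets.remove(due.expirationMs);
                fired.addAll(due.tasks);
                pending -= due.tasks.size();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return fired;
    }

    public static void main(String[] args) {
        BucketDelayQueueTimer timer = new BucketDelayQueueTimer(10);
        timer.add("b", 150);
        timer.add("a", 30);
        timer.add("c", 90);
        System.out.println(timer.runAll() + " after " + timer.wakeups + " wake-ups"); // [a, c, b] after 3 wake-ups
    }
}
```

With three tasks spread over 150 ms and a 10 ms tick, a fixed-interval wheel would tick about 15 times; here the thread wakes up only 3 times, once per non-empty bucket.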

To handle tasks whose deadlines span a large range, Kafka introduces a hierarchical time wheel, as shown in the following figure. When a task's deadline exceeds the range that the current layer can represent, the task is added to the next, coarser layer, where a single slot spans the entire range of the layer below. This works the same way as the second, minute, and hour hands of a clock.
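The overflow rule can be expressed as a placement function. The sketch below is our own simplification of the layering described for Kafka (each layer has `wheelSize` slots, and the next layer's tick equals the current layer's full range); `levelFor()` is a hypothetical helper that only reports which layer a deadline lands in, without the buckets or the step that moves tasks down to finer layers as time advances.

```java
class HierarchicalWheelPlacement {

    /**
     * Returns the layer (0 = finest) that a task with the given deadline is added to,
     * or -1 if it is already due within the current tick of layer 0.
     */
    static int levelFor(long nowMs, long deadlineMs, long tickMs, int wheelSize) {
        long levelTick = tickMs;
        long levelNow = nowMs - (nowMs % levelTick); // each layer's current time is aligned to its tick
        if (deadlineMs < levelNow + levelTick) {
            return -1;                               // already due: execute instead of scheduling
        }
        int level = 0;
        while (deadlineMs >= levelNow + levelTick * wheelSize) { // beyond this layer's range
            levelTick *= wheelSize;                  // next layer: one slot spans this whole layer
            levelNow = nowMs - (nowMs % levelTick);
            level++;
        }
        return level;
    }

    public static void main(String[] args) {
        // Clock-like layers: 1 s x 60 slots (seconds), 1 min x 60 (minutes), 1 h x 60 (hours).
        System.out.println(levelFor(0, 45_000, 1_000, 60));         // 0: seconds layer
        System.out.println(levelFor(0, 90_000, 1_000, 60));         // 1: minutes layer
        System.out.println(levelFor(0, 3 * 3_600_000L, 1_000, 60)); // 2: hours layer
    }
}
```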

select (used on Windows) and poll (Linux) work in essentially the same way. Each call queries the device status of every fd passed in: the calling process is added to each device's wait queue, and the traversal continues. If no ready device is found after all fds have been traversed, the current process is suspended until a device becomes ready or the call times out. After waking up, it has to traverse all the fds again.

epoll supports both level-triggered (LT) and edge-triggered (ET) modes. Its most distinctive feature is edge triggering, which reports only the fds that have just become ready and reports each transition only once. Another feature is that epoll uses event-based readiness notification: fds are registered once through epoll_ctl, and when an fd becomes ready, the kernel uses a callback to put it on a ready list, from which epoll_wait receives the notification. Unlike select and poll, epoll_wait therefore does not need to traverse every registered fd.
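Java NIO exposes this readiness model through `Selector`; on Linux, the JDK's default `Selector` is built on epoll. The sketch below (names hypothetical) registers the read ends of two pipes, writes to only one of them, and shows that `select()` reports just the ready channel, so the application iterates over ready keys rather than every registered channel.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.util.ArrayList;
import java.util.List;

class ReadyKeysDemo {

    /** Registers two pipe read ends, writes to one, and returns the attachments of the ready keys. */
    static List<String> readyAttachments() {
        try {
            Pipe quiet = Pipe.open();
            Pipe busy = Pipe.open();
            try (Selector selector = Selector.open();
                 Pipe.SourceChannel quietIn = quiet.source();
                 Pipe.SinkChannel quietOut = quiet.sink();
                 Pipe.SourceChannel busyIn = busy.source();
                 Pipe.SinkChannel busyOut = busy.sink()) {
                quietIn.configureBlocking(false); // only non-blocking channels can be registered
                busyIn.configureBlocking(false);
                quietIn.register(selector, SelectionKey.OP_READ, "quiet"); // one-time registration
                busyIn.register(selector, SelectionKey.OP_READ, "busy");

                busyOut.write(ByteBuffer.wrap(new byte[] {42})); // only the "busy" pipe becomes readable

                selector.select(1000); // waits up to 1 s for at least one registered channel to be ready
                List<String> ready = new ArrayList<>();
                for (SelectionKey key : selector.selectedKeys()) {
                    ready.add((String) key.attachment());
                }
                selector.selectedKeys().clear(); // the application must remove keys it has handled
                return ready;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(readyAttachments()); // [busy]
    }
}
```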

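The JDK's epoll-based Selector has a well-known bug: select() can return even though no events are ready, so the NIO thread keeps waking up with an empty event list and spins, driving CPU usage to 100%. Netty does not fix the root cause; instead, it cleverly works around the problem. It counts how many times select() returns early with nothing selected, and once that count reaches a threshold (512 by default), it assumes the bug has been hit, creates a new Selector, and re-registers all channels with it.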

```java
long time = System.nanoTime();
if (/* Event polling lasted at least timeoutMillis */) {
    selectCnt = 1; // A normal timeout: reset the counter.
} else if (/* selectCnt has abnormally reached the threshold of 512 */) {
    // Rebuild the Selector and register the SelectionKeys with the new Selector.
    selector = selectRebuildSelector(selectCnt);
}
```

The reliability of the NioEventLoop thread is critical. If the NioEventLoop is blocked or trapped in empty polling, the entire system becomes unavailable.
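The workaround can be sketched in two parts: a counter that recognizes the spin pattern, and a rebuild step that moves every registration to a fresh `Selector`. This is a simplified sketch, not Netty's `NioEventLoop` code: `SelectorRebuildSketch`, `shouldRebuild()` and `rebuild()` are hypothetical names, and the counter treats an early return with zero selected keys as one spin, using the threshold of 512 from the excerpt above.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.SelectableChannel;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.util.concurrent.TimeUnit;

class SelectorRebuildSketch {

    static final int REBUILD_THRESHOLD = 512; // threshold from the excerpt above

    private int prematureReturns = 0; // consecutive early, empty returns from select(timeout)

    /** Call after every select(timeout); returns true once the spin pattern hits the threshold. */
    boolean shouldRebuild(int selectedCount, long elapsedNanos, long timeoutNanos) {
        if (selectedCount > 0 || elapsedNanos >= timeoutNanos) {
            prematureReturns = 0; // real events or a genuine timeout: not the spin bug
            return false;
        }
        if (++prematureReturns >= REBUILD_THRESHOLD) {
            prematureReturns = 0;
            return true;
        }
        return false;
    }

    /** Re-registers every valid key on a new Selector (same interest ops and attachment), then closes the old one. */
    static Selector rebuild(Selector oldSelector) {
        try {
            Selector newSelector = Selector.open();
            for (SelectionKey key : oldSelector.keys()) {
                if (!key.isValid()) {
                    continue;
                }
                SelectableChannel channel = key.channel();
                int interestOps = key.interestOps();
                Object attachment = key.attachment();
                key.cancel(); // the old registration goes away when the old selector closes
                channel.register(newSelector, interestOps, attachment);
            }
            oldSelector.close();
            return newSelector;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        SelectorRebuildSketch detector = new SelectorRebuildSketch();
        long timeout = TimeUnit.MILLISECONDS.toNanos(1000);
        int emptyReturns = 1;
        // Simulate the bug: select(1000 ms) keeps returning immediately with nothing selected.
        while (!detector.shouldRebuild(0, 0, timeout)) {
            emptyReturns++;
        }
        System.out.println("rebuild after " + emptyReturns + " empty returns"); // rebuild after 512 empty returns
    }
}
```

Rebuilding keeps each channel's interest set and attachment, so handlers do not notice the swap. Only the thread that owns the selector should call `rebuild()`, because a selector's key sets are not thread-safe.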

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
