By Taosu

The role of Message Queue (MQ): asynchronous processing, load shifting, and decoupling

For small and medium-sized enterprises with fewer technical challenges, the active community and open-source nature make RabbitMQ a good choice. On the other hand, for large enterprises with robust infrastructure research and development capabilities, RocketMQ, developed in Java, is a solid option.

In big data scenarios such as real-time computing and log collection, Kafka is the industry standard. With its highly active community and worldwide adoption, Kafka is a safe choice in this field.

| | RabbitMQ | RocketMQ | Kafka |
|---|---|---|---|
| Single-machine throughput | On the order of 10,000 | On the order of 100,000 | On the order of 100,000 |
| Programming language | Erlang | Java | Java and Scala |
| Message latency | Microseconds (μs) | Milliseconds (ms) | Milliseconds (ms) |
| Message loss | Low possibility | No loss after parameter tuning | No loss after parameter tuning |
| Consumption mode | Push and pull | Push and pull | Pull |
| Impact of the number of topics on throughput | N/A | Hundreds or thousands of topics have only a small impact on throughput | Dozens to hundreds of topics have a large impact on throughput |
| Availability | High (primary/secondary) | Very high (primary/secondary) | Very high (distributed) |

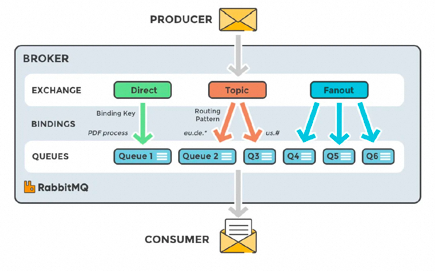

RabbitMQ was initially used for reliable communication in telecommunication services. It is one of the few products that support Advanced Message Queuing Protocol (AMQP).

Advantages:

Disadvantages:

RocketMQ draws on the design of Kafka and makes many improvements. It offers almost all the features a message queue should have.

Disadvantages:

The integration and compatibility with peripheral systems are not very good.

High availability: Kafka integrates with almost all open-source software and meets the needs of most application scenarios, especially big data and stream computing.

A message is the basic data unit in Kafka. It can be seen as a "row of data" or a "record" in a database.

Messages are written to Kafka in batches to improve efficiency. The trade-off is that larger batches increase throughput but also increase response time (latency).

Messages are categorized by topic. A topic is similar to a table in a database.

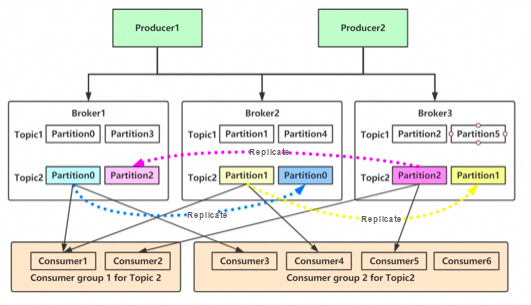

A topic can be divided into multiple partitions distributed across the Kafka cluster, which facilitates scale-out. Messages are ordered only within a single partition, so if global ordering is required, the topic must have exactly one partition.

Each topic is divided into multiple partitions, and each partition has multiple replicas.

By default, the producer distributes messages evenly across all partitions in the topic.
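The partition choice can be illustrated with a minimal, hypothetical partitioner (SimplePartitioner is not a Kafka class). It assumes keyed messages go to hash(key) mod numPartitions and unkeyed messages rotate round-robin. Kafka's actual default partitioner hashes keys with murmur2 and, since version 2.4, uses sticky partitioning for unkeyed records, so treat this only as a simplified sketch.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical, simplified partitioner (not Kafka's DefaultPartitioner).
class SimplePartitioner {
    private final int numPartitions;
    private final AtomicInteger counter = new AtomicInteger(0);

    SimplePartitioner(int numPartitions) {
        if (numPartitions <= 0) throw new IllegalArgumentException("numPartitions must be > 0");
        this.numPartitions = numPartitions;
    }

    int partition(String key) {
        if (key == null) {
            // No key: rotate across partitions so messages are spread evenly.
            return Math.floorMod(counter.getAndIncrement(), numPartitions);
        }
        // Keyed: the same key always maps to the same partition.
        int hash = Arrays.hashCode(key.getBytes(StandardCharsets.UTF_8));
        return Math.floorMod(hash, numPartitions);
    }
}
```

Keyed routing is what makes per-key ordering possible: all messages with the same key land in the same partition, and each partition is ordered.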

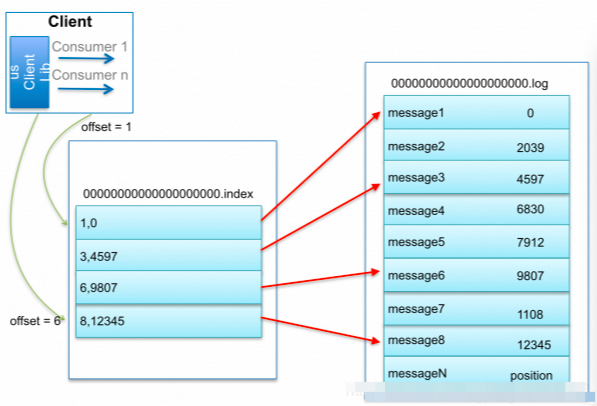

The consumer uses offsets to track which messages it has already read. The offset of the last message read from each partition is stored in ZooKeeper or in Kafka, so the read position is not lost if the consumer shuts down or restarts.

Within a consumer group, each partition is consumed by only one consumer, which avoids repeated consumption inside the group. If a consumer in the group fails, the remaining consumers take over its partitions through a rebalance.

A broker connects producers and consumers. A single broker can easily handle thousands of partitions and millions of messages per second.

Each partition has a leader. When a partition is replicated across multiple brokers, one replica acts as the leader and the other replicas copy data from it.

Each message written to a partition is assigned an offset. The partition's latest offset is always its largest one.

Consumers in different consumer groups can store different offsets for a partition without affecting each other.
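A minimal sketch of per-group offset tracking, assuming a plain in-memory map (OffsetStore is hypothetical; real Kafka persists committed offsets in ZooKeeper for old consumers or in the internal __consumer_offsets topic). It follows Kafka's convention of committing the offset of the next message to read.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory store of committed offsets, keyed by consumer group and partition.
class OffsetStore {
    // group -> (partition -> next offset to read)
    private final Map<String, Map<Integer, Long>> committed = new HashMap<>();

    void commit(String group, int partition, long nextOffset) {
        committed.computeIfAbsent(group, g -> new HashMap<>()).put(partition, nextOffset);
    }

    // Where a (re)started consumer in this group resumes; 0 if nothing was committed yet.
    long position(String group, int partition) {
        return committed.getOrDefault(group, Map.of()).getOrDefault(partition, 0L);
    }
}
```

Because the outer key is the group, two groups reading the same partition never overwrite each other's position.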

Each log segment consists of .log, .index, and .timeindex files.

.log: messages are appended sequentially. Each file is named after the offset of the first message it contains.

.index: an offset index used to quickly locate a position when deleting logs or searching for data.

.timeindex: used to look up the offset that corresponds to a given timestamp.

High throughput: A single server processes tens of millions of messages per second, and performance stays stable even with terabytes of stored messages.

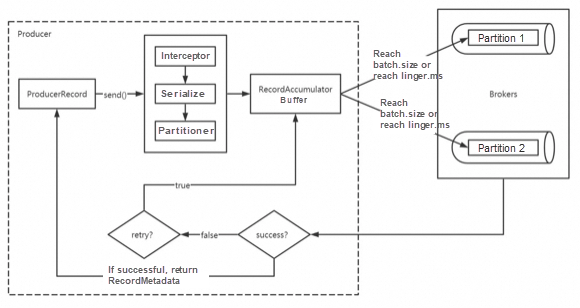

1. When a producer is created, a sender thread is also created and set as a daemon thread.

2. The produced message goes through the interceptor -> serializer -> partitioner and then is cached in the buffer.

3. A batch is sent when the buffered data reaches batch.size or when the wait time reaches linger.ms.

4. Each batch is sent to its target partition and persisted on the broker.

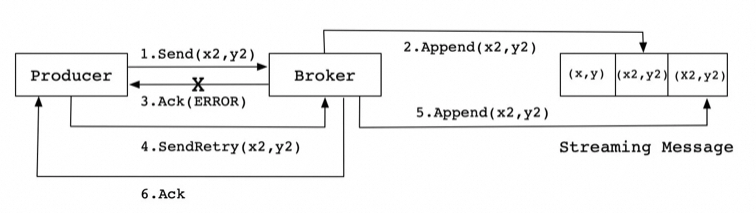

5. If the producer sets retries to a value greater than 0 and does not receive an acknowledgment, the client retries sending the message.

6. If the message is successfully persisted on the broker, its metadata (such as topic, partition, and offset) is returned to the producer.
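The batching condition in step 3 can be sketched with a single-partition buffer. BatchBuffer is hypothetical and much simpler than Kafka's internal accumulator; the clock is passed in explicitly so the behavior is easy to test.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, single-partition simplification of a producer-side batch buffer.
class BatchBuffer {
    private final int batchSizeBytes; // plays the role of batch.size
    private final long lingerMs;      // plays the role of linger.ms
    private final List<byte[]> records = new ArrayList<>();
    private int bytes = 0;
    private long firstAppendMs = -1;

    BatchBuffer(int batchSizeBytes, long lingerMs) {
        this.batchSizeBytes = batchSizeBytes;
        this.lingerMs = lingerMs;
    }

    // Called after interceptor -> serializer -> partitioner.
    void append(byte[] serialized, long nowMs) {
        if (records.isEmpty()) firstAppendMs = nowMs;
        records.add(serialized);
        bytes += serialized.length;
    }

    // The sender thread checks this: send when the batch is full or has waited long enough.
    boolean ready(long nowMs) {
        if (records.isEmpty()) return false;
        return bytes >= batchSizeBytes || nowMs - firstAppendMs >= lingerMs;
    }

    // Hand the current batch to the sender and start a new, empty one.
    List<byte[]> drain() {
        List<byte[]> batch = new ArrayList<>(records);
        records.clear();
        bytes = 0;
        firstAppendMs = -1;
        return batch;
    }
}
```

A larger linger.ms lets more records accumulate per request (higher throughput) at the cost of each record waiting longer (higher latency).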

If the replicas in the ISR are all lost:

First, the follower sends a FETCH request to the leader. The leader then reads the message data from the underlying log file and, using the fetchOffset in the FETCH request, updates the LEO (log end offset) that it keeps in memory for that follower replica. Finally, the leader tries to update the HW (high watermark). After receiving the FETCH response, the follower writes the messages to its underlying log and then updates its own LEO and HW values.
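The leader-side bookkeeping described above can be sketched as follows, assuming the common simplification that the HW is the smallest LEO among the leader and its ISR followers. LeaderReplicaState and its methods are hypothetical names, not Kafka classes.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical leader-side replication state for one partition.
class LeaderReplicaState {
    private long leaderLeo;                                        // LEO of the leader's own log
    private final Map<String, Long> followerLeo = new HashMap<>(); // remote LEOs kept in memory
    private long highWatermark = 0;

    LeaderReplicaState(long leaderLeo, Iterable<String> isrFollowers) {
        this.leaderLeo = leaderLeo;
        for (String f : isrFollowers) followerLeo.put(f, 0L);
    }

    // Producer writes extend the leader's log.
    void appendLocal(int count) { leaderLeo += count; }

    // The FETCH request's fetchOffset tells the leader how far the follower has written.
    long onFetch(String follower, long fetchOffset) {
        followerLeo.put(follower, fetchOffset);
        long min = leaderLeo;
        for (long leo : followerLeo.values()) min = Math.min(min, leo);
        highWatermark = Math.max(highWatermark, min); // the HW never moves backwards
        return highWatermark; // sent back in the FETCH response so the follower can update its HW
    }
}
```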

After the group leader election is completed, and whenever one of the three situations above occurs, the leader consumer computes the consumption plan using the configured assignor, such as RangeAssignor: that is, which consumer consumes which partitions of which topics. Once the plan is complete, the leader encapsulates it in a SyncGroup request and sends it to the coordinator. Non-leader members also send SyncGroup requests, but with empty content. After receiving the plan, the coordinator includes it in the SyncGroup response sent to each consumer, so every member of the group learns which partitions it should consume.
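The range strategy can be sketched for a single topic: sort the consumers, give each numPartitions / numConsumers partitions, and hand one extra partition to each of the first numPartitions % numConsumers consumers. RangeAssignmentSketch is hypothetical; the real RangeAssignor applies this per topic across all subscribed topics.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

// Simplified range assignment for one topic; consumers are sorted by member ID.
class RangeAssignmentSketch {
    static Map<String, List<Integer>> assign(List<String> consumerIds, int numPartitions) {
        List<String> consumers = new ArrayList<>(new TreeSet<>(consumerIds)); // sort and dedupe
        Map<String, List<Integer>> plan = new LinkedHashMap<>();
        int n = consumers.size();
        if (n == 0) return plan;
        int perConsumer = numPartitions / n;
        int extra = numPartitions % n; // the first `extra` consumers get one more partition
        int next = 0;
        for (int i = 0; i < n; i++) {
            int count = perConsumer + (i < extra ? 1 : 0);
            List<Integer> parts = new ArrayList<>();
            for (int p = next; p < next + count; p++) parts.add(p);
            next += count;
            plan.put(consumers.get(i), parts);
        }
        return plan;
    }
}
```

With 7 partitions and consumers c1, c2, c3, this yields c1: [0, 1, 2], c2: [3, 4], c3: [5, 6]; every partition has exactly one owner in the group.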

```shell
# Create a topic
kafka-topics.sh --zookeeper localhost:2181/myKafka --create --topic topic_x --partitions 1 --replication-factor 1
# Delete a topic
kafka-topics.sh --zookeeper localhost:2181/myKafka --delete --topic topic_x
# Change a topic configuration
kafka-topics.sh --zookeeper localhost:2181/myKafka --alter --topic topic_x --config max.message.bytes=1048576
# Describe a topic
kafka-topics.sh --zookeeper localhost:2181/myKafka --describe --topic topic_x
```

These commands use the older --zookeeper option, which was removed from kafka-topics.sh in Kafka 3.0; newer versions use --bootstrap-server instead.

To find the message with offset 23, first query the skip list (ConcurrentSkipListMap) of log segments to locate 00000000000000000000.index. Then use binary search in that offset index file to find the largest index entry whose offset is not greater than 23, which is the entry for offset 20. Finally, scan the log segment file sequentially, starting from physical position 320, until the message with offset 23 is found.
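The three-step lookup can be sketched as below. SegmentLookupSketch and IndexEntry are hypothetical; the sketch models each segment's sparse index as a sorted array in memory, while Kafka's real index files are memory-mapped and store relative offsets. Only the offset 20 / position 320 pair comes from the example above; the other entries in the usage test are made up.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentSkipListMap;

// Hypothetical model: pick the segment by base offset, then binary-search its sparse offset index.
class SegmentLookupSketch {
    // One sparse index entry: a message offset and its byte position in the .log file.
    static final class IndexEntry {
        final long offset;
        final long position;
        IndexEntry(long offset, long position) { this.offset = offset; this.position = position; }
    }

    // Base offset of each segment -> that segment's index entries, sorted by offset.
    final ConcurrentSkipListMap<Long, IndexEntry[]> segments = new ConcurrentSkipListMap<>();

    // Segment files are named after their base offset, zero-padded to 20 digits.
    static String segmentFileName(long baseOffset, String suffix) {
        return String.format("%020d%s", baseOffset, suffix);
    }

    // Binary search for the largest entry whose offset is <= target (null if none).
    static IndexEntry floorIndexEntry(IndexEntry[] index, long target) {
        int lo = 0, hi = index.length - 1;
        IndexEntry best = null;
        while (lo <= hi) {
            int mid = (lo + hi) >>> 1;
            if (index[mid].offset <= target) {
                best = index[mid];
                lo = mid + 1;
            } else {
                hi = mid - 1;
            }
        }
        return best;
    }

    // Byte position in the segment's .log file from which to scan sequentially for targetOffset.
    long scanStartPosition(long targetOffset) {
        Map.Entry<Long, IndexEntry[]> segment = segments.floorEntry(targetOffset); // step 1: segment
        if (segment == null) throw new IllegalArgumentException("offset precedes the first segment");
        IndexEntry hit = floorIndexEntry(segment.getValue(), targetOffset);         // step 2: index
        return hit == null ? 0L : hit.position;                                     // step 3: scan start
    }
}
```

The index is sparse, so it stays small enough to search quickly; the short sequential scan afterwards is cheap because the log is read in order.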

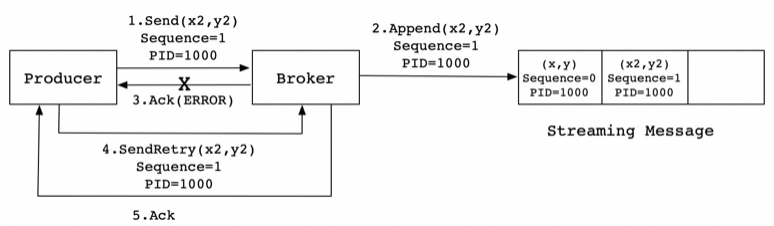

Idempotence ensures that a message is not processed twice when it is redelivered: even if the consumer receives a duplicate message, repeated processing leaves the final result consistent. Mathematically, idempotence means f(f(x)) = f(x).

To achieve this, you can add a unique ID to each message, similar to a primary key in a database, so that every message can be uniquely identified.
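A minimal sketch of ID-based deduplication on the consumer side, assuming an in-memory set of processed IDs (IdempotentHandler is hypothetical). In production the set would typically be a unique-key constraint in a database, written in the same transaction as the business change, so that it survives restarts.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Consumer;

// Hypothetical idempotent handler: each message ID is processed at most once.
class IdempotentHandler<T> {
    private final Set<String> processedIds = ConcurrentHashMap.newKeySet();
    private final Consumer<T> businessLogic;

    IdempotentHandler(Consumer<T> businessLogic) { this.businessLogic = businessLogic; }

    // Returns true if the message was processed now, false if it was a duplicate delivery.
    boolean handle(String messageId, T payload) {
        if (!processedIds.add(messageId)) return false; // seen before: skip, result unchanged
        try {
            businessLogic.accept(payload);
        } catch (RuntimeException e) {
            processedIds.remove(messageId); // processing failed: allow a later redelivery
            throw e;
        }
        return true;
    }
}
```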

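ProducerID (PID): each new producer is assigned a unique PID when it is initialized.

SequenceNumber: for each PID, every topic partition that receives its data has its own sequence number, which starts at 0 and increases monotonically. The broker uses the PID and sequence number together to detect and discard duplicate messages.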
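The broker-side check can be sketched per partition: accept a message only if its sequence number is exactly one more than the last one seen for that PID. PartitionSequenceChecker is hypothetical and simplified; the real broker tracks sequences per batch and caches only recent batches per producer.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical duplicate check kept by the broker for one partition.
class PartitionSequenceChecker {
    enum Result { APPENDED, DUPLICATE, OUT_OF_ORDER }

    // PID -> last sequence number appended to this partition
    private final Map<Long, Integer> lastSeq = new HashMap<>();

    Result onProduce(long producerId, int sequence) {
        int last = lastSeq.getOrDefault(producerId, -1); // sequences start at 0
        if (sequence <= last) return Result.DUPLICATE;        // a retry of something already written
        if (sequence != last + 1) return Result.OUT_OF_ORDER; // a gap: an earlier message is missing
        lastSeq.put(producerId, sequence);
        return Result.APPENDED;
    }
}
```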

Controller epoch: requests carrying a smaller (stale) epoch are ignored. This avoids split-brain, where two nodes simultaneously consider themselves the current controller.

If the Kafka cluster contains a large number of nodes, such as hundreds, a rebalance within a consumer group caused by changes in the cluster can take anywhere from several minutes to several hours. During that time Kafka is almost unavailable, which greatly reduces its transactions per second (TPS).

Causes

A member crashing and a member leaving the group are two different scenarios. When a member crashes, it does not notify the coordinator, so the coordinator may need a full session.timeout.ms period without heartbeats to detect the crash, which inevitably causes consumer lag. In short, leaving the group triggers a rebalance actively, while a crash triggers one passively.
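The difference between leaving and crashing can be sketched from the coordinator's side. GroupLivenessSketch is hypothetical; it only models the idea that a crashed member is detected once no heartbeat has arrived within session.timeout.ms, while a leaving member is removed immediately.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical coordinator-side liveness tracking for one consumer group.
class GroupLivenessSketch {
    private final long sessionTimeoutMs; // plays the role of session.timeout.ms
    private final Map<String, Long> lastHeartbeat = new HashMap<>();

    GroupLivenessSketch(long sessionTimeoutMs) { this.sessionTimeoutMs = sessionTimeoutMs; }

    void onHeartbeat(String member, long nowMs) { lastHeartbeat.put(member, nowMs); }

    // Voluntary leave: the member tells the coordinator, so a rebalance can start right away.
    void onLeaveGroup(String member) { lastHeartbeat.remove(member); }

    // Crash: nothing is sent, so the member is declared dead only after its session expires.
    List<String> expiredMembers(long nowMs) {
        List<String> dead = new ArrayList<>();
        for (Map.Entry<String, Long> e : lastHeartbeat.entrySet()) {
            if (nowMs - e.getValue() > sessionTimeoutMs) dead.add(e.getKey());
        }
        return dead;
    }
}
```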

Solutions

Increase the session timeout: session.timeout.ms = 6s.

Decrease the heartbeat interval: heartbeat.interval.ms = 2s.

Increase the poll interval: max.poll.interval.ms = t + 1 minute, where t is the maximum time needed to process one batch of polled messages.

Currently, Kafka relies on ZooKeeper for cluster metadata storage, member management, controller election, and other management tasks. Once the KIP-500 proposal is completed, Kafka will no longer depend on ZooKeeper at all.

In short, KIP-500 introduces a Raft-based consensus protocol, developed by the community, that lets the controller elect itself without ZooKeeper.

For metadata storage, etcd, which is also based on Raft, has become increasingly popular in recent years.

More and more systems use etcd to store critical data. For example, a flash sale system often uses it to store information about each node in order to control the number of services that consume from the MQ. Business systems also use etcd to synchronize configuration data to each of their nodes in real time; for example, flash sale management uses etcd to push the configuration of a flash sale activity to every node of the flash sale API service.

Only the leader replica in Kafka serves external reads and writes and responds to client requests. Follower replicas only pull data from the leader replica to stay in sync passively, and stand ready to become the new leader when the broker hosting the leader replica goes down.

```java
// Inside MessageListenerConcurrently#consumeMessage(List<MessageExt> msgs, ConsumeConcurrentlyContext context)
long curOffset = msgs.get(0).getQueueOffset();
long maxOffset = Long.parseLong(msgs.get(0).getProperty(MessageConst.PROPERTY_MAX_OFFSET));
if (maxOffset - curOffset > 100000) {
    // Messages are piling up: apply priority handling here,
    // e.g. discard unprocessed messages or just log them
    return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;
}
// TODO: normal consumption process
return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;
```

Quick scale-out must be supported through brokers plus partitions, with partitions placed on different machines. When machines are added, data is migrated by topic. In distributed mode, consistency, availability, and partition tolerance must all be considered.

In terms of performance, you can learn from TimingWheel, Zero Copy, I/O multiplexing, sequential read-write, and compressed batch processing.
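Zero copy can be illustrated with Java's FileChannel.transferTo, which lets the JVM hand the copy to the operating system (on Linux it may use sendfile) instead of reading the file into a user-space buffer first. ZeroCopySketch is a hypothetical helper; the benefit appears mainly when the target is a SocketChannel, and with other targets the JDK may fall back to an ordinary copy.

```java
import java.io.IOException;
import java.nio.channels.FileChannel;
import java.nio.channels.WritableByteChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical helper: send a whole file (e.g. a log segment) to a channel via transferTo.
class ZeroCopySketch {
    static long sendFile(Path file, WritableByteChannel target) throws IOException {
        try (FileChannel src = FileChannel.open(file, StandardOpenOption.READ)) {
            long position = 0;
            long size = src.size();
            // transferTo may move fewer bytes than requested, so loop until the file is sent.
            while (position < size) {
                long n = src.transferTo(position, size - position, target);
                if (n == 0) break; // target accepted nothing (e.g. a full non-blocking socket)
                position += n;
            }
            return position; // number of bytes actually transferred
        }
    }
}
```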

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
