By Taosu

The user ID of the table is used to split the table, and each table is limited to 3 million data. Database sharding is based on business scenarios and regions. Each database has no more than 2,000 concurrent operations.

Sharding-jdbc: The client layer solution has the advantages of no deployment, low O&M cost, no proxy layer secondary forwarding requests, but high performance. However, each system needs to couple Sharding-jdbc dependencies, which makes upgrading troublesome.

Mycat: The proxy layer solution has the disadvantages of deployment, a set of middleware by self O&M, and high O&M costs. However, the advantage is that it is transparent to each project. If you need to upgrade, you can do it by the middleware.

Horizontal splitting: A table is placed in multiple databases to share high concurrency and speed up queries.

• ID ensures that the business can operate on the same database when multiple tables are associated.

• Range is convenient for expansion and data statistics.

• Hash can make the data more even.

One table is split into multiple tables, and some cold data can be split into redundant databases.

When it is not a write bottleneck, prioritize table sharding.

• Data between database shards can no longer be directly queried through the database. The problem of deep paging will occur.

• With more database shards, the possibility of problems becomes higher and the maintenance cost is also higher.

• Cross-database transactions cannot be guaranteed after the database sharding. You can only use other middleware to implement the eventual consistency.

The following requirements to meet the core business scenarios should be firstly considered when doing database sharding:

Three questions:

• Rich query: How do I meet the needs of queries across database shards after sharding databases and tables? You can use ElasticSearch (ES) wide tables.

You can use the database shard gateway and the database shard service to implement multi-dimensional query capabilities. However, the overall performance is poor, and normal write requests are affected. The most common way to respond to multi-dimensional real-time queries in the industry is to use ES.

• Data skew: Data is sharded into tables based on the database shards.

• Distributed transactions: Distributed transactions are caused by modifications across multiple databases and write operations between multiple microservices.

• Deep paging: Query by cursor, or call each query with the last query after sorting the maximum ID.

• Every place in the online system where database writing occurs, adding, deleting, or modifying operations, in addition to actions being performed on the old database, should also be carried out on the new database.

• After the system is deployed, it is also necessary to run a program to read the data of the previous database and write the data in the new database. When writing, you need to judge the update time.

• Loop until the data of the two databases are the same, and finally redeploy the code of the sharded databases and tables.

A customer has 100 million users now with 100,000 write concurrency, 1 million read concurrency, and 6 billion data volume.

The extreme situations should be considered in the design: 32 databases × 32 tables ~ 64 tables, totaling 1,000 to 2,000 tables.

• 30,000 write concurrency can be supported and it works with MQ to achieve a write speed of 100,000 per second.

• In the read-write splitting mode, 60,000 read concurrency is supported and it reaches 100 read concurrency per second with distributed cache.

• If each table supports 3 million data, you can write up to 6 billion pieces of data in 2,000 tables.

• Thirty-two user tables can support hundreds of millions of users, and you only need to expand the capability once subsequently.

• You can use Redis.

• If the concurrency is not high, you can start a separate service to generate an auto-increment ID.

• Set the database auto-increment step to support horizontal scaling.

• UUID is suitable for file names and numbers, but not suitable for primary keys.

• Snowflake algorithm integrates 41 ms, 10 machines, and 12 serial numbers (auto-increment within milliseconds).

The 10 bits the machine reserved can be configured based on your business scenario.

In the past, online bugs caused by primary-secondary synchronization latency were indeed handled online, which was a small production accident.

The fact is as follows. Someone wrote code logic like this: firstly inserting a piece of data, finding it out, and then updating the data. During the peak period of the production environment, the write concurrency reached 2,000 per second. At this time, the primary-secondary synchronization latency was about tens of milliseconds. We will find online that some important data status is expected to be updated, but there are always some data that are not updated during peak hours. Users give feedback to customer service, and customer service will give feedback to us.

We use the MySQL command:

show slave statusAfter viewing the Seconds_Behind_Master, we can see that the data the secondary database replicates from the primary database delay for several milliseconds.

Usually, if the primary-second latency is severe, we use the following solutions:

• Sharding the database: After sharding the database into multiple primary databases, the write concurrency of each primary database is reduced several times, and the primary-second latency is negligible.

• Rewriting the code: Those who write code should be careful. The data may not be found if you query immediately after you insert the data.

• If you do need to insert the data, find it out, and immediately perform some operations, you can set up a direct connection to the main database or query latency. The primary-secondary replication latency generally does not exceed 50 milliseconds.

We have a table with online traffic records. Due to the large number of data, we have sharded databases and tables. At that time, some problems often occurred in the initial stage of database and table shards. The typical case was that deep paging was used in traffic record queries. By using some tools such as MAT and Jstack, we tracked that it was caused by sharding-jdbc internal references.

The traffic record data is stored in two databases. If the sharding key is not provided, the query statement will be distributed to all databases. For example, if the query statement is limit 10 and offset 1000 and only 10 records need to be returned in the final result, the database middleware needs (1000 + 10) × 2 = 2020 records to complete the calculation process. If the value of the offset is too large, the memory used will skyrocket. Although sharding-jdbc uses the merge algorithm to make some optimizations, deep paging still causes memory and performance problems in real-world scenarios.

Operations of merging and aggregating on intermediate nodes are very common in distributed frameworks. For example, ES has a similar data acquisition logic. The unrestricted deep paging will also cause ES memory problems.

(1) Rewrite the order by time offset X limit Y to order by time offset 0 limit X + Y.

(2) The service layer performs memory sorting on the obtained N*(X + Y) pieces of data and then takes Y records after the offset X after memory sorting.

The performance will become lower with paging by using this method.

(1) Get the data of the first page in a normal way and get the time_max of the first-page record.

(2) Every time you turn the page, rewrite order by time offset X limit Y to order by time where time>$time_max limit Y

In this way, you can ensure that only one page of data is returned at a time and that the performance is constant.

(1) Rewrite order by time offset X limit Y to order by time offset X/N limit Y/N.

(2) Rewrite order by time offset X limit Y to order by time offset X/N limit Y.

(3) Find the minimum value time_min.

(4) Perform secondary query by between, order by time between timeminandtime_i_max.

(5) Set the virtual time_min and find the offset of time_min in each database shard to obtain the global offset of time_min.

(6) After the global offset of time_min is obtained, the global offset X limit Y is naturally obtained.

Before database and table sharding, there is an SQL statement that uses the username to query a user:

select * from user where name = "xxx" and community="other";This SQL statement was modified by a colleague as follows to achieve the effect of dynamic splicing. His original intention was to dynamically remove these query conditions when the name or community was passed in as empty. This writing is also very common in MyBaits configuration files. In most cases, this writing method has no problem because the result set can be controlled. However, as the system ran, the user table had more and more records. When the name and community passed in were all empty, something went wrong:

select * from user where 1=1All records in the database were queried and then loaded into the JVM memory. Due to too many database records, the memory was directly exploded. Memory overflow due to this reason occurs very frequently. For example, when you import Excel files, memory overflow happens.

The common solution is to add the paging feature or verify the required parameters.

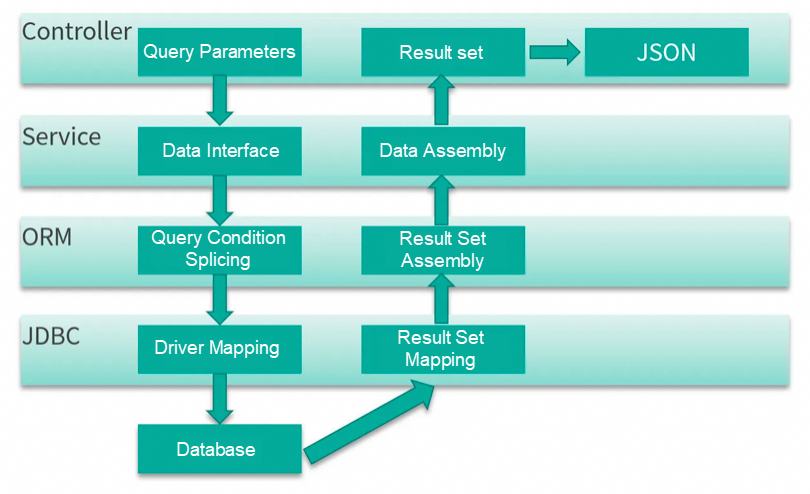

At present, many projects adopt the front-end separation architecture, so the controller layer method generally uses the @ResponseBody annotation to parse the query results and return JSON data. This consumes a lot of memory resources when the data set is very large. If the result set occupies 10 MB of memory before it is parsed into JSON, 20 MB or more of memory may be used during the parsing process.

Therefore, it is necessary to keep the result set compact, which is also necessary for the existence of the Data Transfer Object (DTO). In the Internet environment, high concurrent requests for small result sets are not worrisome, but time-consuming requests for large result sets are terrifying. This is one of the reasons.

The service layer is used to process specific businesses and better meet the functional requirements of businesses. A service may be used by multiple controller layers, or it may use the query results of multiple DAO structures for computing and assembly.

int getUserSize() { List<User> users = dao.getAllUser(); return null == users ? 0 : users.size();}A ticking time bomb is found in the code review. However, it exposes the problem only after the number of data reaches a certain level.

For example, when using Mybatis, there is a batch import service. When MyBatis performs batch insert, it generates memory overflow. In a common situation, this insert operation will not cause additional memory occupation. Finally, the problem is traced through the source code.

This is because when MyBatis circularly processes a batch, the operation object is an array, while when we define the interface, we use lists. When passing in a very large list, it needs to call the toArray method of the list to convert the list into an array (shallow copy). In the final assembly stage, StringBuilder is used to splice the final SQL, so the actual memory used is much more than the list.

It turns out that both insert operations and query actions are prone to problems as long as the data set involved is very large. Due to the introduction of many frameworks in the project, it becomes very difficult to analyze these specific memory footprints. So keeping small batch operations and clean result sets is a very good habit.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.

Interview Questions We've Learned Over the Years: MySQL – Part 1

Interview Questions We've Learned Over the Years: SpringCloud

1,076 posts | 263 followers

FollowAlibaba Cloud Community - May 8, 2024

Alibaba Cloud Community - May 2, 2024

Alibaba Cloud Community - May 1, 2024

Alibaba Cloud Community - May 7, 2024

Alibaba Cloud Community - May 6, 2024

Alibaba Cloud Community - July 29, 2024

1,076 posts | 263 followers

Follow PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn MoreMore Posts by Alibaba Cloud Community