Performance problems are different from bugs. Relatively speaking, bugs are easy to analyze and solve: you can often find the root cause of a bug directly in the application log or, in a distributed service, in the log of a single node. In contrast, the troubleshooting methods required for performance problems are considerably more complicated.

Application performance optimization is a systemic undertaking that requires engineers to possess a great deal of technical knowledge. Even a simple application involves not only the application code but also the container or virtual machine, the operating system, storage, the network, and the file system. Therefore, when an online application has performance problems, we need to consider many different factors.

At the same time, besides some performance problems caused by low-level code issues, many performance problems also lurk deep in the application and are difficult to troubleshoot. To address them, we need to have a working knowledge of the sub-modules, frameworks, and components used by the application as well as some common performance optimization tools.

In this article, I will summarize some of the tools and techniques often used for performance optimization, and through doing so, I will also try to show how performance optimization works. This article will be divided into four parts. The first part will provide an overview about the idea behind performance optimization. The second part will introduce the general process involved with performance optimization and some common misconceptions. Next, the third part will discuss some worthwhile performance troubleshooting tools and common performance bottlenecks you should be aware of. Last, the fourth part will bring together the tools introduced previously to describe some common optimization methods that are focused on improving CPU, memory, network, and service performance.

Note that, unless specified otherwise, thread, heap, garbage collection, and other terms mentioned in this article refer to their related concepts in Java applications.

Application performance problems are different from application bugs. Most bugs are due to code quality problems, which can lead to missing or risky application functions. Once discovered, they can be promptly fixed. However, performance problems can be caused by many factors, including mediocre code quality, rapid business growth, and unreasonable application architecture designs. These problems generally require a series of time-consuming and complicated analysis processes, and many people are simply unwilling to do this work. Therefore, they are often covered up by temporary fixes. For example, if the system usage is high or the thread pool queue on a single host overflows, developers address this situation simply by adding machines to scale out the cluster. If the memory usage is high or out-of-memory (OOM) occurs during peak hours, they tackle this simply by restarting the system.

Temporary fixes are like burying landmines in the application and only partially solve the initial problem. To give one example, in many scenarios, adding hosts alone cannot solve the performance problems of applications. For instance, some latency-sensitive applications require extreme performance optimization for each host. At the same time, adding hosts is prone to resource waste and is not beneficial in the long run. Reasonable application performance optimization provides significant benefits in application stability and cost accounting.

This is what makes performance optimization necessary.

Consider the following situation. Your application has clear performance problems, including a high CPU utilization rate. You are ready to start working out how you can optimize the system, but you may not be well prepared for the potential difficulties involved in optimizing your application. Below are difficulties you will probably face:

1. The performance optimization process is unclear. If we detect a performance bottleneck and set to work to fix it immediately, we may later find that, after all our work, the issue we resolved was only one of the side effects of a greater, underlying cause.

2. There is no clear process that we can use to analyze performance bottlenecks. With the CPU, network, memory, and so many other performance indicators showing problems, we don't know which areas we should focus on or where we should start.

3. Developers often do not know what sort of performance optimization tools are out there. After a problem occurs, the developer team might not know which tool they should use or what the metrics obtained from the tool actually mean.

So far, there is no strictly defined process in the field of performance optimization. However, for most optimization scenarios, the process can be abstracted into the following four steps:

1. Preparation: Here, the main task is to conduct performance tests to understand the general situation of the application, find the general location of the bottlenecks, and identify the optimization objectives.

2. Analysis: Use tools or techniques to provisionally locate performance bottlenecks.

3. Tuning: Perform performance tuning based on the identified bottlenecks.

4. Testing: Perform performance testing on the tuned application and compare the metrics you obtained with the metrics in the preparation phase. If the bottleneck has not been eliminated or the performance metrics do not meet expectations, repeat steps 2 and 3.

These steps are illustrated in the following diagram:

Among the four steps in this process, we will focus on steps 2 and 3 in the next two sections. First, let's take a look at what we need to do during the preparation and testing phases.

The preparation phase is a critical step and cannot be omitted.

First, for this phase, you need to have a detailed understanding of the optimization objects. As the saying goes, the sure way to victory is to know your own strength and that of your enemy.

a. Make a rough assessment of the performance problem: Filter out performance problems caused by basic mistakes in the related business logic. For example, if the log level of an online application is inappropriate, the CPU and disk load may be high in the case of heavy traffic. In this case, you simply need to adjust the log level.

b. Understand the overall architecture of the application: For example, what are the external dependencies and core interfaces of the application, which components and frameworks are used, which interfaces and modules have a high level of usage, and what are the upstream and downstream data links?

c. Understand the server information of the application: For example, you must be familiar with the cluster information of the server, the CPU and memory information of the server, the operating system installed on the server, whether the server is a container or virtual machine, and whether the current application will be disturbed by other workloads if the hosts use hybrid (co-located) deployment.

Second, you need to obtain the benchmark data. You can only tell if you have achieved your final performance optimization goals based on benchmark data and current business indicators.

When we enter the testing phase, we have already provisionally determined the performance bottlenecks of the application and have completed the initial tuning processes. To check whether the tuning is effective, we must perform stress testing on the application under simulated conditions.

Note that Java involves the just-in-time (JIT) compilation process, and therefore warm-up may be required during stress testing.

If the stress test results meet the expected optimization goals or represent a significant improvement compared with the benchmark data, we can continue to use tools to locate more bottlenecks. Otherwise, we need to provisionally rule out the current bottleneck and continue to look for the next variable.
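The comparison between benchmark data and post-tuning results can be sketched as follows. This is only a minimal illustration: the function names, the nearest-rank percentile, and the idea of dropping a fixed number of warm-up samples are assumptions made for the example, not part of any specific stress-testing tool.

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(latencies_ms, warmup=0):
    """Summarize latencies after dropping the first `warmup` samples.

    Dropping early samples is a simple way to keep JIT warm-up
    from distorting the result (the count is an assumption).
    """
    steady = latencies_ms[warmup:]
    if not steady:
        raise ValueError("no samples left after discarding warm-up")
    return {"mean": statistics.mean(steady), "p99": percentile(steady, 99)}


def improvement(baseline, tuned):
    """Relative reduction per metric; a positive value means tuned is faster."""
    return {name: (baseline[name] - tuned[name]) / baseline[name]
            for name in baseline}
```

Calling `improvement(summarize(before, warmup=n), summarize(after, warmup=n))` gives one number per metric that can be checked against the optimization goal set in the preparation phase.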

During performance optimization, taking note of the following precautions can reduce the number of undesired wrong turns.

a. Performance bottlenecks generally present an 80/20 distribution. This means that 80% of performance problems are usually caused by 20% of the performance bottlenecks. The 80/20 principle also indicates that not all performance problems are worth optimizing.

b. Performance optimization is a gradual and iterative process that needs to be carried out step by step and in a dynamic manner. After recording benchmark values, change only one variable at a time. Changing multiple variables at once interferes with both observation and the optimization process.

c. Do not place excessive emphasis on the single-host performance of applications. If the performance of a single host is good, consider it from the perspective of the system architecture. Do not pursue the extreme optimization in a single area, for example, by optimizing the CPU performance and ignoring the memory bottleneck.

d. Selecting appropriate performance optimization tools can give you twice the results with half the effort.

e. Optimize the entire application. The environment in which the application is optimized needs to be isolated from the online system, and a downgrade (fallback) solution should be provided when new code is launched.

Performance optimization seeks to discover the performance bottlenecks of applications and then to apply optimization methods to mitigate the problems causing these bottlenecks. It is difficult to find the exact locations of performance bottlenecks. To quickly and directly locate bottlenecks, we need to possess two things: appropriate tools and performance optimization experience.

So, to do a good job, we must first find the appropriate tools. But, how do we choose the right tools? And, which tools should be used in different optimization scenarios?

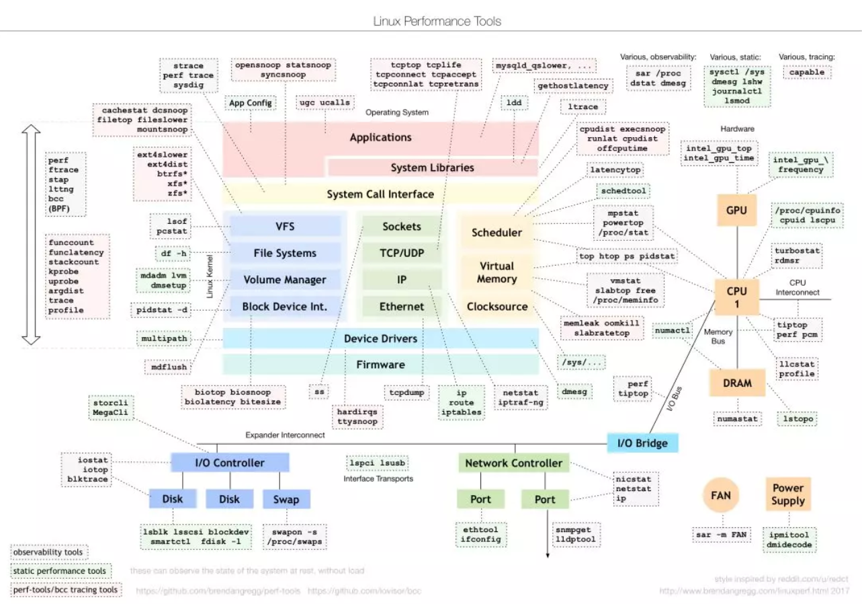

Well, first of all, let's take a look at the "Linux Performance Tools" diagram. Many engineers are probably familiar with this diagram created by Brendan Gregg, a system performance expert. Starting from the subsystems of the Linux kernel, this diagram lists the tools we can use to conduct performance analysis on each subsystem. It covers all aspects of performance optimization, such as monitoring, analysis, and tuning. In addition to this panoramic view, Brendan Gregg also provides separate diagrams for benchmark tools, specifically Linux Performance Benchmark Tools, and performance monitoring tools, Linux Performance Observability Tools. For more information, refer to the descriptions provided on Brendan Gregg's website.

Image source: http://www.brendangregg.com/linuxperf.html?spm=ata.13261165.0.0.34646b44KX9rGc

The preceding figure is a very informative reference for performance optimization. However, when we use it in practice, we may find that it is not the most appropriate reference for two reasons:

1. It assumes a high level of analysis experience. The preceding figure shows performance metrics from the perspective of Linux system resources. This requires the developer to be familiar with the functions and principles of various Linux subsystems. For example, when we encounter a performance problem, we cannot try all the tools under each subsystem. In most cases, we suspect that a subsystem has a problem and then use the tools listed in this diagram to confirm our suspicions. This calls for a good deal of performance optimization experience.

2. The applicability and completeness of the diagram are not great. When you analyze performance problems, bottom-up analysis from the underlying system layer is inefficient. Most of the time, analysis on the application layer is more effective. The Linux Performance Tools diagram only provides a toolkit from the perspective of the system layer. However, we need to know which tools we can use at the application layer and what points to look at first.

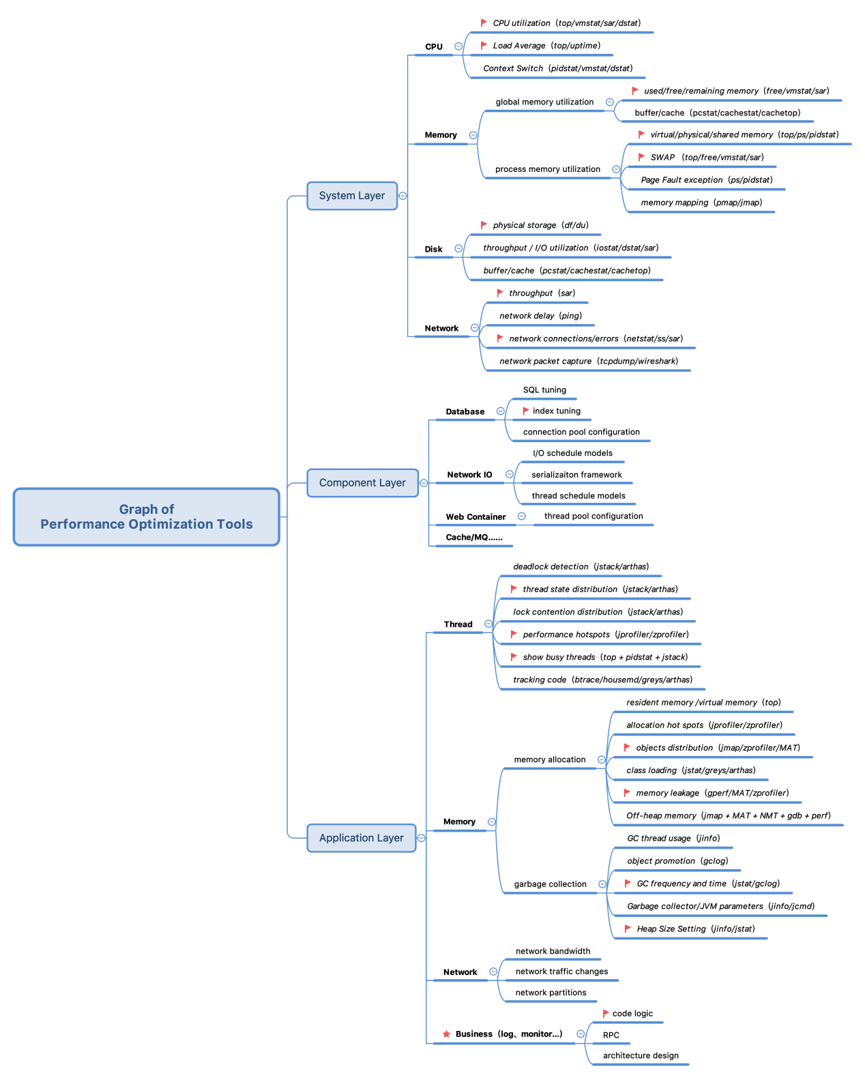

To address these difficulties, a more practical performance optimization tool map is provided below. This map applies to both the system layer and application layer, including the component layer. It also lists the metrics that we need to pay attention to first when analyzing performance problems. The map also highlights the points most likely to produce performance bottlenecks. Note that less-used indicators and tools are not listed in the graph, such as CPU interrupts, inode usage, and I/O event tracing. Troubleshooting these uncommon problems is complicated. So, in this article, we will only focus on the most common problems.

Compared with the preceding Linux Performance Tools diagram, the following figure has several advantages. By combining specific tools with performance metrics, the distribution of performance bottlenecks is described from different levels simultaneously, which makes the map more practical and operational. The tools at the system layer are divided into four parts: CPU, memory, disk, including the file system, and the network. The toolkit is basically the same as that in the Linux Performance Tools diagram. The tools in the component layer and application layer are made up of tools provided by JDK, trace tools, "dump" analysis tools, and profiling tools.

Here, we will not describe the specific usage of these tools in detail. You can use the man command to obtain detailed instructions for using the tools. In addition, you can also use info to query the command manual. info can be understood as a detailed version of man. If the output of man is not easy to understand, you can refer to the info document. Many commands are involved here, and you do not need to memorize them.

First, although the distribution of bottlenecks is described from the perspectives of systems, components, and applications, these three elements complement each other and affect each other in actual operations. The system provides a runtime environment for applications. A performance problem essentially means that system resources have reached their upper limits. This is reflected in the application layer, where the metrics of applications or components begin to decline. Improper use or design of applications and components can also accelerate the consumption of system resources. Therefore, when analyzing the bottleneck point, we need to combine the analysis results from different perspectives, find their commonalities, and reach a final conclusion.

Second, we recommend that you start from the application layer and analyze the most commonly used indicators marked in the map to find the points that are most important, most suspicious, and most likely to impact performance. After reaching preliminary conclusions, go to the system layer for verification. The advantage of this approach is that many performance bottlenecks show up at the system layer as changes in several variables at once. For example, if the GC metric at the application layer is abnormal, it can be easily observed with the tools provided by the JDK. At the system layer, however, the same problem shows up as abnormal CPU utilization and memory indicators, which can confuse the analysis.

Finally, if the bottleneck points present as a multi-variable distribution at both the application layer and the system layer, we recommend that you use tools such as ZProfiler and JProfiler to profile the application. This allows you to obtain the overall performance information of an application. Note that, here, profiling refers to the use of event-based statistics, statistical sampling, or byte-code instrumentation during application runtime. After profiling, we can then collect the application runtime information to perform a dynamic analysis of application behavior. For example, we can sample CPU statistics and bring in various symbol table information to see the code hotspots in an application over a period of time.
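To make the idea of statistical sampling concrete, here is a minimal sketch of how sampled stacks can be aggregated into hotspots. It is not how ZProfiler or JProfiler work internally. It assumes that each sample is a list of frame names with the currently executing frame first, and the function name `hotspots` is hypothetical.

```python
from collections import Counter


def hotspots(samples, top_n=3):
    """Rank the frames that were on top of the stack most often.

    `samples` is a list of stacks; each stack is a list of frame
    names with the currently executing frame first (an assumption).
    Returns (frame, share_of_samples) pairs, most frequent first.
    """
    counts = Counter(stack[0] for stack in samples if stack)
    total = sum(counts.values())
    return [(frame, hits / total) for frame, hits in counts.most_common(top_n)]
```

The more samples are collected over a period of time, the closer each share gets to the fraction of CPU time actually spent in that frame.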

Now, let's go on to discuss the core performance metrics that we need to pay attention to at different analysis levels, and how to preliminarily determine whether the system or application has performance bottlenecks based on these metrics. Then, we will talk about how to confirm bottleneck points, the cause of the bottleneck points, and the relevant optimization methods.

CPU-related metrics are CPU utilization, load average, and context switch. Common tools include top, ps, uptime, vmstat, and pidstat.

top - 12:20:57 up 25 days, 20:49, 2 users, load average: 0.93, 0.97, 0.79

Tasks: 51 total, 1 running, 50 sleeping, 0 stopped, 0 zombie

%Cpu(s): 1.6 us, 1.8 sy, 0.0 ni, 89.1 id, 0.1 wa, 0.0 hi, 0.1 si, 7.3 st

KiB Mem : 8388608 total, 476436 free, 5903224 used, 2008948 buff/cache

KiB Swap: 0 total, 0 free, 0 used. 0 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

119680 admin 20 0 600908 72332 5768 S 2.3 0.9 52:32.61 obproxy

65877 root 20 0 93528 4936 2328 S 1.3 0.1 449:03.61 alisentry_cli

The first line shows the current time, system running time, and the number of currently logged-on users. The three numbers following "load average" represent the average load over the last 1 minute, 5 minutes, and 15 minutes in sequence. Load average refers to the average number of processes per unit time that are in the running state (that is, processes in the R state that are using CPUs or waiting for CPUs) or in the uninterruptible state (D state). This is actually the average number of active processes. It's important to recognize that the CPU load average is not directly related to the CPU utilization.

The third row indicates the CPU utilization metric. You can use man to view the meaning of each column. The CPU utilization shows the CPU usage statistics per unit time and is displayed as a percentage. The calculation method for this is: CPU utilization = 1 - (CPU idle time)/(Total CPU time). Note that the CPU utilization obtained from performance analysis tools is the average CPU usage during the sampling period. Note also that the per-process %CPU value displayed by the top tool adds up the utilization rates of all CPU cores by default. This means that, on an 8-core host, the CPU utilization of a process can reach 800%. You can also use htop or other new tools for the same purpose.
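The formula above can be applied to two snapshots of the aggregate `cpu` line in /proc/stat on Linux. The following is a minimal sketch; the function names are hypothetical, and it assumes the usual field order (user, nice, system, idle, iowait, irq, softirq, steal) while treating only the idle column as idle time, as the formula does.

```python
def parse_cpu_line(line):
    """Parse the aggregate 'cpu' line of /proc/stat into (idle, total) ticks."""
    fields = line.split()
    if fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    # Assumed order: user, nice, system, idle, iowait, irq, softirq, steal.
    # Guest columns are skipped because guest time is already part of user.
    values = [int(v) for v in fields[1:9]]
    return values[3], sum(values)


def cpu_utilization(before, after):
    """CPU utilization = 1 - idle_delta / total_delta between two snapshots."""
    idle0, total0 = parse_cpu_line(before)
    idle1, total1 = parse_cpu_line(after)
    total_delta = total1 - total0
    if total_delta <= 0:
        return 0.0
    return 1.0 - (idle1 - idle0) / total_delta
```

Because the result is computed from the difference between two snapshots, it is an average over the sampling interval, which matches the note above about sampling periods.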

Use the vmstat command to view the number of context switches. As shown in the following output, a set of data is printed every 1 second:

$ vmstat 1

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

0 0 0 504804 0 1967508 0 0 644 33377 0 1 2 2 88 0 9

The context switch (cs) metric in the above output indicates the number of context switches per second. Based on different scenarios, CPU context switches can be divided into interrupt context switches, thread context switches, and process context switches. However, in all of these cases, excessive context switching consumes CPU resources in the storage and recovery of data such as registers, kernel stacks, and virtual memory. As such, the actual running time of the process is shortened, resulting in a significant reduction in the overall performance of the system. In the vmstat output, us and sy, which represent the user-mode and kernel-mode CPU utilization, are important references.

The vmstat output only reflects the overall context switching situation of the system. To view the context switching details of each process, such as voluntary and involuntary switching, you need to use pidstat. This command can also be used to view the user-mode and kernel-mode CPU utilization of a process.
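When vmstat output is collected by a script, the columns can be matched to their names as in the following sketch. The function name is hypothetical, and the sketch assumes that the column-name line and a data line are passed in as plain strings.

```python
def parse_vmstat(header_line, data_line):
    """Map vmstat column names (r, b, ..., cs, us, sy, ...) to integer values."""
    names = header_line.split()
    values = [int(v) for v in data_line.split()]
    if len(names) != len(values):
        raise ValueError("header and data line have different column counts")
    return dict(zip(names, values))


# The column names and data row from the vmstat output shown earlier.
HEADER = "r b swpd free buff cache si so bi bo in cs us sy id wa st"
ROW = "0 0 0 504804 0 1967508 0 0 644 33377 0 1 2 2 88 0 9"
row = parse_vmstat(HEADER, ROW)
print(row["cs"], row["us"], row["sy"])
```

Keeping each row as a dictionary makes it easy to track cs, us, and sy over time instead of reading them off a single line.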

1. CPU utilization: If we find that the CPU utilization of the system or application process remains very high, such as more than 80% for a single core, for a certain period of time, we need to be on guard. We can use the jstack command multiple times to dump the application thread stack in order to view the hotspot code. For non-Java applications, we can directly use perf for CPU sampling and then perform the offline analysis of the sampled data to obtain CPU execution hotspots. Java applications require stack information mapping for symbol tables, and therefore we cannot directly use perf to obtain the result.

2. CPU load average: When the load average is higher than 70% of the number of CPU cores, this means that bottlenecks occur in the system. There are many possible causes of high CPU loads. We will not go into detail here. You must note that monitoring the trends of load average changes makes it easier to locate problems. Sometimes, large files are loaded, which leads to an instantaneous increase in the load average. If the load averages for 1 minute, 5 minutes, and 15 minutes do not differ much, the system load is stable and we do not need to look for short-term causes. If the 1-minute value is noticeably higher than the 5-minute and 15-minute values, the load is increasing gradually, and we need to switch our attention to the overall performance.

3. CPU context switching: There is no specific empirical value recommended for context switching. This metric can be in the thousands or tens of thousands. The specific metric value depends on the CPU performance of the system and the current health of the application. However, if the number of system or application context switches increases by an order of magnitude, then a performance problem is very likely. One possible sign is an increase in involuntary context switches, which would indicate that there are too many threads and that they are competing for CPUs.

These three metrics are closely related. Frequent CPU context switching may lead to an increased average load. The next section describes how to perform application tuning based on the relationships among the three indicators.
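The load average checks described above can be sketched as follows. The 70% ratio comes from the text. The 20% tolerance used to decide whether the three values "do not differ much" and the function names are assumptions made for illustration.

```python
import re

_LOAD_RE = re.compile(r"load averages?:\s*([\d.]+),?\s+([\d.]+),?\s+([\d.]+)")


def parse_load_average(line):
    """Extract the 1-, 5-, and 15-minute load averages from a top/uptime line."""
    match = _LOAD_RE.search(line)
    if match is None:
        raise ValueError("no load average found in line")
    return tuple(float(v) for v in match.groups())


def assess_load(load1, load5, load15, cores, ratio=0.7, tolerance=0.2):
    """Flag a high load and classify the short-term trend.

    `tolerance` is an assumed threshold for how far the 1-minute value
    must be from the 15-minute value before the trend counts as a change.
    """
    if load1 > load5 > load15 and load1 > load15 * (1 + tolerance):
        trend = "rising"
    elif load1 < load5 < load15 and load1 < load15 * (1 - tolerance):
        trend = "falling"
    else:
        trend = "stable"
    return {"overloaded": load1 > ratio * cores, "trend": trend}
```

For the top output shown earlier (0.93, 0.97, 0.79), an 8-core host would be classified as not overloaded with a stable trend.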

Some CPU changes can also be detected from the thread perspective, but we must remember that thread problems are not only related to CPUs. The main thread-related metrics are as follows, and all of them can be obtained through the jstack tool that comes with JDK:

To determine whether threads are behaving abnormally, you'll need to do the following:

1. Check whether the total number of threads is too high. Too many threads cause frequent context switching in the CPU. At the same time, these threads consume a great deal of memory. The appropriate number of threads is determined by the application itself and the host configurations.

2. Check whether the thread status is abnormal. Check whether there are too many waiting or blocked threads. This occurs when too many threads are set or the competition for locks is fierce. In addition, perform comprehensive analysis based on the application's internal lock usage.

3. Check whether some threads consume a large amount of CPU resources based on the CPU utilization.
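The first two checks can be automated by counting thread states in a jstack dump, as in the sketch below. It relies on the `java.lang.Thread.State:` lines that jstack prints for each Java thread. The function names and the idea of a single "stalled" ratio are assumptions made for illustration.

```python
import re
from collections import Counter

_STATE_RE = re.compile(r"java\.lang\.Thread\.State:\s+(\w+)")


def thread_state_counts(dump_text):
    """Count threads per state (RUNNABLE, BLOCKED, WAITING, ...) in a dump."""
    return Counter(_STATE_RE.findall(dump_text))


def stalled_ratio(counts):
    """Share of threads that are blocked or waiting (0.0 for an empty dump)."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    stalled = counts["BLOCKED"] + counts["WAITING"] + counts["TIMED_WAITING"]
    return stalled / total
```

What counts as "too many" threads or "too many" waiting threads still depends on the application and the host, so the numbers are best compared across several dumps of the same application.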

Memory-related metrics are listed below, and common analysis tools include top, free, vmstat, pidstat, and JDK tools.

1. System memory usage indicators, including the free memory, used memory, available memory, and cache or buffer.

2. Virtual memory, resident memory, and shared memory for processes, including Java processes.

3. The number of page faults of the process, including major page faults and minor page faults.

4. Memory size and Swap parameter configurations for swap-in and swap-out.

5. JVM heap allocation and JVM startup parameters.

6. JVM heap recycling and Garbage Collection conditions.

free allows you to view the system memory usage and Swap partition usage. The top tool can be used for each process. For example, you can use the top tool to view the resident memory size (RES) of Java processes. Together, both tools can cover most memory metrics. The following is the output of the free command:

$ free -h

total used free shared buff/cache available

Mem: 125G 6.8G 54G 2.5M 64G 118G

Swap: 2.0G 305M 1.7G

We will not describe each column of the above output here, because the columns are relatively easy to understand.

Next, let's take a look at the swap and buff/cache metrics.

Swap uses a local file or disk space as the memory and includes two processes: swap-out and swap-in. Swap needs to read data from and write data to disks, and therefore its performance is not very high. In fact, we recommend that you disable Swap for most Java applications, such as ElasticSearch and Hadoop, because memory costs have been decreasing. This is also related to the JVM garbage collection process. The JVM traverses all the heap memory used during garbage collection. If this memory is swapped out, disk I/O occurs during traversal. Increases in the Swap partition are generally strongly correlated to the disk usage. During specific analysis, we need to comprehensively analyze the cache usage, the "swappiness" threshold, and the activity of anonymous pages and file pages.

Buff/cache indicates the sizes of the cache and buffer. A cache is a space for temporarily stored data generated when a file is read from or written to a disk. It is a file-oriented storage object. You can use cachestat to view the read and write cache hits for the entire system and use cachetop to view the cache hits of each process. A buffer is a temporary data storage for data written to a disk or directly read from a disk. It is intended for block devices. The free command outputs the total of these two metrics. You can use the vmstat command to distinguish between the cache and the buffer and see the size of the Swap partitions swapped in and out.

Now that we understand the common memory metrics, here are some common memory problems to look out for:

a. Insufficient free or available system memory. This means that a process occupies too much memory, the system itself does not have enough memory, or there is a memory overflow.

b. Memory reclamation exceptions. This may be a memory leak, where memory usage continues to rise over a period of time, or an abnormal garbage collection frequency.

c. Excessive cache usage during reading or writing of large files, and a low cache hit ratio.

d. Too many page faults. This is probably due to frequent I/O reads.

e. Swap partition usage exceptions. This would be likely due to high consumption.

Now, we need to analyze the memory abnormalities:

1. Run free or top to view the global memory usage, such as the system memory usage, Swap partition memory usage, and cache/buffer usage, to initially determine the location of the memory problem, such as in the process memory, cache/buffer, or Swap partition.

2. Run vmstat to check whether the memory usage has been increasing, run jmap to regularly compile statistics on the memory distribution of objects to determine whether memory leaks exist, and run cachetop to locate the root cause of a buffer increase.

Consider this example. If the cache or buffer usage is low, after the impact of the cache or buffer on memory is excluded, run vmstat or sar to observe the memory usage trend of each process. Then, if you find that the memory usage of a process continues to rise, for a Java application, you can use jmap, VisualVM, heap dump analysis, or other tools to observe the memory allocation of objects. Alternatively, you can run jstat to observe the memory changes of applications after garbage collection. Next, considering the business scenario, locate the problem, such as a memory leak, an improper garbage collection parameter configuration, or abnormal service code.
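One simple way to decide whether memory usage "continues to rise" is to look at heap usage sampled right after garbage collections, for example the old-generation usage reported by jstat. The following is only a heuristic sketch: the function name, the minimum number of samples, and the 20% growth threshold are all assumptions, and a positive result is a reason to take a heap dump, not proof of a leak.

```python
def looks_like_leak(post_gc_usage_kb, min_samples=5, min_growth=0.2):
    """Heuristic: usage after GC keeps rising and has grown noticeably.

    `post_gc_usage_kb` is a list of heap usage values sampled after
    successive garbage collections, oldest first (an assumption).
    """
    if len(post_gc_usage_kb) < min_samples:
        return False  # too few samples to call it a trend
    pairs = zip(post_gc_usage_kb, post_gc_usage_kb[1:])
    rising = all(later > earlier for earlier, later in pairs)
    first, last = post_gc_usage_kb[0], post_gc_usage_kb[-1]
    grew = first > 0 and (last - first) / first >= min_growth
    return rising and grew
```

A sawtooth pattern, where usage drops back to roughly the same level after each collection, returns False, which matches the behavior of a healthy heap.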

When analyzing disk-related issues, we can generally assume these issues have something to do with the file system. Therefore, we do not distinguish between disk-related and file system related issues. The metrics listed below are related to disks and file systems. iostat and pidstat are common monitoring tools. The former applies to the entire system, whereas the latter can monitor the I/O of specific processes.

a. Disk I/O utilization: indicates the percentage of time a disk takes to process I/O.

b. Disk throughput: indicates the amount of data read or written per second, in KB.

c. I/O response time: indicates the interval between sending an I/O request and receiving the first response to this request, including the waiting time in the queue and the actual processing time.

d. IOPS (Input/Output Operations Per Second): indicates the number of I/O requests per second.

e. I/O wait queue size: indicates the average I/O queue length. Generally, the shorter the better.

When you use iostat, the output is as follows:

$ iostat -dx

Linux 3.10.0-327.ali2010.alios7.x86_64 (loginhost2.alipay.em14) 10/20/2019 _x86_64_ (32 CPU)

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

sda 0.01 15.49 0.05 8.21 3.10 240.49 58.92 0.04 4.38 2.39 4.39 0.09 0.07

In the preceding output, %util indicates the disk I/O utilization, which, like the CPU utilization, may exceed 100% in the case of parallel I/O. rkB/s and wkB/s respectively indicate the amount of data read from and written to the disk per second, namely, the throughput, in KB. The metrics for the disk I/O processing time are r_await and w_await, which respectively indicate the average response time of read requests and write requests. svctm indicates the average time required to process I/O, but this metric has been deprecated and has no practical significance. r/s and w/s are the IOPS metrics, indicating the number of read requests and write requests sent to the disk per second, respectively. avgqu-sz (named aqu-sz in newer versions of iostat) indicates the length of the wait queue.

Most of the output of pidstat is similar to that of iostat, the only difference being that we can view the I/O status of each process in real time.

Next are the steps for determining whether disk metrics are abnormal or not.

A performance bottleneck in disk I/O is generally indicated when the disk I/O utilization exceeds 80% for a sustained period of time or when the response time is too long. As a reference, the response time normally ranges from 0.0x to 1.x milliseconds for SSDs and from 5 to 10 milliseconds for mechanical disks.

If %util is large while rkB/s and wkB/s are small, a large number of random disk reads and writes exist. In this case, we recommend that you optimize random reads and writes to sequential reads and writes. You can use strace or blktrace to check the I/O continuity and determine whether read and write requests are sequential. For random reads and writes, pay attention to the IOPS metric. For sequential reads and writes, pay attention to the throughput metric.

Last, if the avgqu-sz value is large, it indicates that many I/O requests are waiting in the queue. Generally, if the queue length of a disk exceeds 2, this disk is considered to have I/O performance problems.
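The thresholds above (80% utilization and a queue length of 2) can be checked against a parsed `iostat -dx` row, as in the following sketch. The function names are hypothetical. A single row cannot show that a value is sustained, so in practice the check would be repeated over several samples.

```python
def parse_iostat_row(header_line, data_line):
    """Return (device, {column: value}) for one row of `iostat -dx` output."""
    names = header_line.split()[1:]  # skip the "Device:" column label
    fields = data_line.split()
    device, values = fields[0], [float(v) for v in fields[1:]]
    if len(names) != len(values):
        raise ValueError("header and data row have different column counts")
    return device, dict(zip(names, values))


def disk_warnings(metrics, util_limit=80.0, queue_limit=2.0):
    """List the rule-of-thumb thresholds that this sample exceeds."""
    warnings = []
    if metrics.get("%util", 0.0) > util_limit:
        warnings.append("high I/O utilization")
    # Older iostat versions call the column avgqu-sz, newer ones aqu-sz.
    queue = metrics.get("avgqu-sz", metrics.get("aqu-sz", 0.0))
    if queue > queue_limit:
        warnings.append("long I/O wait queue")
    return warnings
```

For the sda row shown earlier (%util of 0.07 and avgqu-sz of 0.04), `disk_warnings` returns an empty list.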

The term "network" is broad and may refer to several different things. Accordingly, different metrics are used to measure performance at the application layer, transport layer, network layer, and network interface layer. Here, we use "network" to refer to the network part at the application layer. The common network indicators are as follows:

a. Network bandwidth: This refers to the maximum transmission rate of the link.

b. Network throughput: This refers to the volume of data successfully transferred per unit time.

c. Network latency: This refers to the amount of time from sending a request to receiving the first remote response.

d. The number of network connections and errors.

Generally, the following network bottlenecks can be found at the application layer:

a. The network bandwidth of the cluster or data center is saturated. This prevents the application QPS or TPS from increasing.

b. The network throughput is abnormal. If a large amount of data is transmitted through the interface, the bandwidth usage is high.

c. An exception or error occurred in the network connection.

d. A network partition occurred.

Bandwidth and network throughput are metrics that apply to the entire application and can be directly obtained by monitoring the system. If these metrics increase significantly over a period of time, a network performance bottleneck will occur. For a single host, sar can be used to obtain the network throughput of network interfaces and processes.

We can use ping or hping3 to check for network partitioning and specific network latency. For applications, we pay more attention to end-to-end latency. We can obtain the latency information of each process through the trace log output after middleware tracking.

We can use netstat, ss, and sar to obtain the number of network connections or network errors. Excessive network connections result in significant overhead because they occupy both file descriptors and the cache. Therefore, the system can only support a limited number of network connections.

We can see that several tools, such as top, vmstat, and pidstat, are commonly used to analyze the CPU, memory, and disk performance metrics. Some common tools are given below:

a. CPU: top, vmstat, pidstat, sar, perf, jstack, and jstat.

b. Memory: top, free, vmstat, cachetop, cachestat, sar, and jmap.

c. Disk: top, iostat, vmstat, pidstat, and du/df.

d. Network: netstat, sar, dstat, and tcpdump.

e. Application: profiler and dump analysis.

Most of the above tools are used to view system-layer metrics. At the application layer, in addition to a series of tools provided by JDK, some commercial products are available, such as gceasy.io for analyzing garbage collector logs and fastthread.io for analyzing thread dump logs.

To troubleshoot online exceptions for Java applications or analyze application code bottlenecks, we can also use Alibaba's open-source Arthas tool. So, let's take a closer look at this powerful tool.

Alibaba Cloud's Arthas is mainly used for the real-time diagnosis of online applications and can be used to solve problems such as online application exceptions that need to be analyzed and located online. At the same time, some tracing tools for method calls provided by Arthas are also very useful for troubleshooting problems such as slow queries. Arthas has the following features:

Note that performance tools are only a means to solve performance problems. You only need to understand the general usage of the common tools, and we advise against putting too much effort into learning tools that you do not actually need.

After detecting abnormal metrics through tools and initially locating the bottlenecks, you need to confirm and address the problem. Here, we provide some performance tuning routines that you can learn from. We will look at how to determine the most important performance metrics, locate performance bottlenecks, and finally perform performance tuning. The following sections are divided into code, CPU, memory, network, and disk performance optimization practices. For each optimization point, we will present a system routine to help you move from theory to practice.

When we encounter performance problems, the first thing to do is check whether the problem is related to business code. We may not be able to solve the problem by reading the code, but we can eliminate some low-level errors related to the business code by examining logs or the code. The best ground for performance optimization is within the application.

For example, we can check the business log to see whether there are a large number of errors in the log content. Most performance problems at the application layer and the framework layer can be found in the log. If the log level is not properly set, this can result in chaotic online logging. In addition, we must check the main logic of the code, such as the unreasonable use of for loops, NPE, regular expressions, and mathematical calculations. In these cases, you can fix the problem simply by modifying the code.

It's important to know that you should not directly associate performance optimization with caching, asynchronization, and JVM optimization without analysis. Complex problems sometimes have simple solutions. The 80/20 principle is still valid in the field of performance optimization. Understanding some basic common code mistakes can accelerate the problem analysis process. When working to address bottlenecks in the CPUs, memory, or JVM, you may be able to find a solution in the code.

Next, we will look at some common coding mistakes that can cause performance problems.

a. Use split() and replaceAll() with caution, and remember that regular expressions must be pre-compiled.

b. If String.intern() is used in an earlier JDK version, specifically Java 1.6 and earlier versions, it may cause memory overflow in the method area (permanent generation). In later versions of JDK, if the string pool is too small and too many strings are cached, the performance overhead will be high.

c. Use ThreadPoolExecutor to manually create a thread pool based on your specific business scenario. Specify the number of threads and the queue size according to different tasks to avoid the risk of resource exhaustion. Consistent thread naming facilitates subsequent troubleshooting.

d. Choose a concurrent container that matches the scenario, such as CopyOnWriteArrayList. ConcurrentHashMap is used for scenarios with small access data volumes, low data consistency requirements, and infrequent changes. ConcurrentSkipListMap is used for scenarios with large access data volumes, frequent reads and writes, and low data consistency requirements.

e. Use LongAdder instead of AtomicLong for counting and use ThreadLocalRandom instead of Random.

These are merely some of the potential code optimizations. We can also extract some common optimization ideas from these code optimizations:

a. Trade space for time: Use memory or disks instead of the more valuable CPU or network resources, for example, by using a cache.

b. Trade time for space: Conserve memory or network resources at the expense of some CPU resources. For example, we can split a large network transmission into multiple smaller transmissions.

c. Adopt other methods, such as parallelization, asynchronization, and pooling.
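A few of the coding suggestions above can be sketched in Java as follows. This is a minimal illustration rather than a recommended configuration: the class name `CodingTipsSketch`, the `order-worker` thread name prefix, the pool size, the queue size, and the choice of `CallerRunsPolicy` are all assumptions made for the example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.LongAdder;
import java.util.regex.Pattern;

final class CodingTipsSketch {
    // Compiled once and reused; String.split(regex) may compile the pattern again on every call.
    private static final Pattern COMMA = Pattern.compile("\\s*,\\s*");

    // LongAdder spreads updates across internal cells, which suits write-heavy counters.
    static final LongAdder PROCESSED = new LongAdder();

    static String[] splitFields(String line) {
        return COMMA.split(line);
    }

    // Explicit thread names and a bounded queue; the sizes passed in are illustrative only.
    static ThreadPoolExecutor newWorkerPool(String namePrefix, int threads, int queueSize) {
        AtomicInteger seq = new AtomicInteger();
        ThreadFactory factory = r -> {
            Thread t = new Thread(r, namePrefix + "-" + seq.incrementAndGet());
            t.setDaemon(true);
            return t;
        };
        return new ThreadPoolExecutor(
                threads, threads, 60L, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(queueSize),        // bounded: avoids unbounded memory growth
                factory,
                new ThreadPoolExecutor.CallerRunsPolicy()); // when full, the submitting thread runs the task
    }

    public static void main(String[] args) throws InterruptedException {
        ThreadPoolExecutor pool = newWorkerPool("order-worker", 4, 100);
        for (int i = 0; i < 1000; i++) {
            pool.execute(() -> PROCESSED.add(splitFields("a, b ,c").length));
        }
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        System.out.println("fields processed: " + PROCESSED.sum());
    }
}
```

With named threads, a later jstack dump shows at a glance which pool a busy or blocked thread belongs to.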

We should also pay more attention to the CPU load. High CPU utilization alone is generally not a problem. However, CPU load is one key factor for determining the health of system computing resources.

High CPU utilization together with a high load average is common in CPU-intensive applications. It means that a large number of threads are in the ready state and I/O is low. Common situations that consume a large amount of CPU resources include:

Common troubleshooting approaches for high CPU usage: If we use jstack to print thread stacks more than five times, we can usually locate the thread stacks with high CPU consumption. Alternatively, we can use profiling, specifically event-based sampling or tracking, to obtain an on-CPU flame graph for the application over a period of time, allowing us to quickly locate the problem.
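When attaching jstack or a profiler is inconvenient, a rough in-process view of the same information can be obtained through the standard `java.lang.management` API. The sketch below is an alternative illustration, not the jstack workflow itself; the class name `BusyThreadsSketch` and its `Row` holder are hypothetical. The reported CPU time is cumulative since each thread started, so in practice two samples taken a few seconds apart would have to be compared to see which threads are busy right now.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class BusyThreadsSketch {
    /** One row per live thread: name, state, and accumulated CPU time in milliseconds. */
    static final class Row {
        final String name;
        final Thread.State state;
        final long cpuMillis;

        Row(String name, Thread.State state, long cpuMillis) {
            this.name = name;
            this.state = state;
            this.cpuMillis = cpuMillis;
        }
    }

    static List<Row> topThreadsByCpu(int limit) {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        List<Row> rows = new ArrayList<>();
        // Per-thread CPU timing is optional for a JVM and can be disabled.
        if (!mx.isThreadCpuTimeSupported() || !mx.isThreadCpuTimeEnabled()) {
            return rows;
        }
        for (long id : mx.getAllThreadIds()) {
            long cpuNanos = mx.getThreadCpuTime(id); // -1 if the thread is no longer alive
            ThreadInfo info = mx.getThreadInfo(id);  // null if the thread is no longer alive
            if (cpuNanos < 0 || info == null) {
                continue;
            }
            rows.add(new Row(info.getThreadName(), info.getThreadState(), cpuNanos / 1_000_000));
        }
        rows.sort(Comparator.comparingLong((Row r) -> r.cpuMillis).reversed());
        return new ArrayList<>(rows.subList(0, Math.min(limit, rows.size())));
    }

    public static void main(String[] args) {
        for (Row r : topThreadsByCpu(10)) {
            System.out.printf("%-40s %-15s %d ms%n", r.name, r.state, r.cpuMillis);
        }
    }
}
```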

Another possible situation is that frequent garbage collection, including Young garbage collection, Old garbage collection, and Full garbage collection, occurs in the application, which in turn increases the CPU utilization and load. For troubleshooting purposes, use jstat -gcutil to continuously output the garbage collection count and time statistics for the current application. Frequent garbage collection increases the CPU load and generally results from insufficient available memory. So, for this, run the free or top command to view the available memory of the current host.

If CPU utilization is too high, a bottleneck may occur in the CPU itself. In addition, we can use vmstat to view detailed CPU utilization statistics. A high user-mode CPU utilization (us) indicates that user-mode processes occupy a large amount of the CPU. If this value is greater than 50% for a long time, look for performance problems in the application itself. High kernel-mode CPU utilization (sy) indicates that the kernel-mode consumes a large amount of CPU resources. If this is the case, then we recommend that you check the performance of kernel threads or system calls. If the value of us + sy is greater than 80%, more CPUs are required.

If CPU utilization is not high, it means that our application is not busy with computing but running other tasks. Low CPU utilization and high load average is common in I/O-intensive processes. After all, the load average is the sum of processes in the R state and D state. If R-state processes are removed, we are left with only D-state processes. The D-state is usually caused by waiting for I/O, such as disk I/O and network I/O.

Troubleshooting and verification methods: Use vmstat 1 to regularly output system resource usage. Observe the value of the %wa(iowait) column, which identifies the percentage of the disk I/O wait time in the CPU time slice. If the value is greater than 30%, the degree of disk I/O wait is severe. This may be caused by a large volume of random disk access or direct disk access, such as when no system cache is used. The disk may also have a bottleneck. This can be verified by using the iostat or dstat output. For example, when %wa(iowait) rises and there is a large number of disk read requests, the problem may be caused by disk reads.

In addition, time-consuming network requests (network I/O), such as slow MySQL queries and RPC calls that fetch interface data, can also increase the average CPU load. To troubleshoot this problem, we need to comprehensively analyze the upstream and downstream dependencies of the application and the trace logs produced by middleware tracking.

Use vmstat to check the number of system context switches and then use pidstat to check the voluntary context switching (cswch) and involuntary context switching (nvcswch) situation of the process. Voluntary context switching is caused by the conversion of the internal thread status of the application, such as calling sleep(), join(), wait(), or using a lock or synchronized lock structure. Involuntary context switching is triggered when the thread uses up the allocated time slices or is scheduled by the scheduler due to the execution priorities.

If the number of voluntary context switches is high, threads are waiting to acquire resources, which can be due to insufficient I/O, memory, or other system resources. If the number of involuntary context switches is high, the cause may be an excessive number of threads in the application, which leads to fierce competition for CPU time slices and frequent forcible scheduling by the system. Such a situation can be confirmed from jstack's thread count and thread status distribution.

Memory is divided into system memory and process memory, and the memory of Java application processes falls under the latter. Generally, most memory problems occur in the process memory, and relatively few bottlenecks are caused by system resources. For Java processes, the built-in memory management capability automatically solves two problems: how to allocate memory to objects and how to reclaim the memory allocated to objects. The core of memory management is the garbage collection mechanism.

Although garbage collection can effectively prevent memory leakage and ensure the effective use of memory, it is not a universal solution. Unreasonable parameter configurations and code logic still cause a series of memory problems. In addition, early garbage collectors had poor functionality and collection efficiency. Too many garbage collection parameter settings were highly dependent on the tuning experience of developers. For example, improper setting of the maximum heap memory may cause a series of problems, such as heap overflow and heap shock.

Let's look at some common approaches to solving memory problems.

Java applications generally monitor the memory level of a single host or cluster. If the memory usage on a single host is greater than 95% or the cluster memory usage is greater than 80%, this might indicate a memory problem. Note that, here, the memory level is for the system memory.

Except in some extreme cases, insufficient system memory shows up as high memory usage, and the cause is usually the Java application. When we run the top command, we can see the actual memory usage of the Java application process. Here, RES indicates the resident memory usage of the process, and VIRT indicates the virtual memory usage of the process. The memory consumption relationship is as follows: VIRT > RES > heap size that is actually used by the Java application. In addition to heap memory, the memory usage of the Java process as a whole also includes the method area, metaspace, and JIT cache. The main components are: memory usage of Java applications = Heap + Code cache + Metaspace + Symbol tables + Thread stacks + Direct buffers + JVM structures + Mapped files + Native libraries, and so on.

You can run the jstat -gc command to view the memory usage of the Java process. The output shows the usage of each partition of the current heap memory and of the metaspace. The statistics and usage of direct buffers can be obtained by using Native Memory Tracking (NMT, introduced in HotSpot VM for Java 8). The memory space used by thread stacks is easily overlooked. Thread stack memory is allocated lazily, so the full -Xss size is not committed up front, but too many threads can still cause unnecessary memory consumption. As such, we can use the jstackmem script to count the overall thread stack usage.
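The same breakdown can also be read from inside the JVM through the standard `java.lang.management` beans. The following is a minimal sketch, assuming a HotSpot-style JVM; the class name `MemoryPoolsSketch` is hypothetical, and the pool names that are printed depend on the garbage collector in use. It does not replace NMT, because it does not cover thread stacks or JVM-internal structures.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;
import java.util.LinkedHashMap;
import java.util.Map;

final class MemoryPoolsSketch {
    /** Returns used bytes per memory pool (heap and non-heap) and per NIO buffer pool. */
    static Map<String, Long> usedBytesByPool() {
        Map<String, Long> used = new LinkedHashMap<>();
        // Heap partitions (for example Eden, Survivor, Old) plus Metaspace and the code cache.
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage(); // null if the pool is no longer valid
            if (usage != null) {
                used.put(pool.getType() + " / " + pool.getName(), usage.getUsed());
            }
        }
        // Direct and mapped buffers live outside the Java heap.
        for (BufferPoolMXBean buf : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            used.put("Buffer pool / " + buf.getName(), buf.getMemoryUsed());
        }
        return used;
    }

    public static void main(String[] args) {
        usedBytesByPool().forEach((name, bytes) ->
                System.out.printf("%-50s %,d KB%n", name, bytes / 1024));
    }
}
```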

Troubleshooting methods for insufficient system memory:

a. Run the free command to view the currently available memory space. Then, run the vmstat command to view the specific memory usage and memory growth trends. At this stage, you can generally locate the processes that consume the most memory.

b. Use pcstat, cachetop, and slabtop to analyze the specific usage of the cache or buffer.

A memory overflow occurs when an application creates an object instance and the required memory space is greater than the available memory space of the heap. There are many types of memory overflows, which are usually indicated by the OutOfMemoryError keyword in the error log. Below are the common memory overflow types and their corresponding analysis methods:

1. java.lang.OutOfMemoryError: Java heap space. The cause of this error is that objects cannot be allocated in the heap (the young generation and the old generation), the references of some objects have not been released for a long time and the garbage collector cannot reclaim these objects, or a large number of Finalizer objects are used and these objects are not in the garbage collection reclamation period. Generally, heap overflow is caused by memory leakage. If you confirm that no memory leak occurs, the solution is to increase the heap memory.

2. java.lang.OutOfMemoryError: GC overhead limit exceeded. The cause of this error is that the JVM spends more than 98% of its time on garbage collection but recovers less than 2% of the heap memory. This is generally due to memory leakage or insufficient heap space.

3. java.lang.OutOfMemoryError: Metaspace or java.lang.OutOfMemoryError: PermGen space. For this type of error, check whether dynamically loaded classes are not being unloaded promptly, whether a large number of strings are held in the string constant pool, and whether the configured permanent generation or metaspace is too small.

4. java.lang.OutOfMemoryError: unable to create new native thread. The cause of this error is that the virtual machine cannot request sufficient memory space when expanding the stack space. You can reduce the size of each thread stack and the total number of threads for the application. In addition, the total number of processes or threads created in the system is limited by the system's idle memory and the operating system, so we need to carefully inspect the situation. Note that this stack overflow is different from StackOverflowError. The latter occurs because the method call depth is too great and the allocated stack memory is insufficient to create a new stack frame.

Other OutOfMemoryError types, such as Swap partition overflow, local method stack overflow, and array allocation overflow, are not described here because they are less common.

Java memory leakage is a nightmare for developers. Memory leakage is different from memory overflow, which is simple and easy to detect. Memory leakage occurs when the memory usage increases and the response speed decreases after an application runs for a period of time. This process eventually causes the process to freeze.

Java memory leaks may result in insufficient system memory, suspended processes, and OOM. For this, there are only two troubleshooting methods:

a. Use jmap to regularly output heap object statistics and locate objects that continue to increase in number and size.

b. Use a profiler tool to profile the application and find memory allocation hotspots.

In addition, as the heap memory continues to grow, we recommend that you dump a snapshot of the heap memory for subsequent analysis. Although snapshots are instantaneous values, they do have a certain significance.

Garbage collection metrics are important for measuring the health of Java process memory usage. Core garbage collection metrics include the following: the frequency and count of GC pauses, including MinorGC and MajorGC, and the memory details for each collection. The former can be directly obtained by using the jstat tool, but the latter requires an analysis of garbage collection logs. Note that FGC/FGCT in the jstat output indicates the number and time of GC pauses (Stop-the-World) during old-generation GC. For example, for the CMS garbage collector, the FGC value increases by two each time old-generation garbage is reclaimed, because the initial marking and re-marking stages are both Stop-the-World pauses.

So, when is garbage collection tuning required? The answer to this depends on the specific situation of the application, such as its response time requirements, throughput requirements, and system resource restrictions. Based on our experience, garbage collection tuning is required if the garbage collection frequency and time greatly increase, the average GC pause time exceeds 500 milliseconds, or Full GC runs more often than once per minute.
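As a rough illustration of how such thresholds could be checked from inside the application, the sketch below reads the cumulative collection count and time from the standard `GarbageCollectorMXBean` interface and derives an average time per collection. The class name `GcStatsSketch` and the helper `averageMillisPerCollection` are hypothetical. The reported time is an approximate accumulated value and, for concurrent collectors, is not necessarily pure pause time, so garbage collection logs remain the more precise source.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

final class GcStatsSketch {
    /** Average milliseconds per collection; 0 when no collection has been recorded. */
    static double averageMillisPerCollection(long count, long totalMillis) {
        return count <= 0 ? 0.0 : (double) totalMillis / count;
    }

    public static void main(String[] args) {
        // One bean per collector, typically one for the young and one for the old generation.
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long count = gc.getCollectionCount(); // -1 if undefined for this collector
            long millis = gc.getCollectionTime(); // -1 if undefined for this collector
            System.out.printf("%-30s count=%d totalMs=%d avgMs=%.1f%n",
                    gc.getName(), count, millis, averageMillisPerCollection(count, millis));
        }
    }
}
```

Sampling these values periodically and comparing consecutive readings gives the collection frequency over each interval.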

Due to the wide variety of garbage collectors, garbage collection tuning policies vary depending on the application. Therefore, the following only describes several common garbage collection tuning policies.

1. Select an appropriate garbage collector. You'll need to select an appropriate garbage collector based on the latency and throughput requirements of the application and the characteristics of different garbage collectors. We recommend that you use G1 to replace the CMS garbage collector. The performance of G1 is being gradually improved. On hosts with 8 GB memory or less, the performance of G1 is about the same or even a bit higher than that of CMS. Parameter adjustment is convenient for G1, while the CMS garbage collector parameters are too complex and may cause space fragmentation and high CPU consumption. This can cause the CMS garbage collector to cease operation. The ZGC garbage collector introduced in Java 11 can be used for concurrent marking and collection in all stages. It looks like a promising tool.

2. Set a proper heap memory size. The heap size cannot be too large. To avoid system memory exhaustion, we recommend that the heap size not exceed 75% of the system memory. The maximum heap size must be consistent with the initialized heap size to avoid heap shock. The size setting of the young generation is critical. When we adjust the garbage collection frequency and time, we are usually adjusting the size of the young generation, including the proportions of the young and old generations and the proportions of the Eden and Survivor areas in the young generation. When setting these ratios, we must also consider the ages of objects for promotion in each generation. Therefore, a lot of things need to be considered throughout the process. If the G1 garbage collector is used, it is much easier to decide on the young generation size because the adaptive policy determines the collection set (CSet) each time. The adjustment of the young generation is the core of garbage collection tuning and is highly dependent on experience. However, in general, if the young garbage collection frequency is high, this means that the young generation is too small or the configurations of the Eden and Survivor areas are unreasonable. Alternatively, if the young garbage collection is time-consuming, this means that the young generation is too large.

3. Reduce the frequency of Full GC. If frequent Full GC or old-generation GC occurs, a memory leak is likely to have occurred, causing objects to be held for a long time. This problem can be located quickly by dumping a memory snapshot for analysis. In addition, if the ratio of the young generation to the old generation is inappropriate, objects are frequently assigned to the old generation and Full GC may also occur. If this is the case, it is necessary to analyze both the business code and memory snapshot.

Also, configuring garbage collection parameters can help us obtain a lot of key information required for garbage collection tuning. This includes configuring -XX:+PrintGCApplicationStoppedTime, -XX:+PrintSafepointStatistics, and -XX:+PrintTenuringDistribution. These parameters allow us to obtain the distribution of GC pauses, the time consumption of safepoints, and the distribution of ages of objects for promotion, respectively. In addition, -XX:+PrintFlagsFinal can be used to help us understand the final garbage collection parameters.

Below are some steps you can take in Disk I/O Troubleshooting:

a. Check disk-related metrics, such as %wa (iowait) and %util. You can determine whether disk I/O is abnormal based on the output. For example, a high %util value indicates I/O-intensive behaviors.

b. Use pidstat to locate a specific process and check the volume and rate of data reads and writes.

c. Use lsof + process number to view the list of files opened by the abnormal process, including directories, block devices, dynamic libraries, and network sockets. By also considering the business code, we can generally locate the source of I/O pressure. For more specific analysis, we can also use tools such as perf to trace and locate the source of I/O.

An increase in %wa (iowait) does not necessarily indicate a bottleneck in the disk I/O. This value indicates the proportion of CPU time spent waiting for I/O operations. A high value is normal if the main activity of the application process is I/O during a given period.

a. Too many objects are transmitted at a time, which may cause slow request response times and frequent garbage collection.

b. Improper network I/O model selection leads to low overall QPS and long response times for the application.

c. The thread pool setting for RPC calls is inappropriate. We can use jstack to compile statistics on thread distribution. If there are many threads in the TIMED_WAITING or WAITING state, we need to look into the situation carefully. For example, if the database connection pool is insufficient, this will affect the thread stack because many threads will compete for a connection pool lock.

d. The set RPC call timeout is inappropriate, resulting in many request failures.

For Java applications, the thread stack snapshot is very useful. In addition to its use to troubleshoot thread pool configuration problems, snapshots allow us to begin troubleshooting from the thread stack in other scenarios, such as high CPU usage and slow application response.
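A thread-state distribution similar to what jstack provides can also be collected from inside the process. The following minimal sketch uses only `Thread.getAllStackTraces()`; the class name `ThreadStateCountSketch` is hypothetical. It counts live threads per state and does not show which lock or pool each thread is waiting on, so a full thread dump is still needed for deeper analysis.

```java
import java.util.EnumMap;
import java.util.Map;

final class ThreadStateCountSketch {
    /** Counts the live threads of this JVM by Thread.State. */
    static Map<Thread.State, Integer> countByState() {
        Map<Thread.State, Integer> counts = new EnumMap<>(Thread.State.class);
        for (Thread t : Thread.getAllStackTraces().keySet()) {
            counts.merge(t.getState(), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        int total = 0;
        for (Map.Entry<Thread.State, Integer> e : countByState().entrySet()) {
            System.out.println(e.getKey() + ": " + e.getValue());
            total += e.getValue();
        }
        System.out.println("TOTAL: " + total);
    }
}
```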

This section provides several commands we can use to quickly locate performance problems.

1. View the system's current network connections.

netstat -n | awk '/^tcp/ {++S[$NF]} END {for(a in S) print a, S[a]}'

2. View the top 50 objects in a heap to locate memory leaks.

jmap -histo:live $pid | sort -n -r -k2 | head -n 50

3. List the top 10 processes by CPU or memory usage.

#Memory

ps axo %mem,pid,euser,cmd | sort -nr | head -10

#CPU

ps -aeo pcpu,user,pid,cmd | sort -nr | head -10

4. Display the overall CPU utilization and idle rate of the system.

grep "cpu " /proc/stat | awk -F ' ' '{total = $2 + $3 + $4 + $5} END {print "idle \t used\n" $5*100/total "% " $2*100/total "%"}'

5. Count the number of threads by thread state (in the enhanced version).

jstack $pid | grep java.lang.Thread.State: | sort | uniq -c | awk '{sum+=$1; split($0,a,":");gsub(/^[ \t]+|[ \t]+$/, "", a[2]);printf "%s: %s\n", a[2], $1}; END {printf "TOTAL: %s",sum}'

6. View the 10 thread stacks that consume the most CPU resources.

We recommend that you use the show-busy-java-threads script, which allows you to quickly troubleshoot Java CPU performance problems when the top us value is too high. This script automatically identifies the threads that consume a large amount of CPU resources in the running Java process and prints out their thread stacks. This allows us to identify the method calls that cause performance problems. This script is already used in Alibaba's online O&M environment. You can find the script here.

7. Generate a flame graph, which requires you to install perf, perf-map-agent, and FlameGraph:

# 1. Collect stack and symbol table information during application runtime (sampling time: 30 seconds, 99 events per second).

sudo perf record -F 99 -p $pid -g -- sleep 30; ./jmaps

# 2. Use perf script to generate the analysis results. The generated flamegraph.svg file is the flame graph.

sudo perf script | ./pkgsplit-perf.pl | grep java | ./flamegraph.pl > flamegraph.svg

8. List the top 10 processes by the usage of Swap partitions

for file in /proc/*/status ; do awk '/VmSwap|Name|^Pid/{printf $2 " " $3}END{ print ""}' $file; done | sort -k 3 -n -r | head -10

9. Compile JVM memory usage and GC status statistics

#Show the cause of the last or ongoing GC

jstat -gccause $pid

#Show the capacity and usage of each generation

jstat -gccapacity $pid

#Show the capacity and usage of the young generation

jstat -gcnewcapacity $pid

#Show the capacity of the old generation

jstat -gcoldcapacity $pid

#Show GC information (continuous output at 1-second intervals)

jstat -gcutil $pid 1000

10. Other common commands

# Quickly kill all Java processes

ps aux | grep java | awk '{ print $2 }' | xargs kill -9

# Find the 10 files that occupy the most disk space in the / directory

find / -type f -print0 | xargs -0 du -h | sort -rh | head -n 10

Performance optimization is a big field, which would take dozens of articles to explain. In addition to the content discussed here, application performance optimization also involves frontend optimization, architecture optimization, distributed systems and cache usage, data storage optimization, and code optimization, such as design pattern optimization. Due to space limitations, we could not discuss all these topics here. However, I hope this article will inspire you to learn more. This article is based on my personal experience and knowledge, and surely it is not perfect. Any corrections or additions are welcome.

Performance optimization is a comprehensive task. We need to constantly practice it, make use of relevant tools and experience, and continuously improve our approach to form an optimization methodology that works for us.

In addition, although performance optimization is very important, do not put too much effort into optimization too early. Of course, sound architecture design and code are necessary. But premature optimization is the root of all evil. On the one hand, premature optimization may not be suitable for rapidly changing business needs and may even obstruct the implementation of new requirements and new functions. On the other hand, premature optimization increases the complexity of applications, reducing application maintainability. The appropriate time and extent of optimization are questions that must be carefully considered by the many parties involved.
