By Zhiqiang Long

You can review the article below beforehand:

Data Warehouse and Lake House Continuous Evolution of the Cloud Native Big Data Platform

This article covers the new capabilities of the integrated real-time and offline data warehouse.

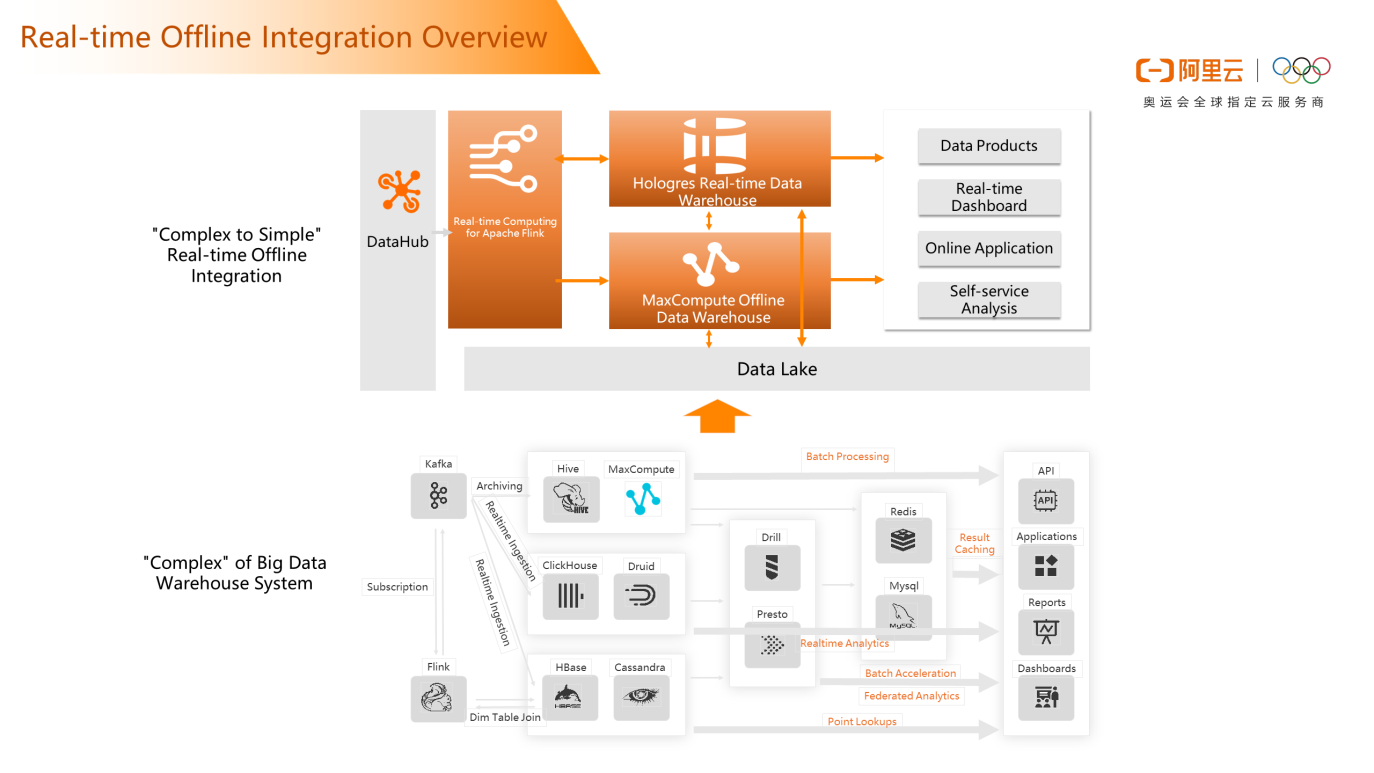

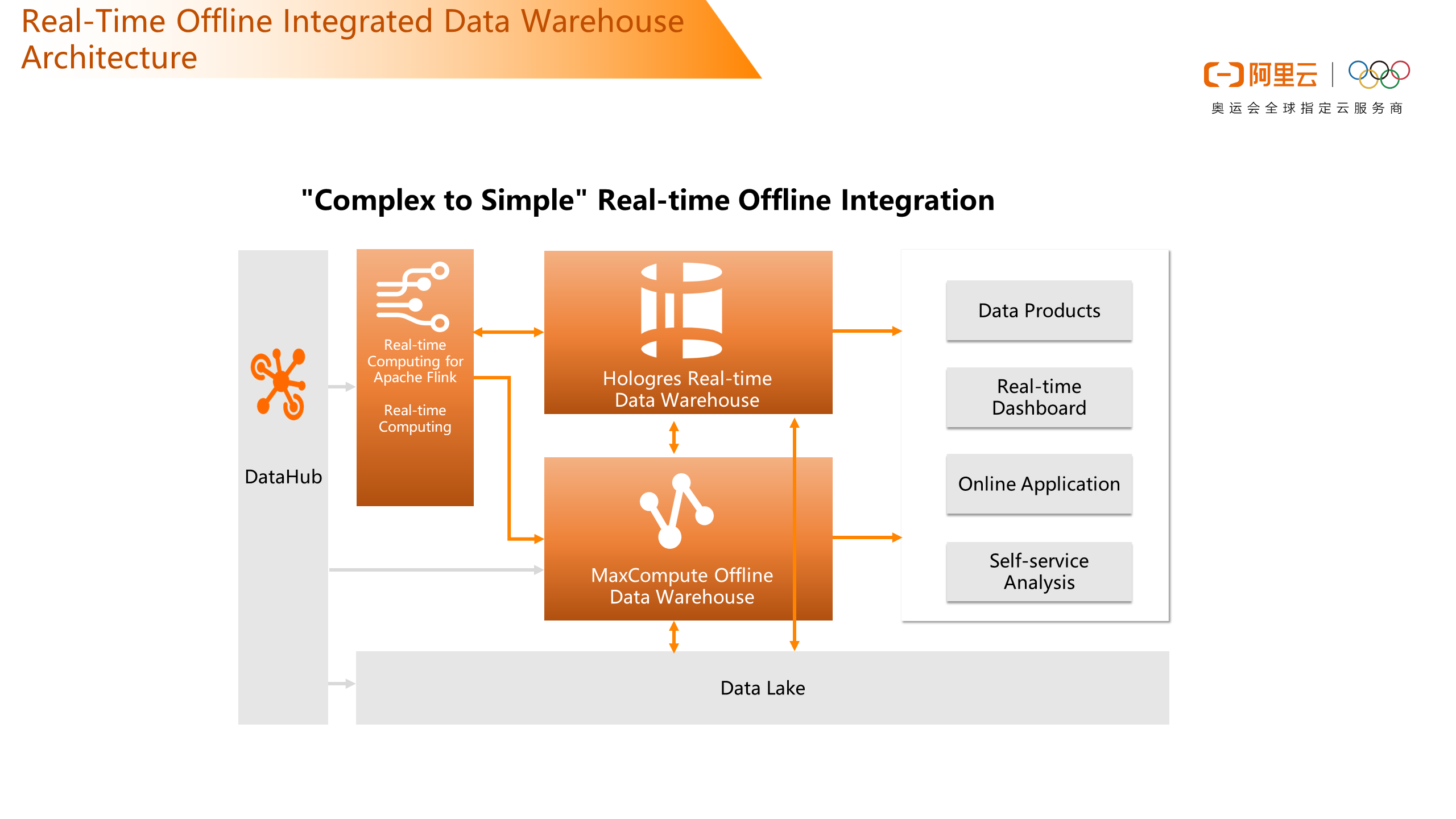

The big data warehouse system has evolved from a complex architecture into an integrated real-time and offline data warehouse. At its core, a stream computing engine connects the offline data warehouse (MaxCompute) with the real-time data warehouse (Hologres), and the two interoperate to process data layer by layer. The architecture applies to scenarios such as data governance, offline analysis, real-time analysis, data marts, multi-modal analysis, and online machine learning models. It helps customers build a comprehensive big data analysis platform and unlock the value of enterprise data.

The solution applies to business scenarios that combine real-time and offline data analysis, real-time data computing and analysis, massive data analysis with point queries, and analysis of diverse traffic and business data. If your timeliness requirements are low, you can use the MaxCompute offline data warehouse alone, without real-time analysis. In scenarios such as online alerting and prediction, the pipeline does not need to combine offline and real-time data. A typical solution there is a purely real-time data warehouse, for example Flink with Hologres, which delivers high real-time performance.

Real-time offline integration mainly targets mixed scenarios where offline workloads and real-time online services coexist, and it supports analysis services over diverse traffic and business data.

On the write side, offline data, real-time data, and streaming data are all supported. MaxCompute supports high-QPS writes, and data is visible and queryable as soon as it is written. In terms of write channels, the integrated architecture supports batch tunnels, streaming tunnels, and real-time tunnels, as well as intermediate plug-ins (such as Kafka and Flink) in front of the write path. Writing data from a data source or message middleware into MaxCompute requires no code development: you can directly use the plug-ins that MaxCompute supports. Hologres supports high-performance real-time writes and updates, and written data can be queried immediately.

Combined, MaxCompute and Hologres support batch writes, streaming writes, real-time writes, and querying data as soon as it is written.

Data computing is supported by multiple engines. MaxCompute supports EB-level data computing, and its computing engines include Spark, MapReduce (MR), and SQL. After data is written, you can process it with Spark stream processing or with MaxCompute SQL batch processing. With multiple engines, the latency of real-time computing can reach seconds or even milliseconds, and the throughput of a single job can reach millions.

In terms of data sharing and interoperability, MaxCompute and Hologres can share data and read each other's data: Hologres can read data from MaxCompute and vice versa. Currently this is done through external tables, and a direct reading feature will be launched soon. The advantage is that the same data can be processed by both a real-time engine and an offline engine. Without moving the data, it can be processed in the offline data warehouse and summarized in the real-time data warehouse. Likewise, real-time data can be read from the real-time data warehouse and combined with offline data for unified computation and analysis.

For integrated analysis services, MaxCompute supports interactive queries with second-level latency because it provides query acceleration. If you need lower latency, in the sub-second or millisecond range, MaxCompute can connect to Hologres at the analysis layer to support sub-second interactive analytics.

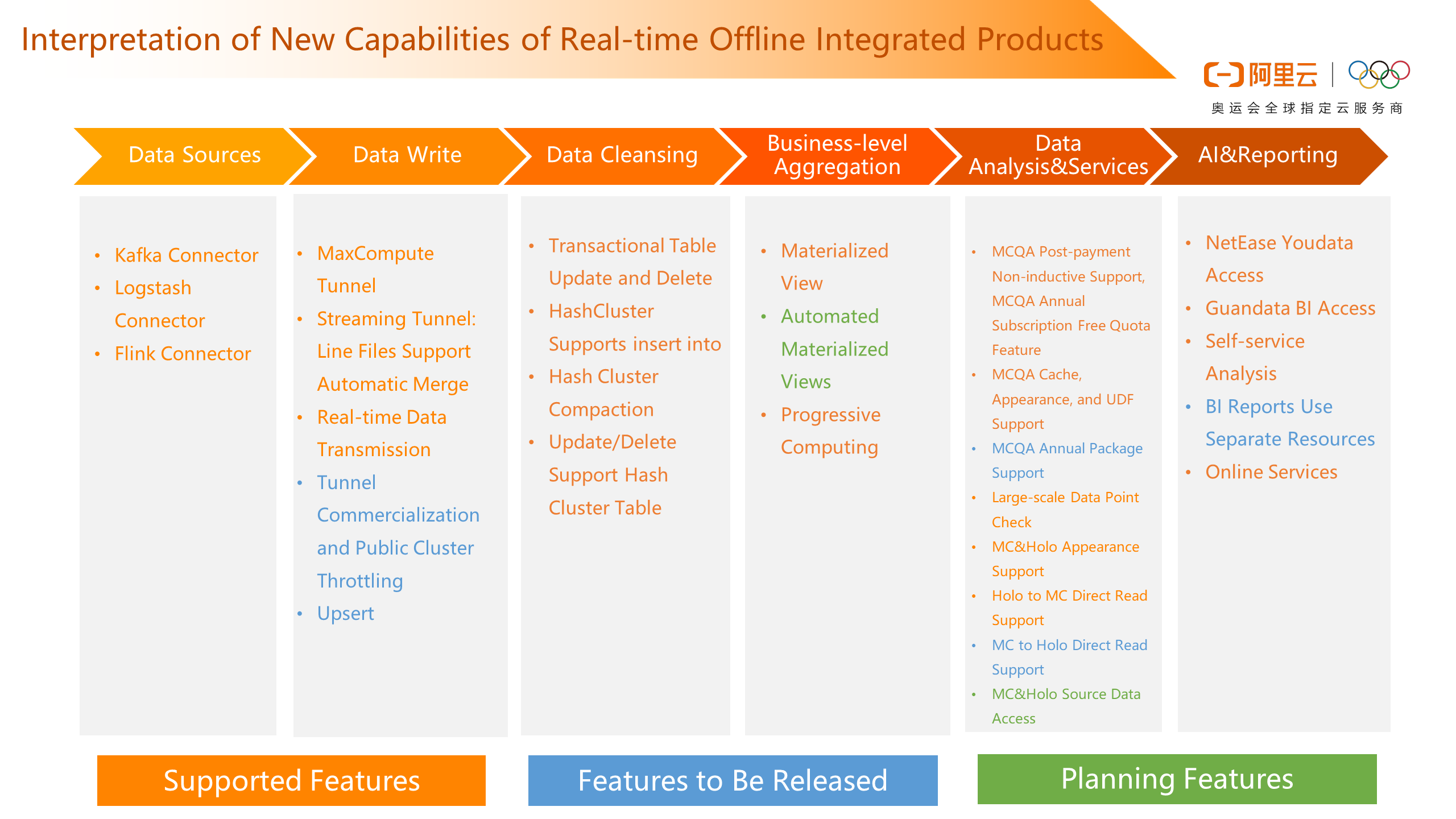

The advantages of this architecture can be mapped onto the entire data warehouse development pipeline, with each stage corresponding to specific product capabilities. The development process of a data warehouse is: data source → data writing → data cleansing → business-level aggregation → data analysis and service → AI & reporting. At the data analysis and service stage there are third-party or packaged product applications, online application scenarios, and online analysis services for some AI scenarios. These can connect to a data analysis service interface, to data in the MaxCompute data warehouse, or to data in OSS, depending on your business scenario.

For data sources, we support third-party plug-ins such as Kafka Connector, Logstash Connector, and Flink Connector. The data writing layer supports the batch tunnel, the streaming tunnel (files are merged automatically), and the real-time tunnel. Exclusive resources for data writing, offered as a commercial option, will be provided soon. Currently, data writing runs on public clusters that are provided free of charge, so delays may occur under large business volumes. An upsert capability will also be released soon to update data from business databases (such as RDS) into MaxCompute in real time.

For data cleansing, update and delete operations are supported once data is written into MaxCompute. At the business aggregation layer, materialized views and progressive computing are available, and automated materialized views are being planned. At the data analysis service layer, MaxCompute provides query acceleration (MCQA). For pay-as-you-go users, query acceleration is applied transparently. For subscription users, queries on prepaid MCQA resources are in invitational preview. Previously, a free quota for subscription query acceleration was released: each project can run fewer than 500 queries per day, each a single SQL query of up to 10 GB. For subsequent connections to data services and third-party applications, subscription users get a resource group carved out of their purchased resources and used as a dedicated query acceleration resource. If you need more interactive data analysis services, you can connect to Hologres.

On the Hologres side, data interoperability is implemented through external tables from MaxCompute to Hologres and direct reads from Hologres to MaxCompute. Planned capabilities include metadata interconnection and direct reads from MaxCompute to Hologres. For upper-layer BI report analysis, several ecosystem integrations have been completed, such as Netease's online model of data, remote BI, autonomous analysis, and online service with AI. Online training connects directly to the MaxCompute data warehouse.

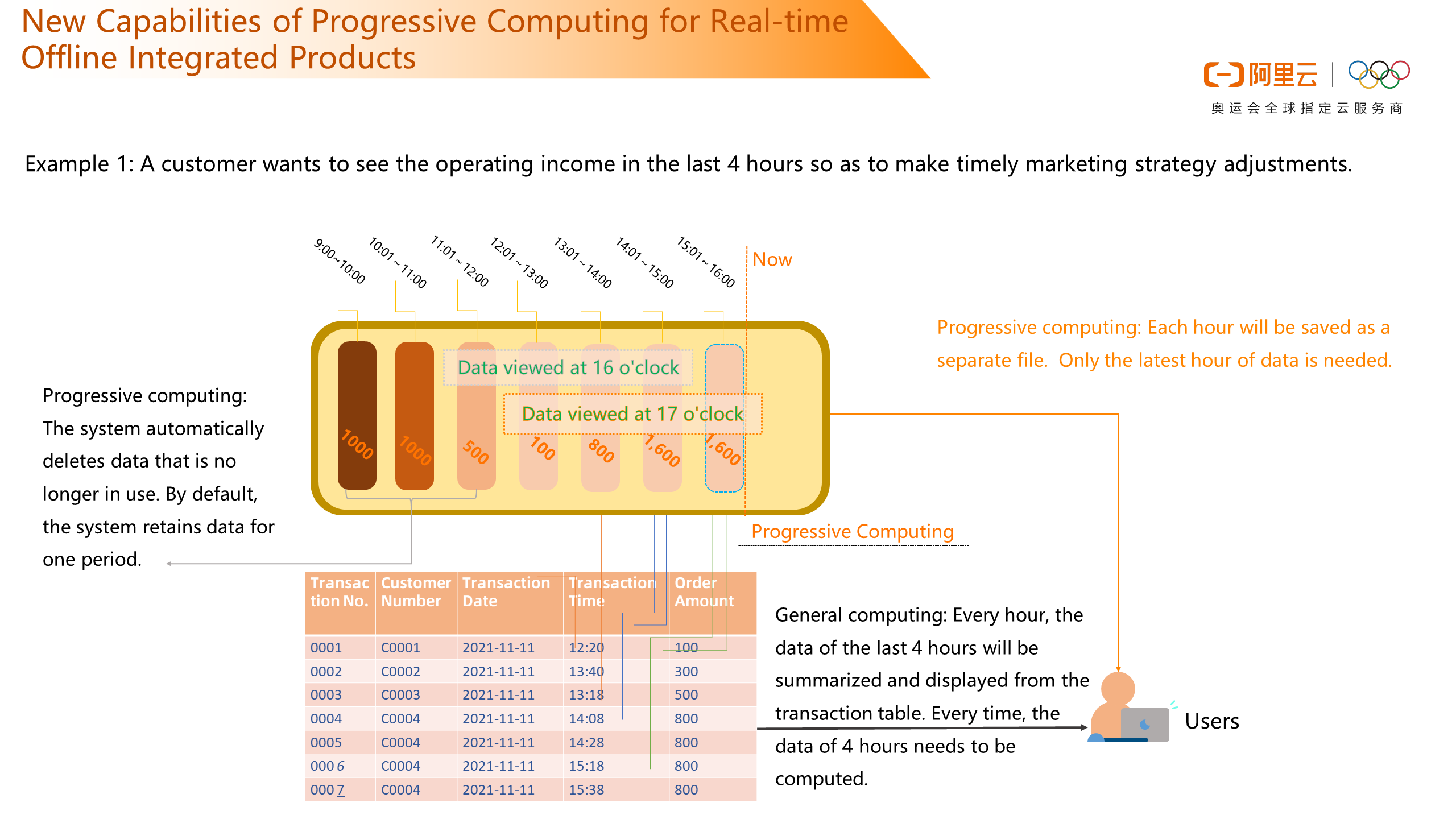

Conceptually, progressive computing completes a computation by processing incremental data and maintaining intermediate state. It sits between traditional stream computing and batch processing. In the figure, a transaction table holds transaction data for each time point on a given date, such as 12, 1, and 2 o'clock. Through progressive computing, the transaction order amounts and transaction orders summarized per hour can be collected in a separate file produced every hour. In other words, progressive computing automatically performs a preliminary summary of the transaction detail data. When you query the data, there is no need to aggregate one or several hours of details: you can directly read the statistics from the preliminary summary. The example also shows that transaction order data can be written to MaxCompute in real time (or near real time) or to Hologres in real time, depending on your business requirements. If you write order data to Hologres, you can perform real-time computing in the streaming pipeline to produce hourly window statistics as a preliminary summary. If you write data to MaxCompute, you can use progressive computing to produce the hourly summary statistics. The overall flow is real-time writing, near-real-time preliminary summary, and upper-layer aggregation, which together provide query capabilities for data analysis services.
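The idea of folding incremental data into maintained intermediate state can be sketched in a few lines of Python. This is only an illustration of the concept, not MaxCompute's implementation: the class `HourlyProgressiveSummary`, its methods, and the sample orders are all hypothetical.

```python
from collections import defaultdict
from datetime import datetime


class HourlyProgressiveSummary:
    """Hypothetical sketch: keeps per-hour order counts and amounts as state."""

    def __init__(self):
        # hour bucket -> [order_count, total_amount]
        self._state = defaultdict(lambda: [0, 0.0])

    def ingest(self, orders):
        """Fold newly arrived (timestamp, amount) rows into the hourly state."""
        for ts, amount in orders:
            bucket = ts.replace(minute=0, second=0, microsecond=0)
            entry = self._state[bucket]
            entry[0] += 1
            entry[1] += amount

    def summary(self, hour):
        """Return (order_count, total_amount) for one hour from the state only."""
        count, total = self._state.get(hour, (0, 0.0))
        return count, total


progressive = HourlyProgressiveSummary()
# First increment: two orders in the 12 o'clock hour.
progressive.ingest([
    (datetime(2022, 1, 1, 12, 5), 20.0),
    (datetime(2022, 1, 1, 12, 40), 30.0),
])
# Second increment: only the new rows are processed, earlier rows are not rescanned.
progressive.ingest([
    (datetime(2022, 1, 1, 12, 55), 10.0),
    (datetime(2022, 1, 1, 13, 10), 5.0),
])
noon = progressive.summary(datetime(2022, 1, 1, 12))
```

Each call to `ingest` touches only the new rows, and `summary` reads the pre-aggregated hourly state instead of the detail data.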

The purpose and advantage of progressive computing is that it stores data periodically by window. When you query the most recent data, it reduces computation, saves computing resources, and improves efficiency. You do not need to scan the detail table on every access. You can query the summary data directly, which improves both speed and experience. For example, transaction order data is streamed into MaxCompute through DataHub. In this pipeline, the preliminary summary by progressive computing and the subsequent data consumption in MaxCompute are near real-time. In another pipeline, Flink consumes the data from DataHub, computes statistics along other dimensions, and writes the results to Hologres, which serves them to consumers.

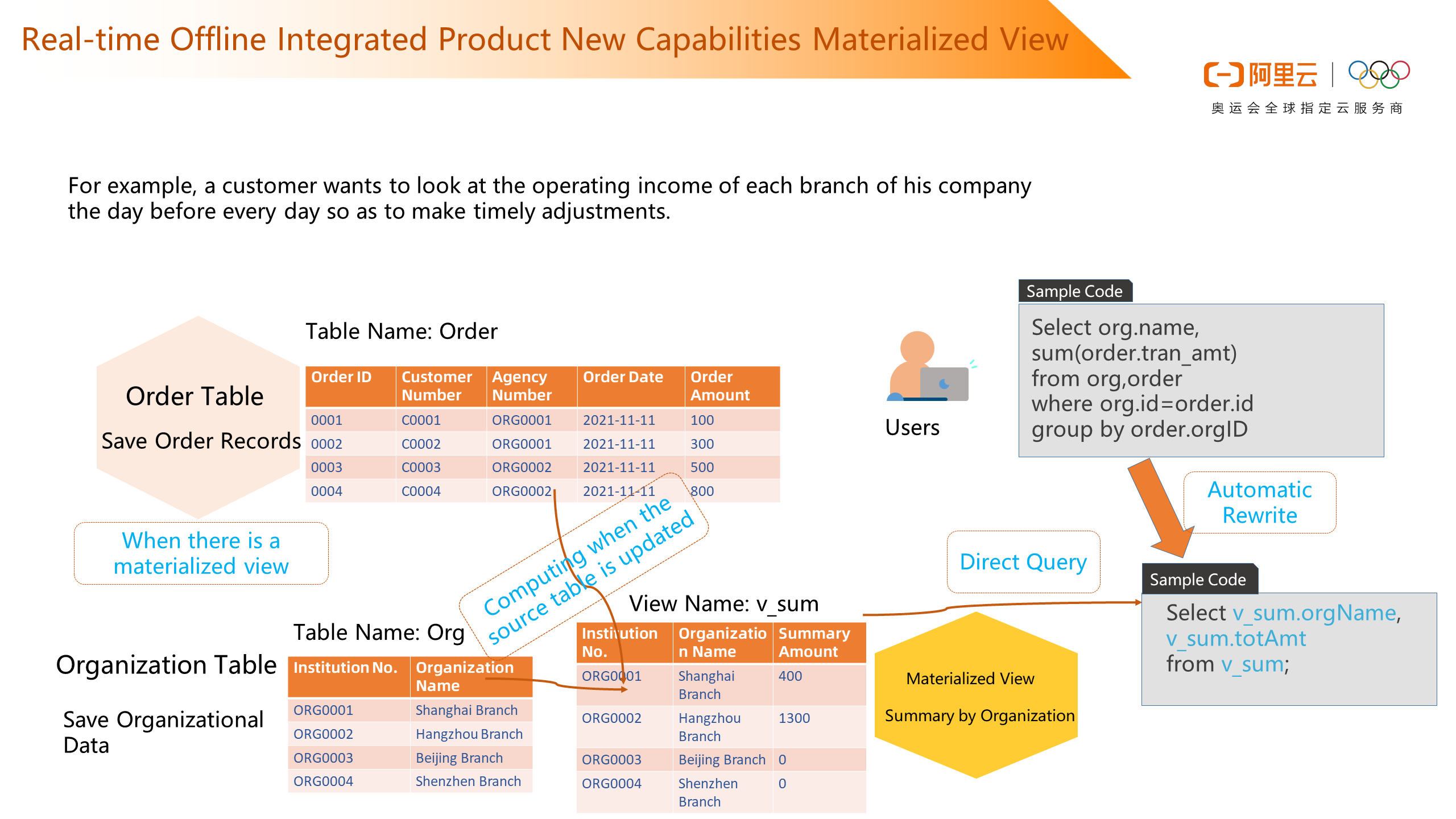

A materialized view is a database object that stores a query result. It can be a local copy of remote data, or a summary table produced by aggregating data tables. As shown in the figure, an order table stores detailed records and an org table stores organization data. To query a summary, you would first need to join the two tables. With a materialized view, the view replaces the user's summary query script, and the query can read from the view directly. Users can set the refresh frequency of the materialized view, with a minimum interval of five minutes, so the view can be updated as required.

The purpose and advantage of materialized views is that data is pre-computed as it is written, which improves query efficiency. This is transparent to customers, because queries are rewritten automatically. For example, order data can be written in real time, and you can refresh the materialized view every five minutes so that upper-layer applications can query near-real-time summary statistics. If the pipeline runs in Hologres, you can use the real-time data warehouse pipeline instead. MaxCompute materialized views help meet customers' requirements for data timeliness, but they do not provide real-time data updates.
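As a rough illustration of pre-computing a join and aggregation and refreshing it on an interval, consider the Python sketch below. It is not MaxCompute syntax or its refresh mechanism: the class `OrgSalesMaterializedView`, the sample order and org rows, and the explicit `now_s` clock argument are assumptions made for the example, and automatic query rewriting is not modeled.

```python
from collections import defaultdict


class OrgSalesMaterializedView:
    """Hypothetical sketch: stores the result of orders JOIN orgs, summed per org."""

    def __init__(self, orders, orgs, refresh_interval_s=300):
        self._orders = orders              # list of (order_id, org_id, amount)
        self._orgs = orgs                  # dict: org_id -> org_name
        self._interval = refresh_interval_s
        self._last_refresh = None
        self._result = {}

    def _compute(self):
        # The expensive part: join each order to its org and sum the amounts.
        totals = defaultdict(float)
        for _order_id, org_id, amount in self._orders:
            totals[self._orgs[org_id]] += amount
        return dict(totals)

    def refresh_if_due(self, now_s):
        """Recompute the stored result only when the interval has elapsed."""
        if self._last_refresh is None or now_s - self._last_refresh >= self._interval:
            self._result = self._compute()
            self._last_refresh = now_s
            return True
        return False

    def query(self):
        """Read the stored result without touching the base tables."""
        return dict(self._result)


orders = [(1, "a", 10.0), (2, "a", 5.0), (3, "b", 7.0)]
orgs = {"a": "East", "b": "West"}
view = OrgSalesMaterializedView(orders, orgs, refresh_interval_s=300)

first_refresh = view.refresh_if_due(now_s=0)
orders.append((4, "b", 3.0))                 # new order arrives after the refresh
early_refresh = view.refresh_if_due(now_s=60)
stale_result = view.query()                  # still reflects the refresh at t=0
late_refresh = view.refresh_if_due(now_s=300)
fresh_result = view.query()
```

Between refreshes the view can lag the base tables, which is why the result is near real-time rather than real-time.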

After streaming or batch data is written, you can summarize it in MaxCompute through materialized views and progressive computing and then use MCQA query acceleration to provide external data analysis services with second-level latency. If you require lower latency for interactive queries, you can aggregate the data into Hologres and use Hologres to provide data analysis services, with interactive response times that can reach milliseconds.

Architecturally, the design is simplified from left to right. You can write data to MaxCompute through DataHub. You can also use Realtime Compute for Apache Flink to consume DataHub and write real-time data to Hologres to provide analytics services. The architecture therefore has two pipelines. If you need fast business response times, use the real-time data warehouse pipeline: DataHub data is written through Flink into the real-time data warehouse Hologres, which serves data products or real-time dashboards. If you do not need fast response times, you can write data to MaxCompute through DataHub.

When Realtime Compute for Apache Flink consumes real-time online data, some computed metrics need to be written to MaxCompute in the offline data warehouse and aggregated with data already in MaxCompute. You can then use Hologres direct read to read the aggregated data from MaxCompute. Hologres provides the online data analysis services, and the underlying data can reside in Hologres or in MaxCompute. The architecture mainly reflects real-time offline integration, but the lakehouse is also part of it: both the offline and the real-time data warehouses can interoperate with data in the data lake.

Based on the current architecture, three dimensions of service capabilities are provided.

How can we use real-time offline integration? Look at the following example.

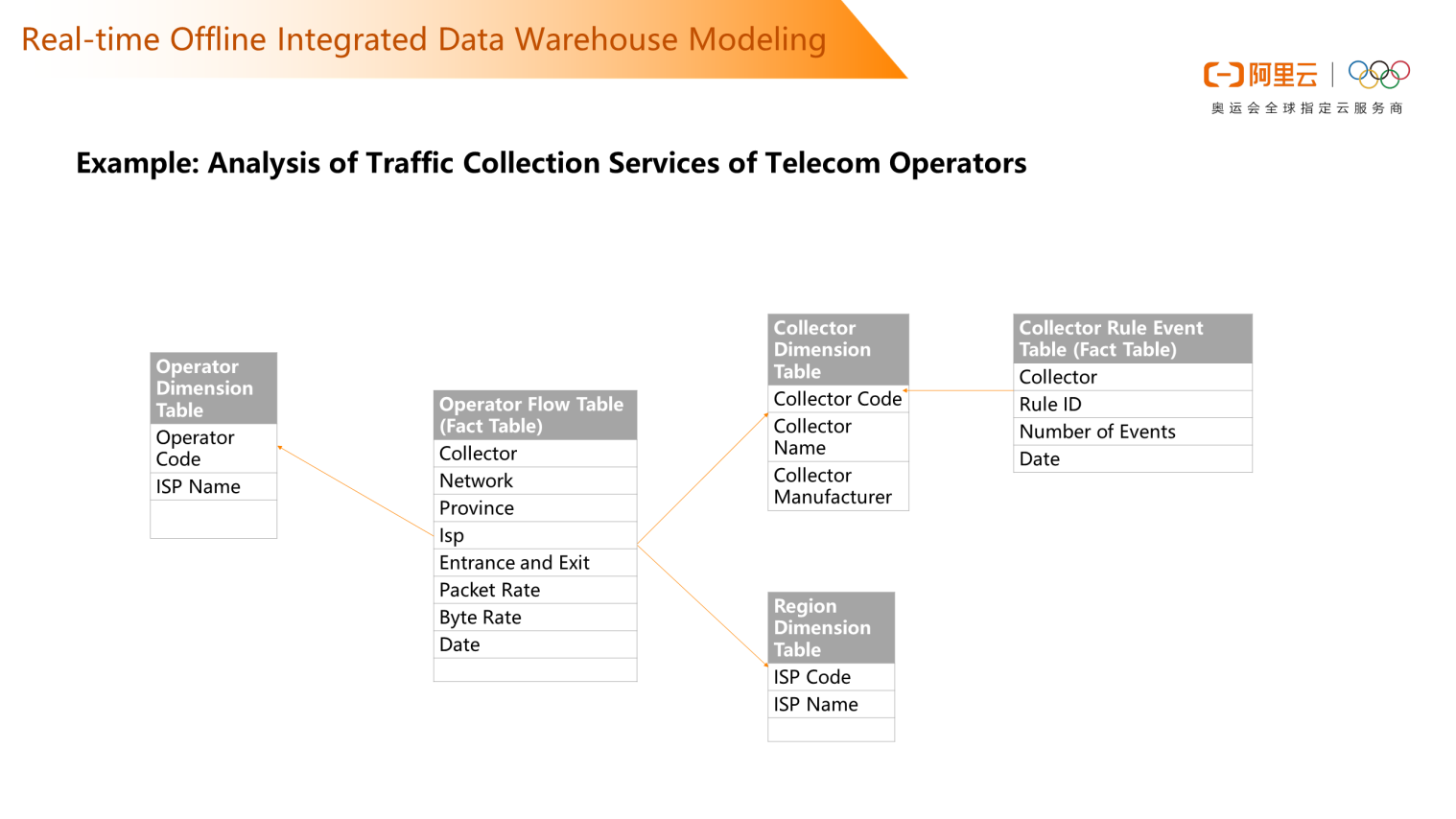

Traffic collection business analysis for telecom operators: analysis of the traffic collection business shows that the snowflake schema, a common data warehouse modeling method, is the better fit. Data warehouse modeling is therefore completed based on the business characteristics and snowflake schema modeling principles.

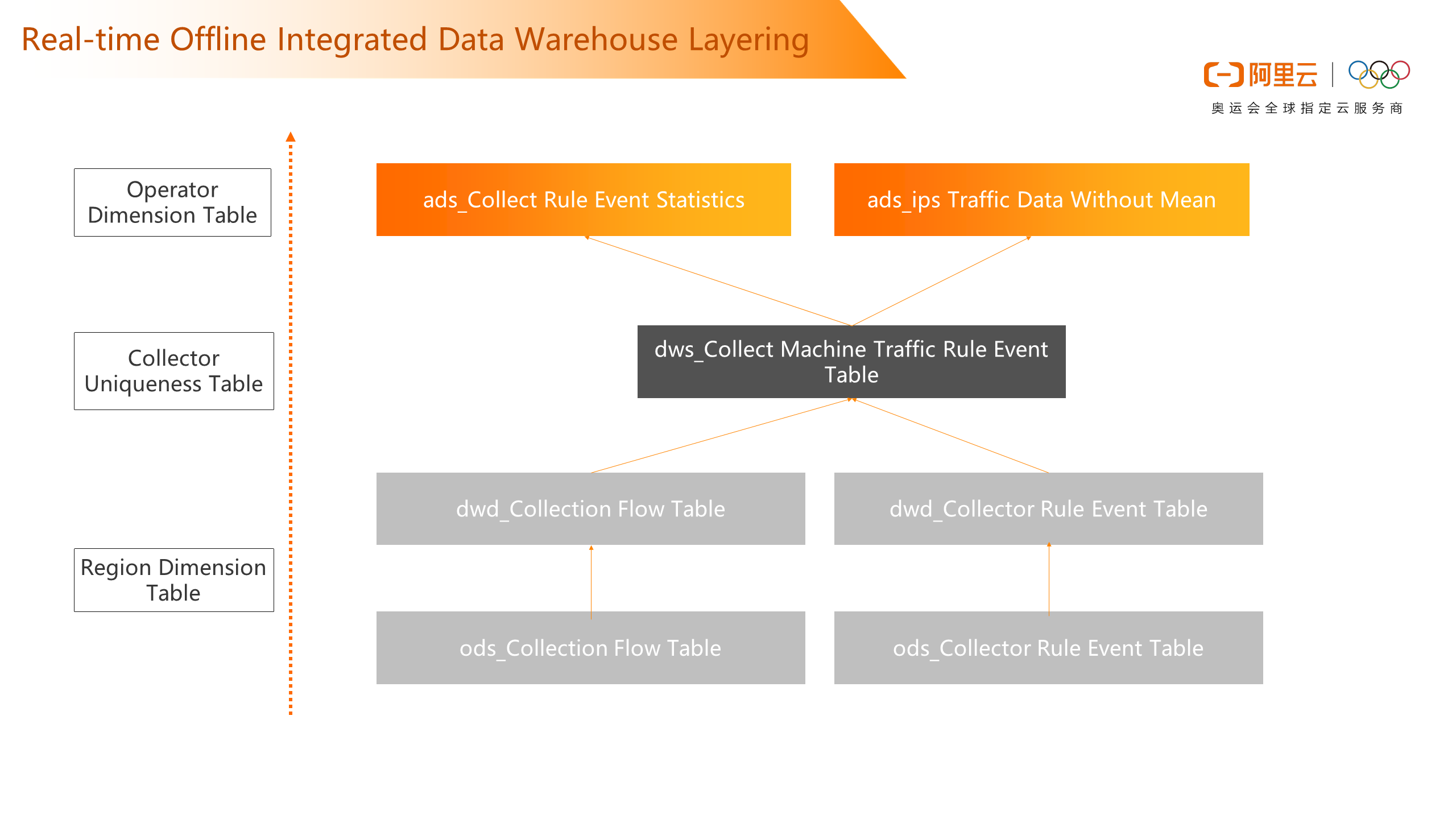

In this example, the operator flow table is a real-time data table. A snowflake schema is built on the real-time traffic data by associating the collector dimension table and the region dimension table with the flow table. Once the model is complete, the data warehouse is layered. The ODS layer synchronizes the collected flow table data and the collector rule table to MaxCompute or Hologres for rule processing. The DWD (detail data) layer mainly holds the cleansed data as the collection flow detail table and the collection rule event detail table. If you use the real-time offline architecture, data at the DWD layer can be aggregated into Hologres. If the data is aggregated into MaxCompute, you can use a partitioned table to compute the data that matches the time or event rules within each partition period and perform a preliminary summary per partition period. The statistics on collection rule events (including the average traffic) are then computed from the summary table.
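A small Python sketch can make the join and the per-partition summary concrete. Everything here is hypothetical: the table contents, the column names, and the assumption that the region dimension hangs off the collector dimension are chosen only for illustration and are not taken from the figures.

```python
from collections import defaultdict

# Dimension tables (hypothetical contents). The collector dimension references
# the region dimension, which is what gives the model its snowflake shape.
collectors = {
    "c1": {"region_id": "r1"},
    "c2": {"region_id": "r1"},
    "c3": {"region_id": "r2"},
}
regions = {"r1": "North", "r2": "South"}

# Fact rows from the flow table: (partition, collector_id, traffic).
flows = [
    ("2022010112", "c1", 100),
    ("2022010112", "c2", 300),
    ("2022010112", "c3", 50),
    ("2022010113", "c1", 200),
]


def average_traffic_by_region(flow_rows, collector_dim, region_dim):
    """Join flows to collector and region, then average traffic per partition and region."""
    sums = defaultdict(lambda: [0, 0])  # (partition, region) -> [total, row_count]
    for partition, collector_id, traffic in flow_rows:
        region = region_dim[collector_dim[collector_id]["region_id"]]
        acc = sums[(partition, region)]
        acc[0] += traffic
        acc[1] += 1
    return {key: total / count for key, (total, count) in sums.items()}


averages = average_traffic_by_region(flows, collectors, regions)
```

Because the aggregation key includes the partition, each partition period produces its own preliminary summary, and later statistics can be built from those rows instead of the detail data.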

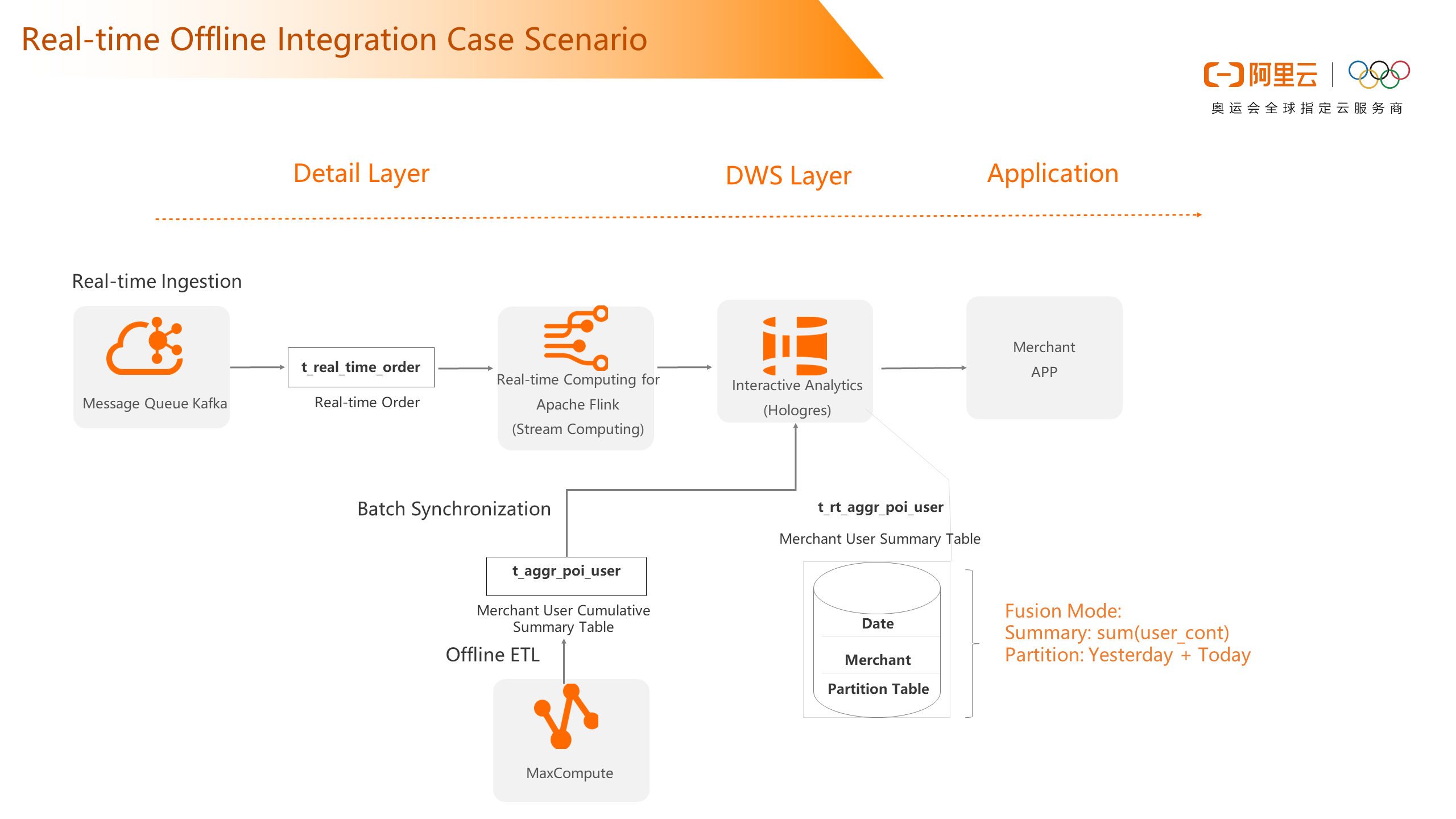

For example, merchants need to give discounts to users according to the number of orders each user has placed in the past. Merchants need to see how many orders a user has placed historically, which combines historical T+1 data with today's real-time data. This scenario is a typical fit for the real-time offline integration architecture. We can design a partitioned table in Hologres with a historical partition and a partition for today. The historical partition can be produced offline, while today's metrics are computed in real time and written into today's partition. A simple aggregation is performed at query time.
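The query-time merge of the two partitions can be sketched as follows. This is a conceptual Python illustration, not Hologres code: the two `Counter` objects stand in for the historical and today partitions, and the user IDs and counts are made up.

```python
from collections import Counter

# Historical partition: order counts per user up to yesterday (T+1, built offline).
history_partition = Counter({"alice": 12, "bob": 3})

# Today's partition: order counts per user, updated as orders arrive in real time.
today_partition = Counter()


def record_order(user_id):
    """Simulate the real-time path writing one order into today's partition."""
    today_partition[user_id] += 1


def total_orders(user_id):
    """Query-time merge: historical count plus today's count (0 if absent)."""
    return history_partition[user_id] + today_partition[user_id]


record_order("alice")
record_order("carol")
alice_total = total_orders("alice")
```

The offline job only ever rewrites the historical partition and the real-time job only touches today's partition, so the two writers do not interfere and the query stays a simple sum.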

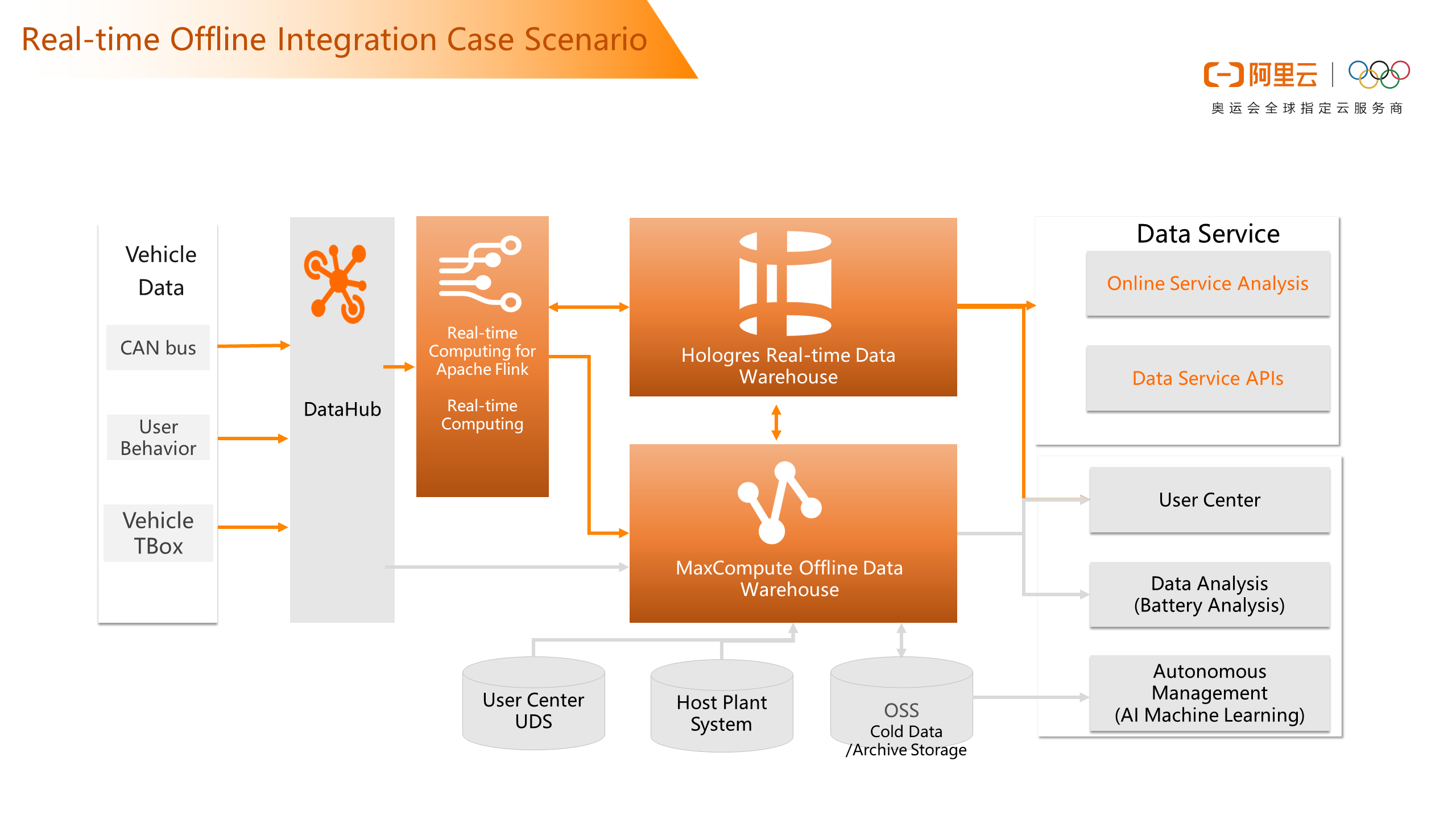

The data sources, from left to right, are vehicle data, CAN bus, user behavior, and vehicle TBox. The data is analyzed through DataHub, Realtime Compute for Apache Flink, the Hologres real-time data warehouse, and the MaxCompute offline data warehouse. Hologres can serve as a one-stop provider of real-time data to data servers for online service analysis and API operations. In addition, Hologres can read batch processing results generated in MaxCompute, as well as data aggregated automatically by materialized views or progressive computing. Meanwhile, the MaxCompute offline data warehouse also processes user center UDS data and vehicle manufacturer (OEM) system data, which include business data and T+1 data. After processing, cold data can be archived to OSS and used for machine learning in autonomous driving. This set of application scenarios is a standard use case for real-time offline integration.
