By Wang Feng (Mowen)

This article is written by Wang Feng, the initiator of the Apache Flink Chinese community and director of the real-time computing and open platform department of Alibaba Cloud Computing Platform. It introduces the current development and future plans of Flink as a unified stream-batch processing engine.

The outline of this article is as follows:

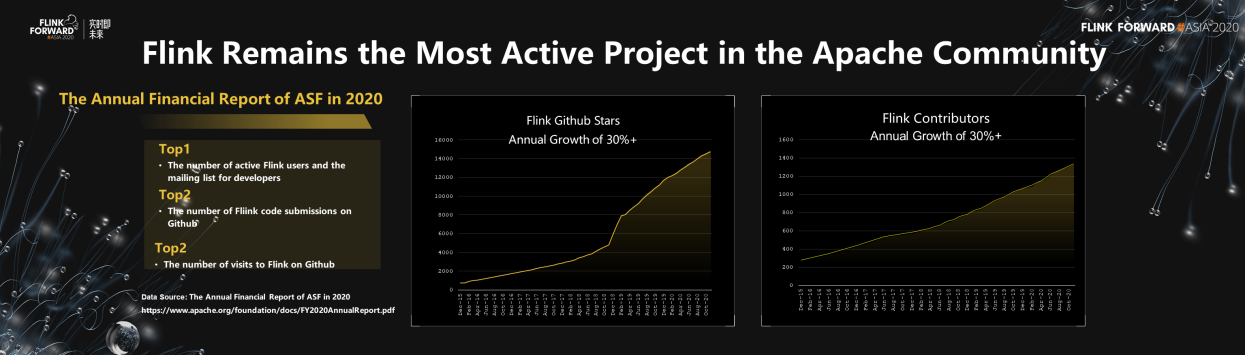

In 2020, the Flink community ecosystem developed rapidly and in a very healthy way, with great achievements. The 2020 annual report of the Apache Software Foundation (ASF) lists several major Flink achievements.

Based on the preceding data, it's believed that Flink stands out among the many open-source Apache projects as one of the most active. The number of stars on GitHub and the number of Flink contributors also keep growing. In recent years, Alibaba has been accelerating its growth, with an average of more than 30% data growth annually. The prosperity and rapid development of the entire Flink ecosystem is obvious.

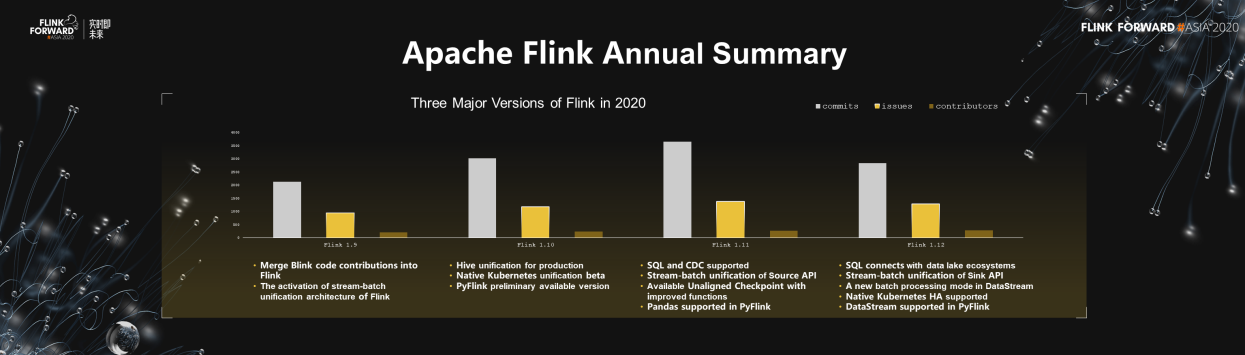

In terms of technical achievements, Flink released three major versions in 2020: Flink 1.10, Flink 1.11, and the latest, Flink 1.12, in December.

Compared with Flink 1.9 in 2019, these three versions bring substantial improvements. Flink 1.9 merged the Blink code contributions into Flink, officially launching Flink's stream-batch unification architecture. In 2020, this architecture was further upgraded and put into practice. In Flink SQL development scenarios, SQL with stream-batch unification and CDC for reading binlogs from databases are now available, and Flink connects to the new data lake architecture. Since Flink is increasingly used in AI scenarios, Python support has been improved so that PyFlink fully supports Flink development. Efforts have also been made in the Kubernetes ecosystem.

After this year's iterations, Flink can run completely in a cloud-native way on the Kubernetes ecosystem, independent of Hadoop. In the future, Flink deployments can also be better integrated with other online services in the Kubernetes ecosystem.

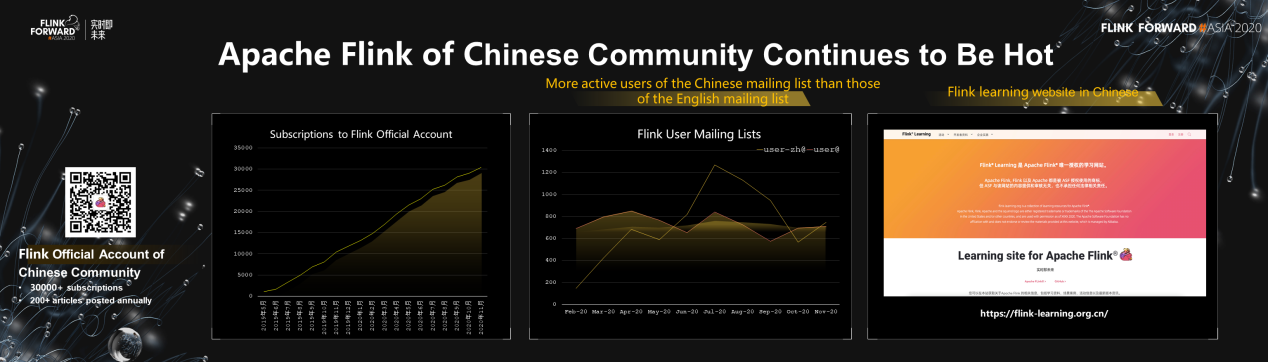

The following describes the development of the Apache Flink Chinese community.

Firstly, Flink may be the only top-level Apache project with a Chinese mailing list. As an international software foundation, Apache mainly communicates in English. However, as Flink has become more popular than ever in China, a Chinese mailing list has also been provided. The Chinese mailing list is now more active than the English one, and China has become the region with the most active Flink users.

Secondly, the Flink Chinese community has launched an official account, shown on the left of the figure above. Every week it pushes community news, event information, and best practices to keep developers up to date with the community. More than 30,000 active developers have subscribed, and over 200 posts are published annually, covering Flink technologies, ecosystems, and practices.

The official Chinese website of Flink was launched not long ago. It is intended to help more developers learn Flink technology easily and better understand how Flink is used in industry. The Flink DingTalk group is also available for technical exchange.

Now, Flink has become the de facto standard for real-time computing. It's believed that mainstream IT and technology-driven companies around the world have already adopted Flink for real-time computing. Flink Forward Asia 2020 invited more than 40 top companies to share their Flink technologies and practices. Their talks suggest that more companies in various industries will use Flink to solve real-time data problems in the future.

Technical innovation is believed to be the core driving force behind the continuous development of open-source projects and communities. This section reviews the technical development of Flink in 2020 from three perspectives. The first is technological innovation in the stream computing engine kernel.

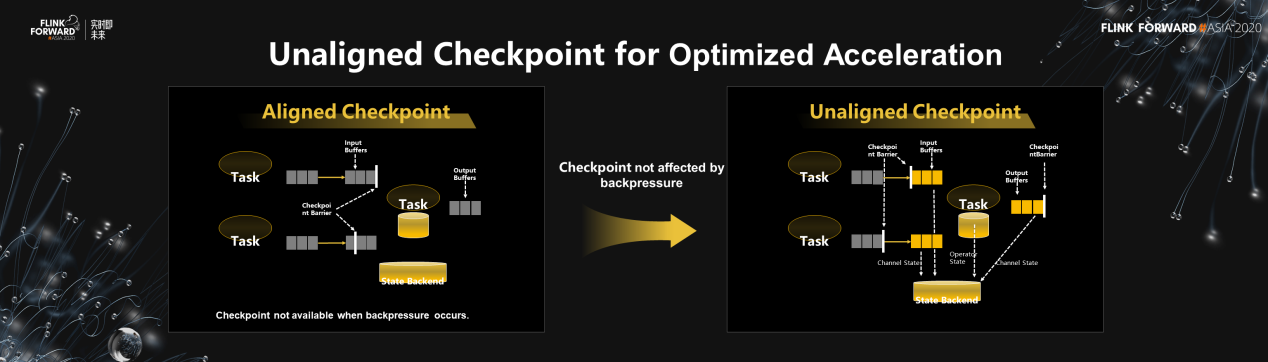

The first innovation is the Unaligned Checkpoint. Checkpointing requires barriers to be inserted continuously into the real-time data stream to take regular snapshots, which is one of the most basic concepts of Flink. In the existing Checkpoint mode, barriers need to be aligned, so a Checkpoint may fail to complete under backpressure or heavy computing load. Unaligned Checkpoint was therefore launched this year, enabling Checkpoints to complete quickly even under backpressure.

Users can set an alignment timeout to switch automatically between Unaligned Checkpoint and the existing aligned Checkpoint. In normal cases, an aligned Checkpoint is triggered. Under backpressure, Flink switches to the Unaligned Checkpoint.
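The switching rule can be illustrated with a toy model. The sketch below is not Flink code: the function name, the `CheckpointDecision` type, and the millisecond values are assumptions made for illustration. It only shows the idea that a Checkpoint starts aligned and falls back to unaligned once barrier alignment takes longer than the configured timeout.

```python
from dataclasses import dataclass


@dataclass
class CheckpointDecision:
    mode: str       # "aligned" or "unaligned"
    waited_ms: int  # time spent waiting for barriers before snapshotting


def choose_checkpoint_mode(barrier_arrival_ms, alignment_timeout_ms):
    """Toy model of one operator with several input channels.

    barrier_arrival_ms holds the arrival time (in ms) of the same
    checkpoint barrier on each input channel. Under backpressure,
    some channels deliver their barrier much later than others.
    """
    alignment_ms = max(barrier_arrival_ms) - min(barrier_arrival_ms)
    if alignment_ms <= alignment_timeout_ms:
        # All barriers arrived in time: take a normal aligned snapshot.
        return CheckpointDecision("aligned", alignment_ms)
    # Alignment took too long: stop waiting and snapshot in-flight data too.
    return CheckpointDecision("unaligned", alignment_timeout_ms)


# Hypothetical numbers: a healthy job and a backpressured job.
print(choose_checkpoint_mode([0, 40, 120], alignment_timeout_ms=1000))
print(choose_checkpoint_mode([0, 40, 30000], alignment_timeout_ms=1000))
```

In the second call, one channel is delayed by 30 seconds, so the model gives up on alignment after the one-second timeout instead of blocking the Checkpoint.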

Another technological innovation lies in fault tolerance. Flink supports exactly-once semantics, which involves some trade-offs in the availability of the entire system. To ensure strong data consistency, the failure of any Flink node causes all nodes to roll back to the last Checkpoint, which requires restarting the entire Directed Acyclic Graph (DAG). During the restart, the business is interrupted and rolled back for a short period of time.

In fact, many scenarios do not require strong data consistency, and the loss of a small amount of data is acceptable. Scenarios such as sampled data statistics or feature computing in machine learning place higher requirements on data availability.

Therefore, a new fault tolerance mode, Approximate Failover, has been developed. It is more flexible, restarting and recovering only the nodes that have failed. The entire DAG does not need to be restarted, so the data pipeline continues without interruption.
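The difference between the two recovery modes can be sketched as follows. This is a simplified illustration rather than Flink's scheduler logic: the `plan_recovery` function, the mode names, and the three-task DAG are all hypothetical.

```python
def plan_recovery(dag, failed_task, mode):
    """Return which tasks restart and whether data may be lost.

    dag maps each task name to the list of its downstream tasks.
    mode is "exactly_once" (global rollback to the last Checkpoint)
    or "approximate" (restart only the failed task).
    """
    all_tasks = set(dag) | {t for downstream in dag.values() for t in downstream}
    if failed_task not in all_tasks:
        raise ValueError(f"unknown task: {failed_task}")
    if mode == "exactly_once":
        # Strong consistency: every task rolls back and restarts.
        return {"restart": all_tasks, "may_lose_data": False}
    if mode == "approximate":
        # Higher availability: healthy tasks keep running, and a small
        # amount of in-flight data around the failed task may be lost.
        return {"restart": {failed_task}, "may_lose_data": True}
    raise ValueError(f"unknown mode: {mode}")


# Hypothetical three-task pipeline.
dag = {"source": ["map"], "map": ["sink"], "sink": []}
print(plan_recovery(dag, "map", "exactly_once"))
print(plan_recovery(dag, "map", "approximate"))
```

The trade-off is visible in the return value: the approximate mode restarts a single task but accepts possible data loss, which suits the sampling and feature-computing scenarios described above.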

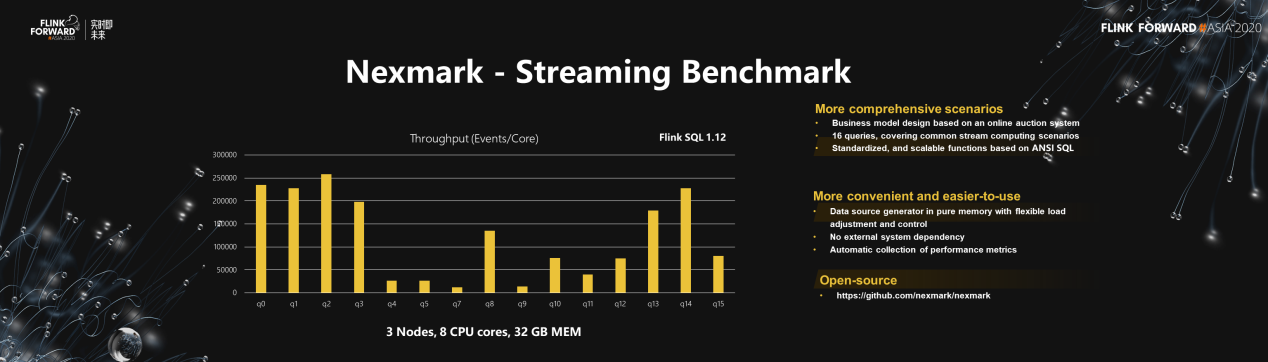

In addition, stream computing has lacked a relatively standard Benchmark tool. In traditional batch computing, the various TPC Benchmark tools cover traditional scenarios well, but no standard Benchmark exists for real-time stream computing scenarios. Based on the Nexmark paper, the first version of a Benchmark tool with 16 SQL queries, also named Nexmark, has been launched. It has three features.

Feature 1: More comprehensive scenarios covered

Feature 2: More convenient and easier-to-use

Feature 3: Open source

Nexmark has already been open-sourced. It can be used to compare stream engine performance across Flink versions or across computing engines.

Flink is a stream computing engine with Streaming at its core. On top of the Streaming kernel, however, a more versatile stream-batch unification architecture can be implemented.

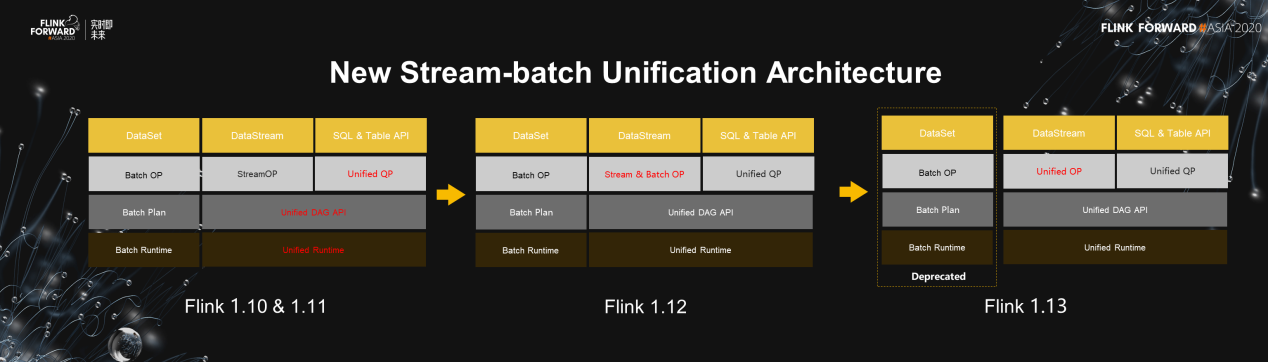

In 2020, Flink 1.10 and Flink 1.11 implemented stream-batch unification at the SQL layer and made it production-ready. They unified the SQL and Table expressions, the Query Processor, and the Runtime for stream and batch.

The just-released Flink 1.12 implements stream-batch unification of the DataStream API. By adding batch operators alongside the native stream operators, DataStream now has two execution modes. Batch operators and stream operators can also be mixed in both batch mode and stream mode.

Flink 1.13, now in progress, will fully implement stream-batch unification operators in DataStream, so that the entire computing framework, like SQL, provides unified stream-batch computing capabilities. The legacy DataSet API in Flink can then be removed to achieve a true stream-batch unification architecture.

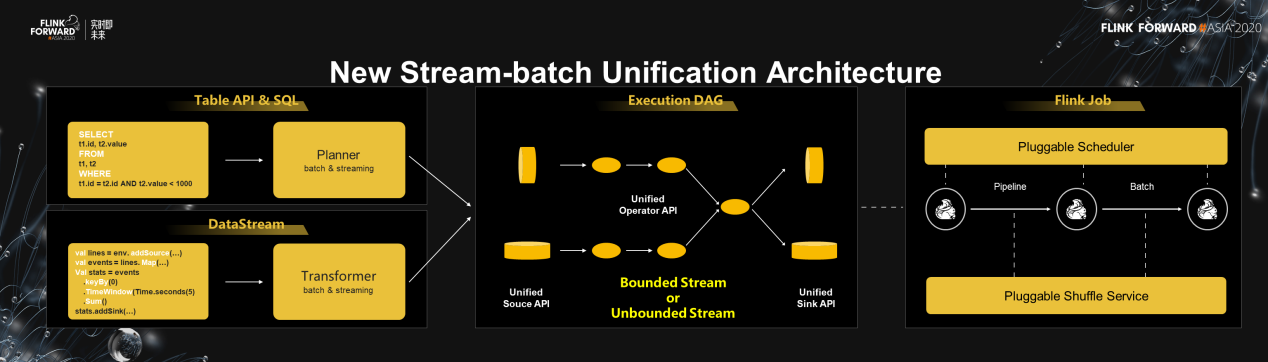

The entire Flink mechanism becomes clearer under the new stream-batch unification architecture. There are two types of APIs: the relational Table/SQL API, and DataStream, which controls physical execution more flexibly. Both provide a unified expression for stream and batch.

The logic that users express is converted into a unified execution DAG, which supports both Bounded Stream and Unbounded Stream, that is, finite and infinite streams. The Unified Connector framework is also built for stream-batch unification and can read from both stream storage and batch storage. The entire architecture truly integrates stream and batch.

Stream-batch unification is also implemented at the core Runtime layer, whose core components are Scheduling and Shuffle. The scheduling layer supports a pluggable mechanism that allows different scheduling policies to handle stream, batch, and mixed stream-batch scenarios. The Shuffle Service also supports both batch and streaming data shuffling.

An updated Shuffle Service framework, Remote Shuffle Service, is in progress. It can be deployed in Kubernetes to separate storage from computing, with the Shuffle layer serving the computing layer of Flink much like a storage service layer. This completely decoupled deployment makes Flink run more smoothly.

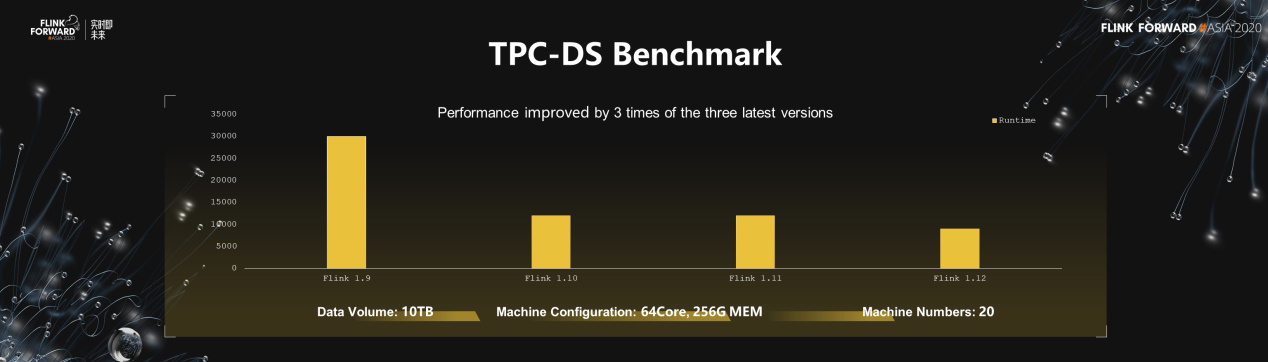

In terms of batch processing performance, Flink 1.12 is three times faster than Flink 1.9 from 2019. The TPC-DS running time has been reduced to less than 10,000 seconds with 10 terabytes of data on 20 machines. Therefore, the batch processing performance of Flink fully meets production standards and is not inferior to that of any mainstream batch processing engine in the industry.

Stream-batch unification is not only a technical problem. The following explains in detail how the stream-batch unification architecture changes data processing methods and data analysis architectures in several typical scenarios.

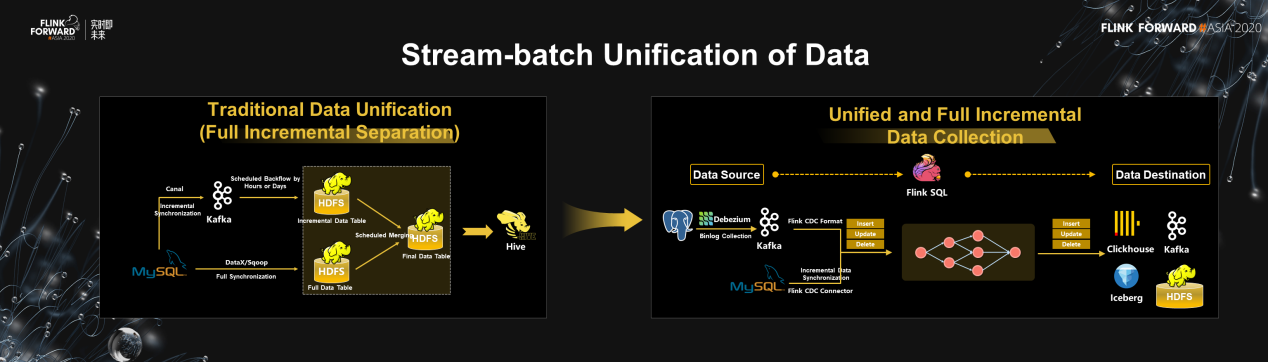

In big data scenarios, data synchronization or integration is often required to move data from databases to the data warehouse or other storage systems. The left side of the figure above shows a classic traditional data integration mode, in which full synchronization and incremental synchronization are two separate technologies. Developers need to regularly merge the incrementally synchronized data into the fully synchronized data. Repeating this merge continuously keeps the data warehouse in sync with the database.

However, the stream-batch unification architecture based on Flink results in a completely different data architecture. Since Flink SQL also supports CDC semantics for databases such as MySQL and PostgreSQL, it can synchronize database data to open-source databases such as Hive, ClickHouse, and TiDB, or to open-source KV storage. The Flink connector is also stream-batch unified: it first reads the full data from the database and synchronizes it to the data warehouse, then automatically switches to incremental mode and reads binlogs via CDC. Full and incremental synchronization are thus coordinated automatically within Flink, which is the value of stream-batch unification.
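The full-then-incremental handover can be illustrated with a small model. The sketch below is not the Flink CDC connector. It assumes a snapshot that is consistent as of a known binlog offset, and the `sync_table` function and the event tuple layout are hypothetical.

```python
def sync_table(snapshot_rows, snapshot_offset, binlog):
    """Copy a table in a full phase, then apply changes incrementally.

    snapshot_rows: dict of primary key -> row, consistent as of
        snapshot_offset.
    binlog: list of (offset, op, key, row) events, where op is
        "insert", "update", or "delete".
    """
    # Full phase: bulk-copy the snapshot into the target.
    target = dict(snapshot_rows)
    # Incremental phase: replay only the changes made after the snapshot.
    for offset, op, key, row in sorted(binlog, key=lambda event: event[0]):
        if offset <= snapshot_offset:
            continue  # already reflected in the snapshot
        if op == "delete":
            target.pop(key, None)
        elif op in ("insert", "update"):
            target[key] = row
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


# Hypothetical data: the snapshot was taken at binlog offset 10.
snapshot = {1: "a", 2: "b"}
binlog = [
    (9, "insert", 2, "b"),     # before the snapshot, skipped
    (11, "update", 1, "a2"),
    (12, "delete", 2, None),
    (13, "insert", 3, "c"),
]
print(sync_table(snapshot, 10, binlog))
```

Because one routine owns both phases and the offset that separates them, no periodic manual merge of full and incremental data is needed.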

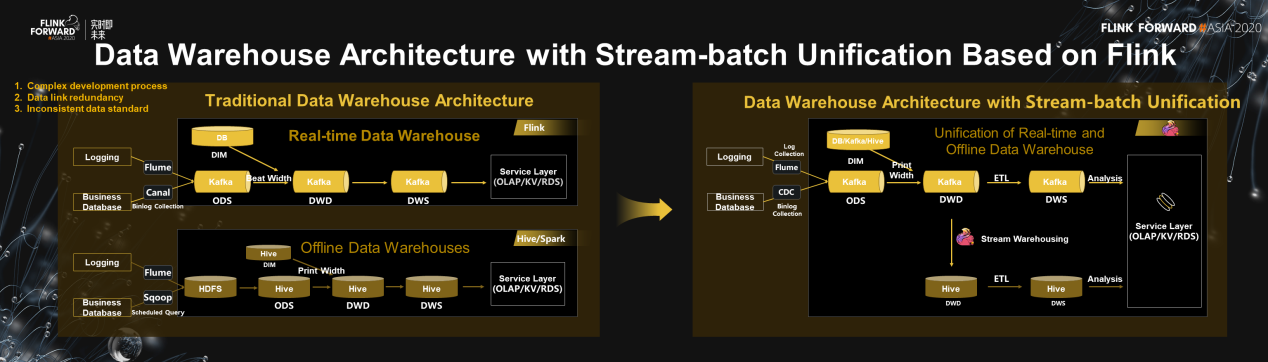

The second change is the data warehouse architecture. Currently, the mainstream architecture combines a typical offline data warehouse with a newer real-time data warehouse, but the two technology stacks are separate. Offline data warehouses commonly use Hive and Spark, while real-time data warehouses use Flink with Kafka.

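However, this solution still has three problems: two technology stacks mean duplicated development effort, the data links are redundant, and real-time and offline results can be inconsistent.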

A new stream-batch unification architecture can largely address the preceding challenges.

Firstly, all development is done in Flink SQL. One development team with one technology stack can handle all offline and real-time business statistics at no extra cost. Secondly, there is no redundancy in the data links. The DWD layer is computed only once, with no separate offline computation. Thirdly, results are naturally consistent. Both offline and real-time processes use the same engine, the same SQL, and the same UDFs written by the same developers, which avoids inconsistencies between real-time and offline data.
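Why shared logic leads to consistent results can be shown with a minimal sketch. It is plain Python rather than Flink SQL, and the function names and order data are made up for illustration. A single aggregation step plays the role of the shared SQL and UDF logic, and both the batch run and the streaming run call it.

```python
def add_order(totals, order):
    """Shared business logic: accumulate revenue per category."""
    category, amount = order
    totals[category] = totals.get(category, 0) + amount
    return totals


def run_batch(orders):
    """Batch mode: consume a bounded input and return one final result."""
    totals = {}
    for order in orders:
        add_order(totals, order)
    return totals


def run_streaming(order_stream):
    """Streaming mode: emit an updated result after every event."""
    totals = {}
    for order in order_stream:
        add_order(totals, order)
        yield dict(totals)


# Hypothetical orders as (category, amount) pairs.
orders = [("books", 10), ("toys", 5), ("books", 7)]
batch_result = run_batch(orders)
streaming_results = list(run_streaming(iter(orders)))
# Once the stream has seen the same events, it agrees with the batch run.
print(batch_result == streaming_results[-1])
```

With two separate stacks, `add_order` would have to be written and maintained twice, which is exactly where real-time and offline figures tend to drift apart.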

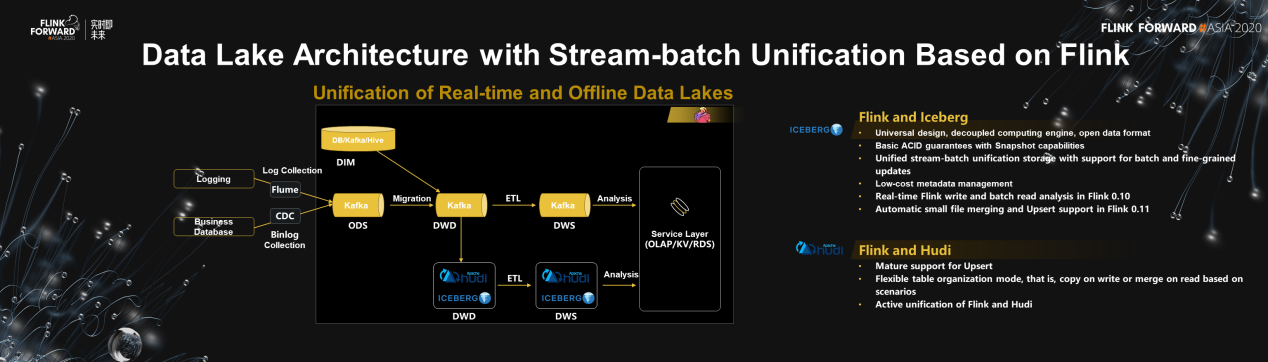

Data is generally stored at the Hive storage layer. However, as the data size gradually increases, some challenges emerge. For example, metadata management may become a bottleneck as the volume of data files increases. Another important issue is that Hive does not support real-time data updates, so it cannot serve real-time or quasi-real-time data warehouses. The relatively new data lake architecture can solve these two problems to a certain extent.

Data lakes handle metadata in a more scalable way, and data lake storage supports data updates, which makes it stream-batch unified storage. The combination of data lake storage and Flink enables the real-time and offline integrated data warehouse architecture to evolve into a real-time and offline integrated data lake architecture. For example:

Flink and Iceberg

Flink has also been closely integrated with Hudi. A complete Flink-Hudi solution will be released in the coming months.

Flink and Hudi

AI is a very popular scenario with a strong demand for big data computing power. This section covers the achievements of Flink in AI scenarios and its future plans.

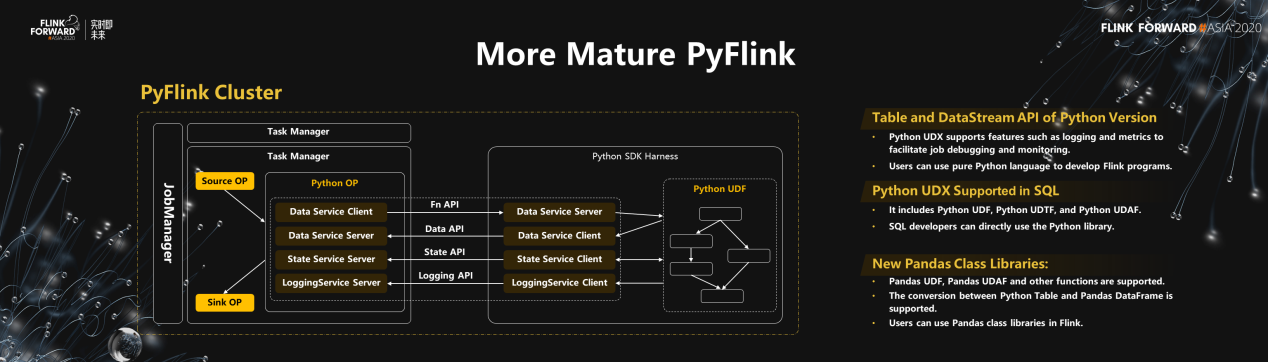

Because AI developers usually use Python, Flink provides Python support. The community did a lot of work in 2020, and the PyFlink project made great progress.

Table and DataStream API of Python version:

Python UDX supported in SQL:

New Pandas class libraries:

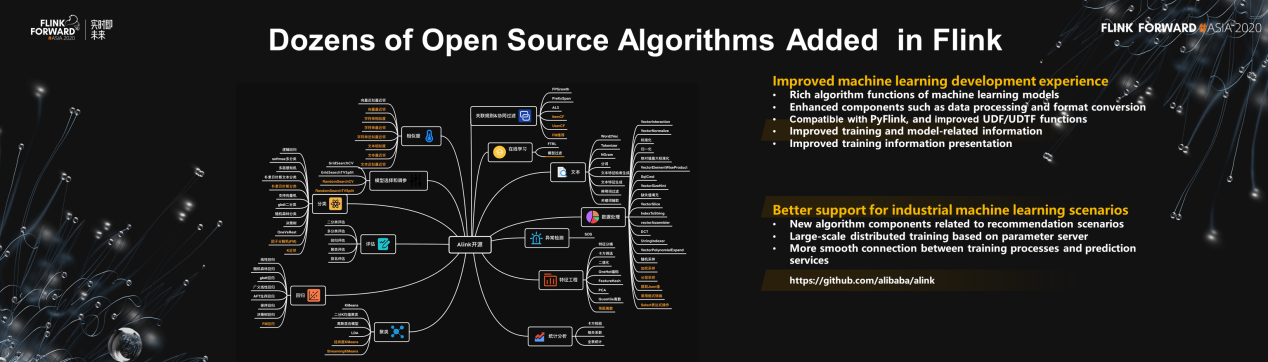

In terms of algorithms, Alibaba released Alink in 2019, a library of traditional machine learning algorithms that unifies stream and batch processing on Flink. In 2020, the Alibaba machine learning team open-sourced 10 more algorithms on Alink to cover more scenarios, further improving the machine learning development experience.

In the future, the new Flink DataStream API is expected to support iteration with stream-batch unification. Alink, built on the iteration capabilities of the new DataStream, will then be applied to machine learning in Flink. In this way, a major breakthrough can be made in standard Flink machine learning.

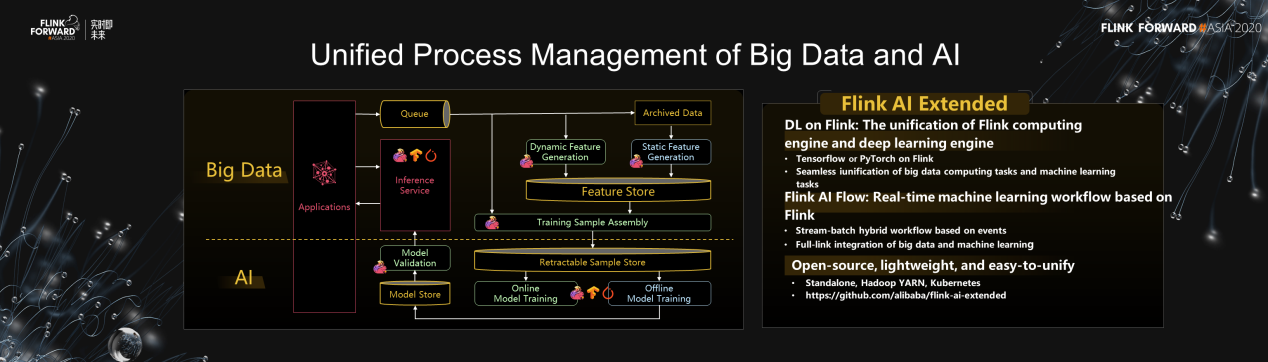

The unification of big data and AI is one of the issues worth discussing. Big data and AI technology are closely related to each other. By integrating core technologies of big data and AI, a complete set of online machine learning processes can be implemented, such as real-time recommendation. In this process, big data focuses on data processing, data verification, and data analysis, while AI focuses more on model training and prediction.

However, the whole process requires both sides to work together to truly solve business problems. Alibaba has extensive experience in this field. Flink was first used in search and recommendation scenarios, so the online search and online recommendation services of Alibaba are backed by machine learning processes implemented with Flink and TensorFlow. After all business attributes were removed and only the pure open-source technical system was kept, this was abstracted into a set of standard templates and solutions and open-sourced as Flink AI Extended.

This project mainly consists of two parts.

Deep Learning on Flink: The unification of Flink computing engine and deep learning engine

Flink AI Flow: Real-time machine learning workflow based on Flink

It's expected that, by combining open-source big data with AI, everyone can quickly apply this project to business scenarios and build online machine learning businesses such as real-time recommendation. The project is also flexible: it can run in Standalone mode, on Hadoop YARN, or on Kubernetes.

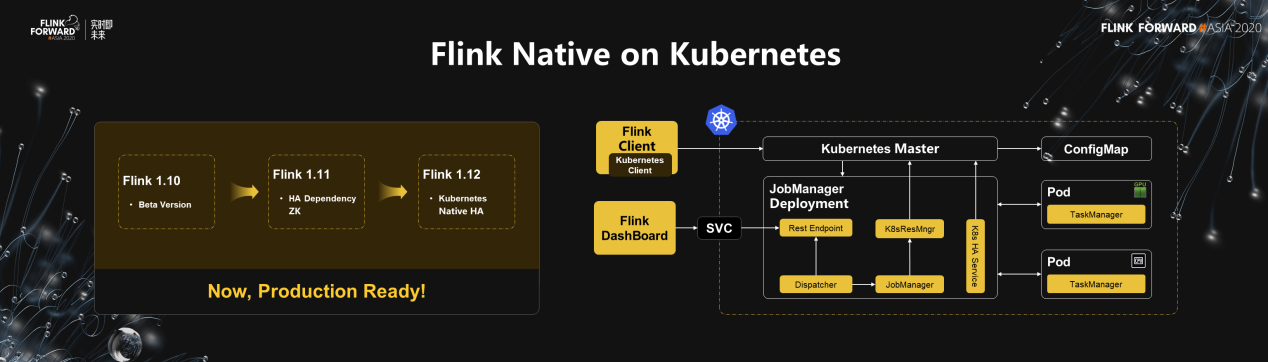

Kubernetes is the standard for cloud-native operations and is expected to have a broad future. At the very least, Flink must support running natively on Kubernetes to achieve cloud-native deployment, and this has been implemented over three versions in 2020.

The job manager of Flink can communicate directly with the master node of Kubernetes to dynamically request resources and scale up or down based on the running load. Integration with the Kubernetes HA solution is also complete, and both GPU scheduling and CPU scheduling are supported. The Flink Native on Kubernetes solution is therefore now very mature, and enterprises can use Flink 1.12 to meet their requirements for deploying Flink in Kubernetes.

Technological innovation and value must be tested by business, and business value is the final criterion. Alibaba is not only the best promoter and supporter of Apache Flink but also its biggest user. The following describes the current state and follow-up plans of Flink at Alibaba.

First of all, Flink has grown rapidly within Alibaba.

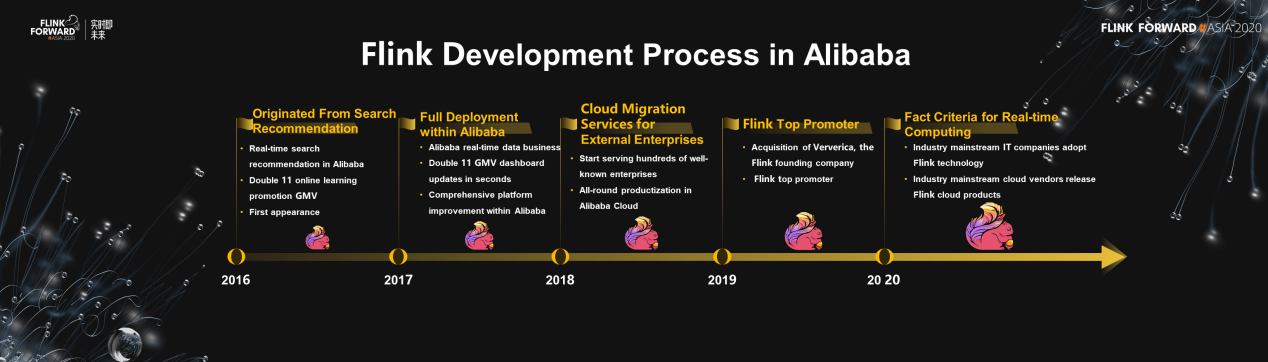

In 2016, Alibaba ran Flink on a large scale in Double 11 scenarios. It was first implemented in search and recommendation scenarios, supporting full-link real-time search recommendation and real-time online learning.

In 2017, Flink was recognized as a fully centralized real-time data processing engine that supports all businesses of Alibaba Group.

In 2018, Flink was migrated to the cloud for the first time, accumulating technologies to serve small and medium-sized enterprises.

In 2019, Alibaba took a step closer to internationalization by acquiring the founding company of Flink. Alibaba invested more resources and manpower to promote the development of the community.

By now, Flink has become the de facto international standard for real-time computing. Many cloud vendors and big data software vendors around the world have built Flink into their products, making it a standard form of cloud product.

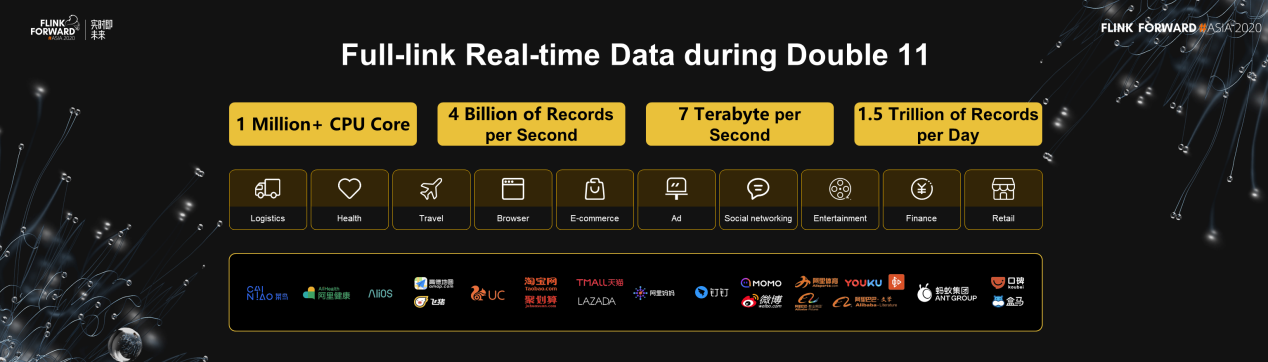

During Double 11 in 2020, the real-time computing platform based on Flink supported all internal real-time data service scenarios, running on millions of CPU cores. With basically no increase in resources, the computing capacity doubled compared with the previous year. At the same time, technical optimization made full-link real-time data a reality across the entire Alibaba economy.

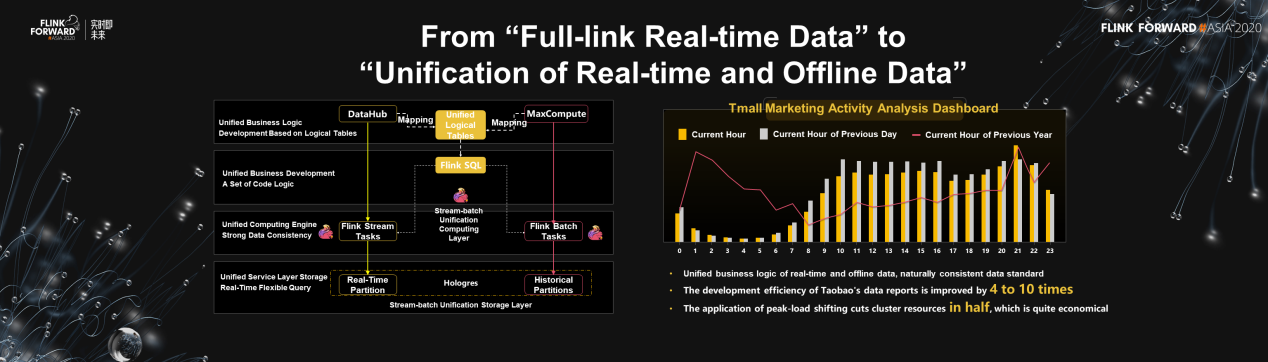

However, more work is still needed to unify real-time and offline data. During a big e-commerce promotion, real-time and offline data must be compared, and any inconsistency greatly interferes with the business. The business cannot tell whether unexpected results are caused by technical errors or by business effects that really did differ from expectations. Therefore, Double 11 in 2020 was the first time Alibaba implemented stream-batch unification scenarios. It was also the first time business scenarios with unified real-time and offline data were implemented on a large scale.

The stream-batch unification scenario implemented for Double 11 in 2020 was the Tmall marketing dashboard analysis. The dashboard presents data from different dimensions. For example, the growth rate of user transactions during Double 11 can be checked and compared with that of the previous month or even the previous year. Stream-batch unification ensures that these real-time and historical results are consistent.

Alibaba has also combined the stream-batch integrated storage capability of Hologres with the stream-batch unification computing capability of Flink to implement a full-link data architecture and a whole business architecture with stream-batch unification. This architecture keeps data naturally consistent and avoids interference with the business. Moreover, it improves the development efficiency of Taobao's data reports by 4 to 10 times.

On the other hand, the stream tasks and batch tasks of Flink run in the same cluster. The huge traffic on Double 11 drops to a trough at night, which is when a large number of offline batch analysis tasks must run to produce the reports for the next day. Applying peak-load shifting in this way cuts resources in half, which is a considerable saving.
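The saving from peak-load shifting comes down to simple arithmetic: separate clusters must each be sized for their own peak, while a shared cluster only needs to cover the highest combined load at any one time. The sketch below uses invented load figures, not Alibaba's numbers, and the `cores_needed` helper is hypothetical.

```python
def cores_needed(stream_load, batch_load):
    """Compare cluster sizing with and without peak-load shifting.

    stream_load and batch_load list the cores each workload needs
    in consecutive time slots (for example, by hour).
    Returns (cores for two separate clusters, cores for one shared cluster).
    """
    if len(stream_load) != len(batch_load):
        raise ValueError("both workloads must cover the same time slots")
    # Separate clusters: each one is sized for its own peak.
    separate = max(stream_load) + max(batch_load)
    # Shared cluster: sized for the highest combined load in any slot.
    shared = max(s + b for s, b in zip(stream_load, batch_load))
    return separate, shared


# Invented figures: streaming peaks by day, batch reports run at night.
stream = [100, 100, 20, 20]
batch = [0, 0, 80, 80]
separate, shared = cores_needed(stream, batch)
print(separate, shared)
```

With these figures, two clusters would need 180 cores while one shared cluster needs 100. The closer the two peaks are in size and the less they overlap in time, the closer the saving gets to one half.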

At present, in addition to Alibaba, many partners, such as ByteDance, NetEase, and Zhihu, are exploring a stream-batch unification architecture based on Flink. 2020 was the year in which the next-generation data architecture for Flink was first put into practice, marking the transition from full-link real-time data to the unification of real-time and offline data. Alibaba has already implemented it in the most important scenarios of Double 11.

In 2021, more enterprises are expected to try the new architecture and contribute to its improvement, driving the community toward stream-batch unification, offline and real-time unification, and big data and AI unification. The goal is to make technological innovation truly serve business, changing the big data processing architecture and the way big data and AI are integrated so that their value is realized in all industries.
