By Li Yu (Jueding), Apache Flink PMC member and senior technical expert at Alibaba.

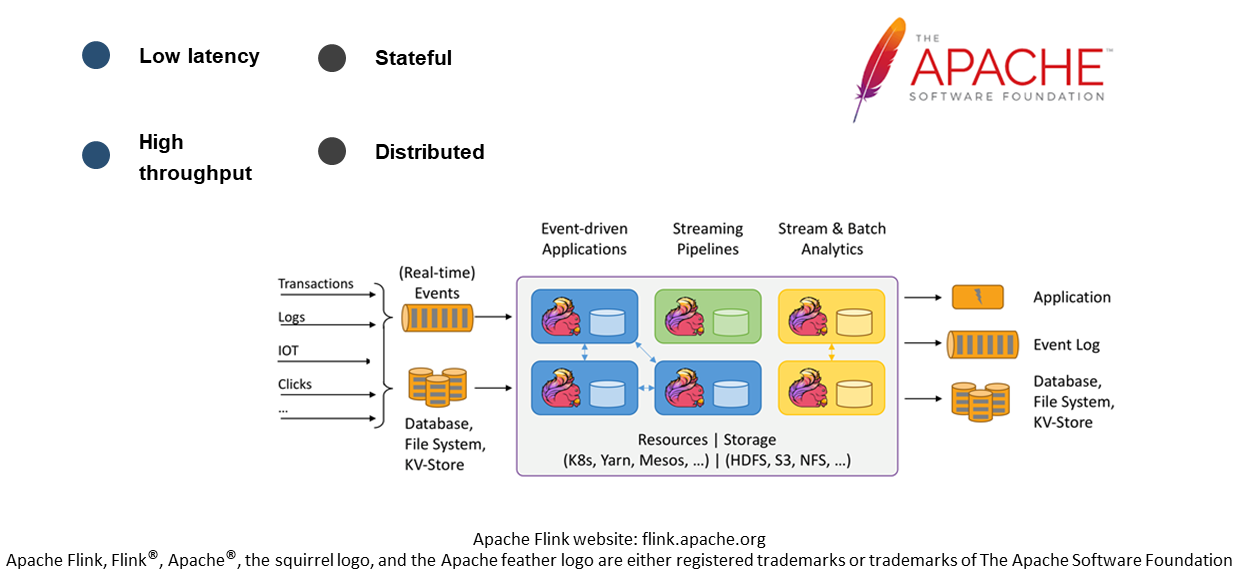

Apache Flink is an open-source, stream-based framework for stateful computation. It runs in a distributed manner with low latency and high throughput, and it excels at complex stateful computational logic.

Apache Flink is a top-level project of the Apache Software Foundation. Like many top-level Apache projects, it originated in a well-known university lab, in this case at the Technical University of Berlin, just as Spark originated in a lab at UC Berkeley.

The project was originally named Stratosphere, and its goal was to make big data processing simpler. Many of its initial contributors still serve on the Flink Project Management Committee (PMC) and continue to contribute to the community.

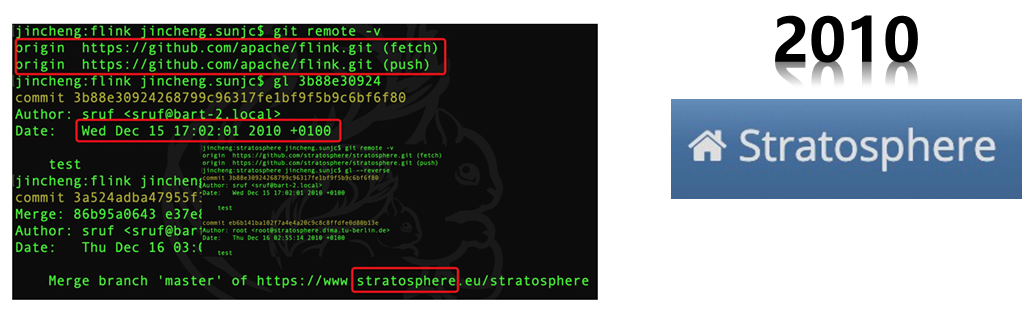

The Stratosphere project was launched in 2010. As seen from its Git commit log, its first line of code was written on December 15, 2010.

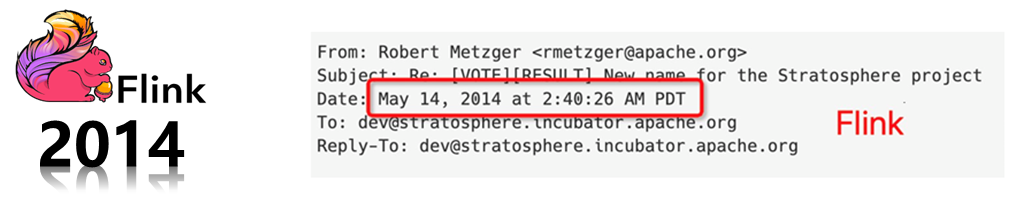

In May 2014, Stratosphere was donated to the Apache Software Foundation as an incubator project and renamed Flink.

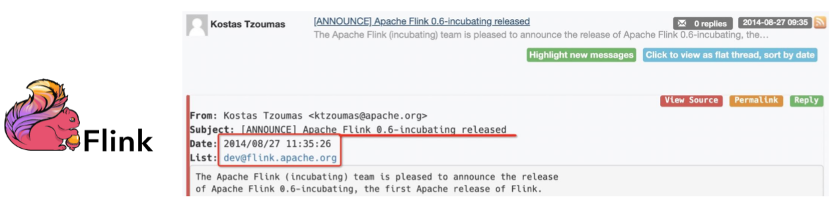

The project was very active from the start: its first incubator release, v0.6-incubating, came out on August 27, 2014.

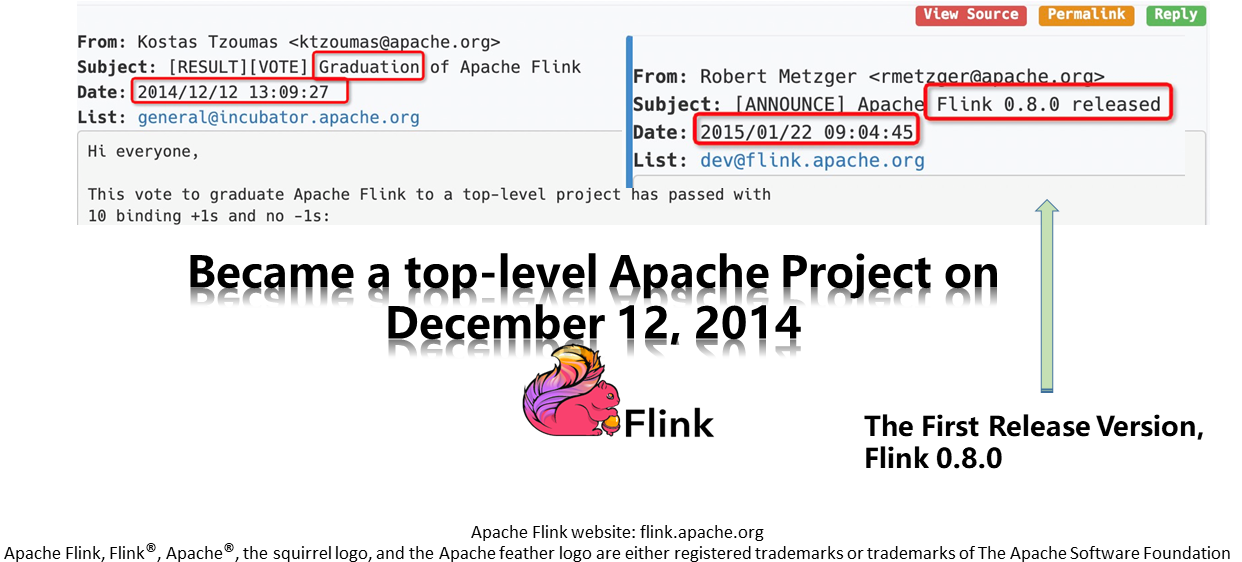

Having attracted many contributors and shown strong activity during incubation, Flink became a top-level Apache project in December 2014.

The first official release, Flink 0.8.0, came out about a month after Flink became a top-level project. Since then, the community has released a new version roughly every four months.

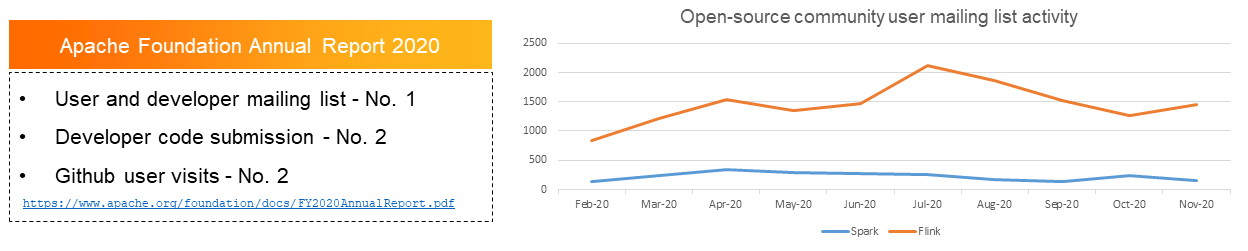

Flink is now the most active big data project in the Apache community. Its user and developer mailing lists ranked first in the Apache Software Foundation's 2020 annual report.

As the chart on the right shows, Flink's user mailing list was even more active than that of Spark, which is itself a very active project. Flink also ranks second among all Apache projects, and first among big data projects, in code commits and GitHub visits.

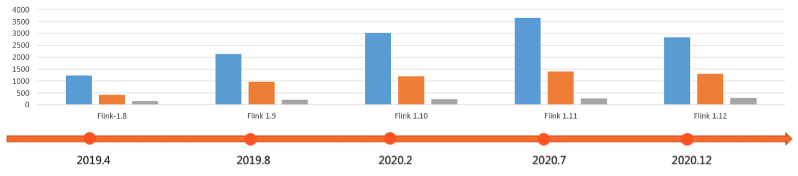

Since April 2019, the Flink community has released five versions, each with more commits and contributors than the last.

With the rapid development of the Internet, big data processing is increasingly moving toward real time.

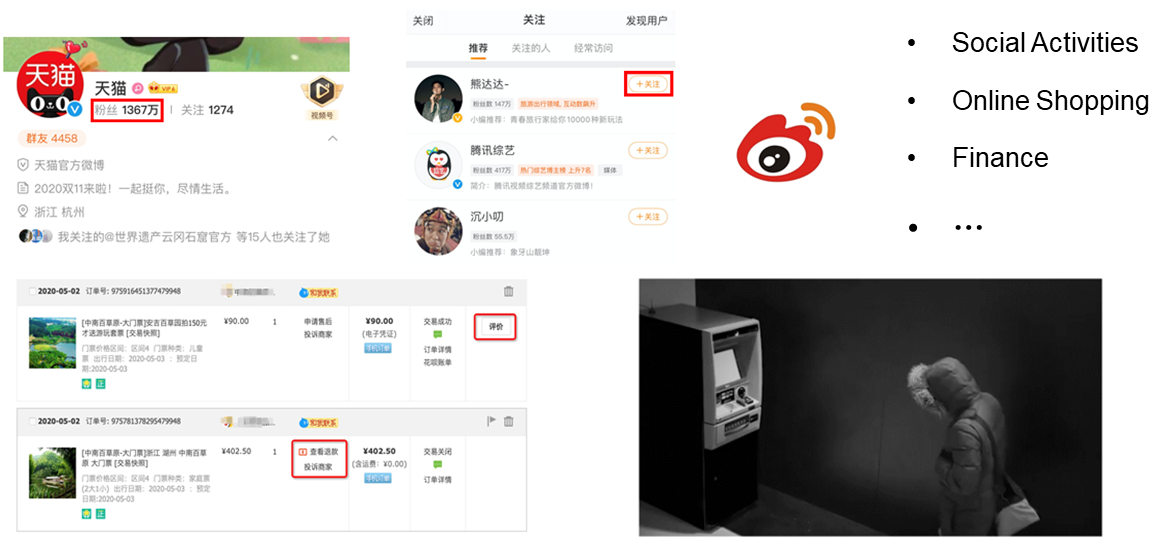

The preceding figure shows some common real-world scenarios. Examples include the real-time dashboard for the Spring Festival Gala live stream, along with the real-time turnover statistics and media reports during Double 11.

Other examples include:

- real-time traffic monitoring powered by Alibaba Cloud's Intelligence Brain;
- real-time risk control and monitoring at banks;
- the real-time personalized recommendations that Taobao, Tmall, and other applications offer based on user needs.

All of these examples show that big data processing is moving toward real time.

Along with this trend, Flink has become the de facto standard for real-time computing, both in China and abroad.

As the preceding figure shows, many companies in China and overseas use Flink. They include major international companies such as Netflix, eBay, and LinkedIn, and large Chinese companies such as Alibaba, Tencent, Meituan, and Kuaishou.

Stream computing engines have evolved through several generations. The first-generation engine, Apache Storm, is a pure streaming engine with low latency. However, it cannot avoid processing some messages more than once, which can produce incorrect results.

The second-generation engine, Spark Streaming, solves the problem of semantic correctness in stream computing. However, its design is built around batch computing, so its biggest issue is high latency. It achieves latency on the order of 10 seconds rather than second-level end-to-end latency.

Flink is the third-generation, and latest, stream computing engine. It provides both low latency and consistent message semantics, and its built-in state management greatly reduces application complexity.

The first type of Apache Flink use case is event-driven applications.

Event-driven means that one event triggers one or more subsequent events. Together, these events form information that then needs to be processed.

In social scenarios such as Twitter, when someone gains a follower, their follower count changes. When they later tweet, their followers receive a notification. This is a typical event-driven scenario.

Online shopping works the same way. When a user reviews a product, the review affects the store's star rating and also triggers detection of malicious negative reviews. Users can also click through the information feed to check logistics or other product information, which may trigger a series of further events.

Anti-fraud in finance is another example. Fraudsters first cheat victims out of their money through text messages and then withdraw it at ATMs. Cameras at the ATMs capture them, so they can be quickly identified and dealt with. This, too, is a typical event-driven application.

To sum up, event-driven applications are stateful applications. In response to events in an event stream, they trigger computation, state updates, or operations on external systems. They are common in real-time computing businesses such as real-time recommendation, financial anti-fraud, and real-time rule-based alerting.
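To make "stateful and event-driven" concrete, here is a minimal plain-Python sketch of the ATM anti-fraud rule described above. It does not use Flink's API. The event shape `(account_id, event_type, timestamp)`, the event names, and the 600-second threshold are all hypothetical. Each event either updates per-account state or uses that state to trigger an alert.

```python
from typing import Iterable, Iterator

# Hypothetical event shape: (account_id, event_type, timestamp_in_seconds)
Event = tuple[str, str, int]


def fraud_alerts(events: Iterable[Event], window_s: int = 600) -> Iterator[str]:
    """Emit an alert when an ATM withdrawal follows an incoming transfer on the
    same account within `window_s` seconds (the rule and threshold are illustrative)."""
    last_transfer: dict[str, int] = {}  # per-key state: last transfer time per account
    for account, kind, ts in events:
        if kind == "transfer_in":
            last_transfer[account] = ts  # this event only updates state
        elif kind == "atm_withdrawal":
            t0 = last_transfer.get(account)
            if t0 is not None and ts - t0 <= window_s:
                yield f"ALERT {account} at t={ts}"  # this event triggers an action
                del last_transfer[account]  # reset state once the rule has fired
```

In Flink, the same logic would typically run per key after a `keyBy` on the account ID, with the dictionary replaced by Flink-managed keyed state.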

The second type is data analysis applications. One example is real-time statistics on Double 11 turnover, including page views (PV) and unique visitors (UV).

As the map above shows, another example is calculating download volumes of open-source Apache software across regions of the world. This is essentially a process of information aggregation.

Real-time marketing dashboards are another typical Flink scenario. They analyze and aggregate large amounts of information in real time to show rises and falls in sales, as well as month-on-month and year-on-year comparisons of marketing results.
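The PV/UV statistics mentioned above boil down to a windowed aggregation. The following plain-Python sketch is not Flink code. It assumes click events arrive as `(user_id, timestamp_in_seconds)` pairs and that 60-second tumbling windows are wanted; both choices are illustrative. PV counts every click, while UV counts distinct users per window.

```python
from collections import defaultdict


def pv_uv_per_window(clicks, window_s=60):
    """clicks: iterable of (user_id, timestamp_in_seconds).
    Returns {window_start: (pv, uv)} for tumbling windows of `window_s` seconds."""
    pv = defaultdict(int)     # page views: every click counts
    users = defaultdict(set)  # unique visitors: distinct user IDs per window
    for user, ts in clicks:
        start = ts - ts % window_s  # start of the tumbling window containing ts
        pv[start] += 1
        users[start].add(user)
    return {w: (pv[w], len(users[w])) for w in sorted(pv)}
```

For example, clicks by `u1` at t=0 and t=59 and by `u2` at t=10 all fall into the window starting at 0, giving PV 3 and UV 2.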

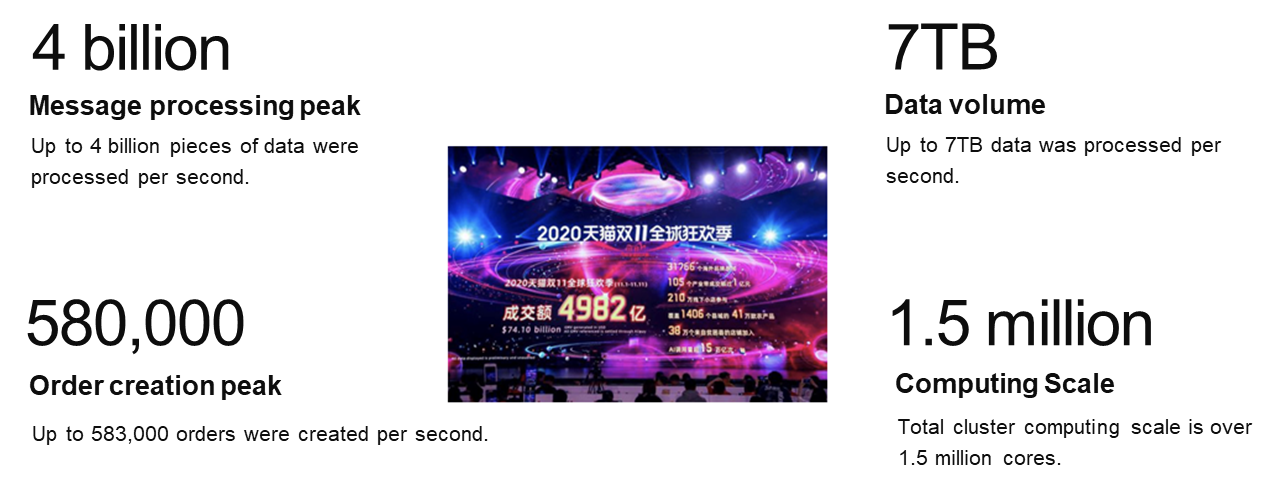

As the preceding figure shows, Alibaba's Flink-based real-time computing platform processed 4 billion messages, totaling 7 TB of data, during Double 11 in 2020. It also supported order creation at 580,000 orders per second, at a computing scale of more than 1.5 million cores.

Despite their huge data volumes and strict real-time requirements, Apache Flink handles these scenarios very well.

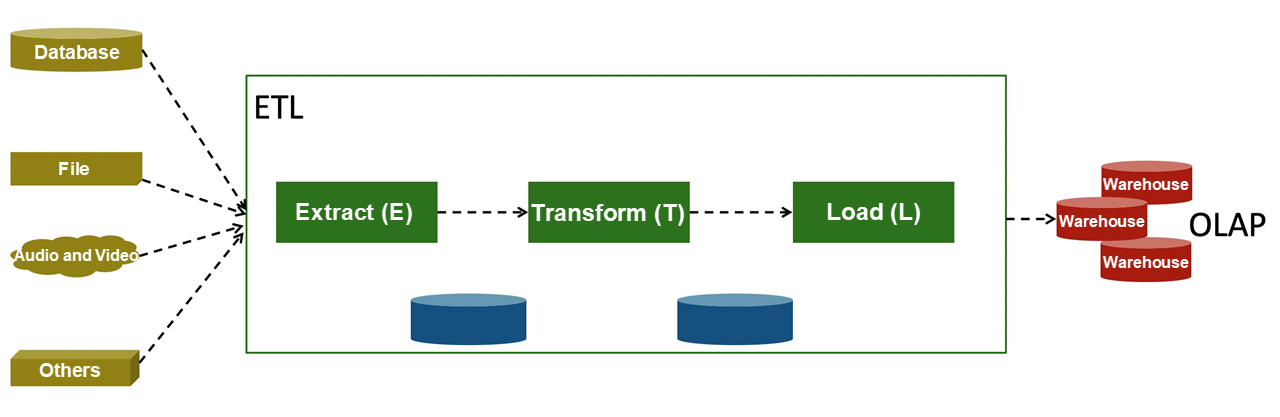

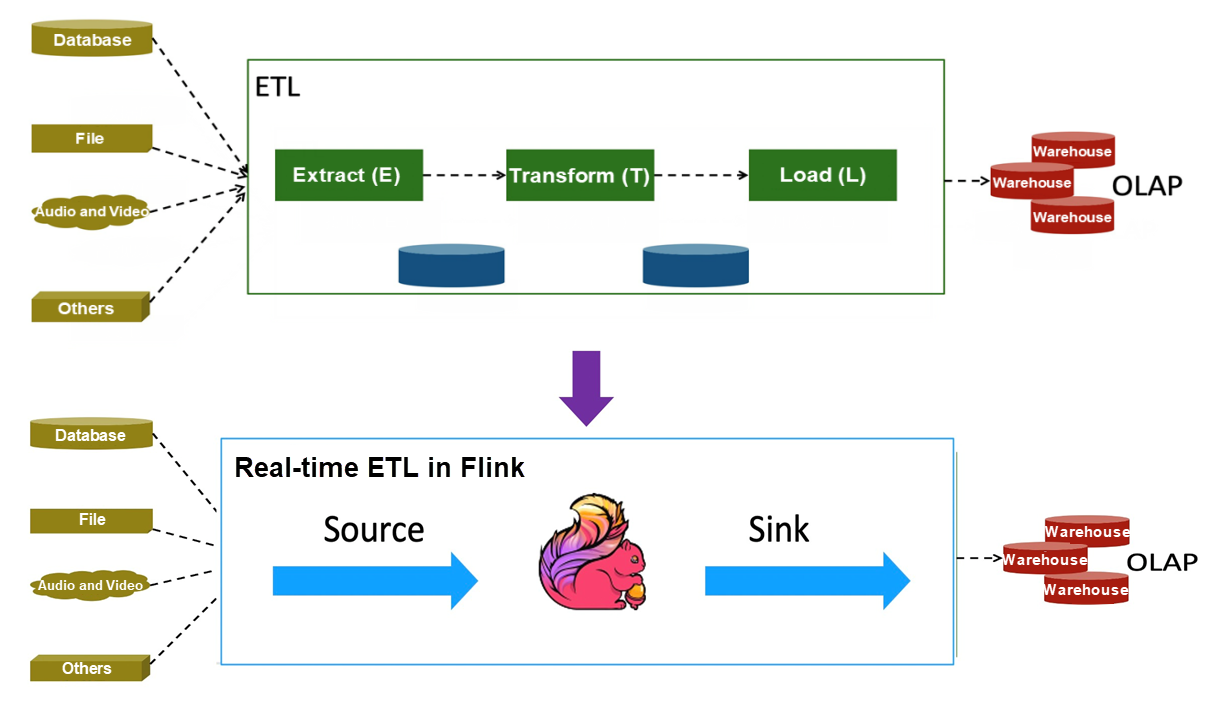

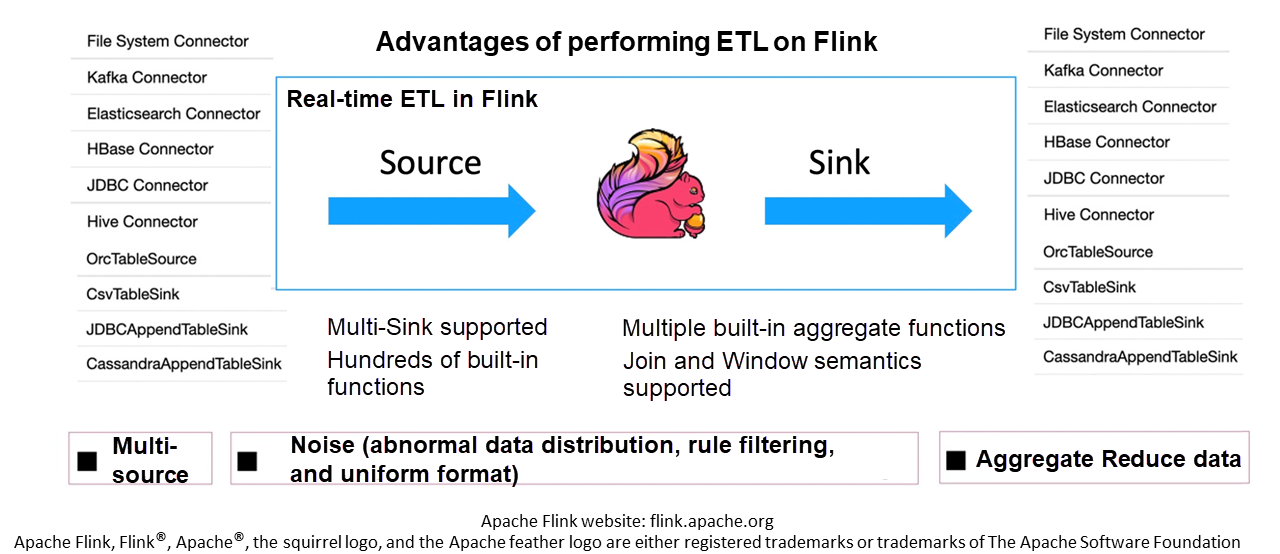

The third type is data pipeline applications, that is, ETL.

Extract-Transform-Load (ETL) extracts data from a source, transforms it, and loads it into a destination.

Traditional ETL processes data offline, typically in hourly or daily batches.

However, as big data processing moves toward real time, demand is growing for real-time data warehouses. These require data to be updated within minutes or seconds to support timely queries, real-time metrics, and real-time decision-making and analysis.

Flink is well suited to meeting these real-time needs.

Two features make Flink a very good fit for ETL applications. First, it provides a rich set of connectors that support many data sources and sinks, covering all mainstream storage systems. Second, it has common built-in aggregate functions for writing ETL programs.
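The three ETL stages can be sketched in plain Python. This is a batch-style illustration under stated assumptions, not Flink code:

- an in-memory CSV string stands in for a source connector;
- an in-memory SQLite table stands in for a sink;
- the `orders` schema and the cleaning rules are hypothetical.

In a Flink job, the same stages would run continuously over a stream.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; the second row is malformed on purpose.
RAW = """order_id,amount,currency
1,10.50,usd
2,not_a_number,usd
3,7.25,eur
"""


def extract(text):
    # Extract: read rows from a CSV "source" (a stand-in for a source connector).
    return csv.DictReader(io.StringIO(text))


def transform(rows):
    # Transform: parse amounts, drop malformed records, normalize currency codes.
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip records that cannot be parsed
        yield int(row["order_id"]), amount, row["currency"].upper()


def load(records, conn):
    # Load: write cleaned records into a SQLite table (a stand-in for a sink connector).
    conn.execute("CREATE TABLE IF NOT EXISTS orders (id INTEGER, amount REAL, currency TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()
```

Usage: `load(transform(extract(RAW)), sqlite3.connect(":memory:"))`. Because each stage is a generator, records flow through one at a time, which is closer in spirit to a streaming pipeline than materializing each stage.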

Flink is built on four core concepts: Event Streams, State, (Event) Time, and Snapshots.

Event streams can be real-time or offline. Flink is stream-based, but it can process both streams and batches, because in both cases the input is an event stream. The difference is that a real-time stream is unbounded, whereas a batch is a bounded stream.
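The idea that batch input is simply a bounded stream can be illustrated with one piece of logic applied to both kinds of input. This is a plain-Python sketch of the concept, not how Flink schedules batch jobs internally. The function `running_count` and the endless source are hypothetical.

```python
import itertools
from typing import Iterable, Iterator


def running_count(events: Iterable[str]) -> Iterator[dict[str, int]]:
    """The same logic for both cases: update a per-key count and emit the
    current result after every event."""
    counts: dict[str, int] = {}
    for key in events:
        counts[key] = counts.get(key, 0) + 1
        yield dict(counts)  # emit a copy of the current result


# Bounded input (batch): the last emitted value is the complete answer.
batch = ["a", "b", "a"]
final = list(running_count(batch))[-1]


# Unbounded input (stream): results keep coming; we take only the first three
# here because this source never ends.
def endless_source() -> Iterator[str]:
    for i in itertools.count():
        yield "a" if i % 2 == 0 else "b"


first_three = list(itertools.islice(running_count(endless_source()), 3))
```

Here `final` is `{"a": 2, "b": 1}`, while the streaming run yields ever-updating partial results.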

Flink excels at stateful computing. Complex business logic is usually stateful: it does not just process each event in isolation, but also records historical information for later computation or decisions.

Event time addresses the main problem of how to produce consistent results when data arrives out of order.

Snapshots ensure data consistency during fault recovery and also support job upgrades and migration.

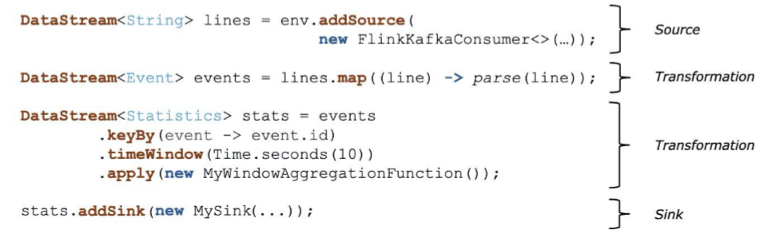

Next, let's take a detailed look at a Flink job description and its logical topology.

The code shown above is a simple Flink job description. It works as follows:

1. It defines a Kafka source, meaning the data comes from a Kafka message queue.
2. It parses each record from Kafka.
3. It partitions the parsed data with keyBy on the event ID.
4. It performs a window aggregation every 10 seconds for each group.
5. It writes the aggregated results to a custom sink.

Even this simple job description maps to an intuitive logical topology.
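To show what each operator in that job does, here is a single-process plain-Python simulation of the pipeline: source, then parse, then keyBy on the ID, then a 10-second window sum, then sink. It is not Flink code, and several details are assumptions:

- messages are JSON strings with hypothetical `id`, `ts` (milliseconds), and `value` fields;
- the aggregation is a sum;
- timestamps arrive in order;
- a window fires once any later record's timestamp passes the window end, which greatly simplifies how Flink triggers windows.

```python
import json
from collections import defaultdict

WINDOW_MS = 10_000  # 10-second tumbling windows, as in the job described above


def run_job(raw_messages, sink):
    """Simulates source -> map(parse) -> keyBy(id) -> 10 s window sum -> sink.
    Assumes records arrive in timestamp order (a simplification)."""
    buffers = defaultdict(int)  # window state: (key, window_start) -> running sum
    for raw in raw_messages:                 # Source (stand-in for Kafka)
        event = json.loads(raw)              # Map: parse the record
        key, ts, value = event["id"], event["ts"], event["value"]
        # Fire and clear every buffered window that has ended by this timestamp.
        for k, w in sorted(buffers):         # sorted() copies, so popping is safe
            if w + WINDOW_MS <= ts:
                sink((k, w, buffers.pop((k, w))))
        start = ts - ts % WINDOW_MS
        buffers[(key, start)] += value       # keyBy + window aggregate
    for k, w in sorted(buffers):             # end of input: flush remaining windows
        sink((k, w, buffers.pop((k, w))))
```

Passing `print` or a list's `append` as `sink` plays the role of the custom sink. The `buffers` dictionary is exactly the kind of window state that the state-management section below discusses.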

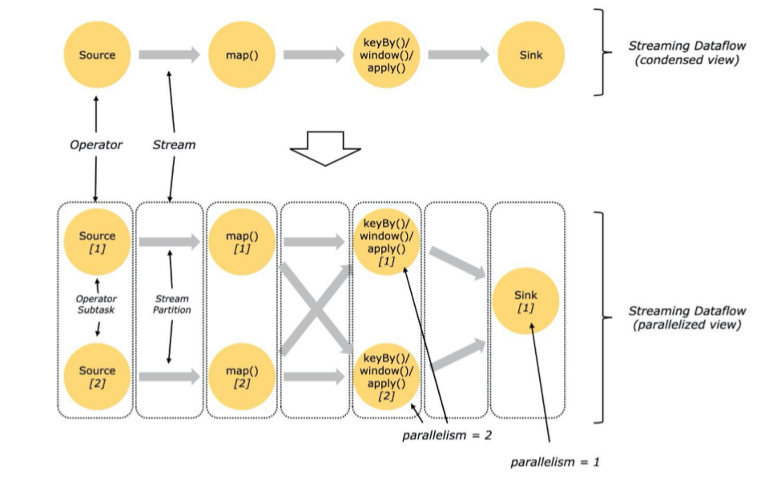

The logical topology contains four operators: Source, Map, KeyBy/Window/Apply, and Sink. This logical topology is called a streaming dataflow.

Each logical topology corresponds to a physical topology, in which every operator can run as multiple parallel instances to balance load and speed up processing.

Big data is almost always processed in a distributed way, and each operator can have a different parallelism. A keyBy groups data by key. After the upstream operator processes a record, the record is shuffled and sent to the downstream parallel instance responsible for its key. The preceding figure shows the physical topology that corresponds to the example logical topology.
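The keyBy shuffle can be sketched as hash partitioning. This plain-Python version is a simplification. Flink first maps keys to key groups using a MurmurHash, whereas here `zlib.crc32` modulo the parallelism stands in as a deterministic hash. The function name `shuffle_by_key` is hypothetical. The property that matters is kept: records with equal keys always reach the same downstream subtask.

```python
import zlib
from collections import defaultdict


def shuffle_by_key(records, parallelism):
    """Route each (key, value) record to one of `parallelism` downstream subtasks.
    Simplified stand-in for Flink's keyBy partitioning."""
    partitions = defaultdict(list)
    for key, value in records:
        # crc32 is used because Python's built-in hash() of str is randomized
        # per process; any deterministic hash would do.
        subtask = zlib.crc32(key.encode()) % parallelism
        partitions[subtask].append((key, value))
    return dict(partitions)
```

Because equal keys always land on the same subtask, each subtask can keep the state for its keys locally, as described next.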

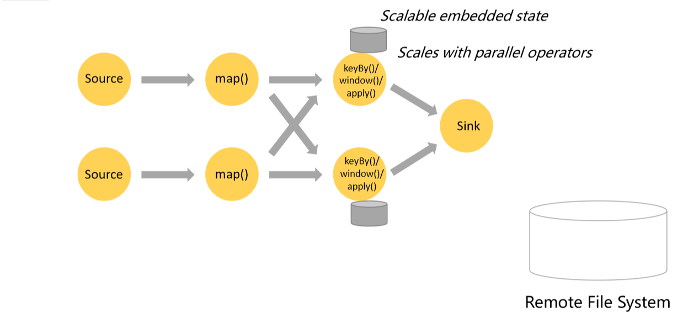

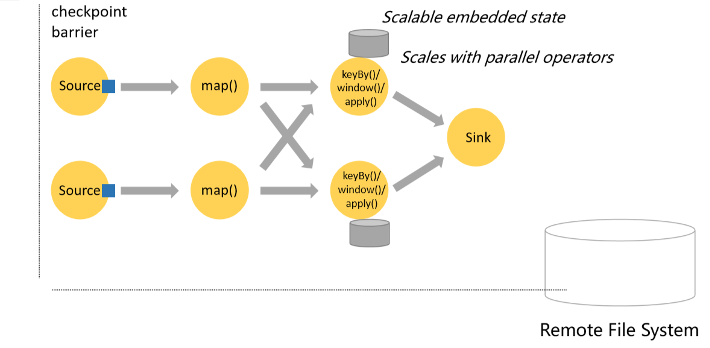

This section describes state management and snapshots in Flink.

In the window aggregation, the aggregate function processes the data every 10 seconds. The data arriving within each 10-second window must therefore be stored until the window fires. This state is kept locally in embedded storage, which can be either the process's memory or a persistent key-value store such as RocksDB. The two options differ mainly in speed and capacity: memory is faster, while RocksDB can hold more state.

In addition, each parallel instance of a stateful operator has its own local storage. State can therefore be redistributed when the operator's parallelism changes, so increasing parallelism lets the job process larger amounts of data.
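One way to see how local state can follow a change in parallelism is Flink's key-group scheme. This plain-Python sketch rests on several assumptions:

- keys hash into a fixed number of key groups; 128 is used here as an illustrative maximum parallelism;
- each parallel subtask owns a contiguous range of key groups;
- `crc32` replaces Flink's MurmurHash, and the helper names are hypothetical.

When parallelism changes, whole key groups move between subtasks, so state never has to be rehashed key by key.

```python
import zlib

MAX_PARALLELISM = 128  # number of key groups (illustrative); fixed for the job's lifetime


def key_group(key: str) -> int:
    # Simplified: Flink applies a MurmurHash to the key's hashCode(); crc32 stands in here.
    return zlib.crc32(key.encode()) % MAX_PARALLELISM


def subtask_for_group(group: int, parallelism: int) -> int:
    # Each subtask owns one contiguous range of key groups.
    return group * parallelism // MAX_PARALLELISM


def assign(keys, parallelism):
    """Map each key to the parallel subtask whose local state holds it."""
    return {k: subtask_for_group(key_group(k), parallelism) for k in keys}
```

Scaling from 2 to 4 subtasks splits each old subtask's key-group range in two. Every key that moves therefore goes from old subtask `i` to new subtask `2i` or `2i + 1`, together with its state.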

Jobs can also fail for many reasons. How can data consistency be guaranteed when a job is rerun after a failure?

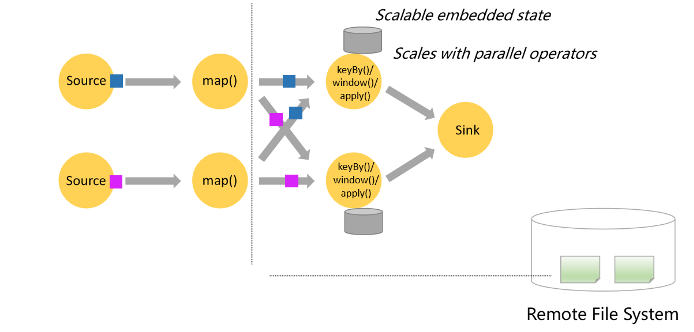

Based on the Chandy-Lamport algorithm, Flink saves the state of each node to a distributed file system as a checkpoint. The process works as follows. First, a checkpoint barrier, which is a special message, is injected at the data source.

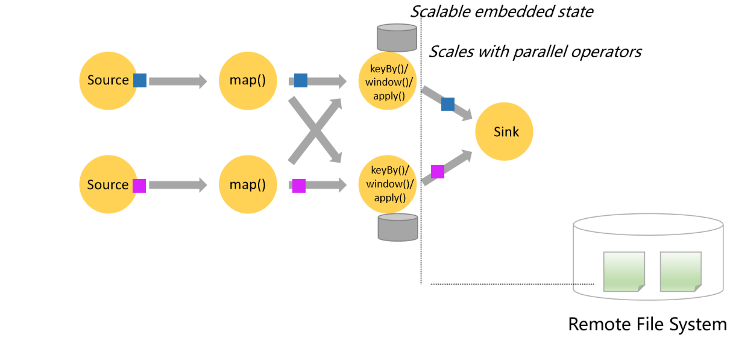

The barrier then flows with the data stream like a normal event. When the barrier reaches an operator, the operator takes a snapshot of its current state. Once the barrier reaches the sink and all states have been saved, a complete global snapshot has been formed.

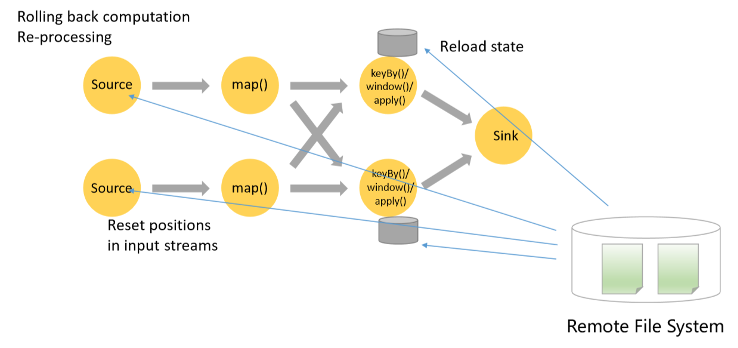

When a job fails, it can be restored from the checkpoint stored in the remote file system:

- the source is rewound to the offset recorded in the checkpoint;
- each stateful node's state is restored to its value at the time the checkpoint was taken.

Processing then resumes from that point. This guarantees consistent semantics without recomputing from the beginning.
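The barrier-and-rollback process can be simulated for the simplest case, a single source feeding one stateful operator. This is a plain-Python sketch, not Flink's implementation, and it makes these simplifications:

- a replayable list stands in for a Kafka partition;
- a dictionary `store` stands in for the remote file system;
- there is only one input channel, so barrier alignment across multiple inputs is not needed;
- the names `run`, `source`, and `SimulatedFailure` are hypothetical.

```python
BARRIER = object()  # special marker the source injects into the stream


class SimulatedFailure(Exception):
    """Raised to simulate a crash in the middle of processing."""


def source(log, start, barrier_every):
    # Replayable source: yields (offset, record) and injects a barrier after
    # every `barrier_every` records.
    for offset in range(start, len(log)):
        yield offset, log[offset]
        if (offset + 1) % barrier_every == 0:
            yield offset + 1, BARRIER  # the barrier carries the offset to resume from


def run(log, store, fail_at=None, barrier_every=3):
    """Stateful per-key sum. `store` holds the latest completed checkpoint
    (source offset + operator state)."""
    checkpoint = store.get("latest")
    state = dict(checkpoint["state"]) if checkpoint else {}  # restore operator state
    start = checkpoint["offset"] if checkpoint else 0        # rewind the source
    for offset, record in source(log, start, barrier_every):
        if record is BARRIER:
            # The barrier reached the operator: snapshot its state. With a single
            # operator feeding the sink, this completes the global snapshot.
            store["latest"] = {"offset": offset, "state": dict(state)}
            continue
        if offset == fail_at:
            raise SimulatedFailure(f"crash at offset {offset}")
        key, value = record
        state[key] = state.get(key, 0) + value
    return state
```

If a run fails partway through, calling `run(log, store)` again restores the last snapshot and replays only the records after its offset. The final state equals that of a failure-free run: no record is double-counted in the state, and none is lost.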

Event Time is another important concept in Flink.

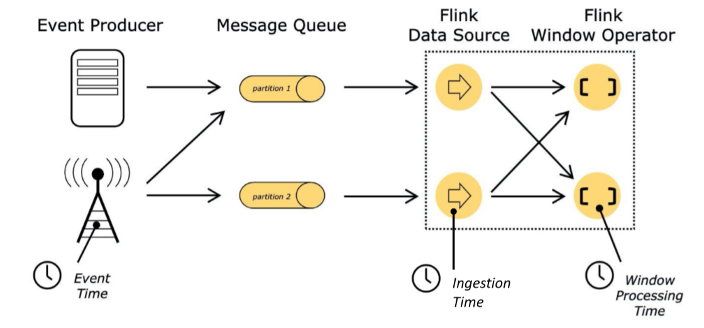

Flink defines three notions of time:

- Event Time is the time at which an event occurred.
- Ingestion Time is the time at which an event arrives at a Flink data source, that is, when it enters the Flink processing framework.
- Processing Time is the current system time when an event reaches an operator.

How do they differ?

In practice, there can be a long delay between when an event occurs and when it is written to the system. Consider passengers who retweet, comment on, or like tweets on a subway with weak reception: these operations may not reach the system until the passengers leave the subway. As a result, events that occurred earlier may arrive later. Even so, many scenarios choose Event Time because it reflects when events actually occurred and therefore produces more accurate results.

The cost is that, because of these delays, the system must wait longer for all of a window's events to arrive, so end-to-end latency may be high.

Out-of-order events must also be handled. Using Processing Time instead offers fast processing and low latency, but it cannot reflect when an event actually occurred. The choice should therefore be based on each application's requirements.
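The subway example can be made concrete by assigning the same events to one-minute windows twice: once by event time and once by arrival (processing) time. This is a plain-Python sketch. The events, their timestamps, and the 60-second window size are hypothetical.

```python
from collections import Counter

WINDOW_S = 60  # 1-minute tumbling windows (illustrative)

# Hypothetical events: (name, event_time_s, arrival_time_s).
# The "like" happened at t=50 on the subway but only reached the system at t=130.
EVENTS = [
    ("tweet", 10, 11),
    ("like", 50, 130),
    ("comment", 70, 71),
]


def count_per_window(events, use_event_time):
    """Count events per tumbling window, assigning each event to a window by
    its event time or by its arrival (processing) time."""
    counts = Counter()
    for _name, event_time, arrival_time in events:
        t = event_time if use_event_time else arrival_time
        counts[t - t % WINDOW_S] += 1  # start of the window containing t
    return dict(counts)


by_event_time = count_per_window(EVENTS, use_event_time=True)
by_processing_time = count_per_window(EVENTS, use_event_time=False)
```

By event time, the late "like" is counted in the window starting at 0, where it belongs. However, that window cannot safely emit its result until the system has waited for such stragglers; Flink tracks this progress with watermarks. By processing time, results could be emitted immediately, but the "like" is attributed to the wrong minute.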

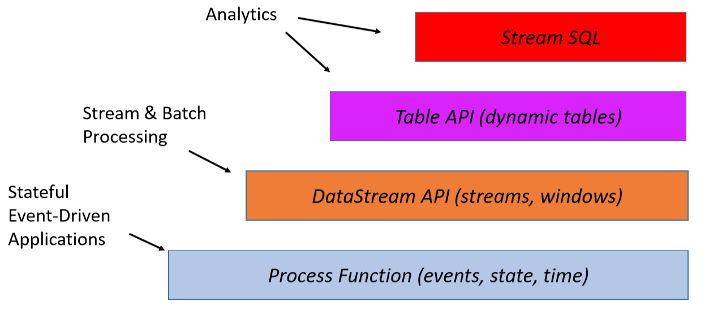

Flink offers four layers of APIs. The bottom layer is the ProcessFunction, which users customize to implement their own logic with fine-grained control over basic elements such as time and state.

The next layer up is the DataStream API, which can process both streams and batches. It provides many built-in functions that make it convenient for users to express their program logic.

The top layers are the Table API and Stream SQL, which offer very concise, high-level expressions. Examples of each layer are described below.

In processElement, the logic for handling each event and its state can be customized. A timer can also be registered, giving fine-grained, low-level control over what happens when the timer fires.
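The interplay between processElement, state, and timers can be mimicked with a toy runtime in plain Python. In Flink's Java API, this pattern corresponds to a `KeyedProcessFunction` whose `processElement` registers a timer via `ctx.timerService()` and whose `onTimer` callback runs when the timer fires. The class below is only a hypothetical simulation of that shape. The example logic, which emits a key's count once 10 time units pass without a new event for that key, is also illustrative.

```python
import heapq


class MiniKeyedProcessFunction:
    """Toy runtime mimicking the shape of a keyed process function:
    process_element() updates per-key state and registers a timer;
    on_timer() runs once time passes the timer's timestamp."""

    TIMEOUT = 10  # emit a key's count 10 time units after its last event

    def __init__(self):
        self.state = {}   # key -> (count, last_seen)
        self.timers = []  # min-heap of (fire_time, key)
        self.output = []

    def process_element(self, key, ts):
        count, _ = self.state.get(key, (0, ts))
        self.state[key] = (count + 1, ts)                        # update state
        heapq.heappush(self.timers, (ts + self.TIMEOUT, key))    # register a timer

    def on_timer(self, fire_time, key):
        count, last_seen = self.state.get(key, (0, None))
        # Only the timer registered by the key's latest event is still relevant.
        if last_seen is not None and last_seen + self.TIMEOUT == fire_time:
            self.output.append((key, count))
            del self.state[key]  # clear state after emitting

    def advance_time(self, now):
        # Fire every timer whose timestamp is <= now, in timestamp order.
        while self.timers and self.timers[0][0] <= now:
            fire_time, key = heapq.heappop(self.timers)
            self.on_timer(fire_time, key)
```

For example, after events for `"a"` at t=0 and t=5, the timer registered at t=0 fires at t=10 but is ignored because a newer event arrived. The timer registered at t=5 fires at t=15 and emits `("a", 2)`.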

The DataStream API example is a job description. It uses built-in functions such as Map, keyBy, timeWindow, and sum, as well as custom ProcessFunctions such as MyAggregationFunction.

Logic described with the Table API and Stream SQL is even more intuitive. Data analysts can write it in a declarative language without knowing the underlying details. For more information about the Table API and Stream SQL, see Course 5.

There are three roles in the Flink runtime architecture.

The first is the client, which submits the application. If the application is a SQL program, the client also runs the SQL optimizer and generates the corresponding JobGraph. The client then submits the JobGraph to the JobManager, which acts as the master node of the entire job.

The JobManager starts a set of TaskManagers as worker nodes. The worker nodes are connected according to the job topology and execute the corresponding computing logic. The JobManager itself mainly handles control flow.

Finally, let's look at the environments in which Flink can be deployed.

Flink can be deployed manually on YARN, Mesos, and standalone clusters. It can also be submitted to cloud-native Kubernetes environments through container images.

In short, Flink can currently be deployed in a wide range of physical environments.
