In this blog, Cao Fuqiang, Senior System Engineer and Head of Data Computing at the Machine Learning R&D Center at Weibo, introduces how Weibo applies Realtime Compute for Apache Flink.

Weibo is China's leading social media platform, with 241 million daily active users and 550 million monthly active users. Mobile users account for more than 94% of the daily active users.

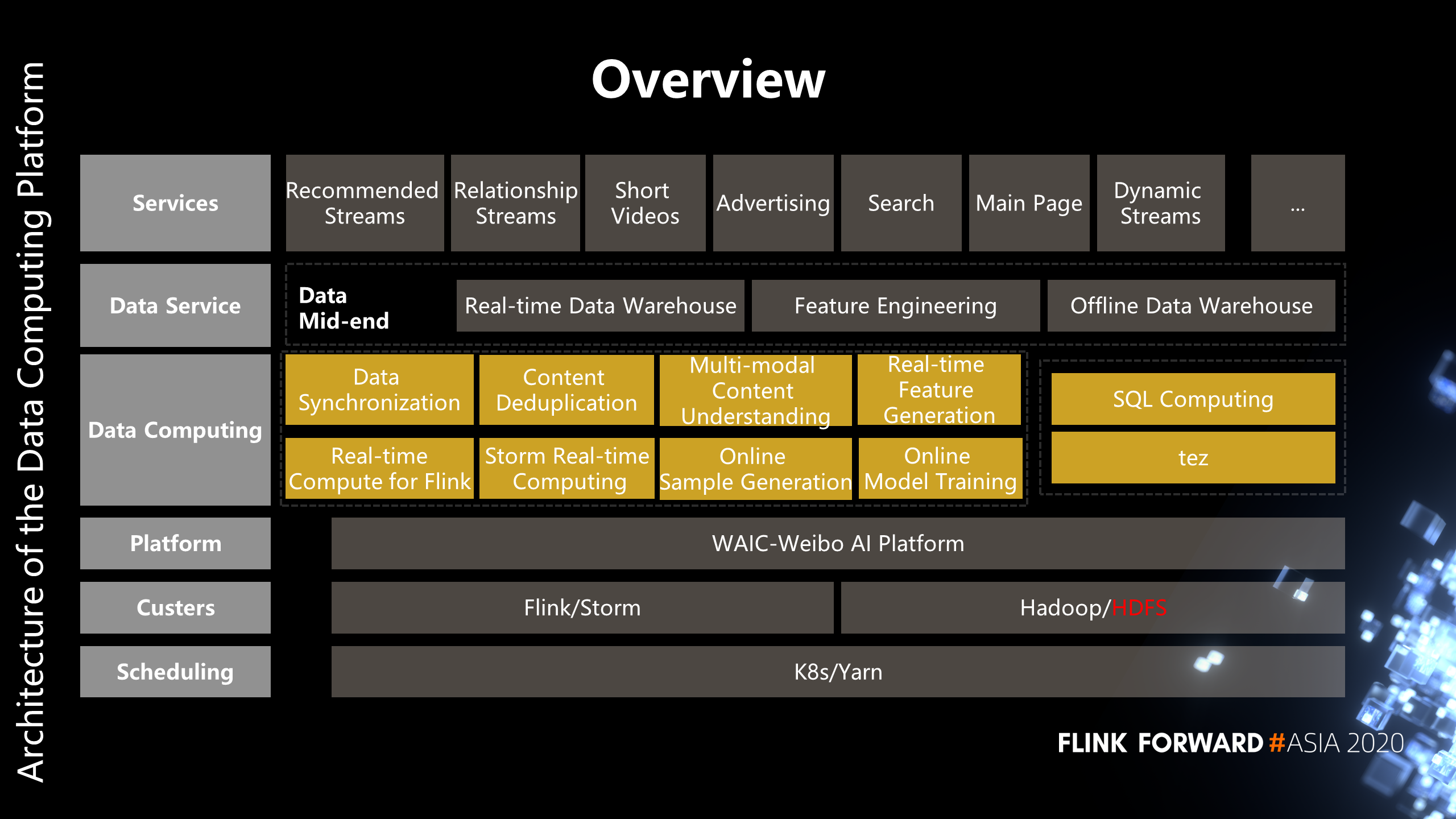

The following figure shows the architecture of the data computing platform.

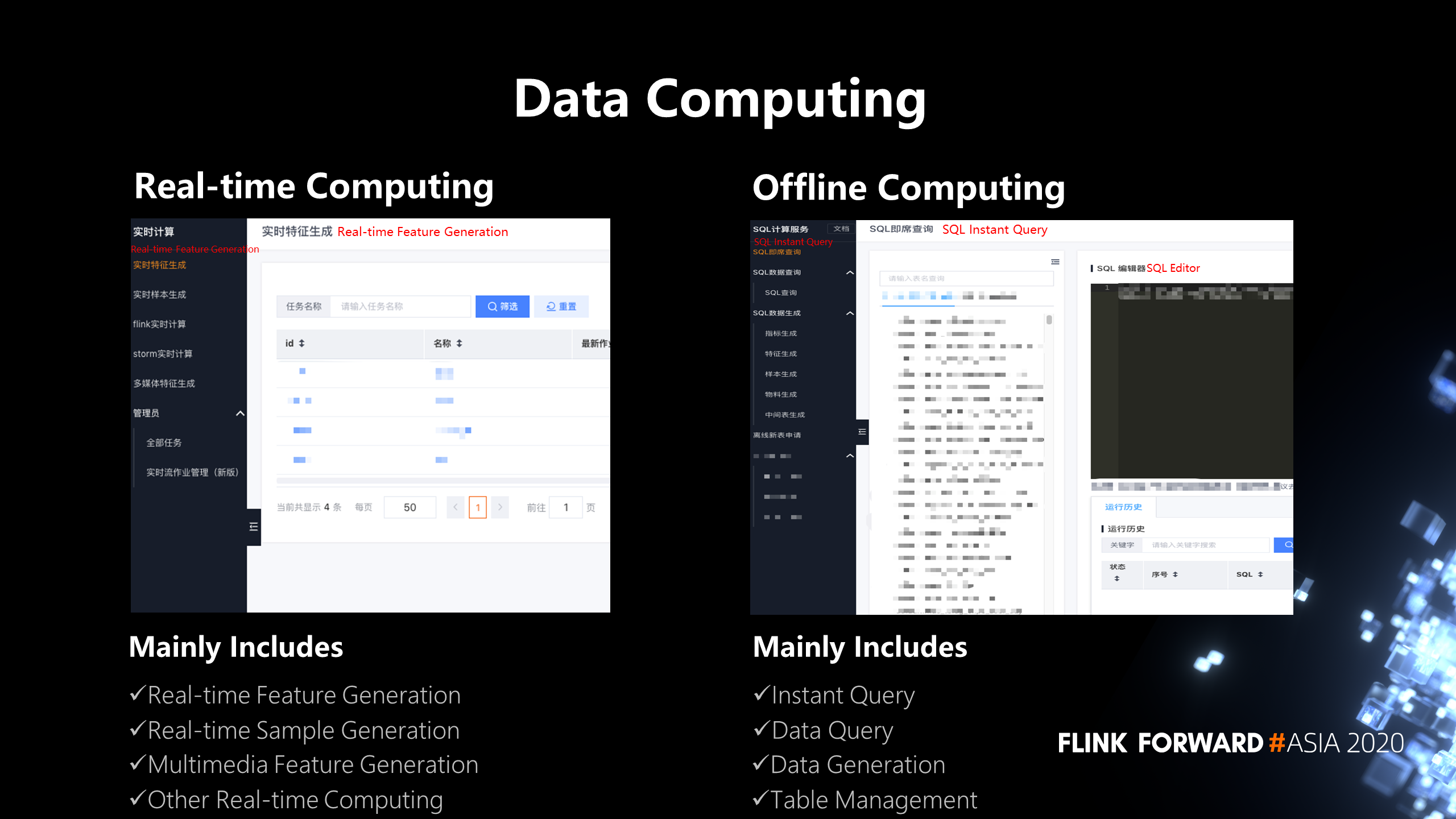

The following two figures show data computing, including real-time compute and offline computing.

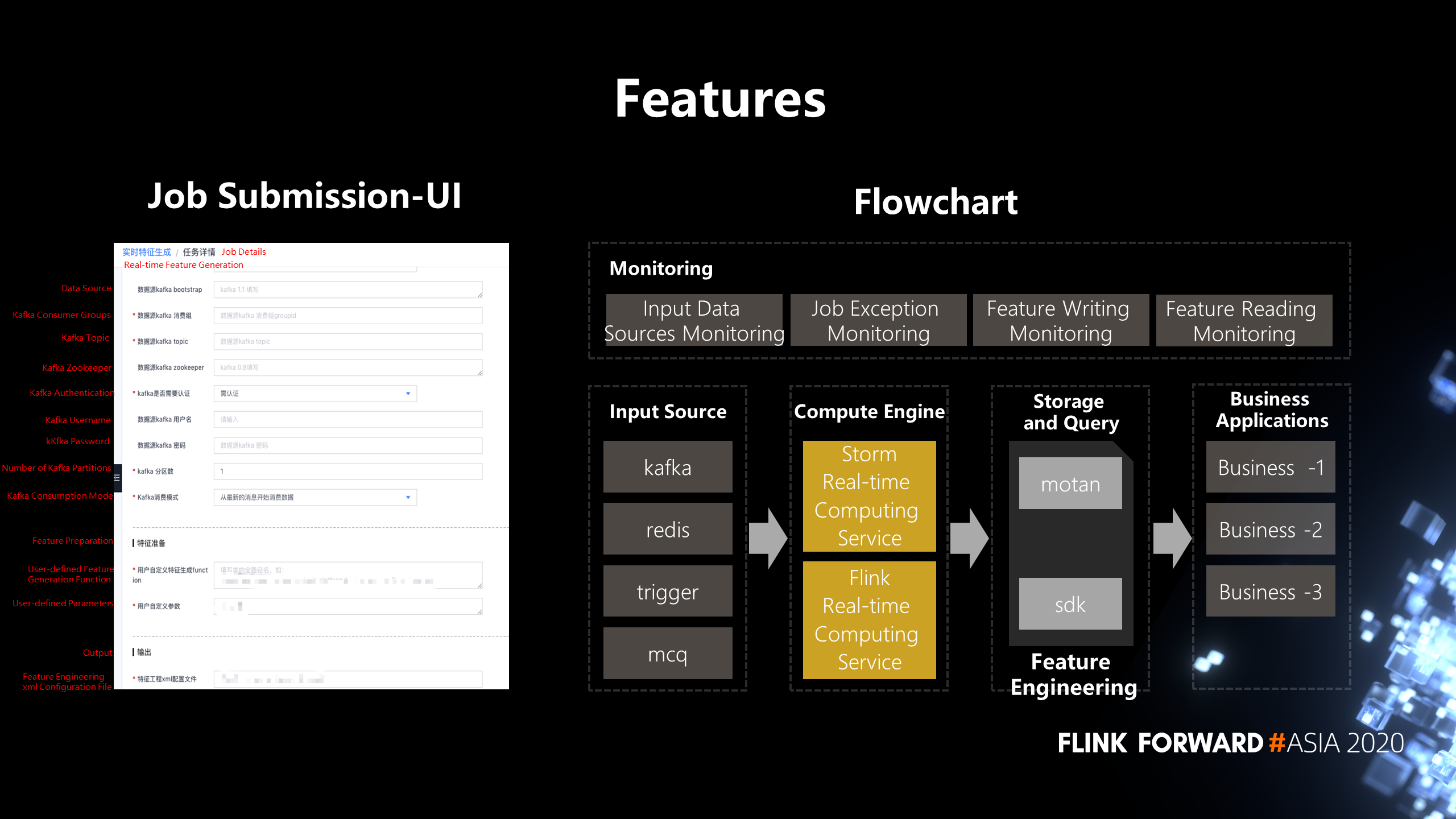

As shown in the following figure, we built a real-time feature generation service on Flink and Storm. A job consists of job details, input source, feature generation, output, and resource configuration. Users develop feature-generation UDFs against our pre-defined interfaces. Everything else, such as reading input and writing features, is provided by the platform and only needs to be configured on the page. In addition, the platform automatically generates monitoring for input data sources, job exceptions, feature writes, and feature reads.

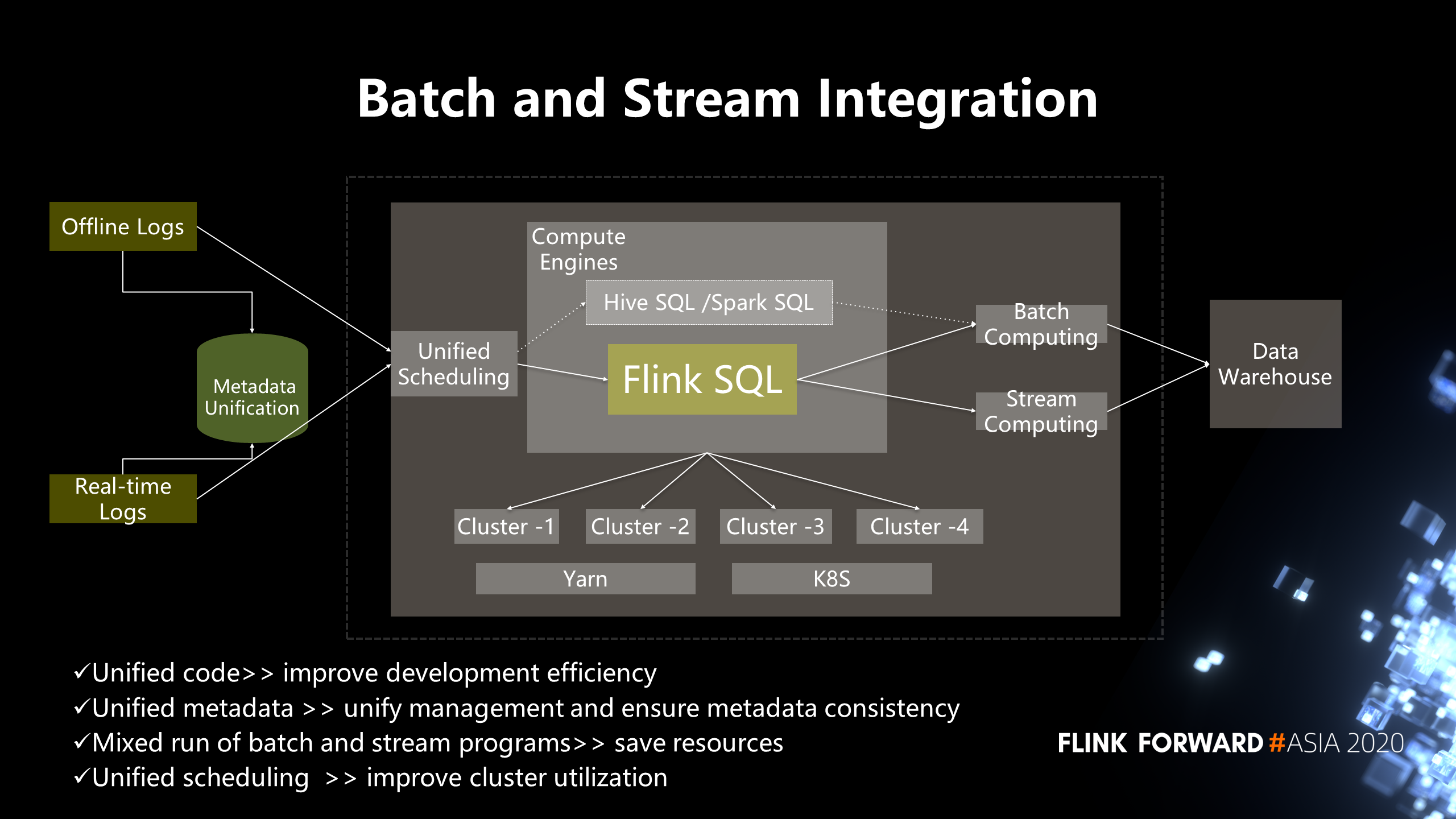

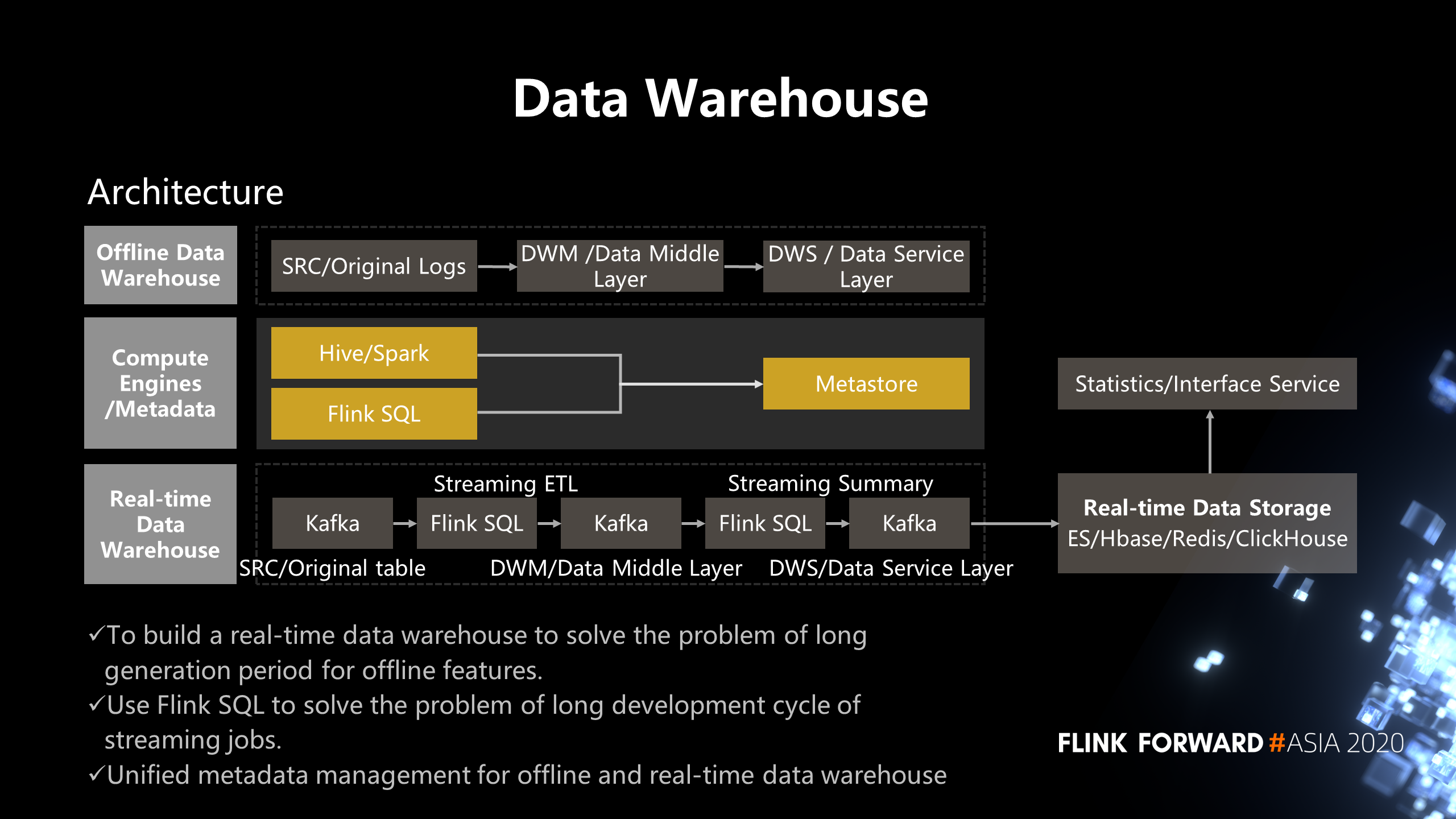

The following describes the architecture of batch and stream integration built on FlinkSQL. First, we unify metadata and integrate real-time and offline logs through the metadata management platform. After integration, we provide a unified scheduling layer when users submit jobs. Scheduling refers to dispatching jobs to different clusters based on the job type, job characteristics, and current cluster load.
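The dispatch step described above can be pictured with a small sketch. This is only an illustration under assumptions, not Weibo's scheduler: the `Cluster` and `Job` types, their fields, and the "least-loaded eligible cluster" rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str
    engines: frozenset        # engines this cluster can run (hypothetical field)
    supports_streaming: bool  # whether long-running streaming jobs are allowed
    load: float               # current utilisation in [0, 1]


@dataclass
class Job:
    name: str
    engine: str      # e.g. "hivesql", "sparksql", or "flinksql"
    streaming: bool  # job characteristic: streaming vs. batch


def dispatch(job, clusters):
    """Pick the least-loaded cluster that matches the job type and characteristics."""
    candidates = [
        c for c in clusters
        if job.engine in c.engines and (c.supports_streaming or not job.streaming)
    ]
    if not candidates:
        raise ValueError(f"no cluster can run job {job.name!r}")
    return min(candidates, key=lambda c: c.load)


clusters = [
    Cluster("batch-a", frozenset({"hivesql", "sparksql"}), False, 0.70),
    Cluster("flink-a", frozenset({"flinksql"}), True, 0.55),
    Cluster("flink-b", frozenset({"flinksql"}), True, 0.30),
]

chosen = dispatch(Job("realtime-features", "flinksql", True), clusters)
print(chosen.name)
```

A real scheduling layer would likely weigh more signals (queue depth, resource quotas, priorities), but the shape is the same: filter by what the job needs, then rank by current load.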

Currently, the scheduling layer supports the HiveSQL, SparkSQL, and FlinkSQL computing engines. Hive and Spark SQL are used for batch computing, while FlinkSQL runs batch and streaming jobs together. The processing results are output to the data warehouse for the business side to use. The batch and stream integration architecture has four key points:

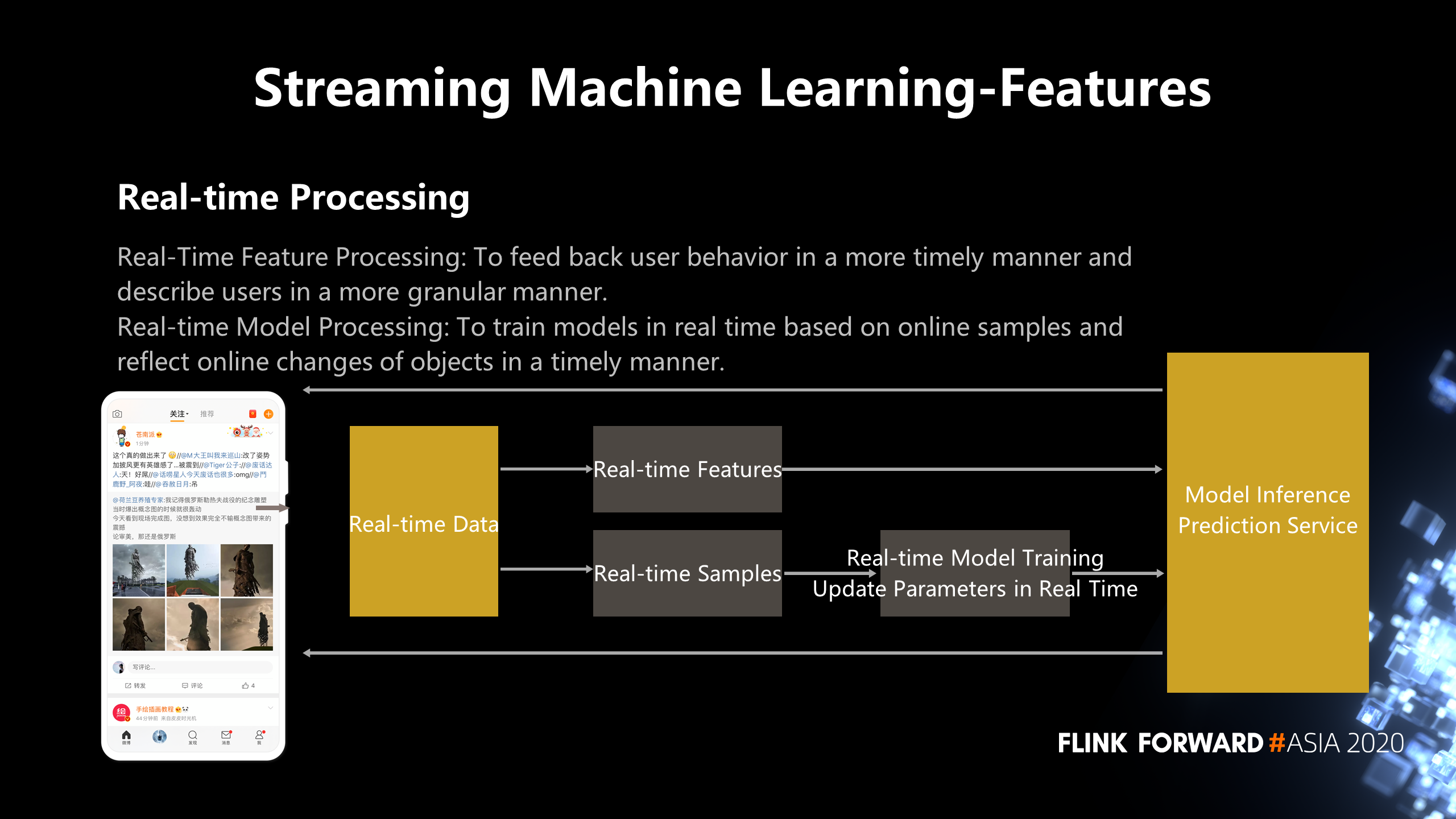

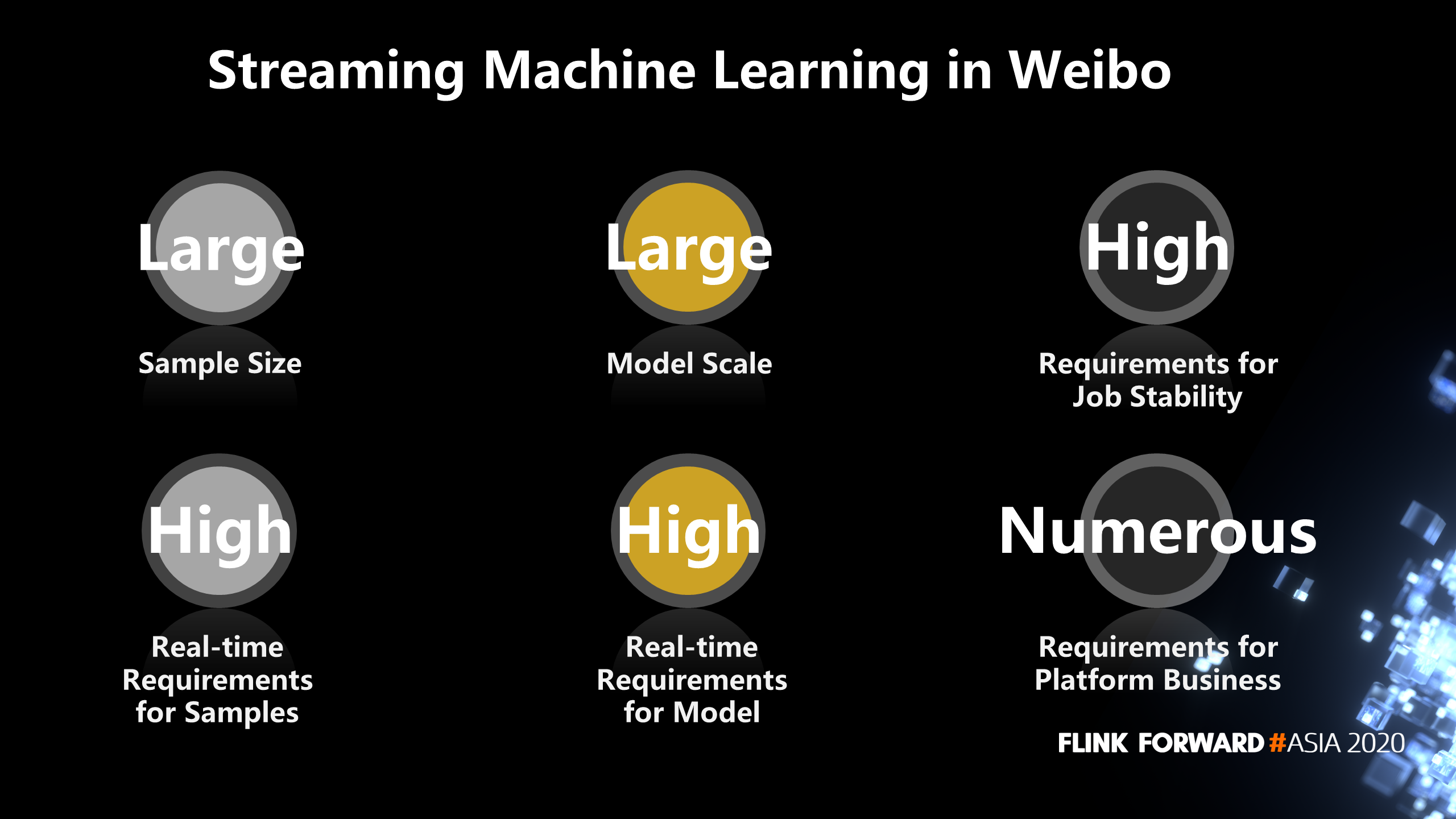

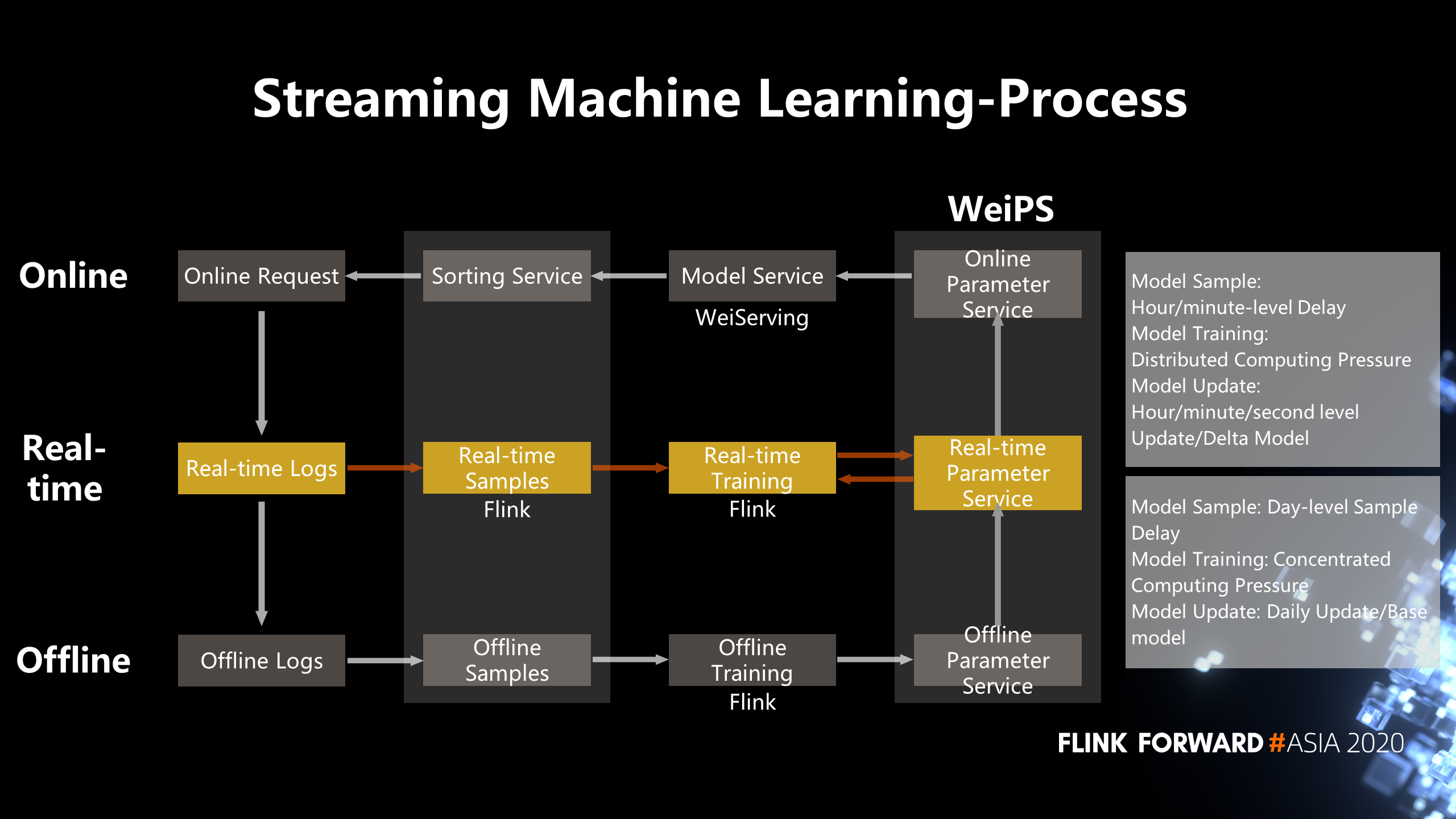

First of all, I will introduce several characteristics of streaming machine learning. The most significant feature is real-time processing. This can be divided into real-time feature processing and model processing.

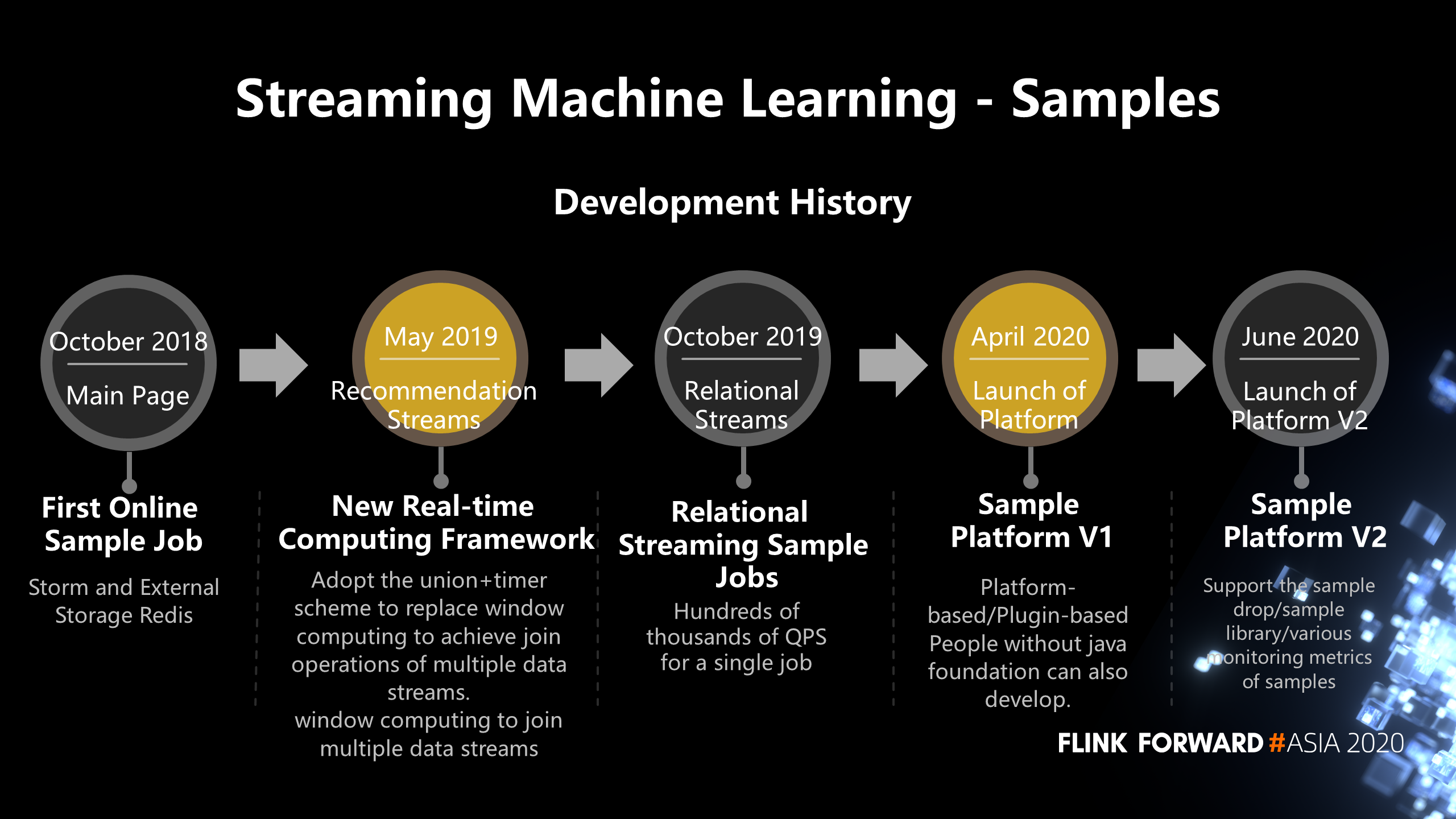

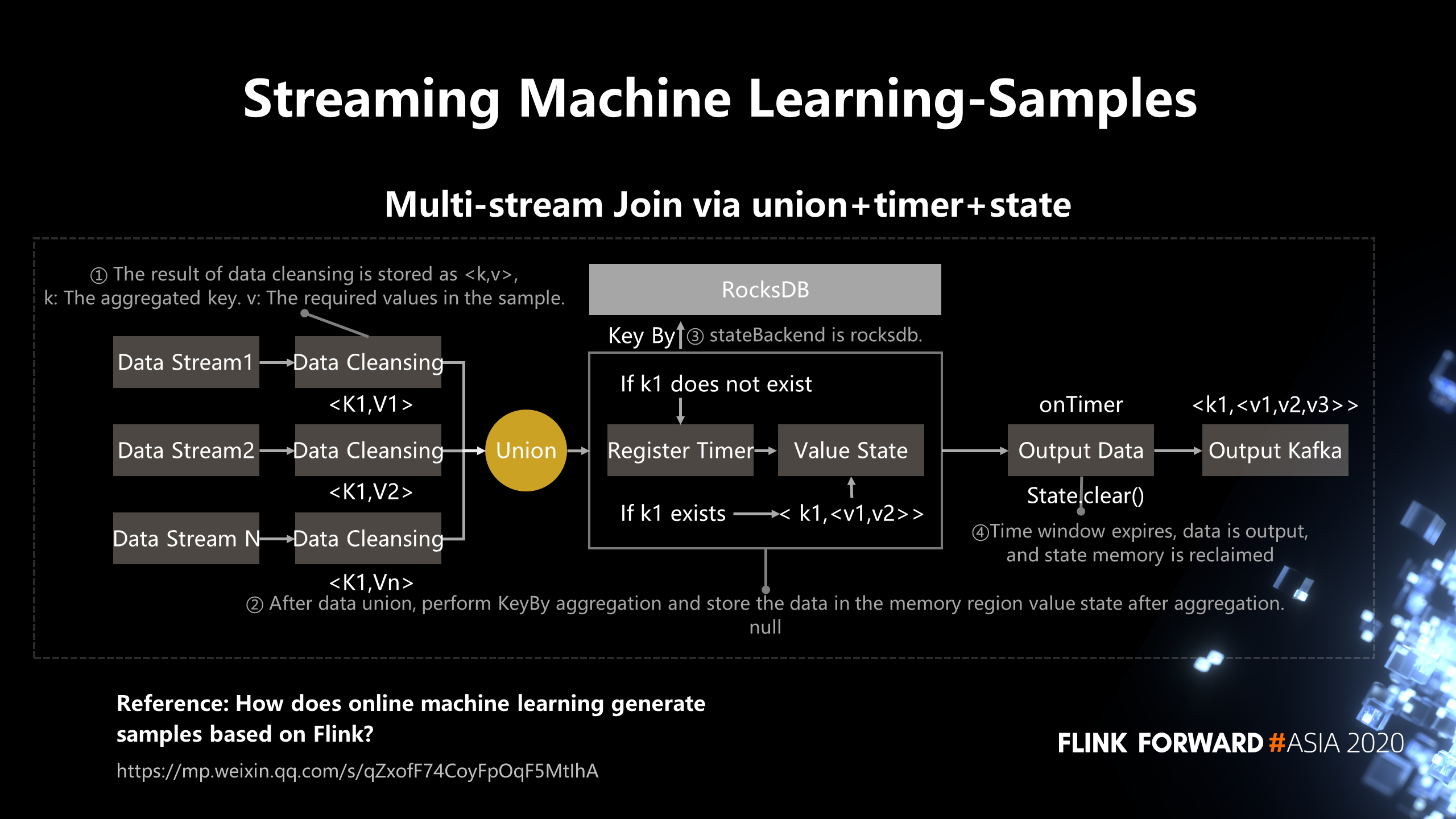

The following briefly introduces the development history of streaming machine learning samples. In October 2018, we launched our first streaming sample job, which ran on Storm with Redis as external storage. In May 2019, we adopted Flink as our new real-time computing framework and used a union + timer scheme instead of window computing to join multiple data streams. In October 2019, we launched a sample job whose QPS reached hundreds of thousands for a single job. In April 2020, the sample generation process was moved onto the platform. By June 2020, a further platform iteration added support for the sample drop, including the sample library and improved monitoring metrics for samples.
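The article does not spell out the union + timer scheme, so the following is a plain-Python simulation of one common reading of it, not Flink API code: all streams are merged into one, events are buffered per key, a timer is set when the first event for a key arrives, and the buffered events are emitted as one joined sample when the timer fires. The function name, the event tuple layout, and the `wait` parameter are assumptions.

```python
from collections import defaultdict


def union_timer_join(events, wait):
    """Join events from several streams by key using per-key timers.

    events: iterable of (timestamp, key, source, payload) from the unioned stream.
    wait:   how long to buffer a key after its first event before emitting.
    Returns a list of (key, fire_time, {source: [payloads]}).
    """
    state = {}  # key -> {"fire_at": time, "rows": {source: [payloads]}}
    out = []

    def fire_due(now):
        # Fire every timer whose deadline has passed, oldest first.
        due = sorted((s["fire_at"], k) for k, s in state.items() if s["fire_at"] <= now)
        for fire_at, key in due:
            out.append((key, fire_at, dict(state.pop(key)["rows"])))

    for ts, key, source, payload in sorted(events, key=lambda e: e[0]):
        fire_due(ts)  # advance time before handling the event
        entry = state.get(key)
        if entry is None:
            # First event for this key: create state and register its timer.
            entry = state[key] = {"fire_at": ts + wait, "rows": defaultdict(list)}
        entry["rows"][source].append(payload)

    fire_due(float("inf"))  # end of input: flush whatever is still buffered
    return out


events = [
    (0, "u1", "exposure", "post42"),
    (3, "u1", "click", "post42"),
    (5, "u2", "exposure", "post7"),
    (12, "u1", "click", "post42-late"),
]
for sample in union_timer_join(events, wait=10):
    print(sample)
```

Compared with a window join, this keeps one piece of state per key and lets each key close independently, which is one plausible reason to prefer it for sample concatenation.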

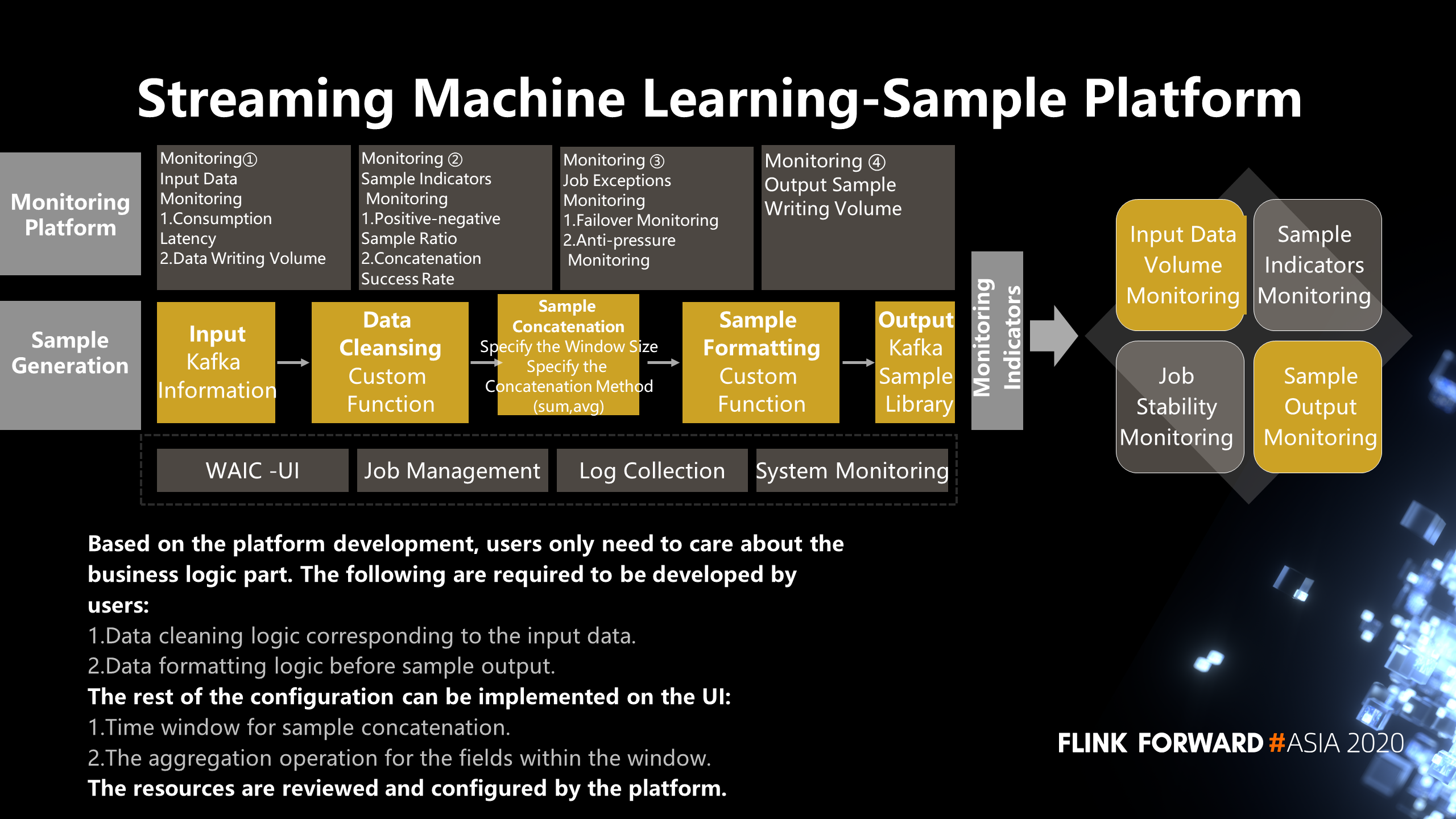

We have made the whole sample concatenation process a platform-based operation and divided it into five modules: input, data cleaning, sample concatenation, sample formatting, and output. With platform-based development, users only need to care about the business logic. Users are required to develop the following:

The rest of the configuration can be implemented on the UI:

The resources are reviewed and configured by the platform. In addition, the entire platform provides some basic monitoring, including the monitoring of input data, sample indicators, job exceptions, and sample output.

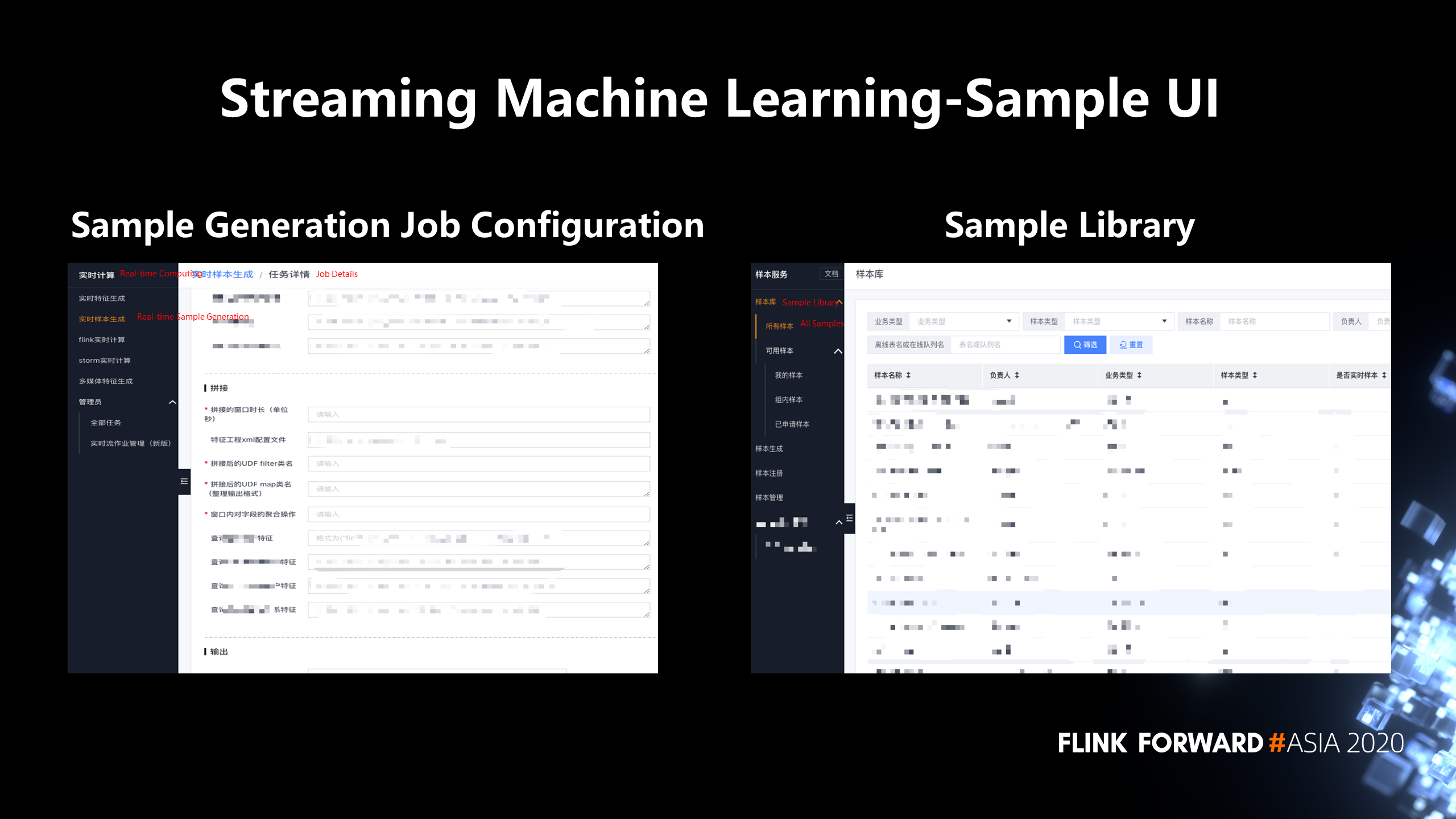

The following figure shows a sample of the streaming machine learning project. The left shows the job configuration for sample generation, and the right shows the sample library. The sample library mainly manages and displays samples, including sample descriptions, permissions, and sample sharing.

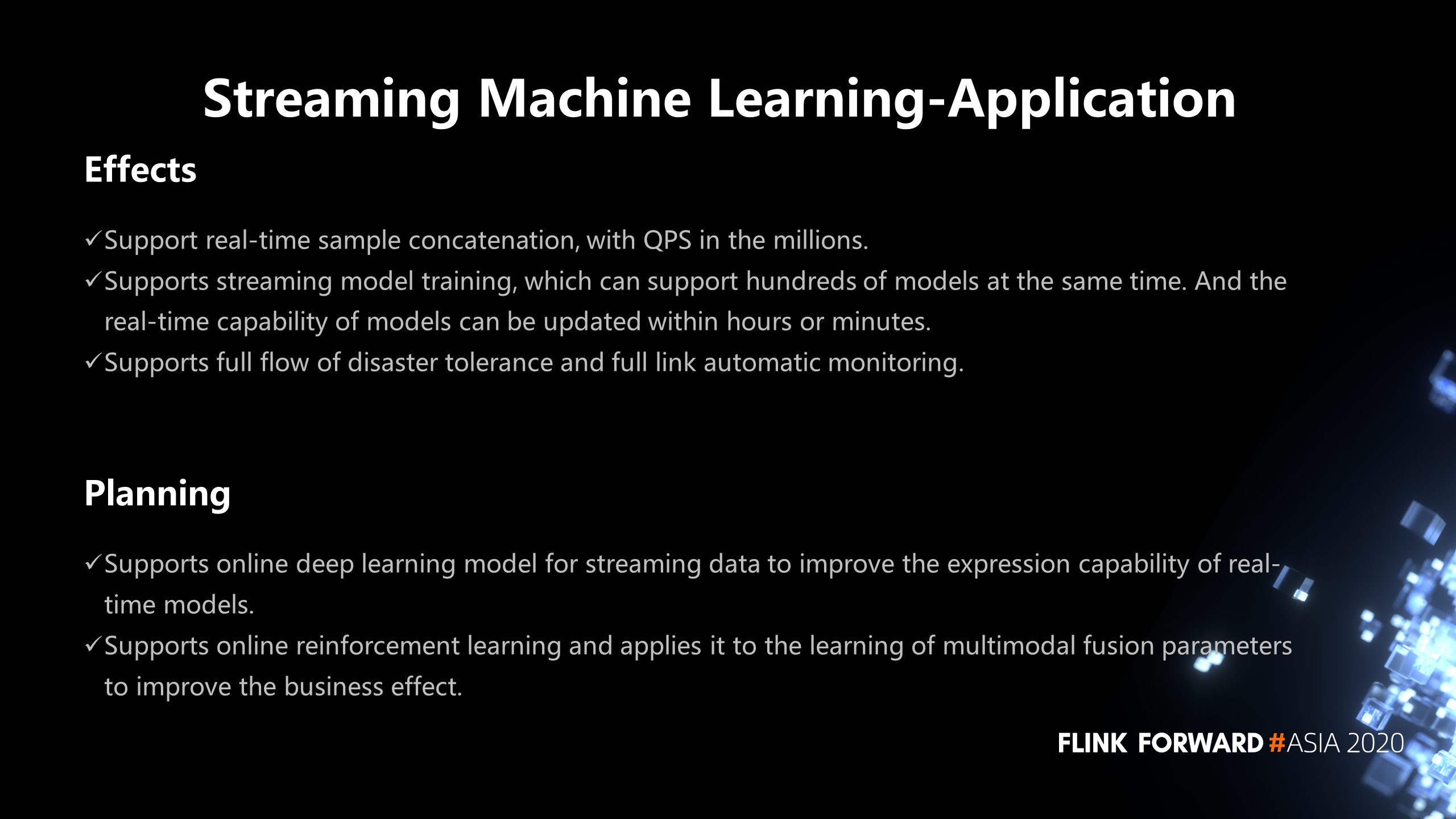

Finally, let's take a look at the effect of the streaming machine learning application. Currently, we support real-time sample concatenation, with QPS in the millions. We also support streaming model training for hundreds of models simultaneously. Models can be updated within minutes or hours. Streaming learning supports end-to-end disaster tolerance and automatic full-link monitoring. Recently, we have been working on a deep learning model for streaming data to improve the expression capability of real-time models. We will also explore new application scenarios in reinforcement learning.

Multimodal content understanding is the ability or technology to process and understand multimodal information using machine learning methods. At Weibo, this mainly covers images, videos, audio, and text.

For example, when we started on video classification, we only used the frames extracted from the video (that is, images). Later, in a second round of optimization, we added the audio and the blog text associated with each video. This amounts to fusing audio, images, and text to generate video classification tags more precisely.

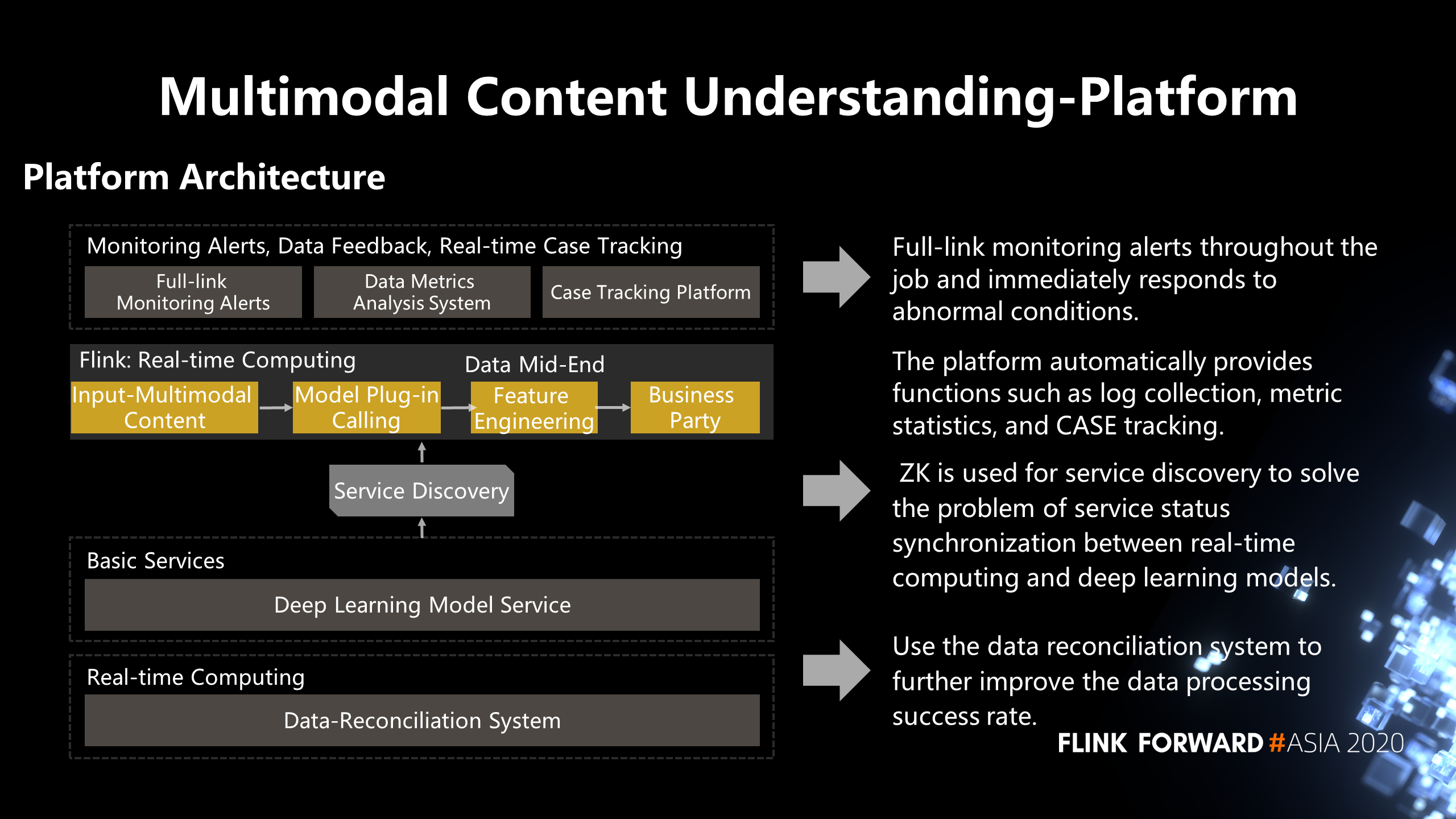

The following figure shows the platform architecture of multimodal content understanding. In the middle part, Flink performs real-time computing, receiving data such as image streams, video streams, and blog streams. Through a model plug-in, it then calls the basic services shown in the lower part, which serve the deep learning models. After a service is called, the content features are returned. We then save the features in feature engineering and provide them to various business parties through the data mid-end. Full-link monitoring runs throughout the job and raises alerts so that abnormal conditions are handled immediately. The platform automatically provides functions such as log collection, metric statistics, and case tracking. ZooKeeper (zk) is used for service discovery to keep service status synchronized between real-time computing and the deep learning models. In addition to state synchronization, some load balancing policies are applied. As a final safeguard, a data reconciliation system is used to improve the data processing success rate.
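To make the service discovery and load balancing idea concrete, here is a minimal sketch. It uses an in-memory registry as a stand-in for the zk-backed one, and round-robin as the balancing policy; both are assumptions for illustration, since the article does not say which policies are used. The class name, method names, and addresses are hypothetical.

```python
class ServiceRegistry:
    """In-memory stand-in for a service registry (hypothetical, not a ZooKeeper client)."""

    def __init__(self):
        self._instances = {}  # service name -> list of live addresses
        self._counters = {}   # service name -> round-robin counter

    def register(self, service, address):
        """Called when a model service instance comes online."""
        instances = self._instances.setdefault(service, [])
        if address not in instances:
            instances.append(address)

    def deregister(self, service, address):
        """Called when an instance goes offline or fails a health check."""
        instances = self._instances.get(service, [])
        if address in instances:
            instances.remove(address)

    def pick(self, service):
        """Return the next live instance in round-robin order."""
        instances = self._instances.get(service, [])
        if not instances:
            raise LookupError(f"no live instance for {service!r}")
        i = self._counters.get(service, 0)
        self._counters[service] = i + 1
        return instances[i % len(instances)]


registry = ServiceRegistry()
registry.register("image-embedding", "10.0.0.1:8500")
registry.register("image-embedding", "10.0.0.2:8500")
print([registry.pick("image-embedding") for _ in range(3)])
```

The point of the sketch is the division of labor: model services publish their own status, and the real-time job only asks the registry for a live instance instead of holding a fixed address list.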

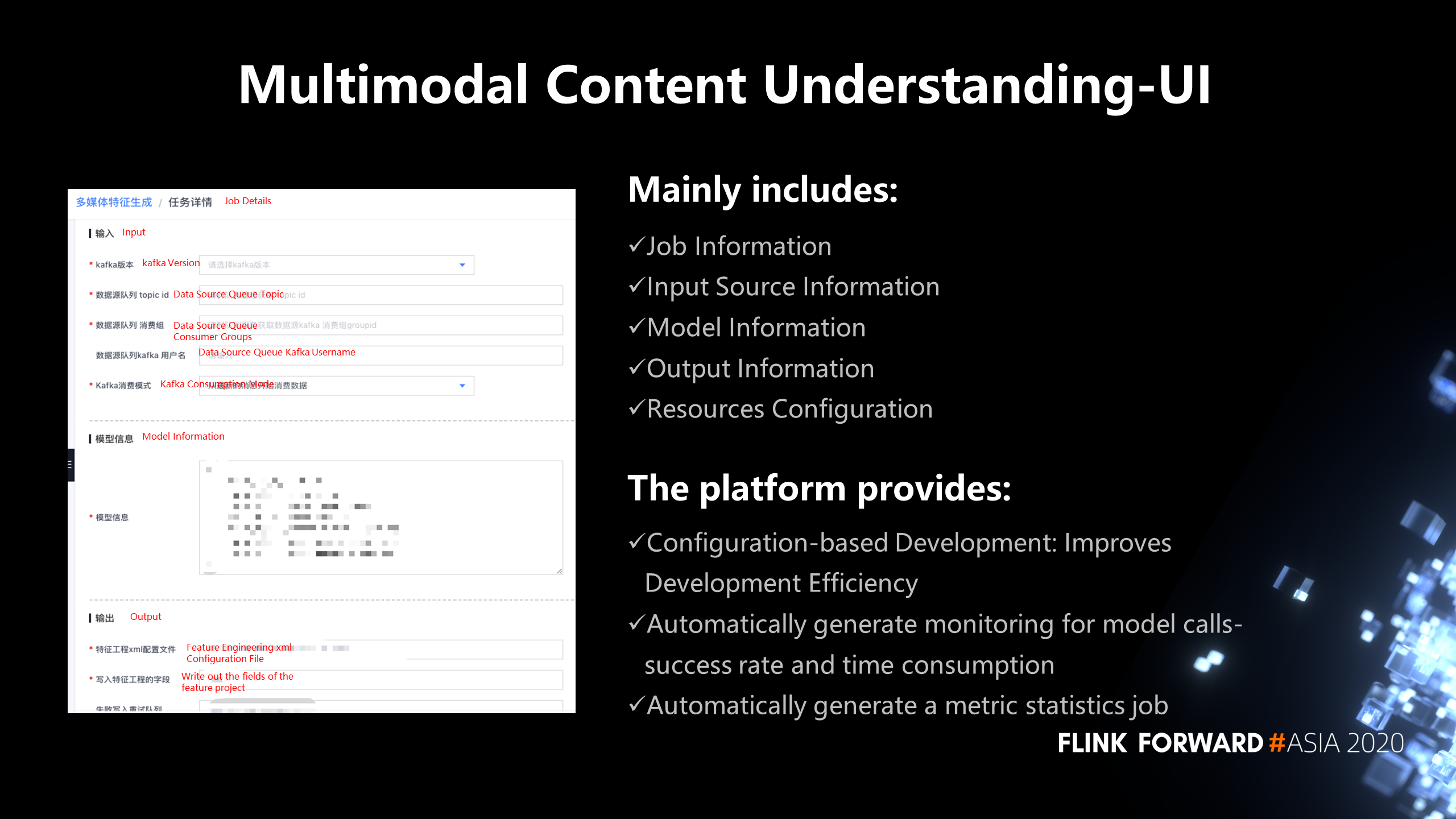

The UI for multimodal content understanding mainly includes job information, input source information, model information, output information, and resource configuration. We can improve development efficiency through configuration-based development. Then, some monitoring metrics of model calls are generated automatically, including the success rate and time spent on model calls. When the job is submitted, a job for metrics statistics is generated automatically.
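As a rough illustration of the two model-call metrics mentioned above (success rate and time spent), a wrapper along the following lines could collect them. This is a sketch under assumptions: the `CallMetrics` class and its fields are hypothetical, and the platform's actual metric pipeline is not described in the article.

```python
import time


class CallMetrics:
    """Track success rate and total latency of model calls (hypothetical helper)."""

    def __init__(self):
        self.calls = 0
        self.successes = 0
        self.total_seconds = 0.0

    def record(self, fn, *args, **kwargs):
        """Invoke fn, counting the call, its outcome, and its duration."""
        start = time.perf_counter()
        self.calls += 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            raise  # the failure is still counted as a call; the caller decides what to do
        else:
            self.successes += 1
            return result
        finally:
            self.total_seconds += time.perf_counter() - start

    @property
    def success_rate(self):
        return self.successes / self.calls if self.calls else 0.0

    @property
    def mean_seconds(self):
        return self.total_seconds / self.calls if self.calls else 0.0


def fake_model(x):
    # Placeholder for a real model service call.
    if x < 0:
        raise ValueError("bad input")
    return x * 2


metrics = CallMetrics()
metrics.record(fake_model, 21)
try:
    metrics.record(fake_model, -1)
except ValueError:
    pass
print(metrics.success_rate)
```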

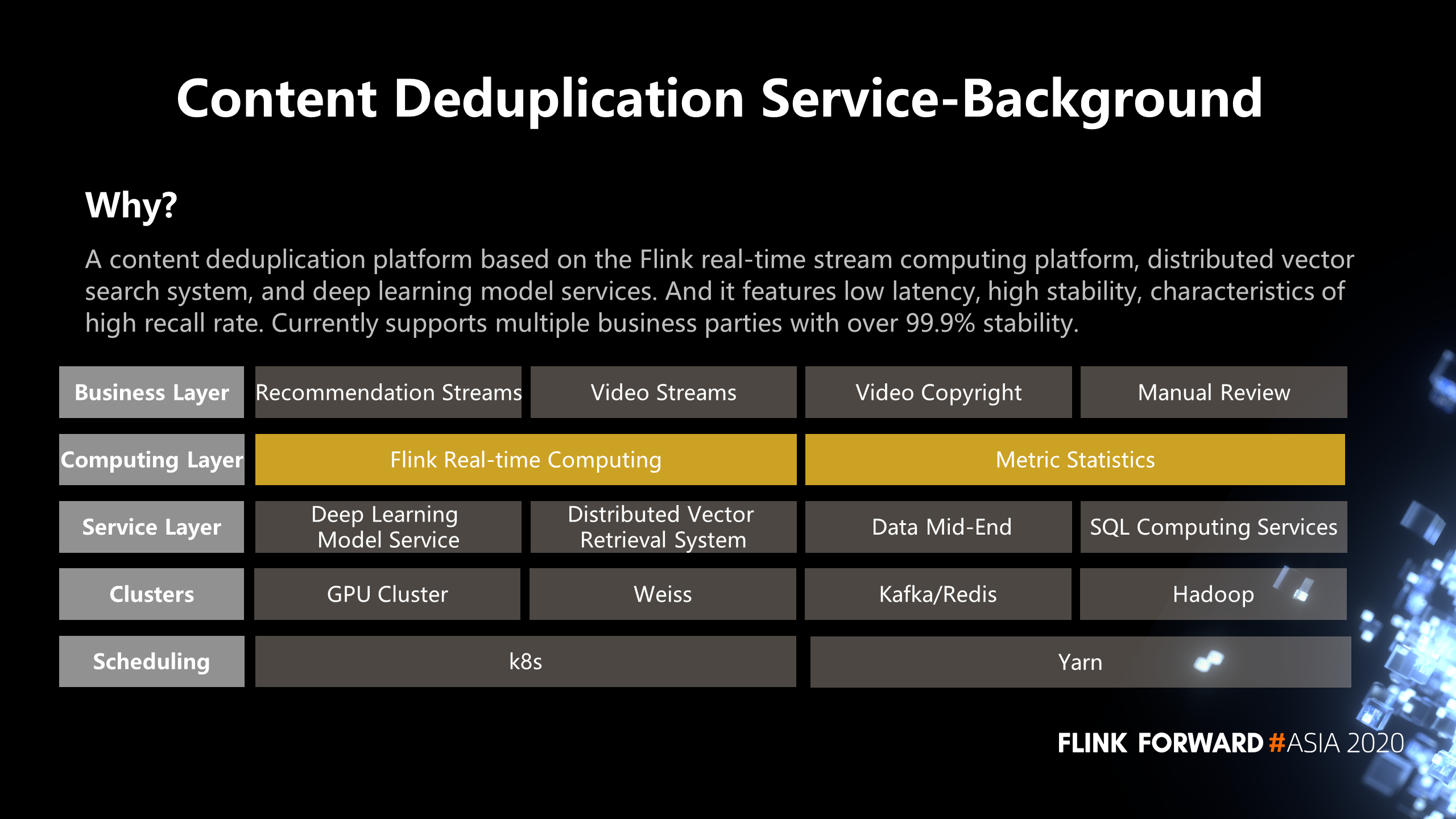

In the recommendation scenario, repeatedly pushing duplicate content to users degrades the user experience. With this in mind, we built a content deduplication platform based on the Flink real-time stream computing platform, a distributed vector search system, and deep learning model services. The platform features low latency, high stability, and a high recall rate. It currently supports multiple business parties with over 99.9% stability.

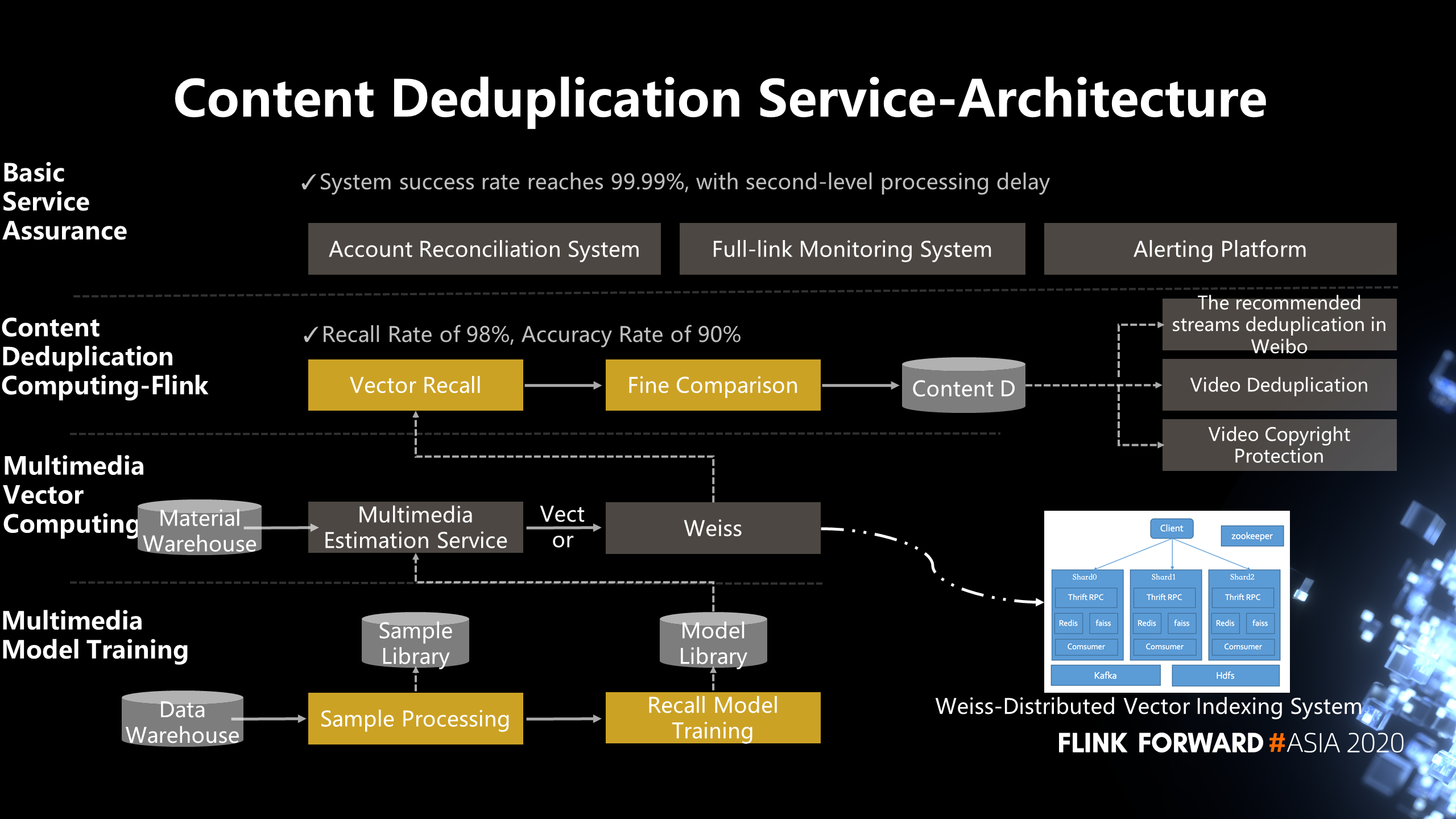

The following figure shows the architecture of a content deduplication service. The bottom section describes the multimedia model training, which is offline. For example, we can get some sample data, perform sample processing, and store the samples in the sample library. When we need to do model training, we select samples from the sample library and do model training. The training results are saved to the model library.

The main model used here for content deduplication is the vector generation model, including vectors of images, text, and videos.

After we verify that the trained model is correct, we save the model to the model library. The model library stores basic information about the model, including the running environment and model version. Then, the model needs to be deployed online. The deployment process requires pulling the model from the model library and knowing the technical environment in which the model will run.

After the model is deployed, we use Flink to retrieve materials from the real-time material library and call the multimedia estimation service to generate vectors for these materials. These vectors are then stored in the Weiss library, which is a vector recall retrieval system developed by Weibo. After a material is stored in the Weiss library, a batch of materials similar to it is recalled. In the fine comparison stage, a certain strategy is used to select the most similar item from all the recall results. Then, the most similar item and the current material are aggregated under one content ID. Finally, the business side deduplicates materials by their content IDs.
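The recall, fine comparison, and content ID steps can be sketched as follows. This is a toy illustration under stated assumptions, not the Weiss system: the vector store is a plain dictionary with brute-force search, cosine similarity with a fixed threshold stands in for the unspecified fine comparison strategy, and the class and parameter names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class Deduplicator:
    def __init__(self, recall_k=10, threshold=0.95):
        self.recall_k = recall_k    # how many similar materials to recall
        self.threshold = threshold  # minimum similarity to count as a duplicate
        self.index = {}             # material_id -> vector (stand-in for the vector store)
        self.content_id = {}        # material_id -> content ID

    def add(self, material_id, vector):
        """Store a material and return the content ID it is grouped under."""
        # Recall: brute-force top-k over earlier materials. The search runs
        # before the insert here so the material cannot match itself.
        recalled = sorted(
            ((cosine(vector, v), mid) for mid, v in self.index.items()),
            reverse=True,
        )[: self.recall_k]
        # Fine comparison (assumed strategy): best candidate above the threshold.
        if recalled and recalled[0][0] >= self.threshold:
            cid = self.content_id[recalled[0][1]]
        else:
            cid = material_id  # no near-duplicate found: start a new group
        self.index[material_id] = vector
        self.content_id[material_id] = cid
        return cid


dedup = Deduplicator()
print(dedup.add("m1", [1.0, 0.0]))
print(dedup.add("m2", [0.99, 0.05]))
print(dedup.add("m3", [0.0, 1.0]))
```

Downstream, a business only needs to keep one material per content ID, which is what makes the final deduplication step cheap.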

There are three application scenarios of content deduplication:

We have done a lot of work on platforms and services by combining the Flink real-time stream computing framework with business scenarios. We have also made many optimizations in development efficiency and stability. We improve development efficiency through modular design and platform-based development. Currently, the real-time data computing platform comes with a system that features end-to-end monitoring, metric statistics, and debug case tracing (Logs Review). In addition, FlinkSQL implements batch-stream integration. These are some of the changes that Flink has brought to us, and we will continue to explore wider applications of Flink at Weibo.
