This article contains excerpts from a speech by Zhai Jia, the Co-Founder of StreamNative. It introduces Apache Pulsar, a next-generation cloud-native message streaming platform, and explains how to provide the foundation for batch and stream integration through the native compute-storage separation architecture of Apache Pulsar. Zhai also introduces how to combine Apache Pulsar with Flink to achieve unified batch and stream computing.

Apache Pulsar entered the Apache Software Foundation incubator in 2017 and graduated as a top-level project in 2018. Beyond its enterprise-level features, Pulsar has attracted growing attention from developers because it natively adopts a compute-storage separation architecture and uses BookKeeper, a storage engine designed specifically for message and stream storage.

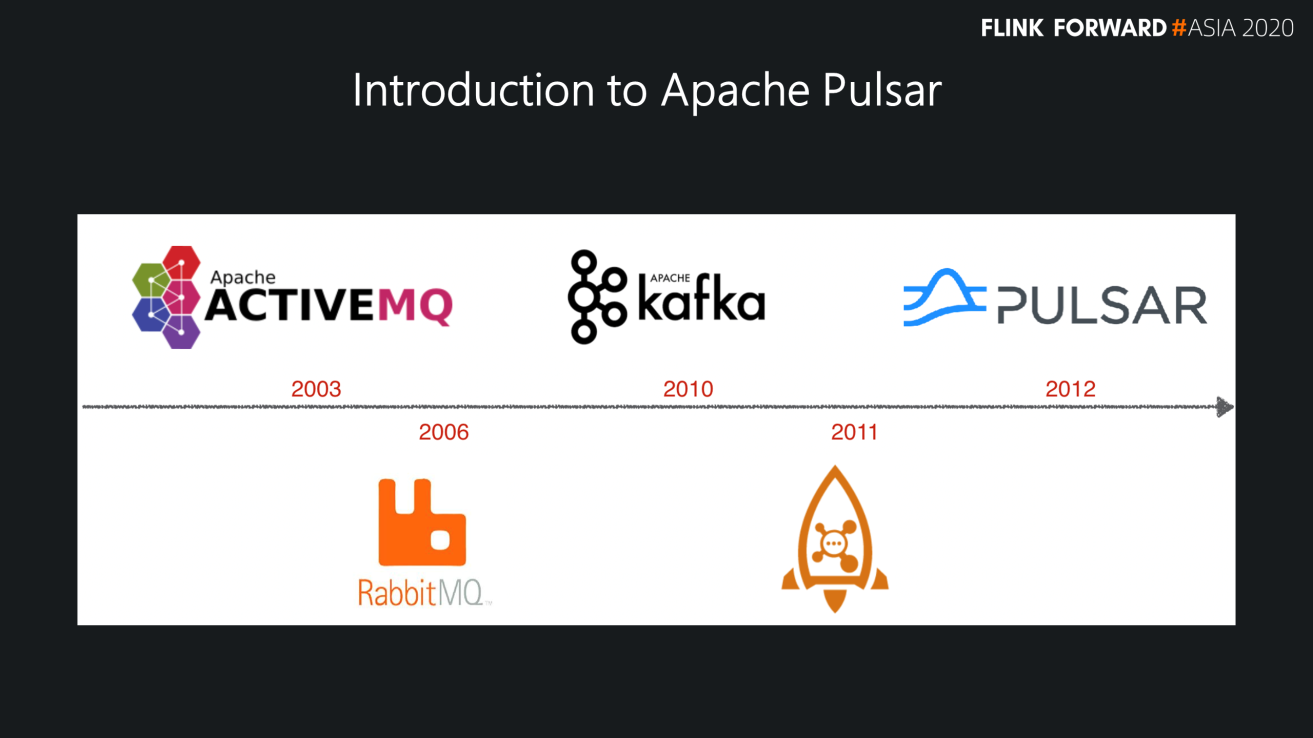

The figure above shows the open-source tools used in the messaging field, which will be familiar to developers who work on messaging or infrastructure. Development of Pulsar started in 2012, and although it was not open-sourced until 2016, it had been running in production at Yahoo for a long time before the release. That is why it received a lot of attention from developers as soon as it went open-source: it was already a system proven in production.

Pulsar is fundamentally different from other messaging systems in two aspects:

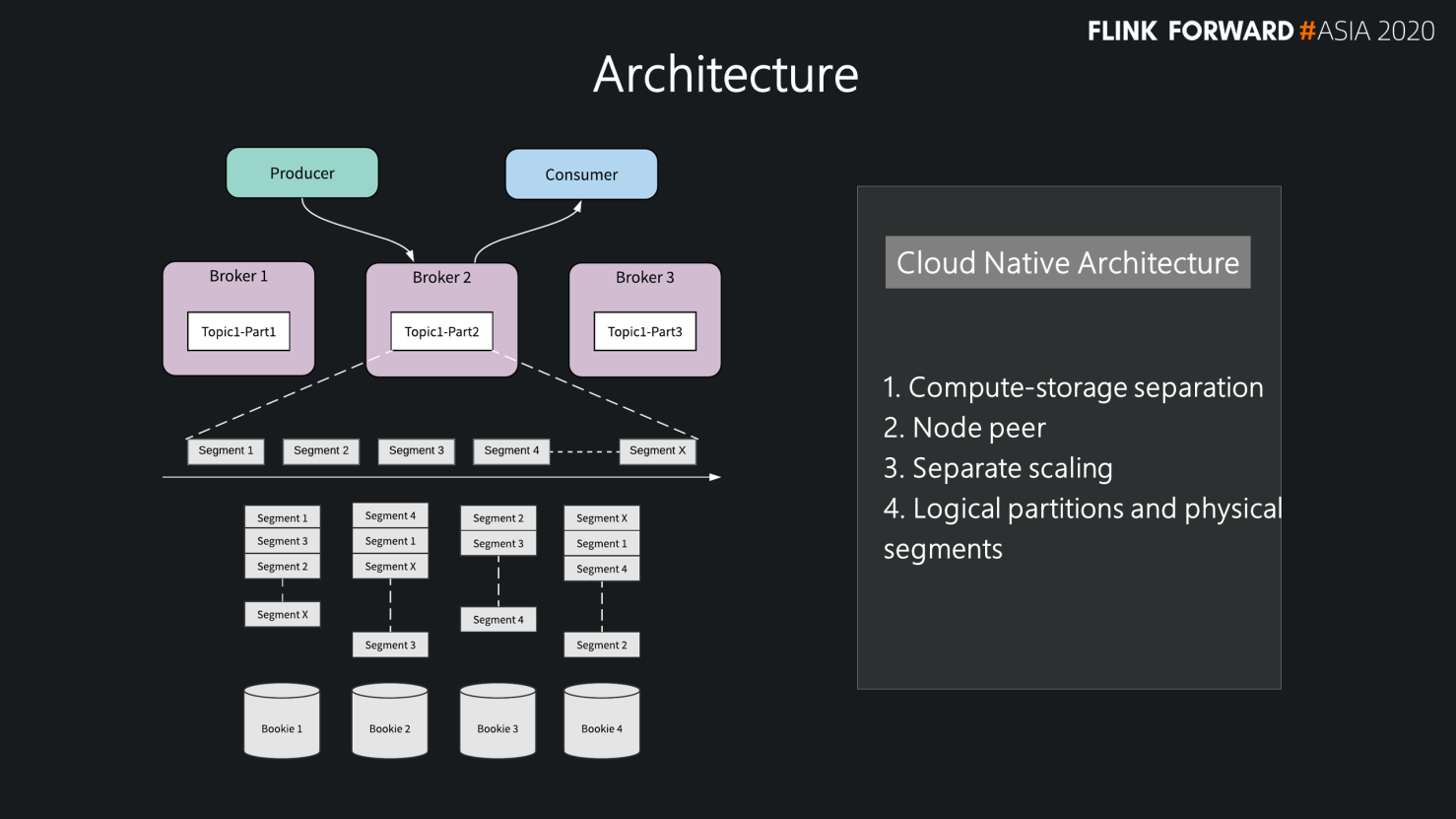

The following figure shows the architecture of compute-storage separation:

This layered architecture facilitates cluster expansion:

This cloud-native architecture has two main features:

Because no data is stored in the broker layer, it is easy to make broker nodes peers of one another. The nodes in the underlying storage layer are peers as well: BookKeeper does not use a master/slave synchronization model but relies on quorums instead.
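To make the quorum idea concrete, here is a minimal sketch modeled loosely on BookKeeper's terminology of ensemble size, write quorum, and ack quorum. The class and function names are hypothetical and are not BookKeeper's API; the round-robin placement is an assumption used for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuorumConfig:
    ensemble_size: int  # storage nodes (bookies) available to this log
    write_quorum: int   # number of copies written for each entry
    ack_quorum: int     # acknowledgements needed before a write is confirmed


def write_set(entry_id: int, cfg: QuorumConfig) -> list:
    """Indices of the nodes that receive this entry (round-robin striping, assumed)."""
    return [(entry_id + i) % cfg.ensemble_size for i in range(cfg.write_quorum)]


def is_confirmed(acks: set, entry_id: int, cfg: QuorumConfig) -> bool:
    """An entry is confirmed once enough nodes from its write set have acknowledged it."""
    valid_acks = acks & set(write_set(entry_id, cfg))
    return len(valid_acks) >= cfg.ack_quorum
```

No node in this sketch plays the role of a master: every node in the write set is treated the same way, and only the number of acknowledgements matters.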

At the same time, this layered architecture lays a solid foundation for batch and stream integration in Flink. Because the system is divided into two layers from the start, it can provide two different sets of APIs that match the user's scenario and the different access patterns of batch and stream processing.

Another benefit of Pulsar is that it has Apache BookKeeper, a storage engine specifically designed for streaming and messaging. It is a simple write-ahead-log abstraction. Log abstraction is similar to stream abstraction. All data is continuously appended from the tail.

This append-only model gives users a relatively simple write mode and high throughput. In terms of consistency, BookKeeper combines ideas from Paxos and the ZooKeeper ZAB protocol. What BookKeeper exposes externally is a log abstraction with strong consistency, so it can serve as a log storage layer similar to Raft. BookKeeper was originally designed to provide high availability for the HDFS NameNode, a scenario that demands strong consistency. This is why Pulsar and BookKeeper are chosen for storage in many critical scenarios.

The BookKeeper design provides a special read and write isolation mechanism: reads and writes are served from different disks. The benefit for batch and stream integration is reduced interference when historical data is read. Users who read the latest real-time data will often need to read historical data as well. If historical data is served from a separate disk, historical and real-time reads do not compete for I/O, which gives better I/O behavior for batch and stream integration.

Pulsar is widely used. It is commonly used in the following scenarios:

At Pulsar Summit Asia at the end of November 2020, we invited more than 40 speakers to share their Pulsar use cases.

In these scenarios, unified data processing is especially important. For batch and stream integration, Flink is the first choice for many users in China. What are the benefits of combining Pulsar and Flink? Why do users choose Pulsar and Flink for batch and stream integration?

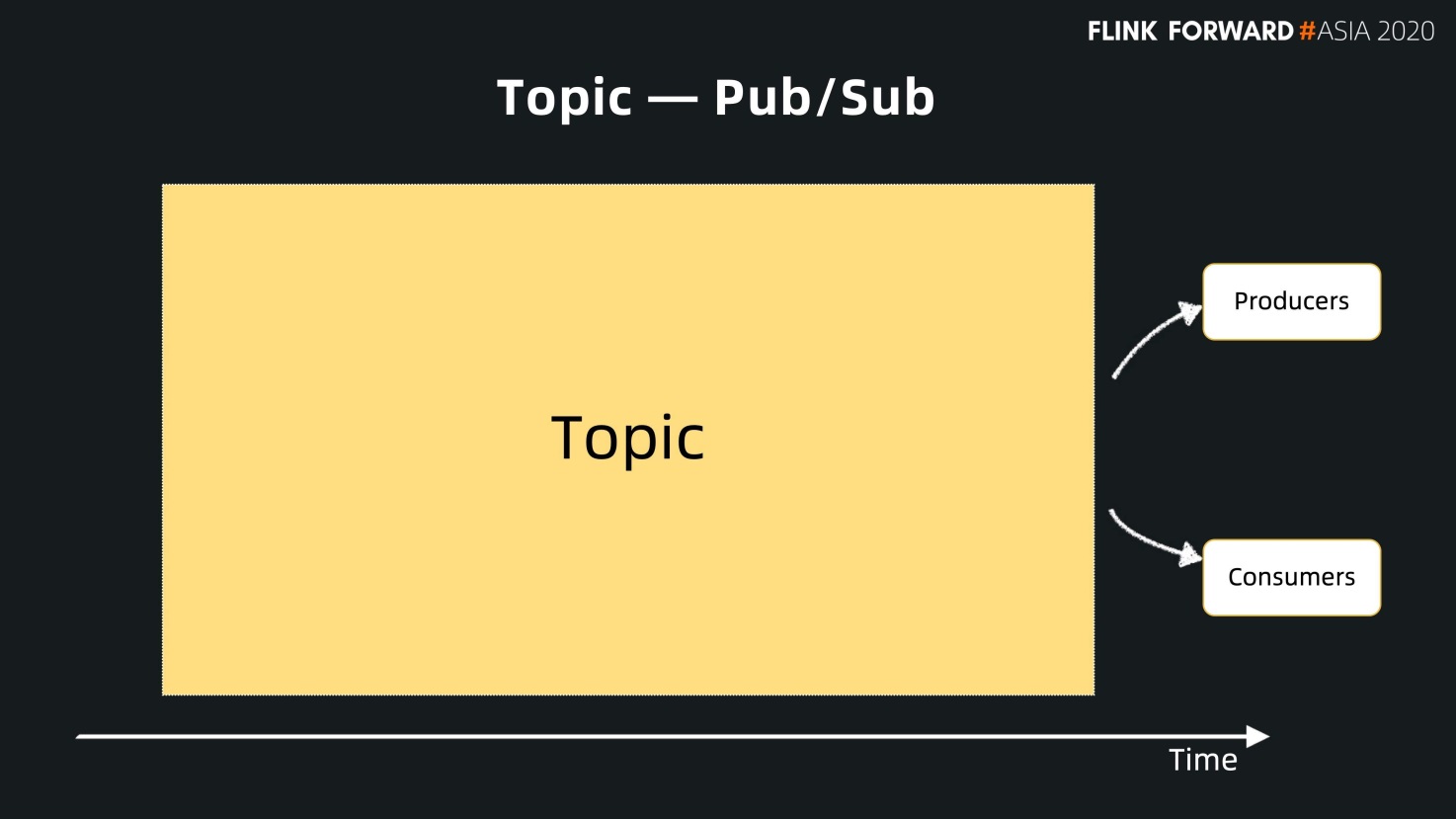

First, let's look at Pulsar's view of data. Like other messaging systems, Pulsar treats messages as the basic unit and organizes them around topics. Producers send all data to a topic, and consumers subscribe to the topic to receive messages.

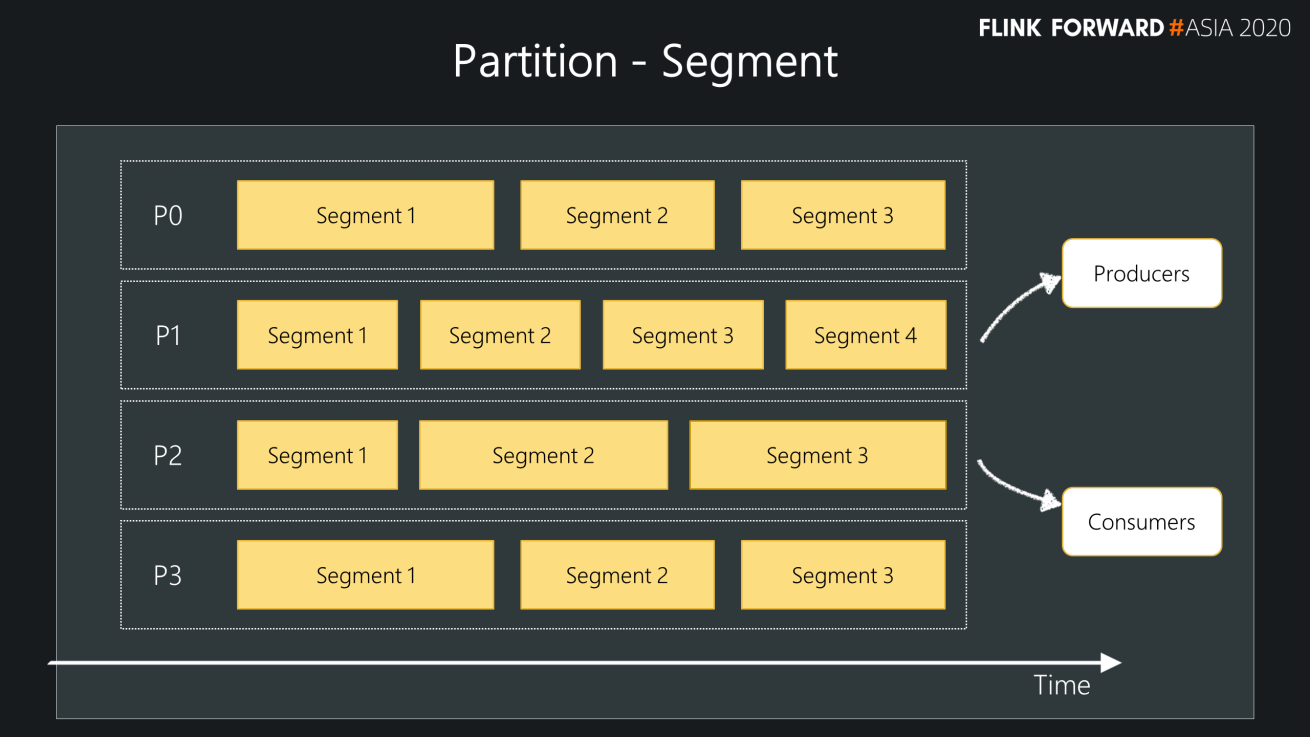

To make scaling easier, Pulsar also supports partitions within a topic, as many messaging systems do. As mentioned before, Pulsar has a layered architecture: partitions are what a topic exposes to users, while internally each partition is further split into segments according to a user-defined time or size. A topic is created with only one active segment, and a new segment is opened when the configured time or size threshold is reached. When a new segment is opened, the storage node with the most available capacity can be selected to hold it.
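The capacity-based choice of a storage node can be sketched as a simple greedy rule. This is an illustration only: the node names and the functions `pick_node` and `place_segments` are hypothetical, and a real storage layer would pick several nodes per segment for replication.

```python
def pick_node(free_bytes: dict) -> str:
    """Return the node with the most free capacity (ties broken by name)."""
    return max(sorted(free_bytes), key=lambda node: free_bytes[node])


def place_segments(free_bytes: dict, segment_sizes: list) -> list:
    """Greedily place each new segment on the node with the most free space."""
    free = dict(free_bytes)  # work on a copy so the caller's view is untouched
    placement = []
    for size in segment_sizes:
        node = pick_node(free)
        free[node] -= size
        placement.append(node)
    return placement
```

Because each placement reduces the chosen node's free space, consecutive segments tend to spread across the cluster instead of piling up on one node.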

The advantage is that the segments of a topic are distributed evenly across the nodes of the storage layer, which balances data storage. A partition can use the entire storage cluster and is not limited by the capacity of a single node. As shown in the following figure, the topic has four partitions, and each partition is split into multiple segments. Users can roll segments over by time (such as every 10 minutes or every hour) or by size (such as 1 GB or 2 GB). Segments are ordered by ascending ID, and message IDs within a segment increase monotonically, so ordering across a whole partition is straightforward.

Looking at a single segment, consider the usual notion of a stream in stream processing: all user data is continuously appended to the tail of the stream. In the same way, new data for a Pulsar topic is constantly appended to the tail of the topic. However, the topic abstraction in Pulsar provides some additional benefits:
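The rollover rule described here can be sketched in a few lines. This is a minimal model under stated assumptions: the `Partition` and `Segment` classes and the `max_age_s` and `max_bytes` parameters are hypothetical names, not Pulsar configuration keys.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    segment_id: int
    opened_at: float
    size_bytes: int = 0
    entries: list = field(default_factory=list)


class Partition:
    def __init__(self, max_age_s: float, max_bytes: int, now: float = 0.0):
        self.max_age_s = max_age_s
        self.max_bytes = max_bytes
        self.segments = [Segment(segment_id=0, opened_at=now)]

    def append(self, payload: bytes, now: float) -> tuple:
        """Append to the tail and return (segment_id, entry_id)."""
        active = self.segments[-1]
        expired = now - active.opened_at >= self.max_age_s
        full = active.size_bytes + len(payload) > self.max_bytes
        if active.entries and (expired or full):
            # Close the current segment and open a new one with the next ID.
            active = Segment(active.segment_id + 1, opened_at=now)
            self.segments.append(active)
        entry_id = len(active.entries)
        active.entries.append(payload)
        active.size_bytes += len(payload)
        return (active.segment_id, entry_id)
```

Since segment IDs only grow and entry IDs restart at zero in each new segment, comparing the returned tuples gives the global order of messages in the partition.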

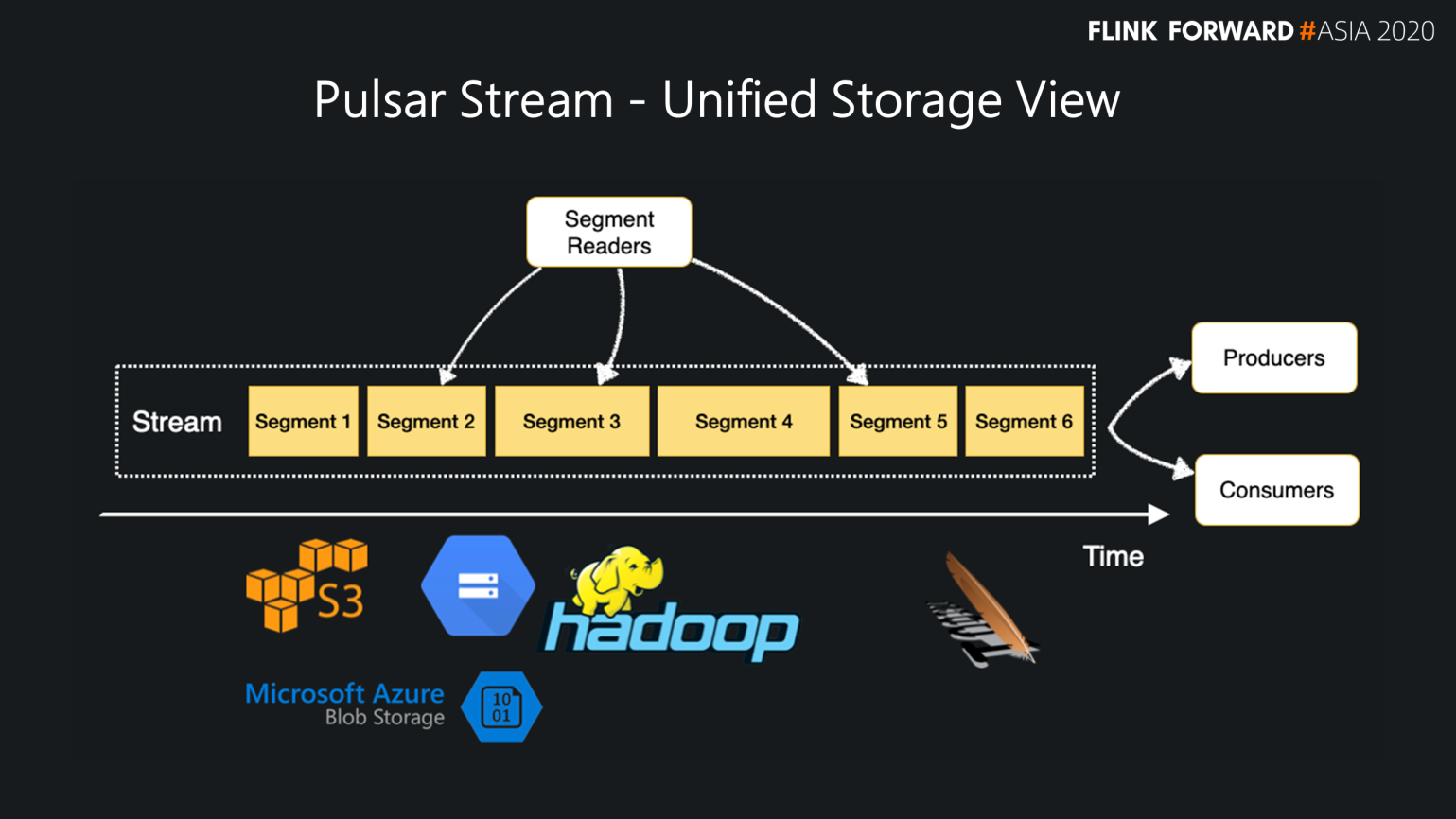

Anyone who works on infrastructure and sees a time-based segment architecture will naturally think of moving old segments to secondary storage, and Pulsar does exactly that. Depending on how actively a topic is consumed, users can have old data, or data that exceeds a time or size limit, moved to secondary storage automatically. Old segments can be stored in cloud storage from Google, Microsoft Azure, or AWS, and HDFS is also supported.

The latest data is served quickly from BookKeeper, while old, cold data is kept in secondary storage backed by cloud or network storage resources, which is effectively unlimited. In this way Pulsar supports unlimited stream storage, which is a foundation for batch and stream integration.

All in all, compute-storage separation lets Pulsar provide two different sets of access interfaces, one for real-time data and one for historical data. Based on segment metadata, users can choose the interface that matches where a segment is stored. At the same time, the segment mechanism allows old segments to be placed in secondary storage, which supports unlimited stream storage.

What unifies all of this in Pulsar is the management of segment metadata. Each segment may be stored on a different medium or in a different format depending on its age, but by managing the metadata of every segment Pulsar still presents a single logical partition to the outside. When accessing a segment in a partition, users can read its metadata to learn its position in the partition, its storage location, and its data type. Through this per-segment metadata management, Pulsar provides a unified topic abstraction.
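A time-or-size offload policy of this kind might look like the following sketch. The `SegmentMeta` class, the `offload` function, and the threshold names are assumptions made for illustration and do not correspond to Pulsar's actual offload configuration.

```python
from dataclasses import dataclass


@dataclass
class SegmentMeta:
    segment_id: int
    closed_at: float
    size_bytes: int
    location: str = "bookkeeper"  # or "tiered" once moved to secondary storage


def offload(segments: list, now: float, max_age_s: float, max_hot_bytes: int) -> list:
    """Move the oldest closed segments to secondary storage; return the moved IDs."""
    hot = sorted(
        (s for s in segments if s.location == "bookkeeper"),
        key=lambda s: s.segment_id,
    )
    hot_bytes = sum(s.size_bytes for s in hot)
    moved = []
    for seg in hot:  # oldest first
        too_old = now - seg.closed_at > max_age_s
        too_big = hot_bytes > max_hot_bytes
        if not (too_old or too_big):
            break
        seg.location = "tiered"  # only the metadata changes in this sketch
        hot_bytes -= seg.size_bytes
        moved.append(seg.segment_id)
    return moved
```

The sketch updates only the location recorded in the metadata, which is the part that lets readers find a segment regardless of where its bytes live.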

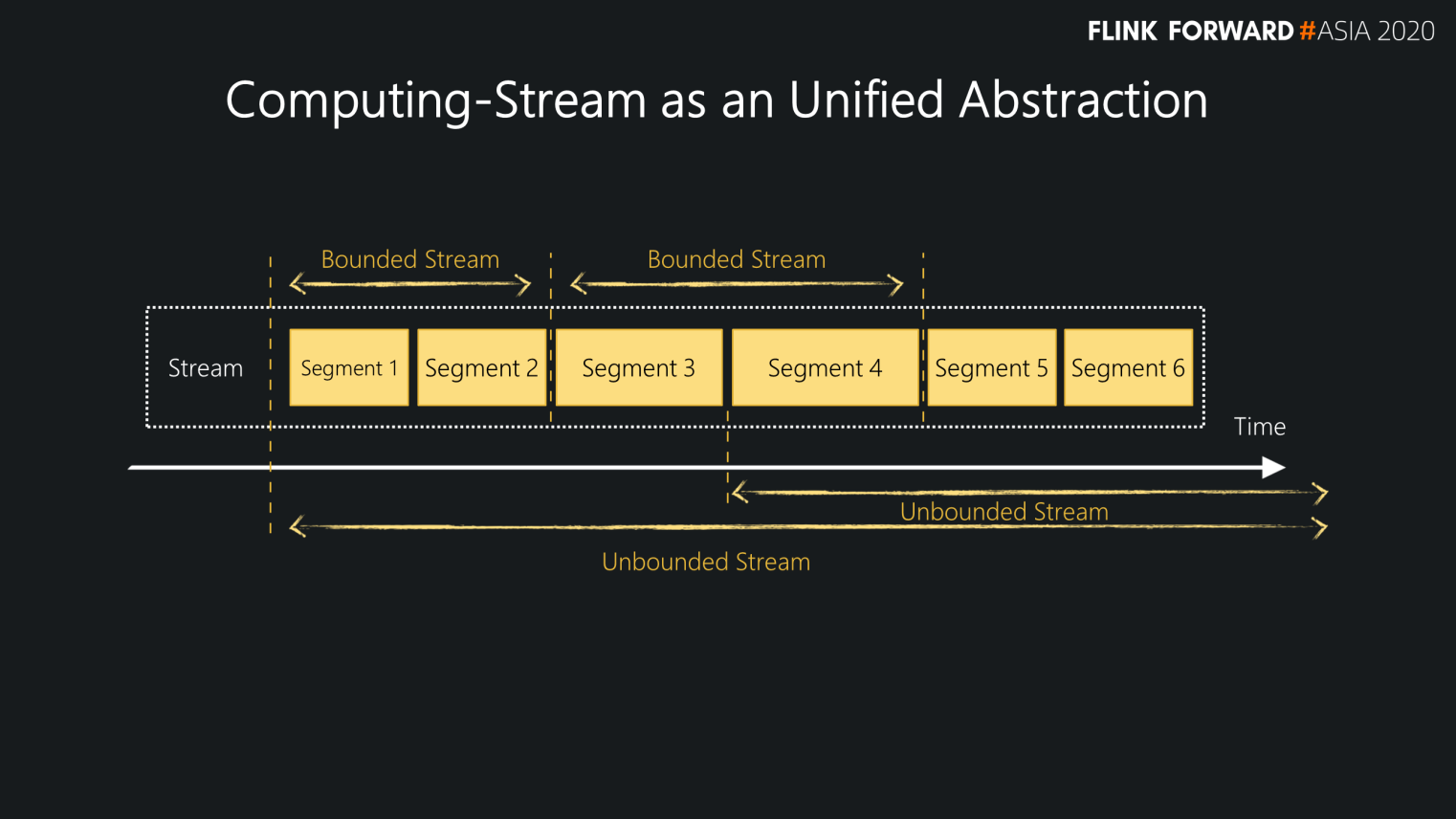

Stream is a basic concept in Flink, and Pulsar can serve as the carrier that stores stream data. Batch computing can be regarded as processing a bounded stream. In Pulsar, a bounded stream corresponds to a bounded range of segments of a topic.

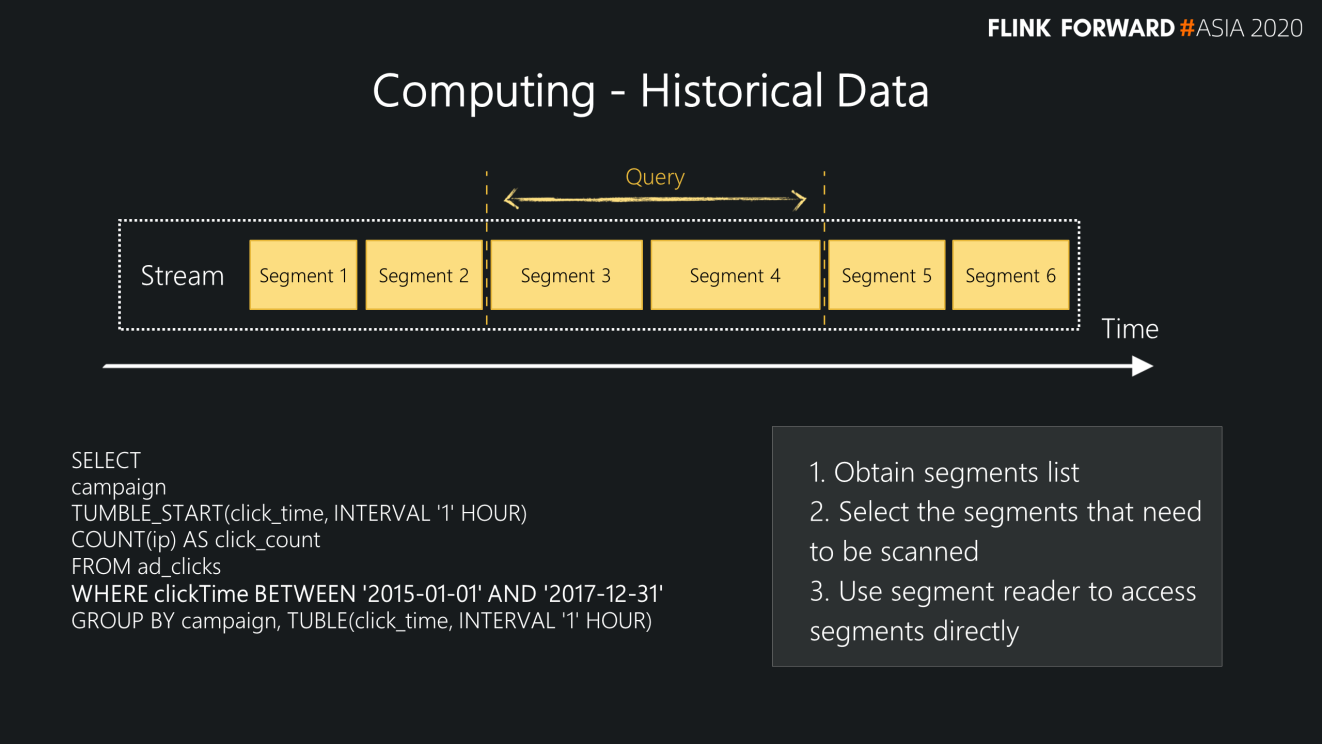

As shown in the figure, a topic has many segments. Given a start time and an end time, users can determine the range of segments to read. Real-time data corresponds to a continuous query or access: in Pulsar, a consumer continuously consumes data from the tail of a topic. The Pulsar topic model therefore fits the Flink stream concept well, and Pulsar can serve as the carrier for stream computing in Flink.

Pulsar responds to bounded streams and unbounded streams in different modes:
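Selecting the segments for a bounded read comes down to an interval-overlap check on segment metadata. The sketch below assumes each segment records the time range it covers; `SegmentRange` and `segments_in_range` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SegmentRange:
    segment_id: int
    start_ts: int  # timestamp of the first message (inclusive)
    end_ts: int    # timestamp just past the last message (exclusive)


def segments_in_range(segments: list, start_ts: int, end_ts: int) -> list:
    """Return IDs of segments that overlap the half-open window [start_ts, end_ts)."""
    return [
        s.segment_id
        for s in segments
        if s.start_ts < end_ts and s.end_ts > start_ts
    ]
```

A bounded (batch) job would read exactly the returned segments, while an unbounded (streaming) job keeps following the tail of the topic.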

The Pulsar response consists of the following steps:

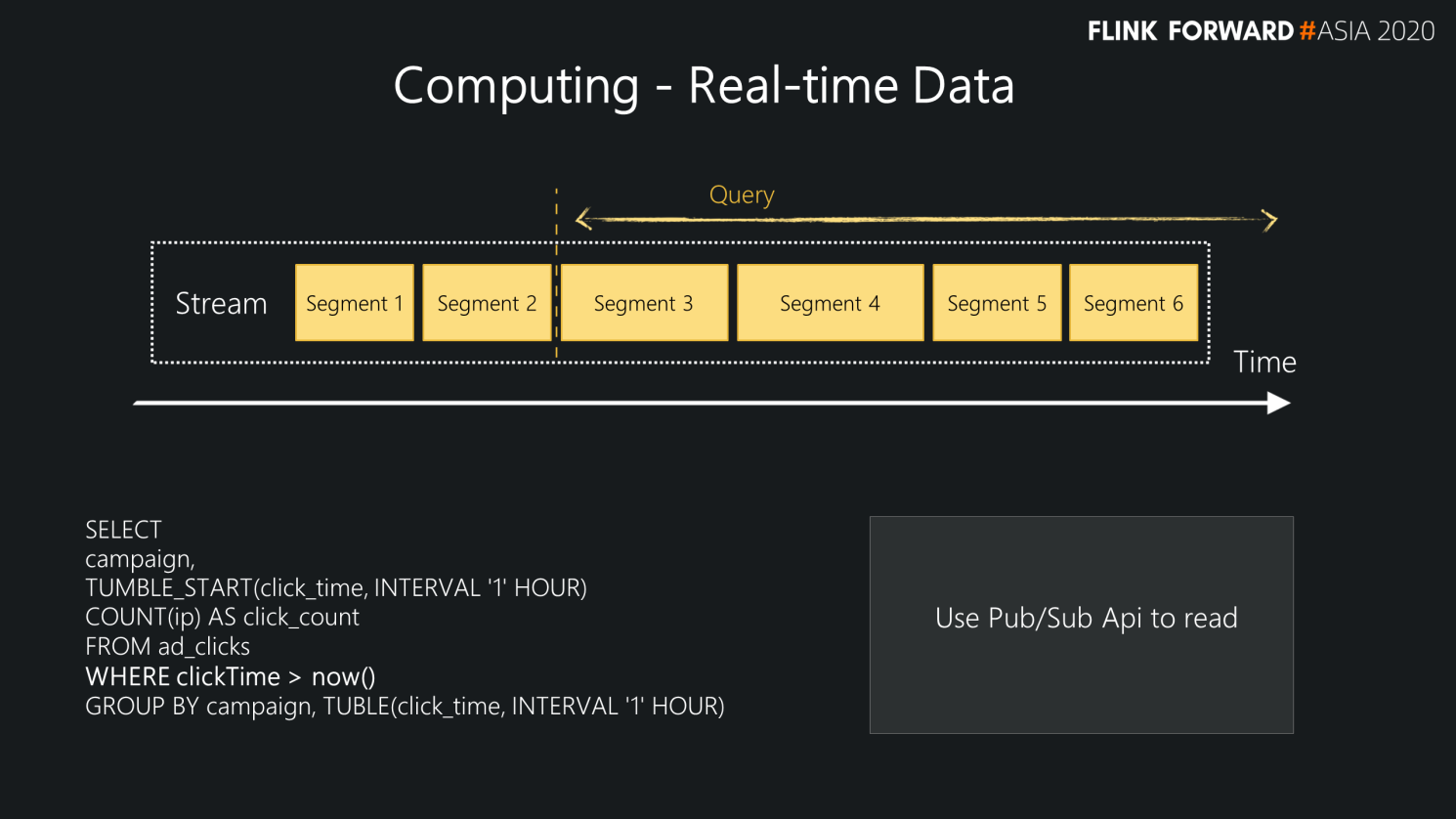

Pulsar also provides the same kind of interface as Kafka for reading real-time data. Users can use a consumer to read the last segment (the latest data) and access data in real time through the consumer interface, which keeps fetching the latest data as it arrives. In this case, the Pulsar Pub/Sub interface is the most direct and effective method.

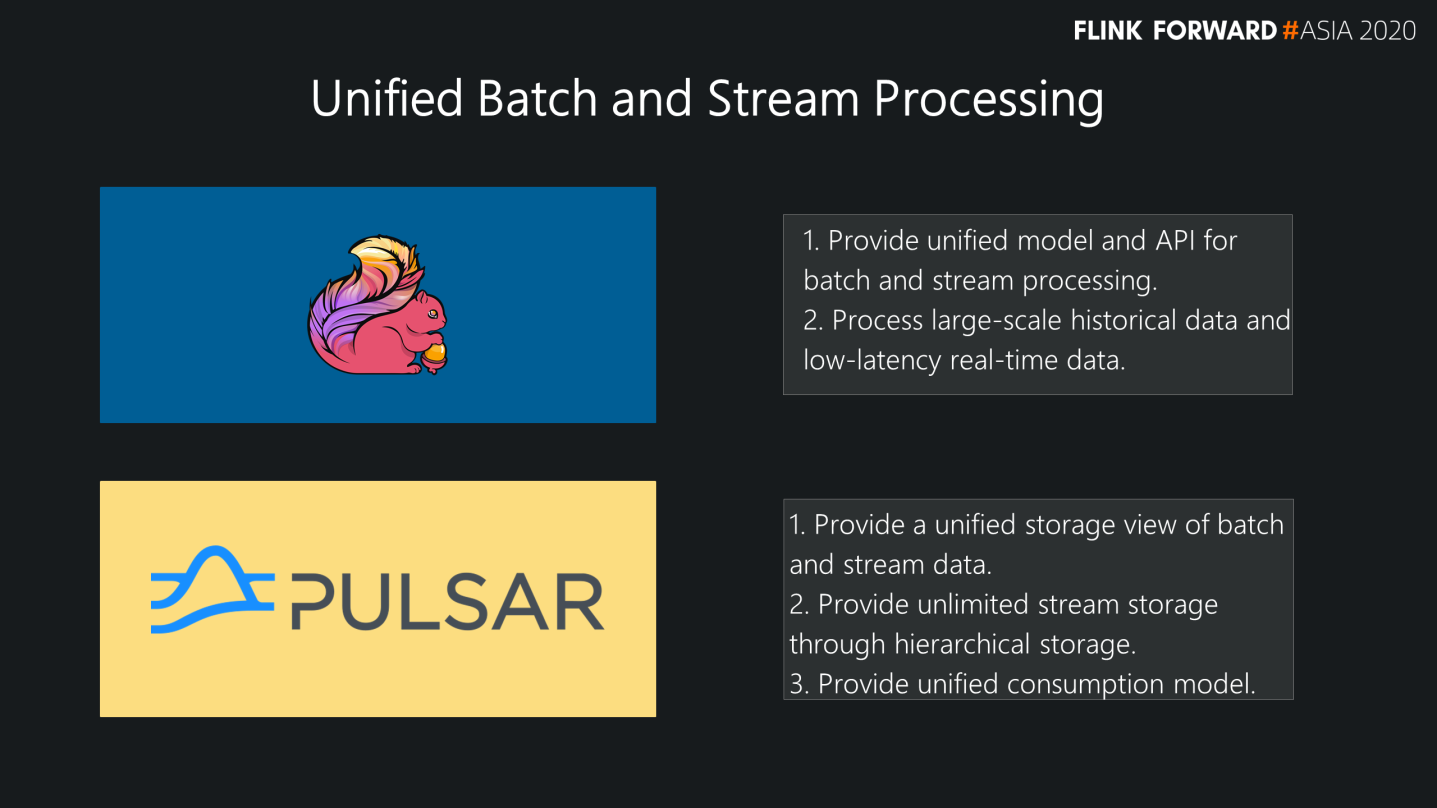

In short, Flink provides a unified view that allows users to process streaming and historical data with a single API. Previously, data scientists might have needed to write two sets of applications, one for real-time data and one for historical data. Now a single model is enough.

Pulsar mainly acts as the data carrier: through its partition and segment architecture, it provides stream storage for the computing layer above it. Because of its layered, segment-based architecture, Pulsar offers an interface for accessing the latest data for stream processing, and a storage-layer interface for batch processing, which has higher concurrency requirements. At the same time, it provides unlimited stream storage and a unified consumption model.

Let's take a look at the existing capabilities and progress of Pulsar.

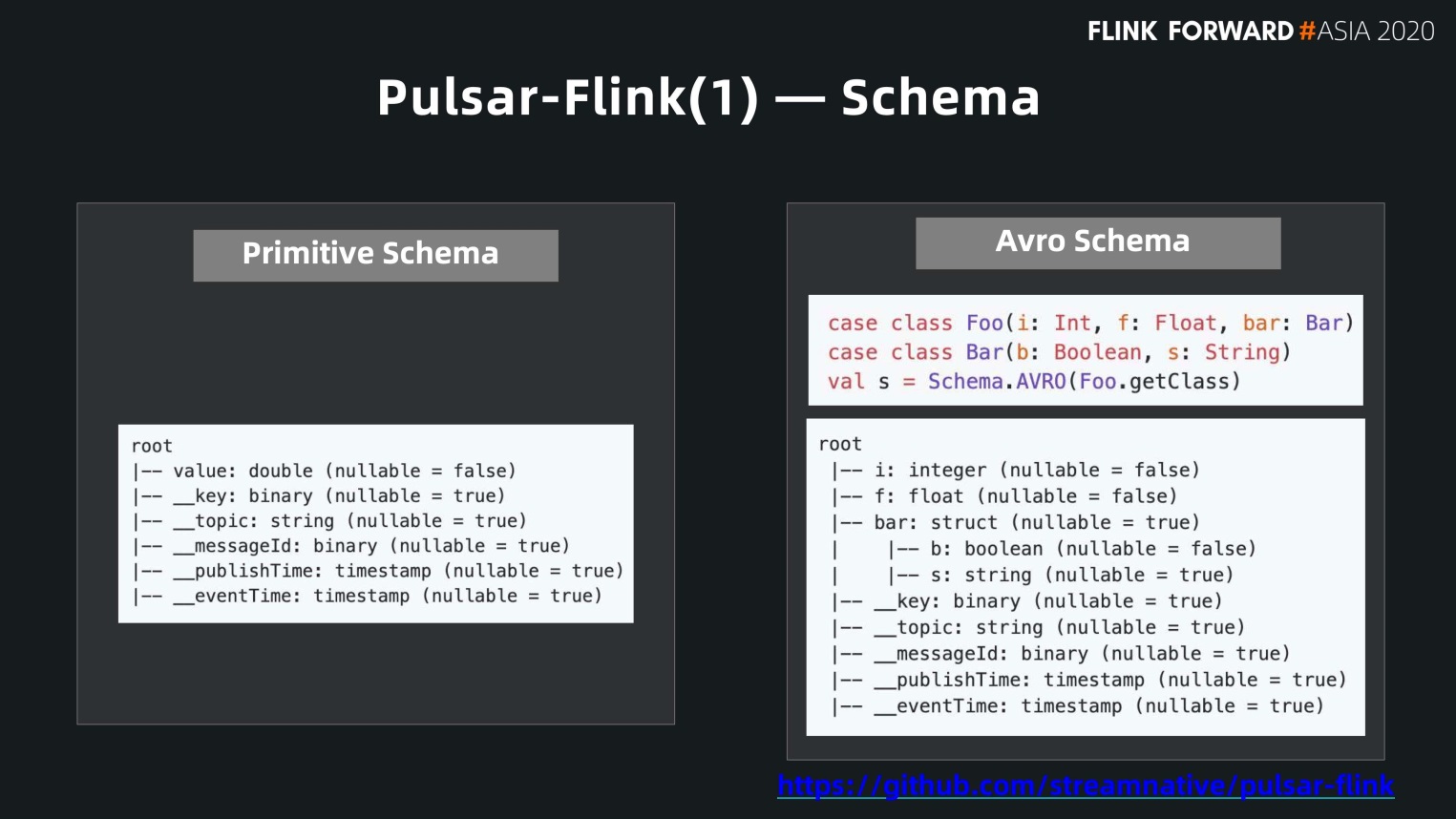

In big data, schema is a particularly important abstraction, and the same is true for messaging. In Pulsar, once a producer and a consumer agree on a contract through a schema, the producing and consuming sides no longer need to coordinate the data format out of band. The computing engine needs the same support.

In the Pulsar-Flink connector, we use the Flink schema interface to connect to the Pulsar Schema. Flink can directly parse the schema stored in Pulsar. There are two types of schema:

We can also combine Flink metadata schema and Avro metadata with FLIP-107 to perform more complex queries.

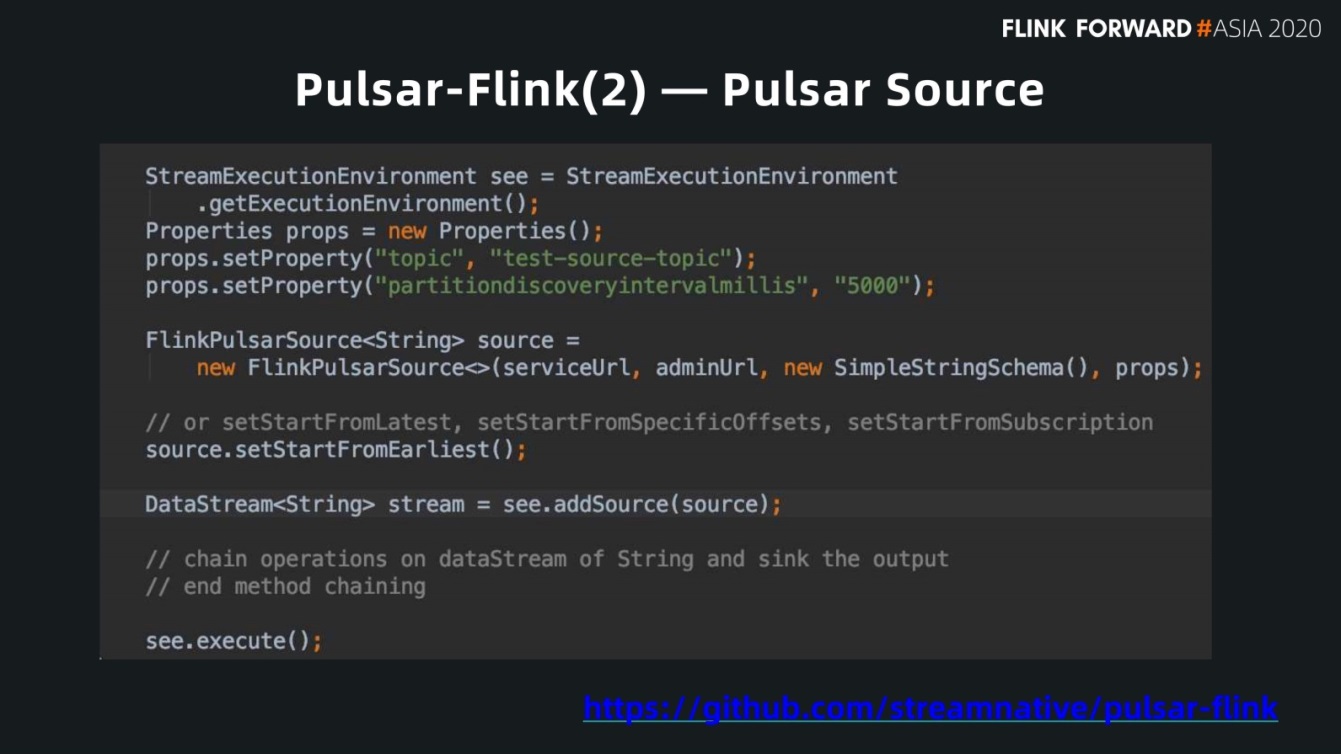

With this schema, Pulsar is easy to use as a source, because each message can be parsed according to the schema information.

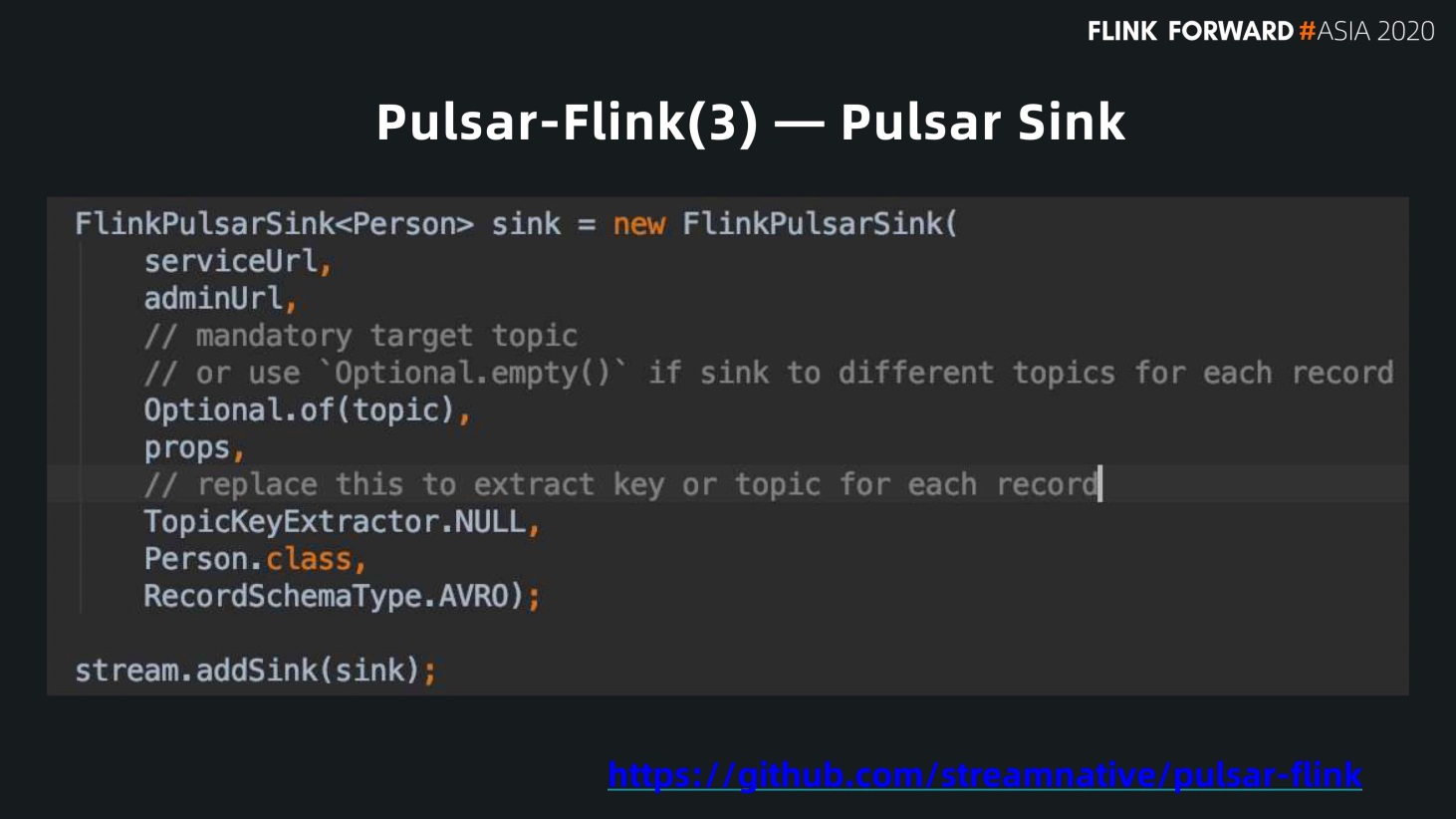

We can also return the computing result in Flink to Pulsar as a Sink.

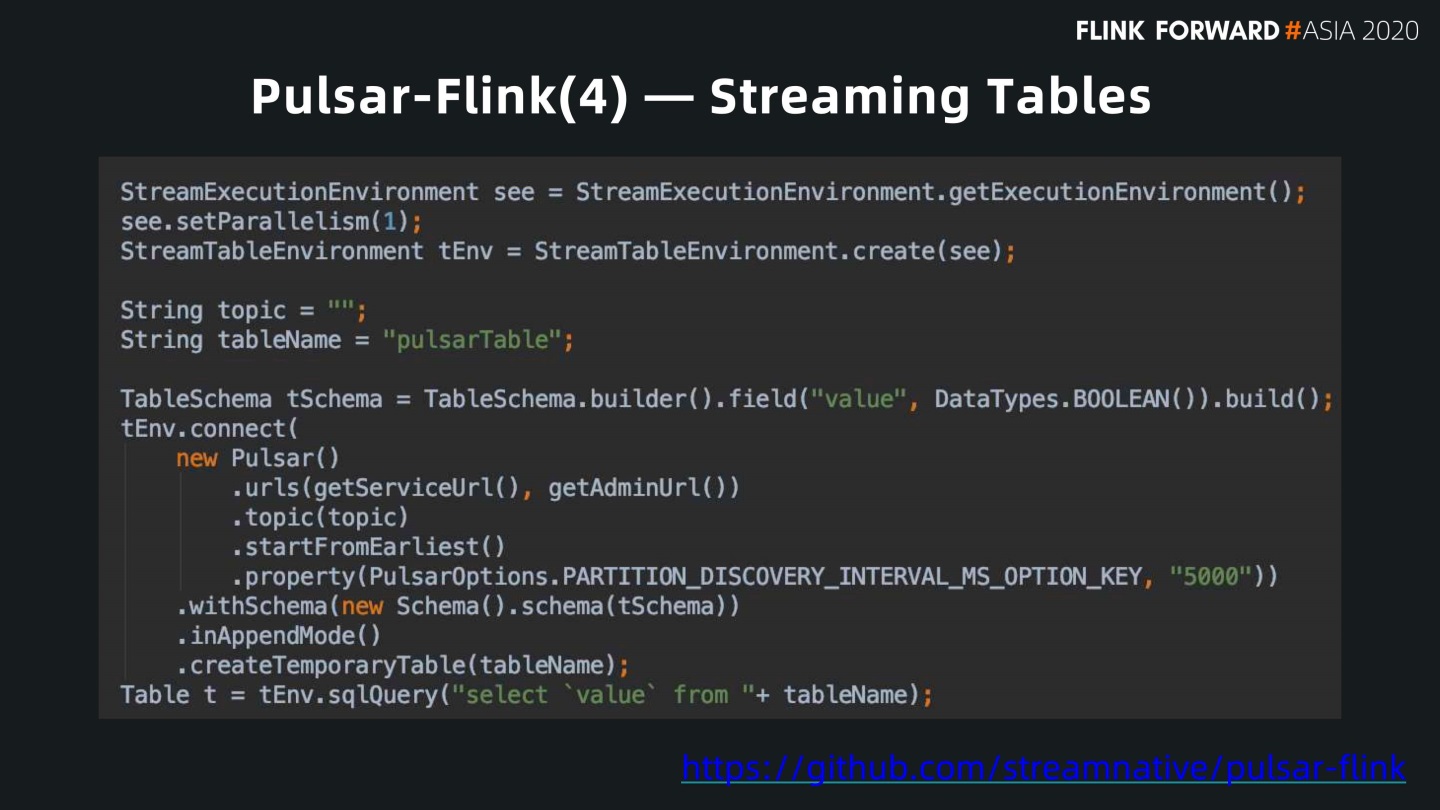

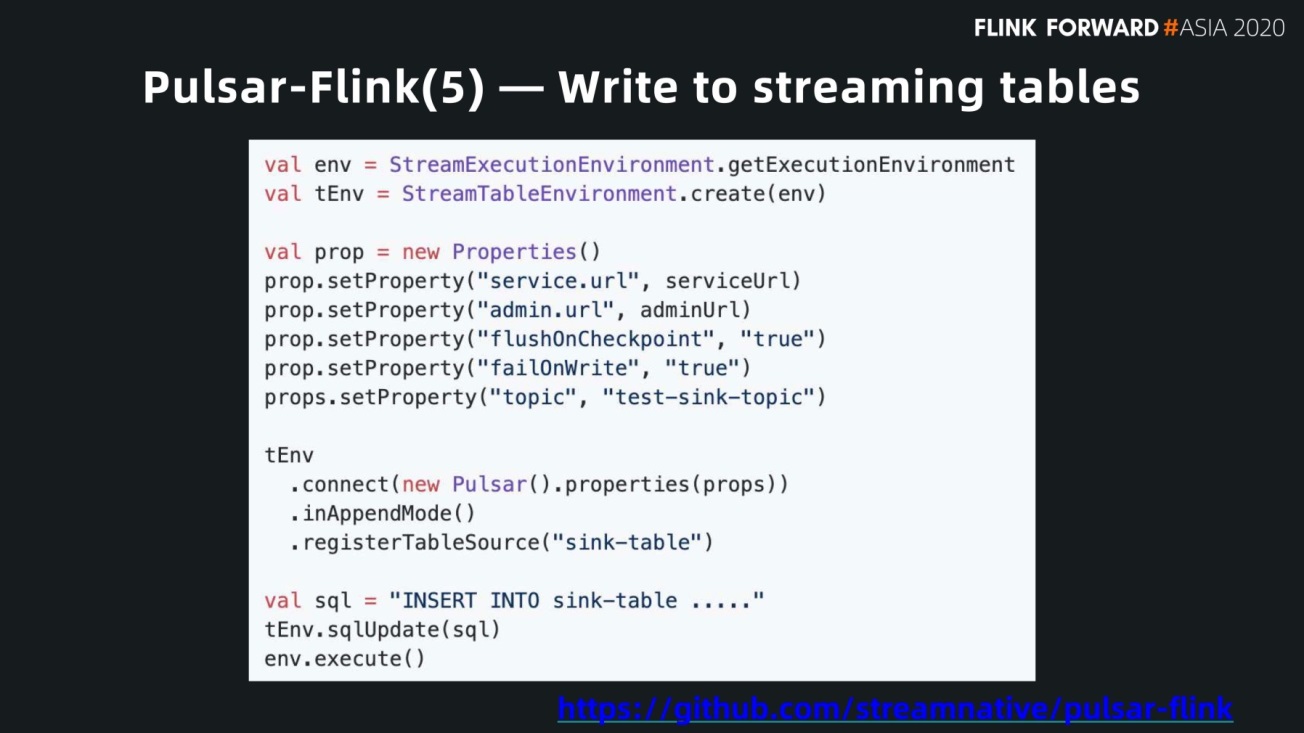

With source and sink support in place, we can expose Pulsar topics directly to users as Flink tables, so users can query Pulsar data as a table in Flink.

The following figure shows how to write the computing results or data to the Pulsar Topic.

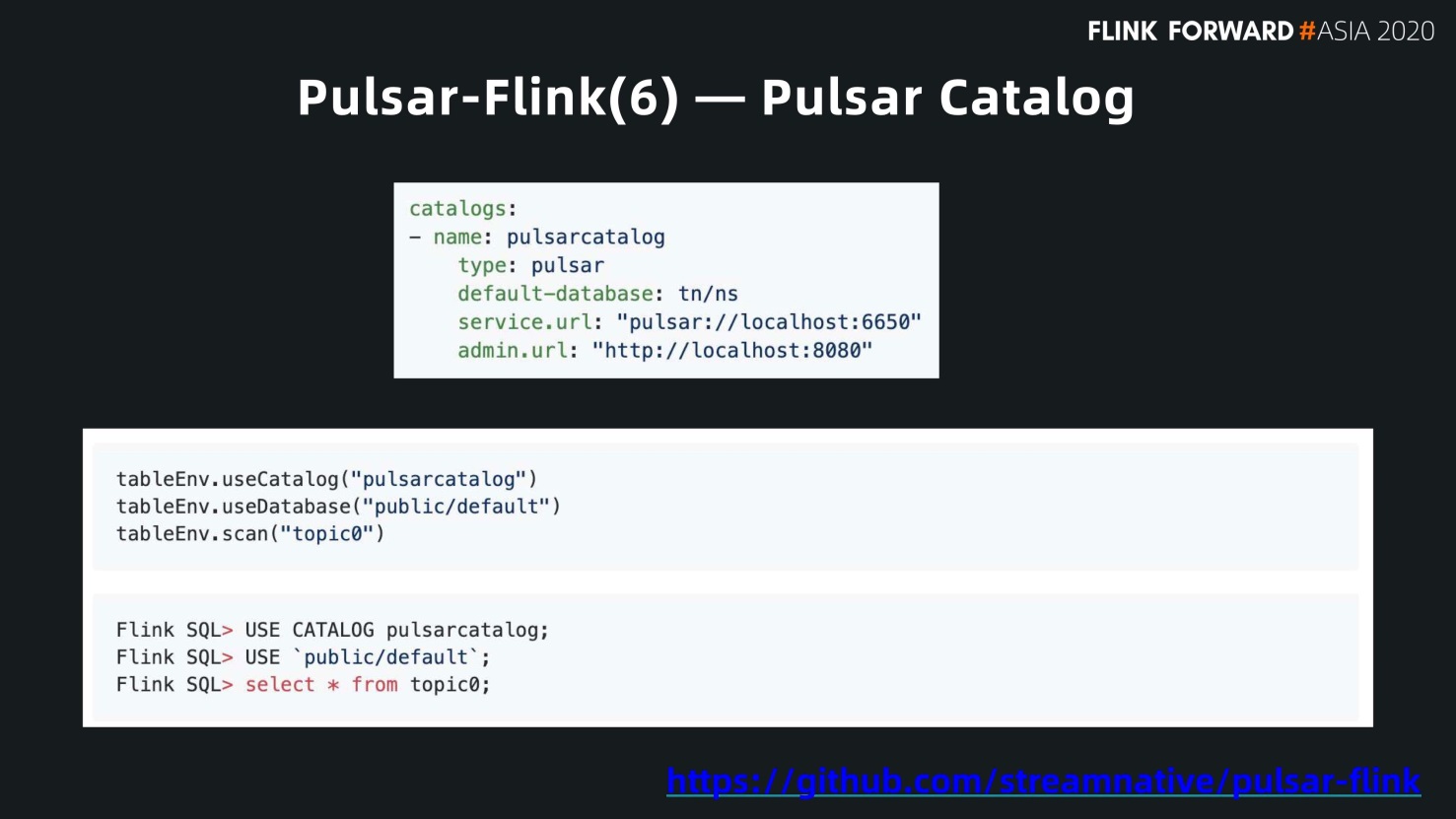

Pulsar comes with many enterprise-grade streaming features. A Pulsar topic name (e.g. persistent://tenant_name/namespace_name/topic_name) is not flat but hierarchical, with levels such as tenant and namespace. This maps well onto the Catalog concept commonly used in Flink.

As shown in the following figure, a Pulsar Catalog is defined, and the database is tn/ns, a path expression that follows the tenant, namespace, and topic hierarchy. A Pulsar namespace can therefore be used as a database in a Flink Catalog. Each namespace contains many topics, and each topic can be a table in the Catalog, so interacting with a Flink Catalog is straightforward. In the following figure, the upper part is the definition of the Catalog, and the lower part demonstrates how to use it. This integration still needs further improvement, and there are plans to support partitions later.

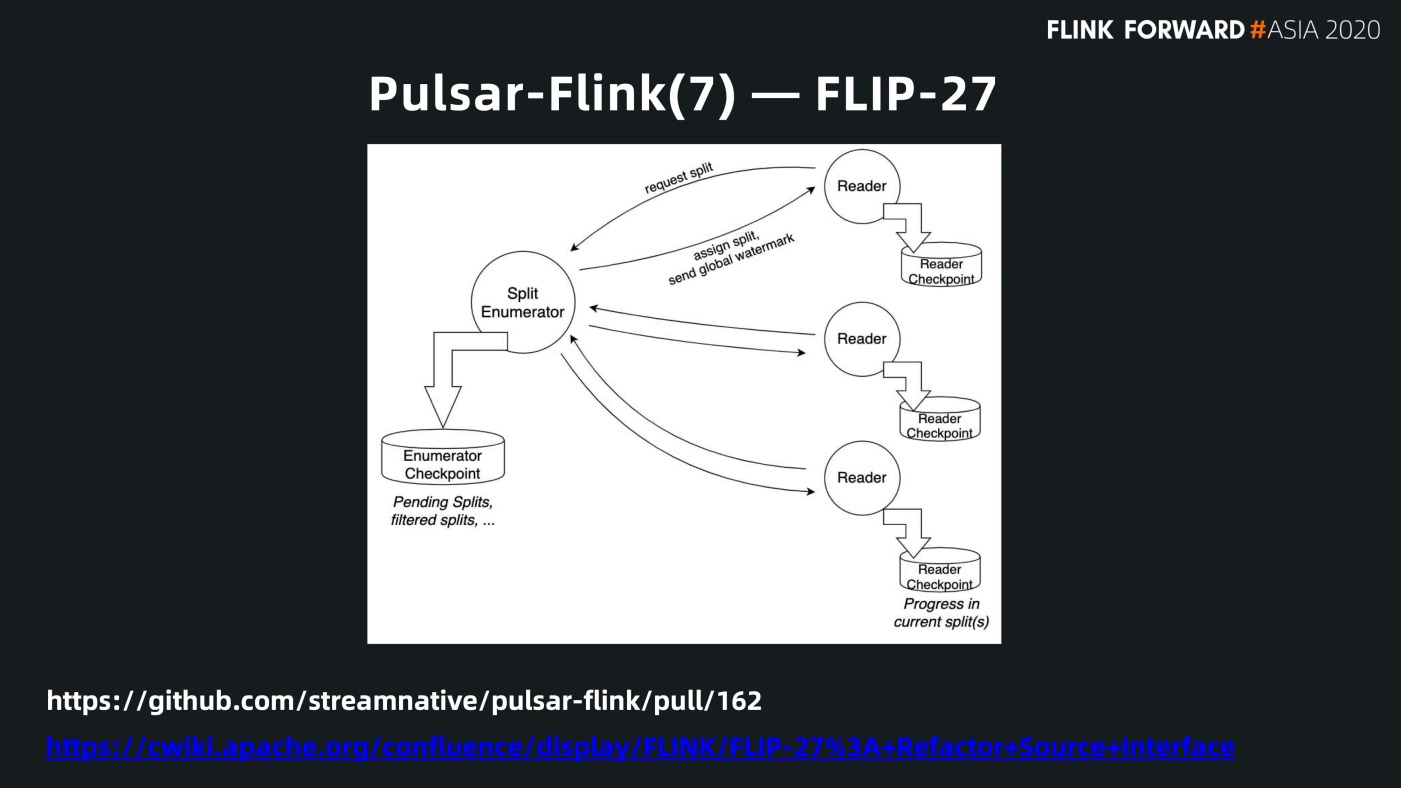

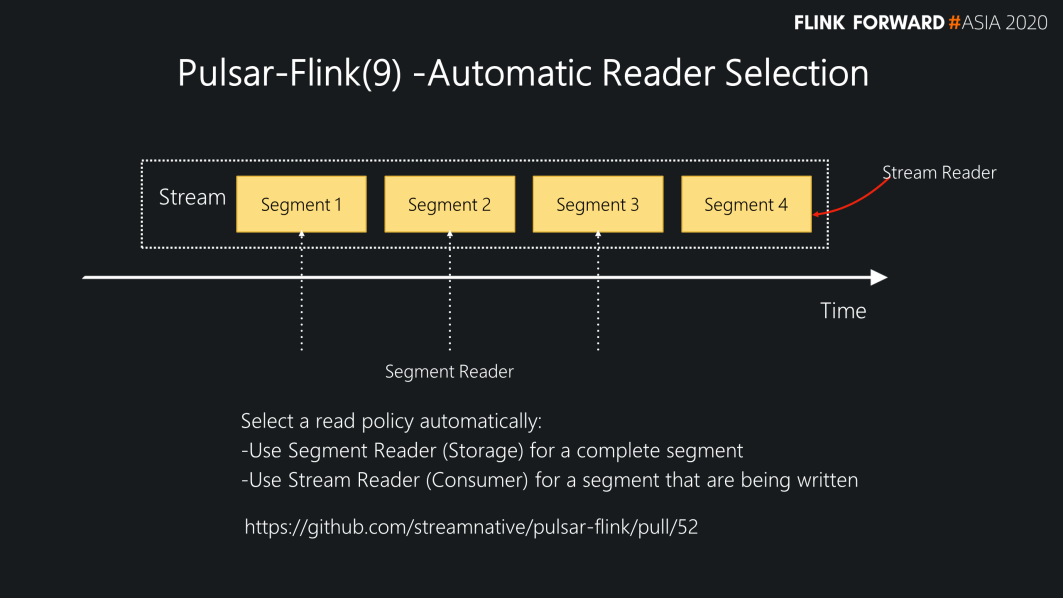
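The mapping from a hierarchical topic name to a catalog database and table can be sketched as follows. This only illustrates the naming scheme described above; `TablePath` and `topic_to_table` are hypothetical helpers and are not part of the Pulsar Flink connector.

```python
from typing import NamedTuple


class TablePath(NamedTuple):
    database: str  # "tenant/namespace"
    table: str     # topic name


def topic_to_table(topic: str) -> TablePath:
    """Split persistent://tenant/namespace/topic into (database, table)."""
    scheme, sep, rest = topic.partition("://")
    if not sep or scheme not in ("persistent", "non-persistent"):
        raise ValueError(f"unsupported topic name: {topic!r}")
    parts = rest.split("/")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected tenant/namespace/topic in: {topic!r}")
    tenant, namespace, name = parts
    return TablePath(database=f"{tenant}/{namespace}", table=name)
```

For example, `topic_to_table("persistent://tn/ns/orders")` yields the database `tn/ns` and the table `orders`, matching the `tn/ns` database shown in the Catalog definition.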

FLIP-27 is a representative example of batch and stream integration in Pulsar-Flink. As described before, Pulsar provides a unified view that manages the metadata of all topics. In this view, each segment is described by its metadata, and batch and stream integration is built on the FLIP-27 framework. FLIP-27 has two key concepts: the splitter and the reader.

First, the splitter divides the data source into splits and hands them to readers. For Pulsar, the splitter works on a Pulsar topic: after fetching the topic's metadata, it determines where each segment is stored from that segment's metadata and then selects the most suitable reader to access it. Pulsar provides the unified storage layer, and Flink selects different readers to read Pulsar data according to the location and format information that the splitter gathers for each segment.

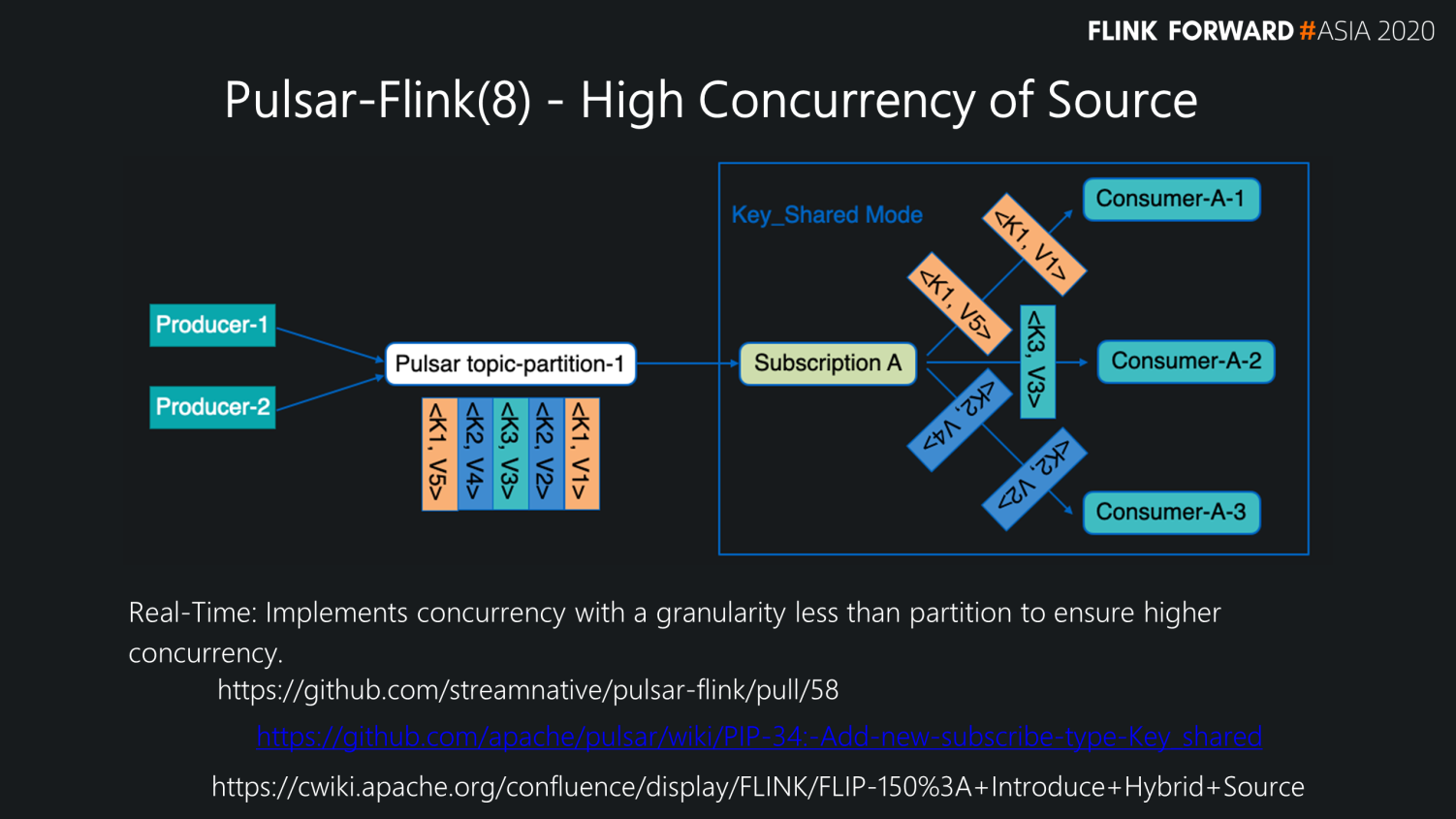
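The splitter's reader selection can be pictured as a dispatch on segment metadata. The sketch below is not the FLIP-27 API or the connector's code: `SegmentSplit`, the reader names, and the location and format values are all hypothetical labels chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SegmentSplit:
    segment_id: int
    location: str         # "bookkeeper" or "tiered" (assumed labels)
    fmt: str = "entries"  # "entries" or "parquet" (assumed labels)
    active: bool = False  # True for the segment still being written


def choose_reader(split: SegmentSplit) -> str:
    """Pick a reader kind from the segment's location and format metadata."""
    if split.active:
        return "pubsub-consumer"            # tail of the topic: real-time reads
    if split.location == "bookkeeper":
        return "bookkeeper-segment-reader"  # closed segment still in BookKeeper
    if split.location == "tiered" and split.fmt == "parquet":
        return "parquet-column-reader"      # columnar data in secondary storage
    if split.location == "tiered":
        return "tiered-storage-reader"
    raise ValueError(f"unknown location: {split.location!r}")


def plan_reads(splits: list) -> list:
    """Return (segment_id, reader) pairs in segment order."""
    ordered = sorted(splits, key=lambda s: s.segment_id)
    return [(s.segment_id, choose_reader(s)) for s in ordered]
```

The point of the sketch is that the caller sees one ordered list of splits for a topic, while the choice of access path is driven entirely by metadata.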

Another issue, closely related to the Pulsar consumption pattern, is one that many Flink users face: how can Flink jobs be made to run faster? Suppose a user is granted a parallelism of ten, so the job runs ten parallel subtasks. If the Kafka topic has only five partitions, and each partition can be consumed by only one subtask, then five subtasks sit idle. To increase consumption parallelism, the only option is to coordinate with the business side to create more partitions, which makes things particularly complicated for the consumer side, the producer side, and operations alike. As a result, it is difficult to scale in real time and on demand.

Kafka allows each partition to be consumed by only one active consumer. Pulsar, in contrast, supports multiple consumers reading the same partition in Key_Shared mode, while ensuring that all messages with the same key are sent to only one consumer. This preserves both consumer parallelism and per-key message order.

For the scenario above, we have added support for the Key_Shared consumption mode to the Pulsar Flink connector. Even with five partitions and ten parallel Flink subtasks, the key range can be split into ten parts, and each Flink subtask consumes one of the ten key ranges. This decouples the number of partitions from the Flink parallelism on the consumer side and enables highly concurrent data processing.

Pulsar has a unified storage base, so different readers can be selected based on segment metadata. We have already implemented this function.
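Splitting the key space among subtasks can be sketched as below. The hash-space size of 65536 and the use of CRC32 are assumptions made to keep the example self-contained, and `split_ranges` and `owner` are hypothetical helpers rather than connector code.

```python
import zlib

HASH_RANGE = 65536  # size of the key hash space (assumed for this sketch)


def split_ranges(parallelism: int) -> list:
    """Divide [0, HASH_RANGE) into contiguous inclusive ranges, one per subtask."""
    bounds = [i * HASH_RANGE // parallelism for i in range(parallelism + 1)]
    return [(bounds[i], bounds[i + 1] - 1) for i in range(parallelism)]


def owner(key: str, ranges: list) -> int:
    """Return the index of the subtask whose range contains the key's hash slot."""
    slot = zlib.crc32(key.encode("utf-8")) % HASH_RANGE  # stand-in hash function
    for index, (low, high) in enumerate(ranges):
        if low <= slot <= high:
            return index
    raise ValueError("ranges do not cover the hash space")
```

With `split_ranges(10)`, ten subtasks each own one slice of the hash space no matter how many partitions the topic has, and every message with a given key lands on the same subtask, which keeps per-key ordering.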

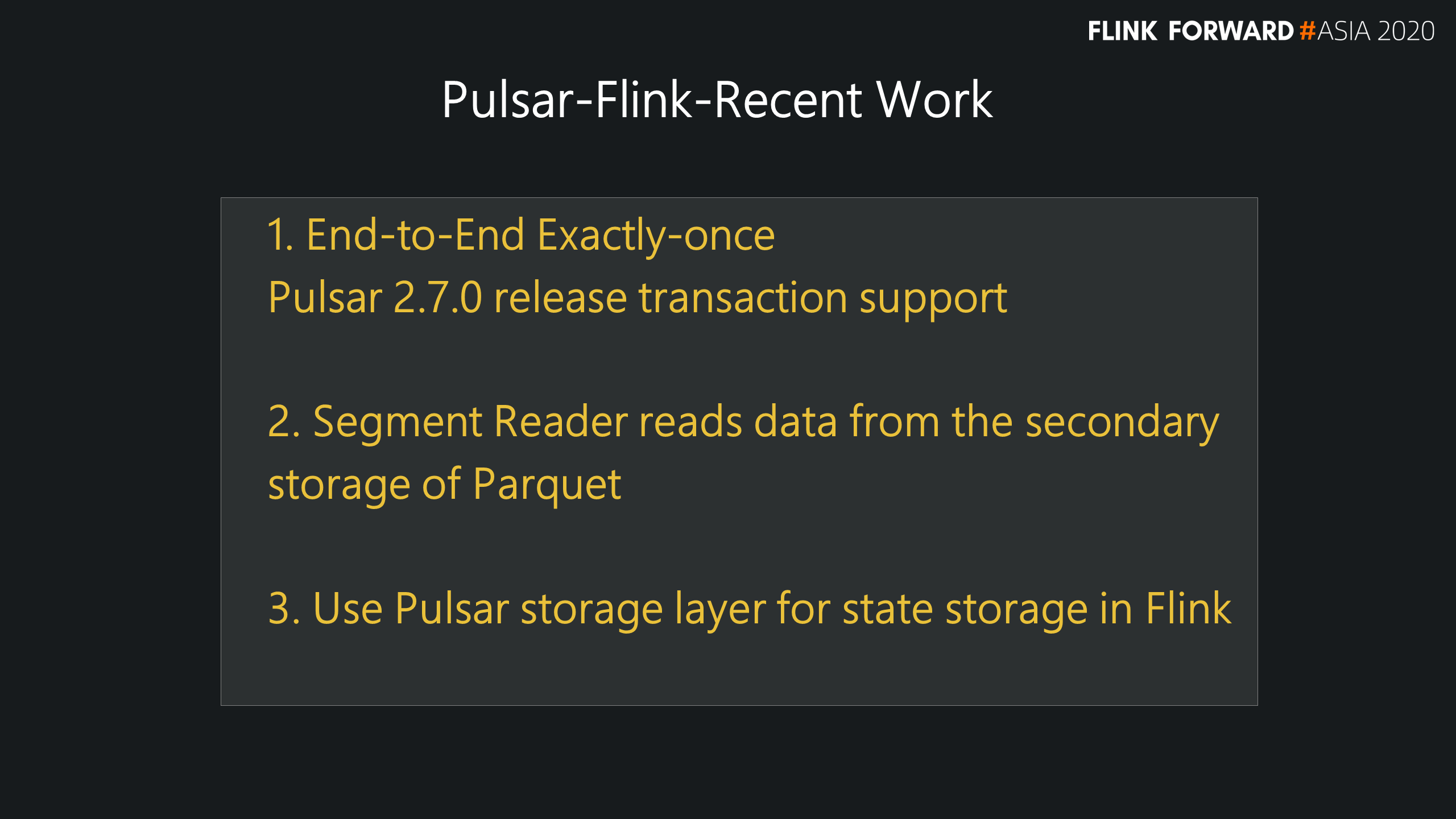

Recently, we have been working on integration with Flink 1.12, and the Pulsar-Flink project continues to iterate. For example, we have added support for the transactions introduced in Pulsar 2.7 and integrated end-to-end exactly-once semantics into the Pulsar Flink repo. We are also working on reading columnar data in Parquet format from secondary storage and on using the Pulsar storage layer for state storage in Flink.
