By Jinsong Li (Zhixin)

MongoDB is a mature document database commonly used in business scenarios. Data from MongoDB is often collected and stored in a data warehouse or data lake for analysis purposes.

Flink MongoDB CDC is a connector provided by the Flink CDC community for capturing change data. It enables connecting to MongoDB databases and collections to capture changes such as document additions, updates, replacements, and deletions.

Apache Paimon (incubating) is a streaming data lake storage technology that offers high-throughput, low-latency data ingestion, streaming subscription, and real-time query capabilities.

Paimon CDC is a tool that integrates Flink CDC, Kafka, and Paimon so that you can write data into a data lake with one click.

You can write Flink CDC data into Paimon with Flink SQL or the Flink DataStream API, or you can use the CDC tool that Paimon provides. What are the differences between these two methods?

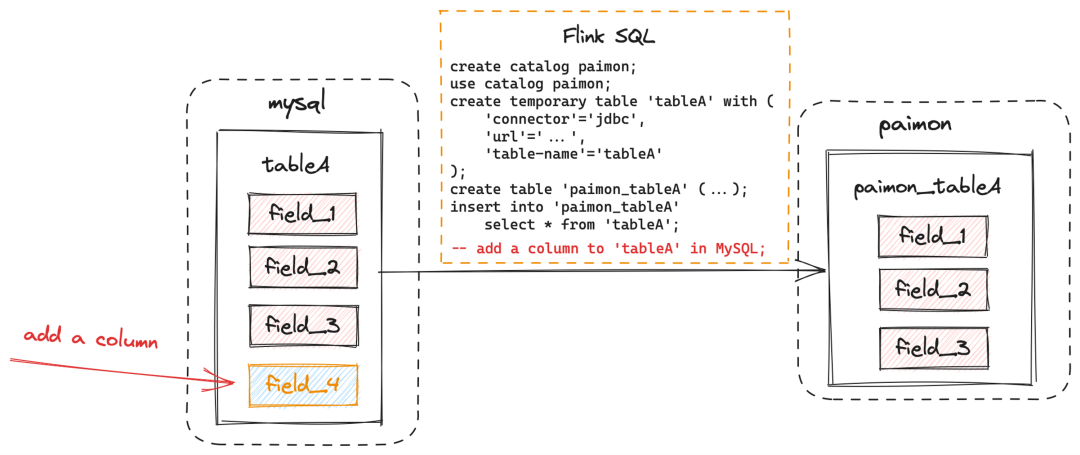

The figure above shows how to write data into the data lake with Flink SQL. This method is straightforward. However, when a new column is added to the source table, the change is not synchronized, so the downstream Paimon table never receives the new column.

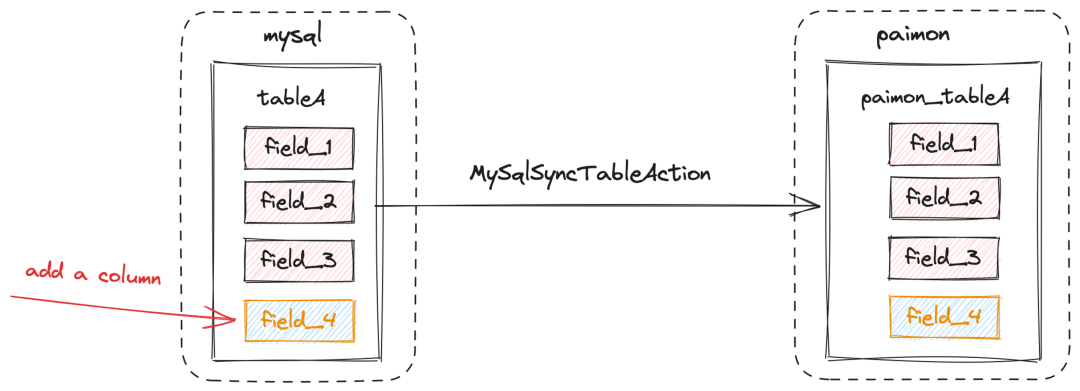

The figure above shows how data is synchronized with Paimon CDC. When a new column is added to the source table, the streaming job picks up the new column automatically and propagates it to the downstream Paimon table, so schema evolution stays in sync.
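To make the idea of schema evolution concrete, here is a minimal toy model in Python. It is not Paimon's implementation; the `ToyTable` class and its methods are hypothetical names invented for this sketch. It only illustrates the behavior described above: a field that has not been seen before becomes a new column, and rows written earlier read that column as NULL (`None`).

```python
from typing import Any, Dict, List, Optional


class ToyTable:
    """A toy append-only table whose schema grows as new fields appear."""

    def __init__(self) -> None:
        # Current schema, in order of first appearance.
        self.columns: List[str] = []
        # Each row keeps only the fields it was written with.
        self.rows: List[Dict[str, Any]] = []

    def write(self, record: Dict[str, Any]) -> None:
        # Schema evolution: any field not yet in the schema becomes a new column.
        for field in record:
            if field not in self.columns:
                self.columns.append(field)
        self.rows.append(dict(record))

    def scan(self) -> List[List[Optional[Any]]]:
        # Rows written before a column existed read that column as None (NULL).
        return [[row.get(col) for col in self.columns] for row in self.rows]


table = ToyTable()
table.write({"id": 1, "price": 5})
table.write({"id": 2, "price": 6, "desc": "haha"})

print(table.columns)  # ['id', 'price', 'desc']
print(table.scan())   # [[1, 5, None], [2, 6, 'haha']]
```

With a plain Flink SQL job, the target schema is fixed when the job is defined, which corresponds to never running the loop inside `write`.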

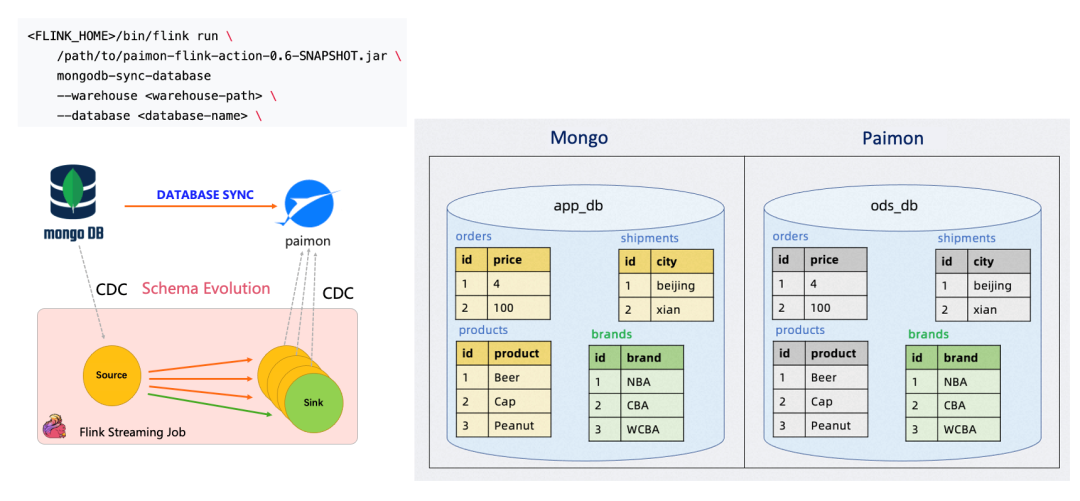

Paimon CDC also provides whole-database synchronization.

Whole database synchronization offers the following benefits:

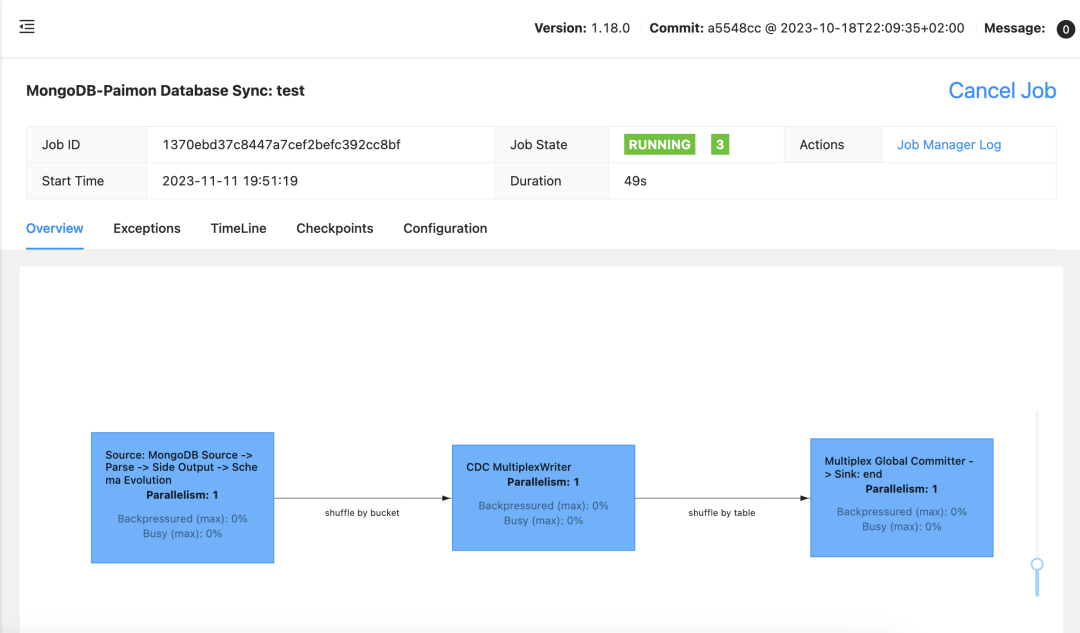

You can follow the demo steps to experience the fully automatic synchronization capabilities of Paimon CDC. The demo shows how to synchronize data from MongoDB to Paimon, as shown in the following figure.

The following demo uses Flink to write data into the data lake and Spark SQL to query it. You can also query with Flink SQL or other computing engines such as Trino, Presto, StarRocks, Doris, and Hive.

Download the free version of MongoDB Community Server.

https://www.mongodb.com/try/download/community

Start MongoDB Server.

mkdir /tmp/mongodata

./mongod --replSet rs0 --dbpath /tmp/mongodata

Note: In this example, replSet is enabled. Only databases with replSet enabled generate a changelog, which Flink MongoDB CDC can then read incrementally.

Download MongoDB Shell.

https://www.mongodb.com/try/download/shell

Start MongoDB Shell.

./mongosh

In addition, you need to initialize the replSet. Otherwise, the MongoDB server will keep reporting an error.

rs.initiate()

Go to the official website to download the latest Flink.

https://www.apache.org/dyn/closer.lua/flink/flink-1.18.0/flink-1.18.0-bin-scala_2.12.tgz

Download the following jar files in sequence to the lib directory of Flink.

paimon-flink-1.18-0.6-*.jar, the Paimon-Flink integrated jar file:

https://repository.apache.org/snapshots/org/apache/paimon/paimon-flink-1.18/0.6-SNAPSHOT/

flink-shaded-hadoop-*.jar. Paimon requires Hadoop-related dependencies:

flink-sql-connector-mongodb-cdc-*.jar:

Set the checkpoint interval in the flink/conf/flink-conf.yaml file.

execution.checkpointing.interval: 10 s

This interval is not recommended in production. A large number of files will be generated if the interval is too short, causing increased costs. Generally, the recommended checkpoint interval is 1-5 minutes.
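A rough back-of-the-envelope calculation shows why a short interval produces so many files. The sketch below assumes, as a simplification, that one commit happens at every checkpoint and that each commit adds at least one new data file per bucket that received data. Compaction and snapshot expiration, which reduce the file count later, are ignored. The function names are hypothetical and exist only for this illustration.

```python
def commits_per_day(checkpoint_interval_seconds: int) -> int:
    """Number of checkpoints (and, under the stated assumption, commits) in 24 hours."""
    return 24 * 60 * 60 // checkpoint_interval_seconds


def min_new_files_per_day(checkpoint_interval_seconds: int, active_buckets: int) -> int:
    # Assumption: each commit adds at least one new data file
    # for every bucket that received data since the last commit.
    return commits_per_day(checkpoint_interval_seconds) * active_buckets


for interval in (10, 60, 300):
    print(interval, min_new_files_per_day(interval, active_buckets=1))
# 10 8640
# 60 1440
# 300 288
```

Under these assumptions, moving from 10 seconds to 1 minute cuts the number of new files per bucket by a factor of six, and 5 minutes cuts it by a factor of thirty.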

Start a Flink cluster.

./bin/start-cluster.sh

Start a Flink synchronization task.

./bin/flink run lib/paimon-flink-action-0.6-*.jar \
mongodb-sync-database \
--warehouse /tmp/warehouse1 \
--database test \
--mongodb-conf hosts=127.0.0.1:27017 \
--mongodb-conf database=test \
--table-conf bucket=1

Parameter description:

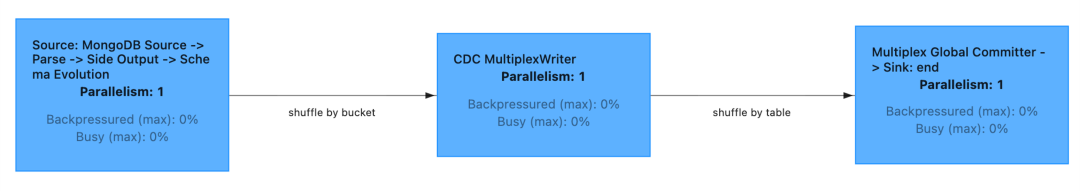

As you can see, the job has been started. The topology contains three main nodes:

Both Writer and Committer may become bottlenecks. The concurrency of Writer and Committer can be affected by the configuration of Flink.

You can enable fully asynchronous compaction to avoid the compaction bottleneck in the Writer:

https://paimon.apache.org/docs/master/maintenance/write-performance/#asynchronous-compaction

Go to the official website to download the latest version of Spark.

https://spark.apache.org/downloads.html

Download the Paimon-Spark integrated jar files.

Start Spark SQL.

./bin/spark-sql \
--conf spark.sql.catalog.paimon=org.apache.paimon.spark.SparkCatalog \
--conf spark.sql.catalog.paimon.warehouse=file:/tmp/warehouse1

Use the Paimon Catalog to specify the database.

USE paimon;

USE test;

First, test that the written data can be read.

Insert a piece of data into MongoDB.

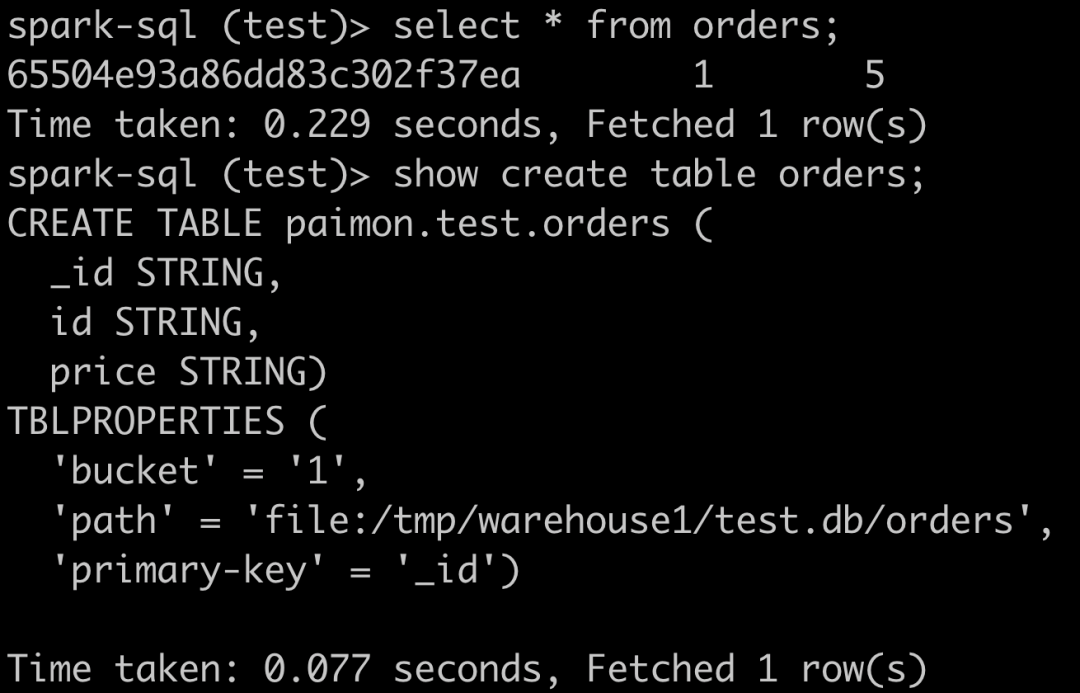

db.orders.insertOne({id: 1, price: 5})

Query in Spark SQL.

As shown, this data is synchronized to Paimon, and a column "_id" has been added to the schema of the orders table. This is the implicit primary key that MongoDB generates automatically.

This step shows how updates are synchronized.

Update the following data in Mongo Shell.

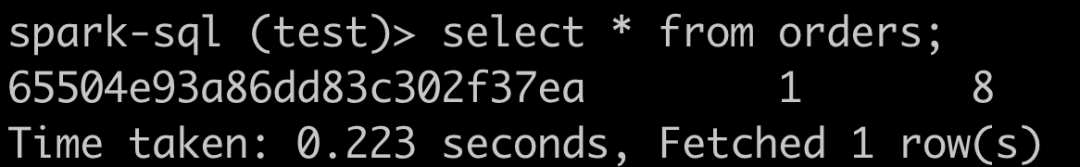

db.orders.update({id: 1}, {$set: { price: 8 }})

Query in Spark.

The price of the data is updated to 8.
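The reason an update in MongoDB changes an existing row instead of appending a new one is that the target table is keyed by the primary key "_id". The following Python sketch is a toy model of that idea, not Paimon's actual merge logic. The function `apply_change` and the key value `"doc-1"` are hypothetical. The operation names follow the change types listed earlier (insert, update, replace, delete).

```python
from typing import Any, Dict

Row = Dict[str, Any]


def apply_change(table: Dict[str, Row], op: str, key: str, fields: Row) -> None:
    """Apply one change event to a table keyed by primary key."""
    if op in ("insert", "replace"):
        # The whole row is written; any previous version is overwritten.
        table[key] = dict(fields)
    elif op == "update":
        # Only the changed fields are merged into the existing row.
        table.setdefault(key, {}).update(fields)
    elif op == "delete":
        table.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")


table: Dict[str, Row] = {}
apply_change(table, "insert", "doc-1", {"id": 1, "price": 5})
apply_change(table, "update", "doc-1", {"price": 8})
print(table)  # {'doc-1': {'id': 1, 'price': 8}}
```

Because both events carry the same key, the table still holds exactly one row after the update, with the new price.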

This step checks the synchronization of added fields.

In Mongo Shell, insert a new record that contains a new field.

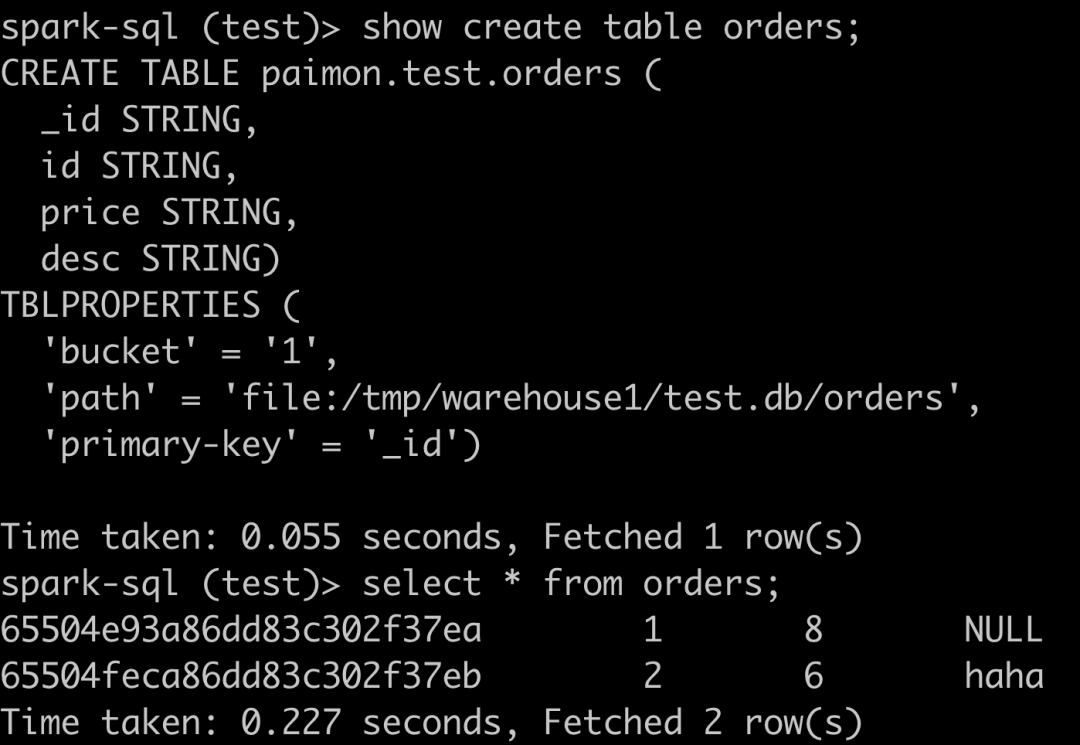

db.orders.insertOne({id: 2, price: 6, desc: "haha"})

Query in Spark.

As shown, a column is added to the corresponding Paimon table. The query result shows that the new column is NULL for the old data.

This step checks the synchronization of added tables.

In Mongo Shell, insert data into a new collection.

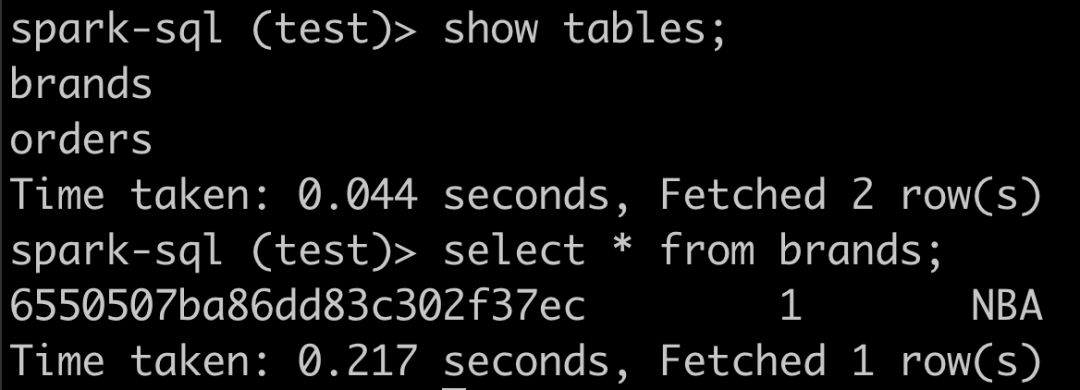

db.brands.insertOne({id: 1, brand: "NBA"})

Query in Spark.

A table is automatically added to Paimon, and the data is synchronized.

The data ingestion program of Paimon CDC allows you to fully synchronize your business databases to Paimon automatically, including data, schema evolution, and new tables. All you need to do is manage the Flink job. This program has been deployed across various industries and companies, making it easy to mirror business data to lake storage.

There are more data sources for you to explore: MySQL, Kafka, MongoDB, Pulsar, and PostgreSQL.

Paimon’s long-term goals include:

• Extreme ease of use, high-performance data ingestion, convenient data lake storage management, and queries from a rich ecosystem of engines.

• Easy streaming reads, integration with the Flink ecosystem, and the ability to deliver data generated one minute ago to the business.

• Enhanced append-data processing, with time travel and data sorting for efficient queries, and an upgrade path for Hive data warehouses.

Official website: https://paimon.apache.org/.
