Abstract: This article is compiled from a presentation by Yunfeng Zhou, a Senior Development Engineer at Alibaba Cloud and an Apache Flink Contributor, during the Apache Asia CommunityOverCode 2024 event. The content is primarily divided into the following three parts:

- From Batch / Streaming Unification to Mixing

- Technical Solution for Mixing Batch / Streaming Processing

- Community Progress and Roadmap

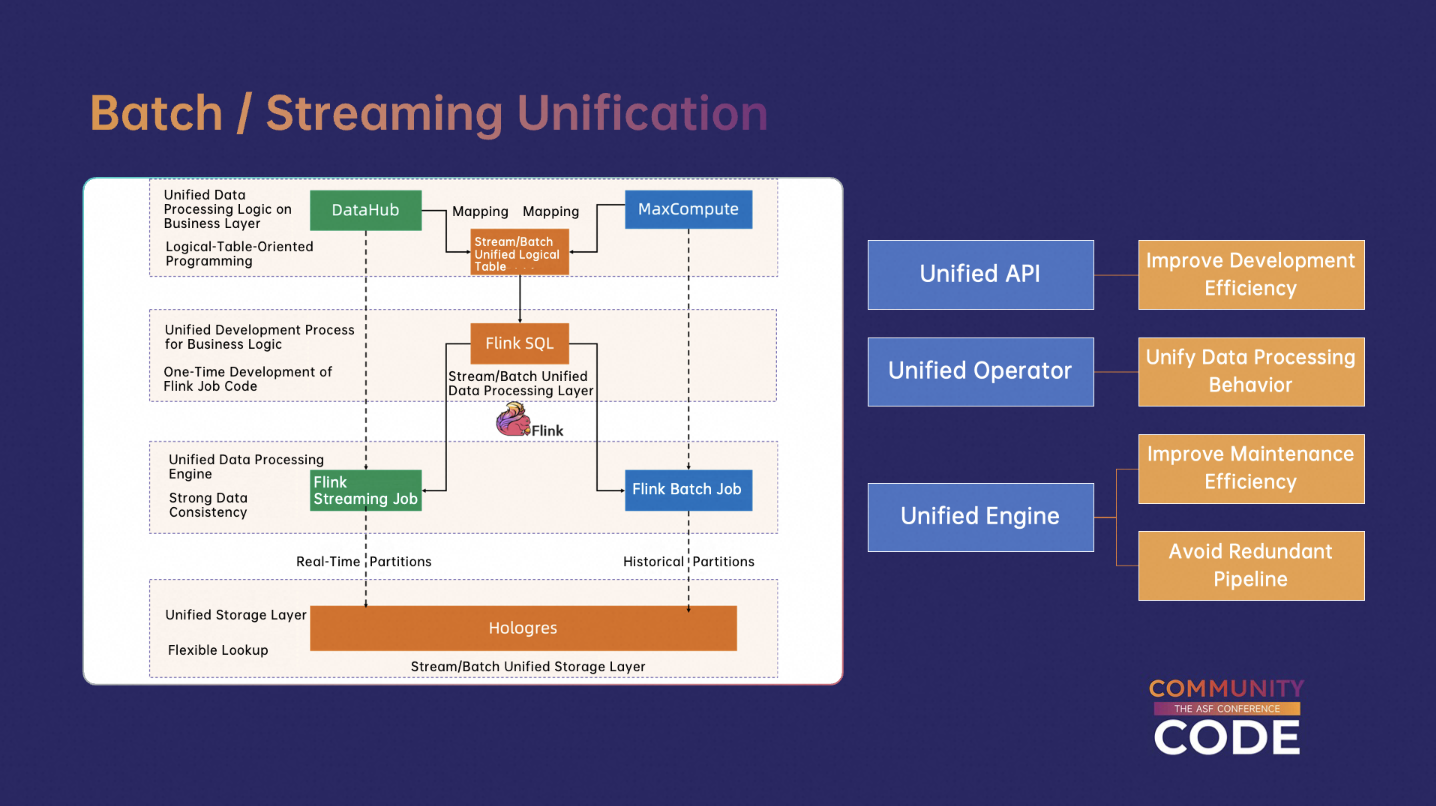

Before introducing mixed stream and batch processing, Flink had already introduced the concept of unified stream and batch processing. This unification is mainly reflected in the following aspects:

(1) Unified API: Flink provides a unified DataStream / SQL API. Users can run both offline and online jobs with the same code instead of developing two separate Flink programs, which improves development efficiency.

(2) Unified Operator: Flink uses the same operator implementation in both stream and batch jobs. This keeps data processing logic, correctness, and semantic behavior consistent between stream and batch processing.

(3) Unified Engine: Flink runs and operates stream and batch jobs with the same engine and resource scheduling framework. Users do not need to build separate workflows, which improves operational efficiency.

These are some of Flink's achievements in unifying stream and batch processing. However, even with this unified framework, users still need to configure each job's execution mode. They also need to apply different optimization strategies depending on whether the job runs in an offline or an online scenario. This configuration work adds to the operational burden of maintaining Flink jobs, and it is the problem that mixing stream and batch processing aims to solve.

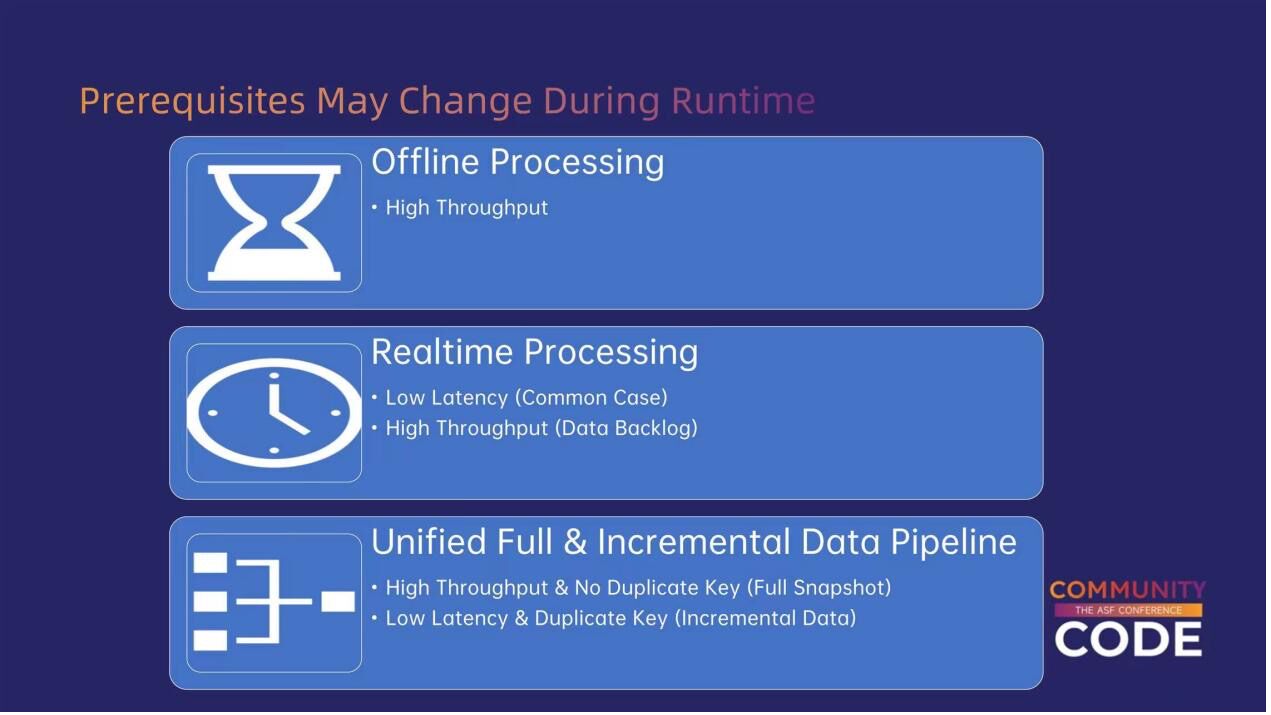

We analyzed existing scenarios that use stream mode for online workloads and batch mode for offline workloads. We found that the essence of the different execution modes is to apply different optimization strategies based on different prerequisites. These prerequisites mainly include the following:

(1) Performance Preference: For batch jobs, users typically pursue high throughput or high resource utilization. For stream jobs, users expect low latency, high data freshness, and real-time processing.

(2) A Priori Knowledge About Data: In batch mode, all data is available in advance, so jobs can be optimized based on statistics about the data. In stream mode, a job typically does not know what data will arrive in the future. It must therefore be optimized for access patterns such as random access to state.

Because of these differing prerequisites, Flink applies distinct optimization strategies in stream mode and batch mode. The differences show up mainly in resource scheduling, state access, and fault tolerance:

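(1) Scheduling: In batch mode, a job can be split into stages that are scheduled one after another, so resources are needed only for the stages currently running. In stream mode, all tasks must be deployed and running at the same time so that every record can be processed as soon as it arrives.

(2) State Access: In batch mode, input can be sorted by key. Each operator then processes all records of one key consecutively and keeps only the state of the current key, which avoids random access to a key-value state backend. In stream mode, records with arbitrary keys arrive in any order, so state is kept in a state backend that supports random access.

(3) Fault Tolerance: In stream mode, Flink periodically takes checkpoints and restores from the latest one after a failure. In batch mode, intermediate results are persisted between stages, so a failure is handled by re-running the affected tasks rather than by checkpointing.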

The examples above show that Flink applies different optimization strategies in stream mode and batch mode. These strategies meet the performance requirements and operational characteristics of each mode, because the two modes come with different prerequisites.

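On further investigation, we found that these prerequisites are not necessarily constant throughout a job's lifecycle; they can change dynamically at runtime. Consider a job that synchronizes a database through a CDC source. It first reads a full snapshot of the data (the full stage), where a large backlog must be processed with high throughput. It then switches to reading incremental changes (the incremental stage), where low latency matters most.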

Therefore, when mixing stream and batch processing, Flink should be able to switch its optimization strategy at runtime as the job's requirements change.

Based on this analysis of prerequisites and scenarios, the goal of mixing stream and batch processing is to eliminate the need for users to manually configure stream or batch mode. Instead, the Flink framework automatically detects the user's requirements for throughput and latency, and the characteristics of the data, in different contexts (real-time and offline). It then changes its optimization strategies accordingly. This lets Flink users enjoy the benefits of both stream and batch modes in the same job without spending time tuning the job's configuration.

This section describes how Flink achieves the goals above for mixing batch / stream processing.

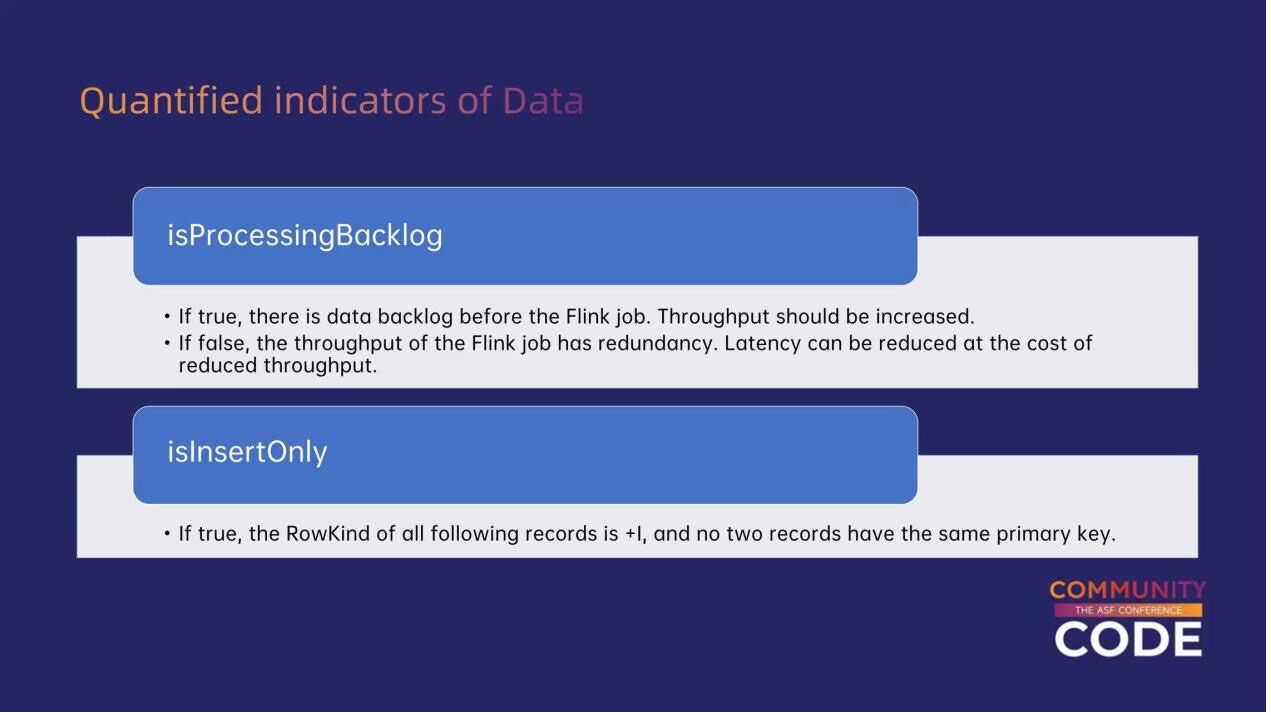

First, Flink quantifies user preferences and data characteristics using two indicators.

The first indicator is isProcessingBacklog, which indicates whether the job is processing a data backlog. When there is a backlog, the job needs to process the accumulated data in the shortest possible time. It should therefore optimize for throughput, possibly at the expense of latency. When there is no backlog, the job should aim for low latency and high data freshness, as in existing stream processing.

The second indicator is isInsertOnly, which distinguishes scenarios such as the full stage and the incremental stage of a unified full & incremental data pipeline. When isInsertOnly is true, all records are of the Insert type rather than Update or Delete, and no two records share the same primary key.
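The following minimal Python sketch makes the two conditions concrete by checking a finite batch of change records. The `RowKind` and `ChangeRecord` types and the `is_insert_only` function are hypothetical names, loosely modeled on the change types of Flink's changelog rows. In Flink itself the signal is reported by the source rather than computed by scanning data, since an unbounded stream cannot be inspected this way.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable


class RowKind(Enum):
    # Hypothetical mirror of the four change types in a changelog stream.
    INSERT = "+I"
    UPDATE_BEFORE = "-U"
    UPDATE_AFTER = "+U"
    DELETE = "-D"


@dataclass(frozen=True)
class ChangeRecord:
    kind: RowKind
    key: str       # primary key of the row
    value: object


def is_insert_only(records: Iterable[ChangeRecord]) -> bool:
    """Return True if every record is an INSERT and no primary key repeats."""
    seen_keys = set()
    for record in records:
        if record.kind is not RowKind.INSERT:
            return False          # an Update or Delete breaks the first condition
        if record.key in seen_keys:
            return False          # a repeated key breaks the second condition
        seen_keys.add(record.key)
    return True
```

A snapshot such as `[ChangeRecord(RowKind.INSERT, "k1", 1), ChangeRecord(RowKind.INSERT, "k2", 2)]` satisfies both conditions. A batch that inserts `k1` twice, or that contains a single `DELETE`, does not.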

How are the values of these two indicators determined? The primary approach is to obtain them from the data sources.

A source with clearly defined phases, such as a Hybrid Source, may first consume data from files in a file system and then switch to real-time messages from a message queue. In this scenario, there is always a data backlog while the files are being consumed, so isProcessingBacklog = true. When the job starts consuming messages from the queue, isProcessingBacklog changes from true to false.

The same principle applies to CDC sources: in the full stage, isProcessingBacklog is true, while in the incremental stage, it is false.
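The phase-driven behavior of Hybrid and CDC sources can be sketched as a small state machine. This is a simplified illustration, not Flink's source interface. `PhasedSource`, `SourcePhase`, and `finish_current_phase` are hypothetical names. The sketch assumes only that each phase is known in advance to be either a bounded catch-up phase (backlog) or a real-time phase (no backlog).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SourcePhase:
    name: str
    has_backlog: bool  # True for bounded catch-up phases such as files or a CDC snapshot


class PhasedSource:
    """Hypothetical source that reads its phases in order and reports isProcessingBacklog."""

    def __init__(self, phases: List[SourcePhase]) -> None:
        self._phases = phases
        self._index = 0
        self.signal_log: List[bool] = []  # every distinct value reported downstream
        self._report()

    def is_processing_backlog(self) -> bool:
        if self._index >= len(self._phases):
            return False
        return self._phases[self._index].has_backlog

    def finish_current_phase(self) -> None:
        # Called when the current bounded phase (e.g. the file scan) is exhausted.
        self._index += 1
        self._report()

    def _report(self) -> None:
        # Report the signal only when it changes, like a downstream notification.
        value = self.is_processing_backlog()
        if not self.signal_log or self.signal_log[-1] != value:
            self.signal_log.append(value)
```

For a Hybrid Source, the phases are `[SourcePhase("files", True), SourcePhase("message queue", False)]`. After `finish_current_phase()` the signal flips from `True` to `False`. For a CDC source, the phases are the full snapshot followed by the incremental changes. Reporting only changes reflects the idea that downstream operators need to learn about transitions, not receive the flag with every record.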

For sources without clearly defined phases, such as message queues, the job can detect a data backlog from existing Flink metrics such as watermark lag. The watermark represents the job's current progress in event (data) time. The difference between the current system time and the watermark is the watermark lag. When the lag exceeds a certain threshold, the job is considered to have a data backlog; when the lag is below the threshold, it is considered to have none.
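The threshold rule amounts to computing `lag = system time - watermark` and comparing the result with a configured threshold. The following Python sketch assumes millisecond timestamps. The function names and the `threshold_ms` parameter are illustrative only, not a Flink configuration option.

```python
def watermark_lag_ms(current_time_ms: int, watermark_ms: int) -> int:
    """Watermark lag: how far the job's event-time progress trails the wall clock."""
    return current_time_ms - watermark_ms


def is_processing_backlog_by_lag(current_time_ms: int, watermark_ms: int, threshold_ms: int) -> bool:
    """Report a backlog when the watermark lag exceeds the threshold."""
    return watermark_lag_ms(current_time_ms, watermark_ms) > threshold_ms
```

With a 5-second threshold, a watermark that trails the system time by 6 seconds signals a backlog, and one that trails by 2 seconds does not. Because this rule uses a single threshold, the signal may flip back and forth when the lag hovers near it. A production version would likely add hysteresis, for example separate thresholds for entering and leaving the backlog state.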

As for isInsertOnly, it is currently supported mainly by CDC sources: in the full stage, isInsertOnly is true, while in the incremental stage, it is false.

After these indicators are collected, the next step is to apply different optimization strategies based on their values.

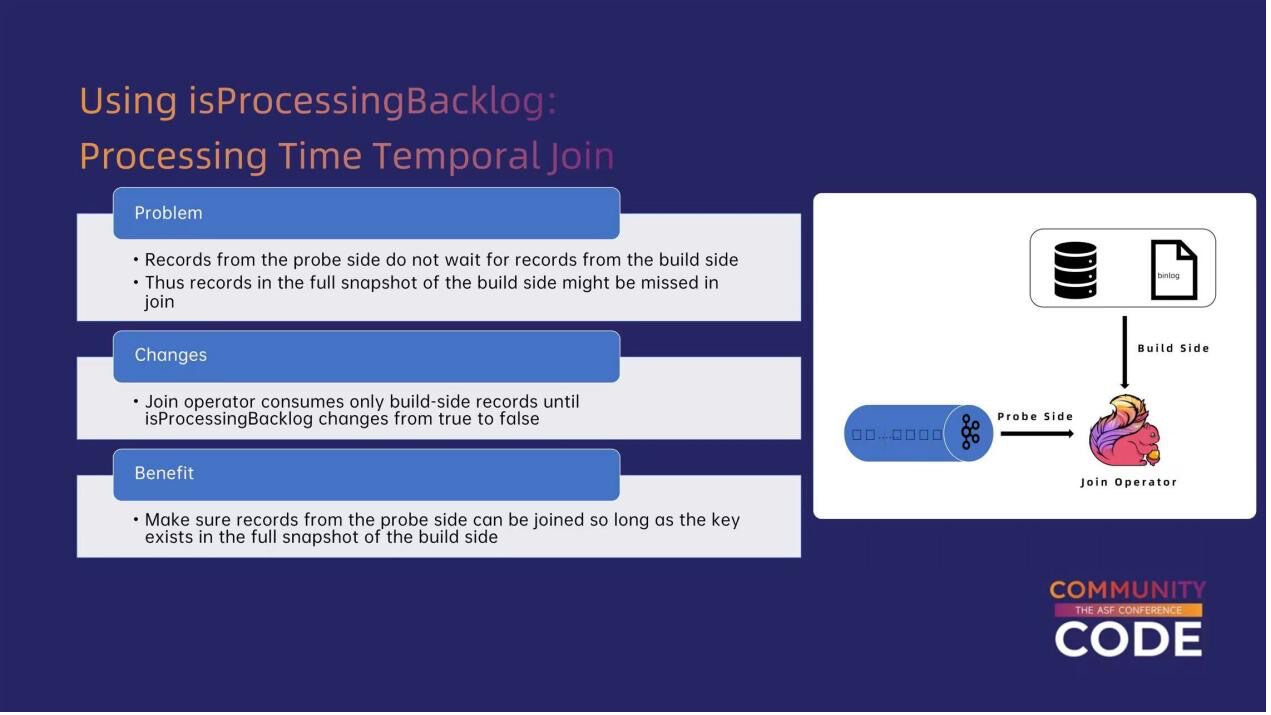

First, regarding isProcessingBacklog, one optimization is better support for processing-time temporal joins. This type of join does not depend on the timestamps of the Probe Side and Build Side records themselves, but on the system time. When a Probe Side record arrives at Flink, it is joined with the latest Build Side data in the current state.

This approach is semantically sound, but in practice it can run into the following problem. When there is a data backlog, a Build Side record may not reach Flink in time. A Probe Side record that should match it may then arrive first and find no match, so the join output is missing expected results.

To address this issue, Flink applies the following optimization. While the Build Side has a data backlog (i.e., isProcessingBacklog is true), the join operator temporarily pauses consuming Probe Side data until the job catches up with the Build Side. This avoids the join data loss described above.
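The pause can be illustrated with a toy, single-threaded model of a processing-time temporal join. Everything here is hypothetical: the class and callback names are not Flink APIs. Holding probe records in a queue stands in for the real operator pausing consumption of its Probe Side input.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class ProcessingTimeTemporalJoin:
    """Toy temporal join that holds back the Probe Side while the Build Side has a backlog."""

    def __init__(self) -> None:
        self.build_state: Dict[str, str] = {}              # latest Build Side value per key
        self.pending_probe: Deque[Tuple[str, str]] = deque()
        self.build_backlog = False                          # Build Side isProcessingBacklog
        self.output: List[Tuple[str, str, str]] = []

    def on_build_backlog_changed(self, backlog: bool) -> None:
        self.build_backlog = backlog
        if not backlog:
            # The Build Side has caught up: release the probe records held back so far.
            while self.pending_probe:
                self._join(*self.pending_probe.popleft())

    def on_build_record(self, key: str, value: str) -> None:
        self.build_state[key] = value

    def on_probe_record(self, key: str, payload: str) -> None:
        if self.build_backlog:
            # Paused: do not join against a Build Side that is still catching up.
            self.pending_probe.append((key, payload))
        else:
            self._join(key, payload)

    def _join(self, key: str, payload: str) -> None:
        # Inner join against whatever Build Side value is current at this moment.
        if key in self.build_state:
            self.output.append((key, payload, self.build_state[key]))
```

Suppose a probe record `("user-1", "order-42")` arrives during the backlog and the Build Side record `("user-1", "Alice")` arrives afterwards. The join still emits `("user-1", "order-42", "Alice")` once the backlog clears. An instance that never sees the backlog signal joins the probe record immediately, finds no match, and emits nothing, which is the data loss described above.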

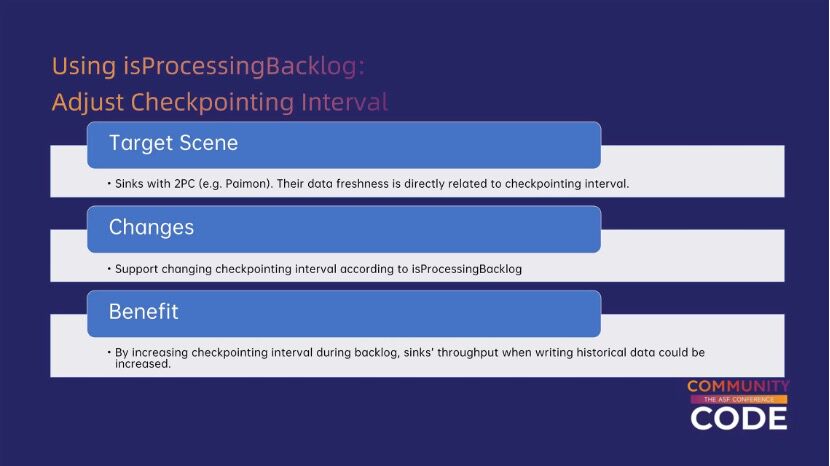

The second optimization adjusts Flink's checkpoint interval. Some Flink connectors, such as Paimon Sink, guarantee exactly-once semantics through a two-phase commit process. The frequency of Paimon Sink's two-phase commits aligns with Flink's checkpoint interval.

Therefore, during the full data synchronization phase, Flink can use a larger checkpoint interval, or disable checkpointing, while isProcessingBacklog is true. Paimon Sink then commits less often, which reduces commit overhead and increases throughput.
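The interval selection can be expressed as a small pure function. This is a sketch under assumptions, and the parameter names are hypothetical. Under its rules, a backlog interval of 0 disables checkpointing during the backlog; otherwise, the backlog interval must be at least the regular interval. These rules follow our reading of FLIP-309, which introduced the `execution.checkpointing.interval-during-backlog` option. Check the configuration documentation for your Flink version before relying on them.

```python
from typing import Optional


def effective_checkpoint_interval_ms(
    is_processing_backlog: bool,
    interval_ms: int,
    interval_during_backlog_ms: Optional[int],
) -> Optional[int]:
    """Pick the checkpoint interval to use; None means checkpointing is paused.

    Assumed rules for interval_during_backlog_ms:
      * None             -> no special backlog interval, always use interval_ms
      * 0                -> disable checkpointing while the backlog lasts
      * >= interval_ms   -> use this larger interval while the backlog lasts
    """
    if interval_during_backlog_ms is not None and 0 < interval_during_backlog_ms < interval_ms:
        raise ValueError("backlog interval must be 0 or >= the regular interval")
    if not is_processing_backlog or interval_during_backlog_ms is None:
        return interval_ms
    if interval_during_backlog_ms == 0:
        return None
    return interval_during_backlog_ms
```

Take a 30-second regular interval and a 10-minute backlog interval. During the full synchronization phase, a sink that commits once per checkpoint commits about 20 times less often.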

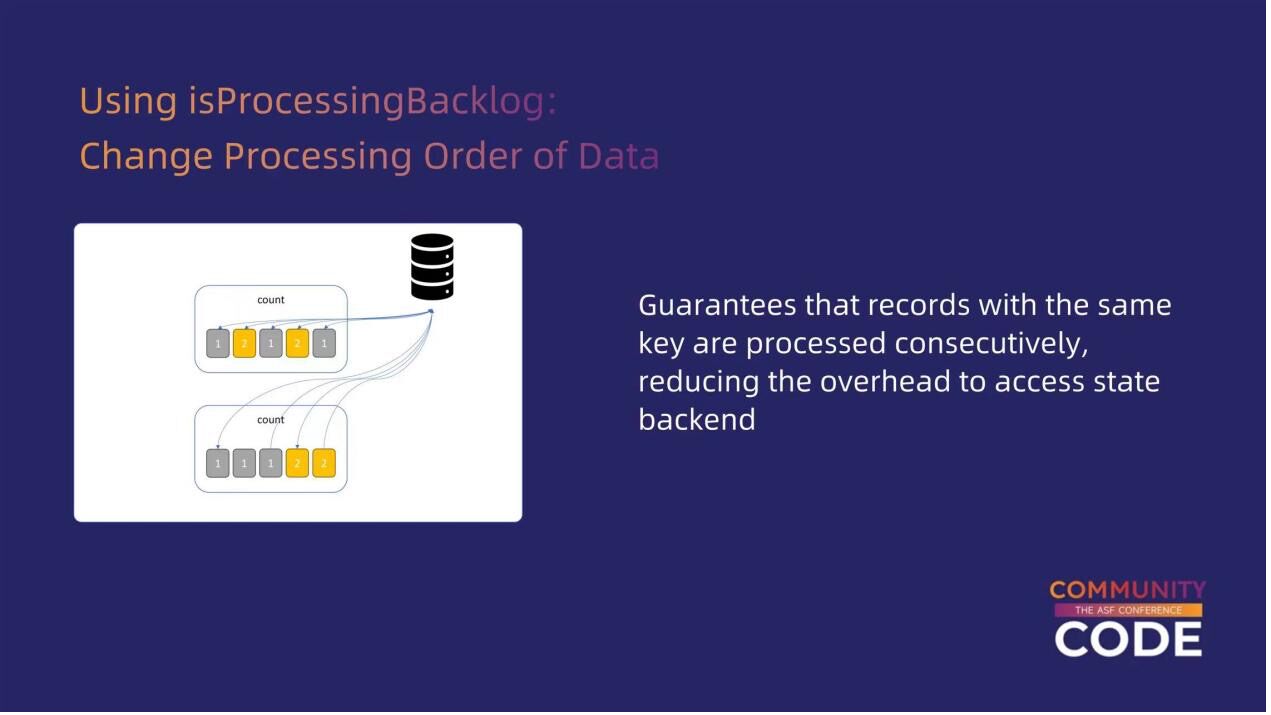

Another optimization based on isProcessingBacklog is sorting the input data. As mentioned earlier, batch jobs have a key advantage over stream jobs: they can consume all records of the same key consecutively. An operator then only needs to keep the state of the current key locally, which avoids the overhead of random access to a key-value store. Inspired by this, we apply the same optimization to stream jobs.

Specifically, when isProcessingBacklog is true, downstream operators temporarily pause data consumption and sort the backlog data from upstream. Once sorting is complete, the operator resumes consumption and processes records with the same key consecutively. This improves the job's overall throughput by reducing random access to the state backend, without significantly increasing data latency.
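The difference between the two access patterns can be sketched with a per-key sum. The function names are hypothetical. A Python dict stands in for the key-value state backend, so the sketch shows the access pattern rather than the actual cost of state backend lookups. In a real stream job, each per-key result would still need to be merged with any state already stored for that key.

```python
from itertools import groupby
from operator import itemgetter
from typing import Dict, Iterable, List, Tuple

Record = Tuple[str, int]  # (key, value)


def sum_per_key_random_access(records: Iterable[Record]) -> Dict[str, int]:
    """Streaming style: every record does a lookup and a write in key-value state."""
    state: Dict[str, int] = {}
    for key, value in records:
        state[key] = state.get(key, 0) + value  # one random access per record
    return state


def sum_per_key_sorted(backlog: List[Record]) -> List[Tuple[str, int]]:
    """Batch style: sort the buffered backlog by key, then keep state for one key at a time."""
    results: List[Tuple[str, int]] = []
    for key, group in groupby(sorted(backlog, key=itemgetter(0)), key=itemgetter(0)):
        current = 0                        # the only live state: the current key's sum
        for _, value in group:
            current += value
        results.append((key, current))     # emit once this key's records are exhausted
    return results
```

For the backlog `[("b", 1), ("a", 2), ("b", 3), ("a", 4)]`, both functions compute `a = 6` and `b = 4`. The sorted version touches the state of each key once instead of once per record.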

Regarding isInsertOnly, the main optimizations so far are in Paimon Sink and Hologres Sink.

Paimon Sink uses isInsertOnly to optimize its changelog generation. In Paimon, changelog files generally represent the original input data of the sink operator. Data files hold the result of deduplicating and updating the existing data with that input. When isInsertOnly is true, the changelog files and data files have identical contents, because no deduplication or update is needed when all records have distinct keys. Paimon Sink therefore does not need to format and serialize the records separately for changelog files and data files. It only needs to write one data file and copy it as the changelog file, which significantly reduces CPU overhead.
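The branch Paimon Sink takes can be sketched as follows. This is a simplified model, not Paimon's writer. CSV text stands in for the real file format, and the file names and the `write_files` function are hypothetical. The non-insert-only branch models deduplication only as keeping the last value per key.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Dict, Iterable, List, Tuple

Row = Tuple[str, str]  # (primary key, value)


def serialize(rows: Iterable[Row]) -> str:
    # Stand-in for formatting and serializing rows into a real file format.
    return "".join(f"{key},{value}\n" for key, value in rows)


def write_files(rows: List[Row], out_dir: Path, insert_only: bool) -> Tuple[Path, Path]:
    """Write a hypothetical data file and changelog file for one batch of input rows."""
    data_file = out_dir / "data-0.csv"
    changelog_file = out_dir / "changelog-0.csv"
    if insert_only:
        # Only inserts with distinct keys: the data file equals the changelog,
        # so serialize once (sorted by key, like a data file) and copy the bytes.
        data_file.write_text(serialize(sorted(rows)))
        shutil.copyfile(data_file, changelog_file)
    else:
        # General case: the changelog keeps the raw input, while the data file
        # keeps only the latest value per key (a simplified deduplication).
        changelog_file.write_text(serialize(rows))
        latest: Dict[str, str] = {}
        for key, value in rows:
            latest[key] = value
        data_file.write_text(serialize(sorted(latest.items())))
    return data_file, changelog_file


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data, changelog = write_files([("k2", "b"), ("k1", "a")], Path(tmp), insert_only=True)
        print(data.read_text() == changelog.read_text())  # True
```

In the insert-only branch the rows are serialized once and the second file is a plain byte copy. This is where the CPU saving described above comes from.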

Similar optimizations apply to Hologres Sink. When isInsertOnly is true, Hologres Sink can use batch inserts and skip steps such as write-ahead logging. When writing a record, the job normally queries the database for that primary key to decide whether to update existing data or insert new data. With isInsertOnly, this query is unnecessary, because the isInsertOnly semantics guarantee that primary keys are never duplicated. Skipping these queries and the update overhead improves throughput.
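The difference in the write path can be sketched with Python's built-in `sqlite3` module standing in for Hologres, which is PostgreSQL-compatible but is not SQLite. The table `t` and the function names are hypothetical. The sketch does not model skipping write-ahead logging; it models only skipping the per-record existence query.

```python
import sqlite3
from typing import List, Tuple

Row = Tuple[int, str]  # (primary key, value)


def write_upsert(conn: sqlite3.Connection, rows: List[Row]) -> None:
    """General path: query each key first, then update or insert."""
    for key, value in rows:
        exists = conn.execute("SELECT 1 FROM t WHERE id = ?", (key,)).fetchone()
        if exists:
            conn.execute("UPDATE t SET v = ? WHERE id = ?", (value, key))
        else:
            conn.execute("INSERT INTO t (id, v) VALUES (?, ?)", (key, value))
    conn.commit()


def write_insert_only(conn: sqlite3.Connection, rows: List[Row]) -> None:
    """isInsertOnly path: keys are new and distinct, so insert the whole batch blindly."""
    conn.executemany("INSERT INTO t (id, v) VALUES (?, ?)", rows)
    conn.commit()


def write(conn: sqlite3.Connection, rows: List[Row], insert_only: bool) -> None:
    # The sink picks its write path from the isInsertOnly signal.
    if insert_only:
        write_insert_only(conn, rows)
    else:
        write_upsert(conn, rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE t (id INTEGER PRIMARY KEY, v TEXT)")
    write(conn, [(1, "a"), (2, "b")], insert_only=True)   # full stage
    write(conn, [(1, "c")], insert_only=False)            # incremental stage
    print(conn.execute("SELECT id, v FROM t ORDER BY id").fetchall())  # [(1, 'c'), (2, 'b')]
```

The insert-only path relies on the isInsertOnly guarantee. If a duplicate key did slip through, the blind `INSERT` would fail with a primary key violation (`sqlite3.IntegrityError` here) rather than silently updating the row.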

Finally, here is the progress of the optimizations above and some future plans for mixing stream and batch processing.

First, regarding isProcessingBacklog, the community has completed three pieces of work. It generates the isProcessingBacklog signal based on the source's stage, passes this signal downstream to non-source operators, and uses it to adjust Flink's checkpoint interval.

The other functionalities mentioned earlier are sorting backlog data to reduce state overhead and determining isProcessingBacklog from watermark lag. These have been proposed and discussed in the community but have not yet been finalized. Each entry in the slide is accompanied by its Flink Improvement Proposal (FLIP) number, which interested readers can refer to for detailed designs and discussions.

Regarding isInsertOnly, the functionalities discussed earlier have been completed in the commercial version of Flink on Alibaba Cloud. These include collecting the isInsertOnly signal from Change Data Capture (CDC) sources and using it to optimize Paimon Sink and Hologres Sink. They are expected to be released in the next version.

In addition, there are some semantic conflicts between the isInsertOnly signal and certain existing mechanisms in Apache Flink. These conflicts are expected to be resolved once Flink 2.0 supports the Generalized Watermark mechanism. For now, we have therefore implemented these optimizations in the commercial version on Alibaba Cloud. Once Flink 2.0 provides the necessary infrastructure, they will be contributed to the Flink community.

In the future, we will further advance the following areas of work:

These are some of the future optimization plans. We welcome everyone to join Alibaba Cloud's open-source big data team to collaboratively drive technological advancement and innovation.
