This article is based on a live talk by Jia Jixin (Gin), Solution Architect at Alibaba Cloud Intelligence.

The article introduces two applications of real-time big data processing based on Flink. Both cases were customized to resemble real-world scenarios so that they better reflect typical use cases and the value of these applications.

The first is the analysis of real-time API logs for applications and services. The second analyzes vehicle engines using simulated IoT telemetry data and real-time exception detection to enable predictive maintenance.

This requirement is relatively common. In this scenario, we built an API for vehicle privacy protection. Backed by a deep learning model, the API applies privacy protection processing to vehicle photos uploaded by users.

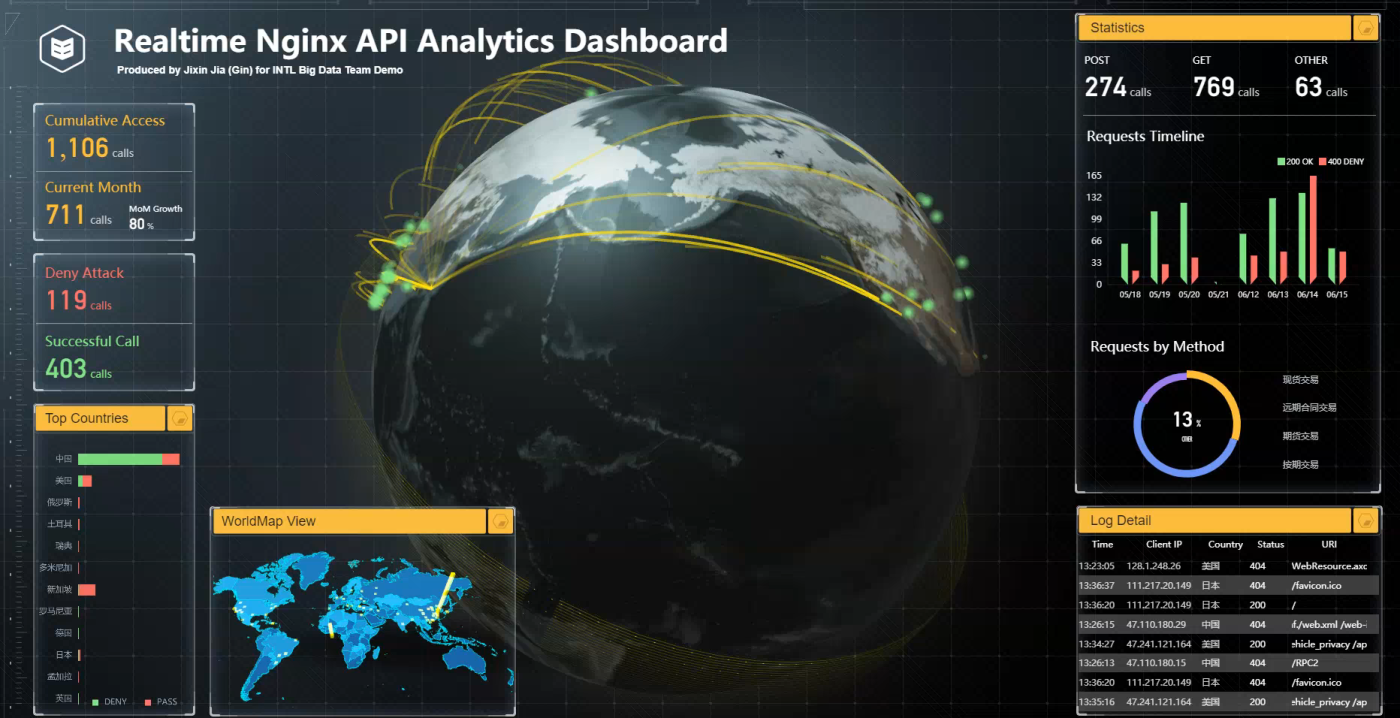

The model is wrapped in an API and served from ECS instances on Alibaba Cloud's public cloud to users worldwide. The first task is to analyze access counts, feedback, user locations, and access patterns in order to determine whether a user is attacking the API or using it normally.

To do this, we first need the ability to collect, in real time, massive volumes of application logs scattered across servers. Beyond collection, the logs must also be processed in real time. Processing includes dimension table lookups and window aggregations, which are common stream computing operations. The results are then placed in a high-throughput, low-latency store so that downstream analysis systems can access the data in real time.
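The two operations named above, a dimension table lookup and a window aggregation, can be sketched in plain Python. This is only an illustration of the idea, not the Flink job from the demo: the `IP_TO_COUNTRY` table, the 60-second window size, and the event layout are all assumptions.

```python
from collections import Counter

# Hypothetical dimension table: maps a client IP to a country code.
IP_TO_COUNTRY = {
    "203.0.113.7": "SG",
    "198.51.100.23": "US",
}

WINDOW_SECONDS = 60  # assumed tumbling-window size


def aggregate(events, window_seconds=WINDOW_SECONDS):
    """Count requests per (window start, country).

    `events` is an iterable of (epoch_seconds, client_ip) tuples.
    """
    counts = Counter()
    for ts, ip in events:
        window_start = ts - ts % window_seconds     # align to the window boundary
        country = IP_TO_COUNTRY.get(ip, "unknown")  # dimension table lookup
        counts[(window_start, country)] += 1
    return dict(counts)


events = [
    (0, "203.0.113.7"),
    (15, "203.0.113.7"),
    (59, "198.51.100.23"),
    (60, "203.0.113.7"),
    (75, "192.0.2.1"),
]
result = aggregate(events)
```

A stream engine does the same thing incrementally and emits each window when it closes, rather than returning everything at the end.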

The procedure is not complicated, but it represents a very important capability: with Flink's real-time computing, business decision-makers can make data-driven decisions within seconds.

Let's take a look at how this demo is implemented. There are several key points in this architecture:

First, at the top right is the API environment, built with Flask and Python together with the mainstream NGINX and Gunicorn. The API is packaged as a container image and deployed to Alibaba Cloud ECS instances. A Layer 7 SLB and API Gateway sit in front of it to support high concurrency and low latency and to help users call the API.

In this demo, we also provide a web app, so users can access the API through a graphical interface as well as through code. When frontend users call the API, SLS collects the API application logs from the API servers in real time. After simple processing, the logs are delivered to Realtime Compute for Apache Flink.

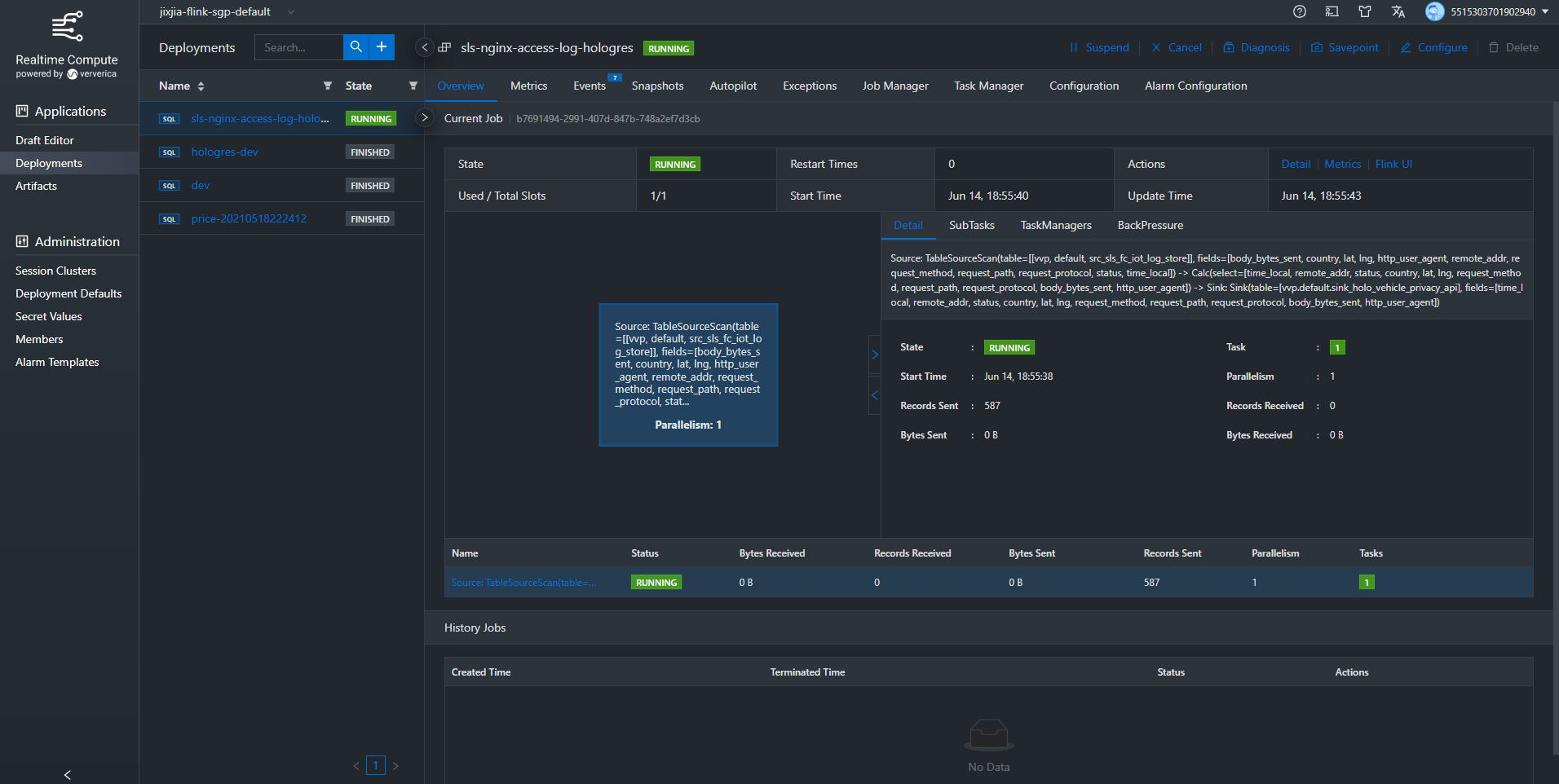

Realtime Compute for Apache Flink has a useful feature: it can subscribe to the logs delivered from SLS and use stream computing to perform window aggregations and dimension table lookups and joins. Another advantage is that complex business logic can be written in ordinary SQL statements.

After the data is processed, Realtime Compute for Apache Flink writes the stream data to Hologres as a structured table. Hologres serves as a storage medium of the data and an OLAP-like engine that powers the display of downstream BI data. All these form the architecture for real-time collection and analysis of big data logs.

Let's look at how each component works specifically:

Users can upload photos of their vehicles through the web app, and the API will blur the photos. In the video, we can see the background of this photo is blurred after being processed by the API. The license plate part and the privacy information part are also blocked.

When users access this API, SLS collects logs in real-time in the background.

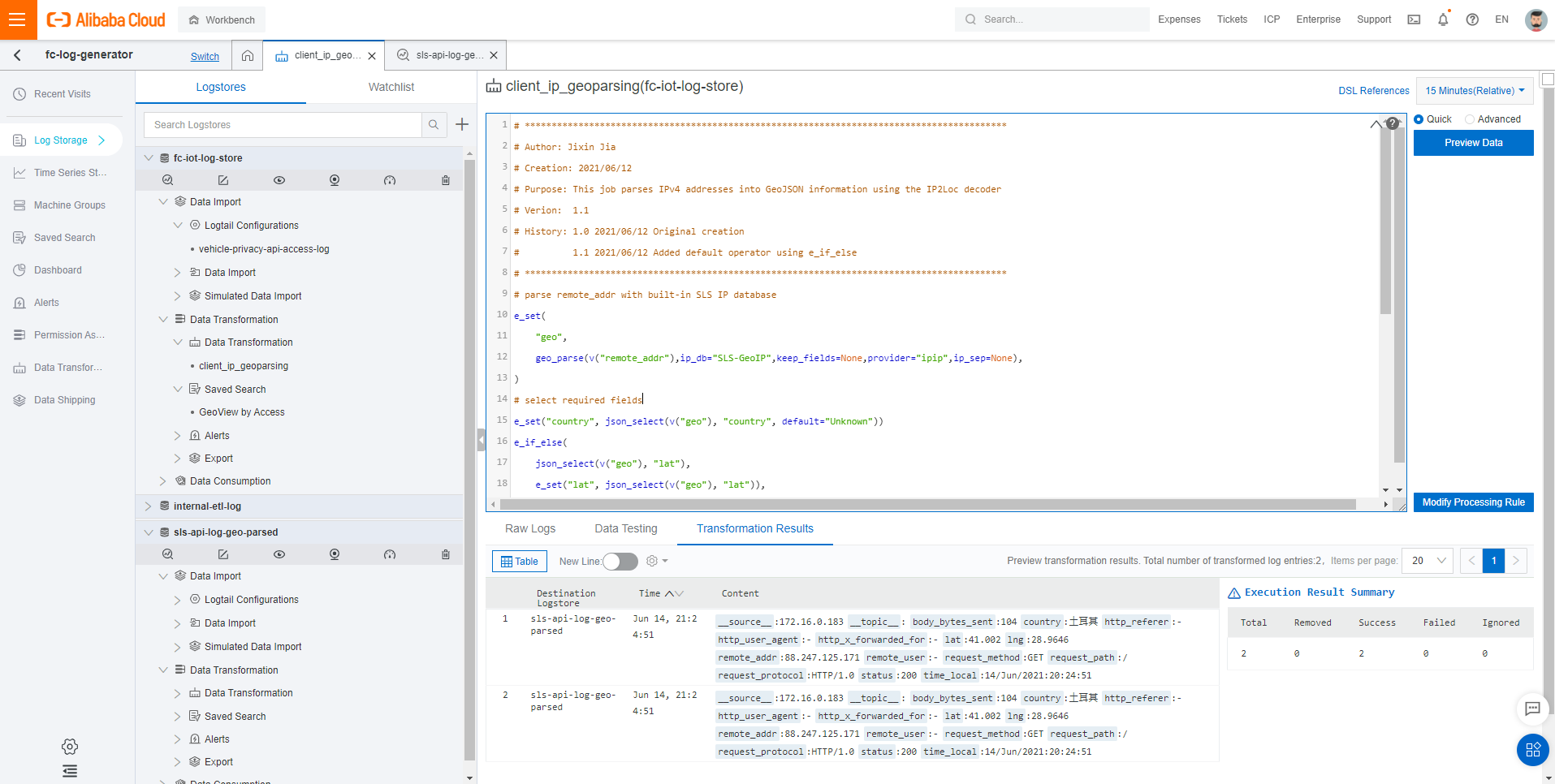

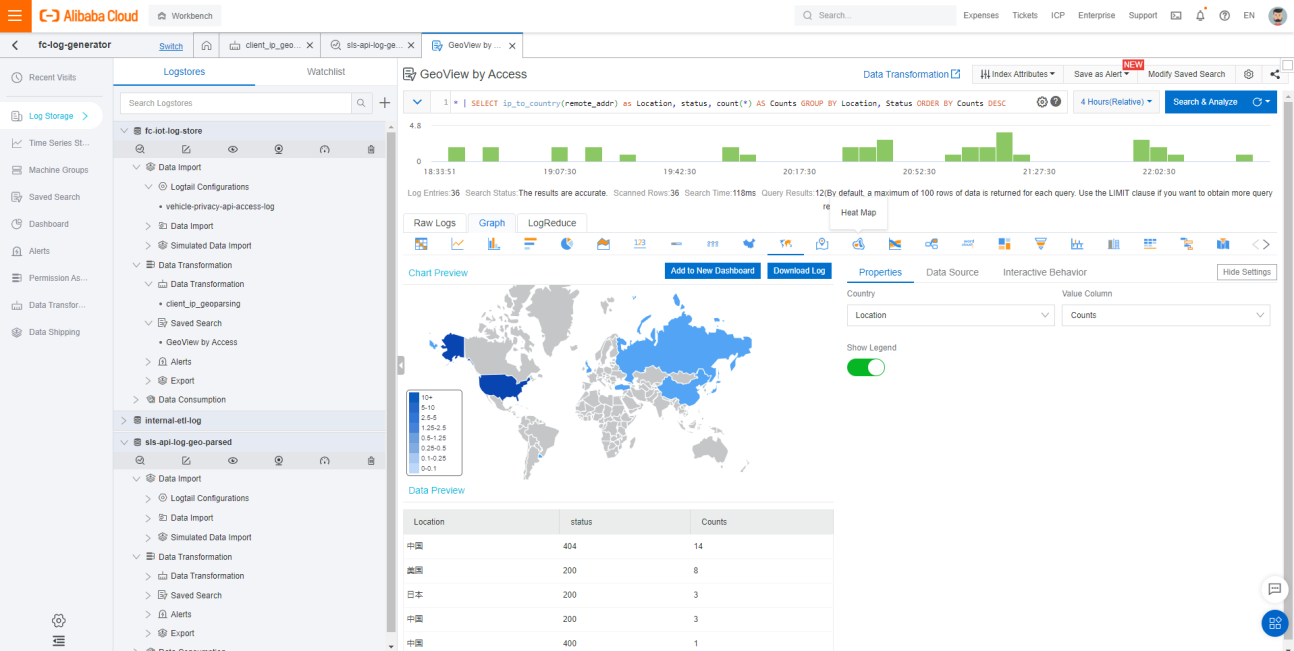

After log collection, the data conversion function of Logtail parses and converts the original logs to a certain extent, for example by resolving IP addresses into geographic information such as country, city, latitude, and longitude. Downstream analysis can then use this information conveniently. Beyond these basic services, SLS also provides powerful graphical data analysis.
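As a rough illustration of this parse-and-enrich step, the sketch below parses one access log line with a regular expression and attaches geographic fields from a lookup table. It is not the SLS conversion syntax. The log layout (an NGINX-style access line), the `/blur` path, and the `GEO_TABLE` contents are assumptions made for the example.

```python
import re

# Assumed NGINX-style access log layout; the real demo format is not given.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) \S+" (?P<status>\d{3}) (?P<size>\d+|-)'
)

# Hypothetical IP-to-location table standing in for a geolocation service.
GEO_TABLE = {
    "203.0.113.7": {
        "country": "SG",
        "city": "Singapore",
        "latitude": 1.35,
        "longitude": 103.82,
    },
}


def parse_and_enrich(line):
    """Return a dict of parsed fields plus geo fields, or None if unparseable."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    record.update(GEO_TABLE.get(record["ip"], {}))  # enrich when the IP is known
    return record


sample = '203.0.113.7 - - [10/Oct/2021:13:55:36 +0800] "POST /blur HTTP/1.1" 200 5120'
record = parse_and_enrich(sample)
```

Returning `None` for lines that do not match keeps malformed input out of the downstream tables instead of raising in the middle of a stream.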

Here we can perform preliminary data analysis or data exploration and check whether the converted logs meet the needs of the downstream business. After the logs are collected and converted, they are delivered to the stream processing center (Realtime Compute for Apache Flink) through LogHub.

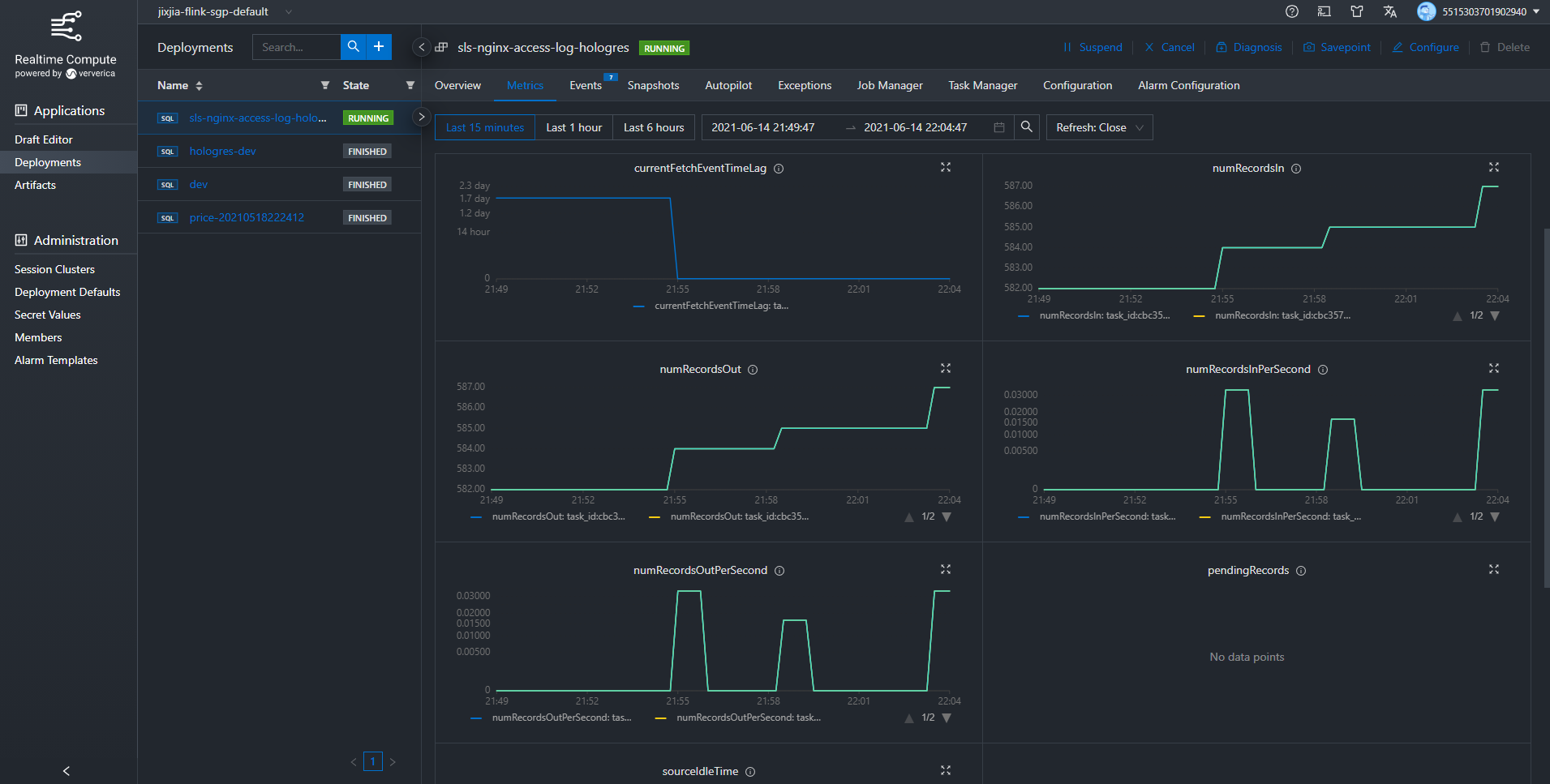

The word "delivery" is not entirely accurate: Realtime Compute for Apache Flink actively subscribes to the processed logs held in the Logstore in LogHub. One strength of Realtime Compute for Apache Flink is that business logic, including conversions and conditional handling, can be written and edited in ordinary SQL statements. Once the SQL is written, clicking Release packages it into a Flink job that is hosted on a Flink cluster. Users can then access the job easily through the console.
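To give a feel for the kind of logic that plain SQL can express, here is a small sketch that runs on Python's built-in `sqlite3` module rather than on Flink. The table names, columns, and sample rows are hypothetical, and Flink SQL has its own syntax for windows and dimension table joins, so treat this as an analogy only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE api_logs (ts INTEGER, ip TEXT, status INTEGER);
    CREATE TABLE ip_dim (ip TEXT PRIMARY KEY, country TEXT);
    INSERT INTO api_logs VALUES
        (0,  '203.0.113.7',   200),
        (30, '203.0.113.7',   500),
        (45, '198.51.100.23', 200),
        (70, '203.0.113.7',   200);
    INSERT INTO ip_dim VALUES
        ('203.0.113.7',   'SG'),
        ('198.51.100.23', 'US');
    """
)

# Join each log row to its dimension row, then count requests and
# server errors per one-minute bucket and country.
QUERY = """
SELECT (l.ts / 60) * 60 AS window_start,
       d.country,
       COUNT(*) AS requests,
       SUM(CASE WHEN l.status >= 500 THEN 1 ELSE 0 END) AS errors
FROM api_logs AS l
JOIN ip_dim AS d ON d.ip = l.ip
GROUP BY window_start, d.country
ORDER BY window_start, d.country
"""
rows = conn.execute(QUERY).fetchall()
```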

How heavily is the current Flink cluster being used? What is the CPU load? Are there exceptions or errors? How is delivery going overall? All of this monitoring is handled by Realtime Compute for Apache Flink, which is a major advantage: users hardly need to worry about O&M.

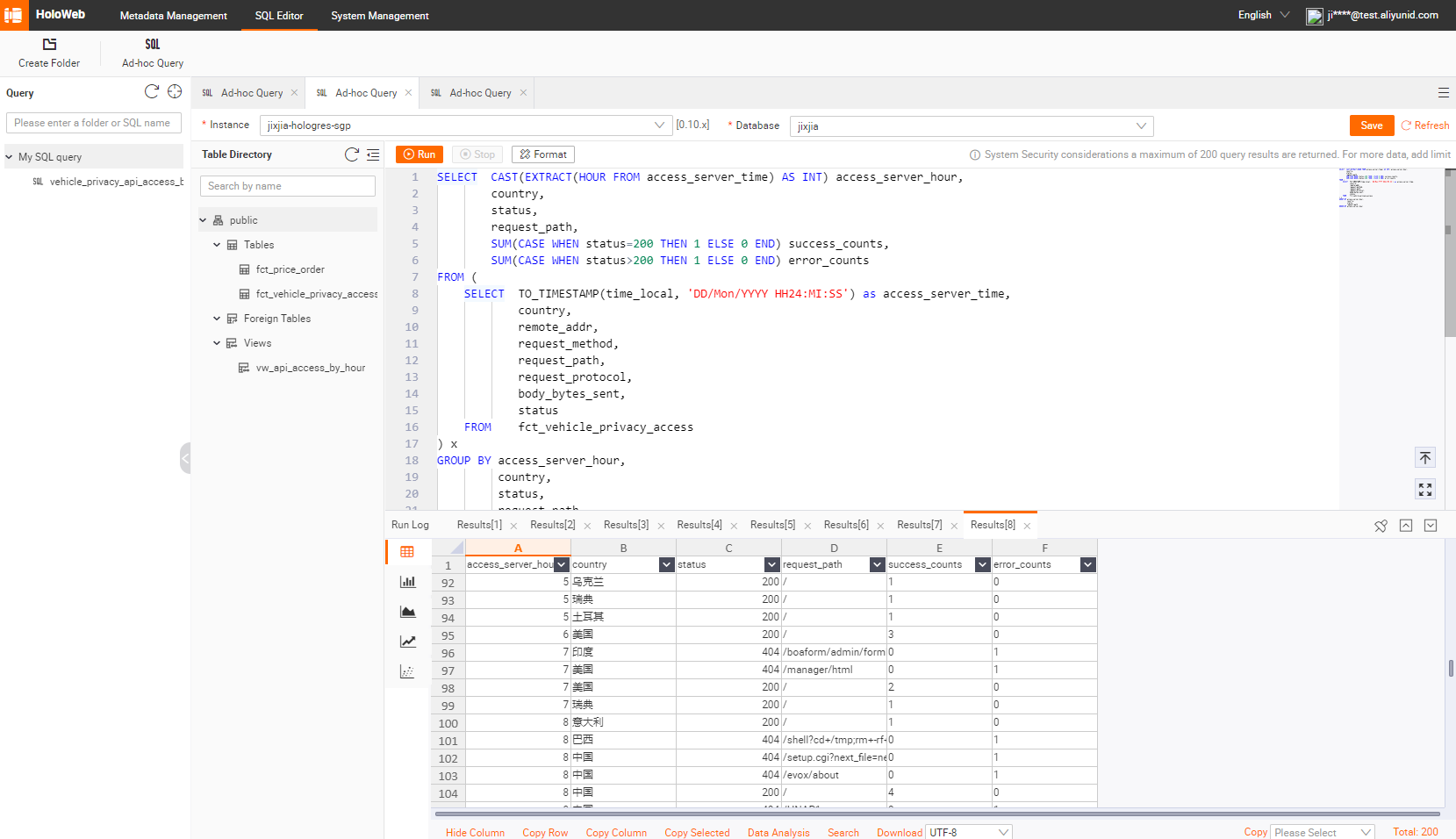

After processing, Realtime Compute for Apache Flink writes the stream data directly into Hologres as structured tables through the provided interface. A distinctive feature of Hologres is that it acts as both an OLTP-style service and an OLAP service.

Specifically, it can be used as an OLTP-style service for fast writes. At the same time, it can run high-concurrency, low-latency queries and analysis on the written data, which is what an OLAP engine is usually expected to do. Because Hologres combines the two capabilities, it is described as HSAP (Hybrid Serving/Analytical Processing).

In this architecture, Hologres mainly serves the processed data to downstream consumers, that is, end users. Business decision-makers can view a dashboard that displays real-time data.

As the API is accessed, this real-time dashboard reflects the latest processed information with a latency of a few seconds, which greatly reduces the delay in viewing the data.

If the traditional batch processing method is used, terabytes of data may be processed each time, taking several hours. If the end-to-end real-time computing solution with Realtime Compute for Apache Flink as the core is adopted, the time consumed can be reduced to a few seconds or less.

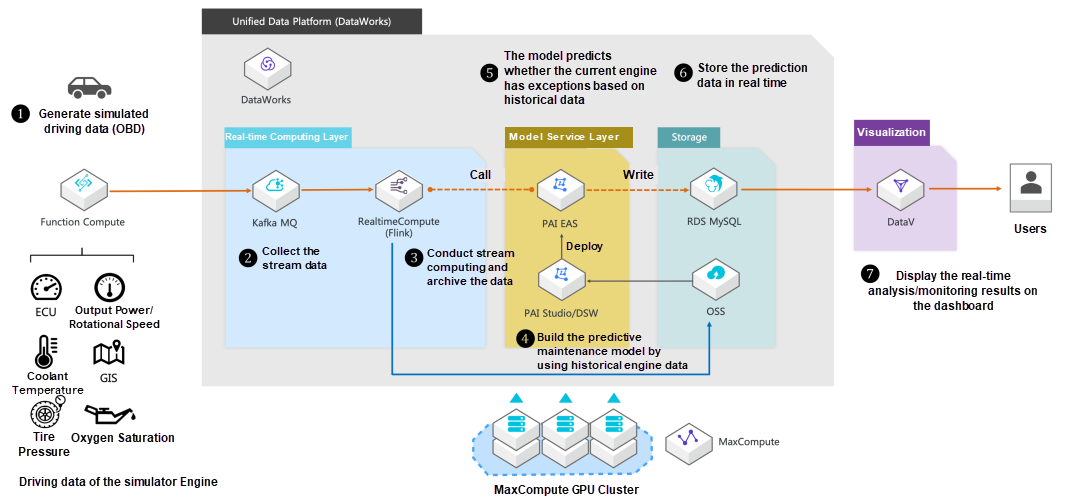

The second business scenario uses simulated IoT telemetry data to analyze whether the engine of a car on the road shows signs of abnormality, so that possible problems can be identified in advance. If left unchecked, a component may break down three months later. This requirement comes up often in practice and is known as predictive maintenance. Predictive maintenance can save customers a lot of money in real-world scenarios because replacing a component before it fails is more cost-effective than repairing it after a problem occurs.

To keep the scenario close to the real world, we started from OBD II, the diagnostic system found in on-board vehicle equipment. It exposes a set of classic engine data, and we took some of these fields for processing and simulation. We wrote a program that simulates data relatively close to what a vehicle engine produces in the real world.

Of course, it is impractical to put a real car on the road for this demo, so the simulation program generates driving data using various statistical methods.

This program delivers the simulated telemetry data of the vehicle engine to Kafka. Then, Realtime Compute for Apache Flink subscribes to and consumes Kafka topics. Next, streaming computing is performed for each topic. One part of the result is archived in OSS as historical data. The other part is delivered to the developed exception detection model as a hot stream data source. The model is deployed on PAI-EAS, which can be called through Realtime Compute for Apache Flink.

The machine learning model judges whether the current engine data shows signs of an exception. The result is then written to a database for consumption by the downstream dashboard. After the data is processed in real time by Realtime Compute for Apache Flink, part of it is also archived to OSS.

The archived data serves as historical data for modeling and for model retraining. Driving characteristics may change from time to time, which is commonly known as data drift. Newly generated historical data can be used to retrain the model, and the retrained model can be deployed on PAI-EAS as a web service so that Flink can call it. The result is a big data solution with a Lambda architecture.
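One simple way to decide when archived data has drifted far enough to justify retraining is to compare a feature's recent mean with its mean in the training data. The sketch below is an assumed heuristic, not the method used in the demo; the function names, the sample temperatures, and the threshold of 2.0 standard deviations are all illustrative choices.

```python
from statistics import mean, stdev


def drift_score(reference, recent):
    """Shift of the recent mean, in units of the reference standard deviation."""
    return abs(mean(recent) - mean(reference)) / stdev(reference)


def needs_retraining(reference, recent, threshold=2.0):
    """Flag drift when the mean has moved more than `threshold` deviations."""
    return drift_score(reference, recent) > threshold


# Hypothetical coolant temperatures (degrees Celsius).
reference = [88.0, 90.0, 92.0, 89.0, 91.0]  # data the model was trained on
stable = [89.0, 91.0, 90.0]                 # recent data, same behaviour
shifted = [97.0, 99.0, 98.0]                # recent data after a change
```

A production check would usually look at several features and at the shape of the distribution, not only the mean.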

First, we need to generate the simulated telemetry data from OBD and deliver it to the cloud for analysis. Function Compute (FC) is used here because it is very convenient. It is a managed service.

We can also copy locally written Python scripts directly to FC for simulated data generation, which is very convenient.

In this demo, FC runs once every minute. In other words, one batch of telemetry data is generated every minute. The data is sent to Kafka once every three seconds to simulate the frequency of data generation in a real environment as closely as possible.
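The timing described here works out to 20 records per one-minute batch (60 / 3). A minimal sketch of such a generator follows. The field names, the Gaussian distributions, and the `fault_rate` parameter are assumptions for illustration; the actual simulation script is not shown in the talk, and the step of sending each record to Kafka is left out.

```python
import random

BATCH_SECONDS = 60  # Function Compute runs once per minute (from the text)
SEND_INTERVAL = 3   # one record every three seconds (from the text)


def generate_batch(vehicle_id, start_ts, rng, fault_rate=0.2):
    """Return one minute of simulated engine readings for one vehicle."""
    records = []
    for offset in range(0, BATCH_SECONDS, SEND_INTERVAL):
        faulty = rng.random() < fault_rate
        # Hypothetical distributions: a faulty engine runs hotter on average.
        coolant_temp = rng.gauss(110.0 if faulty else 90.0, 3.0)
        rpm = rng.gauss(2500.0, 300.0)
        records.append({
            "vehicle_id": vehicle_id,
            "ts": start_ts + offset,
            "rpm": round(rpm, 1),
            "coolant_temp_c": round(coolant_temp, 1),
            "injected_fault": faulty,
        })
    return records


# A seeded generator keeps the simulated batch reproducible.
batch = generate_batch("car-001", start_ts=0, rng=random.Random(42))
```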

Kafka is a commonly used Pub/Sub system for big data. It is very flexible and scalable. On Alibaba Cloud, you can either build a Kafka cluster in EMR or use the managed service, Message Queue for Apache Kafka.

For convenience, this demo uses the managed Kafka service as its Pub/Sub system. The system is only used to store the engine data sent by the vehicles. A production environment could have tens or hundreds of thousands of vehicles. With Kafka, the system can be scaled out conveniently: the overall architecture does not need to change much, whether there are 10 vehicles or 100,000.
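Part of what makes this scaling straightforward is that Kafka spreads keyed messages across the partitions of a topic, so more vehicles mean more keys rather than a different architecture. The sketch below illustrates that idea with `zlib.crc32` as a stand-in hash; Kafka's own default partitioner uses a different hash (murmur2), and the vehicle ID format and the partition count of 12 are assumptions.

```python
import zlib


def partition_for(vehicle_id, num_partitions):
    """Map a vehicle ID to a partition with a stable hash (stand-in for Kafka's)."""
    return zlib.crc32(vehicle_id.encode("utf-8")) % num_partitions


def spread(vehicle_ids, num_partitions):
    """Count how many vehicles land on each partition."""
    counts = [0] * num_partitions
    for vehicle_id in vehicle_ids:
        counts[partition_for(vehicle_id, num_partitions)] += 1
    return counts


fleet = [f"car-{i:06d}" for i in range(100_000)]  # hypothetical vehicle IDs
counts = spread(fleet, num_partitions=12)
```

Because the hash is stable, every message from one vehicle goes to the same partition, which preserves per-vehicle ordering for the consumer.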

Realtime Compute for Apache Flink is again used for real-time computing, but this demo runs on a Blink exclusive cluster. This is a semi-managed real-time computing platform, yet in use it is almost the same as the fully managed mode in the previous scenario.

When we built this demo, fully managed Flink was not yet available in some regions, so we chose the Blink exclusive cluster service. It is also a Realtime Compute service and is used in the same way as fully managed Flink. Developers only need to focus on writing scripts and handling the business logic. After they click Release, Flink manages the rest. Developers only need to monitor the job for exceptions and make adjustments.

It is worth mentioning that PAI-EAS, the model calling interface, is embedded into Flink. When Flink processes stream data in real-time, it can also send part of the data to PAI for model inference. The results are merged with the real-time stream data and written into the downstream storage system, which reflects the extensibility and scalability of Flink, the computing platform.
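The pattern described here, scoring each record with a model and merging the verdict back into the stream, can be sketched as follows. The `fake_model_service` function is a local stand-in for the remote PAI-EAS call, whose actual interface is not shown in the talk; the feature names and the 0.5 threshold are likewise assumptions.

```python
def fake_model_service(features):
    """Stand-in for the remote model; returns an anomaly probability."""
    return 0.9 if features["coolant_temp_c"] > 105.0 else 0.1


def enrich_stream(records, predict, threshold=0.5):
    """Yield each record merged with the model's verdict."""
    for record in records:
        features = {
            "rpm": record["rpm"],
            "coolant_temp_c": record["coolant_temp_c"],
        }
        probability = predict(features)
        # Build a new dict so the incoming record is left untouched.
        yield {
            **record,
            "anomaly_score": probability,
            "is_anomaly": probability >= threshold,
        }


stream = [
    {"vehicle_id": "car-001", "rpm": 2400.0, "coolant_temp_c": 91.0},
    {"vehicle_id": "car-002", "rpm": 2600.0, "coolant_temp_c": 112.5},
]
enriched = list(enrich_stream(stream, fake_model_service))
```

Passing the scoring function in as `predict` keeps the stream logic independent of where the model runs, so the stand-in can be swapped for a real client.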

This section shows how to use a graphical learning platform to design and develop a very simple binary classification model.

This binary classification model learns from the historical data of previous engines which features indicate a problem and which values are relatively normal. It then provides a basis for judging newly generated engine data, which helps business personnel spot engine problems in advance.
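To make the idea of learning a decision rule from labeled history concrete, here is a deliberately tiny binary classifier: a one-feature threshold rule (a decision stump). It is a swapped-in toy, not the model built in PAI, and the sample temperatures and labels are invented for the example.

```python
def train_stump(samples):
    """Learn a threshold on one feature from (value, label) pairs.

    label is 1 for a faulty engine and 0 for a normal one. The learned
    rule is "predict faulty when value > threshold".
    """
    values = sorted({value for value, _ in samples})
    # Candidate thresholds sit midway between neighbouring observed values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best_threshold, best_correct = None, -1
    for threshold in candidates:
        correct = sum(
            (value > threshold) == bool(label) for value, label in samples
        )
        if correct > best_correct:
            best_threshold, best_correct = threshold, correct
    return best_threshold


def predict(threshold, value):
    """Classify one new reading with the learned threshold."""
    return 1 if value > threshold else 0


# Hypothetical history: (coolant temperature in Celsius, fault label).
history = [(88.0, 0), (91.0, 0), (93.0, 0), (108.0, 1), (111.0, 1), (115.0, 1)]
threshold = train_stump(history)
```

With this history the learned threshold falls midway between the hottest normal reading and the coolest faulty one.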

The model learns the relevant features and patterns of the historical data. The studio used to develop it works entirely by drag and drop, with almost no code written, so a model can be built quickly with a few clicks. After the model is developed, PAI can package it as a REST API web service and deploy it on the PAI platform for users to call. After deployment, the model service is verified with test calls.

When the model is deployed, Flink can call it to determine whether there are any exceptions. After real-time processing, the stream data is written to a MySQL database.

This database serves as the data source for the downstream real-time dashboard. Business personnel can therefore see, in real time or every few seconds, whether there is a problem with a car currently on the road.

Click the link to open the real-time dashboard.

The DataV dashboard refreshes every five seconds by default. On each refresh, it displays the latest telemetry data from the database, including the abnormal data.
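A dashboard refresh of this kind typically boils down to a query for the most recent row per vehicle. The sketch below uses Python's built-in `sqlite3` module as a stand-in for the MySQL database in the demo; the `engine_status` table, its columns, and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE engine_status ("
    "vehicle_id TEXT, ts INTEGER, coolant_temp_c REAL, is_anomaly INTEGER)"
)
conn.executemany(
    "INSERT INTO engine_status VALUES (?, ?, ?, ?)",
    [
        ("car-001", 0, 90.5, 0),
        ("car-001", 3, 111.2, 1),
        ("car-002", 0, 89.8, 0),
        ("car-002", 3, 91.0, 0),
    ],
)


def latest_per_vehicle(connection):
    """Return the newest (vehicle_id, ts, is_anomaly) row for each vehicle."""
    return connection.execute(
        """
        SELECT s.vehicle_id, s.ts, s.is_anomaly
        FROM engine_status AS s
        JOIN (SELECT vehicle_id, MAX(ts) AS ts
              FROM engine_status
              GROUP BY vehicle_id) AS m
          ON m.vehicle_id = s.vehicle_id AND m.ts = s.ts
        ORDER BY s.vehicle_id
        """
    ).fetchall()


snapshot = latest_per_vehicle(conn)
```

A dashboard would rerun this query on each refresh and color each vehicle by its `is_anomaly` flag.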

Red points mark data judged to be problematic, while blue points are normal data. The criteria are controlled entirely by the Function Compute function that generates the simulated data. Data that suggests an engine problem is deliberately injected by the Function Compute logic, so abnormal data makes up a relatively large share in this demo.

These are the two demos included in this article. If you are interested, you can use Realtime Compute for Apache Flink to build your applications.
