By Yingjie Cao (Kevin) & Daisy Tsang

Part 1 of this series explained the motivation behind introducing the sort-based blocking shuffle, presented benchmark results, and provided guidelines on how to use this new feature.

Like the sort-merge shuffle implementations in other distributed data processing frameworks, Flink's sort-based shuffle consists of several important stages: collecting data in memory, sorting the collected data in memory, spilling the sorted data to files, and reading the shuffle data from these spilled files. However, Flink's implementation differs in a few core ways: a multi-region data file structure, the removal of file merging, and IO scheduling.

Part 2 of this 2-part series will give you insight into some core design considerations and implementation details of the sort-based blocking shuffle in Flink and list several ideas for future improvements.

There are several core objectives that the new sort-based blocking shuffle in Flink aims to achieve:

As discussed in Part 1, the hash-based blocking shuffle produces too many small files for large-scale batch jobs. Producing fewer files improves both stability and performance. The sort-merge approach has been widely adopted to solve this problem. It first writes data to an in-memory buffer, and whenever the buffer is full it sorts the data and spills it into a file. The number of output files therefore becomes (total data size) / (in-memory buffer size). Merging the produced files reduces the file count further, and the resulting larger data blocks allow better sequential reads.

Flink's sort-based blocking shuffle adopts similar logic. A core difference is that every spill appends data to the same file. As a result, only one data file is produced for each output, which means even fewer files.
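The file-count arithmetic can be made concrete with a small Java sketch. The class name `SpillFileMath` is hypothetical, and the model ignores the index file that accompanies Flink's data file. It shows one thing: the spills that a classic sort-merge writer turns into separate files become, in Flink, regions inside a single data file.

```java
/**
 * Illustrative arithmetic only (hypothetical helper, not a Flink API):
 * spill files of a classic sort-merge writer before merging, versus
 * Flink appending every spill as a region of one data file.
 */
final class SpillFileMath {

    /** Files a classic sort-merge writer creates before any merge pass. */
    static long classicSpillFiles(long totalBytes, long sortBufferBytes) {
        if (totalBytes <= 0 || sortBufferBytes <= 0) {
            throw new IllegalArgumentException("sizes must be positive");
        }
        // Ceiling division: a partially filled last buffer is still spilled once.
        return (totalBytes + sortBufferBytes - 1) / sortBufferBytes;
    }

    /** In Flink, the same number of spills becomes the number of data regions. */
    static long flinkDataRegions(long totalBytes, long sortBufferBytes) {
        return classicSpillFiles(totalBytes, sortBufferBytes);
    }

    /** All regions are appended to the same file, so one data file per output. */
    static long flinkDataFiles() {
        return 1;
    }
}
```

For a hypothetical 100 GiB output and a 64 MiB sort buffer, `classicSpillFiles` returns 1600. In Flink the same workload yields 1600 regions in one data file.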

The hash-based implementation opens all partition files when writing and reading data, which consumes resources such as file descriptors and native memory. Exhausting file descriptors leads to stability issues such as "too many open files" errors.

Flink's sort-based blocking shuffle implementation can reduce the number of concurrently opened files significantly by always writing/reading only one file per data result partition and sharing the same opened file channel among all the concurrent data reads from the downstream consumer tasks.
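File sharing of this kind can be illustrated with standard Java NIO. This is a minimal sketch and not Flink's reader; the class `SharedPartitionFile` is hypothetical. It relies on `FileChannel.read(ByteBuffer, long)`, a positional read that does not change the channel's own position. Because of that, many consumer reads can share one open channel and therefore one file descriptor.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/**
 * Hypothetical sketch: one FileChannel per result partition, shared by all
 * downstream readers. Positional reads leave the channel position untouched,
 * so concurrent readers do not interfere with each other.
 */
final class SharedPartitionFile implements AutoCloseable {
    private final FileChannel channel;

    SharedPartitionFile(Path dataFile) throws IOException {
        this.channel = FileChannel.open(dataFile, StandardOpenOption.READ);
    }

    /** Reads exactly {@code length} bytes starting at file offset {@code offset}. */
    byte[] readBlock(long offset, int length) throws IOException {
        ByteBuffer dst = ByteBuffer.allocate(length);
        long position = offset;
        while (dst.hasRemaining()) {
            int n = channel.read(dst, position); // does not move the shared channel position
            if (n < 0) {
                throw new IOException("unexpected end of file at offset " + position);
            }
            position += n;
        }
        return dst.array();
    }

    @Override
    public void close() throws IOException {
        channel.close();
    }
}
```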

Although the hash-based implementation writes and reads each output file sequentially, the large number of files being written and read concurrently turns this into random IO at the disk level. Reducing the number of files therefore also yields more sequential IO.

In addition to producing larger files, Flink implements some other optimizations. In the data writing phase, it achieves more sequential write IO by merging small output data into larger batches and writing them through the writev system call. In the data reading phase, it achieves more sequential read IO through IO scheduling. In short, Flink always tries to read data in file offset order, which maximizes sequential reads. See the IO scheduling description below for more information.
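Batched writing of this kind can be sketched in Java with a gathering write. `FileChannel` implements `GatheringByteChannel`, and on Linux the JDK typically serves `write(ByteBuffer[])` with `writev`. The class and method names below are hypothetical. The point is that many small buffers reach the file in as few system calls as possible, instead of one call per buffer.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.List;

/** Hypothetical sketch: append many small buffers to a file via gathering writes. */
final class BatchedSpillWriter {

    /** Appends all buffers to the file in order and returns the number of bytes written. */
    static long appendAll(Path file, List<ByteBuffer> buffers) throws IOException {
        ByteBuffer[] batch = buffers.toArray(new ByteBuffer[0]);
        long expected = 0;
        for (ByteBuffer b : batch) {
            expected += b.remaining();
        }
        try (FileChannel channel = FileChannel.open(file,
                StandardOpenOption.CREATE, StandardOpenOption.WRITE, StandardOpenOption.APPEND)) {
            long written = 0;
            // A single gathering write may be partial, so loop until every buffer is drained.
            while (written < expected) {
                written += channel.write(batch);
            }
            return written;
        }
    }
}
```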

The sort-merge approach can reduce the number of files and produce larger data blocks by merging the spilled data files. One downside of this approach is that it writes and reads the same data multiple times because of the data merging. Theoretically, it may also take up more storage space than the total size of shuffle data.

Flink's implementation eliminates the data merging phase by spilling all data of one data result partition into a single file. As a result, both the total amount of disk IO and the storage space are reduced. Without merging, the data blocks are not combined into larger ones, but Flink can still achieve good sequential reads and high disk IO throughput through the IO scheduling technique. See the benchmark results in Part 1 for more information.
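The IO saving can be estimated with simple arithmetic. The sketch below assumes, for illustration only, that every merge pass reads all spilled data once and writes it back once; real merge strategies vary. Under that assumption, a sort-merge shuffle with one merge pass moves four times the data size through the disk. A shuffle without merging moves only twice the data size. The class name `ShuffleIoModel` is hypothetical.

```java
/**
 * Back-of-the-envelope disk IO model (hypothetical, an assumption rather than
 * a measurement): each merge pass reads all spilled data and writes it back once.
 */
final class ShuffleIoModel {

    /** Total bytes read and written by a sort-merge shuffle with the given merge passes. */
    static long sortMergeIoBytes(long dataBytes, int mergePasses) {
        if (dataBytes < 0 || mergePasses < 0) {
            throw new IllegalArgumentException("arguments must be non-negative");
        }
        long initialSpillWrite = dataBytes;
        long mergeTraffic = 2L * dataBytes * mergePasses; // read + rewrite per pass
        long consumerRead = dataBytes;
        return initialSpillWrite + mergeTraffic + consumerRead;
    }

    /** Without a merge phase the data is written once and read once. */
    static long noMergeIoBytes(long dataBytes) {
        return sortMergeIoBytes(dataBytes, 0);
    }
}
```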

Similar to the sort-merge implementations in other distributed data processing systems, Flink's implementation uses a fixed-size (configurable) in-memory buffer for data sorting. The buffer does not need to grow when the task parallelism changes, although increasing its size may lead to better performance for large-scale batch jobs.

Note: This only decouples memory consumption from parallelism on the data producer side. On the data consumer side, there is a separate improvement that works for both streaming and batch jobs (see FLINK-16428).

Here are several core components and algorithms implemented in Flink's sort-based blocking shuffle:

In the sort-spill phase, data records are first serialized into the in-memory sort buffer. When the sort buffer is full or all output has been emitted, the data in the sort buffer is copied and spilled into the target data file in a specific order. The following is the sort buffer interface in Flink:

public interface SortBuffer {

/** Appends data of the specified channel to this SortBuffer. */

boolean append(ByteBuffer source, int targetChannel, Buffer.DataType dataType) throws IOException;

/** Copies data in this SortBuffer to the target MemorySegment. */

BufferWithChannel copyIntoSegment(MemorySegment target);

long numRecords();

long numBytes();

boolean hasRemaining();

void finish();

boolean isFinished();

void release();

boolean isReleased();

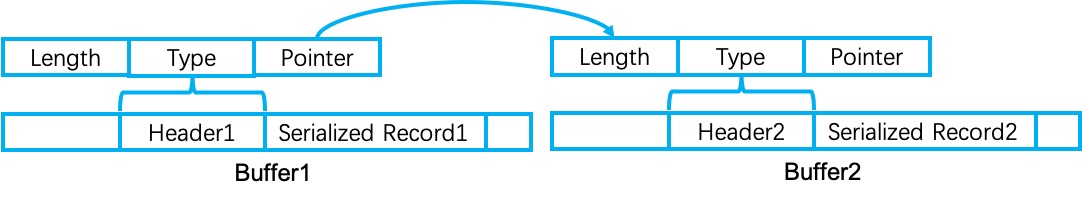

}Currently, Flink does not need to sort records by key on the data producer side, so the default implementation of sort buffer only sorts data by subpartition index, which is achieved by binary bucket sort. More specifically, each data record will be serialized and attached to a 16 bytes binary header. Among the 16 bytes, 4 bytes are for the record length, 4 bytes are for the data type (event or data buffer), and 8 bytes are for pointers to the next records belonging to the same subpartition to be consumed by the same downstream data consumer. When reading data from the sort buffer, all records of the same subpartition will be copied one by one, following the pointer in the record header. This guarantees that the order of record reading/spilling for each subpartition is the same order as when the record is emitted by the producer task. The following picture shows the internal structure of the in-memory binary sort buffer:

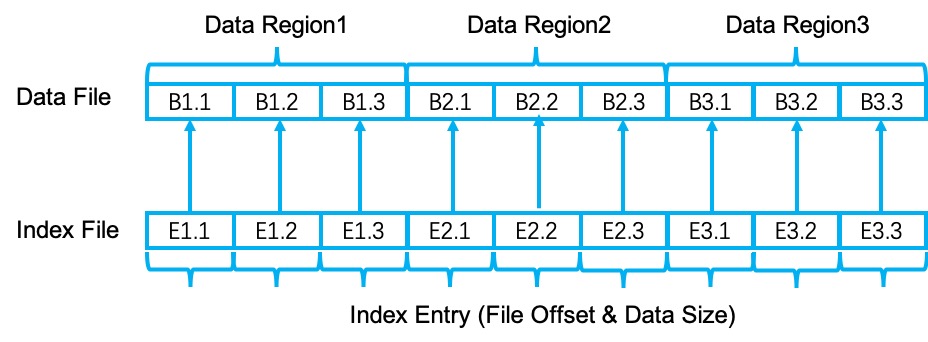
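The bucket sort can be sketched in a few dozen lines of Java. `BucketSortBuffer` is a hypothetical, simplified stand-in for Flink's default sort buffer. It uses one heap `ByteBuffer` instead of a list of `MemorySegment`s, and it returns records as byte arrays instead of copying them into segments. It does follow the header layout described above (4-byte length, 4-byte data type, 8-byte next-record pointer). It also keeps a per-subpartition linked list, so records are read back in emission order.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/**
 * Hypothetical, simplified binary sort buffer. Each record is stored as
 * [int length][int dataType][long offset of next record in the same channel][payload].
 */
final class BucketSortBuffer {
    static final int HEADER_BYTES = 16;
    private static final long NO_NEXT = -1L;

    private final ByteBuffer memory;
    private final long[] firstRecord; // offset of the first record of each channel
    private final long[] lastRecord;  // offset of the last record of each channel

    BucketSortBuffer(int capacityBytes, int numChannels) {
        this.memory = ByteBuffer.allocate(capacityBytes);
        this.firstRecord = new long[numChannels];
        this.lastRecord = new long[numChannels];
        Arrays.fill(firstRecord, NO_NEXT);
        Arrays.fill(lastRecord, NO_NEXT);
    }

    /** Returns false (and writes nothing) when the record does not fit: time to spill. */
    boolean append(byte[] record, int channel, int dataType) {
        if (memory.remaining() < HEADER_BYTES + record.length) {
            return false;
        }
        int offset = memory.position();
        memory.putInt(record.length).putInt(dataType).putLong(NO_NEXT).put(record);
        if (lastRecord[channel] == NO_NEXT) {
            firstRecord[channel] = offset;
        } else {
            // Patch the "next" pointer (header bytes 8..15) of this channel's previous record.
            memory.putLong((int) lastRecord[channel] + 8, offset);
        }
        lastRecord[channel] = offset;
        return true;
    }

    /** Copies one channel's records in emission order by following the header pointers. */
    List<byte[]> recordsOf(int channel) {
        List<byte[]> out = new ArrayList<>();
        long offset = firstRecord[channel];
        while (offset != NO_NEXT) {
            int pos = (int) offset;
            int length = memory.getInt(pos);
            byte[] payload = new byte[length];
            for (int i = 0; i < length; i++) {
                payload[i] = memory.get(pos + HEADER_BYTES + i);
            }
            out.add(payload);
            offset = memory.getLong(pos + 8);
        }
        return out;
    }

    /** Spill order of one data region: all of channel 0, then all of channel 1, and so on. */
    List<byte[]> spillOrder() {
        List<byte[]> region = new ArrayList<>();
        for (int c = 0; c < firstRecord.length; c++) {
            region.addAll(recordsOf(c));
        }
        return region;
    }
}
```

Appending a record only patches the previous record's pointer, so each insert takes constant time and no comparison-based sort is needed. The "sort" is simply the order in which the per-channel lists are walked during the spill.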

The data of each blocking result partition is stored as one physical data file on disk. The data file consists of multiple data regions, and each spill produces one data region. Within each data region, the data is clustered by subpartition ID (index), and each subpartition corresponds to one data consumer.

The following picture shows the structure of a simple data file. This data file has three data regions (R1, R2, R3) and three consumers (C1, C2, C3). Data blocks B1.1, B2.1, and B3.1 will be consumed by C1, data blocks B1.2, B2.2, and B3.2 will be consumed by C2, and data blocks B1.3, B2.3, and B3.3 will be consumed by C3.

In addition to the data file, each result partition has an index file that contains pointers into the data file. The index file has the same number of regions as the data file, and each region holds one index entry per subpartition. Each index entry consists of two parts: the file offset of the target data in the data file and the data size. To reduce the disk IO caused by index file access, Flink caches the index data in unmanaged heap memory if the index data file is smaller than 4 MB. The following picture illustrates the relationship between the index file and the data file:
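The index layout can be sketched as follows. The 16-byte entry format (an 8-byte offset followed by an 8-byte size) and the class `PartitionIndex` are assumptions made for illustration; they are not Flink's on-disk format. Entries are stored region by region, and within a region by subpartition index. The entry for region `r` and subpartition `s` therefore sits at byte `(r * numSubpartitions + s) * 16`. The 4 MB caching threshold follows the description above.

```java
import java.nio.ByteBuffer;

/**
 * Hypothetical index sketch: one entry per (region, subpartition),
 * each entry laid out as [long fileOffset][long size] (assumed format).
 */
final class PartitionIndex {
    static final int ENTRY_BYTES = 16;
    /** Index data smaller than this is kept in memory (threshold from the text above). */
    static final long CACHE_THRESHOLD_BYTES = 4L * 1024 * 1024;

    private final ByteBuffer entries;
    private final int numSubpartitions;

    PartitionIndex(ByteBuffer entries, int numSubpartitions) {
        this.entries = entries;
        this.numSubpartitions = numSubpartitions;
    }

    /** Builds index entries for blocks written region by region, subpartition by subpartition. */
    static PartitionIndex fromBlockSizes(long[][] blockSizes) {
        int numSubpartitions = blockSizes[0].length;
        ByteBuffer buf = ByteBuffer.allocate(blockSizes.length * numSubpartitions * ENTRY_BYTES);
        long offset = 0;
        for (long[] region : blockSizes) {
            for (long size : region) {
                buf.putLong(offset).putLong(size);
                offset += size; // the next block starts right after this one
            }
        }
        buf.flip();
        return new PartitionIndex(buf, numSubpartitions);
    }

    long offsetOf(int region, int subpartition) {
        return entries.getLong(entryPosition(region, subpartition));
    }

    long sizeOf(int region, int subpartition) {
        return entries.getLong(entryPosition(region, subpartition) + 8);
    }

    int numRegions() {
        return entries.limit() / (numSubpartitions * ENTRY_BYTES);
    }

    boolean shouldCacheInMemory() {
        return entries.limit() < CACHE_THRESHOLD_BYTES;
    }

    private int entryPosition(int region, int subpartition) {
        return (region * numSubpartitions + subpartition) * ENTRY_BYTES;
    }
}
```

Take the three-region, three-consumer data file described earlier and give it hypothetical block sizes of {10, 20, 30}, {5, 5, 5}, and {1, 2, 3} bytes. Consumer C2 then reads 20 bytes at offset 10, 5 bytes at offset 65, and 2 bytes at offset 76.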

Based on the storage structure described above, we introduced the IO scheduling technique to achieve more sequential reads for the sort-based blocking shuffle in Flink. The core idea behind IO scheduling is pretty simple. Just like the elevator algorithm for disk scheduling, the IO scheduling for sort-based blocking shuffle always tries to serve data read requests in the file offset order. More formally, we have n data regions indexed from 0 to n-1 in a result partition file. In each data region, there are m data subpartitions to be consumed by m downstream data consumers. These data consumers read data concurrently.
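The elevator idea can be expressed as a small, runnable Java sketch. It simplifies under stated assumptions: all readers are ready at once, each reader wants exactly one block per region, and block offsets come from a precomputed `offsets[region][subpartition]` table. `ElevatorScheduler` and its parameters are hypothetical. A priority queue keyed by each reader's next file offset picks the reader with the smallest offset. `buffersPerRound` models the limited reading buffers available in one scheduling round.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

/** Hypothetical elevator-style IO scheduling sketch over a multi-region file. */
final class ElevatorScheduler {

    /** Per-reader cursor: the subpartition it consumes and the next region to read. */
    private static final class Reader {
        final int subpartition;
        int nextRegion;

        Reader(int subpartition) {
            this.subpartition = subpartition;
        }
    }

    /** Returns the file offsets of all blocks in the order in which they are read. */
    static List<Long> schedule(long[][] offsets, int buffersPerRound) {
        if (offsets.length == 0 || buffersPerRound <= 0) {
            throw new IllegalArgumentException("need regions and at least one buffer per round");
        }
        // Readers are ordered by the file offset of their next block.
        PriorityQueue<Reader> readers = new PriorityQueue<>(
                Comparator.comparingLong((Reader r) -> offsets[r.nextRegion][r.subpartition]));
        for (int s = 0; s < offsets[0].length; s++) {
            readers.add(new Reader(s));
        }
        List<Long> readOrder = new ArrayList<>();
        while (!readers.isEmpty()) {
            // One scheduling round: buffers are requested up front and recycled afterwards.
            int buffers = buffersPerRound;
            while (buffers > 0 && !readers.isEmpty()) {
                Reader reader = readers.poll(); // smallest next file offset
                readOrder.add(offsets[reader.nextRegion][reader.subpartition]);
                buffers--;
                reader.nextRegion++; // mutate only while the reader is out of the queue
                if (reader.nextRegion < offsets.length) {
                    readers.add(reader);
                }
            }
        }
        return readOrder;
    }
}
```

Take the offsets {{0, 10, 30}, {60, 65, 70}, {75, 76, 78}}. The blocks are read as 0, 10, 30, 60, 65, 70, 75, 76, 78, strictly in file order. Serving consumers one after another would instead jump back and forth (0, 60, 75, 10, 65, ...).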

// let data_regions as the data region list indexed from 0 to n - 1

// let data_readers as the concurrent downstream data readers queue indexed from 0 to m - 1

for (data_region in data_regions) {

data_reader = poll_reader_of_the_smallest_file_offset(data_readers);

if (data_reader == null)

break;

reading_buffers = request_reading_buffers();

if (reading_buffers.isEmpty())

break;

read_data(data_region, data_reader, reading_buffers);

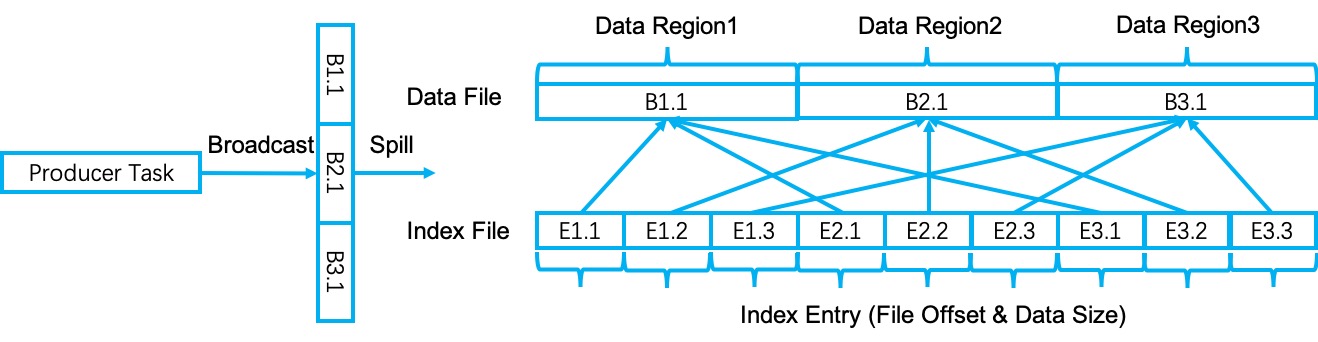

}Shuffle data broadcast in Flink refers to sending the same collection of data to all the downstream data consumers. Instead of copying and writing the same data multiple times, Flink optimizes this process by only copying and spilling the broadcast data once, which improves the data broadcast performance.

More specifically, when a data record is broadcast, it is copied into the sort buffer and stored only once. The same happens when the broadcast data is spilled into files. For the index data, the only difference is that the index entries of all downstream consumers point to the same data in the data file.

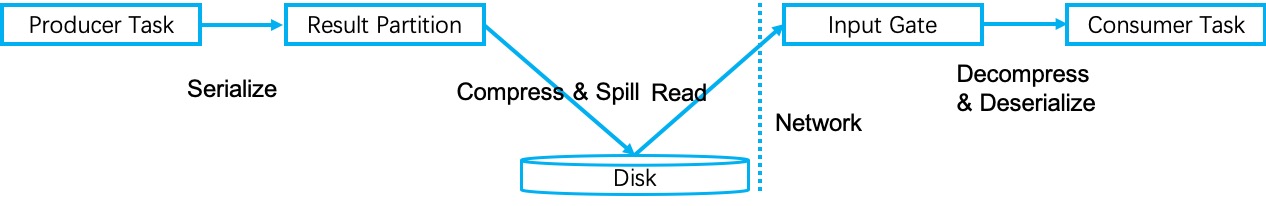
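The index side of the broadcast optimization can be sketched as follows. The class `BroadcastRegion` is hypothetical and treats index entries as plain `{offset, size}` pairs. It assumes the broadcast data forms one block in the region. Every subpartition's entry points at the same byte range, so the data is spilled once instead of once per consumer.

```java
/**
 * Hypothetical sketch: index entries for a region holding one broadcast block.
 * All subpartitions share the same {offset, size} pair.
 */
final class BroadcastRegion {

    /** Builds one {offset, size} entry per subpartition, all pointing at the same block. */
    static long[][] broadcastEntries(long blockOffset, long blockSize, int numSubpartitions) {
        long[][] entries = new long[numSubpartitions][];
        for (int s = 0; s < numSubpartitions; s++) {
            entries[s] = new long[] {blockOffset, blockSize}; // same data for every consumer
        }
        return entries;
    }

    /** Bytes spilled for a broadcast block with and without the single-copy optimization. */
    static long bytesSpilled(long blockSize, int numSubpartitions, boolean singleCopy) {
        return singleCopy ? blockSize : blockSize * numSubpartitions;
    }
}
```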

Data compression is a simple but really useful technique to improve blocking shuffle performance. Similar to the data compression implementation of the hash-based blocking shuffle, data is compressed per buffer after it is copied from the in-memory sort buffer and before it is spilled to the disk. If the data size becomes even larger after compression, the original uncompressed data buffer will be kept. Then, the corresponding downstream data consumers are responsible for decompressing the received shuffle data when processing it. The sort-based blocking shuffle reuses those building blocks implemented for the hash-based blocking shuffle directly. The following picture illustrates the shuffle data compression process:
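The keep-if-not-smaller rule can be sketched with `java.util.zip`. Here `Deflater` and `Inflater` stand in for the codec Flink actually uses for shuffle compression. `FallbackCompressor` and `SpilledBuffer` are hypothetical names. The `compressed` flag models the per-buffer information a consumer needs to decide whether to decompress.

```java
import java.io.ByteArrayOutputStream;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

/** Hypothetical per-buffer compression with an uncompressed fallback. */
final class FallbackCompressor {

    /** Bytes as spilled, plus whether the consumer must decompress them. */
    static final class SpilledBuffer {
        final byte[] bytes;
        final boolean compressed;

        SpilledBuffer(byte[] bytes, boolean compressed) {
            this.bytes = bytes;
            this.compressed = compressed;
        }
    }

    /** Compresses one buffer; keeps the original bytes if compression does not shrink them. */
    static SpilledBuffer compress(byte[] data) {
        Deflater deflater = new Deflater();
        try {
            deflater.setInput(data);
            deflater.finish();
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] chunk = new byte[4096];
            while (!deflater.finished()) {
                int n = deflater.deflate(chunk);
                out.write(chunk, 0, n);
                if (out.size() >= data.length) {
                    return new SpilledBuffer(data, false); // no gain: keep the original buffer
                }
            }
            return new SpilledBuffer(out.toByteArray(), true);
        } finally {
            deflater.end();
        }
    }

    /** Consumer side: decompress only buffers flagged as compressed. */
    static byte[] decompress(SpilledBuffer buffer, int originalLength) throws DataFormatException {
        if (!buffer.compressed) {
            return buffer.bytes;
        }
        Inflater inflater = new Inflater();
        try {
            inflater.setInput(buffer.bytes);
            byte[] result = new byte[originalLength];
            int filled = 0;
            while (filled < originalLength && !inflater.finished()) {
                int n = inflater.inflate(result, filled, originalLength - filled);
                if (n == 0 && (inflater.needsInput() || inflater.needsDictionary())) {
                    throw new DataFormatException("truncated compressed buffer");
                }
                filled += n;
            }
            return result;
        } finally {
            inflater.end();
        }
    }
}
```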
