By Yingjie Cao (Kevin) & Daisy Tsang

Part 1 of this 2-part series will explain the motivation behind introducing the sort-based blocking shuffle, present benchmark results, and provide guidelines on how to use this new feature.

Data shuffling is an important stage in batch processing applications: it determines how data is sent from one operator to the next. In this phase, the output data of the upstream operator is spilled to persistent storage such as disks, and the downstream operator then reads the corresponding data and processes it. A blocking shuffle means that intermediate results from operator A are not sent to operator B until operator A has completely finished.

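The hash-based and sort-based blocking shuffles are the two main blocking shuffle implementations widely adopted by existing distributed data processing frameworks. The hash-based approach writes the data consumed by different consumer tasks to different files, so each file serves as a natural boundary for its partition. The sort-based approach first writes all produced data together and then sorts it to cluster the data belonging to each partition.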
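As a rough illustration, the following Python sketch models both layouts in memory, with lists standing in for files. The function names (`hash_based_write`, `sort_based_write`, `read_partition`) and the (offset, length) index format are hypothetical and chosen for this example; they are not Flink APIs.

```python
# Each record is (partition_id, payload); partition_id is the target consumer task.
Record = tuple[int, str]


def hash_based_write(records: list[Record], num_consumers: int) -> list[list[str]]:
    """Hash-based layout: one separate 'file' (here, a list) per consumer."""
    files: list[list[str]] = [[] for _ in range(num_consumers)]
    for partition, payload in records:
        files[partition].append(payload)
    return files


def sort_based_write(
    records: list[Record], num_consumers: int
) -> tuple[list[str], list[tuple[int, int]]]:
    """Sort-based layout: one data 'file' plus an (offset, length) index per consumer."""
    # A stable sort by partition id clusters each consumer's data into one contiguous region.
    ordered = sorted(records, key=lambda r: r[0])
    data = [payload for _, payload in ordered]
    counts = [0] * num_consumers
    for partition, _ in ordered:
        counts[partition] += 1
    index: list[tuple[int, int]] = []
    offset = 0
    for count in counts:
        index.append((offset, count))
        offset += count
    return data, index


def read_partition(data: list[str], index: list[tuple[int, int]], consumer: int) -> list[str]:
    """A consumer reads only its own contiguous region of the single data file."""
    offset, length = index[consumer]
    return data[offset:offset + length]


if __name__ == "__main__":
    records = [(2, "c1"), (0, "a1"), (1, "b1"), (0, "a2"), (2, "c2")]
    print(hash_based_write(records, 3))
    data, index = sort_based_write(records, 3)
    print(data, index)
    print([read_partition(data, index, c) for c in range(3)])
```

Both layouts let each consumer recover exactly its own records, but the hash-based version needs one file per consumer, while the sort-based version needs only one data file plus a small index. Real implementations work with bounded memory and typically sort and spill data in several rounds, which this sketch ignores.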

The sort-based blocking shuffle was introduced in Flink 1.12. In 1.13, it was further optimized for stability and performance and made production-ready. We hope you enjoy the improvements, and any feedback is highly appreciated.

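The hash-based blocking shuffle has been supported in Flink for a long time. However, compared to the sort-based approach, it has two main weaknesses. The first is stability: for batch jobs with high parallelism, it opens many files concurrently while writing or reading data, which puts heavy pressure on the file system and can exhaust file descriptors or inodes. The second is performance: for each shuffle, the number of output files equals the producer parallelism multiplied by the consumer parallelism, so large-scale jobs produce many small files, and the resulting random IO can slow down the shuffle considerably, especially on spinning disks.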

With the sort-based blocking shuffle, fewer data files are created and opened, and more reads are sequential. As a result, it achieves better stability and performance.
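The difference in file counts comes down to simple arithmetic. The sketch below assumes the hash-based shuffle writes one file per producer-consumer pair and the sort-based shuffle writes one data file and one index file per producer; both are simplifications, and the function names are hypothetical.

```python
def hash_based_files(producers: int, consumers: int) -> int:
    # One file per (producer, consumer) pair for a single shuffle.
    return producers * consumers


def sort_based_files(producers: int) -> int:
    # Assumption: each producer writes one data file and one index file.
    return 2 * producers


def avg_hash_file_mb(shuffle_mb: float, producers: int, consumers: int) -> float:
    # The shuffle data is spread evenly over all producer-consumer files.
    return shuffle_mb / hash_based_files(producers, consumers)


def avg_sort_data_file_mb(shuffle_mb: float, producers: int) -> float:
    # The shuffle data is spread over one data file per producer.
    return shuffle_mb / producers


if __name__ == "__main__":
    p, c, shuffle_mb = 1000, 1000, 1_000_000  # roughly 1 TB of shuffle data
    print(hash_based_files(p, c), avg_hash_file_mb(shuffle_mb, p, c))
    print(sort_based_files(p), avg_sort_data_file_mb(shuffle_mb, p))
```

With 1,000 producers and 1,000 consumers, the hash-based layout creates a million files of about 1 MB each, while the sort-based layout creates a few thousand files and reads each consumer's data from large, contiguous regions.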

Moreover, the sort-based implementation can save network buffers for large-scale batch jobs. With the hash-based implementation, the number of network buffers needed by each output result partition is proportional to the consumers' parallelism. With the sort-based implementation, network memory consumption is decoupled from the parallelism: a fixed amount of network memory can serve all result partitions, though more network memory may improve performance.
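To make the scaling difference concrete, here is a simplified model. It assumes one buffer per subpartition (that is, per consumer) for the hash-based shuffle, a hypothetical fixed sort buffer of 512 buffers for the sort-based shuffle, and Flink's default 32 KB network buffer size. These numbers illustrate the trend only and are not Flink's exact buffer requirements.

```python
BUFFER_SIZE_KB = 32  # Flink's default network buffer (memory segment) size


def hash_based_buffers(consumer_parallelism: int, buffers_per_subpartition: int = 1) -> int:
    # Simplifying assumption: every subpartition (one per consumer) needs its own buffer(s),
    # so the requirement grows linearly with consumer parallelism.
    return consumer_parallelism * buffers_per_subpartition


def sort_based_buffers(fixed_sort_buffers: int = 512) -> int:
    # Hypothetical fixed-size sort buffer, independent of consumer parallelism.
    return fixed_sort_buffers


def to_mb(buffers: int) -> float:
    return buffers * BUFFER_SIZE_KB / 1024


if __name__ == "__main__":
    for parallelism in (1000, 10000):
        print(parallelism,
              to_mb(hash_based_buffers(parallelism)),
              to_mb(sort_based_buffers()))
```

Under these assumptions, the hash-based requirement per result partition grows from about 31 MB to about 312 MB as consumer parallelism goes from 1,000 to 10,000, while the sort-based requirement stays at 16 MB.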

Besides consuming too many file descriptors and inodes, as described above, the hash-based blocking shuffle has a known issue where creating too many files blocks the TaskExecutor's main thread (FLINK-21201). In addition, some large-scale jobs, such as q78 and q80 of the TPC-DS benchmark, failed to run with the hash-based blocking shuffle in our tests because of a "connection reset by peer" exception, similar to the issue reported in FLINK-19925 (reading shuffle data in Netty threads can affect network stability).

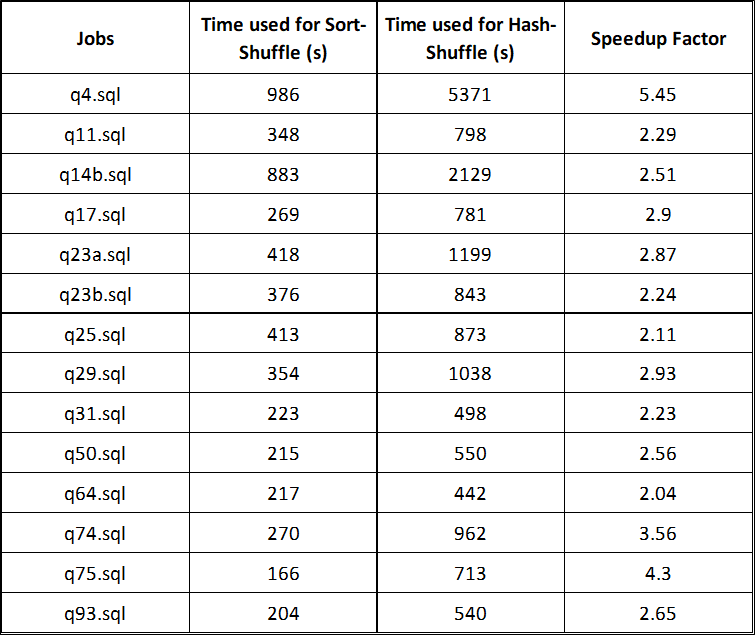

We ran the tpc-ds test suit (10T scale with 1050 max parallelism) for both the hash-based and the sort-based blocking shuffle. The results show that the sort-based shuffle can achieve 2-6 times more performance gains compared to the hash-based shuffle on spinning disks. If we exclude the computation time, some jobs can achieve up to 10 times performance gains. Here are some performance results of our tests:

On our testing nodes, the per-disk throughput of the new sort-based implementation reached 173 to 189 MB/s for writing and 112 to 158 MB/s for reading:

| Disk Name | Disk SDI | Disk SDJ | Disk SDK |
|---|---|---|---|
| Writing Speed (MB/s) | 189 | 173 | 186 |
| Reading Speed (MB/s) | 112 | 154 | 158 |

Note: The following table shows the settings of our test cluster. Because each node has a large amount of available memory, jobs with small shuffle sizes exchange their shuffle data entirely through memory (the page cache). As a result, significant performance differences appear only for jobs that shuffle large amounts of data.

| Number of Nodes | Memory Size Per Node | Cores Per Node | Disks Per Node |
|---|---|---|---|
| 12 | About 400 GB | 96 | 3 |

The sort-based blocking shuffle is introduced mainly for large-scale batch jobs, but it also works well for batch jobs with low parallelism.

The sort-based blocking shuffle is not enabled by default. You can enable it by setting the taskmanager.network.sort-shuffle.min-parallelism config option to a smaller value: the hash-based blocking shuffle is used when the parallelism is smaller than this threshold, and the sort-based blocking shuffle is used otherwise. The option has no influence on streaming applications. Setting it to 1 disables the hash-based blocking shuffle.
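The threshold rule can be written as a small function. This is a sketch of the selection logic as described above, not Flink's source code; `select_blocking_shuffle` is a hypothetical name. In a real cluster, you would set the option in flink-conf.yaml, for example `taskmanager.network.sort-shuffle.min-parallelism: 1`.

```python
def select_blocking_shuffle(parallelism: int, sort_shuffle_min_parallelism: int) -> str:
    """Model of the taskmanager.network.sort-shuffle.min-parallelism rule.

    Parallelism below the threshold uses the hash-based blocking shuffle;
    parallelism equal to or above it uses the sort-based blocking shuffle.
    """
    if sort_shuffle_min_parallelism < 1:
        raise ValueError("threshold must be at least 1")
    return "hash" if parallelism < sort_shuffle_min_parallelism else "sort"


if __name__ == "__main__":
    # A threshold of 1 means every batch shuffle uses the sort-based implementation.
    print(select_blocking_shuffle(10, 1))
    # With a threshold of 128, small jobs keep the hash-based shuffle.
    print(select_blocking_shuffle(100, 128), select_blocking_shuffle(500, 128))
```

Because parallelism is always at least 1, a threshold of 1 routes every blocking shuffle to the sort-based implementation, which is why that setting disables the hash-based shuffle.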

You should use the sort-based blocking shuffle for spinning disks and large-scale batch jobs. Both implementations should be fine for low parallelism (several hundred processes or fewer) on solid-state drives.

Several other config options can also affect the performance of the sort-based blocking shuffle. For details on these options, and on blocking shuffle in Flink in general, please refer to the official documentation.

Note: As the discussion of the optimization mechanism in Part 2 shows, IO scheduling relies on concurrent data read requests from the downstream consumer tasks to achieve more sequential reads. As a result, if the downstream consumer tasks run one at a time (for example, because of limited resources), the advantage of IO scheduling disappears, which can hurt performance. We may optimize this scenario further in future versions.
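A simplified model shows why concurrency matters. It assumes a hypothetical file layout in which the data file is a sequence of regions, each holding one contiguous chunk per consumer, and it counts a "seek" whenever a read does not start where the previous one ended. This is an illustration of the reasoning only, not Flink's IO scheduler; all function names are hypothetical.

```python
def chunk_offset(region: int, consumer: int, num_consumers: int, chunk_size: int) -> int:
    # Assumed layout: regions in order, each containing one chunk per consumer in consumer order.
    return (region * num_consumers + consumer) * chunk_size


def count_seeks(offsets: list[int], chunk_size: int) -> int:
    # A seek is any read that does not continue exactly where the previous read ended.
    return sum(1 for prev, cur in zip(offsets, offsets[1:]) if cur != prev + chunk_size)


def one_consumer_at_a_time(num_regions: int, num_consumers: int, chunk_size: int) -> list[int]:
    # Consumers run one by one, so only one consumer's requests are pending at a time
    # and the scheduler has nothing to reorder.
    return [chunk_offset(r, c, num_consumers, chunk_size)
            for c in range(num_consumers) for r in range(num_regions)]


def all_consumers_concurrently(num_regions: int, num_consumers: int, chunk_size: int) -> list[int]:
    # All consumers' requests are pending together; the scheduler serves them in file-offset order.
    pending = [chunk_offset(r, c, num_consumers, chunk_size)
               for c in range(num_consumers) for r in range(num_regions)]
    return sorted(pending)


if __name__ == "__main__":
    regions, consumers, chunk = 3, 4, 1
    print(count_seeks(one_consumer_at_a_time(regions, consumers, chunk), chunk))
    print(count_seeks(all_consumers_concurrently(regions, consumers, chunk), chunk))
```

With 3 regions and 4 consumers, serving consumers one by one jumps around the file on every read (11 seeks), while serving all pending requests in offset order reads the file front to back with no seeks.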

Learn details on the design and implementation of this feature in Part 2 of this article!
