This article is compiled from the talk "Kwai Builds Real-Time Data Warehouse Scenario-Based Practice on Flink", given by Li Tianshuo, a data technology expert at Kwai, at the Flink Meetup in Beijing on May 22. The contents include:


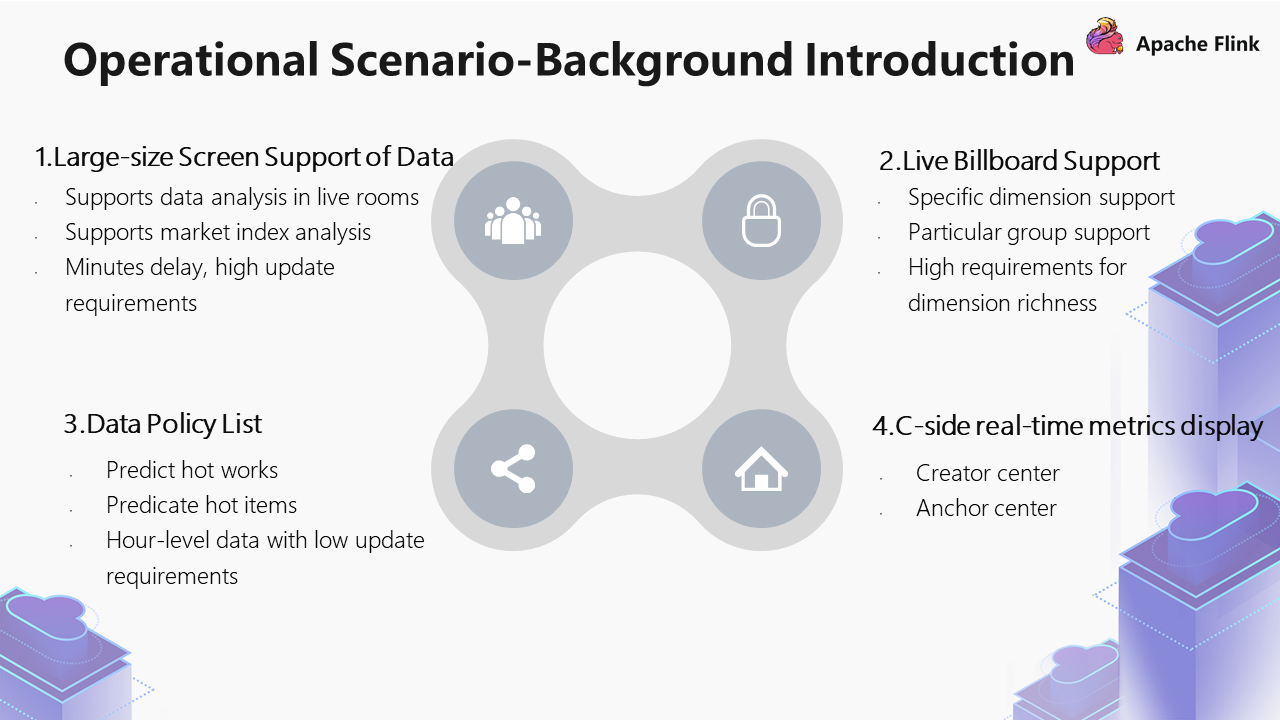

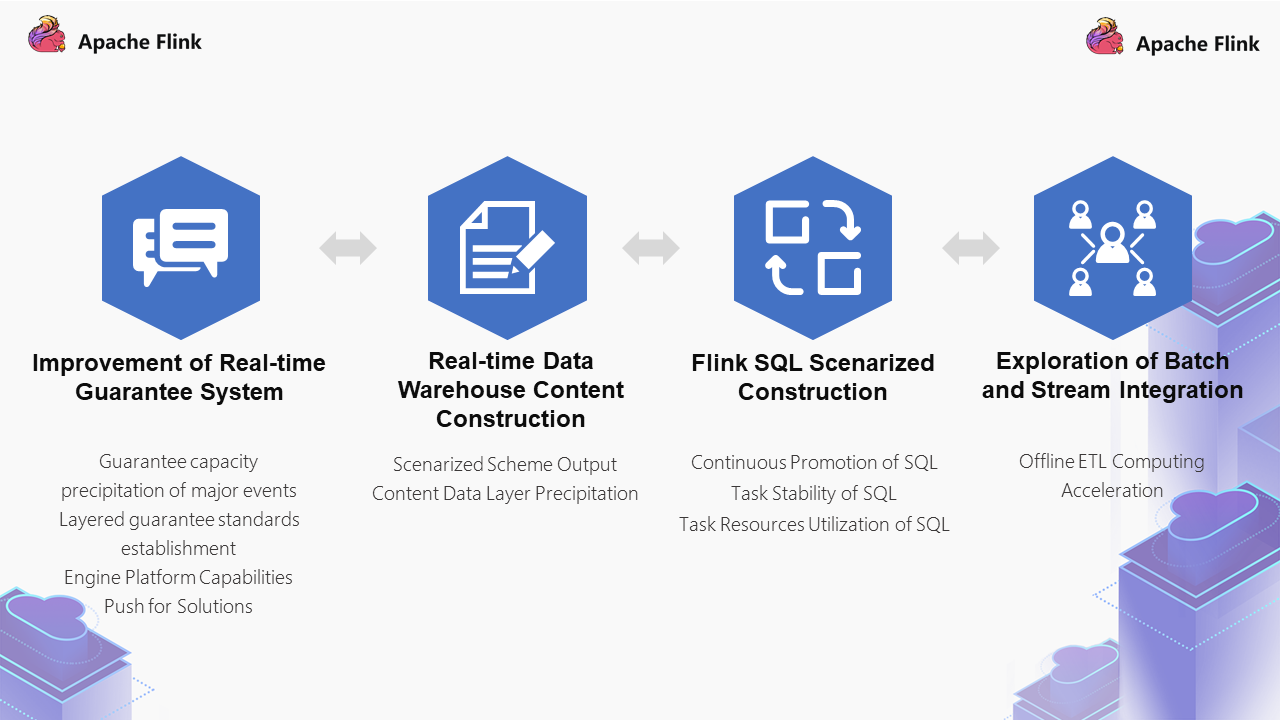

The real-time computing scenarios in Kwai business are divided into four main components:

It also includes support for operational strategies. For example, we may discover trending content, creators, and situations in real time and output strategies based on these hot spots. This is another capability we need to provide.

Finally, it also includes C-end data display. For example, Kwai has a creator center and an anchor center, which contain end-of-broadcast pages such as the anchor's end-of-broadcast summary. We also produce some of the real-time data shown on these pages.

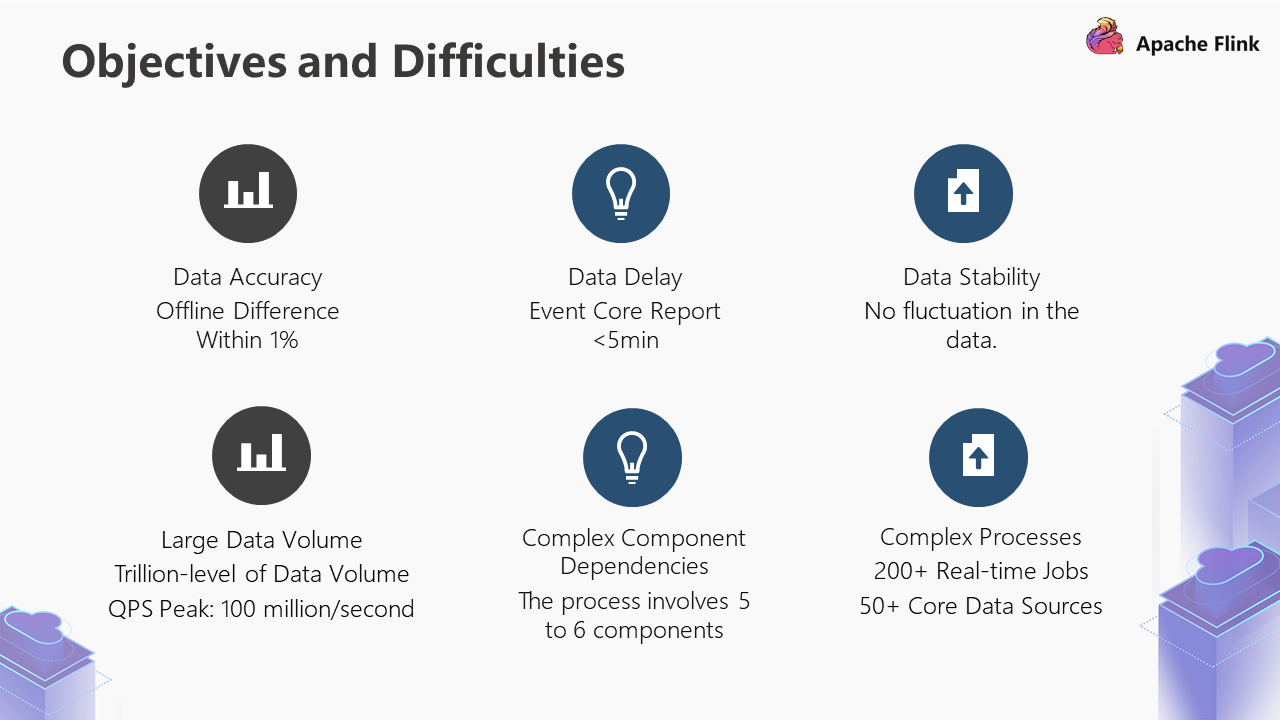

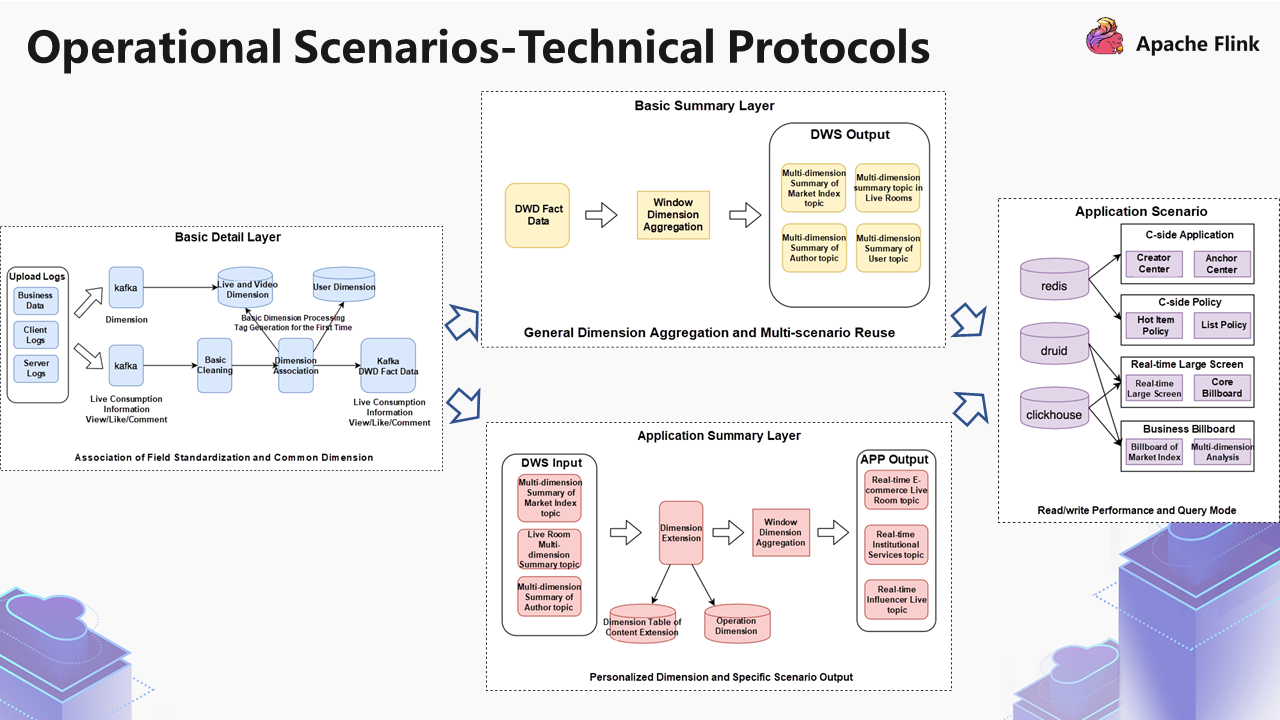

Based on the three difficulties above, we will take a look at the data warehouse architecture on the image below:

As shown above:

The overall processes can be divided into three steps:

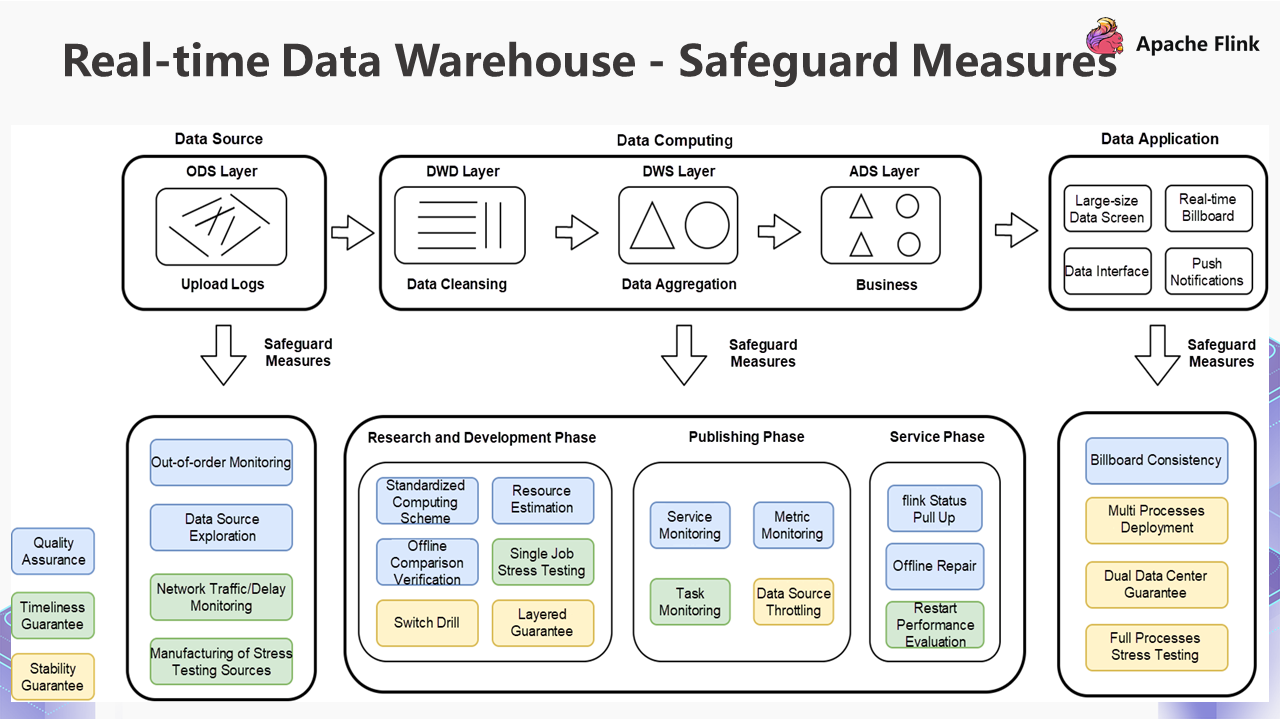

Based on the layer model above, we will take a look at the overall safeguard measures on the image below:

The guarantee layer is divided into three different parts: quality assurance, timeliness guarantee, and stability guarantee.

We will look at quality assurance, the blue part, first. In the data source stage, we monitor data sources for out-of-order data, based on our own SDK collection, and calibrate the data sources against offline data for consistency. The computing process is then covered in three stages: the research and development phase, the online phase, and the service phase.

The second is the timeliness guarantee. For data sources, we also monitor the delay of data sources. There are two important things in the research and development stage:

The last one is the stability guarantee, which matters most during large-scale activities and includes switching drills and layered guarantees. We throttle based on the results of earlier stress tests so that a job stays stable even when traffic exceeds the limit, without instability or checkpoint (CP) failures. After that, we have two different standards: a cold standby dual data center and a hot standby dual data center.

These are the overall safeguard measures.

The first problem is PV/UV standardization. Take a look at the three screenshots below:

The first picture shows the warm-up scene of the Spring Festival Gala, which is a game page. The second and third pictures are screenshots of the red envelope activity and the live room on the day of the Spring Festival Gala.

During the activity, we found that 60%-70% of the demands are to calculate the information on the page, such as:

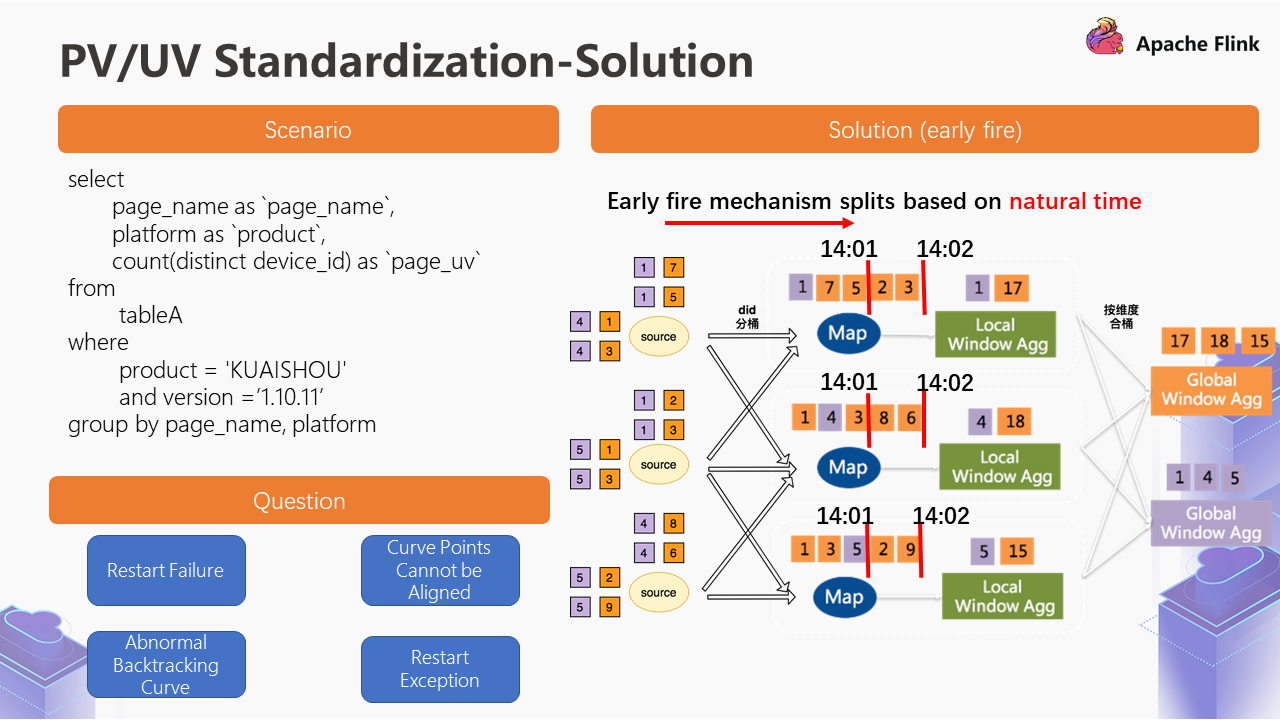

The following SQL represents the abstraction of this scenario:

Simply put, we filter rows from a table by some conditions, aggregate them at the dimension level, and compute Count or Sum metrics.
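The filter, group, and count pattern described above can be illustrated with a minimal Python sketch. It is not the SQL from the talk; the event fields (`page_id`, `device_id`, `is_valid`) and the function name are assumptions chosen for illustration.

```python
from collections import defaultdict

# Hypothetical page-view events: (page_id, device_id, is_valid).
events = [
    ("game_page", "did_1", True),
    ("game_page", "did_1", True),
    ("game_page", "did_2", True),
    ("live_room", "did_1", True),
    ("live_room", "did_3", False),  # removed by the filter condition
]


def pv_uv_by_dimension(rows):
    """Filter rows, group by the dimension (page_id), and compute PV and UV."""
    pv = defaultdict(int)       # plays the role of COUNT(*)
    devices = defaultdict(set)  # plays the role of COUNT(DISTINCT device_id)
    for page_id, device_id, is_valid in rows:
        if not is_valid:
            continue
        pv[page_id] += 1
        devices[page_id].add(device_id)
    return {page: {"pv": pv[page], "uv": len(devices[page])} for page in pv}


result = pv_uv_by_dimension(events)
```

Here PV counts every valid row, while UV counts each device once per page.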

Based on this scenario, the initial solution is shown on the right side of the figure above.

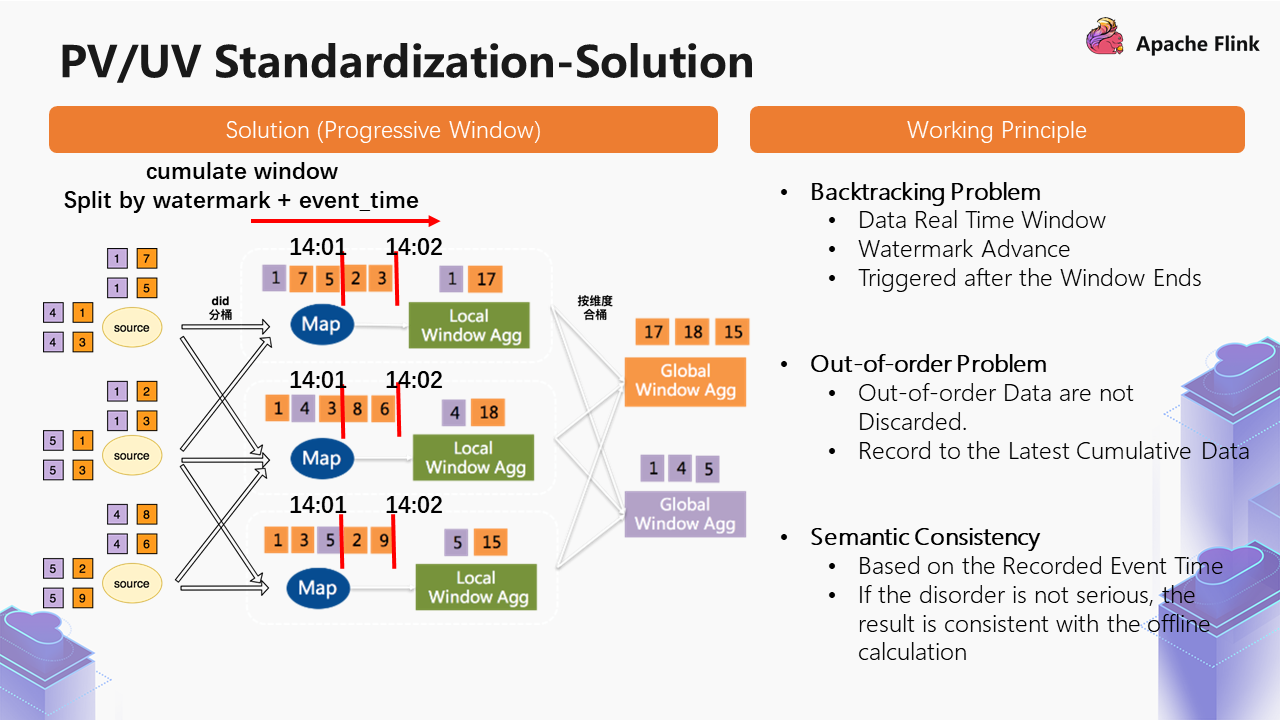

We adopted the Early Fire mechanism of Flink SQL. Data is read from the source and then bucketed by DID. For example, the purple part in the figure is first divided into buckets in this way. The buckets are there to avoid hot spot problems caused by certain DIDs. After bucketing, a Local Window Agg pre-aggregates the data of the same type within each bucket. It is followed by a Global Window Agg, which merges the buckets by dimension to calculate the final result for each dimension. The Early Fire mechanism opens a day-level window on the Local Window Agg and outputs its current result every minute.
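The bucketing idea can be shown outside Flink with a small Python sketch. It only demonstrates why a local aggregation per bucket followed by a global merge gives the same UV: each DID always lands in exactly one bucket, so per-bucket distinct counts can simply be summed. The bucket count and function names are assumptions, and windows and Early Fire are left out.

```python
import zlib
from collections import defaultdict

NUM_BUCKETS = 4  # assumed bucket count, for illustration only


def bucket_of(did: str) -> int:
    """Stable hash, so the same DID always lands in the same bucket."""
    return zlib.crc32(did.encode("utf-8")) % NUM_BUCKETS


def local_agg(events):
    """Local stage: count distinct DIDs per (dimension, bucket)."""
    local = defaultdict(set)
    for page_id, did in events:
        local[(page_id, bucket_of(did))].add(did)
    return {key: len(dids) for key, dids in local.items()}


def global_agg(local_counts):
    """Global stage: merge the buckets of each dimension into one UV value."""
    total = defaultdict(int)
    for (page_id, _bucket), uv in local_counts.items():
        total[page_id] += uv
    return dict(total)


events = [("game_page", f"did_{i % 50}") for i in range(1000)]
events += [("live_room", f"did_{i % 7}") for i in range(100)]
uv = global_agg(local_agg(events))
```

Spreading one dimension over several buckets keeps a single busy key from overloading one worker, at the cost of the extra merge step.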

We encountered some problems in this process, as shown in the lower-left corner of the figure above.

However, problems appear when the overall data is delayed or when historical data is backtracked. Early Fire triggers once a minute, and the data volume is larger while history is being backtracked. As a result, a backtrack that starts at 14:00 may read straight through to the data of 14:02, and the data point for 14:01 is lost. What happens if the data is lost?

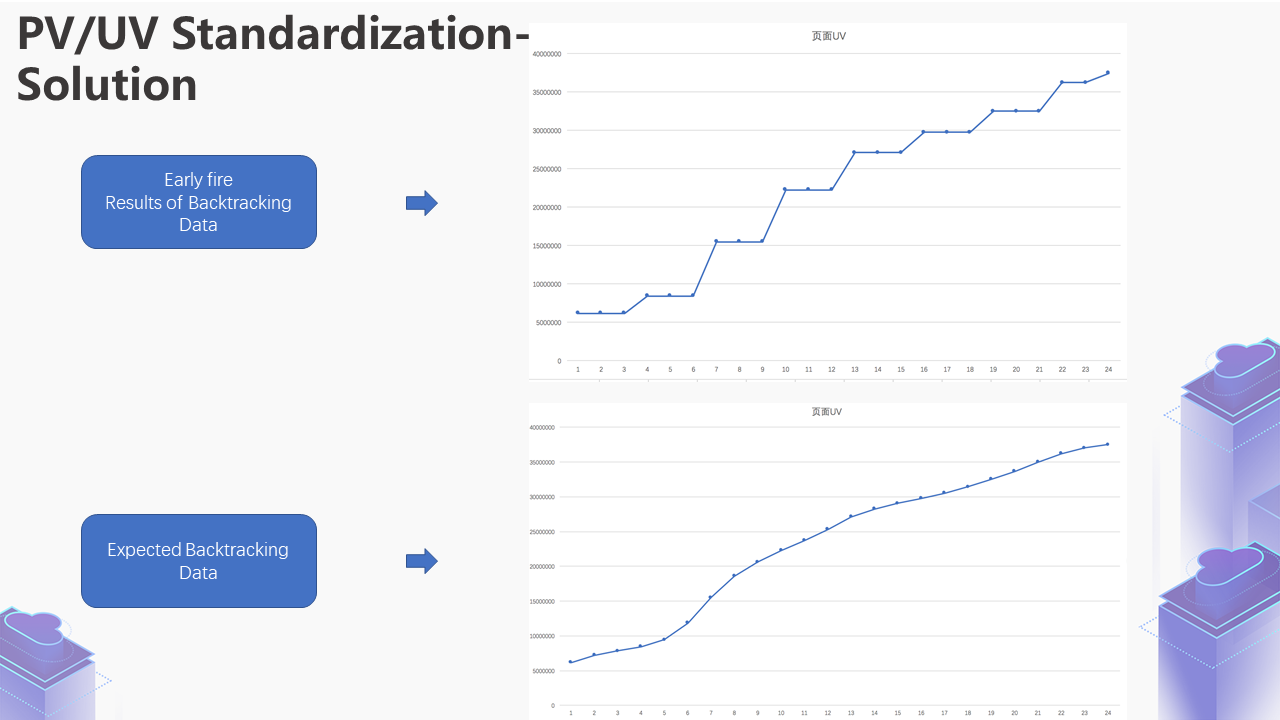

In this scenario, the curve at the top of the figure is the result of backtracking historical data with Early Fire. The horizontal axis represents minutes, while the vertical axis represents the page UV up to the current moment. We found that some segments are flat, meaning no result was produced for those minutes: the curve rises steeply, goes flat, and then rises steeply again. The expected result is the smooth curve at the bottom of the figure.

We used a Cumulate Window to solve this problem. Flink 1.13 also provides this window, and its principle is the same.

The data opens a large day-level window, and a small minute-level window is opened under the large one. The data falls to the minute-level window according to the Row Time:

When the watermark passes the event_time of a window, that window is triggered once. This way, the problem of backtracking can be solved: the data falls into the window it really belongs to, and as the watermark advances, each window is triggered after it ends. The section above is a standardized solution for PV/UV.
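The Cumulate Window behavior described above can be simulated in a few lines of Python. This is a simplified sketch rather than Flink's implementation: time is an integer minute within the day, the watermark is modeled as a loop over minutes, and the function name is hypothetical. The point is that events are placed by event time, so even out-of-order backtracked input yields one result per minute.

```python
from collections import defaultdict


def cumulate_uv(events, minutes_in_day):
    """Return the cumulative UV at the end of every minute-level window.

    events: iterable of (event_minute, did). Arrival order does not matter,
    because each event is placed by its event time, not by its arrival time.
    """
    slices = defaultdict(set)
    for event_minute, did in events:
        slices[event_minute].add(did)

    seen = set()
    curve = []
    # Advancing the watermark minute by minute fires every window exactly once.
    for minute in range(minutes_in_day):
        seen |= slices.get(minute, set())
        curve.append((minute, len(seen)))
    return curve


# Backtracked data arriving out of order still produces a point for minute 1.
backfill = [(2, "c"), (0, "a"), (1, "b"), (1, "a"), (2, "d")]
curve = cumulate_uv(backfill, minutes_in_day=4)
```

Every minute gets a point on the curve, which is what removes the flat segments seen with Early Fire.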

The following section introduces the DAU computation:

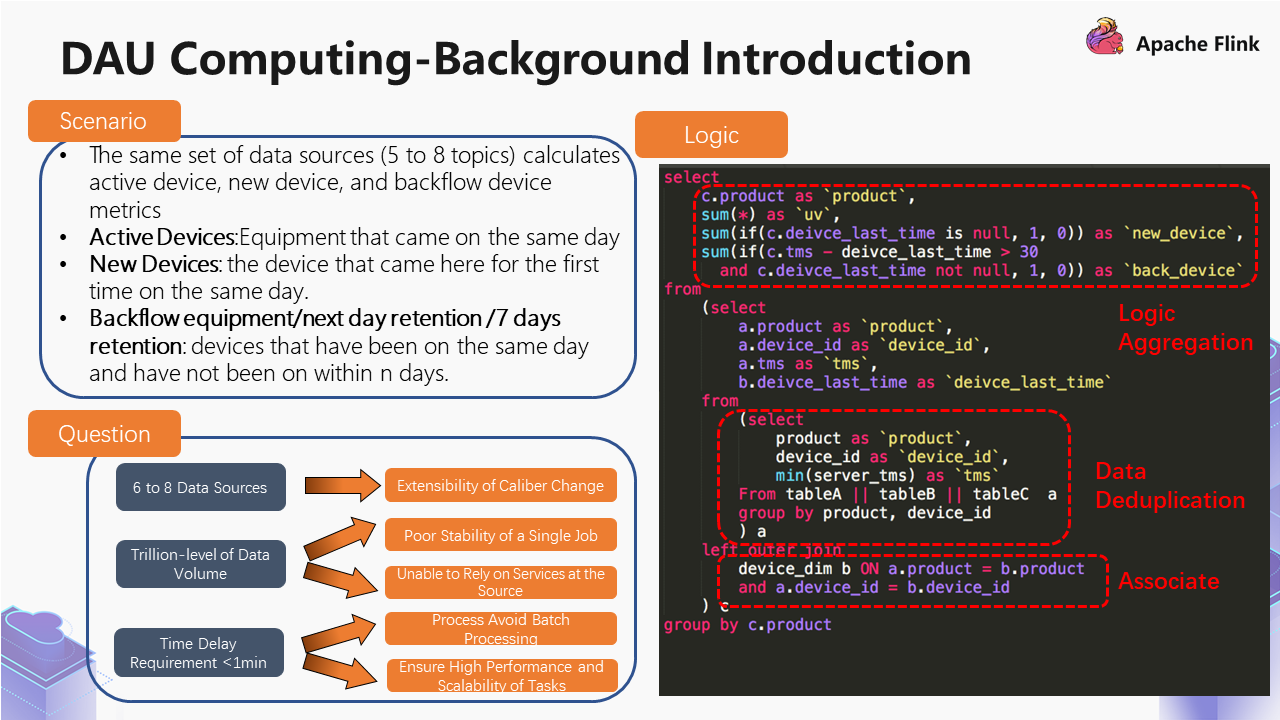

We monitored active devices, new devices, and backflow devices across the market index:

However, we may need 5-8 different topics to calculate these metrics.

We will first take a look at how this logic is calculated in the offline process.

First of all, we calculate the active devices, merge them, and do day-level deduplication under a dimension. Then, we join a dimension table that records the first and last access time of each device up to yesterday.

Once we have this information, we can perform the logical computing. We then find that new devices and backflow devices are sub-tags of active devices. New devices are derived with one piece of logic, and backflow devices are derived with logic based on 30 days. Based on this solution, can we write a single SQL to solve this problem?
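The tagging logic can be sketched as follows. The exact definitions are assumptions, since the text only mentions a first and last access time and a 30-day rule: a device counts as new when the dimension table has no record of it, and as backflow when its last access was more than 30 days ago.

```python
from datetime import date, timedelta

BACKFLOW_DAYS = 30  # the 30-day rule mentioned in the text


def classify_device(today, first_seen, last_seen):
    """Tag an active device using the dimension table as of yesterday.

    first_seen and last_seen are None when the device has no record yet
    (an assumed convention for this sketch).
    """
    tags = {"active"}
    if first_seen is None:
        tags.add("new")
    elif today - last_seen > timedelta(days=BACKFLOW_DAYS):
        tags.add("backflow")
    return tags


today = date(2021, 5, 22)
new_device = classify_device(today, None, None)
returning = classify_device(today, date(2021, 1, 1), date(2021, 3, 1))
regular = classify_device(today, date(2021, 1, 1), date(2021, 5, 21))
```

Both sub-tags are derived from the same active-device record, which is why they can share one pipeline.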

We did this at the beginning, but we encountered some problems:

The following explains how we solved these problems:

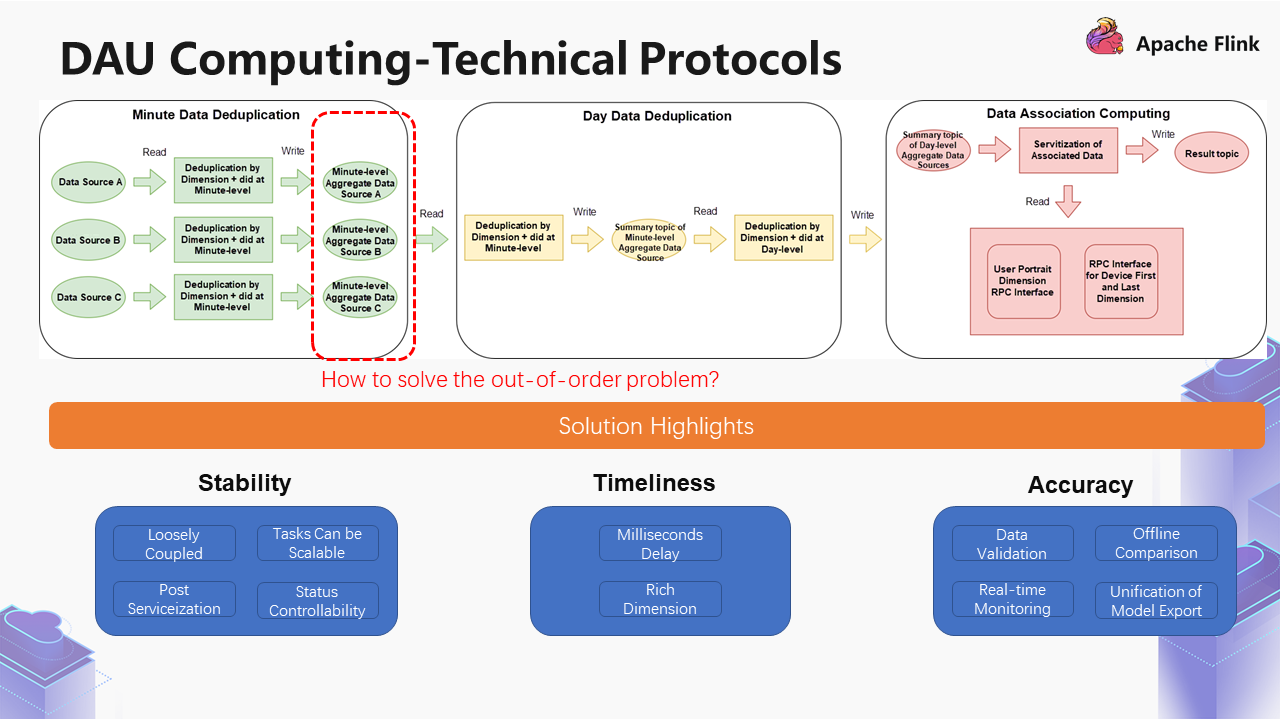

As shown in the example above, the first step is to deduplicate the three data sources (A, B, and C) at the minute level by dimension and DID. After deduplication, three minute-level data sources are obtained. Then, they are unioned together, and the same logical operations are performed on them.

At the entry, the data sources shrink from the trillions level to the tens of billions level. After minute-level deduplication, the generated data shrinks further from the tens of billions level to the billions level.

At the billions level, associating a data service becomes a more feasible scheme. The job calls the RPC interface of the user portrait service and, after obtaining the result, writes the data into the target topic. This target topic is imported into the OLAP engine to provide multiple different services, including the mobile version service, the large-size screen service, and the metrics billboard service.
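A minimal sketch of the deduplicate-then-union step, assuming each record is a `(minute, dimension, did)` tuple; the sample data and function name are hypothetical. It shows how deduplicating inside each source first shrinks the volume that reaches the union.

```python
def dedup_by_minute(events):
    """Keep one record per (minute, dimension, did) within a single source."""
    seen = set()
    out = []
    for minute, dimension, did in events:
        key = (minute, dimension, did)
        if key not in seen:
            seen.add(key)
            out.append(key)
    return out


source_a = [(0, "android", "d1"), (0, "android", "d1"), (1, "android", "d1")]
source_b = [(0, "android", "d1"), (0, "ios", "d2")]
source_c = [(1, "ios", "d2"), (1, "ios", "d2")]

# Union of the three deduplicated sources; the same logic then runs on all of it.
unioned = (
    dedup_by_minute(source_a)
    + dedup_by_minute(source_b)
    + dedup_by_minute(source_c)
)
```

The union can still contain the same device from different sources, so a further deduplication step downstream remains necessary.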

This scheme has three advantages, including stability, timeliness, and accuracy.

In this case, we encountered another problem: disorder. Each of the three jobs above takes at least two minutes to restart. This delay leaves the downstream data sources misaligned with one another, so the data arrives out of order.

What could we do if we encounter the disorder above?

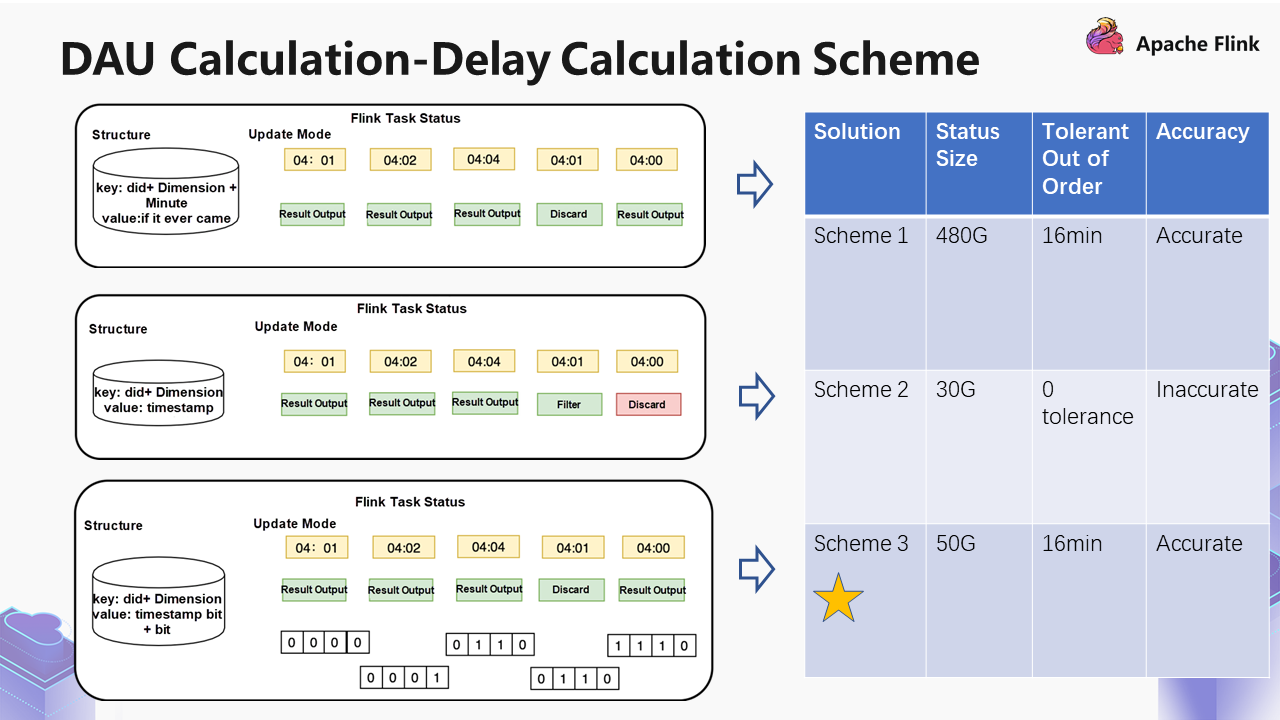

We have three solutions:

Some problems occur with this solution. Since we save the state by minute, the state for 20 minutes is twice the size of the state for 10 minutes. The size of this state eventually became uncontrollable, so we changed to solution 2.

At 04:01, a piece of data comes, and the result is output. At 04:02, a piece of data comes. If it is the same DID, it will update the timestamp and still carry out the result output. 04:04 follows the same logic, and it updates the timestamp to 04:04. If a piece of 04:01 data comes later, it finds that the timestamp has been updated to 04:04, and it will discard this data.
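The walkthrough above can be reproduced with a small Python sketch. It keeps only the newest timestamp per DID, which is the behavior described; the function names and the in-memory dictionary are assumptions standing in for Flink keyed state.

```python
def make_latest_timestamp_filter():
    """Keep only the newest timestamp per DID and drop anything older."""
    latest = {}  # did -> newest "HH:MM" seen so far

    def accept(did, minute):
        # Zero-padded "HH:MM" strings compare correctly as plain strings.
        if did in latest and minute < latest[did]:
            return False  # late data is discarded
        latest[did] = minute
        return True

    return accept


accept = make_latest_timestamp_filter()
results = [
    accept("d1", "04:01"),
    accept("d1", "04:02"),
    accept("d1", "04:04"),
    accept("d1", "04:01"),  # arrives after the timestamp moved to 04:04
]
```

The state is a single value per DID, but any record older than that value is lost.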

This approach reduces the required state, but it has zero tolerance for disorder. Since it did not solve the problem well, we came up with solution 3.

For example, a piece of data comes at 04:01, and the result is output. When a piece of data comes at 04:02, it will update the timestamp to 04:02 and record that the same device came at 04:01. If there is another piece of data at 04:04, it will make a displacement according to the corresponding time difference through this logic to ensure that it can tolerate a certain amount of disorder.
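The text does not spell out the data structure behind this displacement, so the following is one possible reading rather than the actual implementation: the state per DID is the newest minute plus a bitmap of recently seen minutes, and the bitmap is shifted by the time difference whenever a newer record arrives. The class name, the integer minute encoding, and the 16-minute tolerance are all assumptions.

```python
TOLERANCE_MINUTES = 16  # assumed width of the history kept per DID
MASK = (1 << TOLERANCE_MINUTES) - 1


class DisorderTolerantState:
    """Per-DID state: newest minute plus a bitmap of recently seen minutes.

    Bit i of `history` is set when the device was seen at minute `latest - i`.
    """

    def __init__(self):
        self.latest = None
        self.history = 0

    def accept(self, minute: int) -> bool:
        """Return True when this minute has not been emitted for the DID yet."""
        if self.latest is None:
            self.latest, self.history = minute, 1
            return True
        if minute > self.latest:
            # Shift the history by the time difference, then mark the new minute.
            shift = minute - self.latest
            self.history = ((self.history << shift) | 1) & MASK
            self.latest = minute
            return True
        offset = self.latest - minute
        if offset >= TOLERANCE_MINUTES:
            return False  # too old to be tracked
        bit = 1 << offset
        if self.history & bit:
            return False  # this minute was already seen
        self.history |= bit
        return True


state = DisorderTolerantState()
# Minutes since midnight: 241 is 04:01, 244 is 04:04.
results = [state.accept(m) for m in (241, 242, 244, 243, 241, 244, 200)]
```

Under these assumptions the state stays at a fixed size per DID, and late records within the tolerance are still accepted once.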

Take a look at these three solutions below:

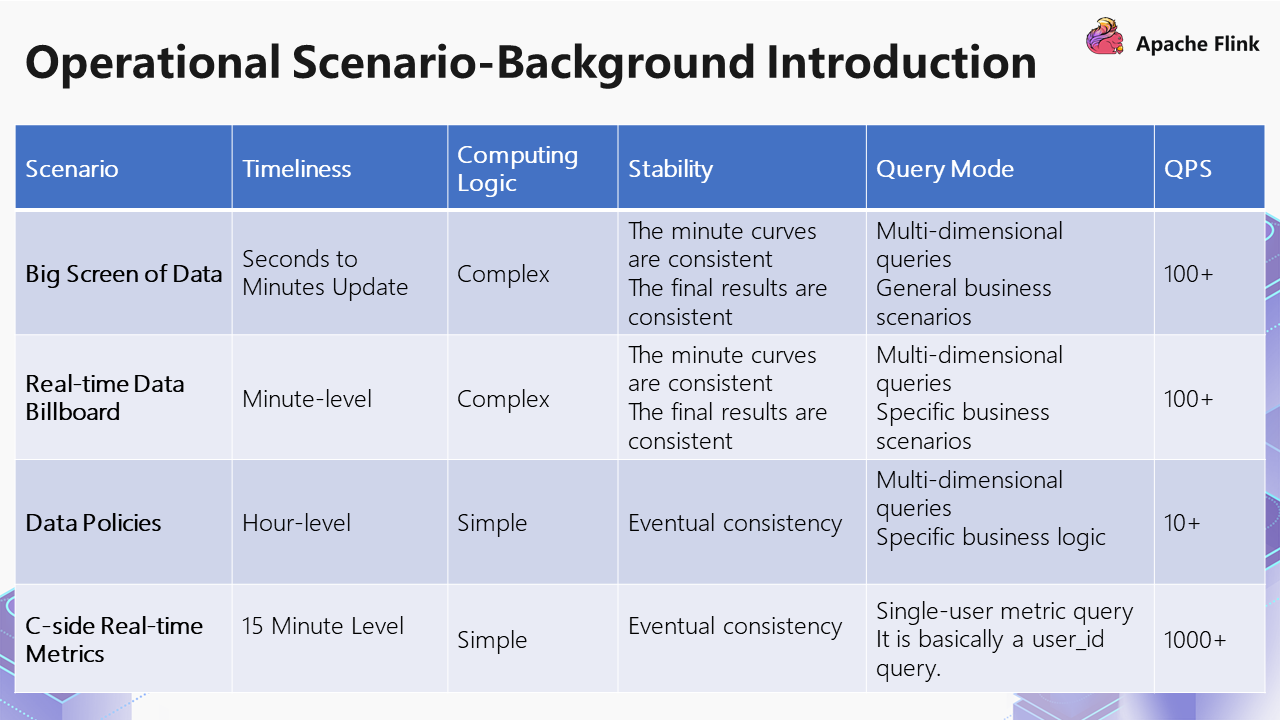

The operation scenarios can be divided into four parts:

The following is an analysis of the different scenarios generated by these four different statuses.

There is virtually no difference among the first three types except for the query mode. Some are specific business scenarios, and some are general business scenarios.

The third and fourth types have low requirements for updates and high requirements for throughput, and the curves along the way do not need to be consistent. The fourth query mode is more of a single entity query, querying what metrics will be used, and its QPS requirements are high.

What could we do for the four different scenarios above?

After these dimensions are associated, the data is finally written to the DWD fact layer in Kafka. We added a Level 2 cache to improve performance.

Dividing the work into these two processes has one benefit: one place deals with the general dimensions, and the other deals with the personalized dimensions. The general dimensions have higher guarantee requirements, while the personalized dimensions carry a lot of personalized logic. If the two are coupled together, exceptions occur often in the task, the responsibilities of each task are unclear, and a stable layer cannot be built.
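The text does not describe the cache layout, so the sketch below only shows a common two-level pattern for dimension lookups: a small in-process LRU cache in front of a shared cache, with the dimension source queried only on a double miss. The class name, the dict standing in for the shared cache, and the loader function are all hypothetical.

```python
from collections import OrderedDict


class TwoLevelCache:
    """Small local LRU cache in front of a shared cache and a slow loader."""

    def __init__(self, remote_cache, loader, local_capacity=2):
        self.local = OrderedDict()      # level 1: in-process, LRU-evicted
        self.remote = remote_cache      # level 2: dict-like stand-in
        self.loader = loader            # slow dimension lookup
        self.local_capacity = local_capacity

    def get(self, key):
        if key in self.local:
            self.local.move_to_end(key)  # mark as most recently used
            return self.local[key]
        if key in self.remote:
            value = self.remote[key]
        else:
            value = self.loader(key)
            self.remote[key] = value
        self.local[key] = value
        if len(self.local) > self.local_capacity:
            self.local.popitem(last=False)  # evict the least recently used key
        return value


calls = []


def load_dimension(key):
    """Hypothetical slow lookup; records each call so misses can be counted."""
    calls.append(key)
    return {"id": key}


cache = TwoLevelCache(remote_cache={}, loader=load_dimension)
for key in ("v1", "v1", "v2", "v3", "v1"):
    cache.get(key)
```

In this run the last lookup of `v1` misses the local level but is served from the second level, so the loader is not called again.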

Three scenarios were introduced above. The first scenario is the computing of standardized PV/UV, the second scenario is the overall solution for DAU, and the third scenario is how to solve the problems on the operation side. We have some future plans based on this content, which are divided into four parts.

The first part is the improvement of the real-time guarantee system:

The second part is the real-time data warehouse content construction:
