By Li Jinsong (Zhixin) and Li Rui (Tianli).

Apache Flink 1.11 is now feature-frozen, and the official version will be released soon. The stream-batch integrated data warehouse is a highlight of the new version. This article introduces the improvements made to the stream-batch integrated data warehouse in Apache Flink 1.11.

First, there is some good news! The Blink planner is now the default in the Table API/SQL.

In Apache Flink 1.11, stream computing is combined with the Hive batch processing data warehouse, which brings the real-time and exactly-once capabilities of Apache Flink (Flink) stream processing to offline data warehouses. In addition, Apache Flink 1.11 improves the Filesystem connector, which makes Flink easier to use.

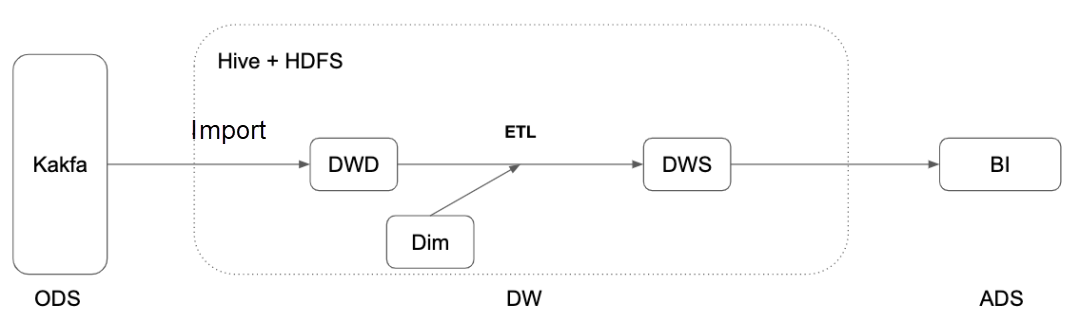

Traditional offline data warehouses are built on Hive and the Hadoop Distributed File System (HDFS). A Hive data warehouse offers mature and stable big data analytics capabilities. Together with scheduling tools and upstream and downstream tools, it forms a complete data processing and analytics platform:

The problems with this process are:

With the popularity of real-time computing, an increasing number of companies have started to use real-time data warehouses because offline data warehouses cannot meet their requirements. Based on Kafka and Flink Streaming, a real-time data warehouse runs the entire pipeline as stream computing jobs, with latency of seconds or even milliseconds.

However, a real-time data warehouse can retain historical data for only 3 to 15 days, and it cannot serve ad-hoc queries. If you build a Lambda architecture that runs offline and real-time pipelines side by side, you take on heavy burdens in maintenance, computing and storage costs, consistency, and duplicated development.

To solve the problems of offline data warehouses, Apache Flink 1.11 gives Hive data warehouses real-time capabilities. This improves the real-time performance of each stage without placing overly heavy burdens on the architecture.

How do you import real-time data into a Hive data warehouse? Do you use Flume, Spark Streaming, or Flink DataStream? The streaming file sink at the Table API/SQL layer is finally coming! Apache Flink 1.11 supports the streaming sink [2] of the Filesystem connector [1] and the Hive connector.

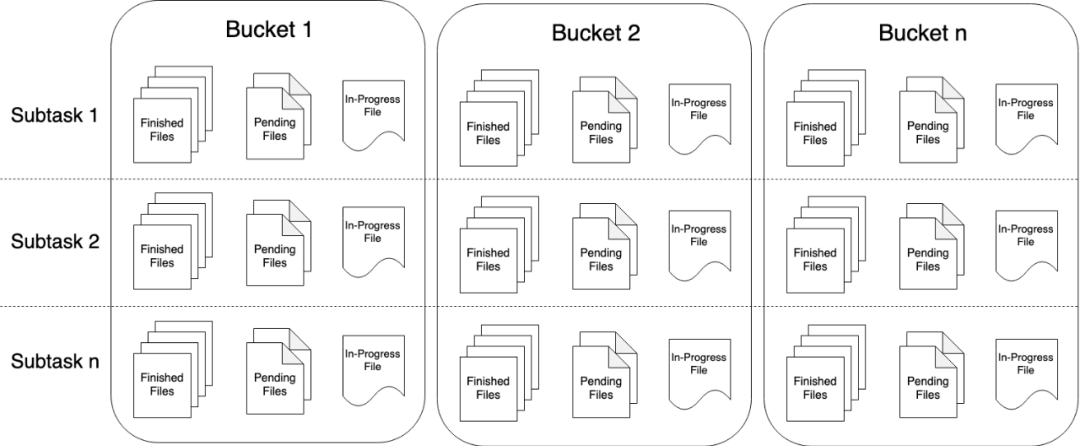

(Note: The concept of bucket in StreamingFileSink in this figure is equivalent to the concept of partition in the Table API/SQL.)

The streaming sink at the Table API/SQL layer has the following features:

It builds on StreamingFileSink and keeps its capabilities, such as exactly-once semantics and support for HDFS and S3, and it introduces a new mechanism: partition commit.

A reasonable data import into a data warehouse involves both the writing of data files and partition commit. When a partition finishes writing, Flink must notify the Hive Metastore that the partition is complete, or a SUCCESS file must be added to the corresponding folder. In Apache Flink 1.11, the partition commit mechanism lets you control the following:

- Trigger: when a partition is committed, for example according to the watermark and the time extracted from the partition.
- Policy: how a partition is committed. SUCCESS file and Metastore commit are supported. You can also extend commit implementations. For example, during commit, you can trigger a Hive analysis to generate statistics or merge small files.

For example:
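
As a rough illustration of the partition-time trigger, here is a minimal Python sketch. It is only a model of the rule described in this article, not Flink's implementation; the function names `extract_partition_time` and `should_commit` are hypothetical. It fills a timestamp pattern with partition values and then checks whether the watermark has passed the partition time plus the configured delay.

```python
from datetime import datetime, timedelta


def extract_partition_time(pattern: str, partition: dict) -> datetime:
    """Fill a pattern such as '$dt $hour:00:00' with partition values and parse it."""
    text = pattern
    # Replace longer keys first so that a short key cannot clobber a longer one.
    for key in sorted(partition, key=len, reverse=True):
        text = text.replace("$" + key, str(partition[key]))
    return datetime.strptime(text, "%Y-%m-%d %H:%M:%S")


def should_commit(watermark: datetime, partition_time: datetime, delay: timedelta) -> bool:
    """Commit once the watermark is greater than the partition time plus the delay."""
    return watermark > partition_time + delay


# Hypothetical partition values for illustration.
partition_time = extract_partition_time(
    "$dt $hour:00:00", {"dt": "2020-05-20", "hour": "12"}
)
ready = should_commit(
    watermark=datetime(2020, 5, 20, 13, 0, 1),
    partition_time=partition_time,
    delay=timedelta(hours=1),
)
```

In Flink itself, this rule is not written by hand. It is configured declaratively through table properties, as in the following SQL.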

```sql
-- Use the Hive DDL syntax with the Hive dialect.
SET table.sql-dialect=hive;

CREATE TABLE hive_table (
  user_id STRING,
  order_amount DOUBLE
) PARTITIONED BY (
  dt STRING,
  hour STRING
) STORED AS PARQUET TBLPROPERTIES (
  -- Determine when to commit a partition according to the time extracted from the partition and the watermark.
  'sink.partition-commit.trigger'='partition-time',
  -- Configure an hour-level partition time extraction policy. In this example, the dt field represents the day
  -- in yyyy-MM-dd format and hour is from 0 to 23. timestamp-pattern defines how to extract a complete
  -- timestamp from the two partition fields.
  'partition.time-extractor.timestamp-pattern'='$dt $hour:00:00',
  -- Configure the delay at the hour level. When the watermark is greater than the partition time plus one hour,
  -- the partition can be committed.
  'sink.partition-commit.delay'='1 h',
  -- The partition commit policy is to update the metastore (addPartition) first and then write the SUCCESS file.
  'sink.partition-commit.policy.kind'='metastore,success-file'
);

SET table.sql-dialect=default;

-- The connector options (WITH clause) are omitted in this example.
CREATE TABLE kafka_table (
  user_id STRING,
  order_amount DOUBLE,
  log_ts TIMESTAMP(3),
  WATERMARK FOR log_ts AS log_ts - INTERVAL '5' SECOND
);

-- Table hints can also be used to specify table properties dynamically [3].
INSERT INTO TABLE hive_table
SELECT user_id, order_amount, DATE_FORMAT(log_ts, 'yyyy-MM-dd'), DATE_FORMAT(log_ts, 'HH')
FROM kafka_table;
```

A large number of ETL jobs exist in the Hive data warehouse. These jobs are often run periodically with a scheduling tool. This causes two major problems:

For these offline ETL jobs, Apache Flink 1.11 provides real-time Hive streaming reading:

You can use a 10-minute partition policy. Flink's Hive streaming source and Hive streaming sink can greatly improve the real-time performance of a Hive data warehouse to the quasi-real-time minute level. Meanwhile, they also support full ad-hoc queries for a table to improve flexibility.
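
To picture what a streaming read over a partitioned table does, here is a minimal Python sketch. It is a simplified model under stated assumptions, not Flink's implementation: the helper `poll_new_partitions` is hypothetical, partitions are named by `yyyy-MM-dd` strings so that string order matches time order, and the start offset is assumed to be inclusive.

```python
def poll_new_partitions(available, start_offset, already_read):
    """Return unread partitions at or after start_offset, oldest first.

    `already_read` is updated in place so that the next poll skips them.
    """
    pending = sorted(
        p for p in available if p >= start_offset and p not in already_read
    )
    already_read.update(pending)
    return pending


# Hypothetical partition names for illustration.
seen = set()
first = poll_new_partitions(
    ["2020-05-19", "2020-05-20", "2020-05-21"], "2020-05-20", seen
)
# A later poll only picks up the partition that appeared in the meantime.
second = poll_new_partitions(
    ["2020-05-19", "2020-05-20", "2020-05-21", "2020-05-22"], "2020-05-20", seen
)
```

In Flink SQL, a streaming read is enabled through table options, as in the following query.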

```sql
SELECT * FROM hive_table
/*+ OPTIONS('streaming-source.enable'='true',
            'streaming-source.consume-start-offset'='2020-05-20') */;
```

After the integration of Flink and Hive was released, most users hoped to join Flink's real-time data with offline Hive tables. Therefore, Apache Flink 1.11 supports the temporal join [6] of real-time tables and Hive tables. Let's use an example from Flink's official documentation. Assume that Orders is a real-time table and LatestRates is a Hive table. You can perform the temporal join by using the following statement:

```sql
SELECT
  o.amount, o.currency, r.rate, o.amount * r.rate
FROM
  Orders AS o
  JOIN LatestRates FOR SYSTEM_TIME AS OF o.proctime AS r
  ON r.currency = o.currency;
```

Currently, the temporal join against Hive tables supports only processing time. Flink caches the data of the Hive table in memory and refreshes the cached data at regular intervals. You can specify the refresh interval by using lookup.join.cache.ttl. The default interval is one hour.

The lookup.join.cache.ttl parameter is configured in the properties of the Hive table, so each table can use a different value. In addition, the entire Hive table is loaded into memory, so this feature applies only to scenarios that involve small Hive tables.
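
The caching behavior described above can be sketched in Python as follows. This is a minimal model under stated assumptions, not Flink's code: the class `CachedLookupTable`, the function `join_order`, and the injectable `clock` are all hypothetical names introduced for illustration. The whole table is reloaded once the TTL has elapsed, and each incoming order is joined against whatever snapshot is cached at processing time.

```python
import time
from typing import Callable, Dict, Optional


class CachedLookupTable:
    """Keeps a full in-memory copy of a small table and reloads it after a TTL."""

    def __init__(self, load_all: Callable[[], Dict[str, float]], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._load_all = load_all
        self._ttl = ttl_seconds
        self._clock = clock
        self._rows: Dict[str, float] = {}
        self._loaded_at: Optional[float] = None

    def lookup(self, key: str) -> Optional[float]:
        now = self._clock()
        # Reload the entire table on first use or when the cache has expired.
        if self._loaded_at is None or now - self._loaded_at >= self._ttl:
            self._rows = dict(self._load_all())
            self._loaded_at = now
        return self._rows.get(key)


def join_order(order: dict, rates: CachedLookupTable) -> Optional[dict]:
    """Join one order with the currently cached rate (inner join semantics)."""
    rate = rates.lookup(order["currency"])
    if rate is None:
        return None  # no matching row, so the join emits nothing
    return {**order, "rate": rate, "converted": order["amount"] * rate}
```

Because every lookup hits the in-memory copy, a change in the Hive table becomes visible only after the next reload, which is the trade-off that the TTL controls.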

Flink on Hive users cannot use Data Definition Language (DDL) easily for the following reasons:

To solve the preceding two problems, we proposed FLIP-123 [7], which provides users with Hive syntax compatibility through the Hive dialect. The ultimate goal of this feature is to provide users with an experience similar to Hive CLI or Beeline. With this feature, you do not have to switch between Flink and Hive CLI. You can migrate some Hive scripts to Flink and run them in it.

In Apache Flink 1.11, the Hive dialect supports most common DDL operations, such as CREATE TABLE, ALTER TABLE, CHANGE COLUMN, REPLACE COLUMN, ADD PARTITION, and DROP PARTITION. To this end, we implemented an independent parser for the Hive dialect. Flink chooses the parser for SQL statements based on the dialect that you specify. You can specify the SQL dialect by using the table.sql-dialect parameter. The default value of this parameter is default, which represents Flink's native dialect. If this parameter is set to hive, the Hive dialect is used. If you use the SQL Client, you can set table.sql-dialect in the yaml file to specify the initial dialect of the session, or use the set command to change the dialect as needed without restarting the session.
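
The idea of choosing a parser per statement can be sketched with a toy Python model. Everything here is hypothetical and for illustration only (the `Session` class, the two stand-in parser functions, and the `PARSERS` table are not Flink APIs). The sketch only shows that the dialect is session state that a SET statement can change between statements.

```python
def parse_with_flink_parser(sql: str):
    # Stand-in for Flink's native parser.
    return ("flink-parser", sql)


def parse_with_hive_parser(sql: str):
    # Stand-in for the independent Hive dialect parser.
    return ("hive-parser", sql)


PARSERS = {"default": parse_with_flink_parser, "hive": parse_with_hive_parser}


class Session:
    """Toy session whose dialect can be switched without a restart."""

    def __init__(self, initial_dialect: str = "default"):
        self.config = {"table.sql-dialect": initial_dialect}

    def execute(self, statement: str):
        text = statement.strip().rstrip(";")
        if text.upper().startswith("SET "):
            key, _, value = text[4:].partition("=")
            self.config[key.strip()] = value.strip()
            return ("set", key.strip())
        dialect = self.config["table.sql-dialect"]
        if dialect not in PARSERS:
            raise ValueError(f"unknown dialect: {dialect}")
        return PARSERS[dialect](text)


session = Session()
before = session.execute("CREATE TABLE t (x INT)")
session.execute("SET table.sql-dialect=hive;")
after = session.execute("CREATE TABLE t (x INT) STORED AS PARQUET")
```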

For more information about the functions supported by the Hive dialect, see FLIP-123 or Flink's official documentation. The following are some design principles and notes for using this feature:

Apache Flink 1.10 supported vectorized reading of ORC (Hive 2+), but this support was very limited. Apache Flink 1.11 adds more support for vectorization:

Vectorization is supported for all versions of Parquet and ORC. This feature is enabled by default and can be disabled manually.

In Apache Flink 1.10, Flink's documentation listed the required Hive dependencies and recommended that you download them yourself. Because this is still a little troublesome, Apache Flink 1.11 provides built-in dependencies [10]:

- flink-sql-connector-hive-1.2.2_2.11-1.11.jar: the dependency of Hive 1.0.
- flink-sql-connector-hive-2.2.0_2.11-1.11.jar: the dependency of Hive 2.0 to 2.2.
- flink-sql-connector-hive-2.3.6_2.11-1.11.jar: the dependency of Hive 2.3.
- flink-sql-connector-hive-3.1.2_2.11-1.11.jar: the dependency of Hive 3.0.

Now, you only need to download a single package and set HADOOP_CLASSPATH to start Flink on Hive.

In addition to Hive-related features, Apache Flink 1.11 has also introduced a lot of enhancements for the stream-batch integration.

For a long time, the Filesystem connector of the Table API in Flink supported only the CSV format and did not support partitions. In some respects, the Filesystem connector could not handle common big data computing scenarios.

In Apache Flink 1.11, the entire Filesystem connector has been reworked [1]:

- Partitions are supported, along with INSERT OVERWRITE.
- Various formats are supported.

For example:

```sql
-- hour is quoted with backticks because HOUR is a keyword in Flink's default dialect.
CREATE TABLE fs_table (
  user_id STRING,
  order_amount DOUBLE,
  dt STRING,
  `hour` STRING
) PARTITIONED BY (dt, `hour`) WITH (
  'connector'='filesystem',
  'path'='...',
  'format'='parquet',
  'partition.time-extractor.timestamp-pattern'='$dt $hour:00:00',
  'sink.partition-commit.delay'='1 h',
  'sink.partition-commit.policy.kind'='success-file'
);

-- Works in both the stream environment and the batch environment.
INSERT INTO TABLE fs_table
SELECT user_id, order_amount, DATE_FORMAT(log_ts, 'yyyy-MM-dd'), DATE_FORMAT(log_ts, 'HH')
FROM kafka_table;

-- Query by partition.
SELECT * FROM fs_table WHERE dt='2020-05-20' AND `hour`='12';
```

Before Apache Flink 1.11, a Flink cluster in YARN per-job or session mode could scale out without limit, and you could use only the YARN queue to limit resource usage. However, traditional batch jobs are large, highly parallel jobs that run in stages on a limited amount of resources. Therefore, Apache Flink 1.11 has introduced Max Slot [11] to limit the resource usage of YARN applications.

slotmanager.number-of-slots.max

By using Max Slot, you can define the maximum number of slots allocated by a Flink cluster. This configuration option is used to limit the resource consumption for batch workloads. We do not recommend configuring this option for streaming jobs. If you do not have sufficient slots, streaming jobs may fail.
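
The difference between batch and streaming workloads under a slot cap can be sketched with a small Python model. This is a deliberate simplification with hypothetical function names, not Flink's scheduler: it assumes that batch tasks can wait and run in waves, while a streaming job needs all of its slots at the same time.

```python
def run_batch_in_waves(task_count: int, max_slots: int) -> list:
    """Return how many tasks run in each wave when at most max_slots run at once."""
    if max_slots <= 0:
        raise ValueError("max_slots must be positive")
    waves = []
    remaining = task_count
    while remaining > 0:
        running = min(remaining, max_slots)
        waves.append(running)
        remaining -= running
    return waves


def can_start_streaming_job(required_slots: int, max_slots: int) -> bool:
    """A streaming job can start only if all of its slots fit under the cap."""
    return required_slots <= max_slots


# Hypothetical numbers: 10 tasks with a cap of 4 slots.
batch_waves = run_batch_in_waves(10, 4)
streaming_ok = can_start_streaming_job(10, 4)
```

In this model the batch job still finishes, only more slowly, whereas the streaming job cannot start at all, which is why the cap is not recommended for streaming jobs.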

Apache Flink 1.11 is a major release, in which the Flink community has introduced a lot of features and improvements. The biggest goal of Flink is to help businesses build a stream-batch integrated data warehouse that is comprehensive, smooth, and high-performance. I hope you can get more involved in our community activities and share your questions and ideas with us. You are also welcome to participate in our discussions and development to help us make Flink even better.

[1] https://cwiki.apache.org/confluence/display/FLINK/FLIP-115%3A+Filesystem+connector+in+Table

[2] https://issues.apache.org/jira/browse/FLINK-14255

[3] https://cwiki.apache.org/confluence/display/FLINK/FLIP-113%3A+Supports+Dynamic+Table+Options+for+Flink+SQL

[4] https://issues.apache.org/jira/browse/FLINK-17434

[5] https://issues.apache.org/jira/browse/FLINK-17435

[6] https://issues.apache.org/jira/browse/FLINK-17387

[7] https://cwiki.apache.org/confluence/display/FLINK/FLIP-123%3A+DDL+and+DML+compatibility+for+Hive+connector

[8] https://issues.apache.org/jira/browse/FLINK-14802

[9] https://issues.apache.org/jira/browse/FLINK-16450

[10] https://issues.apache.org/jira/browse/FLINK-16455

[11] https://issues.apache.org/jira/browse/FLINK-16605

Li Jinsong (Zhixin) is an Apache Flink committer and Alibaba Technical Expert who has long been committed to stream-batch integrated computing and data warehouse architecture.

Li Rui (Tianli) is an Apache Hive PMC member and Alibaba Technical Expert who was mainly involved in open-source projects, such as Hive, HDFS, and Spark, at Intel and IBM before joining Alibaba.
