By Yu Teng, R&D Director at Dell EMC. Compiled by Haikai Zhao, Dell EMC intern.

This article introduces the evolution of big data architectures, Pravega and its advanced features, and an Internet of Vehicles (IoV) scenario. It focuses on why Dell EMC developed Pravega, the challenges Pravega tackles for big data processing platforms, and the benefits of combining Pravega with Flink.

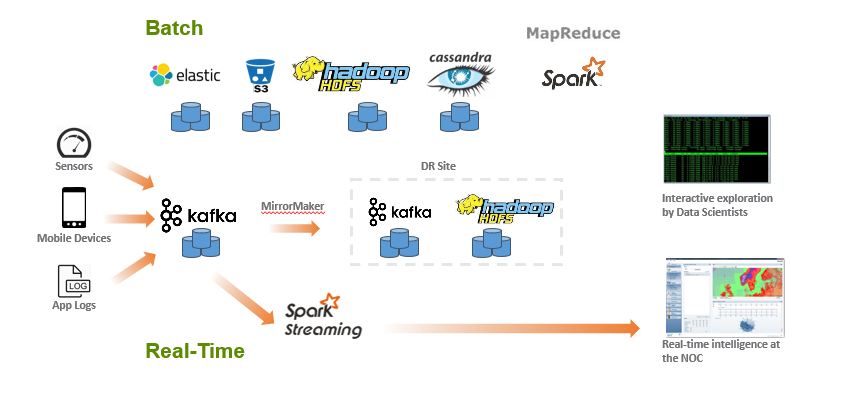

Effectively extracting and providing data is crucial to the success of big data architecture. Data extraction requires two kinds of policies due to differences in processing speed and frequency. The preceding figure shows a typical Lambda architecture, which divides the big data architecture into two separate sets of computing infrastructure: batch processing and real-time stream processing.

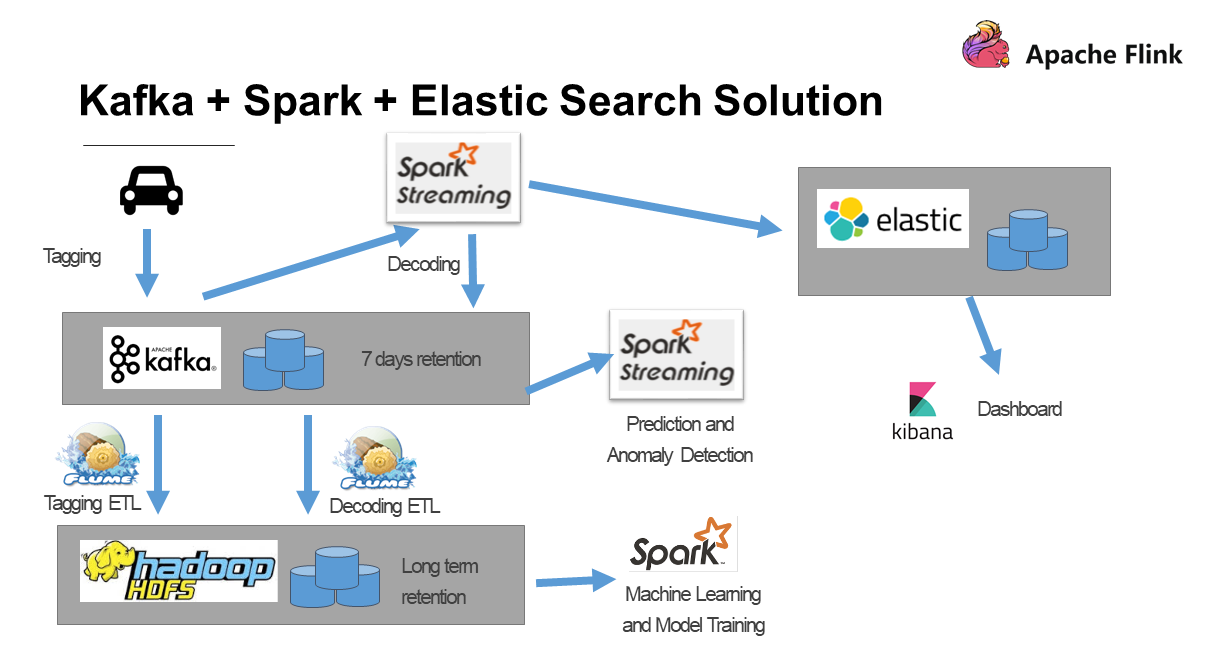

For real-time processing, data from sensors, mobile devices, or application logs are usually written to a message queuing system, such as Kafka. Message queuing provides temporary data buffers for stream processing applications. Spark Streaming reads the data from Kafka for real-time stream computing, but Kafka does not store historical data permanently. If your business logic is to analyze both historical data and real-time data, this pipeline cannot work. To compensate for this, we need to develop an additional pipeline for batch processing. This is the Batch part in the figure.

The batch processing pipeline aggregates many open-source big data components, such as ElasticSearch, Cassandra, Spark, and the Hadoop Distributed File System (HDFS). This pipeline uses Spark to implement large-scale MapReduce operations. The results are more accurate because all historical data is computed and analyzed, but the latency is relatively high.

This classic big data architecture has the following three problems:

Let's take a look at some features of streaming storage before we introduce Pravega. Mixed storage is required to obtain a big data architecture that unifies stream processing and batch processing.

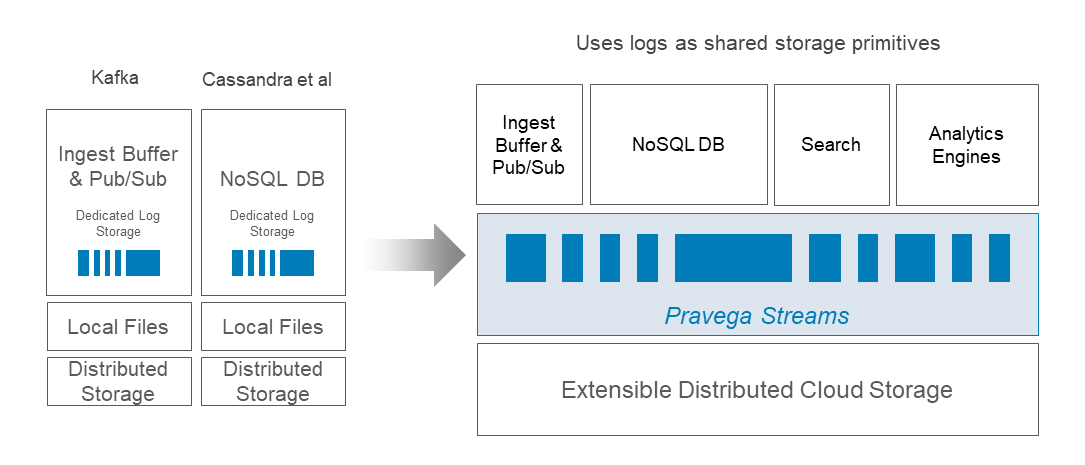

Distributed storage components such as Kafka and Cassandra follow the same layered pattern from top to bottom: dedicated log data storage, then local file storage, then distributed storage on clusters.

The Pravega team tried to reconstruct the streaming storage architecture by introducing Pravega Stream (Stream) as the basic unit of streaming storage. A Stream is a named, durable, append-only, and unbounded sequence of bytes.

As shown in the preceding figure, the bottom layer of the storage architecture is scalable distributed cloud storage. At the middle layer, log data is stored as Streams, which serve as shared storage primitives. On top of the Streams, different features are provided, such as message queuing, NoSQL, full-text search of streaming data, and Flink-based real-time and batch analytics. In other words, Stream primitives avoid the data redundancy that the existing big data architecture incurs when it copies the original data between multiple open-source storage and search products. Stream primitives enable a unified data lake at the storage layer.

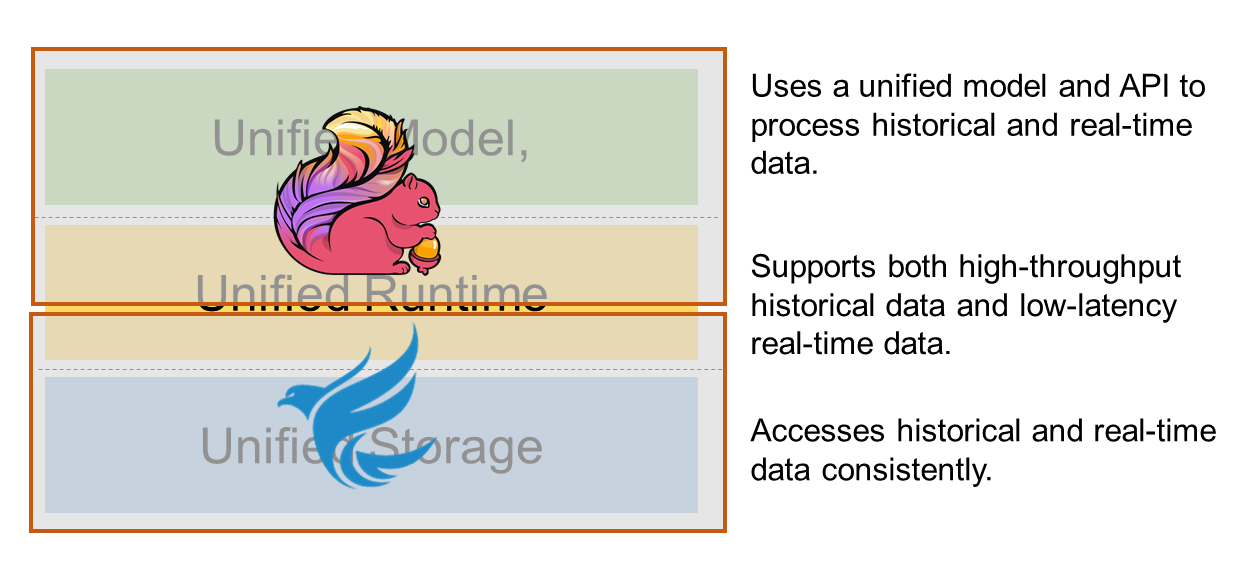

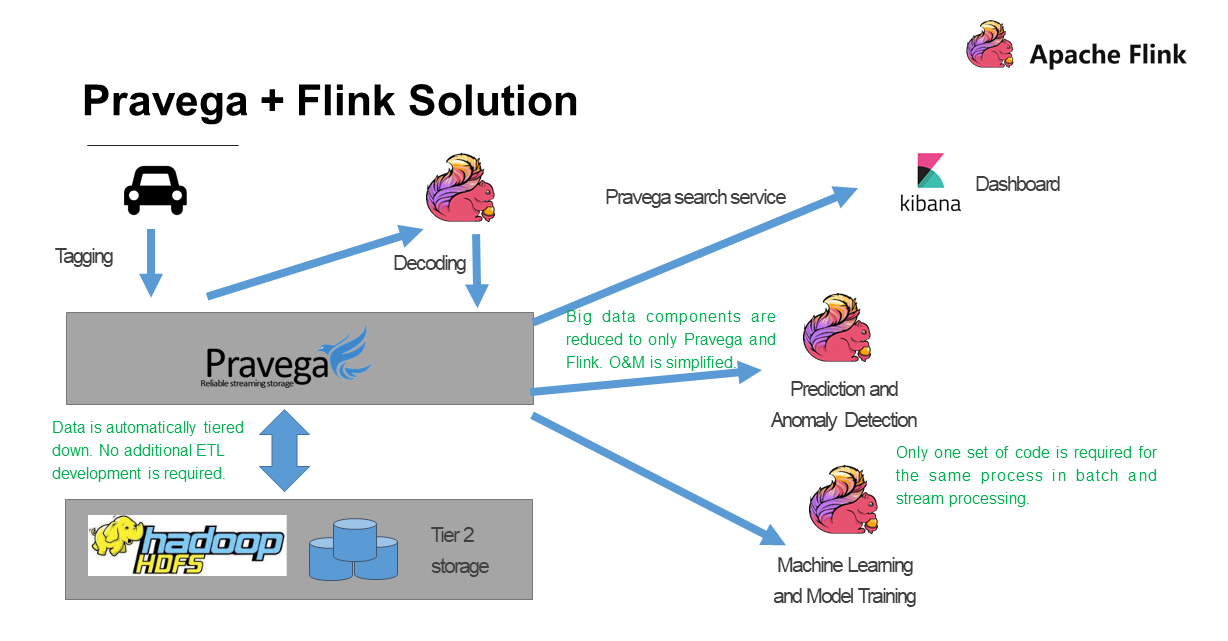

Our big data architecture uses Flink as the computing engine and unifies batch processing and stream processing through a unified model and APIs. Pravega, used as the storage engine, provides a unified abstraction for streaming storage, which allows consistent access to historical data and real-time data. This unification creates a closed loop from storage to computing, allowing you to process both high-throughput historical data and low-latency real-time data. The Pravega team also developed the Flink-Pravega Connector to provide exactly-once semantics for the entire pipeline of computing and storage.

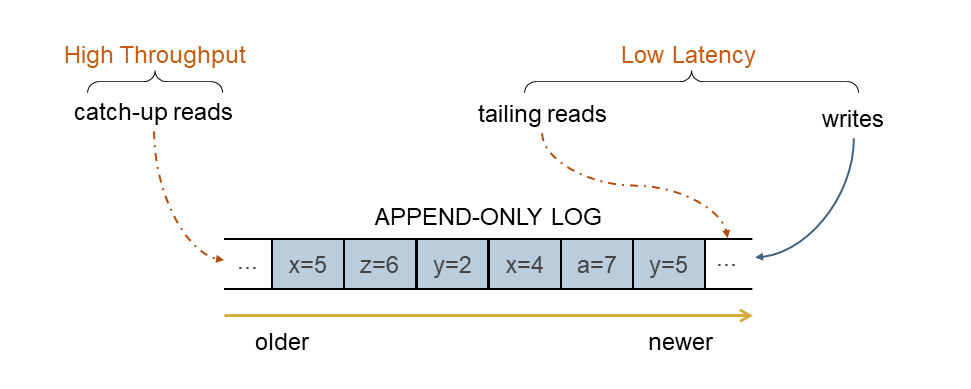

Pravega is designed to provide real-time streaming storage. Applications store data permanently in Pravega, where Streams can store an unbounded volume of data for an unlimited period. The same Reader API supports both tail reads and catch-up reads, which effectively unifies offline and real-time computing.

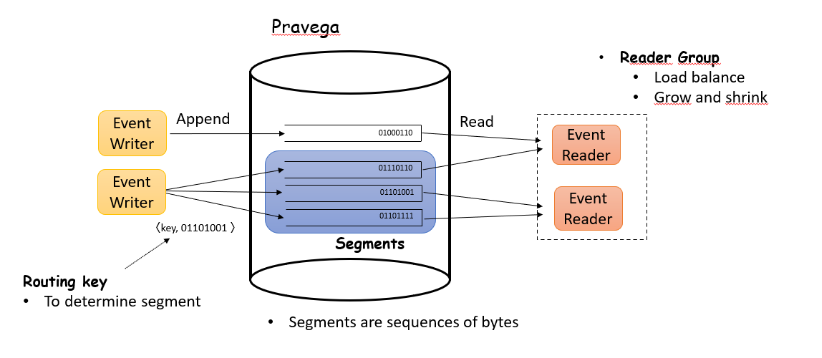

Let's introduce the basic concepts of Pravega based on the preceding figure:

Pravega organizes data into Streams. A Stream is a named, durable, append-only, and unbounded sequence of bytes.

A Stream is split into one or more Stream Segments. A Stream Segment is a data shard in a Stream, which is an append-only data block. Pravega implements auto scaling based on Stream Segments. The number of Stream Segments is automatically and continuously updated based on data traffic.

Pravega's client API allows applications to write data to and read data from Pravega in the form of Events. An Event is represented as a collection of bytes within a Stream. For example, a temperature record that an IoT sensor writes to Pravega is an Event.

Every Event has a Routing Key. A Routing Key is a string used by developers to group similar Events. Events associated with the same Routing Key are written to the same Stream Segment. Pravega provides read and write semantics based on Routing Keys.
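To make the Routing Key guarantee concrete, here is a minimal toy sketch, not Pravega's client API. It assumes that each Routing Key is hashed to a position in a `[0, 1)` key space and that each Stream Segment owns one contiguous range of that space. The function names `key_position` and `segment_for`, the SHA-256 hash, and the `boundaries` list are all hypothetical choices made for illustration.

```python
import hashlib
from typing import Sequence


def key_position(routing_key: str) -> float:
    """Map a routing key to a stable position in [0.0, 1.0)."""
    digest = hashlib.sha256(routing_key.encode("utf-8")).digest()
    # Keep 53 bits so the division below is exact and always below 1.0.
    return (int.from_bytes(digest[:8], "big") >> 11) / 2**53


def segment_for(routing_key: str, boundaries: Sequence[float]) -> int:
    """Return the index of the segment whose key range contains the key.

    boundaries lists the upper bound of each segment's range in ascending
    order; the last entry must be 1.0.
    """
    position = key_position(routing_key)
    for index, upper in enumerate(boundaries):
        if position < upper:
            return index
    raise ValueError("boundaries must end at 1.0")


# Four equally sized segments (hypothetical layout).
boundaries = [0.25, 0.5, 0.75, 1.0]
first = segment_for("sensor-17", boundaries)
second = segment_for("sensor-17", boundaries)
```

Because the position depends only on the key, `first` and `second` always name the same segment, which is what lets per-key ordering hold in this model.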

A Reader Group is used to load balance reads. You can dynamically increase or decrease the number of Readers in a Reader Group to change the concurrency of data reads. For more information, see the official Pravega documentation.
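The effect of changing the number of Readers can be pictured with a small toy model. The sketch below is not Pravega code: it assumes each Stream Segment is read by exactly one Reader at a time and uses a simple round-robin assignment, which is a choice made here for illustration. The function `assign_segments` and the reader and segment names are hypothetical.

```python
def assign_segments(segments, readers):
    """Spread segments across readers round-robin.

    Every segment ends up with exactly one reader, so adding readers
    raises read concurrency up to the number of segments.
    """
    if not readers:
        raise ValueError("a reader group needs at least one reader")
    assignment = {reader: [] for reader in readers}
    for i, segment in enumerate(segments):
        assignment[readers[i % len(readers)]].append(segment)
    return assignment


segments = ["seg-0", "seg-1", "seg-2", "seg-3"]
two_readers = assign_segments(segments, ["reader-a", "reader-b"])
four_readers = assign_segments(
    segments, ["reader-a", "reader-b", "reader-c", "reader-d"]
)
```

With two Readers each one handles two segments; with four Readers each handles one, so the same data is read with twice the concurrency.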

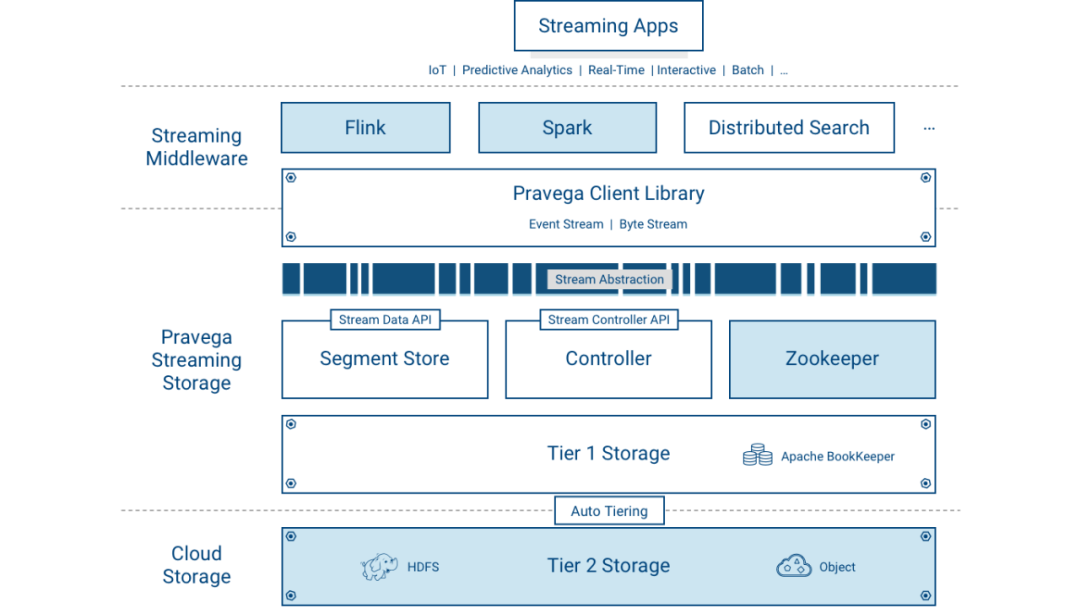

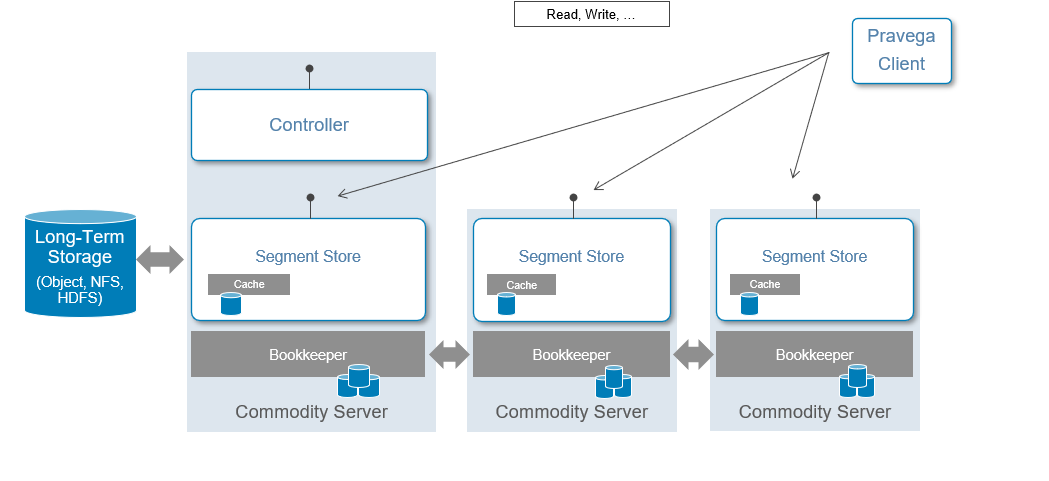

At the control plane, Controller instances act as the primary nodes of a Pravega cluster and manage the Segment Stores at the data plane. The Controller provides the functionality to create, update, and delete Streams. It also monitors the cluster status in real time, acquires streaming data, and gathers metrics. A cluster usually has three Controller instances to ensure high availability.

At the data plane, the Segment Store provides the API for reading data from and writing data to a Stream. In Pravega, data is stored in tiers.

Tier 1 Storage is typically deployed within the Pravega cluster and used for low-latency, short-lived hot data. Each Segment Store has a Cache to speed up data reading. Pravega uses Apache BookKeeper to implement Tier 1 Storage and provide low-latency log storage.

Tier 2 Storage provides long-term storage. It is normally deployed outside the Pravega cluster and used for streaming data (cold data). Pravega uses HDFS, Network File System (NFS), and enterprise-grade storage products, such as Dell EMC's Isilon and Elastic Cloud Storage (ECS), to implement Tier 2 Storage.

When data is written to Tier 1 Storage, the data is stored in all relevant Segment Stores through BookKeeper, which ensures that the data is written successfully.

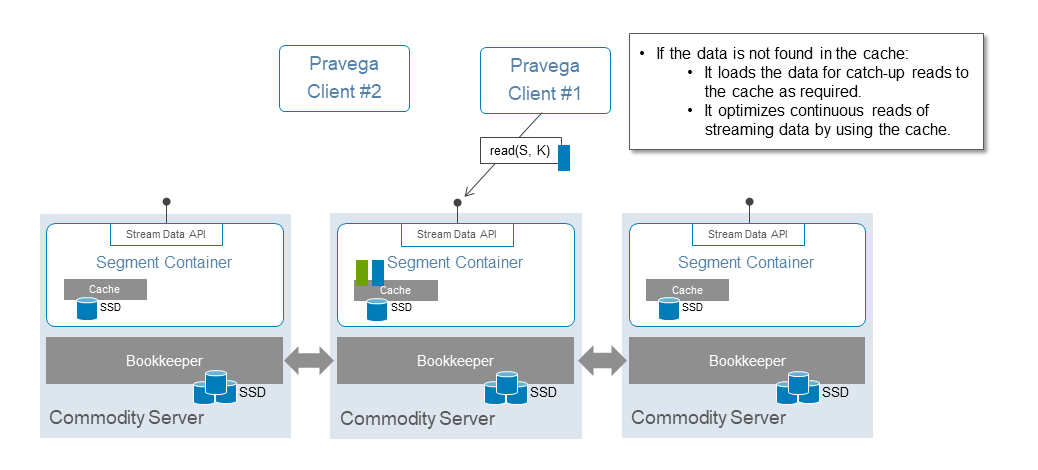

Read/write splitting helps optimize read and write performance: reads are served only from the Cache in Tier 1 and from Tier 2 Storage, never from BookKeeper in Tier 1.

When you initiate a request to read data from Pravega, Pravega will decide whether to perform a low-latency tail read from the Cache in Tier 1 or a high-throughput catch-up read from Tier 2 Storage, such as object storage or NFS. If the data is not stored in the Cache, data is loaded to the Cache as needed. Read operations are transparent to you.
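The read decision described above can be sketched as a small cache-first lookup. This is a toy model under stated assumptions, not Pravega's implementation: a dictionary stands in for Tier 2 Storage, an LRU-ordered dictionary stands in for the Cache, and the class `SegmentReader` with its `warm` and `read` methods is hypothetical. The write path through BookKeeper is deliberately left out.

```python
from collections import OrderedDict


class SegmentReader:
    """Toy read path: serve from an LRU cache, fall back to long-term storage."""

    def __init__(self, tier2, cache_capacity=2):
        self.tier2 = tier2              # offset -> bytes, stands in for Tier 2
        self.cache = OrderedDict()      # offset -> bytes, stands in for the Cache
        self.cache_capacity = cache_capacity

    def warm(self, offset, data):
        """Place data in the cache, evicting the least recently used entry."""
        self.cache[offset] = data
        self.cache.move_to_end(offset)
        while len(self.cache) > self.cache_capacity:
            self.cache.popitem(last=False)

    def read(self, offset):
        """Return (data, source); the caller never chooses the tier."""
        if offset in self.cache:
            self.cache.move_to_end(offset)
            return self.cache[offset], "cache"
        data = self.tier2[offset]       # catch-up read from long-term storage
        self.warm(offset, data)         # load into the cache as needed
        return data, "tier2"


tier2 = {0: b"old-0", 1: b"old-1", 2: b"new-2"}
reader = SegmentReader(tier2)
reader.warm(2, tier2[2])                # pretend offset 2 was just written
tail = reader.read(2)
catch_up = reader.read(0)
repeated = reader.read(0)
```

In this model the tail read at offset 2 is served from the cache, the first read at offset 0 goes to long-term storage, and the repeated read at offset 0 is then served from the cache.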

As long as the cluster has no failures, BookKeeper in Tier 1 is only written to and never read from.

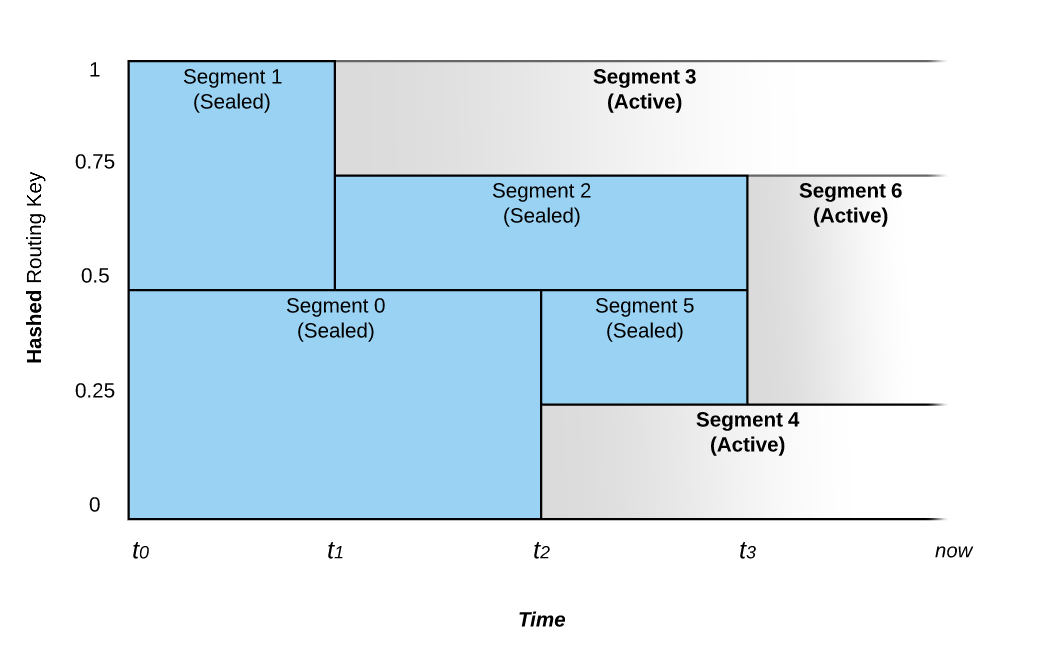

The number of Stream Segments in a Stream can automatically grow and shrink over time based on the I/O load it receives. This feature is known as auto scaling. Let's take the preceding figure as an example:
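Independently of the figure, the grow-and-shrink behavior can also be pictured in code. The following is a minimal sketch under stated assumptions, not Pravega's scaling algorithm: each Stream Segment is modeled as a `(low, high)` slice of a `[0, 1)` key space, a hot segment is split in half, and two adjacent cold segments are merged. The functions `split`, `merge`, and `scale_once`, and the thresholds `split_above` and `merge_below`, are hypothetical.

```python
def split(segments, index):
    """Replace one segment with two that each cover half of its key range."""
    low, high = segments[index]
    mid = (low + high) / 2
    return segments[:index] + [(low, mid), (mid, high)] + segments[index + 1:]


def merge(segments, index):
    """Replace segments index and index + 1 with one covering both ranges."""
    low, _ = segments[index]
    _, high = segments[index + 1]
    return segments[:index] + [(low, high)] + segments[index + 2:]


def scale_once(segments, rates, split_above, merge_below):
    """Apply at most one scaling step based on per-segment event rates."""
    for i, rate in enumerate(rates):
        if rate > split_above:
            return split(segments, i)
    for i in range(len(segments) - 1):
        if rates[i] < merge_below and rates[i + 1] < merge_below:
            return merge(segments, i)
    return segments


# One segment under heavy load is split, then the idle halves are merged.
busy = scale_once([(0.0, 1.0)], [500], split_above=100, merge_below=10)
quiet = scale_once(busy, [1, 2], split_above=100, merge_below=10)
```

Here `busy` holds two segments covering `[0, 0.5)` and `[0.5, 1)`, and `quiet` is back to a single segment covering the whole key space.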

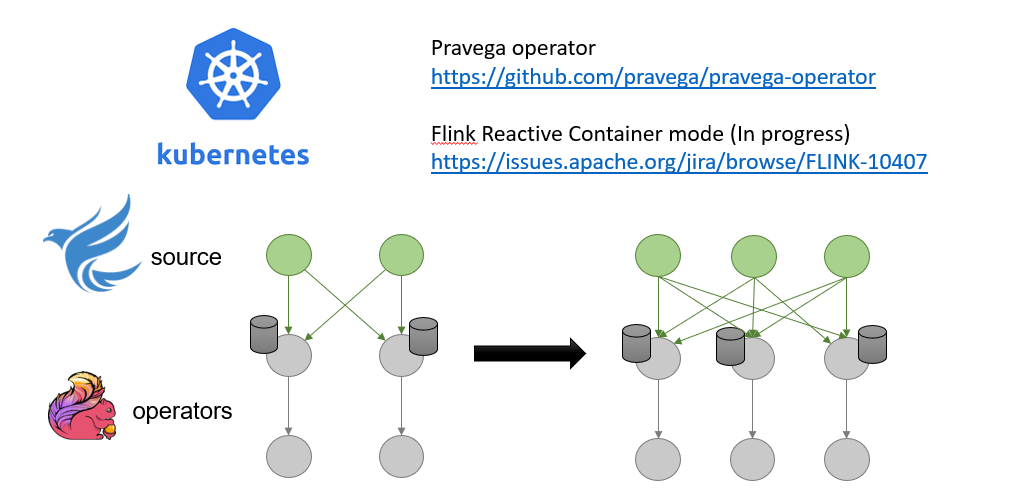

Pravega uses a Kubernetes Operator to deploy the components of a cluster as stateful applications, which makes auto scaling of applications more flexible and convenient. The Pravega team is also working with Ververica on Kubernetes pod-level auto scaling in Pravega and on auto scaling in Flink by rescaling the number of tasks.

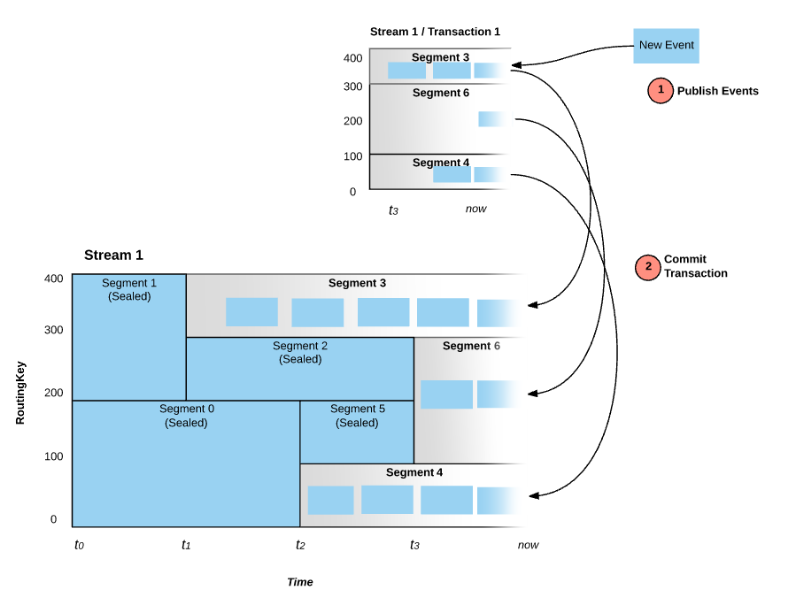

Pravega also supports transactional writes. Before a transaction is committed, data is written to different Transaction Segments based on Routing Keys. At this time, Transaction Segments are invisible to the Reader. After a transaction is committed, Transaction Segments are appended respectively to the tail of Stream Segments. At this time, Transaction Segments are visible to the Reader. The support for writing transactions is also the key to implementing the end-to-end exactly-once semantics with Flink.
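The visibility rule for transactional writes can be illustrated with a toy model. This sketch is not the Pravega client API: the classes `Stream` and `Transaction`, their methods, and the CRC32-based segment choice are hypothetical and only mirror the behavior described above, where events are buffered per segment and appended to the Stream Segments on commit.

```python
import zlib


class Stream:
    """Toy stream with a fixed number of append-only segments."""

    def __init__(self, segment_count):
        self.segments = [[] for _ in range(segment_count)]

    def segment_index(self, routing_key):
        # Stable hash so the same key always maps to the same segment.
        return zlib.crc32(routing_key.encode("utf-8")) % len(self.segments)

    def begin(self):
        return Transaction(self)

    def read_all(self):
        """What a reader can see: committed events only."""
        return [list(segment) for segment in self.segments]


class Transaction:
    """Buffers events in per-segment transaction segments until commit."""

    def __init__(self, stream):
        self.stream = stream
        self.buffers = [[] for _ in stream.segments]
        self.open = True

    def write(self, routing_key, event):
        if not self.open:
            raise RuntimeError("transaction is closed")
        self.buffers[self.stream.segment_index(routing_key)].append(event)

    def commit(self):
        # Append each transaction segment to the tail of its stream segment.
        for segment, buffer in zip(self.stream.segments, self.buffers):
            segment.extend(buffer)
        self.open = False

    def abort(self):
        self.buffers = [[] for _ in self.stream.segments]
        self.open = False


stream = Stream(segment_count=2)
txn = stream.begin()
txn.write("car-1", "speed=80")
txn.write("car-1", "speed=82")
before_commit = stream.read_all()
txn.commit()
after_commit = stream.read_all()
```

Before the commit a reader sees nothing; after it, both events appear in order in the segment that owns `car-1`. An exactly-once sink could, for example, commit such a transaction only when a checkpoint completes.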

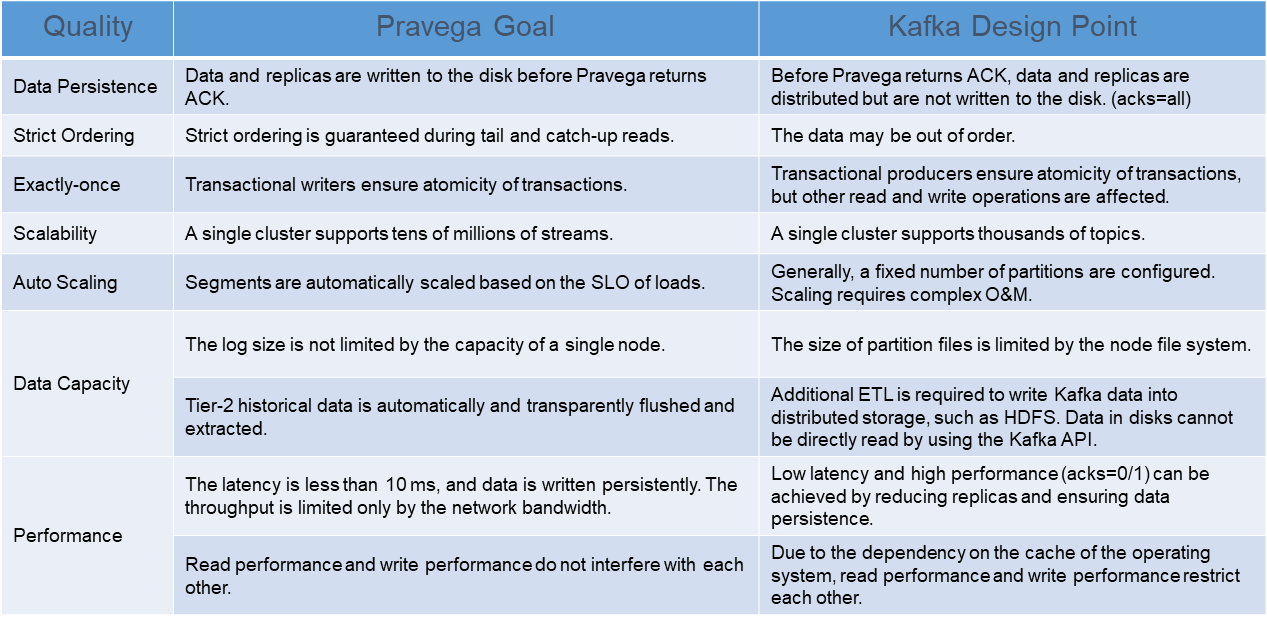

First, the key difference lies in the positioning of Kafka and Pravega; the former acts as a message queuing system, whereas the latter serves as a storage engine. Pravega focuses more on storage characteristics such as dynamic scaling, security, and integrity.

In stream processing, data should be deemed continuous and infinite. As message-oriented middleware based on the local file system, Kafka appends a published message to the end of the log file and tracks its position (the offset mechanism). With this method, Kafka simulates infinite data streams but is inevitably subject to the upper limit on file descriptors and the disk capacity of the local file system. Therefore, it cannot guarantee unbounded streams.

The comparison between Pravega and Kafka is detailed in the figure.

To facilitate the use with Flink, we provide the Pravega-Flink Connector. The Pravega team also plans to contribute this connector to the Flink community. The connector has the following features:

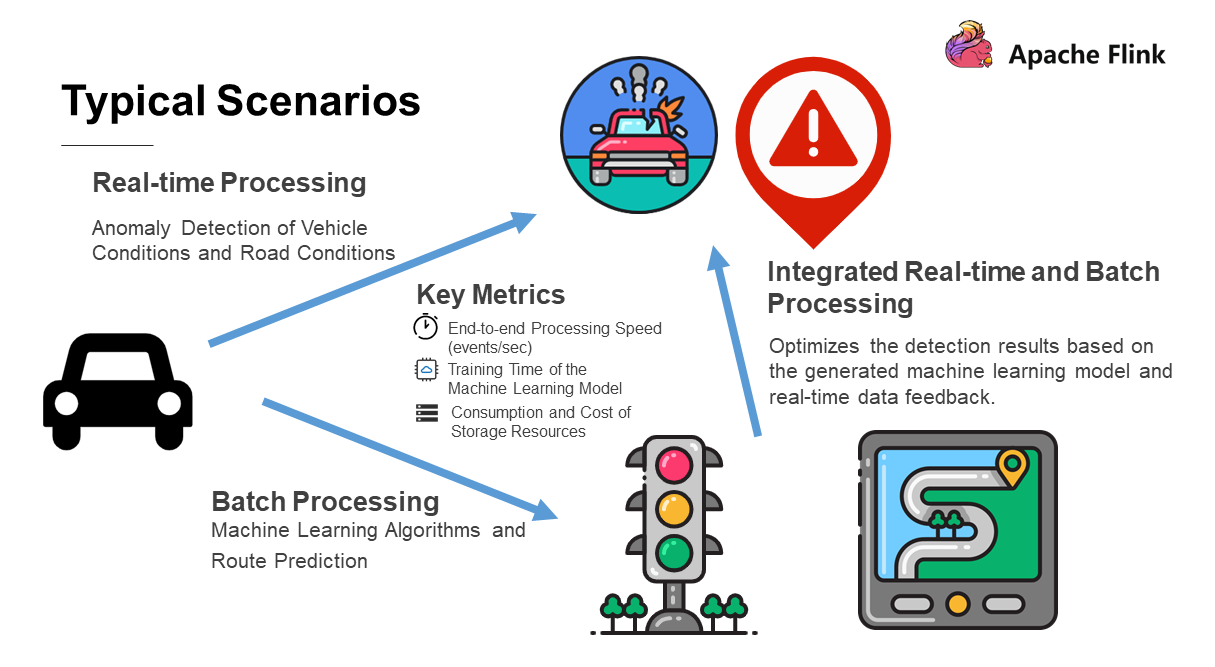

Let's take connected autonomous vehicles that can generate petabyte-level data as an example. This scenario has the following needs:

The key indicators that concern customers are:

Here is a comparison of solutions before and after the introduction of Pravega.

Pravega greatly simplifies the big data architecture:

Flink has become a shining star among stream computing engines, but there is still a gap in the streaming storage field. Pravega is designed to fill this gap for the big data architecture. "All problems in the computer field can be solved by adding an extra middle layer." Essentially, Pravega acts as a decoupling layer between the computing engine and the underlying storage and aims to resolve the challenges for the new generation of big data platforms at the storage layer.
