A data warehouse service is a fundamental requirement for a company whose data volume has grown to a certain magnitude. A data warehouse is also an essential part of data intelligence. The fast acquisition of data feedback not only helps improve the product quality and user experience but it also enables scientific decision making for companies. This makes real-time access to data particularly important.

Currently, most enterprises build one offline data warehouse and one real-time data warehouse. They use the real-time data warehouse for businesses that require low latency and the offline data warehouse for complex businesses. In this case, the architecture requires many systems and computing frameworks and therefore is rather complex. To maintain such the architecture, enterprises must hire a wealth of talent, resulting in high labor costs. In addition, it is difficult to locate problems in the architecture, and end-users are required to learn multiple syntaxes. By analyzing the current data warehouse architecture, this article explores whether offline and real-time data warehouses can be integrated and whether Flink's unified architecture can resolve most issues.

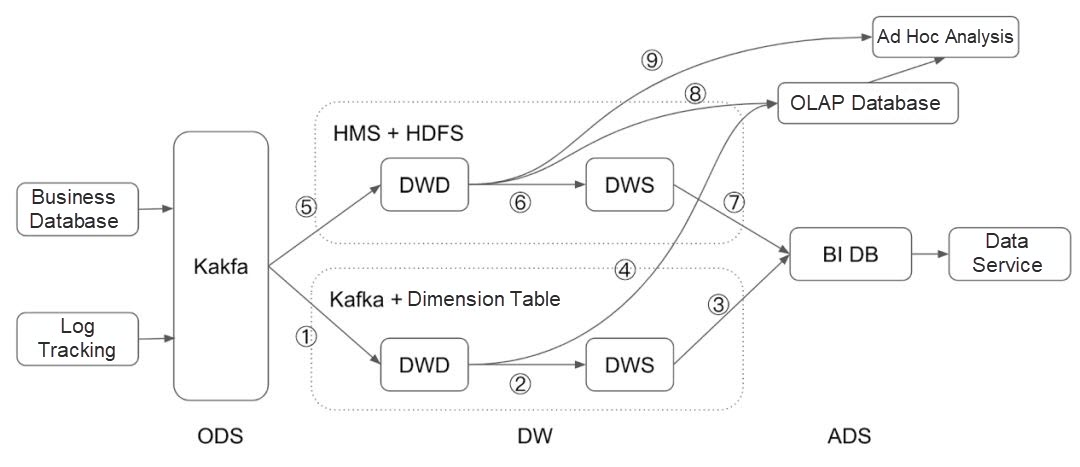

A data warehouse can be divided into the operational data store (ODS) layer, the data warehouse (DW) layer, and the application data service (ADS) layer.

The ODS layer stores raw data from logs or business databases. In conventional offline data warehouses, this layer may periodically synchronize raw data to data warehouses by using the Change Data Capture (CDC) tool. You can use a set of unified Kafka services to assume the role of the ODS layer to warehouse data in near real-time and unify real-time and offline data warehouses at this layer.

The DW layer is generally divided into the following layers:

Different from the DWS layer, this layer stores the final data and directly provides data services for users without re-computing.

The data services are mainly provided in the following pipelines:

Lambda architectures remain the most popular data warehouse architectures. Despite their complexity, Lambda architectures are most flexible for businesses because they can be used for the required business scenarios.

Lambda Architecture Provides Two Pipelines

The preceding figure indicates data conversion steps 1 to 9 during big data computing. This article will analyze these data conversions and explore the role Flink can play in this process.

Let's start with metadata management. In offline data warehouses, the HMS manages the metadata but Kafka cannot manage metadata alone. We recommend two practices.

You can create the Confluent Schema Registry service, to obtain the schema information of a table by using the URL of the Confluent. For a table with hundreds of fields, this service frees you from writing Flink jobs. Flink is working on integrating the schema's inference feature with Confluent Schema Registry. However, in this method, you still have to create tables and enter the corresponding URL of the Confluent.

Currently, Flink provides built-in HiveCatalog, allowing you to directly integrate Kafka tables into the HMS and allowing users to directly use these tables in SQL. To allow you to flexibly specify configurations in some start offset scenarios of Kafka, Flink currently provides measures such as LIKE[1] and Table Hints[2].

In Flink, HiveCatalog is used in both offline and real-time data warehouses.

use catalog my_hive;

-- build streaming database and tables;

create database stream_db;

use stream_db;

create table order_table (

id long,

amount double,

user_id long,

status string,

ts timestamp,

⋯ -- 可能还有几十个字段

ts_day string,

ts_hour string

) with (

'connector.type' = 'kafka',

⋯ -- Kafka table相关配置

);

-- build batch database and tables;

create database batch_db;

use batch_db;

create table order_table like stream_db.order_table (excluding options)

partitioned by (ts_day, ts_hour)

with (

'connector.type' = 'hive',

⋯ -- Hive table相关配置

);When HiveCatalog is used, batches and streams can be fully reused for subsequent processing, delivering the same user experience.

Computing steps :one: and :five: indicate real-time data warehousing and offline data warehousing, respectively. Recently, offline data warehousing with near real-time performance has become a common practice in data warehouses. Flink's data warehousing feature brings offline data closer to real-time.

Previously, most data was imported by using DataStream and StreamingFileSink. However, Apache ORC was not supported and HMS cannot be updated.

After Flink streaming was integrated with Hive, Hive's streaming sink[3] was provided, facilitating the use of SQL statements. You can use built-in functions and user-defined functions (UDFs) in SQL statements and reuse streams and batches to execute stream processing jobs and batch processing jobs concurrently.

insert into [stream_db.|batch_db.]order_table select ⋯ from log_table;Computing steps :two: and :six: indicate intermediate data processing for the real-time data warehouse and the offline data warehouse, respectively. This process involves the following types of computations:

Different from real-time processing, batch processing involves only the dimension table data generated at a certain point in time. In contrast, streaming jobs run continuously and focus on dynamic dimension tables instead of static data only.

In addition, to improve the efficiency of the join operation, a streaming job usually joins a database table instead of only a Hive table.

Example:

-- stream 维表

use stream_db;

create table user_info (

user_id long,

age int,

address,

primary key(user_id)

) with (

'connector.type' = 'jdbc',

...

);

-- 将离线数仓的维表导入实时数仓中

insert into user_info select * from batch_db.user_info;

-- 维表Join,SQL批流复用

insert into order_with_user_age select * from order_table join user_info for system_time as of order_table.proctime on user_info.user_id = user_info.user_id;In a real-time data warehouse, you must periodically schedule and update dimension tables to a real-time dimension table database. Therefore, we can ask: Can we directly join Hive dimension tables in an offline data warehouse? Currently, the community is also developing Hive dimension tables. The challenges faced by the community are:

Example:

select age, avg(amount) from order_with_user_age group by age;A simple SQL statement with an aggregate function is executed differently during stream processing and batch processing.

The greatest difference is that a streaming job is a dynamic table[4] and outputs constantly changing results. Simply put, during stream processing, the output data volume of a dynamic table is driven by the input data volume. In contrast, during batch processing, a dynamic table is output only after all the input is obtained, and therefore its results change dynamically:

After stateful computing is performed, the data output is:

Example:

-- batch:计算完成后,一次性输出到mysql中,同key只有一个数据

-- streaming:mysql里面的数据不断更新,不断变化

insert into mysql_table select age, avg(amount) from order_with_user_age group by age;

-- batch: 同key只有一个数据,append即可

insert into hive_table select age, avg(amount) from order_with_user_age group by age;

-- streaming: kafka里面的数据不断append,并且多出一列,来表示这是upsert的消息,后续的Flink消费会自动做出机制来处理upsert

insert into kafka_table select age, avg(amount) from order_with_user_age group by age;An offline data warehouse can perform computing step :nine: and can perform ad hoc queries on DWD or DWS data, allowing data analysts to query data flexibly.

Currently, one of the major weaknesses of real-time data warehouses is the lack of support for ad hoc queries because real-time data warehouses do not store historical data. Kafka may be able to store data for more than three days, but this leads to high storage costs and low query efficiency.

We have the idea of providing the following unified sink components for batch processing and stream processing in online analytical processing (OLAP) databases:

This article first described existing Lambda architectures and then analyzed the capabilities of Flink's one-stack data warehouse and computing solution. Some of the new features described in this article are still undergoing rapid iteration. With continuous exploration and practice, we hope to gradually promote integrated computing. We hope that the data warehouse architectures of the future will unify batch and real-time processing, providing a unified user experience:

[1] https://cwiki.apache.org/confluence/display/FLINK/FLIP-110%3A+Support+LIKE+clause+in+CREATE+TABLE

[2] https://cwiki.apache.org/confluence/display/FLINK/FLIP-113%3A+Supports+Table+Hints

[3] https://cwiki.apache.org/confluence/display/FLINK/FLIP-115%3A+Filesystem+connector+in+Table

[4] https://ci.apache.org/projects/flink/flink-docs-master/dev/table/streaming/dynamic_tables.html

[5] https://cwiki.apache.org/confluence/display/FLINK/FLIP-105%3A+Support+to+Interpret+and+Emit+Changelog+in+Flink+SQL

Demo: How to Build Streaming Applications Based on Flink SQL

152 posts | 44 followers

FollowApache Flink Community China - September 27, 2020

Apache Flink Community China - February 19, 2021

Apache Flink Community China - July 28, 2020

Apache Flink Community China - June 8, 2021

Alibaba Cloud Data Intelligence - August 8, 2024

Apache Flink Community China - September 27, 2020

152 posts | 44 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn MoreMore Posts by Apache Flink Community