By Jiongsi

Within the SREWorks community, numerous staff members are engaged in constructing O&M data warehouses. These individuals consistently face similar challenges and issues.

• Storing a large volume of data in a data warehouse incurs costs, yet much of the stored data remains unused.

• Loading data into the warehouse involves high Extract, Transform, Load (ETL) learning and management costs, along with challenges related to staff coordination and delayed ETL adjustments after upstream structural changes.

• Due to timeliness and accuracy concerns, many scenarios cannot fully rely on data warehouses.

These issues create significant hurdles for data warehouse advocates. Despite their efforts to build a unified data warehouse, various limitations prevent them from fully realizing its value. In this article, we explore an LLM-based data warehouse solution that addresses these three problems at the architectural level.

Let's revisit our initial goal in constructing an O&M data warehouse: to enable querying or measuring all the relevant O&M objects using a single SQL statement. While various design solutions exist, we can summarize them into two abstract models: Push and Pull.

• Push involves actively managing the ETL links for data. For instance, we can utilize big data solutions such as Flink and MaxCompute to process data and load it into data warehouses. When querying data, you can directly perform SELECT operations on the data warehouse to retrieve results. However, this approach has its drawbacks: 1. High ETL management and maintenance costs. 2. Reduced data accuracy compared to the data source.

• Pull refers to not actively fetching all the data but rather retrieving it from various data sources during execution. Presto serves as a typical example. The advantage of this solution lies in avoiding ETL management and achieving better data accuracy since it's pulled in real time. However, it introduces complexity during the query phase, leading to slower query speeds.

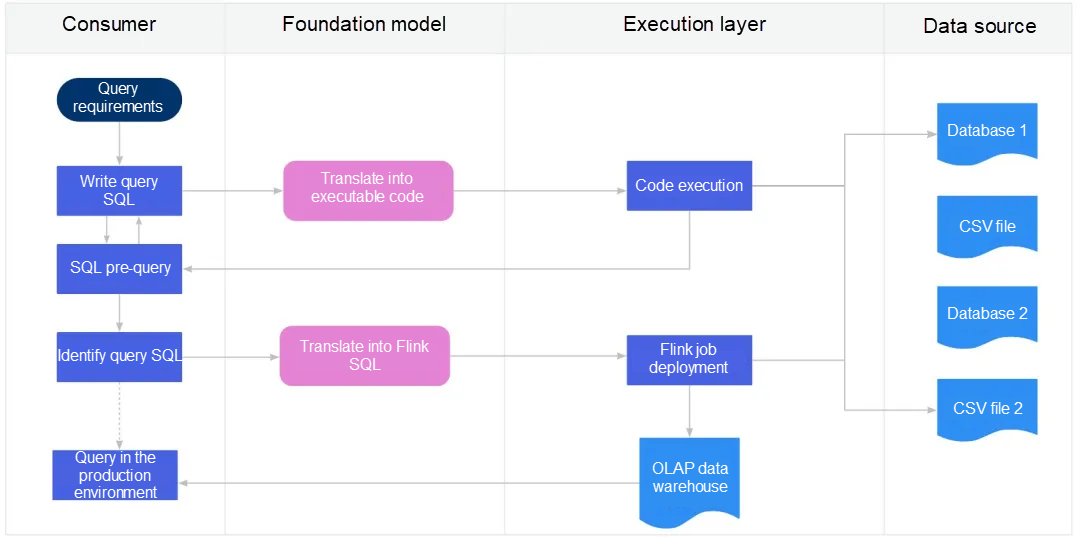

Now, is there a solution that can combine the benefits of both models? After familiarizing myself with the foundation model, I believe such a solution is feasible and design the following flow chart.

Many users find Federated Query engines such as Presto impressive once they start using them. Instead of dealing with multiple for loops, a single SELECT-JOIN statement can often solve complex problems. However, Presto is primarily designed for analytical workloads, making it less suitable for critical links with high-frequency queries.

The gap between Federated Query SQL and for loop code seems thin, akin to a single layer of yarn. But now, with the advent of foundation models, that layer has been directly pierced. Our idea is straightforward: since foundation models can effortlessly convert user requirements into SQL, it should be equally feasible to transform user-written SQL into code.

import os

import sys

from openai import OpenAI

import traceback

from io import StringIO

from contextlib import redirect_stdout, redirect_stderr

client = OpenAI()

def get_script(content):

return content.split("```python")[1].split("```")[0]

def execute_python(code_str: str):

stdout = StringIO()

stderr = StringIO()

return_head = 1000

context = {}

try:

# Redirect stdout and stderr to execute the code.

with redirect_stdout(stdout), redirect_stderr(stderr):

exec(code_str, context)

except Exception:

stderr.write(traceback.format_exc())

# Obtain the stdout, stderr, and context after the execution.

stdout_value = stdout.getvalue()[0:return_head]

stderr_value = stderr.getvalue()[0:return_head]

return {"stdout": stdout_value.strip(), "stderr": stderr_value.strip()}

prompt = """

You are a database expert. I will provide you with a SQL snippet and ask you to convert it into executable Python code.

Currently, we have the connection information of these two databases:

1. Database name: processes, connection string: mysql://root@test-db1.com:3306/processes

The following is the table structure of this database:

CREATE TABLE `process_table` (

`process_name` varchar(100) DEFAULT NULL,

`start_time` datetime DEFAULT NULL,

`end_time` datetime DEFAULT NULL,

`server_name` varchar(100) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci

2. Database name: servers, connection string: mysql://root@test-db2.com:3306/servers

The following is the table structure of this database:

···

CREATE TABLE `server_table` (

`server_name` varchar(100) DEFAULT NULL,

`ip` varchar(100) DEFAULT NULL,

`zone` varchar(100) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci

···

When writing Python code, provide only the necessary database information based on the SQL content.

Only the Python code is returned, and descriptions are included as comments. The output of executing the Python code should be in the JSON format.

User's SQL statement that you need to convert:

"""

query_sql = "select * from processes.process_table a join servers.server_table b on a.server_name = b.server_name where b.zone = 'ZoneA';"

messages = [

{"role": "system", "content": prompt},

{"role": "user", "content": query_sql}

]

res = client.chat.completions.create(messages=messages, model="gpt-4")

print(res.choices[0].message.content)

exec_result = execute_python(get_script(res.choices[0].message.content))

print("result:")

print(exec_result)

if exec_result["stderr"] == "" and exec_result["stdout"] != "":

print(exec_result["stdout"])

sys.exit(0)This example is straightforward, even without using LangChain. We implemented federated query based on OpenAI's interface:

• Prepare the connection string and data structure in advance and input them to the LLM.

• Provide the SQL statement to the LLM. Ensure that the table name in the SQL statement matches the table name in the data structure to avoid confusion.

• Execute the code generated by the LLM to obtain the query result.

Let's examine the results of the execution.

# Import required libraries

import pandas as pd

from sqlalchemy import create_engine

# Create connection engines

engine1 = create_engine('mysql://root@test-db1.com:3306/processes')

engine2 = create_engine('mysql://root@test-db2.com:3306/servers')

# Query data from process_table in processes database

df1 = pd.read_sql('SELECT * FROM `process_table`', engine1)

# Query data from server_table in servers database

df2 = pd.read_sql('SELECT * FROM `server_table` WHERE `zone` = "ZoneA"', engine2)

# Merge both dataframes on server_name

result = pd.merge(df1, df2, how='inner', on='server_name')

# Convert dataframe to JSON

out_json = result.to_json(orient='records')

print(out_json)

About this code:

We began by importing the required database and establishing two database connections using the provided credentials. Subsequently, we retrieved data from the two databases independently, merged the resulting data frames based on the "server_name" field, and finally converted the combined result into the JSON format.

result:

{'stdout': '[{"process_name":"Process1","start_time":1703259365000,"end_time":1703266565000,"server_name":"Server1","zone":"ZoneA"},{"process_name":"Process2","start_time":1703262965000,"end_time":1703270165000,"server_name":"Server2","zone":"ZoneA"}]', 'stderr': ''}

[{"process_name":"Process1","start_time":1703259365000,"end_time":1703266565000,"server_name":"Server1","zone":"ZoneA"},{"process_name":"Process2","start_time":1703262965000,"end_time":1703270165000,"server_name":"Server2","zone":"ZoneA"}]In practical operations, the code provided by LLM appears relatively random. At times, pandas is used for data processing, while other times it's pymysql. Despite this uncertainty, the outcome remains consistent. After multiple attempts, we discovered that the results remained unstable, occasionally due to flawed code resulting in errors. Building upon the chain of thought model established in the previous article, we can incorporate an error-reporting feedback mechanism, allowing the system to autonomously modify problematic code.

for i in range(3):

print("Retry No.:", i +1, "")

messages = [

{"role": "system", "content": prompt + "\n" + query_sql},

]

if exec_result["stderr"] != "":

messages.append({"role": "user", "content": res.choices[0].message.content + "\n\n" + exec_result["stderr"] + "\nAn error is reported. Correct the error and generate code again."})

else:

messages.append({"role": "user", "content": res.choices[0].message.content + "\n\n" + "Nothing is returned. Generate code again."})

res = client.chat.completions.create(messages=messages, model="gpt-4")

print(res.choices[0].message.content)

exec_result = execute_python(get_script(res.choices[0].message.content))

print("result:")

print(exec_result)

if exec_result["stderr"] == "" and exec_result["stdout"] != "":

print(exec_result["stdout"])

sys.exit(0)

print("Query failed.")By incorporating error feedback, we observe that this query becomes highly stable. Although occasional errors occur in the code generated by LLM, the results consistently stabilize through self-modification and optimization based on the feedback report. However, it's worth noting that queries with automatic error modification experience a noticeable delay.

| Total | Correct at the first query | Correct till the second query | Correct till the third query | Failed | |||||

| Queries | 20 | 7 | 35% | 9 | 45% | 0 | 0 | 4 | 20% |

| Average duration | 43.0s | 13.2s | 45.3s | N/A | 91.2s | ||||

Among the 20 tests conducted, the initial query success rate stands at approximately 30%. However, through error-reporting feedback and optimization, this success rate can soar to 80%. Notably, when examining each query statement, we observe significantly higher accuracy in code written using pandas. For future prompt optimization, consider providing guidelines on dependent database usage to further boost success rates. Additionally, it's worth noting that certain code errors, initially set in the wrong direction, remain uncorrectable even with feedback and optimization. A striking example is the 0% success rate for queries labeled correct till the third query. While the current test LLM exhibits slow reasoning speed, deploying it locally could significantly enhance its theoretical reasoning speed.

Currently, LLM-based queries exhibit performance comparable to that of Presto, and in certain aspects, LLM surpasses Presto. Here are the key distinctions:

• Data source extension: While Presto necessitates adapter development to connect to other data sources, the LLM solution simplifies this process. You only need to instruct LLM on querying a specific data source. In fact, due to its extensive programming knowledge, LLM may not even require explicit teaching.

• White-box and complex query optimization: When dealing with accuracy issues in complex scenarios, diagnosing problems within the Presto engine can be challenging. In contrast, the LLM solution relies on human-readable code. You can request LLM to write code in a programming language familiar to you, and it even accommodates comments on each line.

Similar to Presto, the LLM-based query solution is primarily suitable for pre-query deployment. In production environments, relying on LLM to generate query code every time would be prohibitively slow. However, there are ways to accelerate LLM queries. Remember the Push and Pull modes we discussed earlier? Federated queries operate in Pull mode, while streaming ETL relies on the Push mode. What if we directly translate query statements into streaming ETL statements and preprocess the necessary data into a database beforehand? Could this approach eliminate performance bottlenecks?

The data synchronization logic of stream computing is notably simpler compared to analytical queries. In this context, you only need to analyze SQL statements and synchronize data based on the required fields. While I will not provide the complete code here, I will share the relevant prompt.

Currently, we have the connection information of these two databases:

1. Database name: processes, connection string: mysql://root@test-db1.com:3306/processes

The following is the table structure of this database:

CREATE TABLE `process_table` (

`process_name` varchar(100) DEFAULT NULL,

`start_time` datetime DEFAULT NULL,

`end_time` datetime DEFAULT NULL,

`server_name` varchar(100) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci

2. Database name: servers, connection string: mysql://root@test-db2.com:3306/servers

The following is the table structure of this database:

···

CREATE TABLE `server_table` (

`server_name` varchar(100) DEFAULT NULL,

`ip` varchar(100) DEFAULT NULL,

`zone` varchar(100) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci

···

You are a Flink expert. I will provide you with a SQL snippet. My task is to identify the necessary fields and write appropriate Flink SQL for pre-processing in ClickHouse.

The original table names are retained in ClickHouse, maintaining a 1-to-1 mapping.

The goal is to ensure that users can execute the SQL correctly in ClickHouse without altering the original requirement SQL.

In the Flink SQL code, we will focus solely on data synchronization. Do not add additional data processing logic.

Please provide the complete Flink SQL code in the result without any text description. If any description is necessary, mark it with comments in the code.

User's requirement SQL:

select a.process_name,b.server_name from processes.process_table a join servers.server_table b on a.server_name = b.server_name where b.zone = 'ZoneA';Comparing this prompt to the one that previously generated query code, we notice a similarity except that the target has been explicitly modified to instruct the LLM to generate Flink SQL. Below, you'll find the Flink SQL generated by the LLM:

-- Create a Flink SQL connection to the process_table table in the processes database

CREATE TABLE process_table (

process_name STRING,

server_name STRING

) WITH (

'connector' = 'mysql-cdc', -- Use the MySQL CDC connector

'hostname' = 'test-db1.com', -- The hostname of the source database

'port' = '3306', -- The port of the source database

'username' = 'root', -- The username of the source database

'database-name' = 'processes', -- The name of the source database

'table-name' = 'process_table' -- The name of the table in the source database

);

-- Create a Flink SQL connection to the server_table table in the servers database

CREATE TABLE server_table (

server_name STRING,

zone STRING

) WITH (

'connector' = 'mysql-cdc', -- Use the MySQL CDC connector

'hostname' = 'test-db2.com', -- The hostname of the source database

'port' = '3306', -- The port of the source database

'username' = 'root', -- The username of the source database

'database-name' = 'servers', -- The name of the source database

'table-name' = 'server_table' -- The name of the table in the source database

);

-- Create a connection to ClickHouse to maintain the mapping between it and the source database.

CREATE TABLE clickhouse_process_table (

process_name STRING,

server_name STRING

) WITH (

'connector' = 'clickhouse',

'url' = 'clickhouse://localhost:8123',

'table-name' = 'process_table'

);

CREATE TABLE clickhouse_server_table (

server_name STRING,

zone STRING

) WITH (

'connector' = 'clickhouse',

'url' = 'clickhouse://localhost:8123',

'table-name' = 'server_table'

);

-- Synchronize data using Flink SQL

INSERT INTO clickhouse_process_table

SELECT process_name, server_name

FROM process_table;

INSERT INTO clickhouse_server_table

SELECT server_name, zone

FROM server_table;As anticipated, the LLM has generated accurate Flink SQL statements, synchronizing only the fields required by the query requirement SQL. Any unused fields remain unsynchronized (such as the ip field in server_table). In this context, we can enhance the stability of the generated code in a way similar to the error-reporting feedback and self-optimization discussed in Chapter 3, enabling direct deployment and execution in production. However, detailed discussion of this improvement is beyond the scope here.

Using LLM, we generate two snippets from a query requirement SQL: one for Pull (directly querying returned results for pre-query debugging) and the other for Push (constructing a consumption link into a real-time warehouse). Leveraging LLM, we can build ETL production links with several benefits:

• Avoid over-processing in the ETL process: Process only necessary fields, avoiding excessive computation and storage overhead.

• Reduce the consumer's cost of maintaining ETL processing: While Flink SQL boasts good maintainability, some users find the SQL writing approach geared toward the computing process uncomfortable. They may find direct query SQL generation more accessible, lowering the maintenance threshold.

• Unified data link: The query-driven data model encourages users to focus on requirements tied to source tables. ETL-generated real-time data resembles a materialized view, simplifying accuracy verification.

If data warehouse construction remains a challenge to you, consider exploring this LLM-based solution. Welcome to the SREWorks DataOps & AIOps community!

SREWorks open source address: https://github.com/alibaba/sreworks

Exploring DevOps in the Era of AI Foundation Models Part I: ReAct Engineering

Exploring DevOps in the Era of AI Foundation Models Part Ⅲ: Dive Into Agent

44 posts | 1 followers

FollowAlibaba Cloud Data Intelligence - August 14, 2024

Alibaba Cloud Data Intelligence - August 7, 2024

Alibaba Cloud Big Data and AI - January 8, 2026

Alibaba Cloud Community - September 6, 2024

Alibaba Cloud Community - August 9, 2024

Alibaba Cloud Native Community - November 6, 2025

44 posts | 1 followers

Follow AI Acceleration Solution

AI Acceleration Solution

Accelerate AI-driven business and AI model training and inference with Alibaba Cloud GPU technology

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn More Cloud Migration Solution

Cloud Migration Solution

Secure and easy solutions for moving you workloads to the cloud

Learn More Personalized Content Recommendation Solution

Personalized Content Recommendation Solution

Help media companies build a discovery service for their customers to find the most appropriate content.

Learn MoreMore Posts by Alibaba Cloud Data Intelligence

Santhakumar Munuswamy August 12, 2024 at 1:33 am

Thank you for sharing