By Jiongsi

The emergence of artificial intelligence (AI) foundation models has gradually changed our daily lives and the way we work. Now ubiquitous, AI makes our lives more intelligent and convenient, and the efficiency and accuracy of foundation models have greatly improved how quickly we solve problems in our daily work.

We cannot ignore the huge impact and potential of AI foundation models on DevOps. This series of articles explores that potential and tries to answer whether AI foundation models can be integrated into DevOps to bring us greater convenience and value. We hope to find a new way to combine AI foundation models with DevOps and open up a more efficient way of doing DevOps.

The term "tool man" is usually used humorously, with a touch of self-deprecation. We have all faced a pile of trivial, chaotic tasks and, with a reluctant laugh, called ourselves tool men. Why bring the term up here? It gives an intuitive picture of how AI foundation models are used in the programming examples that follow: these models are designed to operate various tools.

As you may have guessed, I will be discussing the most common structure in AI foundation models: Agent + Tool.

With the rise of frameworks such as LangChain, AI has evolved from a mere tool into a true tool man: the operator that uses tools rather than a tool itself. AI can not only follow our instructions but also adeptly use various tools to solve problems and create value.

The word agent has a long history in computer science. In the early days, it was used to describe an entity or medium that acted as a bridge between the cyber world and the physical world. Take the browser's User Agent as an example. It serves as a way for the browser to identify itself when interacting with a network server. In this process, the browser acts as an agent between the user and the web content.

With the rapid development of artificial intelligence technology, the meaning of the word agent has gradually become more enriched and profound. In AI, the word agent often refers to models or systems that can autonomously make decisions, respond to environmental changes, and execute various instructions.

Now that you understand the background of Agent + Tool, let's dive into how Agents and Tools work in LangChain.

Here is a simple example:

```python
import os

from langchain.llms import OpenAI
from langchain.tools import StructuredTool
from langchain.agents import initialize_agent, AgentType


def ssh(command: str, host: str, username: str = "root") -> str:
    """A tool that can connect to a remote server and execute commands to retrieve returned content."""
    # Run the command on the remote host over SSH and return its stdout.
    return os.popen(f"ssh {host} -l{username} '{command}'").read()


agent = initialize_agent(
    [StructuredTool.from_function(ssh)],
    OpenAI(temperature=0),
    agent=AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
)

agent.run("Show me how long the machine at IP address 8.130.35.2 has been running for")
```

LangChain's StructuredTool.from_function converts an ordinary function into a tool, and the tool is handed to the agent when the agent is initialized. Essentially, the agent takes up the tool and becomes a tool man.
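The tool reaches the model only as text. The minimal sketch below shows how a function's name, signature, docstring, and argument schema can be rendered into a single description line like the one in the prompts captured later in this article. describe_tool is a hypothetical helper built on Python's inspect module; it is not LangChain's implementation, and the Python-to-JSON type mapping is an assumption.

```python
import inspect
from typing import Any, Callable, Dict

# Assumed mapping from Python annotations to JSON-schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func: Callable[..., Any]) -> str:
    """Render a function as a one-line tool description (hypothetical helper)."""
    sig = inspect.signature(func)
    args: Dict[str, Dict[str, Any]] = {}
    for name, param in sig.parameters.items():
        schema: Dict[str, Any] = {"title": name.replace("_", " ").title()}
        if param.default is not inspect.Parameter.empty:
            schema["default"] = param.default
        # Unannotated or unknown types fall back to "string".
        schema["type"] = _JSON_TYPES.get(param.annotation, "string")
        args[name] = schema
    doc = inspect.getdoc(func) or ""
    return f"{func.__name__}: {func.__name__}{sig} - {doc}, args: {args}"


def ssh(command: str, host: str, username: str = "root") -> str:
    """A tool that can connect to a remote server and execute commands to retrieve returned content."""
    return ""  # body omitted: only the signature and docstring matter here


print(describe_tool(ssh))
```

Because the model only ever sees this text, a precise docstring and meaningful parameter names matter as much as the function body.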

Next, let's explore how the tool man thinks and utilizes the tool.

```text
> Entering new AgentExecutor chain...
Action:
{
  "action": "ssh",
  "action_input": {
    "command": "uptime",
    "host": "8.130.35.2"
  }
}
Observation: 15:48:44 up 25 days, 41 min, 0 users, load average: 1.04, 1.48, 2.20
Thought: I have the answer
Action:
{
  "action": "Final Answer",
  "action_input": "This machine has been running for 25 days and 41 minutes."
}
> Finished chain.
```

After reading this example, many might wonder: aren't large language models only good for conversation, like armchair strategists? How can they suddenly do so much? And if the model can run uptime over SSH, could it just as easily run rm -rf /?

Good questions. Let's keep them in mind and explore how to give a large language model "limbs".

In the above example, the large language model interface is called twice. Here is the prompt we captured for each call, together with what the model returned.

Prompt at the First Call

```text
System: Respond to the human as helpfully and accurately as possible. You have access to the following tools:

ssh: ssh(command: str, host: str, username: str = 'root') -> str - A tool that can connect to a remote server and execute commands to retrieve returned content., args: {{'command': {{'title': 'Command', 'type': 'string'}}, 'host': {{'title': 'Host', 'type': 'string'}}, 'username': {{'title': 'Username', 'default': 'root', 'type': 'string'}}}}

Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input).

Valid "action" values: "Final Answer" or ssh

Provide only ONE action per $JSON_BLOB, as shown:

{
  "action": $TOOL_NAME,
  "action_input": $INPUT
}

Follow this format:

Question: input question to answer
Thought: consider previous and subsequent steps
Action:
$JSON_BLOB
Observation: action result
... (repeat Thought/Action/Observation N times)
Thought: I know what to respond
Action:
{
  "action": "Final Answer",
  "action_input": "Final response to human"
}

Begin! Reminder to ALWAYS respond with a valid json blob of a single action. Use tools if necessary. Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation:.
Thought:
Human: Show me how long the machine at IP address 8.130.35.2 has been running for
```

Return at the First Call

```text
Action:
{
  "action": "ssh",
  "action_input": {
    "command": "uptime",
    "host": "8.130.35.2"
  }
}
```

Prompt at the Second Call

```text
System: Respond to the human as helpfully and accurately as possible. You have access to the following tools:

ssh: ssh(command: str, host: str, username: str = 'root') -> str - A tool that can connect to a remote server and execute commands to retrieve returned content., args: {{'command': {{'title': 'Command', 'type': 'string'}}, 'host': {{'title': 'Host', 'type': 'string'}}, 'username': {{'title': 'Username', 'default': 'root', 'type': 'string'}}}}

Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input).

Valid "action" values: "Final Answer" or ssh

Provide only ONE action per $JSON_BLOB, as shown:

{
  "action": $TOOL_NAME,
  "action_input": $INPUT
}

Follow this format:

Question: input question to answer
Thought: consider previous and subsequent steps
Action:
$JSON_BLOB
Observation: action result
... (repeat Thought/Action/Observation N times)
Thought: I know what to respond
Action:
{
  "action": "Final Answer",
  "action_input": "Final response to human"
}

Begin! Reminder to ALWAYS respond with a valid json blob of a single action. Use tools if necessary. Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation:.
Thought:
Human: Show me how long the machine at IP address 8.130.35.2 has been running for

This was your previous work (but I haven't seen any of it! I only see what you return as final answer):
Action:
{
  "action": "ssh",
  "action_input": {
    "command": "uptime",
    "host": "8.130.35.2"
  }
}
Observation: 16:38:18 up 25 days, 1:30, 0 users, load average: 0.81, 0.79, 1.06
Thought:
```

Return at the Second Call

```text
I can provide the human with the uptime of the machine
Action:
{
  "action": "Final Answer",
  "action_input": "The machine has been running for 25 days, 1 hour and 30 minutes."
}
```

Comparing the two calls:

1. Valid "action" values: "Final Answer" or ssh. This means the model must either provide the answer directly or use the tool for assistance.
2. In the first return, "action": "ssh" tells the framework layer which tool to call. The framework passes the parameters to that tool and appends the tool's output, as an Observation, to the end of the prompt for the second call.
3. In the second return, the action is "Final Answer", reporting that the machine has been running for over 25 days.

At this point, you might wonder: why do we resend all the data on every call? Don't foundation models usually keep context during a conversation? This is actually an engineering problem:

A large language model produces only a single text prediction per request. So how do we achieve human-like conversation? Before each turn, the application prepends the earlier rounds of the conversation to the input, creating the illusion that the model remembers past content. But as the conversation grows and every round is included, the input may eventually exceed what the model can handle (its context window). One solution is to drop the oldest data. Is this akin to human forgetfulness? By the time we reach a third topic, we may have forgotten what the first one was about.

Every call to the LLM interface produces a fresh text prediction with no memory of previous calls. Consequently, we must provide all relevant data with every request.

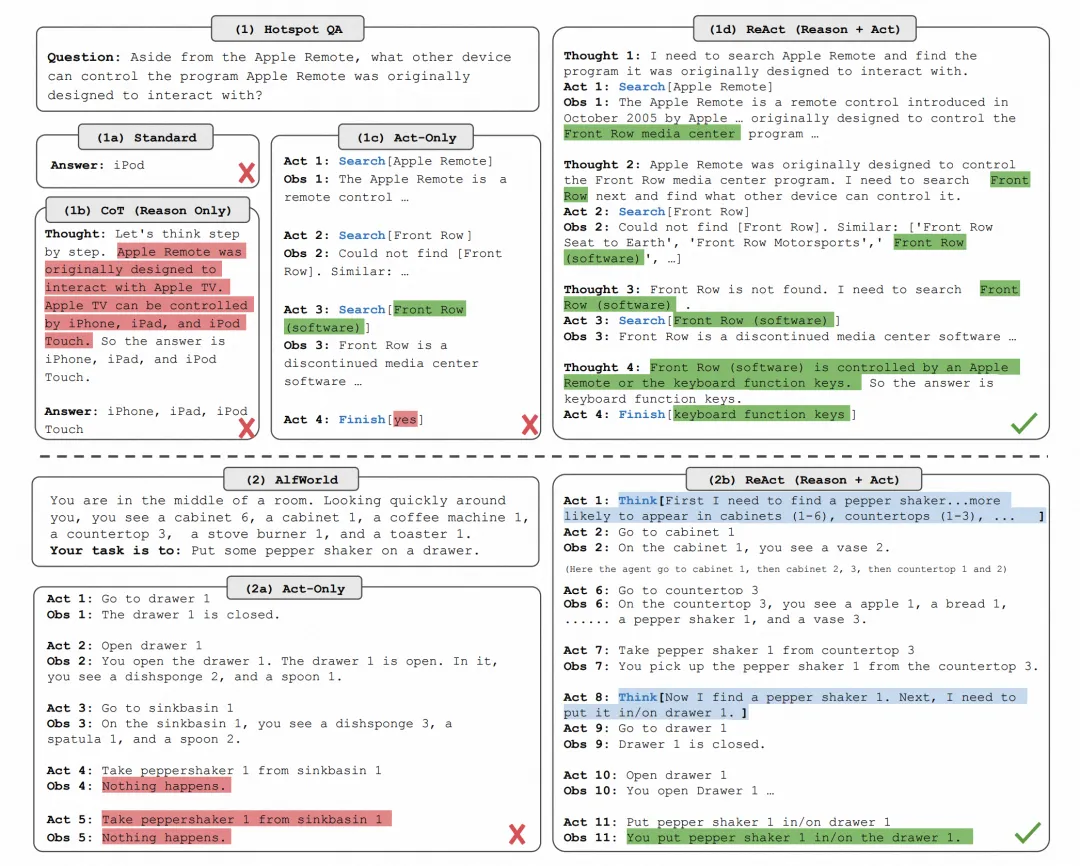
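The stateless pattern described above fits in a few lines. This is a minimal illustration, assuming a stateless model API and using a character budget as a crude stand-in for a token-based context window; build_prompt and render are hypothetical helpers, not part of any framework. The oldest turns are dropped first, which is the "forgetting" described above.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (role, text)


def render(turns: List[Turn]) -> str:
    """Flatten the conversation into one prompt string."""
    return "\n".join(f"{role}: {text}" for role, text in turns)


def build_prompt(history: List[Turn], new_message: str, max_chars: int) -> str:
    """Resend the whole history plus the new message, dropping the oldest turns to fit."""
    turns = history + [("Human", new_message)]  # copy; the caller's history is untouched
    # Always keep at least the newest message, even if it alone exceeds the budget.
    while len(turns) > 1 and len(render(turns)) > max_chars:
        turns.pop(0)  # "forget" the oldest turn first
    return render(turns)


history = [
    ("Human", "Topic one: what is DevOps?"),
    ("AI", "DevOps combines development and operations."),
    ("Human", "Topic two: what is ReAct?"),
    ("AI", "ReAct interleaves reasoning and acting."),
]
print(build_prompt(history, "Topic three: what did we discuss first?", max_chars=120))
```

With a 120-character budget, the first topic no longer fits, so the model would receive no trace of it on this call.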

Now, you might wonder why a prompt alone cannot call a tool, while a framework can. This is what ReAct (short for Reasoning and Acting) provides: the foundation model constructs a sequence of actions (Act) based on logical reasoning (Reason) to achieve a desired goal. The key is coordinating the large language model so that it can access external information and interact with external tools. Think of the large model as the brain, and the ReAct framework as the means by which the brain controls the hands and feet.
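To make the idea concrete, here is a minimal sketch of the control loop such a framework runs, following the Thought/Action/Observation format of the prompts captured above. It is not LangChain's implementation: parse_action, react_loop, scripted_llm, and fake_ssh are hypothetical names, and a scripted stand-in replaces the real model so the loop can run offline.

```python
import json
import re
from typing import Callable, Dict, List


def parse_action(completion: str) -> Dict:
    """Extract the JSON action blob ($JSON_BLOB) from a model completion."""
    match = re.search(r"\{.*\}", completion, re.DOTALL)
    if match is None:
        raise ValueError(f"no JSON action found in: {completion!r}")
    return json.loads(match.group(0))


def react_loop(question: str, llm: Callable[[str], str],
               tools: Dict[str, Callable[..., str]], max_steps: int = 5) -> str:
    """Alternate Thought/Action (from the model) and Observation (from a tool)."""
    prompt = f"Question: {question}\nThought:"
    for _ in range(max_steps):
        completion = llm(prompt)  # Thought + Action from the model
        action = parse_action(completion)
        if action["action"] == "Final Answer":
            return action["action_input"]
        # Act: call the named tool with the model-supplied arguments.
        observation = tools[action["action"]](**action["action_input"])
        # The model is stateless, so the whole history is resent next time.
        prompt += f"{completion}\nObservation: {observation}\nThought:"
    raise RuntimeError("no Final Answer within max_steps")


def scripted_llm(replies: List[str]) -> Callable[[str], str]:
    """Fake LLM that replays canned completions, standing in for a real model."""
    queue = list(replies)
    return lambda prompt: queue.pop(0)


def fake_ssh(command: str, host: str) -> str:
    """Stand-in for the ssh tool; returns a fixed uptime line."""
    return "15:48:44 up 25 days, 41 min, 0 users"


replies = [
    'Action:\n{"action": "ssh", "action_input": {"command": "uptime", "host": "8.130.35.2"}}',
    'Thought: I have the answer\nAction:\n{"action": "Final Answer", '
    '"action_input": "Up for 25 days and 41 minutes."}',
]
print(react_loop("How long has 8.130.35.2 been up?", scripted_llm(replies), {"ssh": fake_ssh}))
```

The key design point is the last line of the loop: because every model call is stateless, the framework appends the completion and the Observation to the prompt and resends everything, just as in the second captured prompt.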

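In the ReAct process, we can focus on three key elements: Thought (the model's reasoning about what to do next), Action (a JSON blob naming a tool and its input, or the final answer), and Observation (the tool's result, fed back into the next prompt).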

Given how powerful the ReAct process is, can it handle more intricate chains of thought? The following example fetches a blog's RSS feed and then analyzes it:

Example of grabbing and analyzing blog RSS subscription

```python
import traceback
from contextlib import redirect_stdout, redirect_stderr
from io import StringIO
from typing import Dict

from langchain.llms import OpenAI
from langchain.tools import StructuredTool
from langchain.agents import initialize_agent, AgentType


def python(code_str: str, return_context: bool = False) -> Dict:
    """This is a Python execution tool. You can input a piece of Python code, and it will return the corresponding execution results. By default, it returns the first 1000 characters of both stdout and stderr. It's recommended to use the print() function to directly display the results."""
    # Create StringIO objects for capturing output and errors.
    stdout = StringIO()
    stderr = StringIO()
    return_head = 1000
    context = {}
    try:
        # Redirect stdout and stderr to execute the code.
        with redirect_stdout(stdout), redirect_stderr(stderr):
            exec(code_str, context)
    except Exception:
        stderr.write(traceback.format_exc())
    # Obtain the stdout, stderr, and context after the execution.
    stdout_value = stdout.getvalue()[0:return_head]
    stderr_value = stderr.getvalue()[0:return_head]
    if return_context:
        return {"stdout": stdout_value, "stderr": stderr_value, "context": context}
    return {"stdout": stdout_value, "stderr": stderr_value, "context": {}}


agent = initialize_agent(
    [StructuredTool.from_function(python)],
    OpenAI(temperature=0.3),
    agent=AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
)

resp = agent.run("Retrieve updates from this XML blog subscription: https://elastic.aiops.work/blog/feed")
print("=== resp ===")
print(resp)
```

We do not give the foundation model a dedicated scraping tool. Instead, we provide only a Python executor and see whether it can retrieve the desired information on its own. Now, let's examine the execution process:

Execution process of grabbing and analyzing blog RSS subscription

```text
> Entering new AgentExecutor chain...
Thought: The user is asking for a summary of updates from an XML blog feed. I will need to fetch the XML from the provided URL and parse it to extract the relevant information. Python has several libraries that can help with this, such as 'requests' for fetching the data and 'xml.etree.ElementTree' for parsing the XML. I'll write a Python script to do this and use the execute_python tool to run it.
Action:
{
  "action": "python",
  "action_input": {
    "code_str": "import requests\nfrom xml.etree import ElementTree as ET\n\n# Fetch the XML data\nresponse = requests.get('https://elastic.aiops.work/blog/feed')\n\n# Parse the XML\nroot = ET.fromstring(response.content)\n\n# Extract the relevant information\nupdates = [{'title': item.find('title').text, 'link': item.find('link').text, 'pubDate': item.find('pubDate').text} for item in root.findall('.//item')]\n\n# Print the updates\nfor update in updates:\n print(f\"Title: {update['title']}\nLink: {update['link']}\nPublished Date: {update['pubDate']}\n\")",
    "return_context": false
  }
}
Observation: {'stdout': '', 'stderr': 'Traceback (most recent call last):\n File "/Users/****/test.py", line 22, in python\n exec(code_str, context)\n File "<string>", line 15\n print(f"Title: {update[\'title\']}\n^\nSyntaxError: EOL while scanning string literal\n', 'context': {}}
Thought:The Python code failed to execute due to a SyntaxError. It seems like the print statement in the code is not properly formatted. I need to correct the print statement and try again.
Action:
{
  "action": "python",
  "action_input": {
    "code_str": "import requests\nfrom xml.etree import ElementTree as ET\n\n# Fetch the XML data\nresponse = requests.get('https://elastic.aiops.work/blog/feed')\n\n# Parse the XML\nroot = ET.fromstring(response.content)\n\n# Extract the relevant information\nupdates = [{'title': item.find('title').text, 'link': item.find('link').text, 'pubDate': item.find('pubDate').text} for item in root.findall('.//item')]\n\n# Print the updates\nfor update in updates:\n print(f\"Title: {update['title']}\\nLink: {update['link']}\\nPublished Date: {update['pubDate']}\\n\")",
    "return_context": false
  }
}
Observation: {'stdout': 'Title: Being one of the only woman in her computer science program didn’t stop Hannah Mudge from pursuing her dream of becoming a software engineer\nLink: https://www.elastic.co/blog/culture-hannah-mudge-dream-of-becoming-software-engineer\nPublished Date: Fri, 07 Jul 2023 00:00:00 GMT\n\nTitle: Accelerating R&D in pharma with Elasticsearch, ESRE, LLMs, and LangChain — Part 1\nLink: https://www.elastic.co/blog/research-development-pharma-elasticsearch-esre-llms-langchain-1\nPublished Date: Fri, 07 Jul 2023 00:00:00 GMT\n\nTitle: Being one of the only woman in her computer science program didn’t stop Hannah Mudge from pursuing her dream of becoming a software engineer\nLink: https://www.elastic.co/blog/culture-hannah-mudge-dream-of-becoming-software-engineer\nPublished Date: Fri, 07 Jul 2023 00:00:00 GMT\n\nTitle: Accelerating R&D in pharma with Elasticsearch, ESRE, LLMs, and LangChain — Part 1\nLink: https://www.elastic.co/blog/research-development-pharma-elasticsearch-esre-llms-langchain-1\nPublish', 'stderr': '', 'context': {}}
Thought:The Python code executed successfully and returned the recent updates from the XML blog feed. The updates include the title, link, and published date of the blog posts. I will now format this information into a readable format for the user.
Action:
{
  "action": "Final Answer",
  "action_input": "Here are the recent updates from the blog:\n\n1. Title: Being one of the only woman in her computer science program didn’t stop Hannah Mudge from pursuing her dream of becoming a software engineer\nLink: https://www.elastic.co/blog/culture-hannah-mudge-dream-of-becoming-software-engineer\nPublished Date: Fri, 07 Jul 2023 00:00:00 GMT\n\n2. Title: Accelerating R&D in pharma with Elasticsearch, ESRE, LLMs, and LangChain — Part 1\nLink: https://www.elastic.co/blog/research-development-pharma-elasticsearch-esre-llms-langchain-1\nPublished Date: Fri, 07 Jul 2023 00:00:00 GMT"
}
> Finished chain.

=== resp ===
Here are the recent updates from the blog:

1. Title: Being one of the only woman in her computer science program didn’t stop Hannah Mudge from pursuing her dream of becoming a software engineer
Link: https://www.elastic.co/blog/culture-hannah-mudge-dream-of-becoming-software-engineer
Published Date: Fri, 07 Jul 2023 00:00:00 GMT

2. Title: Accelerating R&D in pharma with Elasticsearch, ESRE, LLMs, and LangChain — Part 1
Link: https://www.elastic.co/blog/research-development-pharma-elasticsearch-esre-llms-langchain-1
Published Date: Fri, 07 Jul 2023 00:00:00 GMT
```

1. The foundation model wrote a Python snippet that fetches the feed with requests and parses it as XML. Curiously, it picked the right fields (title, link, pubDate) without first reading the content. If you have access to a foundation model, try asking whether it is familiar with the RSS XML format. In a case like this, a human would usually have to inspect the content first to decide which fields to extract.

2. Unfortunately, the first attempt could have succeeded, but the model overlooked that a Python string enclosed in ordinary quotation marks cannot span multiple lines: the \n sequences in its JSON output were decoded into real line breaks inside the f-string, so a SyntaxError occurred. Interestingly, a chain of thought then emerged: from the returned error, it deduced that the problem lay in the print statement and adjusted the code there. This is quite remarkable. Had I hit this error after writing the first snippet myself, I might not have spotted the cause immediately and could have mistaken it for a field-related error or something similar.

> The Python code failed to execute due to a SyntaxError. It seems like the print statement in the code is not properly formatted. I need to correct the print statement and try again.

3. On the second attempt, it promptly corrected itself, keeping the print statement on a single line with \n escape sequences instead of literal line breaks. Both code versions generated by the foundation model are shown below for comparison:

First code version

```python
# First code version
import requests
from xml.etree import ElementTree as ET

# Fetch the XML data
response = requests.get('https://elastic.aiops.work/blog/feed')

# Parse the XML
root = ET.fromstring(response.content)

# Extract the relevant information
updates = [{'title': item.find('title').text, 'link': item.find('link').text, 'pubDate': item.find('pubDate').text} for item in root.findall('.//item')]

# Print the updates
# (This version fails: the f-string spans several lines, so Python raises SyntaxError.)
for update in updates:
    print(f"Title: {update['title']}
Link: {update['link']}
Published Date: {update['pubDate']}
")
```

Second code version

```python
# Second code version
import requests
from xml.etree import ElementTree as ET

# Fetch the XML data
response = requests.get('https://elastic.aiops.work/blog/feed')

# Parse the XML
root = ET.fromstring(response.content)

# Extract the relevant information
updates = [{'title': item.find('title').text, 'link': item.find('link').text, 'pubDate': item.find('pubDate').text} for item in root.findall('.//item')]

# Print the updates
for update in updates:
    print(f"Title: {update['title']}\nLink: {update['link']}\nPublished Date: {update['pubDate']}\n")
```

In this second example, we see that when the foundation model hits an error while invoking a tool, it draws on its existing knowledge to analyze the error message, adjusts the code, and retries until execution succeeds. At this point, the tool man not only has tools but also demonstrates human-like reasoning and problem solving across multiple attempts.
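The difference between the two versions comes down to JSON escaping in the action blob. The sketch below decodes shortened copies of the two code_str values from the log with Python's json module, the same decoding a framework performs before calling exec(), and checks whether each result compiles. compiles is a hypothetical helper written for this illustration.

```python
import json

# Shortened copies of the two "code_str" JSON strings from the agent log.
first_json = r'''"for update in updates:\n print(f\"Title: {update['title']}\nLink: {update['link']}\")"'''
second_json = r'''"for update in updates:\n print(f\"Title: {update['title']}\\nLink: {update['link']}\")"'''


def compiles(json_text: str) -> bool:
    """Decode a JSON string literal and report whether it is valid Python."""
    code = json.loads(json_text)
    try:
        compile(code, "<agent>", "exec")
        return True
    except SyntaxError:
        return False


# First version: JSON "\n" decodes to a real line break inside the f-string,
# so the string literal is never closed on its own line.
print("first version compiles:", compiles(first_json))    # False
# Second version: JSON "\\n" decodes to the two characters "\n",
# which Python then reads as an escape sequence inside the string.
print("second version compiles:", compiles(second_json))  # True
```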

Are some of you already eager to try this? Let's discuss how to integrate Agent and Tool into ordinary programming.

In distributed computing, programming paradigms such as MapReduce simplify the work. Once you write a mapper and a reducer, your program can run on clusters of thousands of servers without you having to understand the intricacies of distributed communication and synchronization or worry about machine failures. Today, you often don't even write the mapper and reducer yourself: a SQL engine can automatically split a SELECT query into several mappers and reducers for execution.
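For readers who have not written one, here is a minimal single-process sketch of the mapper/shuffle/reducer structure, using the classic word-count example. It only illustrates the programming model; a real framework distributes these steps across many machines and handles communication and failures.

```python
from collections import defaultdict
from itertools import chain
from typing import Dict, Iterable, List, Tuple


def mapper(line: str) -> List[Tuple[str, int]]:
    """Emit (word, 1) for every word in one input record."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group intermediate values by key, as the framework does between phases."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reducer(key: str, values: List[int]) -> Tuple[str, int]:
    """Aggregate all values for one key."""
    return key, sum(values)


def map_reduce(lines: Iterable[str]) -> Dict[str, int]:
    intermediate = chain.from_iterable(mapper(line) for line in lines)
    return dict(reducer(k, v) for k, v in shuffle(intermediate).items())


print(map_reduce(["the agent uses the tool", "The tool man"]))
```

Each mapper sees only its own record and each reducer sees only one key, which is exactly the "focus on your own work" property the analogy below describes.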

In practice, the MapReduce programming paradigm closely resembles the organizational structure of a large company. Here's how it works: The overall task is initially divided into smaller components, akin to how a company assigns work to various teams (referred to as "Mappers"). These Mappers, analogous to front-line employees, handle specific tasks within their designated areas. Each group concentrates on its assigned work, processing data or tasks.

Individuals within an organization do not need to concern themselves with other departments or production links. Their primary focus is completing their assigned work. Once they finish their tasks, these teams or employees submit their deliverables to their managers (known as "Reducers"). These "managers" then consolidate and aggregate all the deliverables to create a higher-level report or decision. Ultimately, senior management (also referred to as "Reducers") makes final decisions or generates reports based on the aggregated data. This process mirrors how a company's CEO or board of directors makes company-wide decisions, drawing insights from information gathered across various departments.

Let's consider the tool man we previously created. Can we directly fit this tool man into the MapReduce model? Would there be significant issues if we placed this tool man in a senior management role for decision-making? After all, the expertise and knowledge of this tool man might surpass that of some professional managers.

However, we must also recognize that MapReduce is not necessarily the optimal structure for complex engineering scenarios; its design was shaped by the limited intelligence of schedulers. Looking ahead, if the scheduler evolves into a foundation model, could it devise a structure better suited than MapReduce to a specific business context? Perhaps we need not adhere strictly to MapReduce at all. Could we blend in sociological elements and call it a sociological computing architecture for AI foundation models? Furthermore, we need to explore communication mechanisms that let multiple AI foundation models collaborate on complex problems.

Let's revisit our current programming model and explore how to implement this engineering structure.

In programming, decorators are a frequently used design pattern that enhances code readability, maintainability, and separation of concerns. For instance, in the Flask framework for Python, a basic route might appear as follows:

```python
from flask import Flask

app = Flask(__name__)


@app.route('/hello')
def hello_world():
    return 'Hello, World!'
```

Can Agent and Tool also use decorators in our programming? Absolutely! LangChain provides decorators, including @tool. However, these alone do not readily give us the hierarchical engineering structure we envisioned. A simple decorator is not complicated, so we can write a straightforward implementation of our own:

• @tool() behaves like LangChain's @tool: the decorated function becomes a tool.

• With @agent(tools=[...], llm=..., ...), the decorated function becomes an agent, and its return value is used as the prompt passed to agent.run(...). Furthermore, an agent can itself serve as a tool for other agents.
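To make the two bullets concrete, here is a toy, self-contained sketch of how such decorators can be wired up with a simple registry. MiniAI, fake_llm, echo, and shouter are hypothetical names, not aibond's API, and the LLM is replaced by a plain callable so the wiring can run without a model.

```python
import functools
from typing import Callable, Dict, List

ToolFn = Callable[..., str]
LLM = Callable[[str, Dict[str, ToolFn]], str]  # (prompt, allowed tools) -> answer


class MiniAI:
    """Registry behind hypothetical @tool() and @agent(...) decorators."""

    def __init__(self) -> None:
        self.tools: Dict[str, ToolFn] = {}

    def tool(self) -> Callable[[ToolFn], ToolFn]:
        def register(func: ToolFn) -> ToolFn:
            self.tools[func.__name__] = func  # the function itself is the tool
            return func
        return register

    def agent(self, tools: List[str], llm: LLM) -> Callable[[ToolFn], ToolFn]:
        def register(build_prompt: ToolFn) -> ToolFn:
            @functools.wraps(build_prompt)
            def run_agent(**kwargs: str) -> str:
                prompt = build_prompt(**kwargs)  # the function's output is the prompt
                allowed = {name: self.tools[name] for name in tools}
                return llm(prompt, allowed)  # stand-in for agent.run(prompt)
            self.tools[build_prompt.__name__] = run_agent  # agents double as tools
            return run_agent
        return register


mini_ai = MiniAI()


@mini_ai.tool()
def echo(text: str) -> str:
    """Return the text unchanged."""
    return text


def fake_llm(prompt: str, tools: Dict[str, ToolFn]) -> str:
    # A real model would pick a tool via ReAct; this stand-in always uses echo.
    return tools["echo"](text=prompt.upper())


@mini_ai.agent(tools=["echo"], llm=fake_llm)
def shouter(name: str) -> str:
    """Greets someone loudly."""
    return f"hello {name}"


print(shouter(name="tool man"))  # HELLO TOOL MAN
```

Registering the agent in the same dictionary as the tools is what lets one agent appear in another agent's tools list.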

This is a bit abstract. Let's look at an example.

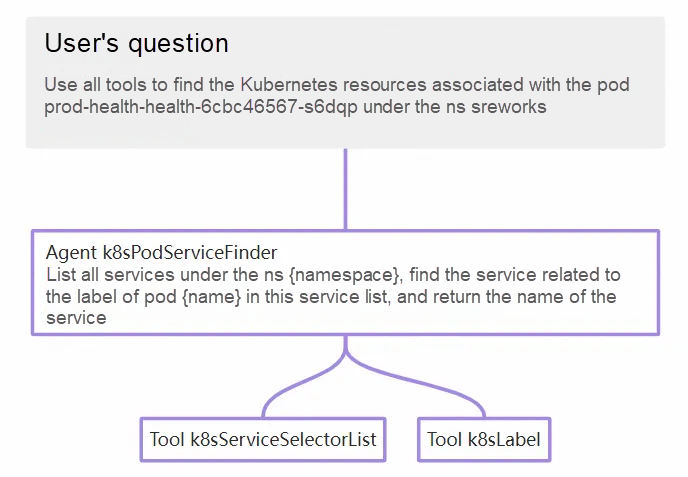

Unlike the previous straightforward cases, this one needs some background. In cloud-native scenarios, workloads are often exposed through Services, and when troubleshooting we frequently need to find the Service associated with a given pod. The purpose of this example is to have the foundation model help us locate that Service.
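For reference, the matching the agent is expected to perform is simple to state: in Kubernetes, a Service selects a pod when every key/value pair in the Service's selector also appears in the pod's labels. The sketch below expresses that rule directly. parse_selectors and services_for_pod are hypothetical helpers; the input format assumes the name:selector lines produced by the k8sServiceSelectorList tool in the code that follows, with kubectl printing maps as JSON (as recent kubectl versions do), and the sample data is made up.

```python
import json
from typing import Dict, List


def parse_selectors(svc_output: str) -> Dict[str, Dict[str, str]]:
    """Parse 'service-name:{json selector}' lines, one Service per line."""
    selectors: Dict[str, Dict[str, str]] = {}
    for line in svc_output.splitlines():
        if not line.strip():
            continue
        name, _, selector = line.partition(":")
        # A Service without a selector prints nothing after the colon.
        selectors[name] = json.loads(selector) if selector.strip() else {}
    return selectors


def services_for_pod(pod_labels: Dict[str, str],
                     selectors: Dict[str, Dict[str, str]]) -> List[str]:
    """A Service selects a pod if every selector pair appears in the pod's labels."""
    return [
        name for name, selector in selectors.items()
        if selector and all(pod_labels.get(k) == v for k, v in selector.items())
    ]


# Made-up sample data, shaped like the two tools' kubectl jsonpath output.
pod_labels = json.loads('{"app":"health","pod-template-hash":"6cbc46567"}')
svc_output = 'prod-health-health:{"app":"health"}\nprod-metrics:{"app":"metrics"}\nexternal-db:'
print(services_for_pod(pod_labels, parse_selectors(svc_output)))  # ['prod-health-health']
```

The agent version replaces this hard-coded logic with a prompt, trading determinism for flexibility when the data is messier than the tools' output suggests.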

```python
import os
import sys
from subprocess import Popen, PIPE

# Make the aibond framework importable from the repository root.
sys.path.insert(0, os.path.split(os.path.realpath(__file__))[0] + "/../../")

from aibond import AI
from langchain import OpenAI

ai = AI()


def popen(command):
    """Run a shell command and return (exit code, stdout, stderr) as text."""
    child = Popen(command, stdin=PIPE, stdout=PIPE, stderr=PIPE, shell=True, text=True)
    out, err = child.communicate()
    ret = child.wait()
    return (ret, out.strip(), err.strip())


@ai.tool()
def k8sLabel(name: str, kind: str, namespace: str) -> str:
    """This tool can fetch the labels of Kubernetes objects."""
    cmd = "kubectl get " + kind + " " + name + " -n " + namespace + " -o jsonpath='{.metadata.labels}'"
    (ret, out, err) = popen(cmd)
    return out


@ai.tool()
def k8sServiceSelectorList(namespace: str) -> str:
    """This tool can find all services within a namespace in Kubernetes and retrieve the label selectors for each service."""
    cmd = "kubectl get svc -n " + namespace + " -o jsonpath=\"{range .items[*]}{@.metadata.name}:{@.spec.selector}{'\\n'}{end}\""
    (ret, out, err) = popen(cmd)
    return out


@ai.agent(tools=["k8sLabel", "k8sServiceSelectorList"], llm=OpenAI(temperature=0.2), verbose=True)
def k8sPodServiceFinder(name: str, namespace: str) -> str:
    """This tool can find the services associated with a Kubernetes pod resource."""
    return f"List all services under the ns {namespace}, find the service related to the label of pod {name} in this service list, and return the name of the service"


a = ai.run("Use all tools to find the Kubernetes resources associated with the pod prod-health-health-6cbc46567-s6dqp under the ns sreworks", llm=OpenAI(temperature=0.2), agents=["k8sPodServiceFinder"], verbose=True)
print(a)
```

• At the top level, ai.run(...) solves the problem by relying on the agent k8sPodServiceFinder.

• k8sPodServiceFinder is itself an agent. It converts the parameters name and namespace into a prompt and relies on the tools k8sLabel and k8sServiceSelectorList to solve the problem; the prompt teaches the foundation model how to use these two tools.

• The following figure shows the final hierarchy of the entire execution.

Because we use decorators, this hierarchy stays easy to manage even though it is somewhat more complex. Overall, we must take into account the following points:

The decorators above belong to a small framework that adds some syntactic sugar on top of LangChain, which remains the central component. If you want hands-on practice, refer to the framework code:

https://github.com/alibaba/sreworks-ext/tree/master/aibond

With the framework mentioned earlier, we can explore various AI-related programming approaches. However, we find ourselves restricted to writing plain functions. Why? Because only functions can be directly converted into tools. Most of our programming relies on object-oriented programming (OOP), so suddenly having to use plain functions everywhere feels a bit uncomfortable. So consider this question: why can't a class object be converted into a tool? Functions are stateless, while class objects are instantiated and maintain state. However, as we saw in the prompt analysis, we resend all relevant information on every call, so carrying class instance data along should not pose a problem.

So we can write a function that acts as a stateful proxy and wraps a class object:

```python
from typing import Dict, Optional


def demo_class_tool(func: str, args: Dict, instance_id: Optional[str] = None) -> Dict:
    """
    This is a tool that requires instantiation. You can first call the '__init__' function to instantiate, this call will return an 'instance_id'. Subsequently, you can use this 'instance_id' to continue operating on this instance.

    Below are the available funcs for this tool:
    - func: __init__ args: {{'url': {{'title': 'Url', 'type': 'string'}} }}
    - func: read args: {{'limit': {{'title': 'Limit', 'type': 'integer', 'default': '1000'}} }}
    """
    ...
```

• The docstring explains how to use the tool and lists the functions it exposes (that is, which methods of the class object are available).

• It guides the foundation model to call __init__ first to obtain the instance_id.

• It then instructs the foundation model to pass this instance_id, along with the parameters described in the docstring, to invoke a function. This is how a call on a class instance is implemented.
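Here is a minimal, runnable sketch of such a proxy under stated assumptions: ClassToolProxy and Counter are hypothetical names, instance ids are random 12-character hex strings, and the func/args/instance_id parameters follow demo_class_tool above. It is not the framework's actual implementation.

```python
import uuid
from typing import Any, Dict, Optional


class ClassToolProxy:
    """Expose a class as one tool function: func + args + instance_id."""

    def __init__(self, cls: type) -> None:
        self.cls = cls
        self.instances: Dict[str, Any] = {}  # instance_id -> live object

    def __call__(self, func: str, args: Dict[str, Any],
                 instance_id: Optional[str] = None) -> Dict[str, Any]:
        if func == "__init__":
            # Instantiate and return a short id the model can quote later.
            new_id = uuid.uuid4().hex[:12]
            self.instances[new_id] = self.cls(**args)
            return {"instance_id": new_id}
        if instance_id not in self.instances:
            return {"error": "unknown instance_id; call '__init__' first"}
        method = getattr(self.instances[instance_id], func, None)
        if func.startswith("_") or not callable(method):
            return {"error": f"'{func}' is not an available func"}
        return {"result": method(**args)}


class Counter:
    """Toy stateful class standing in for something like an SSH client."""

    def __init__(self, start: int = 0) -> None:
        self.value = start

    def add(self, n: int) -> int:
        self.value += n
        return self.value


counter_tool = ClassToolProxy(Counter)
created = counter_tool("__init__", {"start": 10})
print(counter_tool("add", {"n": 5}, instance_id=created["instance_id"]))  # {'result': 15}
```

Returning errors as data rather than raising lets the model read the message as an Observation and correct itself, as it did in the RSS example.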

Let's revisit the first example, checking how long a machine has been running, and implement it with a class object.

Use class objects to view the running duration of the machine

```python
import os
import sys
from typing import Dict, Optional

import paramiko

sys.path.insert(0, os.path.split(os.path.realpath(__file__))[0] + "/../../")

from aibond import AI
from langchain import OpenAI

ai = AI()


class SshClient():
    """A tool that can connect to a remote server and execute commands to retrieve returned content."""

    _client = None

    def __init__(self, host: str, username: str = "root", password: Optional[str] = None):
        self._client = paramiko.SSHClient()
        # Accept unknown host keys automatically (convenient for a demo, unsafe in production).
        self._client.set_missing_host_key_policy(paramiko.AutoAddPolicy())
        self._client.connect(host, username=username, password=password)

    def exec_command(self, command: str) -> Dict:
        stdin, stdout, stderr = self._client.exec_command(command)
        # Wait for the remote command to finish and collect its exit code.
        retcode = stdout.channel.recv_exit_status()
        output_stdout = stdout.read().decode('utf-8')
        output_stderr = stderr.read().decode('utf-8')
        return {"stdout": output_stdout, "stderr": output_stderr, "exitStatus": retcode}


resp = ai.run("Show me how long the machine at IP address 8.130.35.2 has been up for", llm=OpenAI(temperature=0.2), tools=[SshClient], verbose=True)
print("=== resp ===")
print(resp)
```

• With an SSH client class, the original one-step command execution against an IP address becomes a two-step process: first we instantiate SshClient, then we call SshClient.exec_command(...) to run the command. Now, let's see whether the foundation model can grasp this approach.

Execution procedure of using class objects to view the running duration of the machine

```text
> Entering new AgentExecutor chain...
Action:
{
  "action": "SshClient",
  "action_input": {
    "sub_func": "__init__",
    "sub_args": {
      "host": "8.130.35.2",
      "username": "root",
      "password": ""
    }
  }
}
Observation: {'instance_id': 'cbbb660c0bc3'}
Thought: I need to use the instance_id to execute a command
Action:
{
  "action": "SshClient",
  "action_input": {
    "sub_func": "exec_command",
    "sub_args": {
      "command": "uptime"
    },
    "instance_id": "cbbb660c0bc3"
  }
}
Observation: {'stdout': ' 23:18:55 up 25 days, 8:11, 0 users, load average: 0.29, 0.55, 0.84\n', 'stderr': '', 'exitStatus': 0}
Thought: I have the answer
Action:
{
  "action": "Final Answer",
  "action_input": "This machine has been up for 25 days, 8 hours, and 11 minutes."
}
> Finished chain.

=== resp ===
This machine has been up for 25 days, 8 hours, and 11 minutes.
```

The foundation model understands the instantiation process well: it first instantiates SshClient with the IP address, then uses the returned instance_id to execute the command.

If class objects can be turned directly into tools, AI programming becomes significantly easier; some class objects might even serve as tools for agents without any additional processing. Could this approach also be applied to big data processing, by loading the data into an object and exposing methods the foundation model can use to analyze or digest it? And could the same idea be extended to summarizing large texts?
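As a hypothetical illustration of that idea, the class below holds a large text and exposes small methods (a paged read and a regular-expression search) so that no single tool call has to return the whole text. TextReader and its methods are illustrative names; read(limit) simply echoes the read func listed in demo_class_tool. Wrapped in a proxy like demo_class_tool, such a class could be handed to an agent.

```python
import re
from typing import Dict, List


class TextReader:
    """Holds a large text; the model pulls it in bounded pieces."""

    def __init__(self, text: str) -> None:
        self.text = text
        self.offset = 0  # position of the next unread character

    def read(self, limit: int = 1000) -> Dict[str, object]:
        """Return the next chunk of at most `limit` characters."""
        chunk = self.text[self.offset:self.offset + limit]
        self.offset += len(chunk)
        return {"chunk": chunk, "remaining": len(self.text) - self.offset}

    def grep(self, pattern: str, max_hits: int = 5) -> List[str]:
        """Return up to `max_hits` lines matching a regular expression."""
        regex = re.compile(pattern)
        hits = [line for line in self.text.splitlines() if regex.search(line)]
        return hits[:max_hits]


log = "INFO start\nERROR disk full\nINFO retry\nERROR disk full again\n"
reader = TextReader(log)
print(reader.grep("ERROR"))
print(reader.read(limit=11))
```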

The implementation of invoking class objects as tools has already been integrated into the framework. Feel free to give it a try!

https://github.com/sreworks/aibond/blob/master/aibond/core.py

For further exploration of class objects as tools, see the second article in this series.

When beginners delve into programming, the concept of OOP can initially seem complex. To aid their understanding, visual teaching methods often come into play, such as using analogies like hands and feet to explain the idea of a class. In my early days, I naively believed that if each class had these hands and feet, the entire program would come to life. However, as I wrote more code, I realized a gap existed. While we tend to view classes as static containers, real-world objects should exhibit initiative and dynamism. They should talk (communicate) and work (perform tasks). Just like in reality, each individual should be an independent entity capable of autonomous thought and action.

As mentioned earlier, our tool man can indeed accomplish this: the example above shows that a class object can be turned into a tool for the agent's use. Can we go further and embed a foundation model inside the class object itself to bring it to life? Then, when an agent calls it, the agent would get not just a result but an outcome-oriented conversation.

These explorations will continue in subsequent parts.

• ReAct: Synergizing Reasoning and Acting in Language Models

• Aibond Use Cases

Exploring DevOps in the Era of AI Foundation Models Part Ⅱ: Data Warehouse Based on LLMs
