Platform for AI (PAI) allows you to integrate Elasticsearch with a Retrieval-Augmented Generation (RAG)-based large language model (LLM) chatbot to improve the accuracy and diversity of the answers that the model generates. Elasticsearch provides efficient data retrieval and additional features such as dictionary configuration and index management. These features help the RAG-based LLM chatbot better identify user requirements and return more relevant and valuable answers. This article describes how to associate Elasticsearch with a RAG-based LLM chatbot when you deploy the chatbot. It also describes the basic features of a RAG-based LLM chatbot and the Elasticsearch-specific features that you can use with it.

Elastic Algorithm Service (EAS) is an online model service platform of PAI that allows you to deploy models as online inference services or AI-powered web applications. EAS provides features such as auto scaling and blue-green deployment. These features reduce the costs of developing stable online model services that can handle a large number of concurrent requests. In addition, EAS provides features such as resource group management and model versioning and capabilities such as comprehensive O&M and monitoring. For more information, see EAS overview.

With the rapid development of AI technology, generative AI has made remarkable achievements in fields such as text generation and image generation. However, as LLMs become widely used, their inherent limitations become apparent, such as hallucinations, a lack of up-to-date knowledge, and limited knowledge of specialized domains.

To address these challenges and improve the capabilities and accuracy of LLMs, RAG was developed. By integrating external knowledge bases, RAG significantly mitigates LLM hallucinations and enables LLMs to access and apply up-to-date knowledge. This makes it possible to customize LLMs for greater personalization and accuracy.

Alibaba Cloud Elasticsearch is a fully managed cloud service that is developed based on open source Elasticsearch and is compatible with all features of open source Elasticsearch. You can use this out-of-the-box service with pay-as-you-go billing. In addition to Elastic Stack components such as Elasticsearch, Logstash, Kibana, and Beats, Alibaba Cloud Elasticsearch provides the X-Pack commercial plug-in free of charge through its cooperation with Elastic. X-Pack provides the advanced features of the Elasticsearch Platinum edition, including security, SQL, machine learning, alerting, and monitoring. Alibaba Cloud Elasticsearch is widely used in scenarios such as real-time log analysis and processing, information retrieval, multidimensional data queries, and statistical data analytics. For more information, see What is Alibaba Cloud Elasticsearch?

EAS provides an in-house RAG solution with flexible parameter configurations. You can access RAG services through a web user interface (UI) or by calling API operations to configure a custom RAG-based LLM chatbot. The technical architecture of RAG consists of two core stages: retrieval and generation.

This example uses an Elasticsearch cluster to show how to deploy a RAG-based LLM chatbot by using EAS and Elasticsearch. The procedure consists of the following steps:

1. Prepare a vector database by using Elasticsearch

Create an Elasticsearch cluster and prepare the configuration items on which a RAG-based LLM chatbot depends to associate the chatbot with the Elasticsearch cluster.

2. Deploy a RAG-based LLM chatbot and associate it with the Elasticsearch cluster

Deploy a RAG-based LLM chatbot and associate it with the Elasticsearch cluster on EAS.

3. Use the RAG-based LLM chatbot

You can connect to the Elasticsearch cluster in the RAG-based LLM chatbot, upload business data files, and then perform knowledge Q&A.

Before you begin, make sure that a virtual private cloud (VPC), a vSwitch, and a security group are created. For more information, see Create a VPC with an IPv4 CIDR block and Create a security group.

Note: This practice is subject to the maximum number of tokens that the LLM service supports. It is designed to help you understand the basic retrieval feature of a RAG-based LLM chatbot.

Log on to the Alibaba Cloud Elasticsearch console. In the left-side navigation pane, click Elasticsearch Clusters. On the page that appears, click Create. The following table describes the key parameters. For information about other parameters, see Create an Alibaba Cloud Elasticsearch cluster.

| Parameter | Description |
|---|---|
| Region and Zone | The region and zone in which the cluster resides. Select the region in which EAS is deployed. |
| Instance Type | The type of the cluster. Select Standard Edition. |
| Password | The password that is used to access the cluster. Configure a password and save the password to your on-premises machine. |

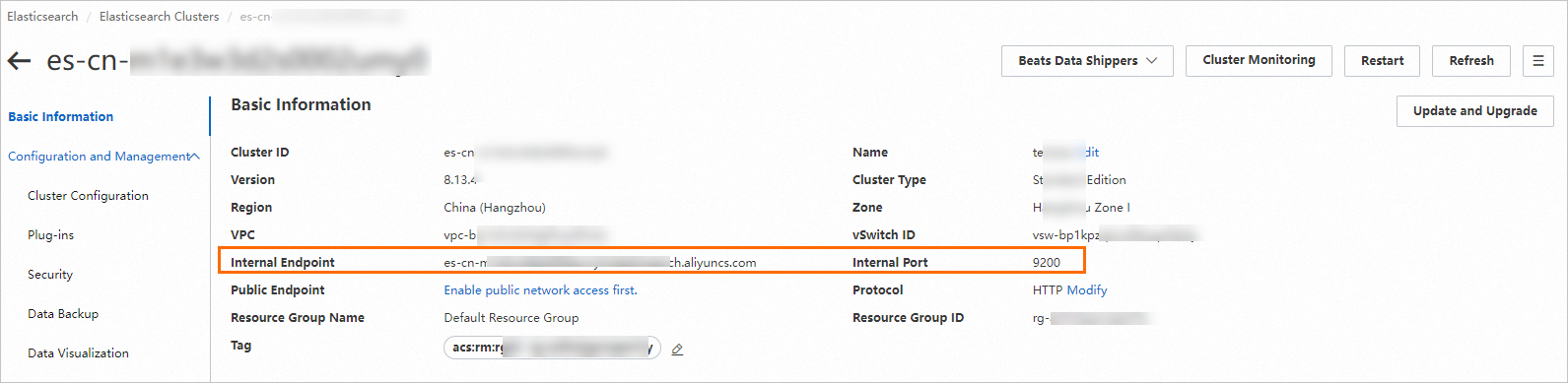

1. Prepare the URL of the Elasticsearch cluster.

Format: http://<Internal endpoint>:<Port number>.

Note: If you use an internal endpoint, make sure that the Elasticsearch cluster and RAG-based LLM chatbot reside in the same VPC. Otherwise, the connection fails.

2. Prepare the index name.

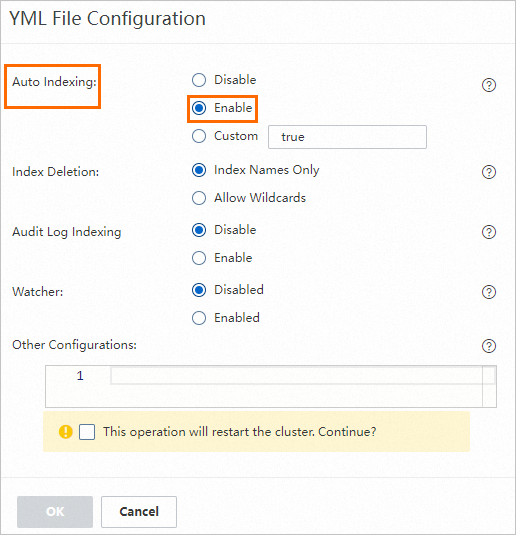

In the left-side navigation pane, choose Configuration and Management > Cluster Configuration. On the page that appears, click Modify Configuration in the upper-right corner of the YML File Configuration section. In the YML File Configuration panel, select Enable for the Auto Indexing parameter. For more information, see Configure the YML file.

After the settings are complete, you can configure a custom index name when you deploy a RAG-based LLM chatbot. For example, you can set the index name to es-test.

3. Prepare the username and password of the Elasticsearch cluster.

The default username of an Elasticsearch cluster is elastic. The password is the one you specify when you create the Elasticsearch cluster. If you forget your password, you can reset the password. For more information, see Reset the access password for an Elasticsearch cluster.
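The three configuration items above can be collected and checked locally before you deploy the chatbot. The following Python sketch is illustrative only: the class and function names are hypothetical, port 9200 is assumed to be the HTTP port of the cluster, and the index name check applies the general Elasticsearch index naming rules (lowercase only, no special characters such as `\ / * ? " < > | , #` or spaces, no leading `-`, `_`, or `+`, at most 255 bytes) rather than any PAI-specific requirement.

```python
from dataclasses import dataclass

# Characters that Elasticsearch rejects in index names (":" is rejected here too,
# because recent Elasticsearch versions no longer accept it).
_INVALID_INDEX_CHARS = set('\\/*?"<>| ,#:')


def is_valid_index_name(name: str) -> bool:
    """Check a name against the general Elasticsearch index naming rules."""
    if not name or name in (".", ".."):
        return False
    if name != name.lower():      # index names must be lowercase
        return False
    if name[0] in "-_+":          # must not start with -, _ or +
        return False
    if any(ch in _INVALID_INDEX_CHARS for ch in name):
        return False
    return len(name.encode("utf-8")) <= 255  # at most 255 bytes


@dataclass
class EsChatbotConfig:
    """Configuration items prepared in this step (hypothetical field names)."""
    endpoint: str                 # internal endpoint host name only, no scheme or port
    port: int = 9200              # assumption: the HTTP port of the cluster
    index_name: str = "es-test"
    username: str = "elastic"     # default username of the cluster
    password: str = ""

    def url(self) -> str:
        """Return the URL in the http://<Internal endpoint>:<Port number> format."""
        host = self.endpoint.strip()
        if not host or "/" in host or ":" in host:
            raise ValueError("endpoint must be a bare host name without scheme or port")
        if not 1 <= self.port <= 65535:
            raise ValueError(f"invalid port: {self.port}")
        return f"http://{host}:{self.port}"

    def validate(self) -> None:
        """Raise ValueError if any configuration item is unusable."""
        self.url()
        if not is_valid_index_name(self.index_name):
            raise ValueError(f"invalid index name: {self.index_name!r}")
        if not self.password:
            raise ValueError("password must not be empty")
```

For example, `EsChatbotConfig(endpoint="<Internal endpoint>", password="<password>").url()` yields the value to enter for Private Endpoint and Port during deployment, once `<Internal endpoint>` is replaced with the host name of your cluster.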

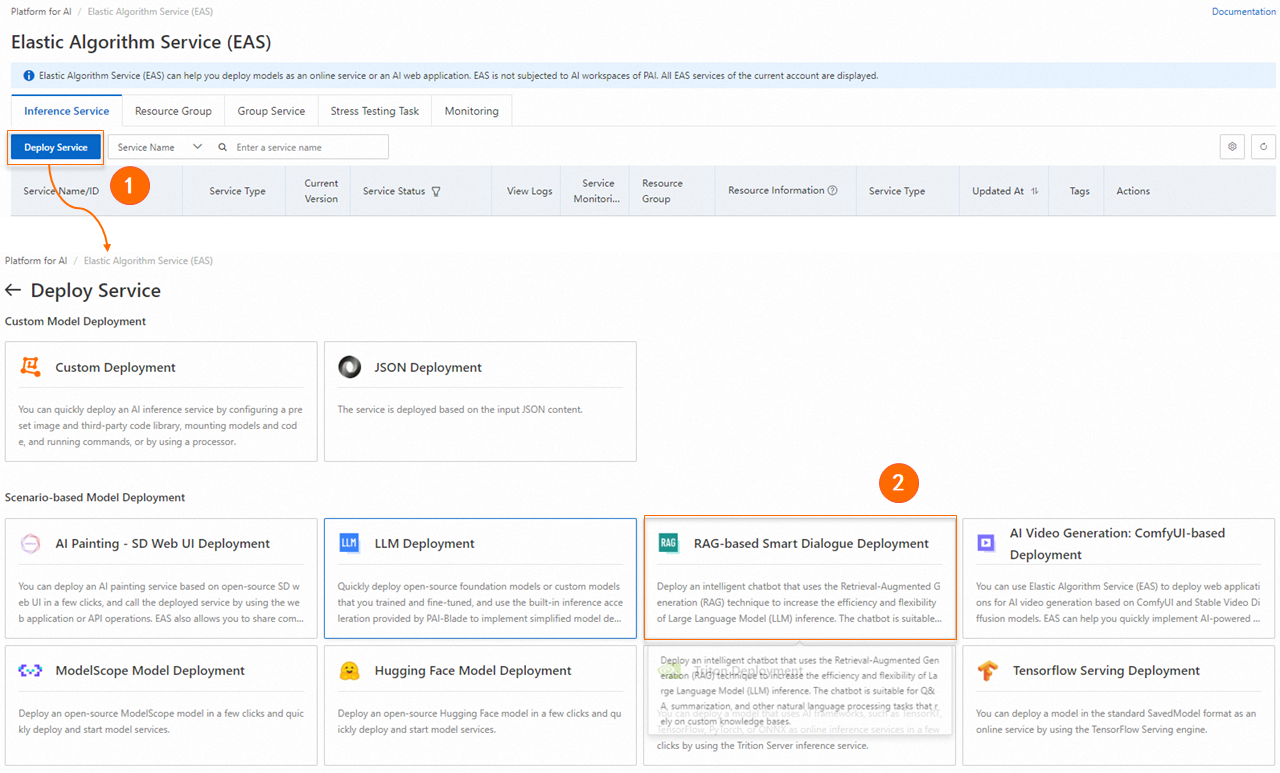

1. Log on to the PAI console. In the upper part of the page, select the region in which you want to create a workspace. In the left-side navigation pane, choose Model Training > Elastic Algorithm Service (EAS). On the page that appears, select the desired workspace and click Enter Elastic Algorithm Service (EAS).

2. On the Elastic Algorithm Service (EAS) page, click Deploy Service. In the Scenario-based Model Deployment section, click RAG-based Smart Dialogue Deployment.

3. On the RAG-based LLM Chatbot Deployment page, configure the key parameters described in the following table. For information about other parameters, see Step 1: Deploy the RAG-based chatbot.

| Section | Parameter | Description |
|---|---|---|
| Basic Information | Model Source | Select Open Source Model. |
| Basic Information | Model Type | The model type. In this example, Qwen1.5-1.8b is used. |
| Resource Configuration | Resource Configuration | The system recommends the appropriate resource specifications based on the selected model type. If you use other resource specifications, the model service may fail to start. |
| Vector Database Settings | Vector Database Type | Select Elasticsearch. |
| Vector Database Settings | Private Endpoint and Port | Enter the Elasticsearch cluster URL that you obtained in Step 2. Specify the value in the http://<Internal endpoint>:<Port number> format. |
| Vector Database Settings | Index Name | The name of the index. You can enter a new index name or an existing index name. If you use an existing index name, the index schema must meet the requirements of the RAG-based chatbot. For example, you can enter the name of the index that is automatically created when you deploy the RAG-based chatbot by using EAS. |
| Vector Database Settings | Account | Enter elastic. |
| Vector Database Settings | Password | The password that you prepared in Step 2. |
| VPC Configuration (Optional) | VPC | The VPC in which the Elasticsearch cluster resides. |
| VPC Configuration (Optional) | vSwitch | A vSwitch in the VPC in which the Elasticsearch cluster resides. |
| VPC Configuration (Optional) | Security Group Name | A security group in the VPC in which the Elasticsearch cluster resides. |

4. After you configure the parameters, click Deploy.

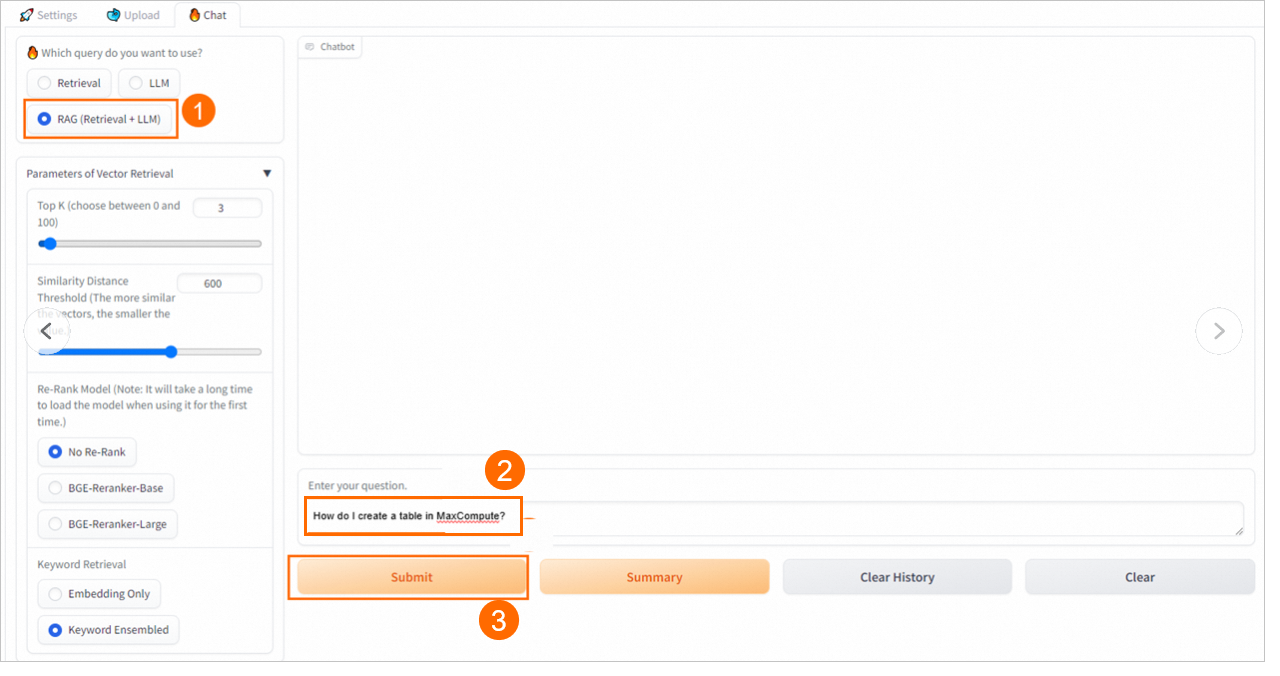

The following section describes how to use a RAG-based LLM chatbot. For more information, see RAG-based LLM chatbot.

The system recognizes and applies the vector database settings that are configured when you deploy the chatbot. Click Connect ElasticSearch to check whether the vector database in the Elasticsearch cluster is connected. If the connection fails, check whether the vector database settings are correct based on Step 2: Prepare configuration items. If the settings are incorrect, modify the configuration items and click Connect ElasticSearch to reconnect the Elasticsearch cluster.
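If the connection keeps failing, it can help to test the URL and credentials outside the web UI from a machine in the same VPC. The following Python sketch is an assumption-based illustration, not the check that Connect ElasticSearch performs: it sends an authenticated `GET /` request to the cluster root endpoint, which Elasticsearch answers with basic cluster information that includes `version.number`. The function names are hypothetical.

```python
import base64
import json
import urllib.request


def build_ping_request(es_url: str, username: str, password: str) -> urllib.request.Request:
    """Build an authenticated GET / request against the cluster root endpoint."""
    credentials = f"{username}:{password}".encode("utf-8")
    req = urllib.request.Request(es_url.rstrip("/") + "/", method="GET")
    # HTTP Basic authentication with the cluster username and password.
    req.add_header("Authorization", "Basic " + base64.b64encode(credentials).decode("ascii"))
    return req


def check_connection(es_url: str, username: str, password: str, timeout: float = 5.0) -> str:
    """Return the Elasticsearch version if the cluster is reachable with these credentials.

    Connection problems typically surface as urllib.error.URLError, and wrong
    credentials as urllib.error.HTTPError with status 401.
    """
    req = build_ping_request(es_url, username, password)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        info = json.load(resp)
    return info["version"]["number"]
```

A call such as `check_connection("http://<Internal endpoint>:<Port number>", "elastic", "<password>")` that raises an error usually points to the same cause as a failed connection in the web UI: a wrong URL, wrong credentials, or a client outside the VPC of the cluster.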

Upload your knowledge base files. The system automatically stores the knowledge base in the vector database in the PAI-RAG format for retrieval. You can also use existing knowledge bases in the database, but they must meet the PAI-RAG format requirements. Otherwise, errors may occur during retrieval.

1. On the Upload tab, configure the chunk parameters.

The following parameters control the granularity of document chunking and whether to enable Q&A extraction.

| Parameter | Description |
|---|---|
| Chunk Size | The size of each chunk. Unit: bytes. Default value: 500. |
| Chunk Overlap | The overlap between adjacent chunks. Default value: 10. |
| Process with QA Extraction Model | Specifies whether to extract Q&A information. If you select Yes, the system automatically extracts questions and corresponding answers in pairs after knowledge files are uploaded. This way, more accurate answers are returned in data queries. |

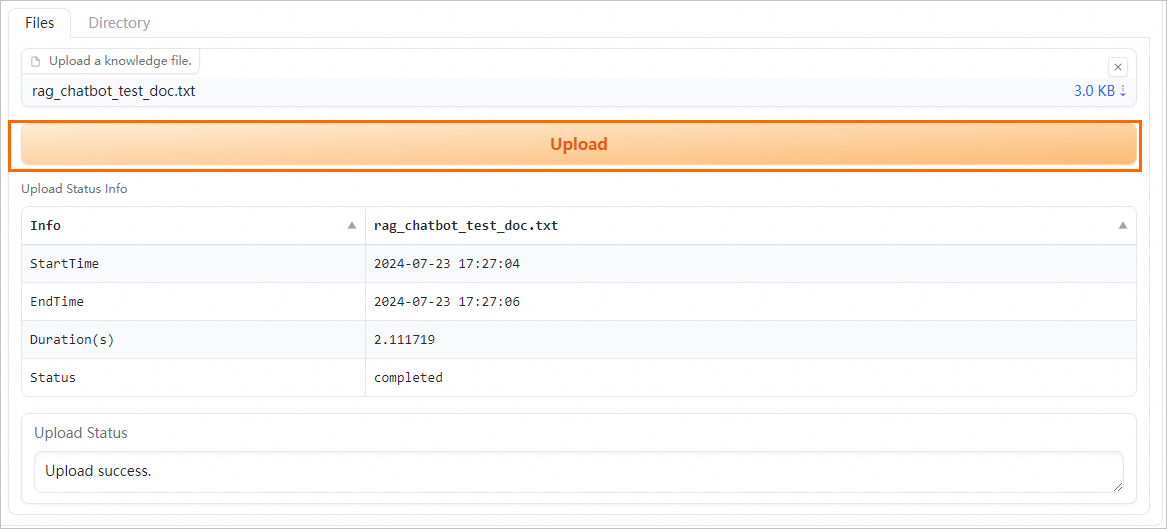

2. On the Files tab or Directory tab, upload one or more business data files, or upload a directory that contains the business data files. Supported file types: .txt, .pdf, Excel (.xlsx or .xls), .csv, Word (.docx or .doc), Markdown, and .html. Example: rag_chatbot_test_doc.txt.

3. Click Upload. The system performs data cleansing and semantic-based chunking on the business data files before uploading the business data files. Data cleansing includes text extraction and hyperlink replacement.
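To see how Chunk Size and Chunk Overlap from step 1 interact, the following Python sketch splits text into fixed-size windows in which adjacent windows share `chunk_overlap` characters. It is a simplification under stated assumptions: it counts characters rather than bytes, and it ignores the semantic-based chunking and data cleansing that the system actually performs, so it only illustrates the two parameters. The function name is hypothetical.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 500, chunk_overlap: int = 10) -> List[str]:
    """Split text into fixed-size windows; adjacent windows share chunk_overlap characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # each new window starts this far after the previous one
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window already reaches the end of the text
            break
    return chunks
```

With the default values, a 1,200-character text yields three chunks of 500, 500, and 220 characters, and each chunk repeats the last 10 characters of the previous one. A larger overlap preserves more context across chunk boundaries at the cost of storing more redundant text.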

The RAG-based LLM chatbot inserts the results returned from the vector database and your query into the selected prompt template, and then sends the resulting prompt to the LLM to generate an answer.
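The generation step can be pictured as filling a template with the retrieved chunks and the question. The following Python sketch is a minimal illustration of that idea only: the template text and the function name are hypothetical and are not the templates offered on the web UI.

```python
from typing import List

# Hypothetical template; the templates available on the web UI may differ.
PROMPT_TEMPLATE = (
    "Answer the question based on the following context.\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_prompt(question: str, retrieved_chunks: List[str], template: str = PROMPT_TEMPLATE) -> str:
    """Number the retrieved chunks and insert them together with the question into the template."""
    context = "\n".join(
        f"[{i}] {chunk.strip()}" for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    return template.format(context=context, question=question.strip())
```

The returned string is what would be sent to the LLM. Because the retrieved chunks become part of the prompt, the maximum number of tokens of the LLM service limits how many chunks can be included.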

Alibaba Cloud Elasticsearch comes with a built-in IK analysis plug-in named analysis-ik. The plug-in segments text based on dictionaries that contain common words of various categories: a built-in main dictionary and a built-in stopword dictionary. The main dictionary is used to segment complex text into words, and the stopword dictionary is used to remove meaningless high-frequency words. This improves retrieval efficiency and accuracy. Although the built-in dictionaries are comprehensive, they may not include terminology from specialized fields such as law and medicine, or the product names, company names, and brand names that appear in your knowledge base. To improve retrieval accuracy, you can create custom dictionaries based on your business requirements. For more information, see Use the analysis-ik plug-in.
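To illustrate why a missing dictionary entry matters, the following toy Python sketch segments whitespace-separated words with forward maximum matching against a main dictionary and then drops stopwords. This is a deliberately simplified stand-in, not the algorithm that analysis-ik implements (IK works on Chinese characters and offers more elaborate modes), and the function name and sample words are hypothetical.

```python
from typing import List, Set


def segment(text: str, main_dict: Set[str], stopwords: Set[str], max_words: int = 4) -> List[str]:
    """Toy dictionary-based segmentation: longest dictionary phrase first, then drop stopwords."""
    words = text.lower().split()
    tokens: List[str] = []
    i = 0
    while i < len(words):
        # Forward maximum matching: try the longest phrase that starts at position i.
        for n in range(min(max_words, len(words) - i), 0, -1):
            candidate = " ".join(words[i:i + n])
            if n == 1 or candidate in main_dict:
                break
        if candidate not in stopwords:
            tokens.append(candidate)
        i += n
    return tokens
```

Without a dictionary entry, "The cloud server is fast" is split into `["cloud", "server", "fast"]`, so a keyword query for the term "cloud server" can also match documents that mention "cloud" and "server" separately. After "cloud server" is added to the main dictionary, the result is `["cloud server", "fast"]`, and the term is matched as a unit.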

Prepare a custom main or stopword dictionary on your on-premises machine. The dictionary file must meet the following requirements:

The file name extension must be .dic. The file name must be 1 to 30 characters in length and can contain letters, digits, and underscores (_). For example, you can prepare a dictionary file named new_word.dic that contains the words cloud server and custom token, with one word per line.

After you prepare the dictionary file, upload it to the specified location. In this example, Rolling Update is used to describe how to upload the dictionary file. For more information, see Use the analysis-ik plug-in.
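Before you start the upload procedure, you can generate the dictionary file with a script. The following Python sketch is a hypothetical helper, not an Alibaba Cloud tool: it checks the file name rule described in this article and writes one word per line in UTF-8, skipping blank lines and duplicates. It assumes that the 1 to 30 character limit applies to the name without the .dic extension.

```python
import re
from pathlib import Path
from typing import Iterable

# Assumption: the 1-30 character limit applies to the name without the .dic extension.
_DIC_FILE_NAME = re.compile(r"[A-Za-z0-9_]{1,30}\.dic")


def write_dictionary(path: str, words: Iterable[str]) -> Path:
    """Write an IK dictionary file: UTF-8, one word per line, no blanks or duplicates."""
    target = Path(path)
    if not _DIC_FILE_NAME.fullmatch(target.name):
        raise ValueError(f"invalid dictionary file name: {target.name!r}")
    entries = [w.strip() for w in words if w.strip()]
    unique = list(dict.fromkeys(entries))  # removes duplicates and keeps the original order
    target.write_text("\n".join(unique) + "\n", encoding="utf-8")
    return target
```

For example, `write_dictionary("new_word.dic", ["cloud server", "custom token"])` creates the sample file described above in the current directory.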

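1. Go to the details page of the Elasticsearch cluster.

Log on to the Alibaba Cloud Elasticsearch console, click Elasticsearch Clusters in the left-side navigation pane, and then navigate to the desired cluster.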

2. In the left-side navigation pane of the page that appears, choose Configuration and Management > Plug-ins.

3. On the Built-in Plug-ins tab, find the analysis-ik plug-in and click Rolling Update in the Actions column.

4. In the Configure IK Dictionaries - Rolling Update panel, click Edit on the right side of the dictionary that you want to update, upload a dictionary file, and then click Save.

You can use one of the following methods to update a dictionary file:

Upload OSS File: Configure the Bucket Name and File Name parameters, and click Add.

Note

The file name extension must be .dic. The file name must be 1 to 30 characters in length and can contain letters, digits, and underscores (_). To modify an uploaded dictionary file, click the icon next to the file to download and modify it. Then, delete the file and upload the modified file again.

5. Click OK. After the dictionary file is updated, reconnect the RAG-based LLM chatbot to the Elasticsearch cluster on the web UI. For more information, see Connect to a vector database.

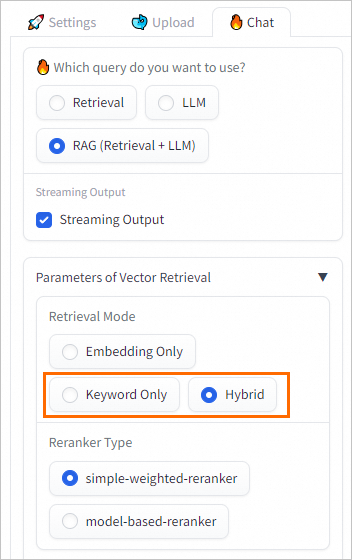

After the RAG-based LLM chatbot is reconnected to the Elasticsearch cluster, perform knowledge Q&A on the web UI. If you select Keyword Only or Hybrid for the Retrieval Mode parameter, you can perform full-text queries by using the updated dictionary file of the Elasticsearch cluster.
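To confirm that the updated dictionary is in effect before you run full-text queries, you can ask Elasticsearch how it tokenizes a sample sentence by using the `_analyze` API. The following Python sketch builds such a request; the function names are hypothetical, and the `ik_smart` analyzer (provided by analysis-ik) is an assumption, because this article does not state which analyzer the chatbot's index uses.

```python
import base64
import json
import urllib.request
from typing import Any, Dict, List


def build_analyze_request(es_url: str, index: str, text: str, username: str, password: str,
                          analyzer: str = "ik_smart") -> urllib.request.Request:
    """Build POST /<index>/_analyze to see how the given text is tokenized."""
    body = json.dumps({"analyzer": analyzer, "text": text}).encode("utf-8")
    req = urllib.request.Request(f"{es_url.rstrip('/')}/{index}/_analyze", data=body, method="POST")
    credentials = f"{username}:{password}".encode("utf-8")
    req.add_header("Authorization", "Basic " + base64.b64encode(credentials).decode("ascii"))
    req.add_header("Content-Type", "application/json")
    return req


def tokens_from_response(resp: Dict[str, Any]) -> List[str]:
    """Extract the token strings from an _analyze response body."""
    return [t["token"] for t in resp.get("tokens", [])]


def analyze(req: urllib.request.Request, timeout: float = 5.0) -> List[str]:
    """Send the request and return the list of tokens."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return tokens_from_response(json.load(resp))
```

If a term from your custom dictionary is returned as a single token, the Keyword Only and Hybrid retrieval modes can match it as a whole word.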

Elasticsearch provides the index management feature. Effective index management helps a RAG-based LLM chatbot efficiently and accurately retrieve valuable information from large datasets and generate high-quality answers. To manage indexes, perform the following steps:

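1. Go to the details page of the Elasticsearch cluster.

Log on to the Alibaba Cloud Elasticsearch console, click Elasticsearch Clusters in the left-side navigation pane, and then navigate to the desired cluster.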

2. In the left-side navigation pane of the page that appears, choose Data Visualization.

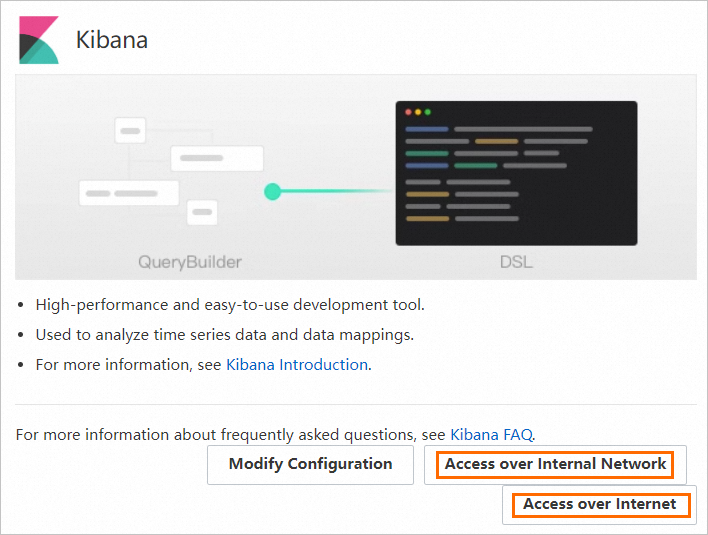

3. In the Kibana section of the page that appears, click Modify Configuration. On the Kibana Configuration page, configure a private or public IP address whitelist for Kibana.

For more information, see Configure a public or private IP address whitelist for Kibana.

4. Log on to the Kibana console.

Note

The Access over Internet or Access over Internal Network entry is displayed only after the Public Network Access or Private Network Access switch is turned on for Kibana.

On the Kibana logon page, enter the username and password of the Elasticsearch cluster. The default username is elastic.

5. View and manage indexes.

In the Kibana console, click the menu icon in the upper-left corner and choose Management > Stack Management. On the Stack Management page, click Index Management to view and manage indexes.
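As an alternative to browsing the Kibana UI, the same overview can be read from the Elasticsearch `_cat/indices` API, which returns one JSON object per index when `format=json` is set, with values as strings. The following Python sketch is an illustration under assumptions: the function names are hypothetical, and `es-test` is the sample index name used earlier in this article.

```python
import base64
import json
import urllib.request
from typing import Dict, List, Optional


def build_cat_indices_request(es_url: str, username: str, password: str,
                              pattern: str = "*") -> urllib.request.Request:
    """Build GET /_cat/indices/<pattern> that returns name, health, and document count as JSON."""
    url = f"{es_url.rstrip('/')}/_cat/indices/{pattern}?format=json&h=index,health,docs.count"
    req = urllib.request.Request(url, method="GET")
    credentials = f"{username}:{password}".encode("utf-8")
    req.add_header("Authorization", "Basic " + base64.b64encode(credentials).decode("ascii"))
    return req


def doc_counts(rows: List[Dict[str, Optional[str]]]) -> Dict[str, int]:
    """Map index names to document counts; closed indexes report no count and are skipped."""
    return {r["index"]: int(r["docs.count"]) for r in rows if r.get("docs.count") is not None}


def list_indices(req: urllib.request.Request, timeout: float = 5.0) -> Dict[str, int]:
    """Send the request and summarize the response."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return doc_counts(json.load(resp))
```

Checking the document count of the chatbot's index after an upload is a quick way to confirm that the knowledge base files were written to Elasticsearch.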
