The Elastic Algorithm Service (EAS) module of Platform for AI (PAI) is a model serving platform for online inference scenarios. You can use EAS to deploy a large language model (LLM) with a few clicks and then call the model by using the Web User Interface (WebUI) or API operations. After you deploy an LLM, you can use the LangChain framework to build a Q&A chatbot that is connected to a custom knowledge base. You can also use the inference acceleration engines provided by EAS, such as BladeLLM and vLLM, to improve concurrency and reduce latency.

LLMs such as the Generative Pre-trained Transformer (GPT) and Tongyi Qianwen (Qwen) series have attracted significant attention, especially for inference tasks. You can select from a wide range of open source LLMs based on your business requirements, and EAS lets you deploy mainstream open source LLMs as inference services with a few clicks. Supported LLMs include Llama 3, Qwen, Llama 2, ChatGLM, Baichuan, Yi-6B, Mistral-7B, and Falcon-7B. This article describes how to deploy an LLM in EAS and call the model.

Before you begin, make sure that PAI is activated and a default workspace is created. For more information, see Activate PAI and create a default workspace.

If you use a Resource Access Management (RAM) user to deploy the model, make sure that the RAM user has the permissions to use EAS. For more information, see Grant the permissions that are required to use EAS.

The inference acceleration engines provided by EAS support only the following models: Qwen, Llama 2, Baichuan-13B, and Baichuan2-13B.
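The limit above can be encoded as a simple pre-deployment check. This is a minimal sketch, assuming model names shaped like the Model Type values shown in the console (for example, Qwen1.5-7b or Llama2-13B). The function names and the prefix-matching rule are illustrative assumptions and are not part of any EAS API.

```python
import re

# Model families that, according to this article, the EAS inference
# acceleration engines support. Names are normalized (lowercase, no
# separators) before comparison. Assumed matching rule: prefix match.
ACCELERATED_FAMILIES = ("qwen", "llama2", "baichuan13b", "baichuan213b")


def normalize(model_type: str) -> str:
    """Lowercase a model name and drop spaces, hyphens, underscores, and dots."""
    return re.sub(r"[\s\-_.]", "", model_type.lower())


def supports_acceleration(model_type: str) -> bool:
    """Return True if model_type starts with one of the supported family names."""
    name = normalize(model_type)
    return any(name.startswith(family) for family in ACCELERATED_FAMILIES)
```

For example, `supports_acceleration("Qwen1.5-7b")` returns True, while `supports_acceleration("ChatGLM3-6B")` returns False, so you would keep Inference Acceleration set to Not Accelerated for the latter.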

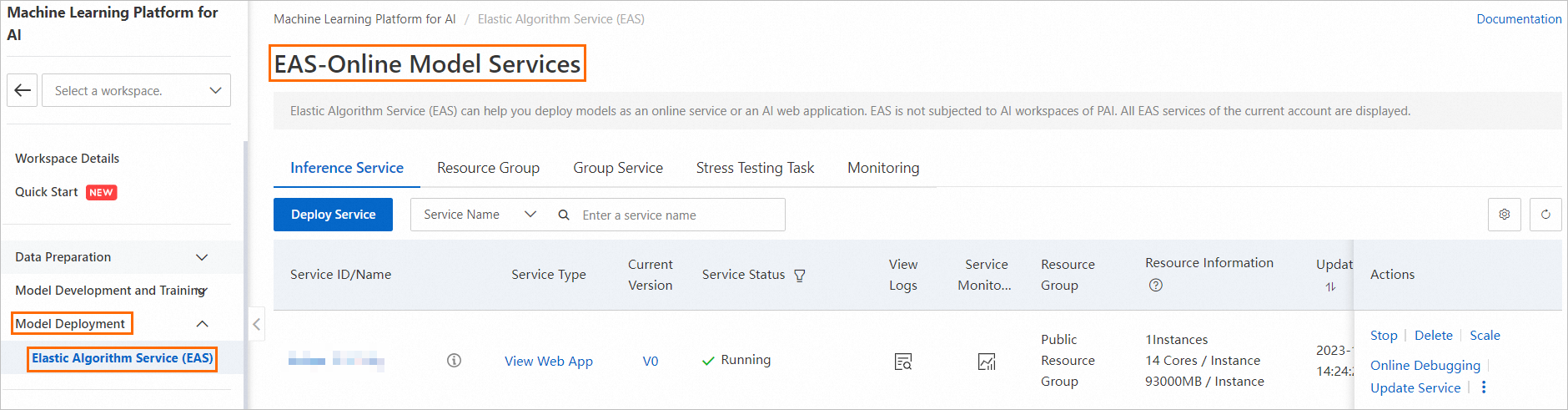

1. Go to the EAS-Online Model Services page.

2. On the Elastic Algorithm Service (EAS) page, click Deploy Service. In the dialog box that appears, select LLM Deployment and click OK.

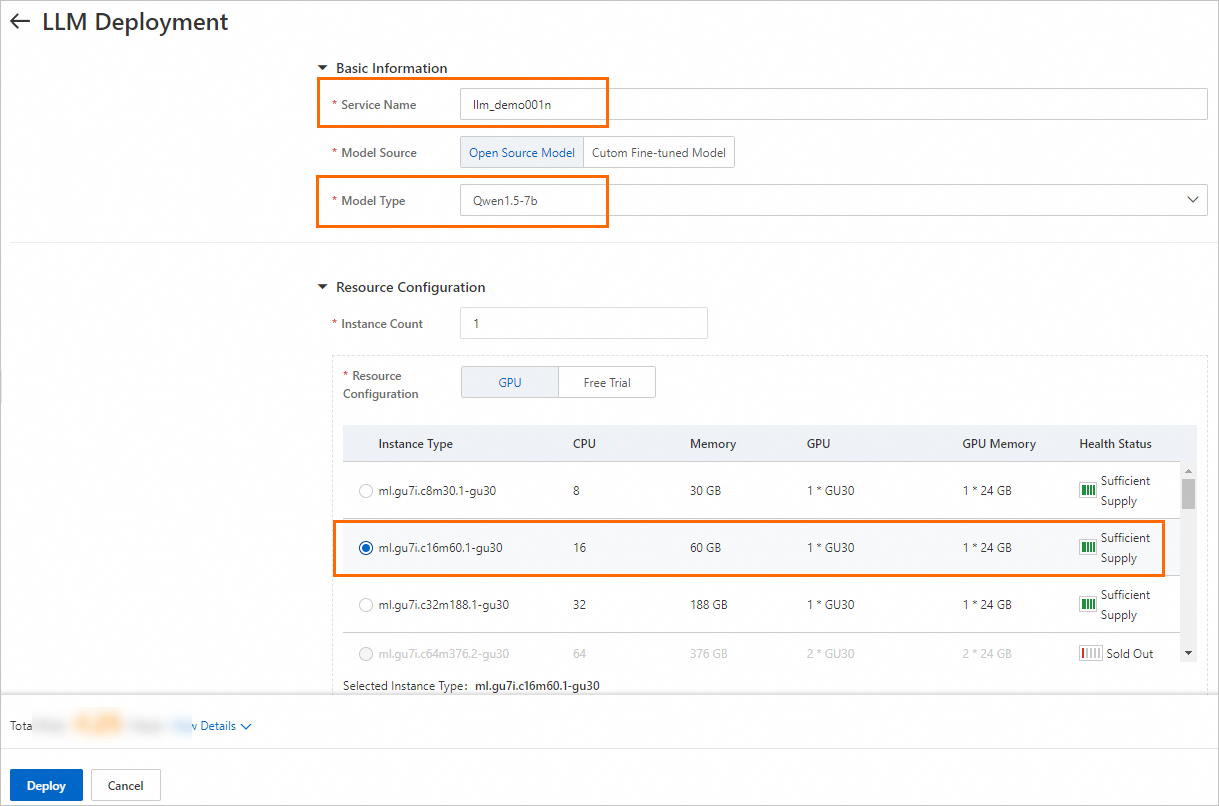

3. On the LLM Deployment page, configure the parameters. The following table describes the required parameters. Retain the default settings for other parameters.

| Parameter | Description |
| --- | --- |
| Service Name | The name of the service. In this example, the service is named llm_demo001. |
| Model Type | The model that you want to deploy. In this example, Qwen1.5-7b is used. EAS provides various model types, such as ChatGLM3-6B and Llama2-13B. You can select a model type based on your business requirements. |
| Resource Configuration | In this example, the Instance Type parameter is set to ml.gu7i.c16m60.1-gu30 for cost efficiency. Note: If the resources in the current region are insufficient, you can deploy the model in the Singapore region. |
| Inference Acceleration | Specifies whether to enable inference acceleration. In this example, Not Accelerated is used. |

4. Click Deploy. Deployment takes approximately five minutes.
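Because deployment takes a few minutes, a script that deploys the service and then calls it needs to wait until the service is ready. The following is a minimal polling sketch under stated assumptions: `get_status` is a hypothetical caller-supplied function that returns the service status string, and the status names "Running" and "Failed" are assumed to match what the EAS console displays. The 600-second default timeout is an arbitrary margin over the roughly five-minute deployment time.

```python
import time
from typing import Callable


def wait_until_running(
    get_status: Callable[[], str],
    timeout_s: float = 600.0,
    interval_s: float = 15.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_status() until it returns "Running" or the timeout expires.

    get_status is a hypothetical, caller-supplied function. The status names
    "Running" and "Failed" are assumptions. Raises RuntimeError on "Failed"
    and TimeoutError if the service never reaches "Running".
    """
    waited = 0.0
    while True:
        status = get_status()
        if status == "Running":
            return status
        if status == "Failed":
            raise RuntimeError("service deployment failed")
        if waited >= timeout_s:
            raise TimeoutError(f"service still {status!r} after {waited:.0f} s")
        sleep(interval_s)  # injectable so tests can skip real waiting
        waited += interval_s
```

Passing `sleep` as a parameter keeps the loop testable without real delays; in normal use the default `time.sleep` applies.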

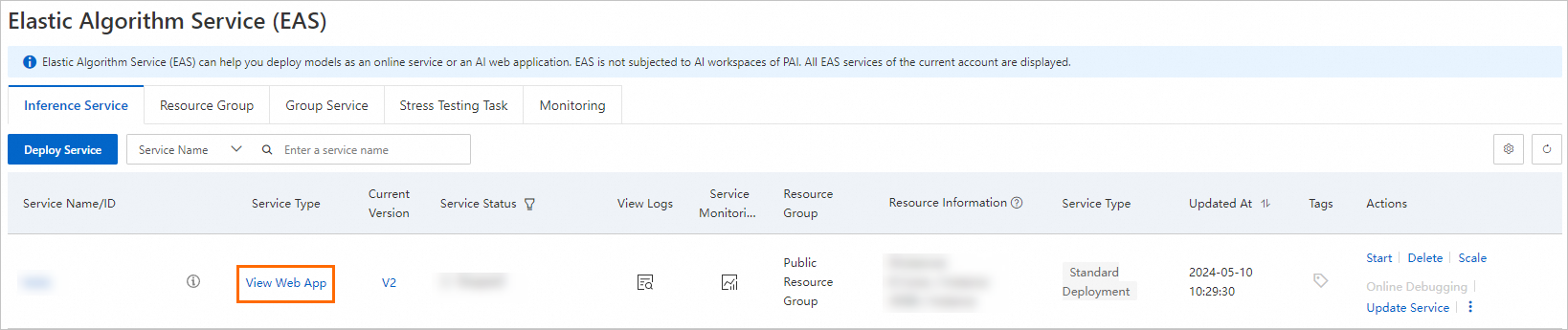

1. After the service is deployed, find it in the service list and click View Web App in the Service Type column to open the web application.

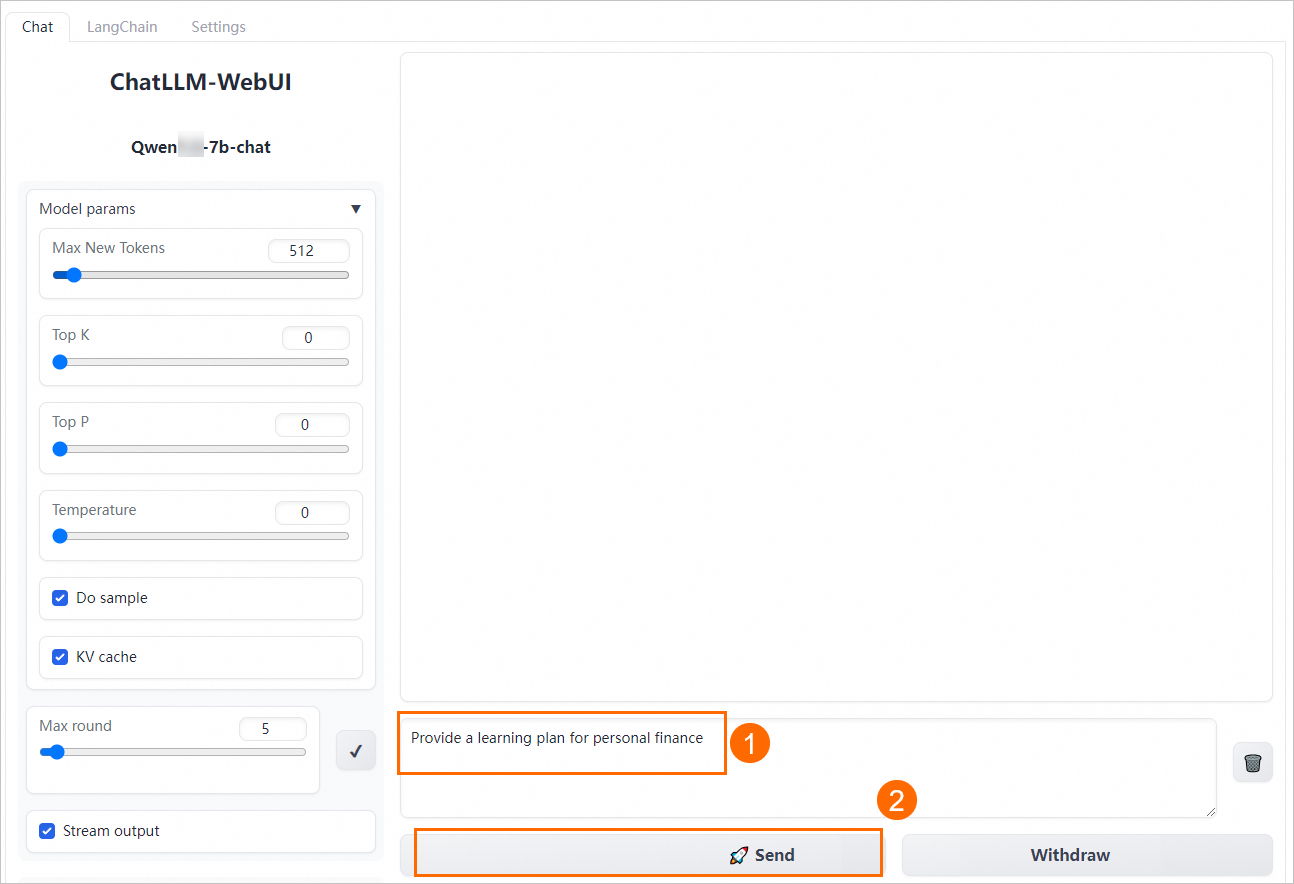

2. Perform model inference by using the WebUI.

Enter a sentence in the input text box and click Send to start a conversation. Sample input: Provide a learning plan for personal finance.
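Besides the WebUI, the deployed service can be called through its API. The sketch below builds an HTTP POST request with Python's standard library, assuming that you copy the service endpoint and token from the service's invocation information in the EAS console and send the token in an `Authorization` header. The JSON body `{"prompt": ...}` is an assumed payload format; the actual request schema depends on the deployed model and acceleration engine, so check the API reference for your service before relying on it.

```python
import json
import urllib.request


def build_llm_request(endpoint: str, token: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request to an EAS LLM service.

    endpoint and token come from the EAS console. The {"prompt": ...}
    body is an assumed payload format, not a confirmed EAS schema.
    """
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Authorization": token, "Content-Type": "application/json"},
        method="POST",
    )


def call_llm(endpoint: str, token: str, prompt: str, timeout_s: float = 60.0) -> str:
    """Send the request and return the raw response body as text."""
    req = build_llm_request(endpoint, token, prompt)
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return resp.read().decode("utf-8")
```

A call would look like `call_llm("<service endpoint>", "<token>", "Provide a learning plan for personal finance.")`, with both placeholders replaced by the values shown for your service.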
