By JV Roig

Welcome to part 2 in this series of generative AI deployment approaches on Alibaba Cloud.

In part 1 of this series, we tackled IaaS (Infrastructure as a Service) genAI deployments, using Elastic Compute Service (ECS). As we saw from part 1, this is a very powerful and flexible way to deploy generative AI. Unfortunately, it comes with a lot of steps, and lots of management overhead because we're responsible for the operation and maintenance of the entire stack.

Could we make that easier, faster, and with less overhead? Yes, we can!

Today, we'll tackle the PaaS (Platform as a Service) approach, using Alibaba Cloud Platform for AI (PAI). We'll see how a PaaS approach helps us reduce the operational overhead involved, and lets us deploy useful generative AI services in minutes.

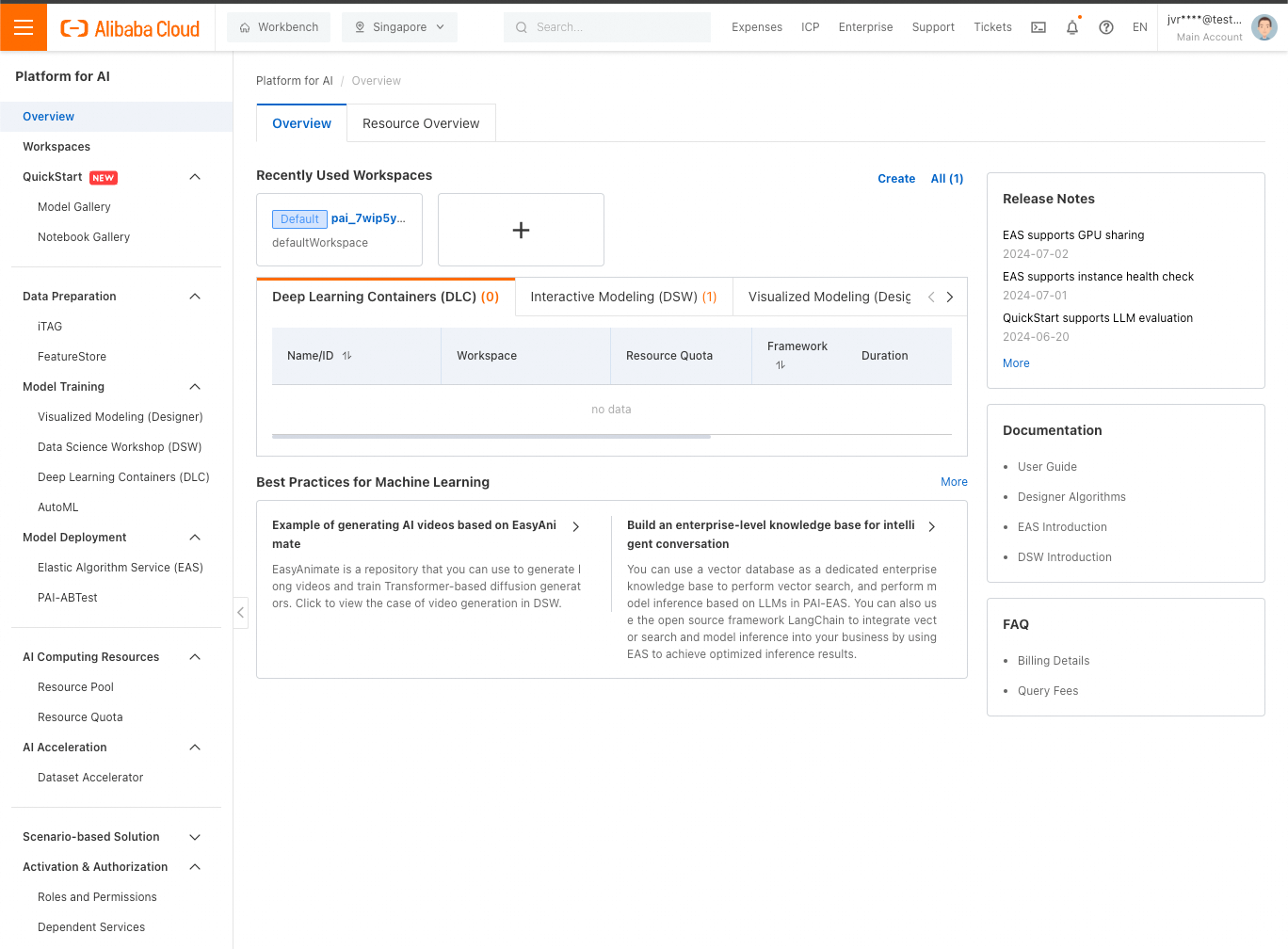

Log in to your Alibaba Cloud web console, and then search for PAI. Click the result that appears (Platform for AI), and you’ll see the PAI console:

We can deploy an LLM quickly from either QuickStart (near the top of the left-hand sidebar) or EAS/Elastic Algorithm Service (near the middle of the left-hand sidebar). Either way, the service is deployed as an EAS instance, so you’ll end up in EAS regardless.

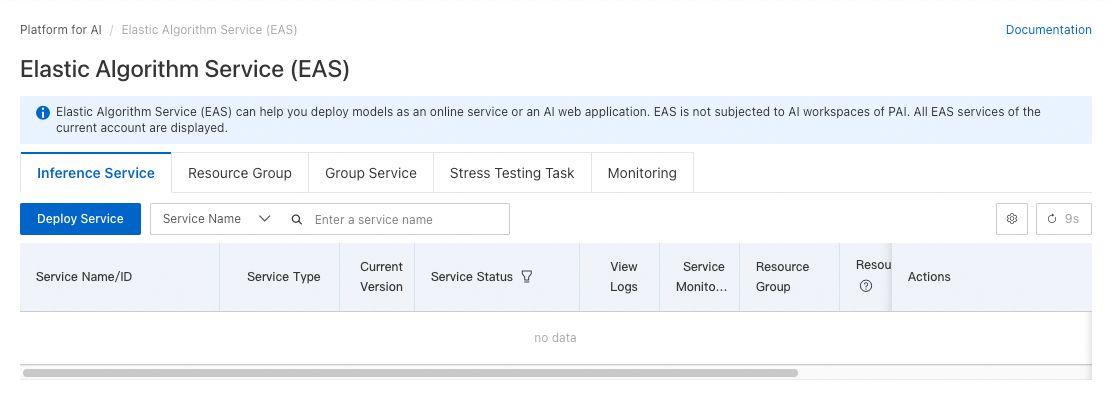

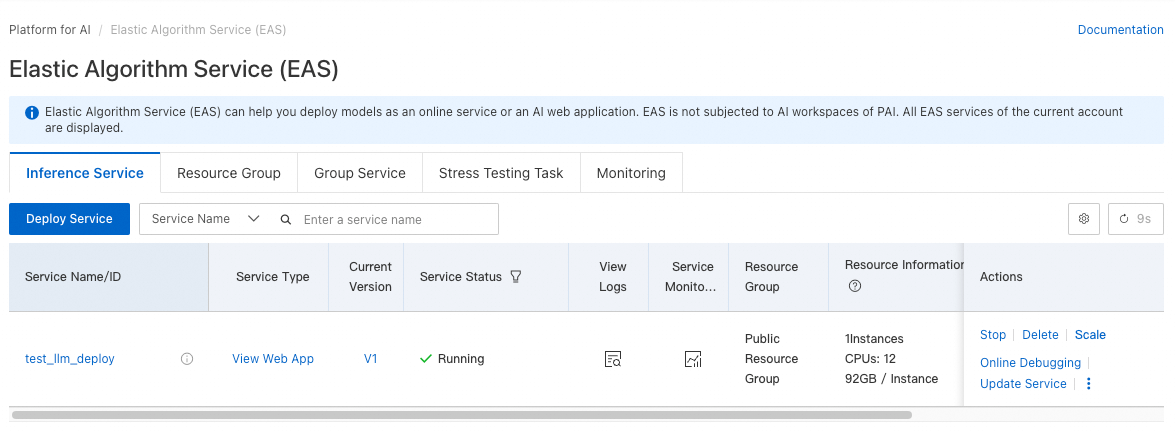

Let's jump straight into EAS. Click Elastic Algorithm Service under Model Deployment, and you'll see this screen:

Click Deploy Service.

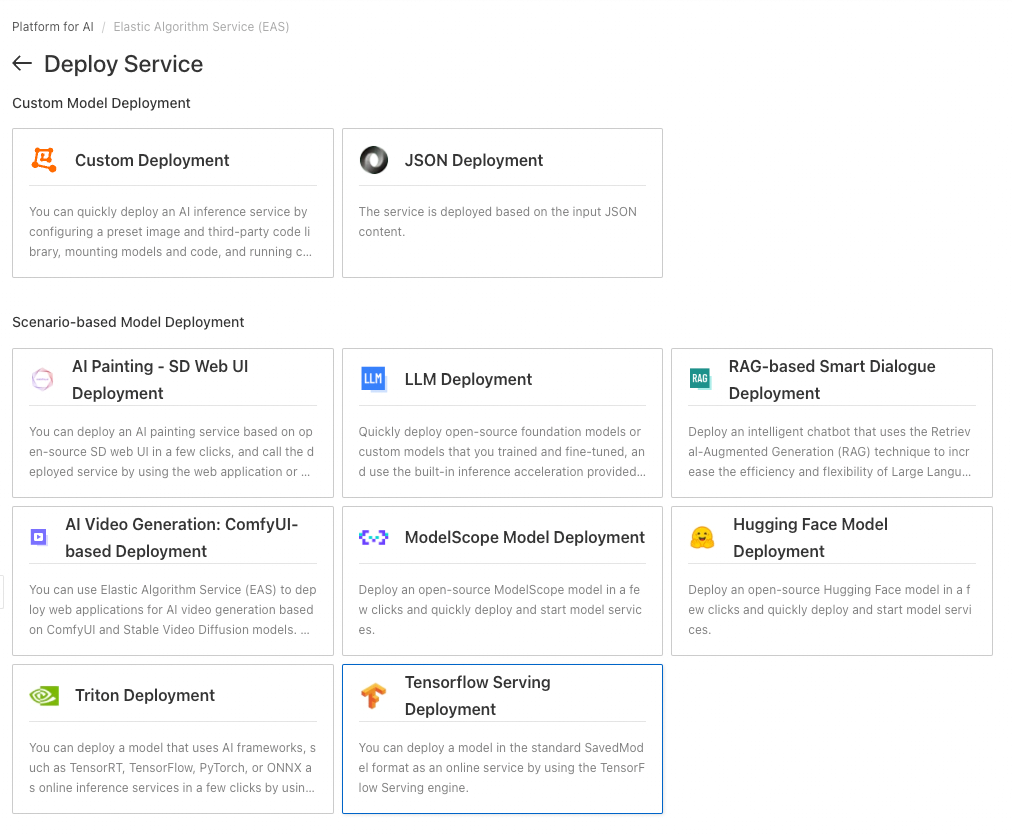

For this tutorial, click LLM Deployment under Scenario-based Model Deployment.

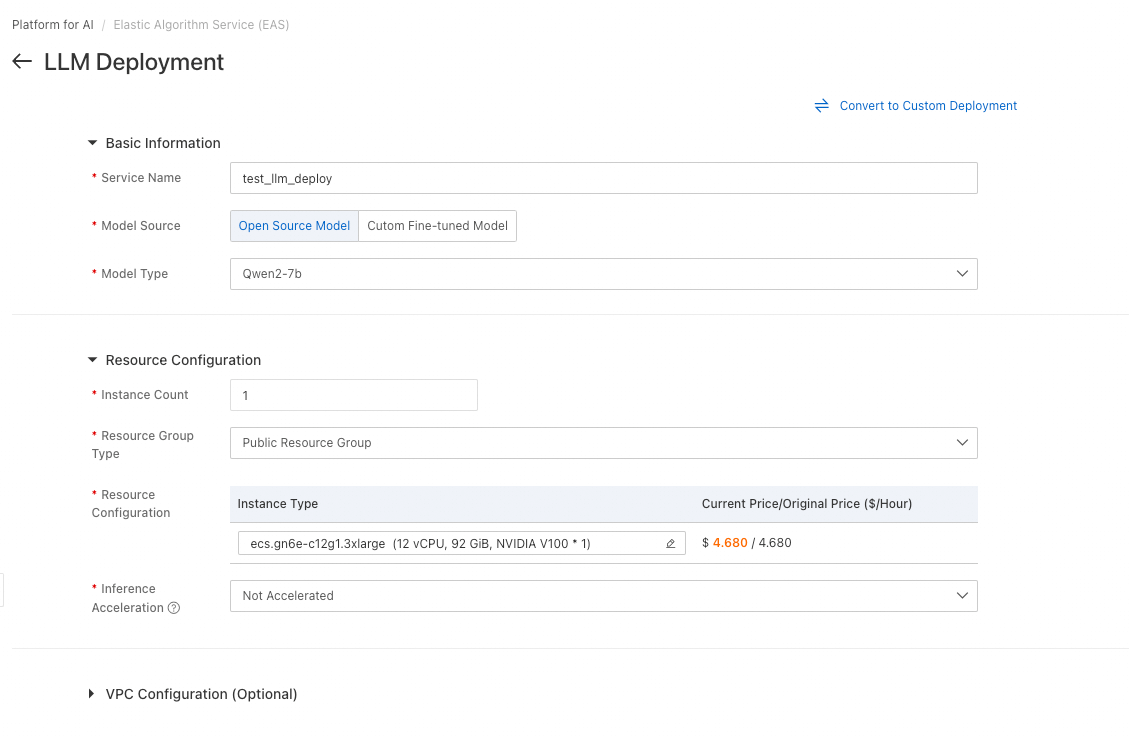

For this sample deployment, you can follow the settings as seen in the screenshot above:

• We give a name to our service, such as test_service_deployment

• We choose Open Source Model, and pick a small model like Qwen2-7b (note: be careful not to accidentally choose the much larger 72b version, which needs far more GPU resources)

• We retain the default instance type, but remove acceleration (BladeLLM needs a different GPU type; that’s not important right now for this basic tutorial)

That’s all we need to change. Click Deploy.

Unlike our ECS-based IaaS genAI deployment, this is all we need to do to create our infrastructure! With just a few clicks, our infrastructure is set up in minutes.

That’s the power and convenience of using a Platform-as-a-Service approach to genAI.

It can take a few minutes to finish deploying, and then you’ll see its status change to Running:

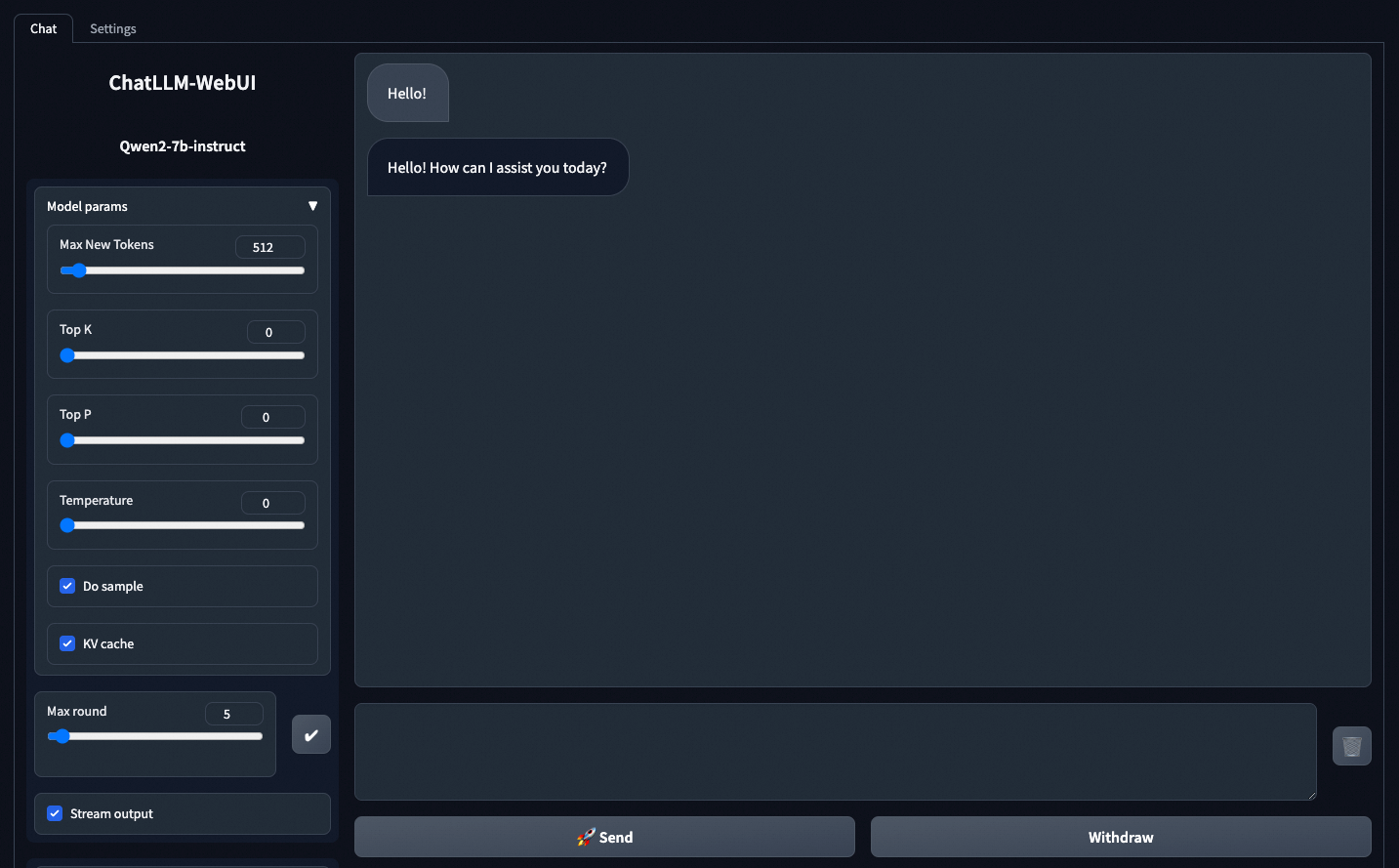

There’s a View Web App link. Click it to open a demo chat application that’s built into the service, so you can easily test the model hands-on:

The very first message you send takes a little while, because the model first has to be loaded into memory. After that initial load, your next messages will be much faster.
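Because that first request can be slow, scripts that call a freshly deployed service may want to retry instead of failing on the first timeout. Here is a minimal, generic sketch of a retry helper with exponential backoff; `call_with_retries` and its parameters are hypothetical names for illustration, not part of any PAI SDK.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    attempts: int = 5,
    delay_s: float = 2.0,
    backoff: float = 2.0,
) -> T:
    """Call fn(), retrying on any exception with exponential backoff.

    Hypothetical helper: useful for the first request to a new LLM service,
    which may be slow or time out while the model is loading into memory.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[Exception] = None
    wait = delay_s
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except Exception as err:  # e.g. a timeout during the cold start
            last_error = err
            if attempt < attempts:
                time.sleep(wait)  # wait before the next try
                wait *= backoff   # then wait longer each time
    assert last_error is not None
    raise last_error  # every attempt failed: surface the last error
```

In practice you would wrap your actual API call in a zero-argument function (for example a `lambda`) and pass it in; once the model is warm, the first attempt normally succeeds and no retries happen.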

Of course, that web app isn’t really our main concern; it’s just a bonus. Now let’s start integrating our LLM service into our applications using the API!

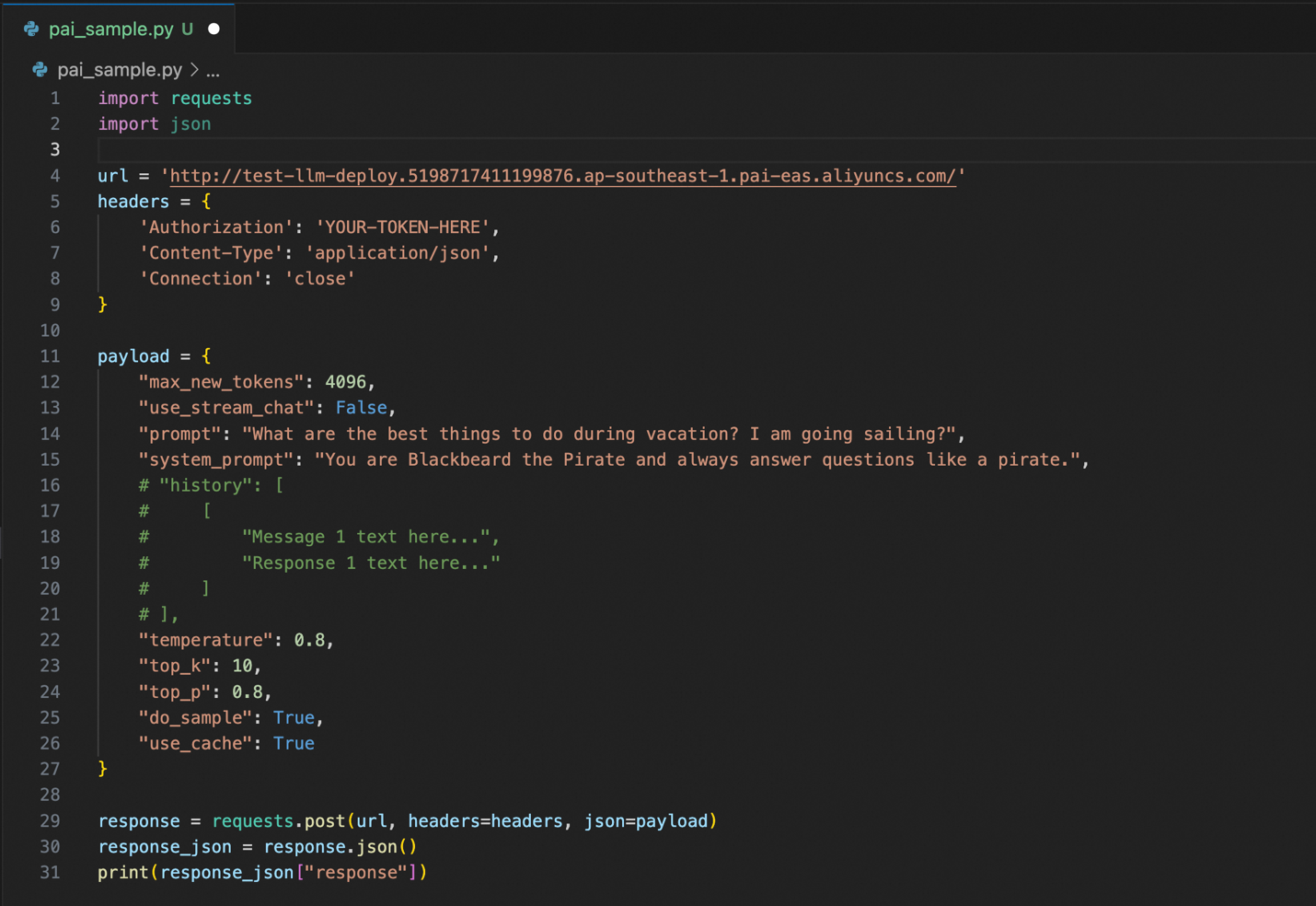

Here's sample code that uses our newly-deployed LLM API service:

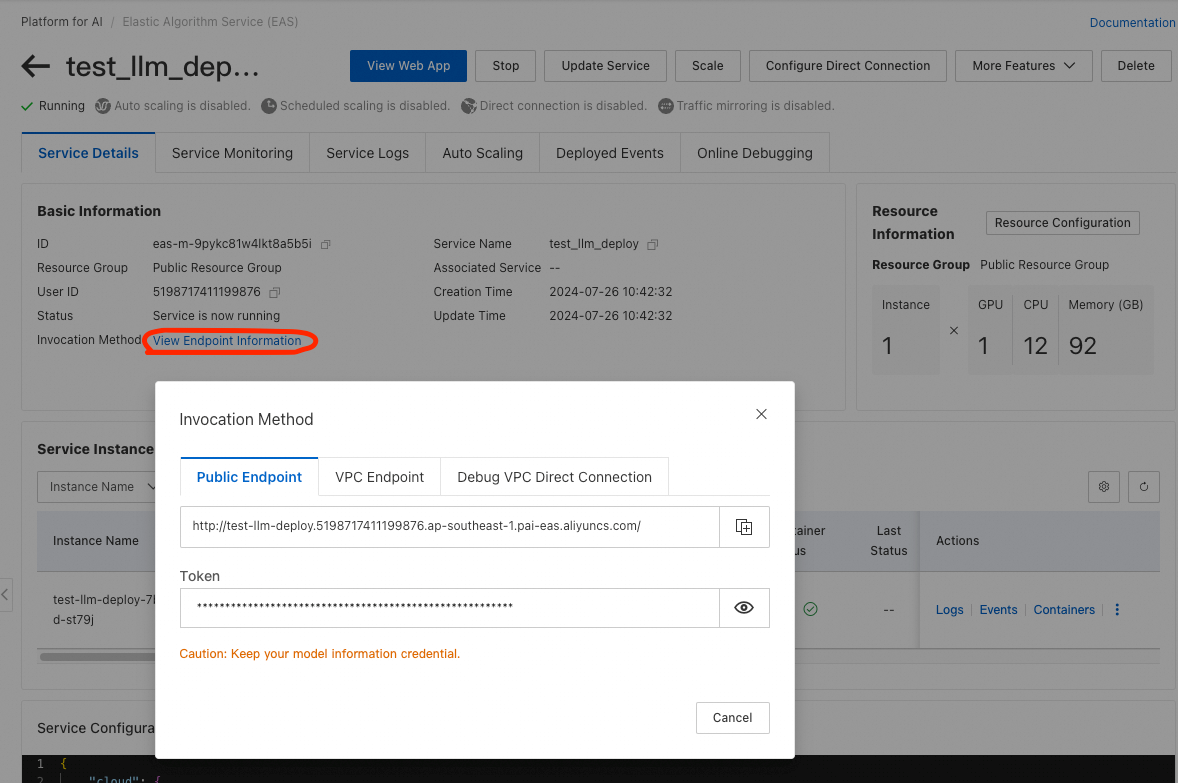

You’ll have to replace the url and Authorization values (lines 4 and 6 of the sample) with your own from PAI EAS. In the PAI EAS console, click the name of your deployed service, and then click View Endpoint Information on the Service Details screen that appears. A pop-up showing your endpoint URL and authentication token will appear.
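For readers who prefer text to a screenshot, here is a minimal sketch of the same kind of request using only the Python standard library. It assumes your deployment exposes an OpenAI-compatible chat completions route (for example `<your endpoint URL>/v1/chat/completions`) and accepts the token directly in the `Authorization` header; the exact path and payload your service accepts may differ, so compare it against the sample code and the endpoint information in PAI EAS. The function names and the `model` value are hypothetical.

```python
import json
import urllib.request


def build_chat_request(
    url: str,
    token: str,
    system_prompt: str,
    user_prompt: str,
    max_tokens: int = 512,
) -> urllib.request.Request:
    """Build a POST request for an (assumed) OpenAI-compatible chat endpoint."""
    payload = {
        # Hypothetical model name; change or drop it to match your deployment.
        "model": "Qwen2-7B-Instruct",
        "messages": [
            {"role": "system", "content": system_prompt},  # e.g. "talk like a pirate"
            {"role": "user", "content": user_prompt},
        ],
        "max_tokens": max_tokens,
    }
    headers = {
        "Content-Type": "application/json",
        # Token copied from View Endpoint Information in the PAI EAS console.
        "Authorization": token,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request: urllib.request.Request, timeout_s: float = 120.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout_s) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Usage would look like `send(build_chat_request(url, token, "You are a pirate.", "Give me ideas for a sailing vacation."))`. Keeping request construction separate from sending makes it easy to inspect the exact headers and JSON body before anything goes over the network.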

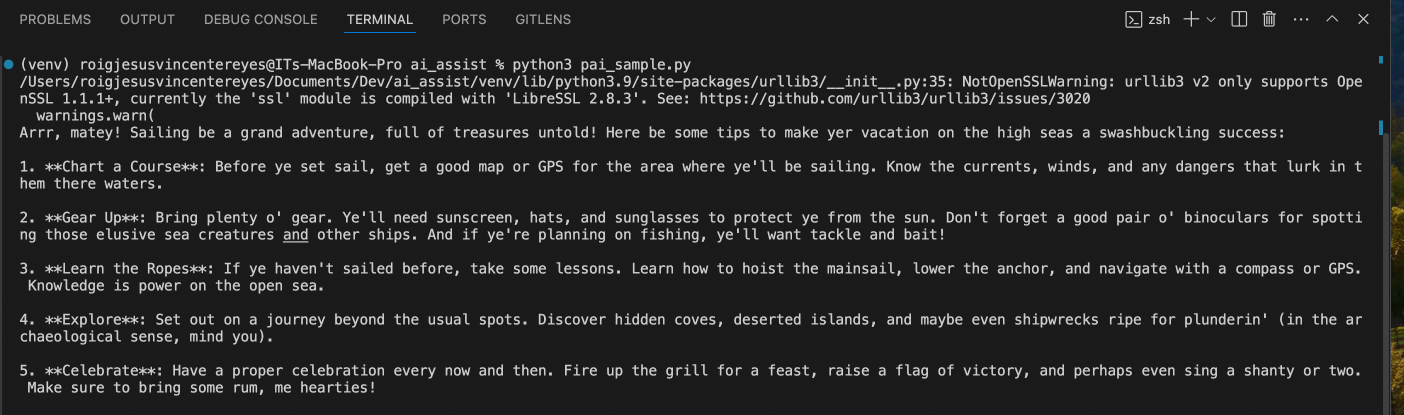

Back to our Python code. If we run it, we get the following response:

Just like in our ECS-based (IaaS) genAI deployment, the LLM responded like a pirate (because of our system prompt) and has given us ideas for our sailing vacation (because of our prompt).
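To use the reply inside an application rather than just printing the raw JSON, you need to pull out the generated text. This sketch assumes an OpenAI-style response shape (`choices[0].message.content`); if your EAS service returns a different layout, adjust the lookups. `extract_reply` is a hypothetical helper name.

```python
from typing import Any, Dict


def extract_reply(response: Dict[str, Any]) -> str:
    """Return the assistant's text from an OpenAI-style chat completion.

    Assumes {"choices": [{"message": {"content": "..."}}]}; this layout is
    an assumption, not confirmed for every EAS deployment type.
    """
    choices = response.get("choices") or []
    if not choices:
        raise ValueError(f"no choices in response: {response!r}")
    message = choices[0].get("message") or {}
    content = message.get("content")
    if not isinstance(content, str):
        raise ValueError("first choice has no text content")
    return content.strip()  # drop leading/trailing whitespace


if __name__ == "__main__":
    # Made-up sample response, for illustration only.
    sample = {"choices": [{"message": {"role": "assistant", "content": "  Arr, matey!  "}}]}
    print(extract_reply(sample))  # prints: Arr, matey!
```

Raising a `ValueError` on an unexpected shape, instead of returning an empty string, makes it obvious when the service's response format differs from what the code assumes.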

Compared to our previous deployment from Part 1 of this series, everything was easier and faster to deploy and use: minutes instead of the hours it took with ECS!

Now, you might ask: JV, is there an EVEN FASTER way?

Yes there is!

Next time, in Part 3, we'll examine that even faster way: Alibaba Cloud Model Studio.

See you then!

ABOUT THE AUTHOR: JV is a Senior Solutions Architect in Alibaba Cloud PH, and leads the team's data and generative AI strategy. If you think anything in this article is relevant to some of your current business problems, please reach out to JV at jv.roig@alibaba-inc.com.
