By Wenyun

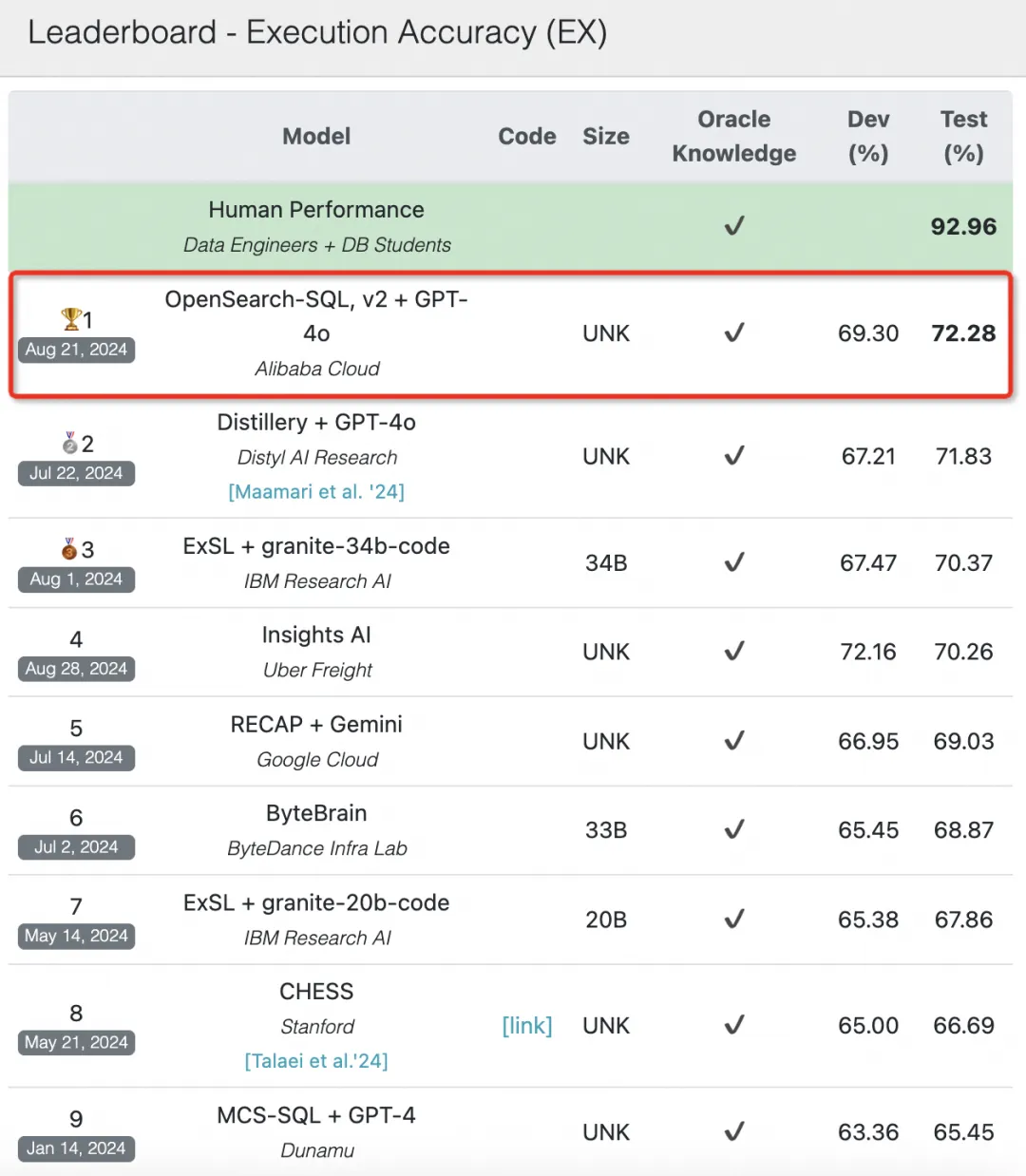

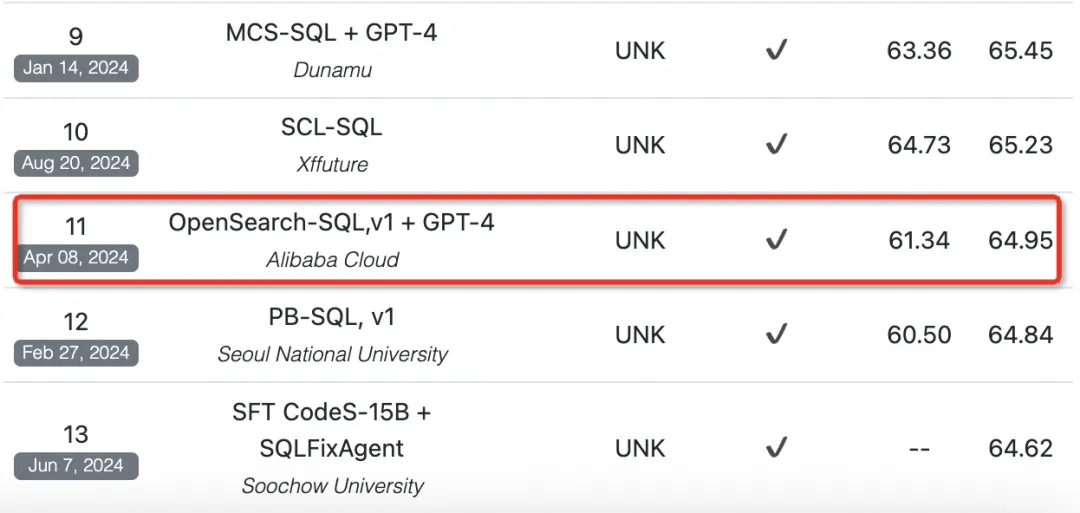

The Text-to-SQL task aims to convert natural language queries into Structured Query Language (SQL), enabling non-technical users to access and manipulate databases easily. Recently, Alibaba Cloud's OpenSearch engine has achieved top ranking on the BIRD dataset leaderboard for Text-to-SQL tasks, thanks to its consistency alignment technology. This article will introduce the evolution of Text-to-SQL technology and provide an in-depth analysis of the OpenSearch-SQL approach.

Source: 2024.8.29

Additionally, the Text-to-SQL feature is now available in Alibaba Cloud OpenSearch. Welcome to try it out.

Platform: https://www.alibabacloud.com/help/en/open-search/search-platform/

Research in the Text-to-SQL field has a long history, with the core focus being on how to enable people to ask questions in natural language and receive precise SQL query results. In the past, due to the complex grammar and logical requirements, the Text-to-SQL field was mainly of importance in academia. However, with the development of large model technologies, industrial-grade Text-to-SQL solutions began to emerge.

The main challenge in Text-to-SQL technology lies in accurately parsing user intent, identifying entities and relationships in the question, and mapping them to the tables, columns, and corresponding SQL operations in the database. This process not only requires the model to have a strong language comprehension capability but also a deep understanding of SQL syntax, along with good generalization across various database structures.

To advance the field, a series of public datasets and benchmark tests, such as WikiSQL, Spider, and BIRD, have been introduced. They provide standards for model training and evaluation, promoting technical exchange and competition. These rich resources have enabled Text-to-SQL systems to evolve from handling simple queries to managing complex SQL queries involving multiple inferences, comparison operations, and aggregate functions, greatly expanding their application scenarios.

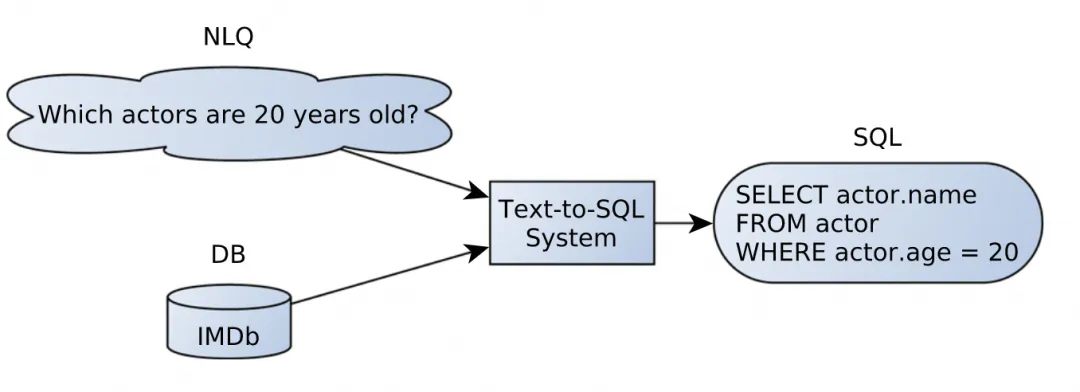

Below is a simple example of Text-to-SQL:

A Survey on Deep Learning Approaches for Text-to-SQL

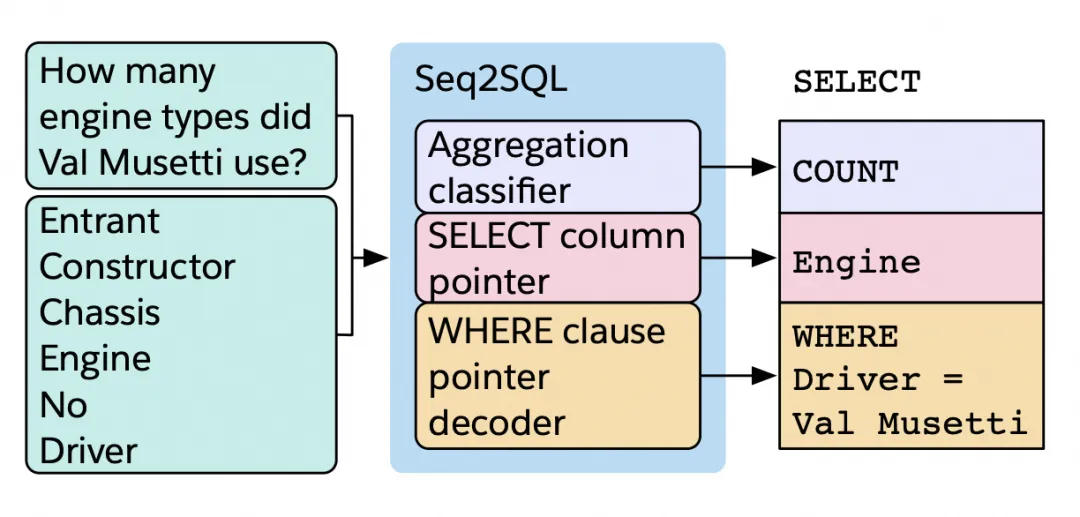

These methods are based on the structure of SQL, decomposing the SQL generation process into multiple sub-modules, such as SELECT, AGG functions, and WHERE conditions. During the subsequent generation, specific methods are chosen for each slot in these modules, enhancing the flexibility and accuracy of the generated SQL.

Seq2SQL is an early representative of this approach, using a neural network's classification task to predict and fill in the content of slots within the decomposed structure. Although this architecture-based decomposition significantly simplifies the complexity of the Text-to-SQL task, it also limits its ability to handle more complex SQL. For example, for relatively simple datasets such as WikiSQL, this method can achieve good results; however, for problems involving complex syntax such as Spider, this method often fails to achieve the desired results.

Seq2SQL: Generating Structured Queries from Natural Language Using Reinforcement Learning

Other similar approaches include Coarse2Fine and RYANSQL. These not only generate the contents of slots within a specific structure but also attempt to have the model first generate the structure of the natural language query (NLQ). This enhancement improves their scalability when handling complex syntax and diverse database environments.

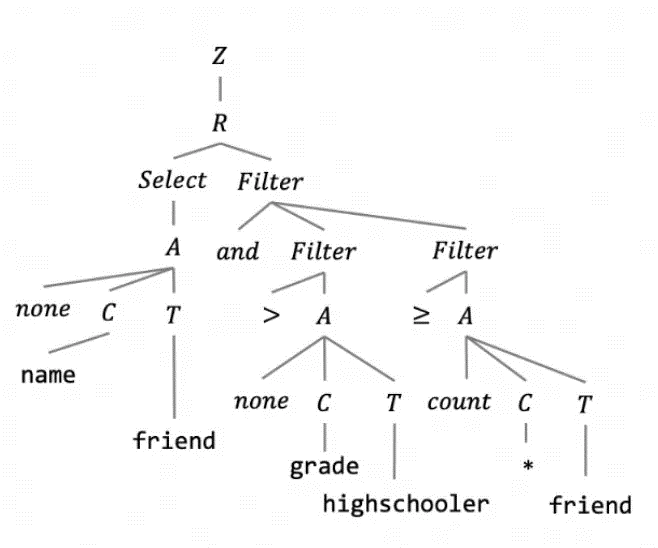

Some researchers have found that, compared with directly generating SQL, it is easier to maintain consistency with natural language by generating other content during the decoding process. This involves first generating an intermediate language, which could be an existing language or one specifically constructed for the task, before generating the SQL, known as the intermediate language-based approach.

Towards Complex Text-to-SQL in Cross-Domain Database with Intermediate Representation

For example, IRNet, as shown in the figure, constructs an intermediate language specifically for SemQL, allowing the model to simplify the process of generating SQL through this intermediate representation. Once the intermediate language is generated, the process continues to generate the final SQL.

Traditional model-based methods also include algorithms that use pre-trained models in place of traditional encoders, analyze syntax trees with graph structures, and focus on filtering database information. Overall, Sketch-based and intermediate language-based methods serve as supplements to address the limitations of model capabilities. However, the effectiveness of the ultimately generated SQL heavily relies on the representational power of the manually designed structures by researchers, which restricts the transferability of these methods.

As the LLM capabilities continue to improve, LLM-driven approaches are showing stronger transferability and reasoning abilities compared with traditional methods, bringing the Text-to-SQL task into a new phase. In this phase, more complex SQL tasks can be effectively handled without being constrained by manually designed frameworks. From the perspective of datasets, the difficulty of tasks faced by LLM-driven methods has gradually shifted from Spider to BIRD, indicating a significant improvement in handling more complex queries.

For example, the classic model T5-Base, which is used in traditional methods, achieves 71.1% accuracy on Spider but only 7.06% on BIRD. On the other hand, GPT-4 reaches 83.9% accuracy on Spider and 54.89% on BIRD. This comparison reflects the notable advantages of LLM-driven methods in terms of transferability and tackling more complex problems.

Although there isn't yet a clearly defined unified framework for LLM-driven Text-to-SQL tasks, the current effective frameworks can generally be summarized into the following four parts:

1. Preparation Phase: Gather the necessary information needed by the database.

2. Extraction Phase: Extract the required information based on the specific question to help reduce the complexity of the task for the model.

3. Generation Phase: Use the large model to generate SQL based on all the prepared information, while also designing some strategies for generating SQL.

4. Optimization Phase: Automatically refine the SQL based on certain rules or the execution results.

Among the LLM-driven methods, some representative models include:

These methods have all achieved good results in practical applications, showcasing the potential of large model technology in Text-to-SQL tasks.

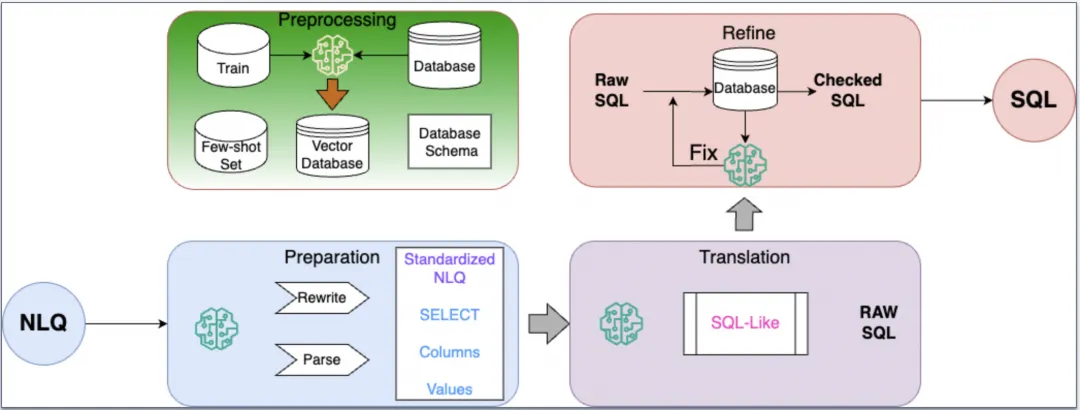

After analyzing LLM-based Text-to-SQL methods, we proposed OpenSearch-SQL to provide a standardized process for Text-to-SQL approaches and to address some common issues present in current methods. OpenSearch-SQL comes in two versions, both adhering to a multi-agent framework as follows:

In the OpenSearch-SQL, v1 (hereafter referred to as v1) version, we first defined the aforementioned Text-to-SQL framework.

Despite the v1 version achieving good results, through in-depth analysis, we found that during the multi-agent collaboration, the complexity of tasks in the generation phase and the failure to follow instructions were the main reasons for the LLM generating inaccurate SQL. The specific issues are as follows:

1. High complexity in the generation phase:

The process of converting SQL components such as tables, columns, and values into a complete SQL query involves complex reasoning. Current methods often require the model to directly complete this conversion, which undoubtedly increases the difficulty of the generation task.

2. Failure to follow instructions:

i. Field and value extraction: The extracted content is incomplete or inconsistent.

ii. Generation phase: The model does not fully utilize the extracted information during the generation phase.

iii. SQL style mismatch: Although the generated SQL may be logically sound, it may not match the style expected by the database.

iv. Ignoring requirements: Even when requirements are clearly stated in the prompt, the LLM might still overlook these requirements.

To solve the problems in the v1 version, in OpenSearch-SQL, v2, we defined two problems at the beginning:

To lower the complexity of the SQL generation process, we introduced a novel approach to decompose the generation task. While some existing methods break down the SQL generation into sub-tasks and then merge them, in real-world scenarios, this decomposition can lengthen the generation chain and introduce errors during the decomposition and recombination processes, making it difficult to effectively combine the individual sub-SQLs. Therefore, our goal was to design a more efficient decomposition method that would simplify the generation process while reducing error accumulation, thus improving the overall accuracy and efficiency of SQL generation.

We propose progressively generating different parts of the SQL, such as SELECT, WHERE, GROUP BY, and others. Therefore, we use a COT approach to gradually generate and analyze the SELECT, Column, and Value in SQL and the SQL itself.

What is the phone number of the school that has the highest average score in Math?#reason: The question want to know the phone number of the school, so the SQL SELECT schools.Phone and the condition is the school that has the highest average score in Math.

#columns: schools.Phone, schools.CDSCode, satscores.AvgScrMath

#values: highest average score in Math refers to ORDER BY satscores.AvgScrMath DESC LIMIT 1

#SELECT: phone number of the school refers to schools.Phone

#SQL-like: SELECT schools.Phone FROM schools ORDER BY satscores.AvgScrMath DESC LIMIT 1

#SQL: SELECT T1.Phone FROM schools AS T1 INNER JOIN satscores AS T2 ON T1.CDSCode = T2.cds ORDER BY T2.AvgScrMath DESC LIMIT 1The benefits of such an approach over decomposing tasks and merging them are:

For the second issue, we first analyzed a classic method to improve model performance on subtasks: Post-training.

While post-training methods such as SFT, RLHF, and DPO can lead to a decrease in general capabilities, they are effective in quickly aligning the style of subtasks. However, this alignment often comes with certain challenges:

Therefore, when aligning subtasks, it is necessary to carefully balance the advantages and potential risks, seeking a method that achieves alignment without compromising general capabilities.

In practice, we found that large base models often fail to follow instructions accurately when dealing with complex tasks, which is a significant reason why they perform worse than SFT models. So, can we ensure better instruction adherence in the large models within the Agent framework?

With this idea in mind, we conducted a series of experiments and observed two key phenomena:

1. Difficulty Correlation: When a complex task is decomposed into simpler subtasks, the adherence to instructions for these subtasks is higher.

2. Generation Variability: Even for SQL queries that the model can generate correctly, there is still a certain probability of errors, and this probability is positively correlated with the difficulty of the problem. Experimentally, the same prompt can result in about a 10% difference in bad cases between two experiments.

Based on these observations, we implemented a Double Check + Vote mechanism in OpenSearch-SQL. This mechanism works through the following steps:

a) Task Decomposition: Decompose the task into simpler subtasks to reduce the complexity of what the LLM needs to handle. This also makes it easier to check the quality of instruction adherence by the LLM and allows for re-generation if necessary. The steps involved in this task are:

i) Reduce the complexity of instructions through decomposition.

ii) Since the decomposed subtasks are simpler, it is easier to verify the results of the LLM's instruction adherence. In practice, this step can even be done through a simple check for alignment without needing the LLM to work within the Agent.

b) SQL Error Correction: Further correct the SQL based on the analysis of its execution results.

i) Use different prompts for different types of errors.

ii) Execute multiple generated SQL results for voting to reduce the impact of model variability.

c) Vote: With the self-consistency and vote mechanism, choose the SQL result with the highest consistency as the answer, and among those with the same result, select the one with the shortest execution time. This step improves both the accuracy and efficiency of the SQL.

In terms of consistent alignment, there is still much room for optimization. By atomizing model outputs, there is significant potential to raise the upper limit of Text-to-SQL tasks. By atomizing Agent tasks, large models can quickly build chains for various tasks and have the capability for hot-swapping, allowing for flexible integration with different tasks. This mechanism not only enhances the model's adaptability but also helps developers rapidly implement specific functionalities based on particular needs, thereby improving overall efficiency and effectiveness.

After understanding the specific methods and performance of OpenSearch-SQL, businesses and developers can now quickly get started with its powerful features. Currently, OpenSearch-SQL is officially available, and users can try it out on the OpenSearch-SQL search development platform, experiencing its excellent performance and application effect.

44 posts | 1 followers

FollowFarruh - June 30, 2025

Regional Content Hub - August 4, 2025

Regional Content Hub - August 4, 2025

Regional Content Hub - July 23, 2025

Regional Content Hub - July 28, 2025

Alibaba Cloud MaxCompute - January 22, 2021

44 posts | 1 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More OpenSearch

OpenSearch

OpenSearch helps develop intelligent search services.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn MoreMore Posts by Alibaba Cloud Data Intelligence