The speech mainly covers three aspects:

• Overview of Alibaba Cloud MaxCompute

• Evolution of Alibaba's Data Platform

• MaxCompute 2.0 Moving Forward

Alibaba Cloud MaxCompute, formerly known as ODPS, is Alibaba's unified big data platform. The transformation from ODPS to MaxCompute mirrors the evolution of Alibaba's big data platform as a whole. So next, I will share how Alibaba's big data services have developed over the past seven to eight years, and where the technology is heading.

First of all, let's look at how MaxCompute is positioned. Here is a picture of an aircraft carrier fleet. If we compare Alibaba's whole data system to such a fleet, MaxCompute is the aircraft carrier at the center: it holds 99% of Alibaba's data storage and provides 95% of its computing capacity.

Every day, more than 14,000 Alibaba developers work on the platform, which means one in four employees of the company uses it. Over 3 million jobs run on the platform each day, covering nearly all internal data systems, including Zhima Credit of Alipay, the daily bills of Taobao merchants, and the processing of huge data traffic during the Double 11 shopping events. The MaxCompute platform consists of thousands of servers distributed across clusters in different regions, which gives it strong multi-cluster disaster recovery. On the public cloud, both the number of MaxCompute users and its computing capacity have grown by 250% each year. In addition, MaxCompute is connected to the Apsara Stack platform and provides dozens of deployment schemes, including government projects in areas such as security and water conservancy, as well as City Brain projects. In fact, nearly all City Brain projects use this system as their underlying layer for data storage and big data computing. That is how MaxCompute is positioned.

The following figure shows the technology layout of the MaxCompute platform. The bottom layer is the computing platform, and beneath it sits DataHub, the data bus for inbound and outbound data, which is currently also offered as a public cloud service. Data flows into MaxCompute through DataHub, and through interactions with the other platforms, including the AI platform, it forms the computing system of the complete data platform. Above the bottom layer are development kits such as DataWorks and MaxCompute Studio, which cover basic data management and identification, data development, and job development and management. On top of this development and platform foundation, services such as speech-to-text, optical character recognition, machine translation, intelligent brain, and similar products are provided to the upper layers. The application layer includes traditional e-commerce products such as Taobao and Tmall, newer businesses such as AutoNavi and Cainiao, and Youku Tudou, all of which rely on these technical services. That is the internal and external layout of the MaxCompute platform.

In the early days of Alibaba, the BUs operated separately from each other, just as at any startup. A little over a year later, the data volume had grown to roughly ten times its earlier size, and Alibaba hit the Greenplum ceiling: a Greenplum cluster could support at most 100 machines, far too few for a business growing as fast as Alibaba. In September 2009, Alibaba Cloud was founded with a vision to build a complete computing platform covering the underlying distributed storage system (Pangu), the distributed scheduling system (Fuxi), and the big data computing service (ODPS), which is now known as MaxCompute.

About a year later, the first platform went into operation, with ODPS as its core computing engine. By 2012, the platform was running stably, and we set our sights on unified data storage, unified data standards, and unified security management. These objectives were achieved in 2013, when we went a step further and began commercialization. At that time, the "5K" project was launched to support 5,000 or more machines per cluster and to provide multi-cluster capability. This scalability was a basic underpinning of a data platform within Alibaba. While this product was being developed, the BUs were still operating separately, and many of them used the open-source Hadoop system, so two systems coexisted: Yunti 1, based on the open-source system, and Yunti 2, based on Alibaba's independently developed system. At that time, Alibaba's Hadoop clusters were the largest in Asia, with 5,000 machines and the ability to process PBs of data. From 2014 to 2015, the two technical systems were used in parallel, so Alibaba decided to unify them into one, which is why the "Moon Landing" program was launched. The program had to meet several requirements: multi-cluster capability, good security, the ability to process massive data, and finance-level stability. Based on these requirements, Alibaba chose the Yunti 2 system, known today as MaxCompute. From 2016 to 2017, MaxCompute began to support all businesses within Alibaba and was also opened up to external customers. Able to support over 10,000 machines across multiple clusters, MaxCompute now has clusters deployed globally, including in East US, West US, Singapore, Japan, Australia, Hong Kong, Germany, and Russia.

Next, I will share more details about the "Moon Landing" program and why we chose this route. As mentioned earlier, before the program there were dozens of computing platforms across the various BUs, a situation that is hard for any fast-growing business to avoid. Two technical systems were widely used: an open-source system and an independently developed one. The two had much in common in technology and architecture, but they differed in technical evolution, data storage format, scheduling methods, and external computing interfaces. We encountered the following problems:

• Poor scalability. Two or three years ago, Hadoop components such as NameNode, JobTracker, and HiveServer were still single points of failure and had stability problems.

• Low performance. The engine could not support 5,000 or more machines per cluster. In other words, linear scalability could be achieved for clusters of fewer than 5,000 machines, but was hard to achieve beyond that size.

• Inadequate security. This was a particular concern because Alibaba Group uses one standard multi-tenant system for clusters serving over 10,000 users. Here is how multi-tenancy works within Alibaba: the group has many BUs, each BU has many departments, and each department has several teams and employees. Different permissions are granted to each BU and each department so that data is shared securely. Back then, however, a file-based authorization system was used, which was not flexible enough to meet this requirement.

• Poor stability. Multi-cluster and cross-cluster disaster recovery was unavailable.
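The talk does not describe how MaxCompute's authorization is implemented. As a minimal sketch, assuming that grants can be attached at any level of the BU, department, team, or user hierarchy and are inherited downward, a policy-based check might look like the following; the class names, scope strings, and table names are all hypothetical. Unlike a per-file access list, a single grant to a department covers every current and future member of that department.

```python
from dataclasses import dataclass, field

# Hypothetical model, not MaxCompute's API: a grant attached to a BU,
# department, team, or user applies to everyone at or below that level.


@dataclass(frozen=True)
class Principal:
    user: str
    bu: str
    department: str
    team: str

    def scopes(self) -> list[str]:
        """Every scope this user belongs to, from broadest to narrowest."""
        return [
            f"bu:{self.bu}",
            f"dept:{self.bu}/{self.department}",
            f"team:{self.bu}/{self.department}/{self.team}",
            f"user:{self.user}",
        ]


@dataclass
class TableACL:
    # table name -> set of scopes allowed to read it
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, table: str, scope: str) -> None:
        self.grants.setdefault(table, set()).add(scope)

    def can_read(self, who: Principal, table: str) -> bool:
        allowed = self.grants.get(table, set())
        return any(scope in allowed for scope in who.scopes())


acl = TableACL()
acl.grant("trade_orders", "dept:taobao/search")
alice = Principal("alice", "taobao", "search", "ranking")
bob = Principal("bob", "tmall", "ads", "bidding")
print(acl.can_read(alice, "trade_orders"))  # True: inherited from her department
print(acl.can_read(bob, "trade_orders"))    # False: different BU, no grant
```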

Moreover, although the code was open source, changes took a long time to be contributed back to the community, so many clusters ended up running their own, effectively "independently developed", versions. This caused further version inconsistencies and left clusters unable to communicate with each other. As a result, data from Taobao could not be used by Tmall, and data from Microfinance could not be used by other BUs. Requesting permissions across BUs was very difficult, and data could not flow smoothly across the whole system. But as we all know, Alibaba does not rely on physical assets; it has no goods and no warehouses. Instead, data assets are at the very core of its business. If data cannot be shared and transmitted efficiently across the platform, the development of the company is seriously threatened.

This is why Alibaba went through such a long and expensive "Moon Landing" process. Initiated by Alibaba Group, the program comprised 24 officially named projects. Alibaba Finance was the first to take on this journey, followed by Taobao. The 24 projects ran for a year and a half and linked up all of the group's data.

For the "Moon Landing" program to be implemented successfully, the MaxCompute platform did the following:

• Ensured that the platform performed at least as well as the Hadoop clusters, so that it could support everything those clusters offered.

• Ensured compatibility with the programming model and other aspects at the programming interface level.

• Provided a complete set of cloud migration tools and data migration/comparison tools to make it easy to migrate from the Hadoop system to MaxCompute.

• Because the platform had to be upgraded while the business kept running, we worked out seamless upgrade plans with the business units, much like "changing an airplane engine in flight".

The unification brought three benefits:

• A unified big data platform was created for the entire group. The "Moon Landing" program brought together all of Alibaba's machine and data resources. Because of the "1+1>2" effect of connected data, cluster utilization, employee efficiency, and data transfer all improved significantly once all the data was connected. Today, Alibaba Group's internal computing business runs on MaxCompute clusters, total storage is measured in exabytes (EB), and millions of ODPS tasks (ODPS_TASK) run every day.

• The new platform is safe, manageable, and open. Unlike that of many other companies, most of the data stored within Alibaba is transaction or financial data, so the requirements for data security are very high. For example, different users may have different permissions on the fields of the same table, and MaxCompute provides security at this granularity. During the "Moon Landing" process, the data was unified, classified, tagged, and masked, and the ODPS authorization process and virtual-domain access for the cloud query version were completed.

• The new platform achieved high performance and comprehensive data unification. While integrating the data, Alibaba also unified its management tools, such as the scheduling center, synchronization tools, and data maps, achieving comprehensive unification of its data system. In addition, with business, organizational, and personnel integration on the new platform, teams could concentrate performance optimization and feature tuning on a single platform. As a result, hundreds of PBs of storage were saved in 2014, the number of jobs and the computing workload were both reduced by 30%-50%, and some long-standing historical issues were finally resolved.
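Field-level permissions combined with masking, as described in the second benefit above, can be illustrated with a small sketch. It assumes a hypothetical per-user policy in which each column is readable in clear, readable only in masked form, or denied; the policy format, masking rule, and column names are illustrative and not MaxCompute's actual mechanism.

```python
# Hypothetical field-level policy: user -> {column -> "clear" | "masked"}.
# Columns without a rule are treated as denied and dropped from the result.
Policy = dict[str, dict[str, str]]


def mask(value: str) -> str:
    """Keep the first and last characters and hide the rest."""
    if len(value) <= 2:
        return "*" * len(value)
    return value[0] + "*" * (len(value) - 2) + value[-1]


def read_row(row: dict[str, str], user: str, policy: Policy) -> dict[str, str]:
    rules = policy.get(user, {})
    visible = {}
    for column, value in row.items():
        mode = rules.get(column)
        if mode == "clear":
            visible[column] = value
        elif mode == "masked":
            visible[column] = mask(value)
    return visible


row = {"order_id": "A1001", "buyer_phone": "13800001234", "amount": "99.00"}
policy = {
    "analyst": {"order_id": "clear", "amount": "clear", "buyer_phone": "masked"},
    "intern": {"order_id": "clear"},
}
print(read_row(row, "analyst", policy))
# {'order_id': 'A1001', 'buyer_phone': '1*********4', 'amount': '99.00'}
print(read_row(row, "intern", policy))
# {'order_id': 'A1001'}
```

The same table row yields different views per user, which is the granularity the talk describes.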

The following describes how Alibaba has continued to build out both the underlying technology and the business layer now that its central big data platform is in place.

At the 2016 Computing Conference (Hangzhou Summit), Alibaba released MaxCompute 2.0 with a brand-new SQL engine that added the ability to process unstructured data. In 2017, Alibaba continued to optimize and innovate on MaxCompute. As shown in the following figure, MaxCompute 2.0 introduces many technical innovations. At the first layer of the architecture, DataWorks and MaxCompute Studio support multiple computing modes, such as batch processing, interactive computing, in-memory computing, and iterative computing. As for APIs, NewSQL, a new query language, will be available later this year. It is a brand-new big data language defined by Alibaba that combines traditional SQL features with both imperative and declarative programming.

On the engine side, the optimizer supports both cost-based and history-based optimization, and MaxCompute 2.0 implements fully asynchronous I/O at run time. For metadata management, resource scheduling, and task scheduling, two major changes were made. The first is bubble-based scheduling. Connecting all stages of a job for a fully pipelined Bubble Shuffle would require both upstream and downstream stages to be pulled up at the same time, which consumes a lot of resources; bubble scheduling balances resource consumption against efficiency by grouping stages into reasonably sized failover groups. The second is flexible interaction with Hadoop and Spark clusters, which improves the ecosystem and openness. At the underlying layer of the architecture, the upgraded MaxCompute supports Index and AliORC files in addition to the original file formats. AliORC is compatible with Apache ORC but performs better. The upgraded MaxCompute also supports tiered storage across memory, cache, SSD, hybrid SSD/HDD, and erasure-coded cold storage. In fact, a high-density storage prototype is already available internally and will be brought to the public cloud in the near future.

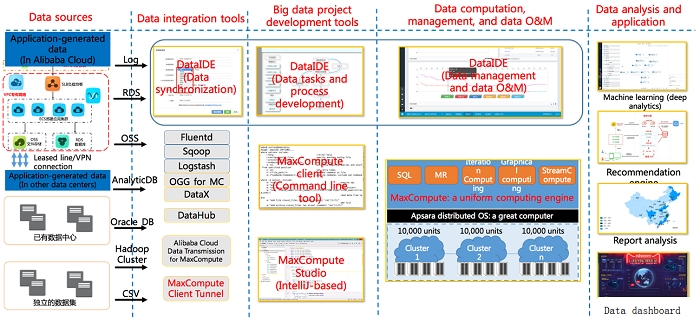
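The talk does not say how bubbles are formed, so the following is only a minimal sketch under our own assumptions: a job is a DAG of stages, each bubble holds at most `max_size` stages, a stage joins an upstream stage's bubble when that bubble still has room, edges inside a bubble are pipelined over the network while edges between bubbles go through disk, and a failure reruns only the failed stage's bubble. The greedy rule and all function names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical bubble planner, not MaxCompute's scheduler.
# deps maps each stage to the set of upstream stages it reads from.


def plan_bubbles(deps: dict[str, set[str]], max_size: int) -> dict[str, int]:
    """Greedily group stages into bubbles of at most max_size stages."""
    bubble_of: dict[str, int] = {}
    sizes: list[int] = []
    for stage in TopologicalSorter(deps).static_order():
        target = None
        # Join the bubble of an upstream stage if that bubble still has room.
        for up in sorted(deps.get(stage, set())):
            if sizes[bubble_of[up]] < max_size:
                target = bubble_of[up]
                break
        if target is None:  # otherwise open a new bubble
            target = len(sizes)
            sizes.append(0)
        bubble_of[stage] = target
        sizes[target] += 1
    return bubble_of


def edge_modes(deps: dict[str, set[str]], bubble_of: dict[str, int]) -> dict[tuple[str, str], str]:
    """Edges inside a bubble are pipelined over the network; edges across bubbles go through disk."""
    return {
        (up, stage): "network" if bubble_of[up] == bubble_of[stage] else "disk"
        for stage, ups in deps.items()
        for up in ups
    }


def rerun_on_failure(bubble_of: dict[str, int], failed: str) -> set[str]:
    """In this model, a failure reruns only the stages in the failed stage's bubble."""
    return {s for s, b in bubble_of.items() if b == bubble_of[failed]}


deps = {"map": set(), "join": {"map"}, "agg": {"join"}, "sink": {"agg"}}
bubbles = plan_bubbles(deps, max_size=2)
print(bubbles)  # {'map': 0, 'join': 0, 'agg': 1, 'sink': 1}
print(edge_modes(deps, bubbles))
print(rerun_on_failure(bubbles, "sink"))
```

A larger `max_size` means more pipelining but a bigger unit to rerun on failure, which is the trade-off between efficiency and resource consumption that the paragraph above describes.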

The following figure illustrates typical MaxCompute scenarios, which are also common for most employees of Alibaba Group and Alibaba Cloud. Building a big data system generally consists of three stages: determining the data source, development, and production. The data source can be an application, a service of an application, or the logs of an application. Application information can be uploaded as logs or messages. In addition, because most information is stored in databases, the database binlog can be collected and synchronized to the data platform. Besides these, the data source can also be existing data, which you can upload to the cluster by reading it actively, uploading it manually, or using the synchronization center. Once the data source is determined, you enter the development stage, which consists of three parts. The first is data discovery: determining which data is available and writing jobs against it in DataWorks. The development stage provides general computing, machine learning, graph computing, and stream computing.
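Synchronizing a database through its binlog generally means replaying row-level change events, in log order, on top of a snapshot of the source table. The sketch below shows that generic change-data-capture replay; it is not the synchronization center's implementation, and the event shape (`op`, `key`, `row`) is a hypothetical simplification of real binlog records.

```python
from dataclasses import dataclass
from typing import Optional

# Generic change-data-capture replay, not a specific MaxCompute or DataWorks tool.


@dataclass
class BinlogEvent:
    op: str               # "INSERT", "UPDATE", or "DELETE"
    key: int              # primary key of the affected row
    row: Optional[dict]   # new row image; None for DELETE


def apply_binlog(snapshot: dict[int, dict], events: list[BinlogEvent]) -> dict[int, dict]:
    """Replay change events, in log order, on top of a snapshot of the source table."""
    table = dict(snapshot)  # leave the caller's snapshot untouched
    for ev in events:
        if ev.op in ("INSERT", "UPDATE"):
            table[ev.key] = ev.row
        elif ev.op == "DELETE":
            table.pop(ev.key, None)
        else:
            raise ValueError(f"unknown binlog op: {ev.op!r}")
    return table


snapshot = {1: {"status": "paid"}}
events = [
    BinlogEvent("INSERT", 2, {"status": "new"}),
    BinlogEvent("UPDATE", 1, {"status": "shipped"}),
    BinlogEvent("DELETE", 2, None),
]
print(apply_binlog(snapshot, events))  # {1: {'status': 'shipped'}}
```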

After development is completed, production workloads fall into three types. The first is Workflow. For example, a monthly billing report is a typical Workflow job. Such jobs run on a predictable schedule (hourly, daily, or monthly) and carry a heavy workload. The second is Interactive Analysis, that is, interactive querying. For example, you may want to view certain statistics on one day and make business decisions based on them. These statistics may be written into a report the next day and repeatedly adjusted by the developer through resubmitted jobs, which is essentially an interaction between the data and the developer. The last type is real-time, or stream-oriented, data processing. A typical scenario is the big-screen live dashboard for "Double 11", which is a classic case of rolling stream computing.

The three types of scenarios have different requirements. At the data source layer, flow control and isolation are key when large amounts of data are uploaded. During production, uploaded data also needs integrity checks, including additional rule checks. Once uploading becomes routine, gaps may appear in the daily data because of system, application, or data source issues, in which case the system must be able to backfill the missing data. The system must also support the development stage well: this stage normally involves small amounts of data and frequently updated code and scripts, and much of it is spent in trial and error. Here the system needs quasi-real-time development, high development efficiency, and efficient debugging, which are essentially requirements on developer productivity. In the production stage, jobs are typically stable but consume large amounts of resources and data, so the stability requirements are demanding and the system must provide system-level optimization and computing capabilities. That completes our walk-through of a typical big data scenario at Alibaba, from development to launch.
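The integrity checks and backfill requirement above can be made concrete with a small sketch. It assumes daily partitions named `ds=YYYYMMDD` and data-quality rules expressed as predicates on a row; both conventions and both function names are hypothetical, chosen only for illustration.

```python
from datetime import date, timedelta
from typing import Callable

# Hypothetical conventions: daily partitions named ds=YYYYMMDD, rules as predicates on a row.


def missing_partitions(start: date, end: date, present: set[str]) -> list[str]:
    """Return the daily partitions in [start, end] that have not arrived and need a backfill."""
    missing = []
    day = start
    while day <= end:
        name = f"ds={day:%Y%m%d}"
        if name not in present:
            missing.append(name)
        day += timedelta(days=1)
    return missing


def failed_rows(rows: list[dict], rules: list[Callable[[dict], bool]]) -> list[int]:
    """Return the indexes of rows that violate at least one rule."""
    return [i for i, row in enumerate(rows) if not all(rule(row) for rule in rules)]


present = {"ds=20171109", "ds=20171111"}
print(missing_partitions(date(2017, 11, 9), date(2017, 11, 12), present))
# ['ds=20171110', 'ds=20171112']

rules = [
    lambda r: bool(r.get("order_id")),   # key must be present and non-empty
    lambda r: r.get("amount", -1) >= 0,  # amounts cannot be negative
]
rows = [
    {"order_id": "a", "amount": 5},
    {"order_id": "", "amount": 3},
    {"order_id": "c", "amount": -1},
]
print(failed_rows(rows, rules))  # [1, 2]
```

The first function finds the gaps a backfill job would need to fill; the second is the kind of rule check that can run on each upload before it is accepted.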

The following figure plots workloads on two axes: data volume and latency. Data volume ranges from 100 GB to 10 TB and up to the PB level; on Double 11, the MaxCompute platform processed hundreds of PBs of data. Latency is low in most cases. The orange diagonal line indicates that cost rises as data volume and real-time requirements increase. Big data computing aims to shift this trade-off, so that more data can be processed in less time and at lower cost.

Pipeline jobs fall into three types, namely data cleansing, data warehouse construction, and report jobs, and they normally run hourly or daily. Based on Alibaba's statistics, 20% of these jobs consume 80% of computing resources. Because such jobs run and query on a regular schedule, they demand high execution efficiency. By comparison, real-time monitoring jobs, such as monitoring alarms and big-screen broadcasting, are typical StreamCompute jobs. Interactive jobs fall into two types: analysis jobs and BI jobs. For BI jobs, the queries and intermediate systems are invisible to most users, who see only the BI environment, such as Alibaba Cloud's QuickBI system for external users. This system is operated through configuration and drag-and-drop, and its users are typically non-technical. Because it works through a UI, it demands a high degree of interactivity and low latency, typically completing jobs in seconds or tens of seconds. For this reason, such jobs normally involve small amounts of data, and the data must be prepared in advance. Interactive analysis jobs, by contrast, involve small-to-medium data volumes and have moderate latency requirements. Different types of jobs therefore call for different technical optimizations. The Data Workflow solution suits pipeline jobs, which require high execution performance and efficiency. For development and BI jobs, development efficiency and serialization are critical, because such jobs are numerous but account for a relatively small share of overall resources. This year, MaxCompute development focuses on serialization and development efficiency, while overall system efficiency still needs further improvement.
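A statistic such as "20% of jobs consume 80% of computing resources" is easy to check against your own job history. The sketch below computes the share of total resources used by the most expensive fraction of jobs; the input numbers are made up for illustration and are not Alibaba's data.

```python
def top_share(costs: list[float], top_fraction: float = 0.2) -> float:
    """Share of total resources consumed by the most expensive top_fraction of jobs."""
    if not costs or sum(costs) == 0:
        return 0.0
    ranked = sorted(costs, reverse=True)
    k = max(1, round(len(ranked) * top_fraction))  # number of jobs in the top slice
    return sum(ranked[:k]) / sum(ranked)


# Made-up CPU-hours for ten jobs; replace with your own job history.
cpu_hours = [3, 50, 1, 4, 30, 2, 1, 5, 3, 1]
print(top_share(cpu_hours))  # 0.8
```

When the result is this skewed, optimizing the few heavy pipeline jobs pays off far more than tuning the many light ones, which is why pipeline and interactive jobs get different treatment.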

Of the three workload types, the rest of this section focuses on big data computing for BI jobs. Such jobs have demanding latency requirements, which call for optimizations such as OnlineJob, caching of active tables, and index support. They also require improved query plans, runtime optimization, better ecosystem connectivity and storage formats, and indexes that narrow computation down to a small subset of the data.
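The talk mentions caching active tables without naming an eviction policy. As one possible sketch, a least-recently-used (LRU) cache keeps the most recently queried tables in memory; LRU is our assumption, and `ActiveTableCache` and `load_from_storage` are hypothetical names.

```python
from collections import OrderedDict
from typing import Callable

# Hypothetical cache of hot tables. LRU eviction is our assumption; the talk does not name a policy.


class ActiveTableCache:
    def __init__(self, capacity: int, load: Callable[[str], list]):
        self.capacity = capacity
        self.load = load             # reads a table from storage on a cache miss
        self.tables = OrderedDict()  # table name -> rows, least recently used first
        self.misses = 0

    def get(self, name: str) -> list:
        if name in self.tables:
            self.tables.move_to_end(name)    # mark as most recently used
            return self.tables[name]
        self.misses += 1
        rows = self.load(name)
        self.tables[name] = rows
        if len(self.tables) > self.capacity:
            self.tables.popitem(last=False)  # evict the least recently used table
        return rows


def load_from_storage(name: str) -> list:
    return [f"{name}-row"]  # stand-in for a real storage read


cache = ActiveTableCache(capacity=2, load=load_from_storage)
for name in ["orders", "users", "orders", "items"]:
    cache.get(name)
print(list(cache.tables), cache.misses)  # ['orders', 'items'] 3
```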

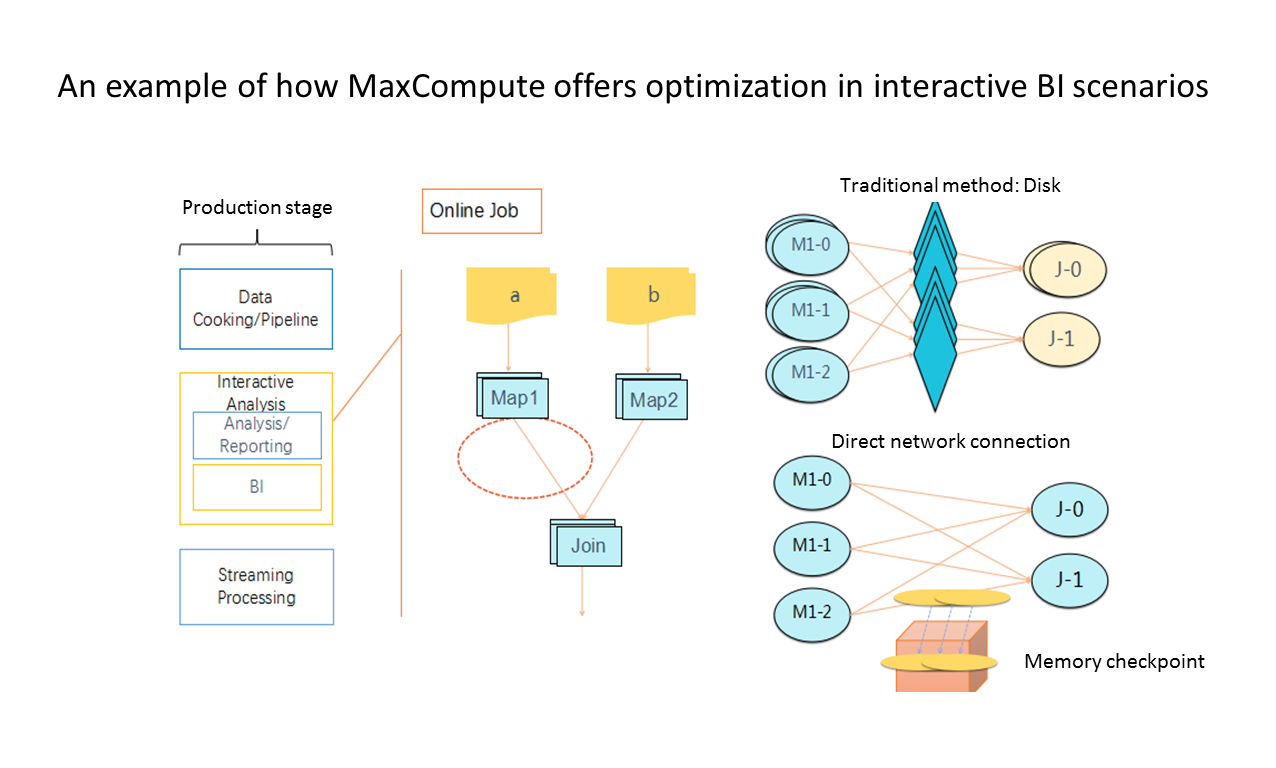

The following figure shows the basic design philosophy of OnlineJob, which targets mid-scale, low-latency interactive scenarios and provides reliable service. Currently, 60% of Alibaba's internal jobs run in this mode. It relies on the following techniques:

• Resident processes ("stand by as a service"): a process is not destroyed after a job completes but stays waiting for the next job.

• Process multiplexing across jobs.

• Direct network connections, avoiding data copies on disk.

• Event-driven scheduling.

• Automatic switchover based on statistics and historical information, transparent to users.
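Two of these ideas, resident processes reused across jobs and automatic mode selection from historical statistics, can be modeled in a few lines. This is a simplified sketch, not OnlineJob's implementation: integer ids stand in for real processes, and the 10 GB threshold and the mode names are hypothetical.

```python
# Hypothetical model of two OnlineJob ideas: warm workers reused across jobs,
# and automatic mode selection from history. Names and the threshold are illustrative.


class ResidentWorkerPool:
    def __init__(self) -> None:
        self.idle: list[int] = []  # ids of started workers waiting for the next job
        self.cold_starts = 0       # how many workers had to be launched from scratch

    def acquire(self, n: int) -> list[int]:
        workers = []
        while len(workers) < n and self.idle:
            workers.append(self.idle.pop())  # reuse a warm worker
        while len(workers) < n:
            self.cold_starts += 1            # launch only when no warm worker is free
            workers.append(self.cold_starts)
        return workers

    def release(self, workers: list[int]) -> None:
        self.idle.extend(workers)            # keep them alive instead of destroying them


def choose_mode(history_mb: list[float], threshold_mb: float = 10_240) -> str:
    """Run jobs with small historical inputs in online mode, larger ones as batch jobs."""
    if not history_mb:
        return "batch"  # no history yet: stay conservative
    average = sum(history_mb) / len(history_mb)
    return "online" if average <= threshold_mb else "batch"


pool = ResidentWorkerPool()
first = pool.acquire(3)
pool.release(first)
second = pool.acquire(4)
print(pool.cold_starts)  # 4: three workers were reused, only one was new
print(choose_mode([200, 350]), choose_mode([50_000]))  # online batch
```

Because the decision is made from history, users submit jobs the same way in either mode, which is what makes the switchover transparent.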

The following figure shows another optimization for interactive BI scenarios. In traditional MapReduce, the output of multiple Mappers is shuffled by writing it to disk, where a subsequent Join operation then reads it. This process is fragmented and requires exchanging data through disk. MaxCompute instead connects the stages directly over the network, so the second stage can start without waiting for the first to complete. The drawback is that this forms a large group that must fail over as a whole, so checkpoints also need to be taken in memory in a timely manner. Alternatively, checkpoints can be moved to an SSD or another machine to improve efficiency, reduce latency, and keep the group available during failover.

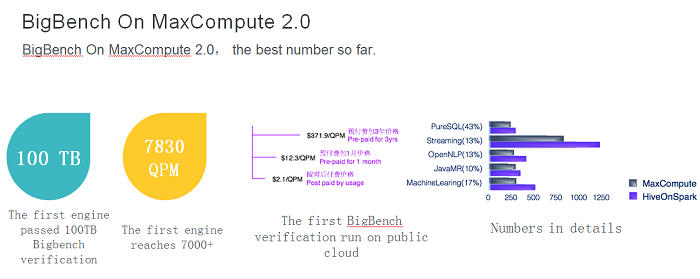
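The pipelined exchange and in-memory checkpoint described above can be modeled with Python generators standing in for direct network connections. In this toy sketch, which is our own simplification and not MaxCompute's runtime, the producer streams records to the join without writing to disk and keeps an in-memory copy of what it has sent; when the consumer fails, it is restarted from that copy while the producer keeps running instead of being rerun. All names are hypothetical.

```python
from itertools import chain
from typing import Iterable, Iterator, Optional

# Toy model: Python generators stand in for direct network connections between stages.


def produce(records: Iterable[tuple[str, int]], log: list[str],
            buffer: list[tuple[str, int]]) -> Iterator[tuple[str, int]]:
    for rec in records:
        log.append(f"produce {rec[0]}")
        buffer.append(rec)  # in-memory checkpoint of the upstream output
        yield rec           # handed straight to the consumer; nothing is written to disk


def join_stream(stream: Iterable[tuple[str, int]], build_side: dict[str, str],
                log: list[str], fail_at: Optional[int] = None) -> list[tuple[str, int, str]]:
    """Hash-join streamed records against a small build side as they arrive."""
    out = []
    for i, (key, value) in enumerate(stream):
        if i == fail_at:
            raise RuntimeError(f"simulated failure at record {i}")
        log.append(f"consume {key}")
        if key in build_side:
            out.append((key, value, build_side[key]))
    return out


records = [("a", 1), ("b", 2), ("c", 3)]
build_side = {"a": "x", "c": "z"}
log, buffer = [], []
upstream = produce(records, log, buffer)
try:
    result = join_stream(upstream, build_side, log, fail_at=1)
except RuntimeError:
    # Restart the consumer from the in-memory checkpoint, then keep reading the live producer.
    result = join_stream(chain(list(buffer), upstream), build_side, log)
print(result)   # [('a', 1, 'x'), ('c', 3, 'z')]
print(log[:3])  # ['produce a', 'consume a', 'produce b']
```

The interleaved log shows the consumer starting before the producer finishes, and the producer emits each record only once even though the consumer failed.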

This year, MaxCompute and Intel jointly ran BigBench, the new big data benchmark standard of 2017. Beyond the standard sorting tests, it uses over 30 queries, including basic SQL queries, MapReduce queries, and machine learning jobs, and it weighs both scale and cost-effectiveness. Backed by Alibaba's massive data volume, MaxCompute became the first engine in the world to scale the benchmark from 10 TB to 100 TB, scoring 7,000 points. MaxCompute is also the first platform to provide these query services on the public cloud, at a relatively low cost.

Why choose MaxCompute as the big data platform

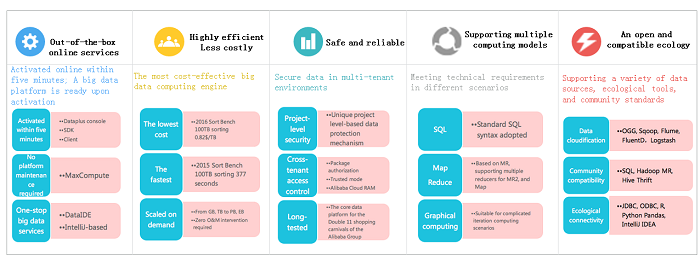

Why choose MaxCompute as your big data computing platform? MaxCompute provides the following business benefits:

• MaxCompute is an out-of-the-box system that frees you from concerns about system scale.

• MaxCompute provides peak performance at a low cost.

• Guarded by Alibaba security systems, MaxCompute ensures data security in a multi-tenant environment.

• MaxCompute supports multiple distributed computing models.

• MaxCompute provides cloud migration tools, including community compatibility and ecosystem connectivity tools.

If your data is stored on Alibaba Cloud, multiple options are available to migrate it to MaxCompute. If your data is stored on-premises, you can migrate it from your existing data sets to Alibaba Cloud over a leased line or a VPN connection. After that, you can develop your data using Data Integration and big data project tools. For common data integration, you can use DataWorks and special plug-ins. Once your data is computed on MaxCompute, you can also use the machine learning platform and other Alibaba Cloud products, such as the Recommendation Engine and report analysis tools.


More Posts by Alibaba Clouder