By Li Yuqian from Alibaba Cloud ECS

Enterprises that are new to the cloud often ask: "Is it necessary to do our own resource scheduling on the cloud? If so, how should we do it? Given our current applications, which cloud resource scheduling and management solution suits us best?" Li Yuqian, a Technical Expert at Alibaba Cloud Elastic Computing, shares some methodologies for reference based on his years of experience.

As enterprises move toward digitalization and full cloud migration, more and more of them will grow on the cloud. Cloud cluster management and scheduling technology underpins all on-cloud business applications. Engineers today should understand cluster management and scheduling the way they once understood the operating system. Engineers in the cloud era need a deeper understanding of the cloud to make the most of cloud computing.

For enterprises that have already migrated to the cloud, what methods are available for managing their cloud computing resources in depth? Before getting into the details, I will introduce several related concepts to help you follow the rest of this article:

ICR stands for Immediate Capacity Reservation. You can buy it at any time, and it takes effect as soon as the reservation succeeds, which means the capacity is locked for your exclusive use. When you specify attributes such as the zone, instance type, and operating system type, the system generates a private pool that reserves resources matching those attributes. If you select the private pool capacity when creating pay-as-you-go instances, resource availability is guaranteed. The reservation is billed at the pay-as-you-go instance rate regardless of whether it is used to create instances, and billing stops only when the capacity reservation is released.
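To make the matching and billing rules above concrete, here is a minimal sketch that models a private pool as a fixed set of attributes plus a reserved count. The classes, field names, zone and type strings, and the hourly rate are all hypothetical illustrations, not the ECS API, and the billing function is a simplified reading of the rule described above (it ignores disks, bandwidth, and other charges).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attributes:
    """Attributes pinned by a capacity reservation (hypothetical model)."""
    zone: str
    instance_type: str
    os_type: str


@dataclass
class PrivatePool:
    """A private pool generated for one reservation; field names are illustrative."""
    attrs: Attributes
    reserved: int   # number of instances the reservation locks for you
    used: int = 0   # instances currently launched from this pool

    def try_launch(self, request: Attributes) -> bool:
        """Consume one reserved slot if the request matches and capacity remains."""
        if request == self.attrs and self.used < self.reserved:
            self.used += 1
            return True
        return False


def hourly_charge(pool: PrivatePool, payg_hourly_rate: float) -> float:
    """Simplified billing: every reserved slot is charged at the pay-as-you-go
    rate whether or not it is used, until the reservation is released."""
    return pool.reserved * payg_hourly_rate


pool = PrivatePool(Attributes("zone-a", "type-x", "linux"), reserved=2)
print(pool.try_launch(Attributes("zone-a", "type-x", "linux")))  # True
print(pool.try_launch(Attributes("zone-b", "type-x", "linux")))  # False: zone mismatch
print(hourly_charge(pool, 0.5))  # 1.0, even though only one slot is in use
```

The point of the model is that resource certainty comes from the exact attribute match, while cost depends only on how much you reserve, not on how much you use.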

Alibaba Cloud Resource Orchestration Service (ROS) provides a simple, easy-to-use way to manage and maintain cloud computing resources automatically. Users describe the configurations and dependencies of multiple cloud resources, such as ECS, RDS, and SLB, in JSON or YAML templates. ROS then automatically deploys and configures all of these resources across regions and accounts, implementing Infrastructure as Code.
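Declaring dependencies lets an orchestrator work out a safe creation order on its own. The sketch below illustrates that idea with a topological sort from Python's standard `graphlib` module. The resource names are hypothetical, the dictionary is not ROS template syntax, and this is not a description of how ROS resolves dependencies internally.

```python
from graphlib import TopologicalSorter

# Hypothetical stack: each resource maps to the resources it depends on,
# similar in spirit to the dependencies declared in an orchestration template.
STACK = {
    "vpc": set(),
    "vswitch": {"vpc"},
    "security_group": {"vpc"},
    "ecs": {"vswitch", "security_group"},
    "rds": {"vswitch"},
    "slb": {"vswitch", "ecs"},
}


def creation_order(deps: dict[str, set[str]]) -> list[str]:
    """Order resources so each is created after everything it depends on.
    Raises graphlib.CycleError if the dependencies contain a cycle."""
    return list(TopologicalSorter(deps).static_order())


def deletion_order(deps: dict[str, set[str]]) -> list[str]:
    """Release resources in reverse, so nothing is deleted while still in use."""
    return list(reversed(creation_order(deps)))


print("create:", creation_order(STACK))
print("delete:", deletion_order(STACK))
```

Reversing the creation order for teardown is a simple way to guarantee that a resource such as a VPC is never released while something still depends on it.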

Alibaba Cloud Operation Orchestration Service (OOS) is a comprehensive cloud-based automated O&M platform for managing and executing O&M tasks. Applicable scenarios include event-driven O&M, batch operations, scheduled O&M tasks, and cross-region operations. OOS provides features such as approval and notification for important scenarios. It also supports cross-product operations and can manage a wide range of Alibaba Cloud products, such as ECS, RDS, SLB, and VPC.

With Auto Scaling, users can set scaling rules according to their business needs and policies. When business load increases, Auto Scaling automatically adds ECS instances to ensure sufficient computing capacity. When load decreases, it removes ECS instances to save costs. It suits applications with either fluctuating or stable business loads.
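The following sketch shows the kind of decision a scaling rule encodes. It assumes a simple average-CPU threshold rule with a step size and group bounds. `ScalingRule`, its fields, and its default values are hypothetical and are not Auto Scaling's configuration API. Real rules are configured in the service itself.

```python
from dataclasses import dataclass


@dataclass
class ScalingRule:
    """Hypothetical threshold rule; names and defaults are illustrative only."""
    scale_out_cpu: float = 70.0  # add instances when average CPU % exceeds this
    scale_in_cpu: float = 30.0   # remove instances when average CPU % drops below this
    step: int = 2                # instances added or removed per adjustment
    min_size: int = 2            # never shrink the group below this
    max_size: int = 20           # never grow the group beyond this


def desired_capacity(current: int, avg_cpu: float, rule: ScalingRule) -> int:
    """Return the instance count the group should move to."""
    if avg_cpu > rule.scale_out_cpu:
        target = current + rule.step      # load is high: scale out
    elif avg_cpu < rule.scale_in_cpu:
        target = current - rule.step      # load is low: scale in to save cost
    else:
        target = current                  # within the band: no change
    return max(rule.min_size, min(rule.max_size, target))


rule = ScalingRule()
print(desired_capacity(4, 85.0, rule))   # 6
print(desired_capacity(4, 20.0, rule))   # 2
print(desired_capacity(19, 90.0, rule))  # 20, capped by max_size
```

The gap between the scale-out and scale-in thresholds keeps the group from flapping when load hovers around a single value.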

Alibaba Cloud Dedicated Host (DDH) is a solution for enterprises that offers dedicated physical resources, more flexible deployment, richer configurations, and better cost-performance. Each dedicated host serves a single tenant. As the sole tenant of a host, you do not share any of its physical resources with other tenants. You can create ECS instances on the host flexibly. As with other ECS instances, these instances can mount cloud disks and communicate over a VPC, which makes DDH flexible and easy to use.

It is important to decide whether you need to perform resource scheduling at all after moving to the cloud. After migration, an enterprise's core R&D investment still goes to its own business. If the enterprise also invests time and effort in developing cloud resource scheduling, its R&D costs rise, so it must evaluate whether the benefits justify the investment. Generally, this calls for a comprehensive assessment of the following aspects.

The first aspect is resource scale, which depends on the number of ECS instances running on the cloud. If the number of resources is small, there is no need to introduce in-house scheduling. The industry has no uniform threshold for the number of ECS instances. Cloud platforms differ in resource service capabilities, and enterprises differ in internal SRE skills, SRE tooling, and IT environment maturity, so it is hard to give an accurate reference value. As an illustration, assume the threshold is 1,000. If fewer than 1,000 ECS instances run on the cloud, Alibaba Cloud ROS, OOS, or Auto Scaling can manage them. When more than 1,000 ECS instances are deployed on the cloud, consider a dedicated resource orchestration service for on-cloud resources.

The second aspect is pressure on resource costs. This refers to the situation in which a sizable number of compute instances already run on the cloud, while the business is still growing rapidly and its demand for cloud resources keeps increasing. For example, suppose the number of compute instances on the cloud has reached 5,000 and the number of business applications exceeds 100. At this point, managing these 5,000-plus instances yourself can reduce computing costs, and appropriate hybrid deployment can optimize business orchestration and improve business stability.

The third aspect is talent. Enterprises need to recruit and train staff to schedule and manage their own cloud resources.
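The three aspects above (resource scale, cost pressure, and talent) can be combined into a rough decision helper. This is a minimal sketch that assumes the illustrative 1,000 and 5,000 figures from the text as thresholds. The article stresses that there is no industry standard, so both constants, the `Assessment` fields, and the returned labels are hypothetical and should be tuned to your own environment.

```python
from dataclasses import dataclass

# Illustrative thresholds from the discussion above. There is no industry-wide
# standard, so treat both numbers as assumptions to tune for your environment.
MANAGED_SERVICES_LIMIT = 1_000
COST_PRESSURE_LEVEL = 5_000


@dataclass
class Assessment:
    ecs_instances: int         # resource scale
    demand_growing: bool       # is demand for cloud resources still rising?
    has_scheduling_team: bool  # can the enterprise staff a scheduling team?


def recommend(a: Assessment) -> str:
    """Map the three assessment aspects to a coarse recommendation."""
    if a.ecs_instances < MANAGED_SERVICES_LIMIT:
        return "managed services (ROS, OOS, Auto Scaling)"
    if not a.has_scheduling_team:
        return "managed services until a scheduling team is in place"
    if a.ecs_instances >= COST_PRESSURE_LEVEL and a.demand_growing:
        return "self-managed scheduling with hybrid deployment"
    return "dedicated resource orchestration service"


print(recommend(Assessment(500, True, True)))
print(recommend(Assessment(6_000, True, True)))
```

Talent is checked before cost pressure on purpose: without staff to run it, an in-house scheduler adds R&D cost instead of saving it.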

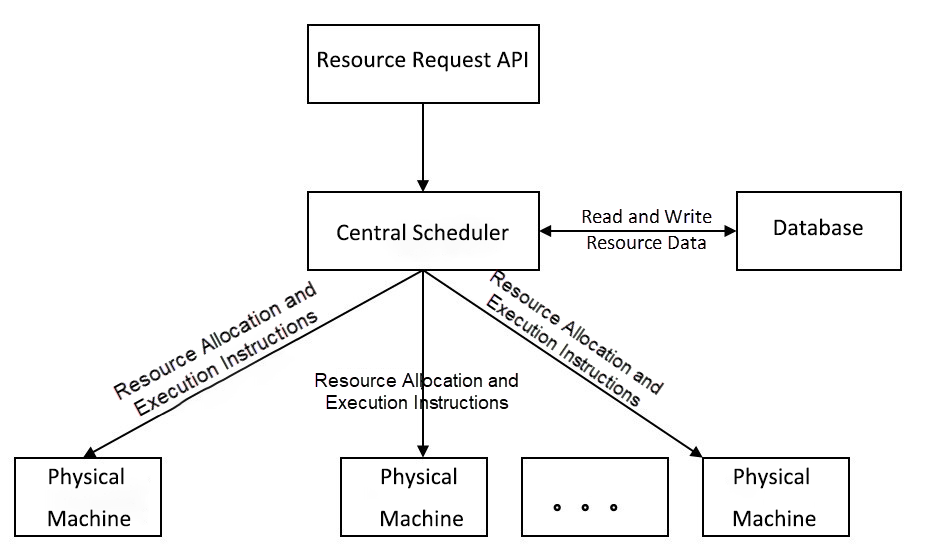

How are resources scheduled before migrating to the cloud? Let's look at the basic modules needed to run a simple, straightforward scheduling system. As shown in Figure 1, the simplest and most direct scheduling system consists of a resource request API module, a central scheduler module, a database module, and a physical machine node module.

Figure 1: Simple and Direct Scheduling Module Structure

The resource request API creates, starts, and stops resources. The central scheduler reads the resource information of physical machines from the database and allocates, deducts, and recycles resources. The database stores the data that scheduling depends on, including the resource information of physical machines. The physical machine node module responds to requests from the scheduler and executes the commands to create, release, start, or stop resources. It then writes the results to the database.
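The division of responsibilities above can be sketched in a few dozen lines. This is a minimal, in-memory illustration under several assumptions. The "database" is a Python dictionary, placement is first-fit, and the node module's command execution is represented only by the returned node name. All class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class Node:
    """Physical machine node and its free capacity."""
    name: str
    free_cpu: int
    free_mem_gb: int


@dataclass
class Database:
    """Data the scheduler depends on: node resources and current allocations."""
    nodes: Dict[str, Node] = field(default_factory=dict)
    allocations: Dict[str, Tuple[str, int, int]] = field(default_factory=dict)


class Scheduler:
    """Central scheduler: reads node data, then allocates, deducts, and recycles."""

    def __init__(self, db: Database) -> None:
        self.db = db

    def allocate(self, resource_id: str, cpu: int, mem_gb: int) -> Optional[str]:
        for node in self.db.nodes.values():
            if node.free_cpu >= cpu and node.free_mem_gb >= mem_gb:
                node.free_cpu -= cpu            # deduct capacity on the chosen node
                node.free_mem_gb -= mem_gb
                self.db.allocations[resource_id] = (node.name, cpu, mem_gb)
                return node.name                # the node module would now create it
        return None                             # no node has enough free capacity

    def recycle(self, resource_id: str) -> None:
        name, cpu, mem_gb = self.db.allocations.pop(resource_id)
        node = self.db.nodes[name]
        node.free_cpu += cpu                    # return capacity to the node
        node.free_mem_gb += mem_gb


def create_resource(sched: Scheduler, resource_id: str, cpu: int, mem_gb: int) -> str:
    """Resource request API entry point (hypothetical)."""
    node = sched.allocate(resource_id, cpu, mem_gb)
    if node is None:
        raise RuntimeError(f"insufficient capacity for {resource_id}")
    return node


db = Database(nodes={"pm-1": Node("pm-1", 8, 32), "pm-2": Node("pm-2", 4, 16)})
sched = Scheduler(db)
print(create_resource(sched, "vm-a", 6, 16))  # pm-1
print(create_resource(sched, "vm-b", 4, 16))  # pm-2
```

In the cloud variants discussed below, the `Node` entries would describe ECS instances, dedicated hosts, or private pools instead of physical machines. The scheduler logic itself stays largely the same.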

Can the modules in Figure 1 still work after cloud migration? The answer is yes, but with some adaptations: cloud platform vendors expose only the resource information of virtualized compute instances, not that of the underlying physical servers.

After cloud migration, resource scheduling is viable in the following scenarios:

The modules described above form the simplest possible resource scheduling system. Real-world production systems are more complex, with additional modules such as resource monitoring, resource data initialization, data updates, and automatic replacement of abnormal resource nodes.

Starting from this minimal module set, and given the resource data and resource operation APIs now available on the cloud, let's look at the practical process, the possible solutions, and their advantages and disadvantages.

Cloud computing resources are currently offered in several forms. For example, Alibaba Cloud provides a wide range of workload-specific compute instances, dedicated host compute instances, and private pool services. Private pools include Capacity Reservation (CR), which suits scenarios such as capacity planning and guaranteeing resource certainty, and Immediate Capacity Reservation (ICR). The following are practical solutions for enterprise resource scheduling based on these three forms of cloud resources.

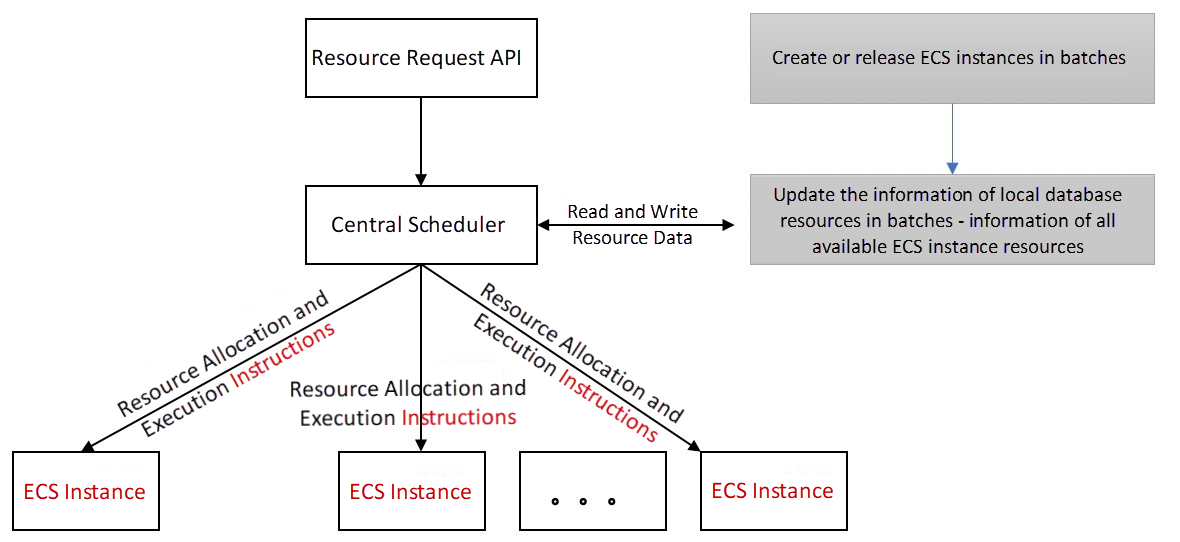

Figure 2 shows the minimum set of on-cloud resource scheduling for an enterprise based on general-purpose ECS compute instances. In the resource database, the resource data comes from ECS compute instances, and the physical nodes in Figure 1 are replaced with ECS compute instances. The commands in Figure 1 are treated as internal protocols. In this scenario, the strong dependencies are as follows:

Figure 2: Resource Scheduling on the Cloud for Enterprises Based on ECS Computing Instances

As shown in Figure 2, the nodes that perform resource allocation are ECS instances, and resources are allocated on them on an ongoing basis. Typically, multiple containers run on the same ECS instance. For example, a high-specification ECS instance with 32 vCPUs (virtual CPUs) provides CPU capacity roughly equivalent to that of a conventional 32-core physical machine.

As shown in Figure 2, the scale of the self-scheduled cloud resource pool equals the scale of the purchased ECS instances.
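Because the pool is exactly the set of purchased ECS instances and several containers share each instance, placing containers becomes a bin-packing problem. The sketch below assumes vCPU is the only constraint and uses first-fit decreasing, a common heuristic chosen here for illustration rather than taken from the article. Instance IDs and container sizes are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class EcsNode:
    """A purchased ECS instance acting as a scheduling node (hypothetical IDs)."""
    instance_id: str
    vcpus: int
    used: int = 0
    containers: List[str] = field(default_factory=list)


def pool_capacity(nodes: List[EcsNode]) -> int:
    """The self-scheduled pool is exactly the set of purchased ECS instances."""
    return sum(n.vcpus for n in nodes)


def place_first_fit(nodes: List[EcsNode], containers: List[Tuple[str, int]]) -> List[str]:
    """First-fit decreasing: place the largest containers first, each on the
    first node with enough free vCPUs. Returns containers that did not fit."""
    unplaced = []
    for name, cpu in sorted(containers, key=lambda c: c[1], reverse=True):
        for node in nodes:
            if node.vcpus - node.used >= cpu:
                node.used += cpu
                node.containers.append(name)
                break
        else:
            unplaced.append(name)  # pool is full: another instance must be bought first
    return unplaced


nodes = [EcsNode("i-node1", 32), EcsNode("i-node2", 16)]
print(pool_capacity(nodes))  # 48
print(place_first_fit(nodes, [("a", 8), ("b", 24), ("c", 16), ("d", 4)]))  # ['d']
```

A container that cannot be placed signals that the pool must grow, which in this model means purchasing another ECS instance before scheduling can continue.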

Advantages:

An enterprise controls the types, number, and geographical distribution of its ECS instances independently and at a fine granularity. This enables the enterprise to purchase ECS instances based on the characteristics of its service workloads and deploy container instances in a targeted manner.

The containers can also be hosted directly by the cloud platform's container service, such as Container Service for Kubernetes (ACK).

Disadvantages:

ECS instances must be purchased before they can enter the resource database used for on-cloud scheduling. To reduce costs, purchase ECS instances only when the resource pool needs to expand, rather than in batches ahead of time. Instances purchased in advance but left unused are wasted.

Another disadvantage is that capacity operations are supported only through internal protocols.
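The advice to buy ECS instances only when the pool needs to expand comes down to a small calculation. This sketch assumes demand and capacity are measured in vCPUs and that all new instances share one size. The function name and parameters are hypothetical.

```python
def instances_to_buy(required_vcpus: int, free_vcpus: int, vcpus_per_instance: int) -> int:
    """How many extra ECS instances to purchase so the pool's free capacity
    covers the pending demand; buys nothing if current capacity suffices."""
    if vcpus_per_instance <= 0:
        raise ValueError("vcpus_per_instance must be positive")
    shortfall = required_vcpus - free_vcpus
    if shortfall <= 0:
        return 0
    return -(-shortfall // vcpus_per_instance)  # integer ceiling division


print(instances_to_buy(100, 40, 32))  # 2: a 60-vCPU shortfall needs two 32-vCPU instances
print(instances_to_buy(30, 40, 32))   # 0: existing free capacity is enough
```

Buying just enough to cover the shortfall keeps idle capacity, and therefore waste, to less than one instance's worth.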

Suitable Scenarios:

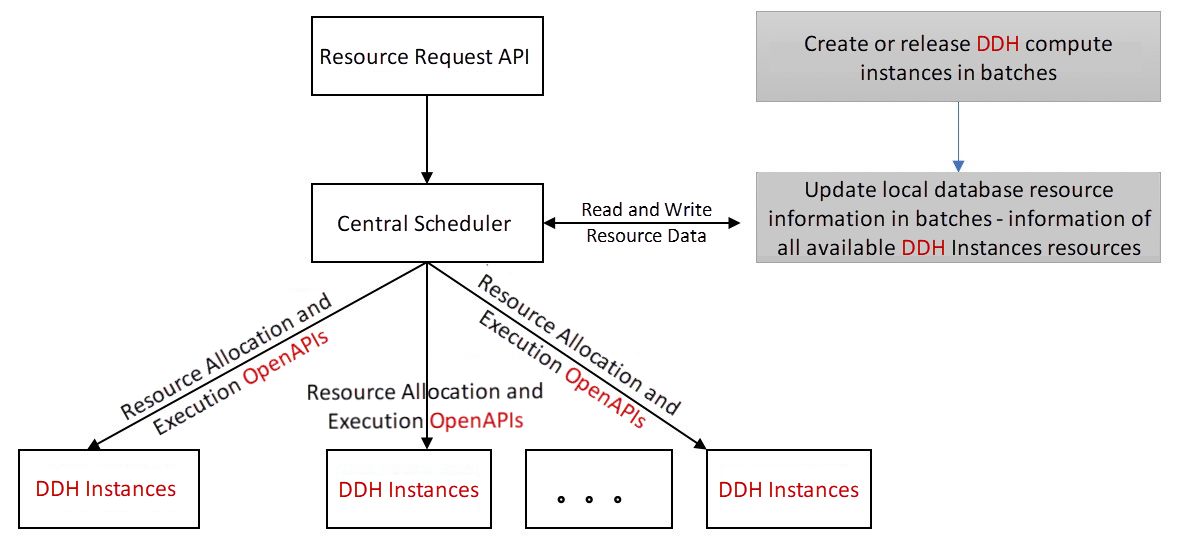

Figure 3 shows the minimum set of on-cloud resource scheduling based on DDH compute instances. In the resource database, the resource data comes from DDH compute instances.

Figure 3: On-Cloud Resource Scheduling for Enterprises Based on DDH Computing Instances

The physical nodes in Figure 1 are replaced with DDH compute instances. The operation commands in Figure 1 become OpenAPI calls, while the containers' internal protocols are still supported. In this scenario, the strong dependencies are as follows:

As shown in Figure 3, the scale of the cloud resource pool that the enterprise schedules equals the total resources of the purchased DDH instances.

Advantages:

An enterprise can control the instance type, number, and geographical distribution of dedicated hosts independently and at a fine granularity. Based on the characteristics of its business workloads, the enterprise can therefore purchase DDH instances and orchestrate and deploy container instances or ECS instances accordingly.

High-density container deployment is supported. You can also deploy common ECS instances and custom ECS instances on DDH.

Disadvantages:

DDH instances must be purchased before they can enter the resource database used for on-cloud scheduling. To save costs, purchase DDH instances only when the resource pool needs to expand, rather than in batches ahead of time. Instances purchased in advance but left unused are wasted.

A DDH instance is a physical machine, so a single DDH instance costs more than a single ECS instance. Because each DDH has a large capacity, a resource pool of the same size contains fewer DDH nodes than ECS nodes. As a result, each DDH node sometimes carries more deployed instances than an ECS node does.
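A quick calculation shows why fewer, larger nodes mean higher density per node. The pool size, node sizes, and workload count below are hypothetical round numbers chosen for illustration. Actual DDH and ECS specifications vary.

```python
def nodes_needed(pool_vcpus: int, vcpus_per_node: int) -> int:
    """Nodes required to provide a pool of the given size (ceiling division)."""
    return -(-pool_vcpus // vcpus_per_node)


POOL_VCPUS = 1_024      # hypothetical pool size
ECS_NODE_VCPUS = 32     # hypothetical ECS instance size
DDH_NODE_VCPUS = 128    # hypothetical dedicated host size
WORKLOADS = 256         # hypothetical 4-vCPU workloads filling the pool

for label, size in (("ECS", ECS_NODE_VCPUS), ("DDH", DDH_NODE_VCPUS)):
    n = nodes_needed(POOL_VCPUS, size)
    print(f"{label}: {n} nodes, {WORKLOADS / n:.0f} workloads per node")
# ECS: 32 nodes, 8 workloads per node
# DDH: 8 nodes, 32 workloads per node
```

With the same pool size, the DDH layout has a quarter of the nodes, so each node hosts four times as many workloads.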

Suitable Scenarios:

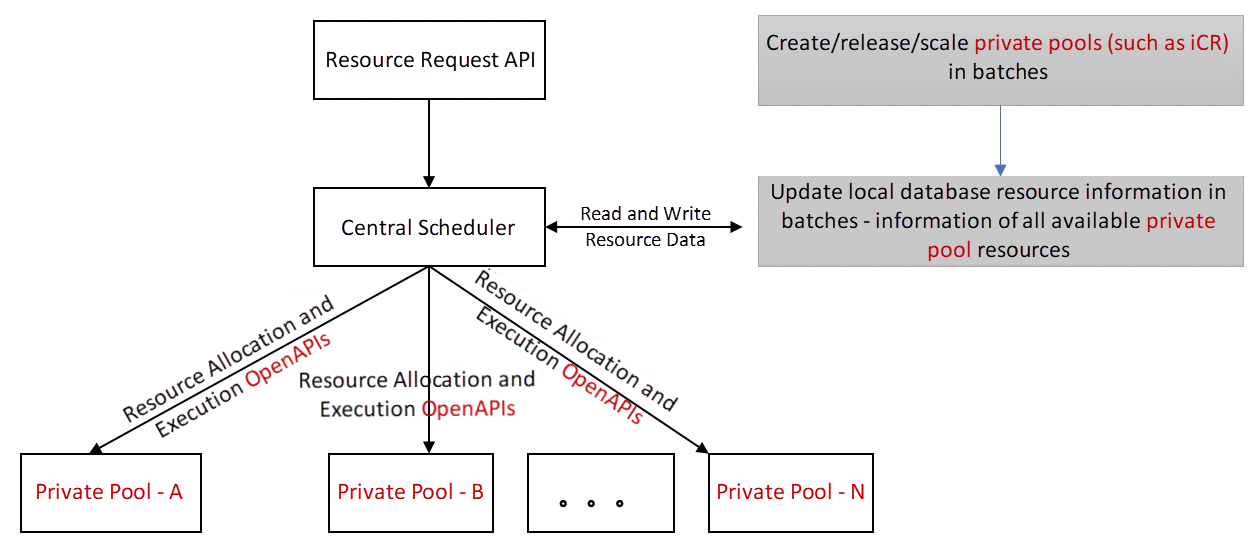

Figure 4 shows the private pool-based resource scheduling (minimum set) on the cloud. For the resource database section, resource data comes from the private pool computing instances.

Figure 4: Private Pool-Based Resource Scheduling on the Cloud for an Enterprise

Compared with Figure 1, the physical nodes are replaced with private pools, and the operation commands change from internal protocols to OpenAPI calls. In this scenario, the strong dependencies are as follows:

As shown in Figure 4, the scale of the cloud resource pool that the enterprise schedules itself is determined by the capacity of its private pools. That capacity changes as specified pools are scaled up or down dynamically and as new pools are added or existing ones released.
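The sketch below models that definition of pool scale directly. It assumes capacity is counted in instances and that each private pool is identified by a string. `CapacityPlan` and its methods are hypothetical bookkeeping and do not correspond to ECS API calls.

```python
from typing import Dict


class CapacityPlan:
    """Tracks the private pools an enterprise schedules against (hypothetical model).
    The schedulable pool scale is the sum of the pools' reserved capacities."""

    def __init__(self) -> None:
        self.pools: Dict[str, int] = {}

    def add_pool(self, pool_id: str, capacity: int) -> None:
        if pool_id in self.pools:
            raise ValueError(f"pool {pool_id} already exists")
        self.pools[pool_id] = capacity

    def resize(self, pool_id: str, delta: int) -> None:
        """Scale a pool up (delta > 0) or down (delta < 0)."""
        new_capacity = self.pools[pool_id] + delta
        if new_capacity < 0:
            raise ValueError("capacity cannot go negative")
        self.pools[pool_id] = new_capacity

    def release(self, pool_id: str) -> None:
        del self.pools[pool_id]

    def total(self) -> int:
        return sum(self.pools.values())


plan = CapacityPlan()
plan.add_pool("pool-a", 100)
plan.add_pool("pool-b", 50)
plan.resize("pool-a", -20)   # scale down
plan.release("pool-b")       # release a pool entirely
print(plan.total())          # 80
```

Unlike the ECS and DDH variants, nothing here has to be purchased node by node. Growing the pool is a change to a reserved number.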

Advantages:

Enterprises control capacity independently and at a fine granularity to plan and allocate cloud capacity. Their view shifts from individual resources to capacity, which better matches business development needs.

This private pool-oriented approach retains the characteristics of the underlying resources while supporting business-oriented planning of capacity or computing power.

Private pools are more cost-competitive. When a private pool is not in use, its remaining capacity can be shared with other users or retained at a low discount.

Unlike ECS and DDH instances, whose large-scale purchases may fail due to insufficient inventory, private pool capacity is guaranteed to be delivered once the reservation succeeds.

Disadvantages:

Enterprises must track trends in cloud capacity demand in real time so that they can adjust private pool capacity in near-real time. The cloud platform also provides capacity prediction, which helps enterprises plan capacity more accurately.

Compared with pre-created ECS or DDH instances, a private pool creates ECS instances in real time, which adds a small, almost negligible latency.
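To show what near-real-time capacity planning involves, here is a minimal sketch that fits a least-squares linear trend to recent daily peaks and adds a safety headroom. It is a deliberately simple stand-in chosen for illustration and does not describe the cloud platform's capacity prediction feature. The function names, the 20% default headroom, and the sample data are hypothetical.

```python
import math
from typing import Sequence


def forecast_peak(history: Sequence[float], days_ahead: int) -> float:
    """Fit a least-squares straight line to daily peak usage and extrapolate
    it days_ahead past the last observation."""
    n = len(history)
    if n == 0:
        raise ValueError("history must contain at least one data point")
    if n == 1:
        return float(history[0])
    mean_x = (n - 1) / 2
    mean_y = sum(history) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(history))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + days_ahead)


def pool_capacity_target(history: Sequence[float], days_ahead: int, headroom_pct: int = 20) -> int:
    """Private pool capacity to reserve: forecast peak plus a safety headroom."""
    peak = forecast_peak(history, days_ahead)
    return math.ceil(peak * (100 + headroom_pct) / 100)


daily_peaks = [100, 110, 120, 130]  # hypothetical instances in use per day
print(forecast_peak(daily_peaks, 2))         # 150.0
print(pool_capacity_target(daily_peaks, 2))  # 180
```

The headroom absorbs forecast error. Because a reservation is delivered once it succeeds, resizing the pool from a forecast like this is what replaces buying ECS or DDH nodes ahead of time.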

Suitable Scenarios:

After cloud migration, enterprises can still schedule cloud resources in a fine-grained manner and choose the resource management mode that fits their needs. We recommend scheduling cloud resources based on predefined capacity. In addition to fine-grained resource scheduling, this approach guarantees 100% resource delivery and discounts for long-term use.

Li Yuqian is a Technical Expert at Alibaba Cloud Elastic Computing with five years of practical experience managing and scheduling large-scale cluster resources. He has comprehensive, in-depth hands-on and problem-solving experience in long-running service scheduling and co-location scheduling. He specializes in stability-first cluster scheduling strategies, stability architecture design, and global stability data analysis, and he is proficient in Java and Go. He has also filed more than ten related invention patents.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
