During the session on big data technology at the 2019 Apsara Conference held in Hangzhou, Guan Tao, Senior Director of Alibaba Cloud Computing Platforms, delivered a speech titled "Progress and Prospects of Alibaba's Big Data Technology." This article describes the shift of customer value in the big data domain from the perspective of Alibaba, and then summarizes the development focuses of core technologies. Finally, it describes the work involved in building an intelligent big data platform, from engine optimization to "autonomous driving," with several typical cases.

The following introduces highlights from Guan Tao's lecture.

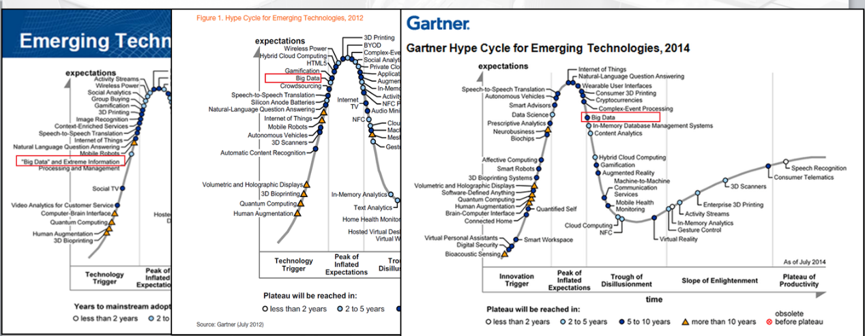

Big data technology has been around for 20 years, and Alibaba's Apsara platform has a history of 10 years. The preceding figure shows the Hype Cycle for Emerging Technologies published by Gartner, a world-famous research and advisory company. Emerging technologies refer to technological innovations seen in a certain period of time. The horizontal axis depicts the progression of an innovation in five stages, from its trigger and initial over-enthusiasm, through a period of disillusionment to continuous development. Each innovation on the Hype Cycle is assigned to a category that represents how long it will take to reach the plateau of productivity from its current position. In 2014, big data reached the end of the peak of inflated expectations. In 2015, Gartner retired big data from the hype cycle and many people were arguing about where big data should be positioned. The Gartner analyst Betsy Burton concluded, "Big Data...has become prevalent in our lives." What she means is that big data is no longer a specific technology, but a comprehensive technological domain with a wide range of common applications. Alibaba is of the opinion that big data moved from the early adoption stage to the mainstreaming stage around 2014 and has since driven many changes in value proposition.

The preceding figure shows a comparison of the early adoption and mainstreaming stages of big data. In the early adoption stage, ease of use is the top priority. Agility comes next: when platforms, supporting components, and toolchains are still immature, it is very important to make adjustments and modifications quickly to meet business needs. Finally, the applications of the technology need to achieve certain goals. However, these applications do not have to be comprehensive or even stable; they are acceptable as long as they can be validated through trial and error. The characteristics of the early adoption stage are very different from, and can even contradict, those of the mainstreaming stage. In the mainstreaming stage, costs and performance become critical. This is especially true of costs, as surveys show that users are very concerned about them. Users want to know not only how much they will have to pay for big data processing, but also how to control costs when data volumes grow massively. In the mainstreaming stage, when applications reach large scales, the ability to offer enterprise-grade services becomes crucial. For example, Alibaba's big data platform generates Alipay statements for merchants every day. The settlements between merchants, between merchants and their vendors and clients, and between merchants and banks therefore have zero tolerance for errors. The shift from the early adoption stage to the mainstreaming stage requires a rich and complete toolchain and ecosystem, and the integration of the two, to achieve a high level of overall performance.

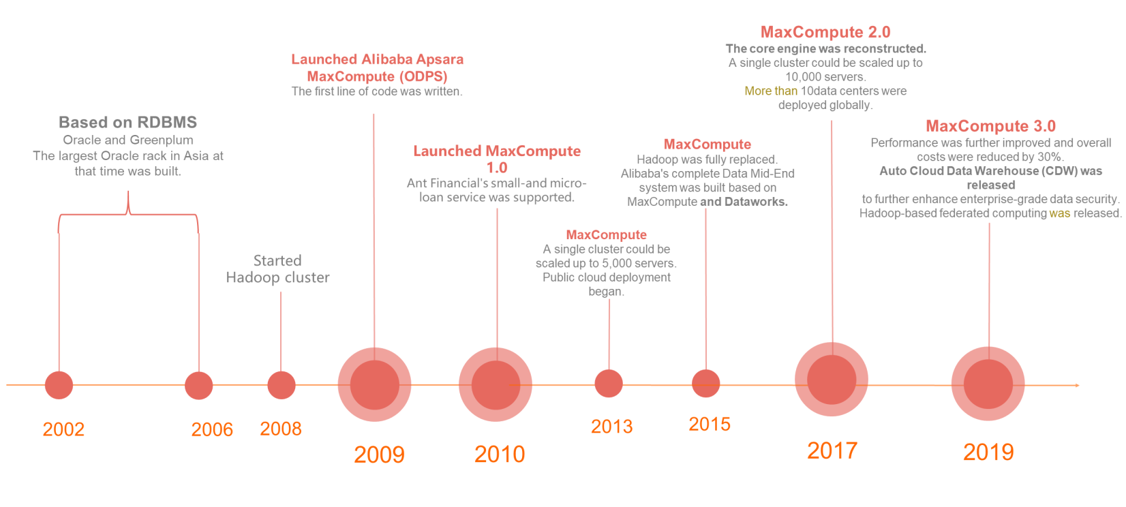

MaxCompute is the underlying system of the Apsara platform and supports most of Apsara's data storage and computing demands. In 2002, Alibaba began to build its data warehouse in partnership with Oracle, mainly for accounting and internal use. Four years later, Alibaba had the largest Oracle RAC cluster in Asia. In 2008 and 2009, Alibaba launched the Hadoop and Apsara systems respectively, followed by the well-known Moon Landing system. In 2015, the Moon Landing system was completed: all data was brought together, and a data foundation was established that consists of a unified storage system, a unified computing system in the middle, and a data mid-end. The entire system is centered on the data mid-end and represents the integration of big data within Alibaba. In 2016, the MaxCompute 2.0 project was launched, which almost completely replaced the system used from 2010 to 2015 and began to provide services to domestic cloud computing customers. In 2019, with the launch of MaxCompute 3.0, attention extends beyond performance and costs to the challenge of ultra-large data volumes, which have made it impossible for humans to perform optimizations by hand in the big data field. In particular, it is difficult for mid-end engineers to manually perform modeling and optimization for the data mid-end. Alibaba believes that artificial intelligence (AI) is the future and that the ability to optimize big data through AI technology is crucial.

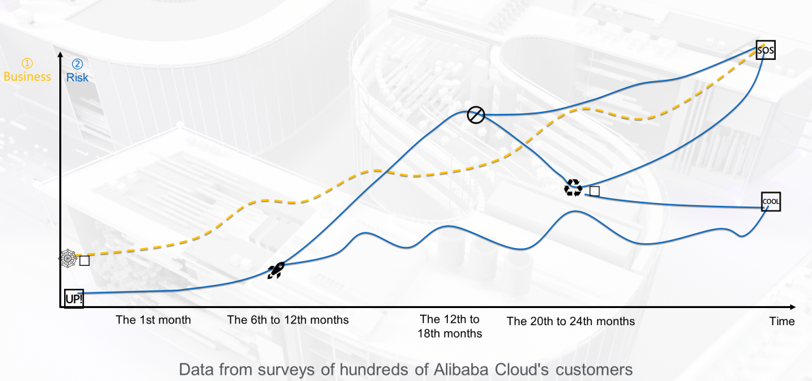

The preceding figure shows the results derived from surveys of hundreds of customers. The yellow curve represents the business growth of the company and departments, and the blue curve represents the process of big data application. In the first year, the curve rises slowly. In the mainstreaming period, development begins to take off when people uncover the technological uses and value of big data. Early in this stage, the curve is not flat but steep, indicating rapid growth.

With this trend comes a problem. The growth rate of data volumes, computing workloads, and financial expenses has outpaced the growth of the existing business and may continue to rise. At this time, an appropriate system, coupled with good optimization and governance measures can slow down the growth of data volumes to bring it into line with applications and development, while ensuring sustainable costs. For example, when the business volume increases five-fold, the costs only double. If you cannot bring down the data size, the data center will become a cost center. In such a case, the value of massive data and computing workloads will not be apparent. To solve this problem, it is necessary to provide better service capabilities with high performance and low costs to reduce the costs at the platform layer and enable better data governance. In addition, big data can also be optimized through intelligent approaches.
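The five-fold versus two-fold example can be made concrete with a little arithmetic. The following is a minimal sketch that only restates the numbers in the example above; the function name `unit_cost_ratio` is hypothetical and not part of any Alibaba tooling.

```python
def unit_cost_ratio(business_growth: float, cost_growth: float) -> float:
    """Return the new cost per unit of business relative to the old one.

    Both arguments are growth multipliers (5.0 means "five-fold").
    """
    if business_growth <= 0:
        raise ValueError("business_growth must be positive")
    return cost_growth / business_growth


# Numbers taken from the example in the text: business x5, costs x2.
ratio = unit_cost_ratio(business_growth=5.0, cost_growth=2.0)
print(f"Cost per unit of business: {ratio:.0%} of the original")
```

In this example, each unit of business costs 40% of what it did before, which is the sense in which cost growth stays sustainable while the business grows.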

Alibaba faces four challenges in building a high-performance and cost-effective computing platform:

To address these challenges, Alibaba has made the following improvements to its computing platform:

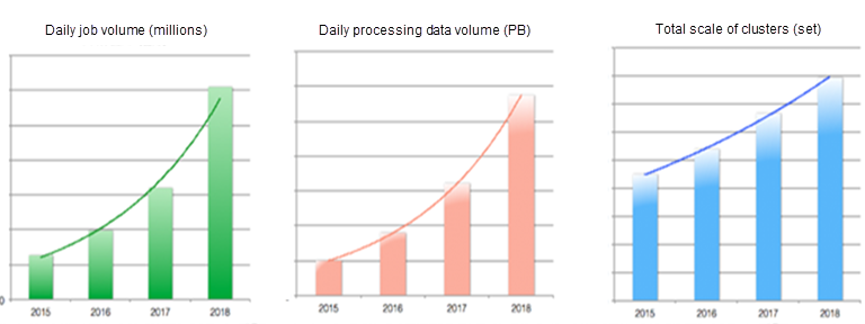

The preceding figure shows an example of Double 11 from 2015 to 2018. The left panel shows the daily job volume, the middle panel shows the volume of data processed per day, and the right panel shows the cost curve. This demonstrates that, through the Apsara platform and its technical capabilities, Alibaba has brought its business growth rate into line with its cost growth rate.

In addition, we have made more optimizations:

Engines:

Storage: Compatible with open-source Apache ORC. With the new C++ writer and improved C++ reader, reading performance is 50% faster than that of CFile2 and open-source ORC.

Resources: A set of computing and scheduling capabilities across clusters. The servers in multiple clusters are aggregated into one computer.

Scheduling system optimization: The average cluster utilization rate is 70%. In addition to single-job metrics, the throughput rate of the overall cluster is emphasized.

Servers: The utilization rate of online servers is increased by more than 50% by using hybrid deployment technology. It also supports business elasticity to cope with Double 11.

The following are some relevant data and cases:

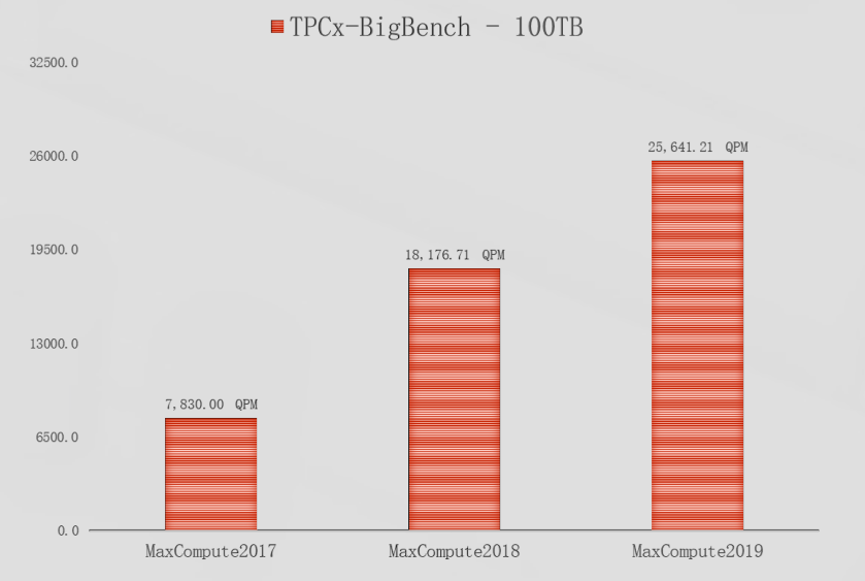

The preceding figure shows the TPCx-BigBench (100TB) benchmark test results for MaxCompute from 2017 to 2019. As you can see, its QPM basically doubled every year.

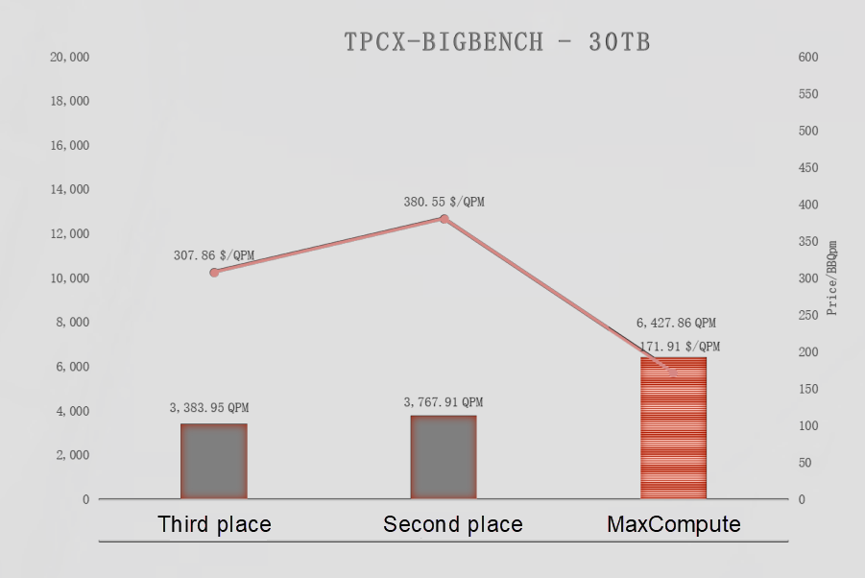

As shown in the preceding figure, compared with other systems in the industry, MaxCompute delivers twice the performance at only half the cost.

The construction of a versatile enterprise-grade computing platform at the system backend can be broken down into four parts:

1. Reliable data joining points (data chassis) are required. Many companies view their data as an asset, and therefore data security is crucial. Specifically, this includes the following content:

2. Disaster recovery is the responsibility of enterprises themselves. They can achieve certain capabilities by selecting different disaster recovery offerings, including the following:

3. Privacy breaches are a growing concern. Despite this, Alibaba has been able to maintain a perfect record in privacy protection due to its high standards for data management, sharing, and security. The following are the specific aspects:

4. The optimization of internal scheduling capability and scalability in the system includes the following:

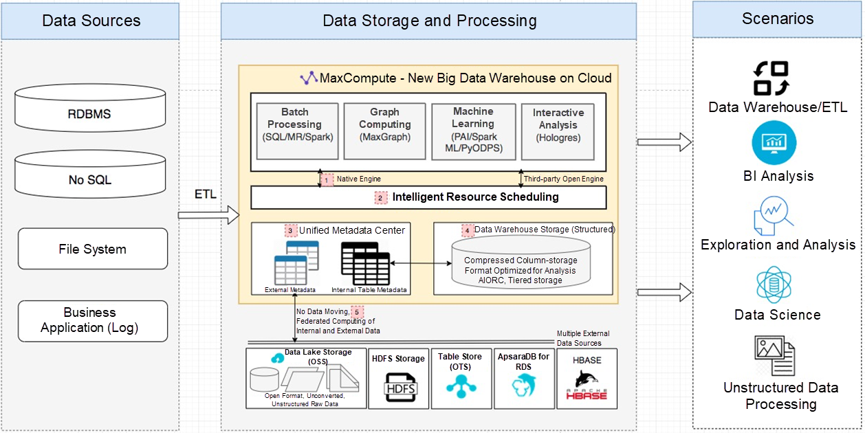

The preceding figure illustrates a case of Apsara and MaxCompute platform integration. The first layer is the unified storage layer, which is open to both the MaxCompute engine and other engines. The abstraction layer in the middle is the federated computing platform. Federation refers to the abstraction of data, resources, and interfaces into a set of standard interfaces, which can be used by Spark and other engines to form a complete ecosystem. The second line is the external ecosystem of MaxCompute. There are many types of data sources, which reside not only in Alibaba's own storage, but also in database systems and file systems. In addition, users can bring in other systems to work on the data without moving it. This is called federated computing.

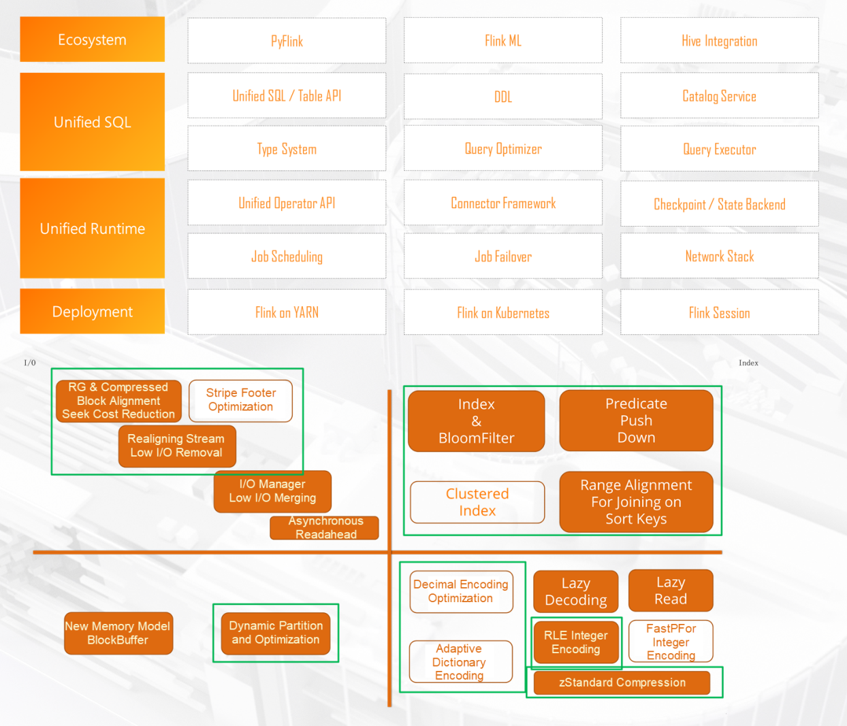

Previously, Blink was a separate branch of Flink. As a system that embodies best development practices within Alibaba, Blink was contributed back to the community and merged into Flink as of version 1.9, contributing to the SQL engine, scheduling system, and Algo on Flink. With the acquisition of a company affiliated with Flink, we will promote the development of Flink.

Finally, there is the development of the storage layer. The preceding figure shows the modifications of compression, read and write, and data-related formats. All the modifications will be pushed to the community. The orange font indicates the modification was made according to design standards.

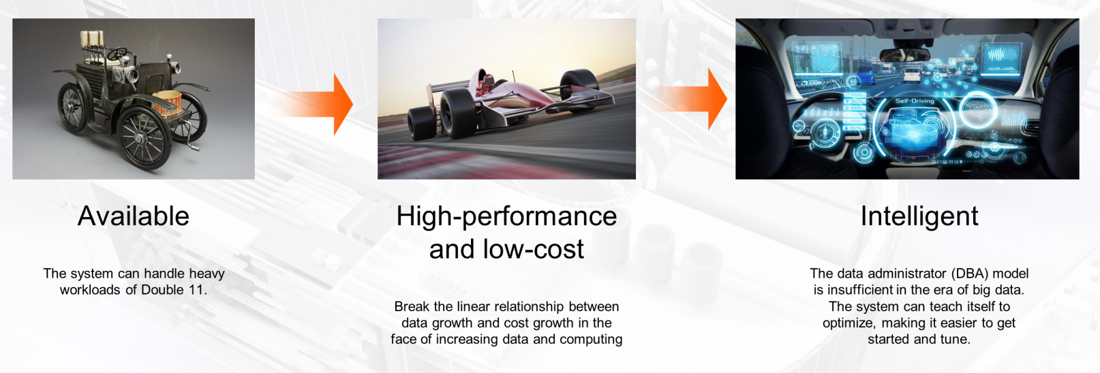

Computing engine optimization involves not only the engine itself, but also its "autonomous driving" capability. The preceding figure illustrates the evolution of the Apsara system by using cars as a metaphor. The first phase emphasizes availability, such as whether the system can support the overwhelming workloads of Double 11. In the second phase, higher performance and lower costs are the goals of optimization. When it comes to the third phase, performance becomes the ultimate goal.

Three key challenges have emerged within Alibaba:

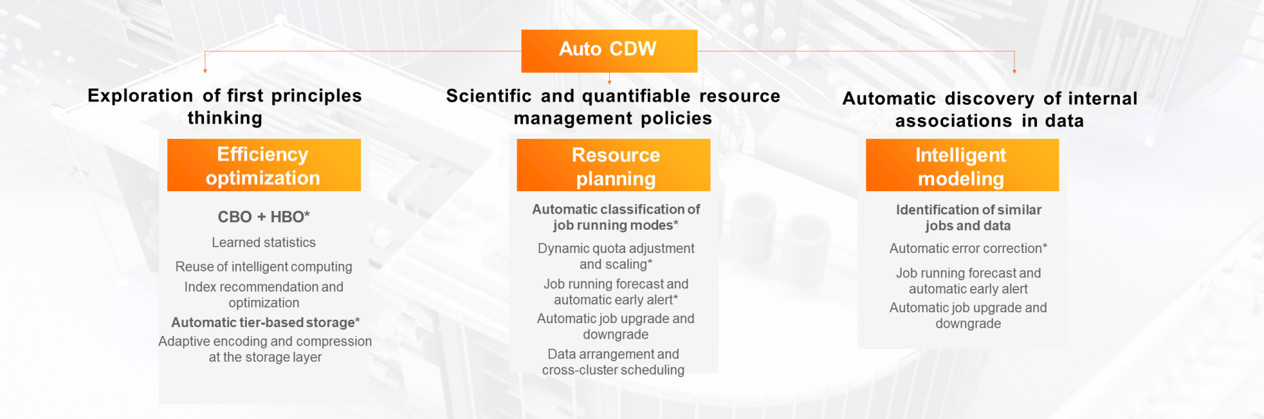

From the perspective of Auto Cloud Data Warehouse (CDW), three aspects can be optimized. The first aspect is efficiency, including history-based optimization (HBO), which relies on historical information. It works as follows: when a new job that the system is not familiar with runs for the first time, the system allocates resources conservatively to complete the job. The tuning is cautious at first, but gradually improves and adapts to the job. Four days later, the job performance is much better. With HBO, Alibaba's resource utilization reaches 70%. This aspect also includes learned statistics, reuse of intelligent computing, and intelligent data stratification.
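The HBO idea described above (a cautious first run, then tuning from history) can be sketched in a few lines. This is a toy illustration, not MaxCompute's actual optimizer: the class `HistoryBasedAllocator`, its parameters, and the assumption that "conservative" means a generous default allocation are all hypothetical.

```python
from statistics import mean


class HistoryBasedAllocator:
    """Toy history-based resource allocator (illustrative only)."""

    def __init__(self, conservative_default: int = 100,
                 headroom: float = 1.2, window: int = 4):
        self.conservative_default = conservative_default  # used for unknown jobs
        self.headroom = headroom  # safety margin on top of observed usage
        self.window = window      # number of recent runs to consider
        self.history: dict[str, list[int]] = {}  # job signature -> peak usage

    def allocate(self, job_signature: str) -> int:
        """Return the resource units to grant for the next run of a job."""
        runs = self.history.get(job_signature, [])[-self.window:]
        if not runs:
            # No history yet: fall back to the cautious default.
            return self.conservative_default
        # With history: size the allocation from recent observed peaks.
        return max(1, round(mean(runs) * self.headroom))

    def record(self, job_signature: str, observed_peak: int) -> None:
        """Store the peak usage observed during a finished run."""
        self.history.setdefault(job_signature, []).append(observed_peak)


allocator = HistoryBasedAllocator()
first = allocator.allocate("daily_report")   # unfamiliar job, cautious default
allocator.record("daily_report", observed_peak=40)
second = allocator.allocate("daily_report")  # tuned from the recorded run
print(first, second)
```

The design choice here is a sliding window over recent runs, so that the allocation follows a job as its behavior drifts; a production system would use far richer signals than a single peak value.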

The second aspect is resource planning. When 100,000 machines in the cloud are distributed in different data centers, data planning and resource scheduling are carried out automatically rather than manually. This includes the automatic classification of job running modes, among which three are for very large jobs and highly interactive jobs. In addition, dynamic quota adjustment, scaling, job running prediction and automatic early alerts, automatic job upgrade and downgrade, data arrangement, and cross-cluster scheduling are also included.

The third aspect is intelligent modeling that includes the identification of similar jobs and data, automatic error correction, job running prediction, automatic early alerts, and automatic job upgrade and downgrade.

These three aspects have great development potential in the field of Auto CDW. In the preceding figure, the items followed by * are functionalities soon to be announced by Alibaba.

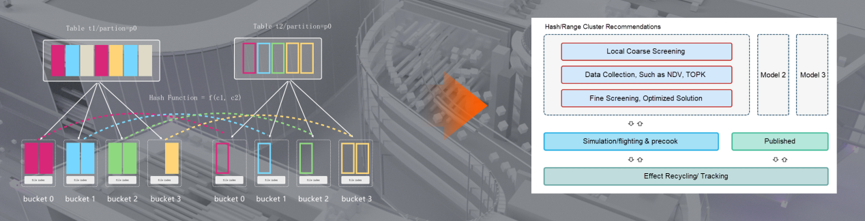

Cost modules are assimilated according to relationships between running jobs so that the adjustment solution with the best index can be found and then pushed. For example, based on MaxCompute, 300,000 fields in 80,000 tables are selected within Alibaba Group, and the best clustering solution for 44,000 tables is recommended, reducing costs by an average of 43%.

On September 1, 2019, Alibaba reduced its overall storage prices by 30%. This was partly due to the Auto Tiered Store technology shown in the preceding figure, which enables automatic separation of hot and cold data. In the past, data was separated by two methods. In the first method, the system automatically performed cold compression, which could reduce costs by about two thirds. The second method was to allow users to add flags. However, when there are tens of millions of tables in the system, it is difficult for data development engineers to identify how the data is used. In this case, economic models can be used to build the relationship between access and storage, and the hot and cold levels can be automatically customized based on different partitions of different jobs. In this way, Alibaba was able to reduce the compression ratio from 3 to 1.6, improving the overall storage efficiency by 20%.
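One way to picture an economic model that relates access to storage is a per-partition cost comparison between tiers. The sketch below is an assumption-laden illustration, not the Auto Tiered Store implementation: the `Tier` type, the two tiers, and all prices are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    storage_cost_per_gb: float  # cost of keeping 1 GB for one period
    read_cost_per_gb: float     # cost of reading 1 GB once


# Hypothetical prices: cold storage is cheaper to keep but costlier to read.
HOT = Tier("hot", storage_cost_per_gb=1.0, read_cost_per_gb=0.0)
COLD = Tier("cold", storage_cost_per_gb=0.4, read_cost_per_gb=0.5)


def period_cost(tier: Tier, size_gb: float, reads_per_period: float) -> float:
    """Total cost of holding and reading a partition for one period."""
    return size_gb * (tier.storage_cost_per_gb
                      + tier.read_cost_per_gb * reads_per_period)


def choose_tier(size_gb: float, reads_per_period: float,
                tiers: tuple[Tier, ...] = (HOT, COLD)) -> Tier:
    """Pick the tier with the lowest total cost for this access pattern."""
    return min(tiers, key=lambda t: period_cost(t, size_gb, reads_per_period))


# A frequently read partition stays hot; a rarely read one goes cold.
print(choose_tier(size_gb=100, reads_per_period=10).name)
print(choose_tier(size_gb=100, reads_per_period=0).name)
```

With these placeholder prices the break-even point is 1.2 reads per period; partitions read less often than that are cheaper to keep in the cold tier.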

A cloud system relies on multiple data centers deployed around the world. However, data generation is related to businesses, and the association among data must not be broken. Therefore, questions, such as in which types of data centers data should be stored and what kinds of jobs should be scheduled to achieve optimal performance, concern optimal matching on a global scale. Internally, Alibaba integrated static job arrangement and dynamic scheduling to develop a system called Yugong. The two diagrams on the right of the preceding figure show the principles of this system.

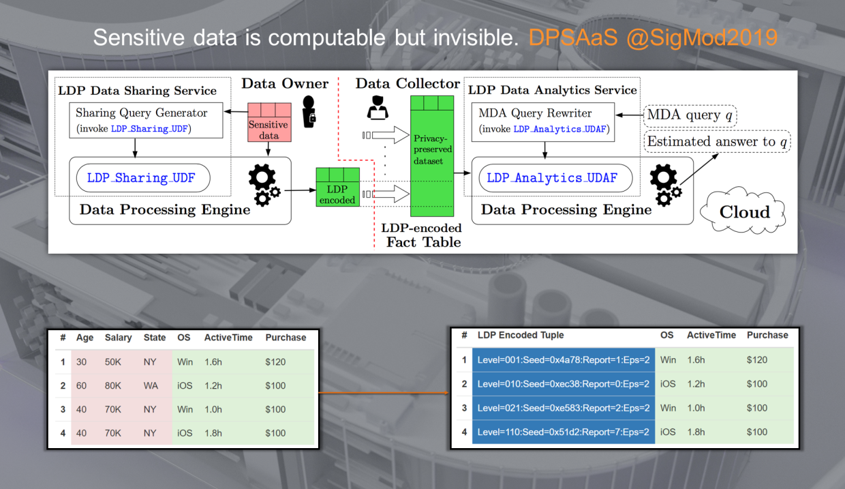

The computing of sensitive data is called secret computing. It makes private data computable but invisible. In the preceding figure, the first three columns contain sensitive data, whereas the last three contain non-sensitive data. All the sensitive data is masked by using differential privacy and therefore cannot be queried, but the computing results are still correct. With this solution, Alibaba is trying to strike a balance between data sharing and privacy protection.

Alibaba is exploring how to perform operations on graph-based relationships, find the optimal balance between systems, perform privacy-based computing, improve scheduling to meet multiple objectives, and achieve better results while drastically reducing data volume at the sampling layer.
