During the session on big data technology at the 2019 Apsara Conference held in Hangzhou, Hou Zhenyu, Chen Yingda, and Dai Xiening, senior staff engineers at Alibaba Cloud, jointly delivered a speech titled "The Journey of a SQL Query in the MaxCompute Distributed System." This article introduces the MaxCompute computing platform and its mega-scale, enterprise-grade SQL engine, along with their characteristics. It then describes how to build an enterprise-grade distributed intelligent scheduling and execution framework, and finally introduces AliOrc, the next-generation columnar storage engine, and its optimization methods.

The following are highlights from their lecture.

With a massive user base, MaxCompute supports various key businesses and complex scenarios of the Alibaba Group, the core businesses of multiple emerging Internet enterprises, and key industries related to people's livelihoods and national security. At mega scale, it runs more than 10 million computing tasks per day, stores exabytes of data, and is deployed on more than 100,000 servers in more than 10 data centers around the world.

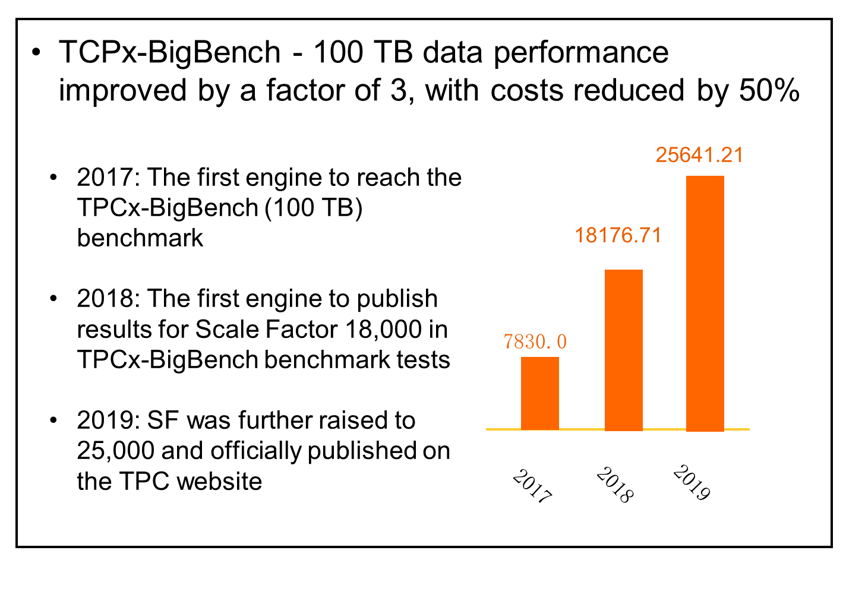

TPCx-BigBench measures the computing performance of big data systems. It covers complex workloads, including machine learning scenarios, that closely resemble real big data businesses. In 2017, Alibaba Cloud, with MaxCompute, was the first cloud provider to complete the TPCx-BigBench (100 TB) benchmark. In 2018, MaxCompute became the first engine to publish results at Scale Factor 18,000 in TPCx-BigBench benchmark tests. In 2019, that figure was raised further to 25,000, and the results were officially published on the TPC website.

MaxCompute is not only widely used within the Alibaba Group, but also employed by many famous Internet companies and applications related to people's lives and national security.

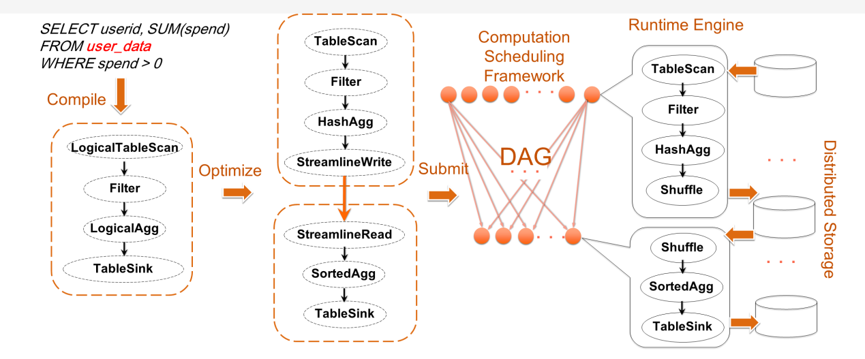

The preceding figure shows the workflow of an SQL query. First, the compiler turns the SQL statement into a logical execution plan that the system can process. Next, the optimizer translates the logical plan, however complex, into a physical execution plan that is tailored to, and optimal for, the current cluster and runtime. After optimization, the individual tasks no longer need to correspond directly to the original SQL query. The computing scheduling framework then arranges for these tasks to be executed reasonably and quickly. Each machine that receives tasks runs an SQL runtime (runtime engine), which interprets the physical execution plan, reads data from storage, processes it step by step, and, after shuffling, writes the results back to storage. The performance of the runtime itself is therefore critical. Because a single SQL statement may consume hundreds of terabytes of data, storage performance is also of great importance.

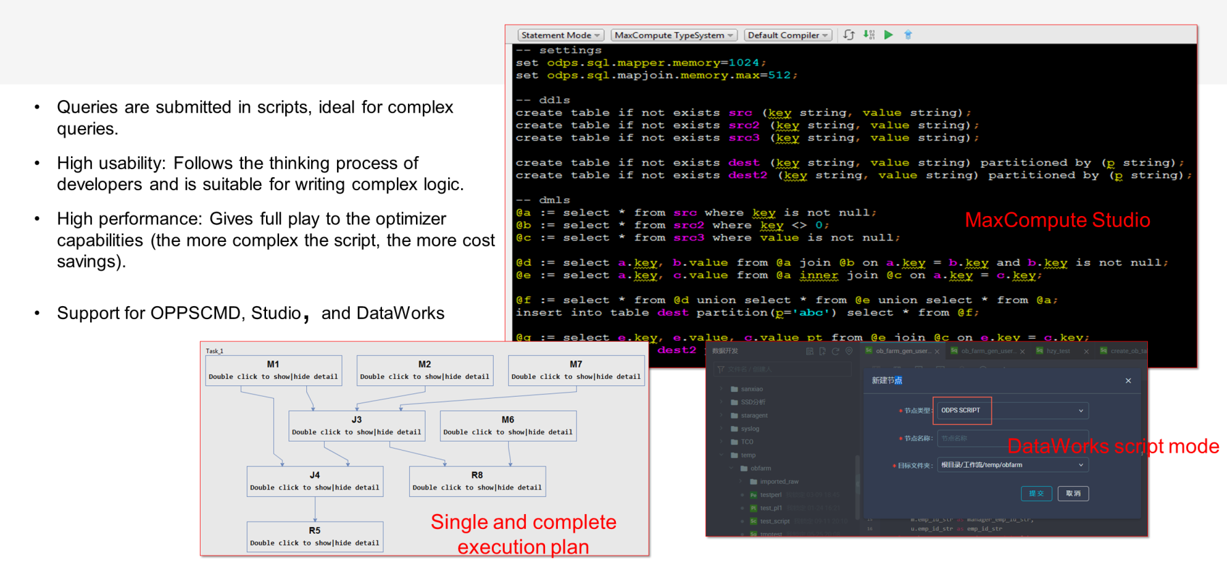

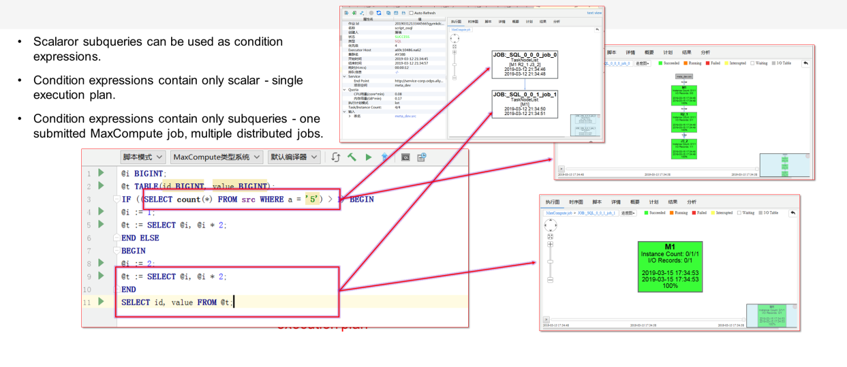

The preceding figure shows an SQL script, with configuration statements at the top and the created tables at the bottom. Each line is an SQL statement, and statements can be chained together in one script, so you do not need to write deeply nested SQL to describe very complex logic. This makes the approach more flexible and able to support more complex business scenarios. Alibaba has very complex business scenarios. Before this mode was supported, users wrote nested scripts that were complicated, convoluted, and full of repetition. When a script became unworkable, it was split up and the pieces were linked through external calls, which raised maintenance costs and added performance overhead whenever later statements had to reference the results of earlier ones. In script mode, however complex the script is, the compiler still produces a single, complete execution plan at no additional cost. The more context the optimizer sees, the more opportunities it has for optimization, so once the entire business model forms a single execution plan, it can be executed in the most efficient way. DataWorks also supports this mode. In script mode, you can write SQL much as you would write a program in C++ or Java.

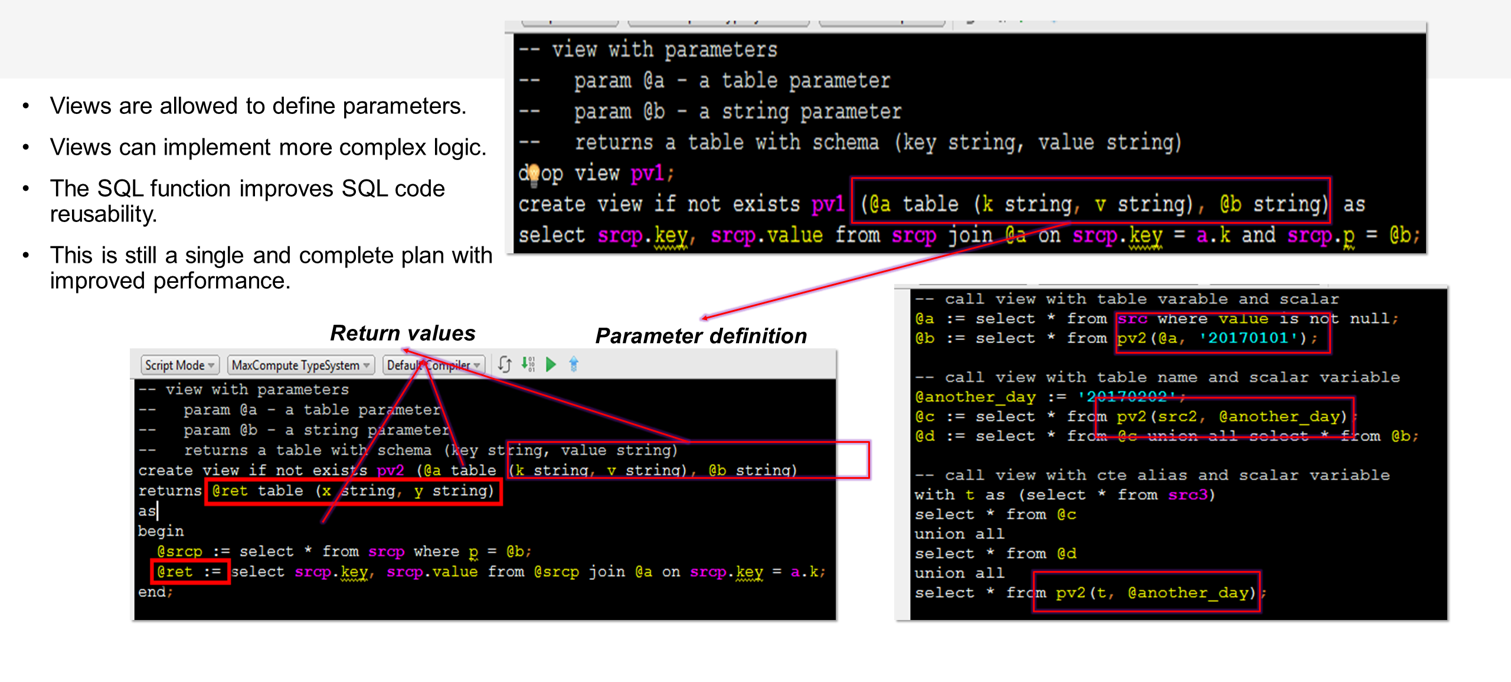

When writing in C++ or Java, developers often extract common logic into a function and put it in a module, which serves as a code reuse mechanism. Standard SQL, and big data SQL in particular, lacks this mechanism, yet it is extremely useful in Alibaba's highly complex scenarios: the underlying datasets provide the basic data needed by many departments, each of which consumes that data in different ways. MaxCompute makes it possible to extract such shared logic much like a C++ or Java function. You can encapsulate complex SQL logic and data reading in what would otherwise be ordinary views, and pass table variables to them as parameters (see the red boxes in the preceding figure). These parameterized views then behave like functions in C++ or Java. Combined with the script mode described above, parameterized views encapsulate common business logic in SQL and organize complex SQL business logic to support highly complex business scenarios.
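As a rough analogy only, the following Python sketch models a parameterized view as a function that takes a table variable plus scalar parameters and returns a derived table. It assumes tables are lists of dicts; the function `orders_by_region` and its columns are hypothetical, and real MaxCompute parameterized views are written in SQL, not Python.

```python
from typing import Dict, List

Row = Dict[str, object]
Table = List[Row]


def orders_by_region(orders: Table, region: str, min_amount: float = 0.0) -> Table:
    """Hypothetical 'parameterized view': shared filtering and projection logic
    written once and applied to whatever table variable the caller passes in."""
    return [
        {"order_id": r["order_id"], "amount": r["amount"]}
        for r in orders
        if r["region"] == region and r["amount"] >= min_amount
    ]


# Two consumers reuse the same logic with different parameters.
daily_orders = [
    {"order_id": 1, "region": "east", "amount": 120.0},
    {"order_id": 2, "region": "west", "amount": 80.0},
    {"order_id": 3, "region": "east", "amount": 40.0},
]
east_large = orders_by_region(daily_orders, "east", min_amount=100.0)
west_all = orders_by_region(daily_orders, "west")
```

The point of the analogy is that the filtering logic lives in one place; each consumer changes only the inputs, not the logic.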

Although big data SQL generally does not support IF/ELSE, there are situations where it is needed. For example, assume you need to perform a full calculation once a week and incremental calculations every day. Without IF/ELSE, you need to split the script into two and chain them serially through the scheduling framework. MaxCompute, however, lets you write IF statements directly in script mode. The condition can be an expression, including one that uses the result of a SELECT statement, and it determines which branch of SQL to execute. All of these SQL features are designed for complex application scenarios.
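The weekly-full, daily-incremental branch can be sketched in Python as follows, assuming the full calculation runs on Sundays and each calculation is a simple sum. The function names are hypothetical; in MaxCompute script mode the same decision would be an IF statement inside one SQL script rather than two scheduled jobs.

```python
import datetime
from typing import List


def full_compute(all_rows: List[int]) -> int:
    """Hypothetical full calculation: recompute the aggregate from scratch."""
    return sum(all_rows)


def incremental_compute(previous_total: int, new_rows: List[int]) -> int:
    """Hypothetical incremental calculation: fold only today's rows into the last result."""
    return previous_total + sum(new_rows)


def run_daily(day: datetime.date, previous_total: int,
              all_rows: List[int], new_rows: List[int]) -> int:
    # One script, two branches: full rebuild on Sundays, incremental update otherwise.
    if day.weekday() == 6:  # Monday == 0 ... Sunday == 6
        return full_compute(all_rows)
    return incremental_compute(previous_total, new_rows)
```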

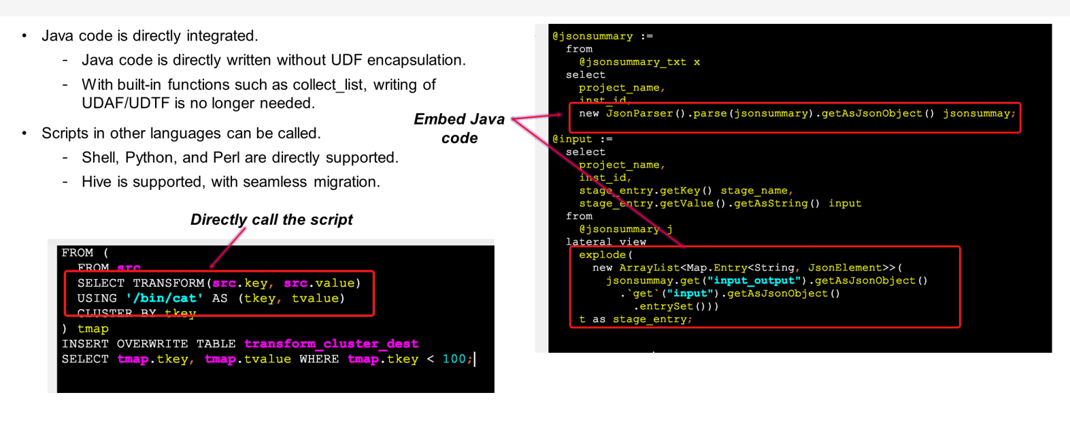

SQL generally provides basic data types, and sometimes complex data types as well, all within a given scope. MaxCompute directly supports especially complex data types. The framework on the right shows an example of seamless integration of Java and SQL without the need for UDF encapsulation. On the left, you can see SELECT TRANSFORM, which can call shell scripts directly from SQL and is fully compatible with Hive.
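To show what calling a script from SQL involves, here is a minimal Python sketch of the streaming convention used by Hive's TRANSFORM: each input row is written to the script's stdin as one tab-separated line, and each stdout line is parsed back into an output row. It assumes MaxCompute follows the same default serialization; the helper `select_transform` is hypothetical, and a small Python program stands in for the shell script.

```python
import subprocess
import sys
from typing import List, Sequence, Tuple


def select_transform(rows: Sequence[Tuple[str, ...]], command: List[str]) -> List[List[str]]:
    """Stream rows through an external program: one tab-separated line per row
    on stdin, one tab-separated line per output row on stdout."""
    payload = "".join("\t".join(r) + "\n" for r in rows)
    result = subprocess.run(command, input=payload, capture_output=True,
                            text=True, check=True)
    return [line.split("\t") for line in result.stdout.splitlines()]


# The "script": a tiny program that upper-cases the second field of each row.
UPPER_SECOND = [
    sys.executable, "-c",
    "import sys\n"
    "for line in sys.stdin:\n"
    "    k, v = line.rstrip('\\n').split('\\t')\n"
    "    print(k + '\\t' + v.upper())",
]
```

Because the contract is only text on stdin and stdout, the script can be written in any language, which is what makes this mechanism convenient for reusing existing tools.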

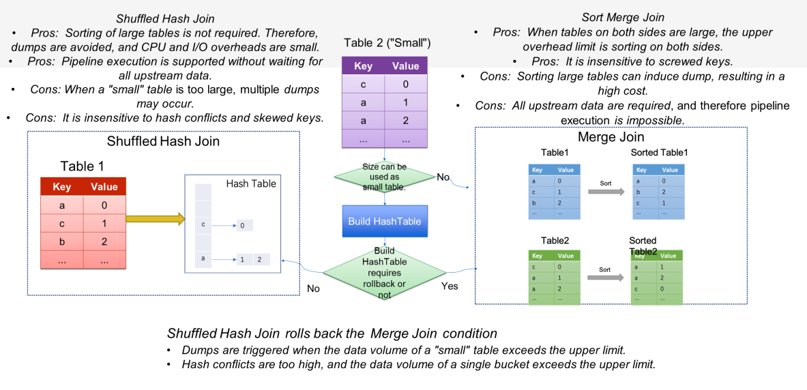

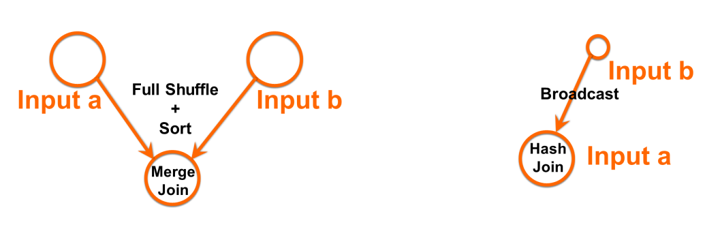

Adaptive Join covers both Hash Join and Merge Join. Hash Join usually delivers better performance, but its performance deteriorates sharply in unsuitable scenarios, especially those with many hash conflicts. Merge Join guarantees a performance floor. Adaptive Join dynamically selects the suitable algorithm for each scenario.
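The trade-off can be illustrated with a minimal Python sketch that implements both joins and picks one per input. The selection rule here (estimate the longest collision chain in a fixed-size hash table on the build side and fall back to merge join if it is too long) and its thresholds are assumptions for illustration; the talk does not describe MaxCompute's actual decision logic, which may also switch algorithms during execution rather than up front.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Pair = Tuple[int, str]          # (join key, payload)
Joined = Tuple[int, str, str]   # (join key, left payload, right payload)


def hash_join(build: List[Pair], probe: List[Pair]) -> List[Joined]:
    """Build an in-memory hash table on one side, then probe it with the other."""
    table: Dict[int, List[str]] = defaultdict(list)
    for key, val in build:
        table[key].append(val)
    return [(k, bv, pv) for k, pv in probe for bv in table.get(k, [])]


def merge_join(left: List[Pair], right: List[Pair]) -> List[Joined]:
    """Sort both sides and advance two cursors; cost stays predictable."""
    left, right = sorted(left), sorted(right)
    out: List[Joined] = []
    i = j = 0
    while i < len(left) and j < len(right):
        lk, rk = left[i][0], right[j][0]
        if lk < rk:
            i += 1
        elif lk > rk:
            j += 1
        else:
            # Find the run of equal keys on each side and emit their cross product.
            i_end, j_end = i, j
            while i_end < len(left) and left[i_end][0] == lk:
                i_end += 1
            while j_end < len(right) and right[j_end][0] == lk:
                j_end += 1
            out.extend((lk, lv, rv) for _, lv in left[i:i_end] for _, rv in right[j:j_end])
            i, j = i_end, j_end
    return out


def max_bucket_load(keys: List[int], num_buckets: int) -> int:
    """Longest collision chain if the keys were placed in a fixed-size hash table."""
    load: Dict[int, int] = defaultdict(int)
    for k in keys:
        load[hash(k) % num_buckets] += 1
    return max(load.values(), default=0)


def adaptive_join(left: List[Pair], right: List[Pair],
                  num_buckets: int = 1024, max_chain: int = 64) -> Tuple[str, List[Joined]]:
    # Hypothetical rule: use the faster hash join unless the build side would create
    # long collision chains; otherwise rely on merge join's performance floor.
    if max_bucket_load([k for k, _ in left], num_buckets) <= max_chain:
        return "hash", hash_join(left, right)
    return "merge", merge_join(left, right)
```

With evenly spread keys the sketch picks hash join; when 100 build rows share one key, it falls back to merge join and still returns all 100 matches.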

Shuffle has also been optimized for specific mega-scale systems to improve shuffling performance by 70%, enhance the performance and stability of mega-scale shared clusters, and reduce I/O pressure. The optimization methods include the following:

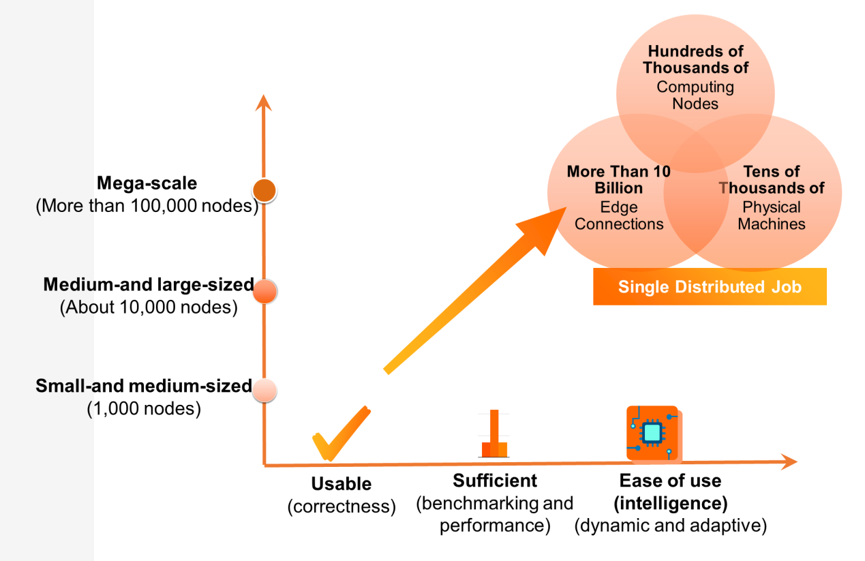

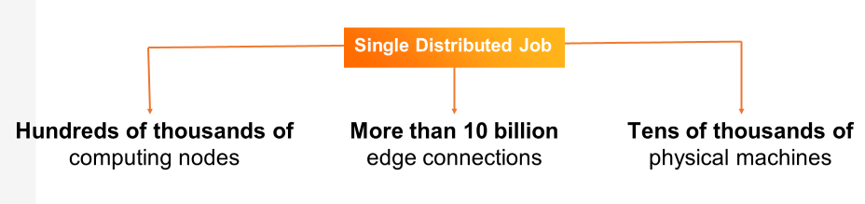

The system has evolved along two dimensions. The first is scale. As the system keeps growing, the distributed scheduling and execution system has to handle tens of millions of jobs per day. At Alibaba, a single distributed job may involve hundreds of thousands of computing nodes, tens of billions of connections, and tens of thousands of physical machines.

The second dimension is maturity. A system must go through three stages before it becomes an enterprise-grade distributed scheduling and execution system. In the first stage, the system must be usable, which emphasizes correctness. Running a job on a distributed system is very different from running it on a stand-alone system, and it is important to guarantee correct job output in the face of node failures, network failures, and disaster recovery issues, especially in a mega-scale system. In the second stage, the system must perform well: each computing system has to be honed until it delivers strong results on various standard benchmarks. In the third stage, the system should be easy to use, or intelligent, which means it adapts dynamically during execution and adjusts job execution plans according to the characteristics of each job.

The enterprise-grade distributed computation scheduling framework involves three phases:

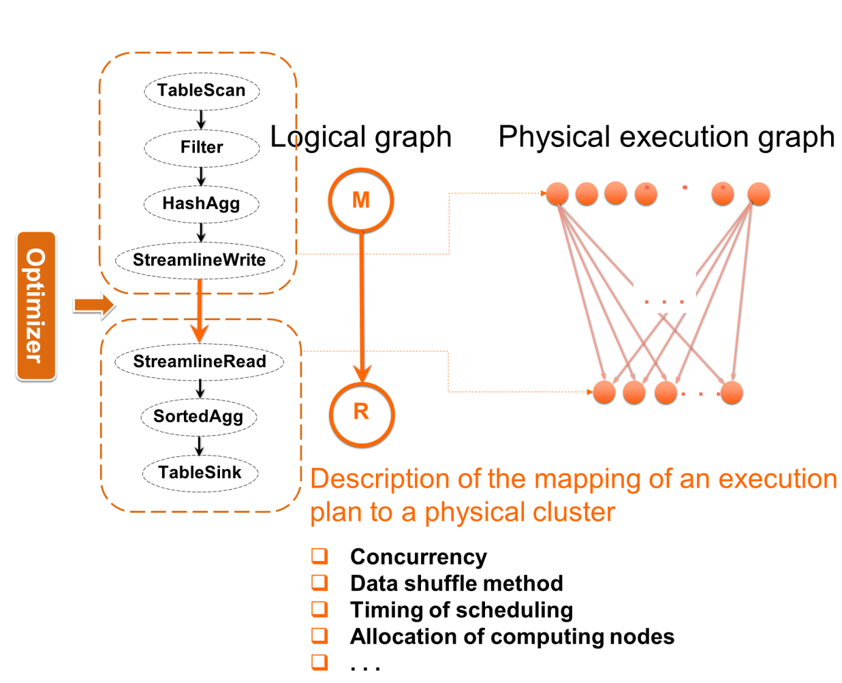

The preceding figure shows the execution process of a job in the distributed system after it leaves the optimizer, which can be understood as a process of mapping a logical graph to a physical graph.

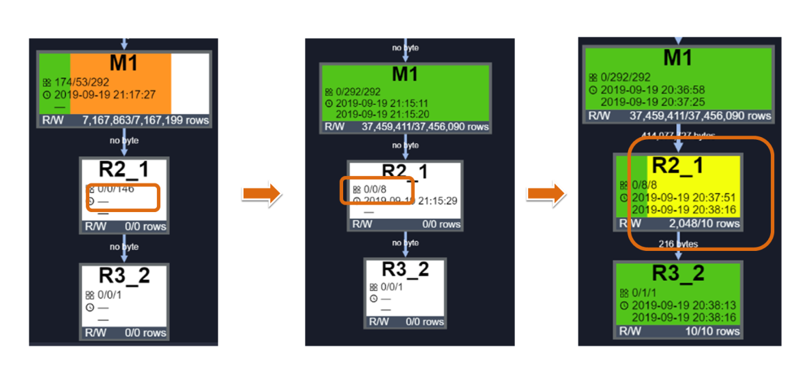

The preceding figure shows the three stages of a job. In the first stage, the job is submitted and run. In the second stage, concurrency is dynamically adjusted according to the upstream output. In the third stage, the required data is generated and the job ends.

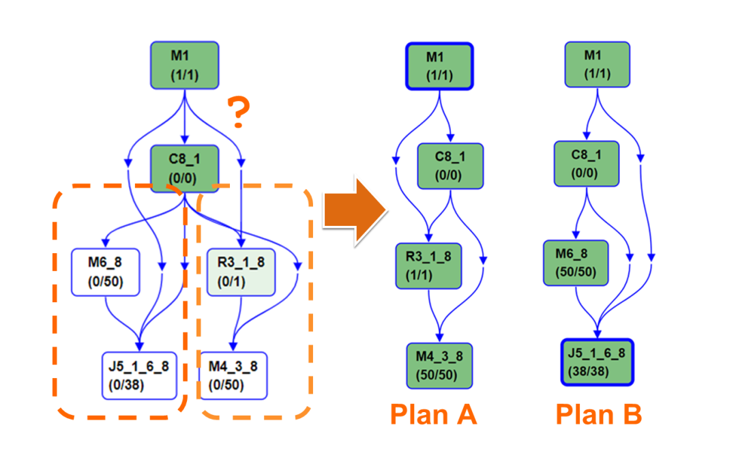

The preceding figure shows the dynamic logical graph of intelligent DAG execution, covering the Sorted Merge Join and Broadcast Join algorithms. Sorted Merge Join is a classic distributed join algorithm that supports large-scale jobs. Its reliability gives it broad applicability, but it incurs higher costs because it involves full shuffling and sorting, and shuffling can also cause data skew. Broadcast Join applies only to specific types of jobs, namely those in which one input fits in the memory of a single computing node. Using it in unsuitable situations can cause out-of-memory (OOM) errors and job failures.

Ideally, data is evenly distributed and its attributes are well understood, so the optimizer can generate the "optimal" plan, as it typically can when running benchmarks. In practice, however, source data information is often inaccurate and the attributes of intermediate data are unstable, so the attributes of the generated data cannot be estimated reliably. The optimizer is therefore allowed to provide a non-deterministic plan (Conditional Join). In this case, the optimizer generates plans for two execution paths, and the scheduling and execution framework dynamically adjusts the execution of the logical graph according to the size of the data generated upstream.
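A minimal Python sketch of the Conditional Join idea follows: both join strategies are available up front, and the choice is deferred until the upstream output actually exists, so its real size rather than an estimate drives the decision. The row-count threshold `broadcast_limit_rows` and all function names are hypothetical; a real framework would compare bytes against worker memory and would run each partition on a different node.

```python
from itertools import groupby
from typing import Dict, List, Tuple

Pair = Tuple[int, str]          # (join key, payload)
Joined = Tuple[int, str, str]   # (join key, left payload, right payload)


def broadcast_join(small: List[Pair], large: List[Pair]) -> List[Joined]:
    """Every worker would hold a full in-memory copy of `small`; `large` is not shuffled."""
    lookup: Dict[int, List[str]] = {}
    for k, v in small:
        lookup.setdefault(k, []).append(v)
    return [(k, sv, lv) for k, lv in large for sv in lookup.get(k, [])]


def sort_merge_join(left: List[Pair], right: List[Pair], partitions: int = 4) -> List[Joined]:
    """Shuffle both sides by key hash, then sort and merge each partition."""
    out: List[Joined] = []
    for p in range(partitions):
        lp = sorted(r for r in left if hash(r[0]) % partitions == p)
        rp = sorted(r for r in right if hash(r[0]) % partitions == p)
        lg = [(k, [v for _, v in g]) for k, g in groupby(lp, key=lambda r: r[0])]
        rg = [(k, [v for _, v in g]) for k, g in groupby(rp, key=lambda r: r[0])]
        i = j = 0
        while i < len(lg) and j < len(rg):
            if lg[i][0] < rg[j][0]:
                i += 1
            elif lg[i][0] > rg[j][0]:
                j += 1
            else:
                out.extend((lg[i][0], lv, rv) for lv in lg[i][1] for rv in rg[j][1])
                i += 1
                j += 1
    return out


def conditional_join(left: List[Pair], right: List[Pair],
                     broadcast_limit_rows: int = 1000) -> Tuple[str, List[Joined]]:
    # Decided at run time, after the upstream stage has produced `left`.
    if len(left) <= broadcast_limit_rows:
        return "broadcast", broadcast_join(left, right)
    return "sort_merge", sort_merge_join(left, right)
```

Both paths return the same rows; only the cost profile differs, which is why the choice can safely be left to the scheduler.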

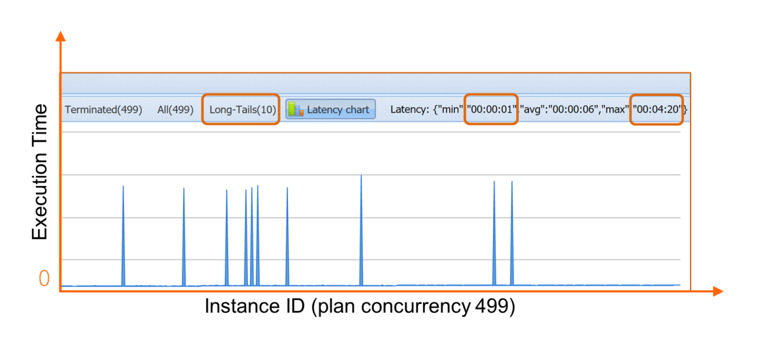

The preceding figure shows an example of concurrency adjustment. A simple method derives the concurrency directly from the total amount of upstream data, using the average data volume per instance, and supports only downward adjustment. When the data is skewed, however, this method no longer applies. Two new scheduling methods have been introduced to address this problem.

The agility of scheduling is crucial for jobs at this large scale. Alibaba wants to allow a job in a large cluster to understand the status of each computing node and physical machine to enable intelligent fault tolerance and predictive fault tolerance. As jobs become larger and larger, the performance improvement delivered by a good scheduling framework will be increasingly significant.
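To make the limitation of the simple concurrency adjustment concrete, here is a minimal Python sketch of the average-based method next to one common skew-aware alternative: coalescing adjacent small partitions up to a target size. The coalescing step is an illustrative technique used in several engines, not necessarily one of the two methods MaxCompute introduced; partition sizes and function names are hypothetical.

```python
import math
from typing import List


def average_based_concurrency(partition_sizes: List[int], target_size: int,
                              current: int) -> int:
    """Simple method: size the downstream stage from the total upstream output,
    assuming data is spread evenly. It can only lower the concurrency."""
    needed = max(1, math.ceil(sum(partition_sizes) / target_size))
    return min(current, needed)


def coalesce_partitions(partition_sizes: List[int], target_size: int) -> List[List[int]]:
    """Skew-aware alternative (illustrative): group adjacent partitions so each
    downstream instance reads about `target_size`; big partitions stay alone."""
    groups: List[List[int]] = []
    group: List[int] = []
    group_size = 0
    for idx, size in enumerate(partition_sizes):
        if group and group_size + size > target_size:
            groups.append(group)
            group, group_size = [], 0
        group.append(idx)
        group_size += size
    if group:
        groups.append(group)
    return groups
```

With sizes `[10, 10, 10, 10, 200, 10, 10]`, a target of 50, and 7 current instances, the average-based method picks 6 instances as if each would read about 43 units, although one instance still receives the partition of size 200. Coalescing produces `[[0, 1, 2, 3], [4], [5, 6]]`, isolating the skewed partition; splitting oversized partitions would be a separate step.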

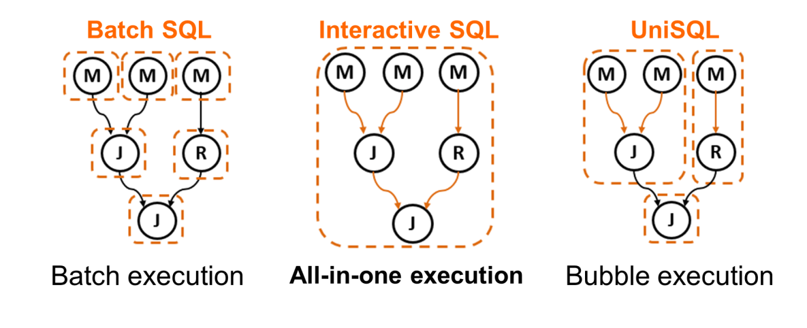

As the foundation of the Apsara system, Alibaba's computing platform runs jobs other than SQL as well. In the most typical scenario, SQL statements are executed in batch mode. Batch execution and all-in-one execution represent two extremes of resource utilization and performance optimization, and a user concerned about both execution performance and resource utilization will try to strike a balance between them. For this purpose, Alibaba supports bubble scheduling, which allows subgraphs of a job to be scheduled simultaneously and downstream subgraphs to be scheduled in a distributed manner. The benefit differs between SQL queries. For example, for TPCH11, bubble scheduling improves performance by 66% compared to batch scheduling, and achieves 95% of the performance of all-in-one scheduling while using one-third of the resources.

The execution of an SQL query both starts and ends at the storage layer: reading data marks its start, and writing data marks its end. As the foundation of this whole process, the storage engine, AliOrc, plays an important role.

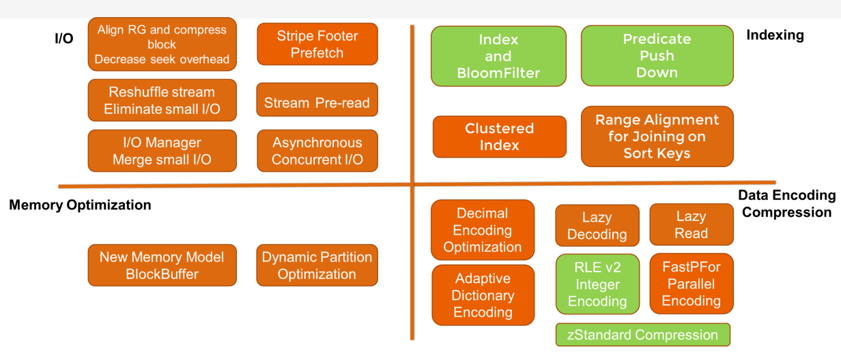

AliOrc is a columnar storage engine developed from Apache ORC. On this basis, Alibaba has made many deep optimizations in areas including I/O, memory, indexing, and data encoding and compression. Some of these optimizations have been contributed back to the community.

This next-generation columnar storage engine uses the following technologies:

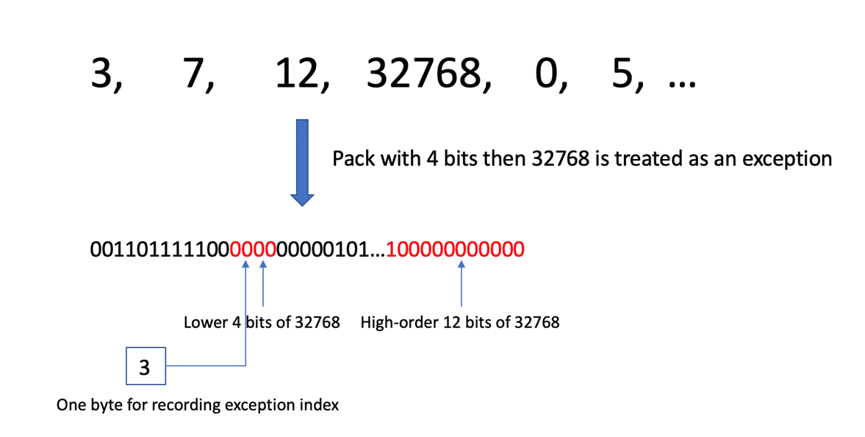

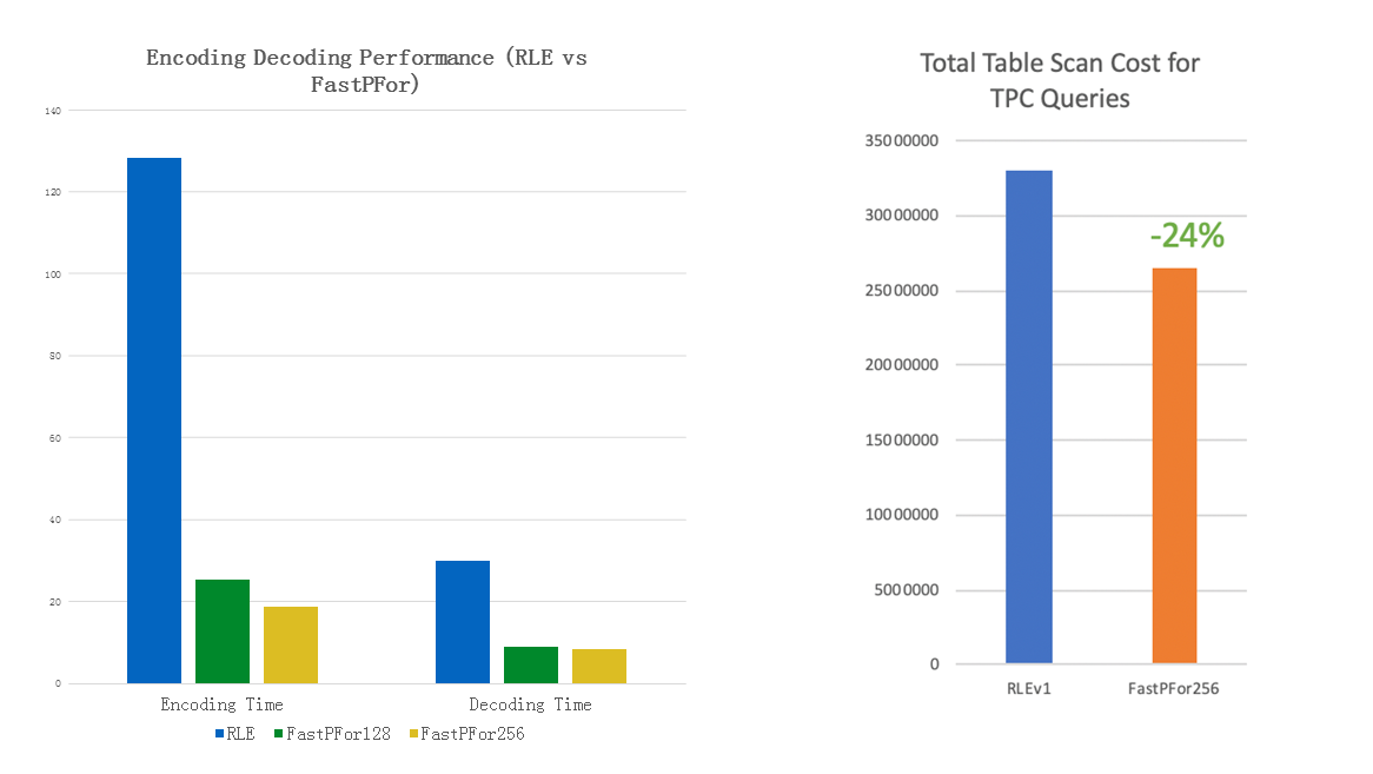

When a mix of large and small integers is stored directly, every value takes 4 bytes, so small numbers carry many meaningless leading zeros. The main idea of parallel encoding is to strip this redundant information, keep only the meaningful bits, and pack them together. A key advantage of this encoding method is that it can be parallelized. Some extensions have also been introduced, including optimizations for ordered data and data encoding. In addition, the encoding storage format has been redesigned to be more conducive to memory alignment and columnar storage.

Test results show that this encoding technology is 4 to 6 times faster than traditional run-length encoding, improves the compression ratio by about 10%, and increases TPC Benchmark table scanning efficiency by 24%. These gains come from SIMD processing: a single 256-bit AVX2 instruction can process four 64-bit integers or eight 32-bit integers at once. In addition, function template expansion is used to minimize loop overhead and branch mispredictions.
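The core of the bit-packing idea behind parallel encoding can be sketched in Python as below: compute the smallest bit width that holds every value in a batch, then lay the values out back to back at that width. This is a scalar sketch for non-negative integers only; it does not reflect AliOrc's actual on-disk layout, its handling of signed or ordered data, or its SIMD kernels, and the function names are hypothetical.

```python
from typing import List, Tuple


def bit_width(values: List[int]) -> int:
    """Smallest number of bits that can hold every non-negative value in the batch."""
    return max(1, max(values, default=0).bit_length())


def pack(values: List[int]) -> Tuple[int, bytes]:
    """Drop the leading zeros: store each value in exactly `width` bits."""
    width = bit_width(values)
    acc = 0
    for i, v in enumerate(values):
        acc |= v << (i * width)  # value i starts at bit offset i * width
    nbytes = (len(values) * width + 7) // 8
    return width, acc.to_bytes(nbytes, "little")


def unpack(width: int, data: bytes, count: int) -> List[int]:
    """Each value sits at a fixed offset, so values can be extracted independently."""
    acc = int.from_bytes(data, "little")
    mask = (1 << width) - 1
    return [(acc >> (i * width)) & mask for i in range(count)]
```

For `[3, 1, 7, 0, 5, 2, 6, 4]`, every value fits in 3 bits, so eight values occupy 3 bytes instead of 32 bytes as 4-byte integers. Because value `i` always starts at bit `i * width`, a vectorized decoder can extract several values per instruction instead of walking them one by one, which is the property SIMD decoding relies on.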

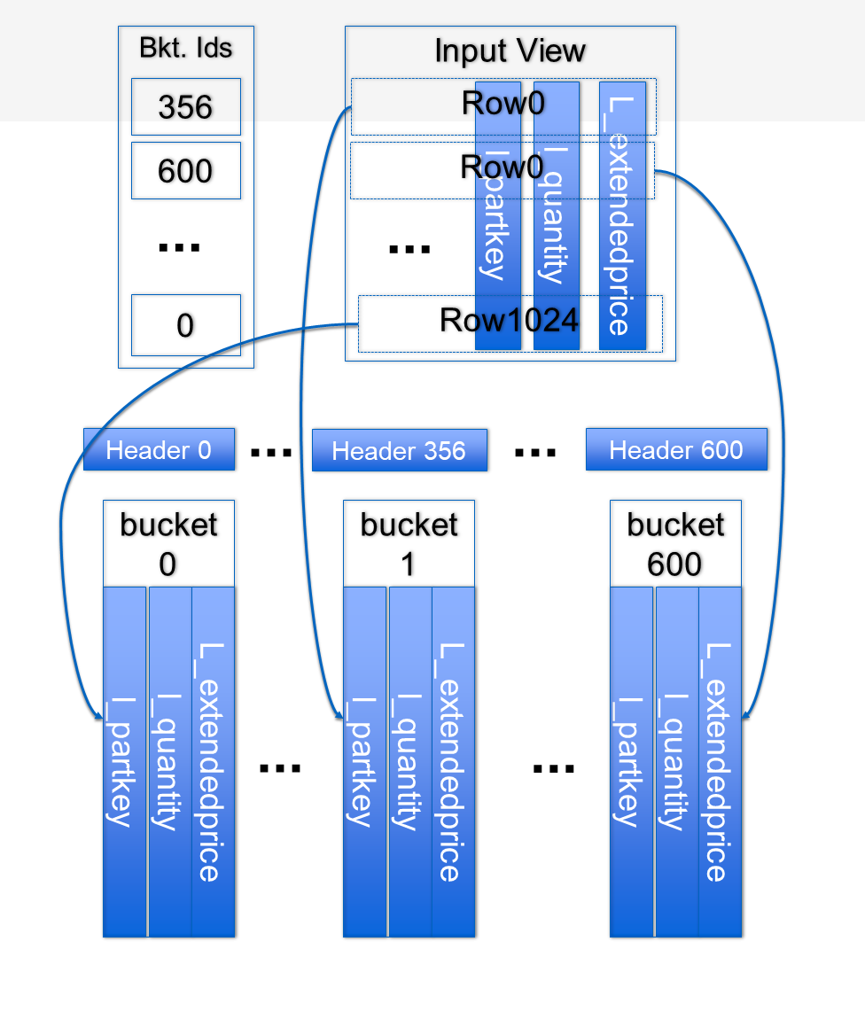

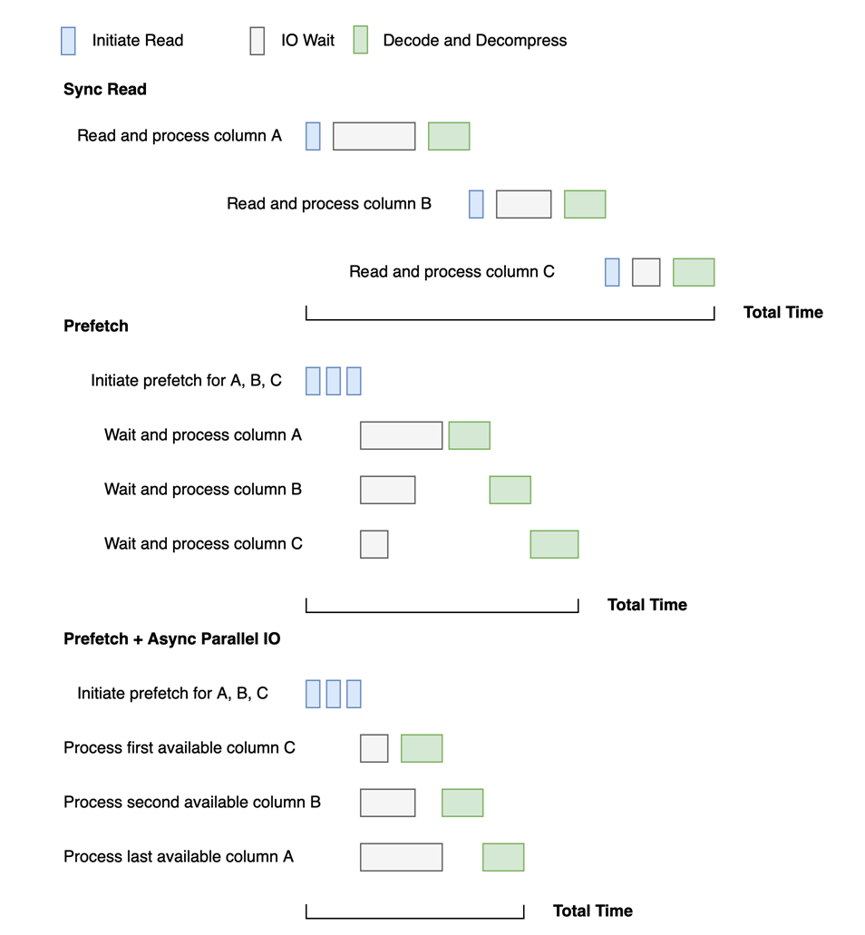

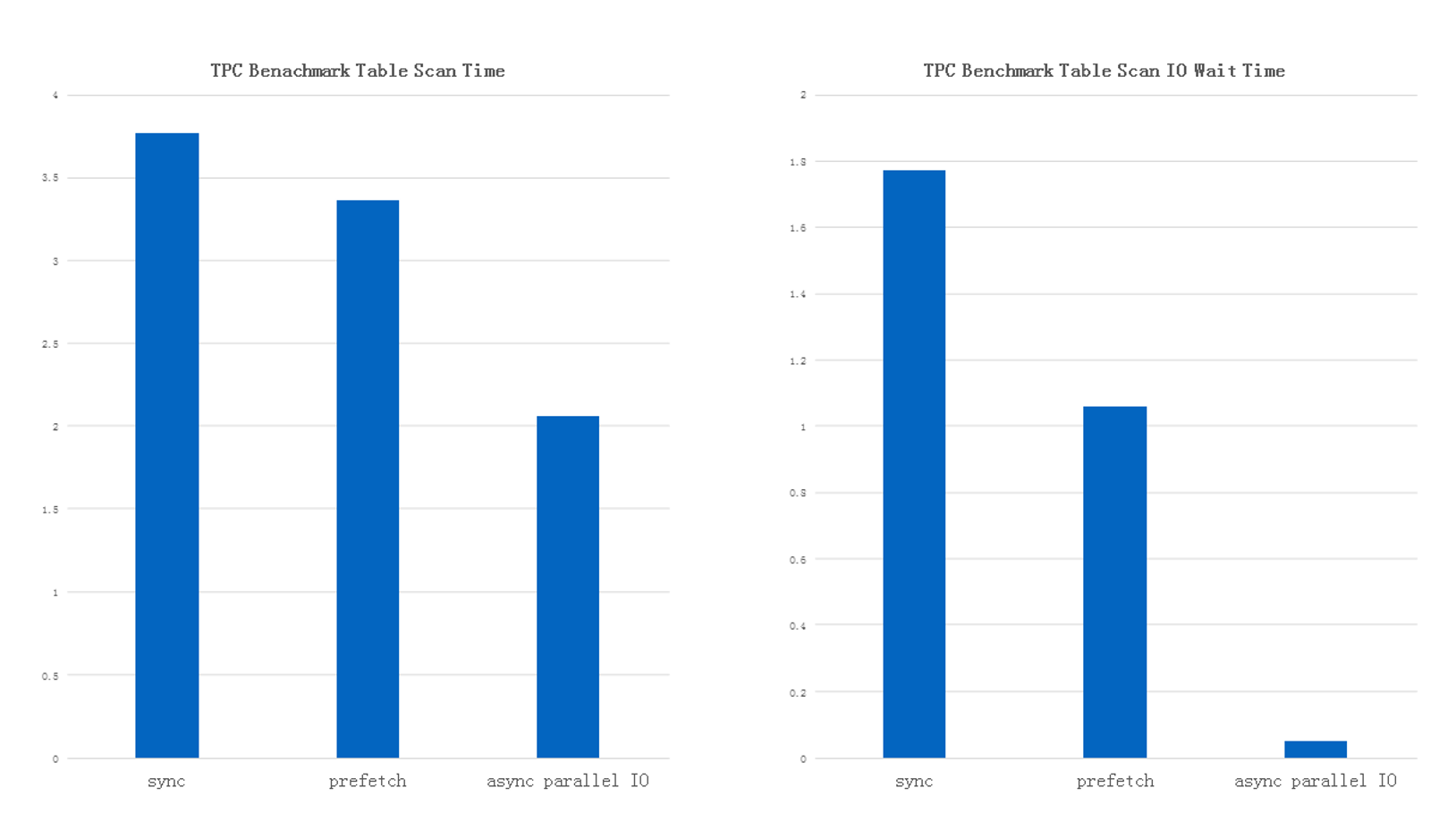

AliOrc is a columnar storage engine, which means that data in the same column is stored together. The advantage is that the engine reads only the selected columns rather than all of them. Suppose there are three columns, A, B, and C, as shown in the preceding figure. The earliest I/O model was serialized and had considerable wait time. Alibaba therefore developed an improved Prefetch model in which I/O requests do not have to be sent one at a time: the three read requests are sent out together at the very beginning, but the system still has to wait for their responses one by one, so some I/O wait time remains. The current model combines prefetching with async parallel I/O, which parallelizes all I/O operations. After the three read requests are sent together, the system no longer waits for them in the original order of A, B, and C. Instead, it extracts and decodes the data in the order it is returned, which reduces I/O wait time to a minimum.

As shown in the preceding figure, compared to sync read, async parallel read reduces I/O wait time by 97% and end-to-end time by 45%.
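A minimal `asyncio` sketch of the async parallel model: all column reads are issued at once, and each column is decoded as soon as its data arrives rather than in A, B, C request order. The simulated latencies, `read_column`, and `decode` are hypothetical stand-ins for remote reads and real decompression; this only illustrates the scheduling pattern, not AliOrc's I/O layer.

```python
import asyncio
import time
from typing import Dict, List, Tuple


async def read_column(name: str, latency_s: float) -> bytes:
    """Hypothetical stand-in for one remote column-chunk read with a given latency."""
    await asyncio.sleep(latency_s)
    return name.encode()


def decode(raw: bytes) -> str:
    """Placeholder for real decompression and decoding work."""
    return raw.decode()


async def async_parallel_scan(latencies: Dict[str, float]) -> Tuple[List[str], float]:
    start = time.perf_counter()
    # Issue every read up front instead of one request after another.
    tasks = [asyncio.create_task(read_column(n, lat)) for n, lat in latencies.items()]
    decoded: List[str] = []
    # Decode each column in completion order, not in request order.
    for fut in asyncio.as_completed(tasks):
        decoded.append(decode(await fut))
    return decoded, time.perf_counter() - start
```

With latencies of 0.15 s, 0.05 s, and 0.10 s for A, B, and C, the columns are decoded in the order B, C, A, and the total time is close to the slowest single read rather than the sum of all three.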

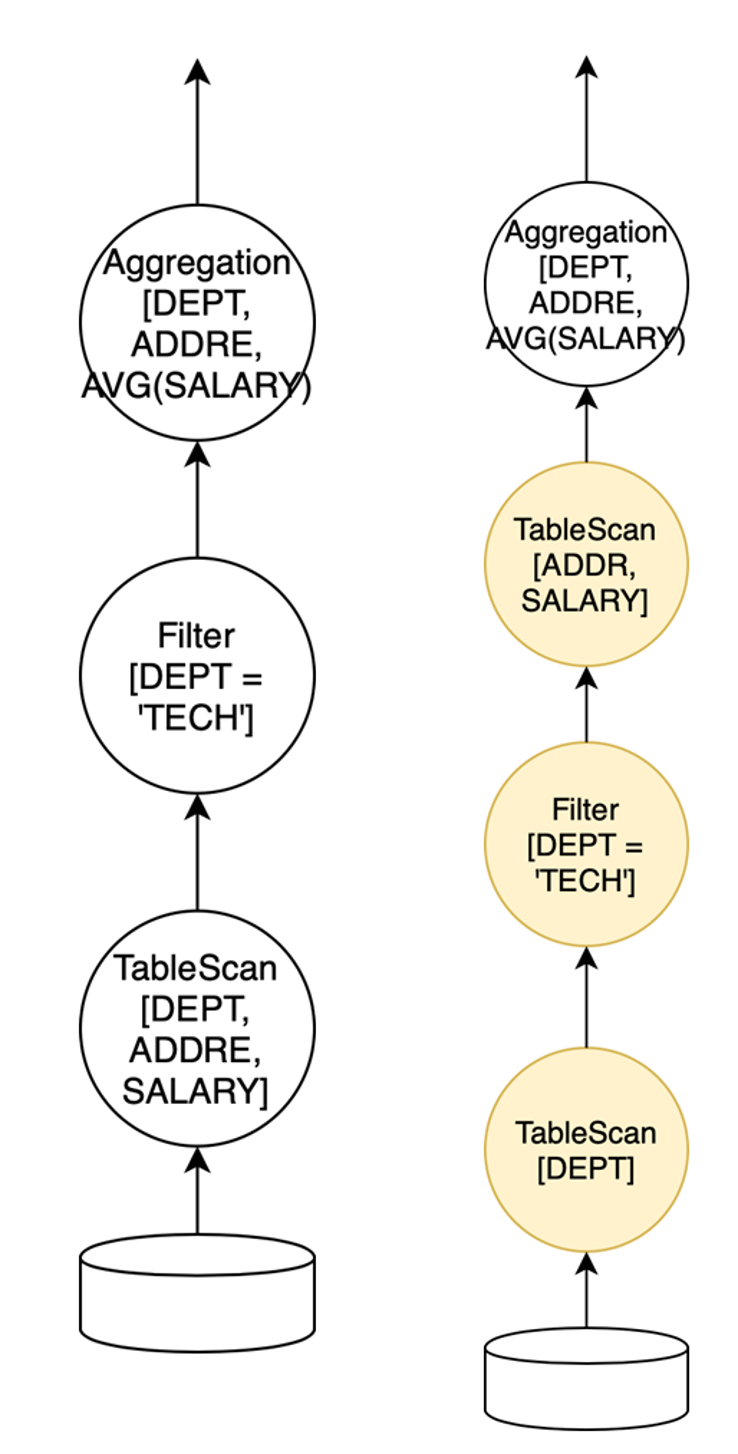

Beyond reducing the amount of data read, the key to further improving performance is reducing the amount of data decoding and decompression.

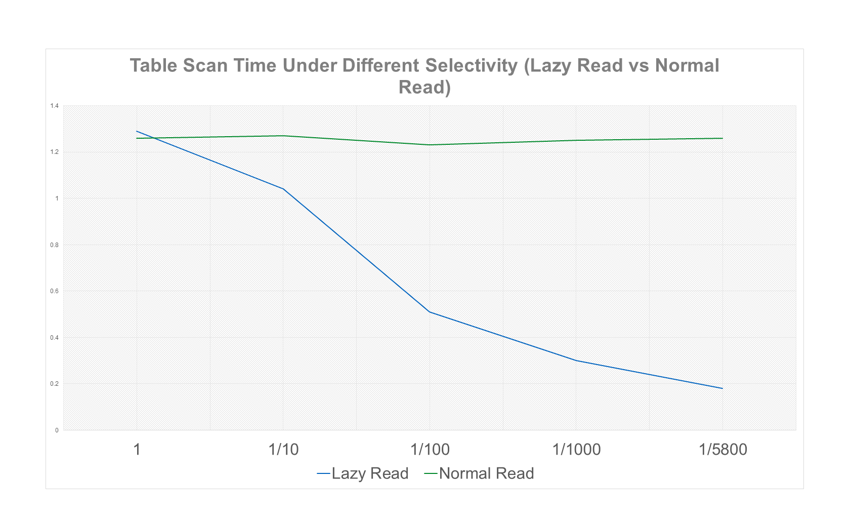

The preceding figure shows an example of lazy read. By reading the DEPT column first and deferring the reading of the ADDRESS and SALARY columns until after filtering, lazy read skips data for rows that are filtered out, significantly reducing unnecessary reads.
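The difference between normal and lazy read can be sketched in Python with columns held as lists, using a counter of values touched as a rough proxy for I/O and decoding work. The column names follow the figure's example, but the data, the counter, and the function names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Columns = Dict[str, List[object]]
Rows = List[Dict[str, object]]


def eager_read(cols: Columns, filter_col: str, pred: Callable[[object], bool],
               out_cols: List[str]) -> Tuple[Rows, int]:
    """Normal read: materialize every requested column for every row, then filter."""
    n = len(cols[filter_col])
    rows = [{c: cols[c][i] for c in [filter_col] + out_cols} for i in range(n)]
    values_read = n * (1 + len(out_cols))
    return [r for r in rows if pred(r[filter_col])], values_read


def lazy_read(cols: Columns, filter_col: str, pred: Callable[[object], bool],
              out_cols: List[str]) -> Tuple[Rows, int]:
    """Lazy read: scan only the filter column, then fetch other columns for survivors."""
    keep = [i for i, v in enumerate(cols[filter_col]) if pred(v)]
    rows = [{filter_col: cols[filter_col][i], **{c: cols[c][i] for c in out_cols}}
            for i in keep]
    values_read = len(cols[filter_col]) + len(keep) * len(out_cols)
    return rows, values_read


employees = {
    "DEPT": ["R&D", "Sales", "R&D", "HR"],
    "ADDRESS": ["a1", "a2", "a3", "a4"],
    "SALARY": [100, 90, 120, 80],
}
```

Filtering `employees` on `DEPT == "R&D"` returns the same two rows either way, but the eager path touches 12 values while the lazy path touches 8; the gap widens as the filter discards more rows.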

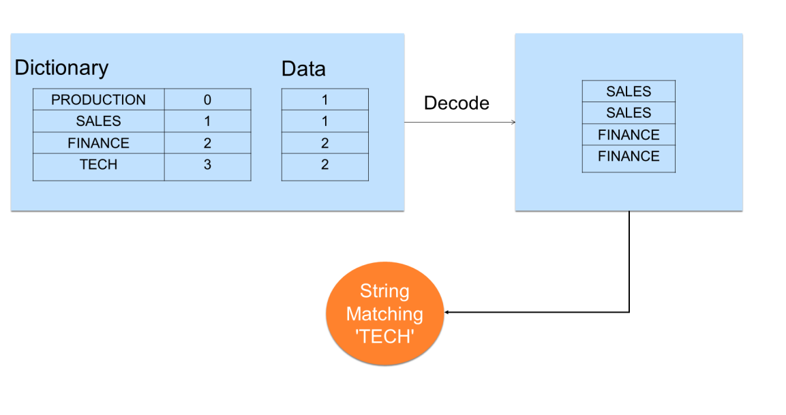

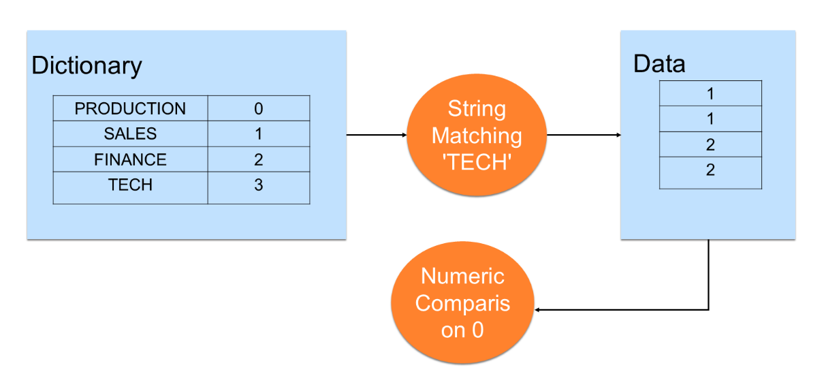

Dictionary encoding is used for string-type columns: each distinct string value is assigned an ID, and only the IDs are stored instead of the whole strings. However, decoding dictionary-encoded data is time-consuming. Therefore, the following improvement has been made:

Lazy decoding skips the decoding step: the filter is matched directly against the dictionary, and the matching IDs are then used to scan the data column. This reduces the number of string matches and the time spent on dictionary decoding.

The preceding figure compares lazy read with normal read. The horizontal axis shows the selectivity of the filter, where "1" indicates no filtering, and the vertical axis shows the time spent. With lazy read, the volume of data read decreases as the filter becomes more selective, and the read time decreases significantly.
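The dictionary encoding and lazy decoding described above can be sketched in Python as follows. The normal path decodes every row back to a string before testing it; the lazy path tests each distinct dictionary entry once and then scans only integer IDs. The sample values and function names are hypothetical, and the sketch ignores AliOrc's actual storage format.

```python
from typing import Callable, Dict, List, Set, Tuple


def dict_encode(values: List[str]) -> Tuple[List[str], List[int]]:
    """Assign each distinct string an ID and store IDs instead of the strings."""
    dictionary: List[str] = []
    ids_of: Dict[str, int] = {}
    encoded: List[int] = []
    for v in values:
        if v not in ids_of:
            ids_of[v] = len(dictionary)
            dictionary.append(v)
        encoded.append(ids_of[v])
    return dictionary, encoded


def filter_decoded(dictionary: List[str], encoded: List[int],
                   pred: Callable[[str], bool]) -> List[int]:
    """Normal path: decode every row to its string, then evaluate the predicate."""
    return [row for row, i in enumerate(encoded) if pred(dictionary[i])]


def filter_lazy(dictionary: List[str], encoded: List[int],
                pred: Callable[[str], bool]) -> List[int]:
    """Lazy decoding: evaluate the predicate once per dictionary entry,
    then scan the integer IDs without materializing any strings."""
    matching_ids: Set[int] = {i for i, s in enumerate(dictionary) if pred(s)}
    return [row for row, i in enumerate(encoded) if i in matching_ids]
```

For a column such as `["beijing", "hangzhou", "beijing", "shanghai", "hangzhou", "beijing"]`, both paths return rows 1 and 4 for a "starts with h" filter, but the normal path runs six string comparisons while the lazy path runs three, one per distinct value.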
