By Li Chao, Senior Technical Expert at Alibaba Cloud Intelligence

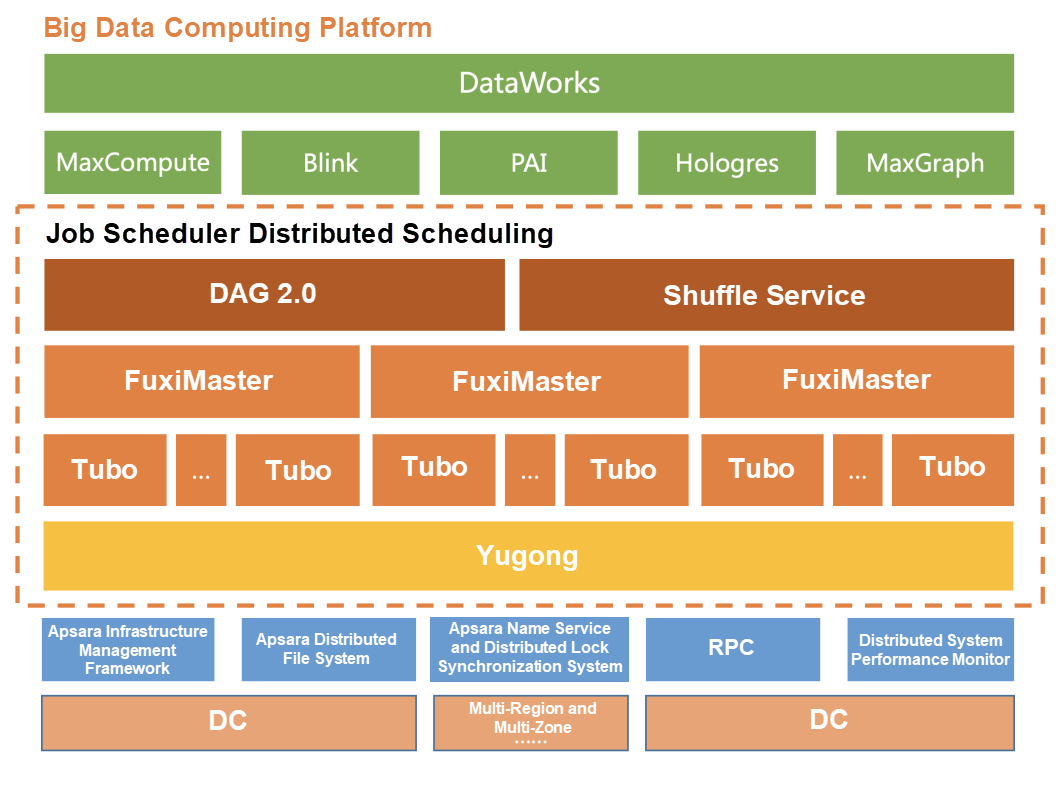

Job Scheduler is one of the three major services created when Apsara was founded 10 years ago. The other two services were the Apsara Distributed File System and Distributed Computing (MaxCompute). Job Scheduler was designed to schedule large-scale distributed resources, essentially for multi-target optimal matching.

As the business requirements of the Alibaba economy and Alibaba Cloud grew, especially during the Double 11 Global Shopping Festival, Job Scheduler evolved from a standalone resource scheduler (similar to Hadoop YARN) to a core scheduling service of the big data computing platform. It covers data scheduling (Data Placement), resource scheduling (Resource Manager), computing job scheduling (Application Manager), local micro autonomous scheduling (the single server scheduling described in this article), and other functions. It is dedicated to building differentiated capabilities superior to the mainstream capabilities in each sector.

Job Scheduler has made technical progress and breakthroughs throughout the past 10 years, such as the 5K project in 2013, the Sort Benchmark world championship in 2015, large-scale co-location of online services and offline tasks in 2017, and the release of Yugong and the acceptance of its paper by VLDB in 2019. By considering big data and cloud computing scheduling challenges, this article describes the progress Job Scheduler has made in different sectors and introduces Job Scheduler 2.0.

Over the past 10 years, cloud computing has developed exponentially and the big data industry has transitioned from traditional on-premises Hadoop clusters to a reliance on elastic and cost-effective computing resources on the cloud. Having earned the trust of many big data customers, Alibaba's big data system shoulders a great responsibility. To meet the needs of our customers, we launched MaxCompute, a large-scale, multi-scenario, cost-effective, and O&M-free general-purpose computing system.

During each Double 11 over the past 10 years, MaxCompute has supported the vigorous development of Alibaba's big data and the increase in physical servers in a cluster from several hundred to 100,000.

Both online services and offline tasks need to automatically match massive resource pools to a large number of diverse computing requirements from customers. To achieve this, a scheduling system is required. This article describes the evolution of the Alibaba big data scheduling system Job Scheduler to 2.0. First, I will introduce some concepts.

In 2013, Job Scheduler reconstructed its system architecture in the Apsara 5K project and solved online problems of scale, performance, utilization, and fault tolerance. In 2015, it won four championships in the Sort Benchmark competition. This showed that Job Scheduler 1.0 had matured.

In 2019, Job Scheduler reconstructed the technical details of the system architecture, and Job Scheduler 2.0 was released. Job Scheduler 2.0 is our next-generation distributed scheduling system for data, resource, computing, and single server scheduling. After comprehensive technical upgrades, Job Scheduler 2.0 can meet scheduling requirements in new business scenarios involving full-area data layout, decentralized scheduling, online and offline task co-location, and dynamic computing.

Alibaba has deployed DCs all around the world. A copy of the local transaction order information is generated every day for each region and stored in the nearest DC. The Beijing DC runs a scheduled task every day to collect statistics about all order information in the world, so it needs to read transaction data from other DCs. When data is produced and consumed in different DCs, cross-DC data dependencies occur.

Figure Above: Alibaba Global DCs

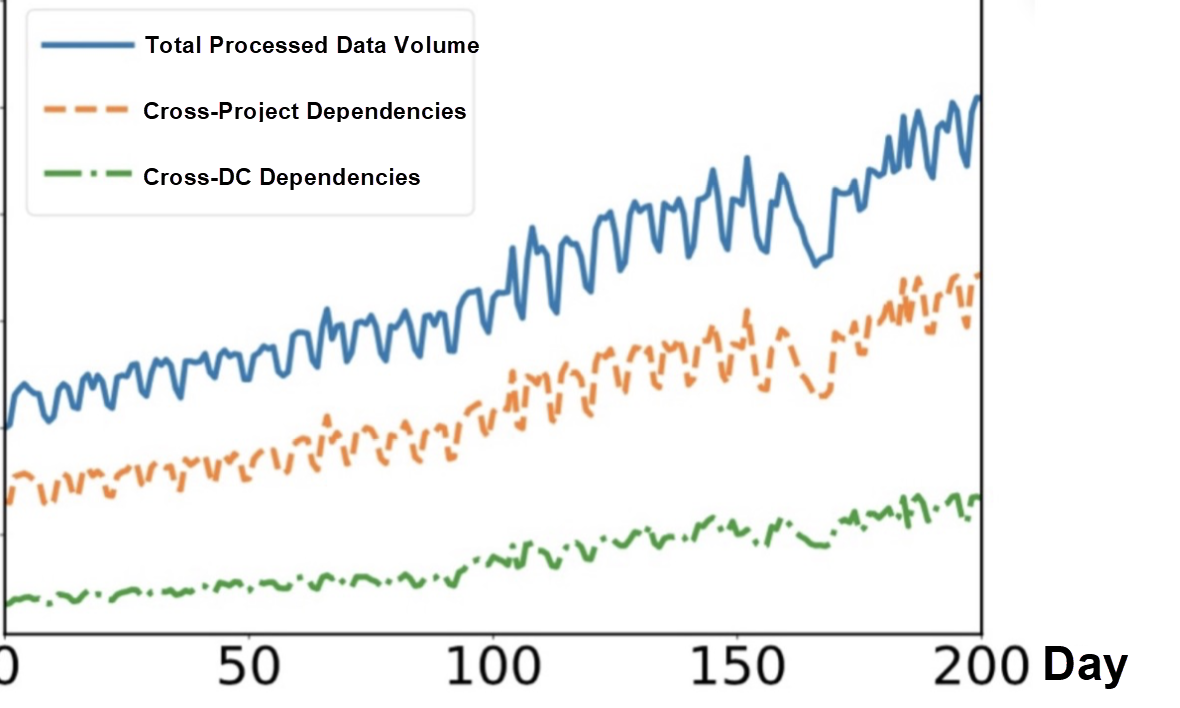

MaxCompute runs tens of millions of computing jobs every day and processes data volumes in the exabytes. These computing jobs and data are distributed across global DCs, and complex business dependencies result in a large number of cross-DC dependencies. Compared with intra-DC networks, cross-DC networks, and especially cross-region networks, are expensive and feature low bandwidth, high latency, and low stability. For example, the latency of intra-DC networks is typically less than 100 μs, whereas the latency of cross-region networks can reach dozens of milliseconds. Therefore, it is important for hyperscale computing platforms, such as MaxCompute, to efficiently convert cross-DC dependencies into intra-DC data dependencies in order to reduce cross-DC network bandwidth consumption, lower costs, and improve system efficiency.

Figure Above: Increase in MaxCompute Data and Dependencies

To convert cross-DC dependencies to intra-DC data dependencies, we add a scheduling layer to schedule data and computing jobs between DCs. This scheduling layer is independent of intra-DC scheduling and aims to find an optimal balance among cross-region storage redundancy, computing, long-distance transmission bandwidth, and performance. This scheduling layer provides cross-DC data caching, overall business layout, and job-level scheduling policies.

When mutual dependencies between projects are concentrated in a few jobs and the input data volume of these jobs is much greater than their output data volume, job-level scheduling can be used to schedule these jobs to the DCs where the relevant data is stored and then remotely write the job outputs to the original DCs. This is more effective than data caching and overall project migration. To use job-level scheduling, however, we need to solve certain problems, such as how to predict input and output data volumes and the resource consumption of jobs before they run and how to prevent job operations and the user experience from being affected when jobs are scheduled to remote DCs.

Essentially, data caching, business layout, and job-level scheduling all aim to reduce cross-DC dependencies, optimize the data locality of jobs, and reduce network bandwidth consumption in a cross-region multi-DC system.

We propose cross-region or cross-DC data caching to strike an optimal balance between data storage and bandwidth when redundant storage is limited. First, we designed an efficient algorithm based on MaxCompute job and data features. This algorithm can automatically add data to and remove data from the cache based on the historical workload of jobs and their data size and distribution.

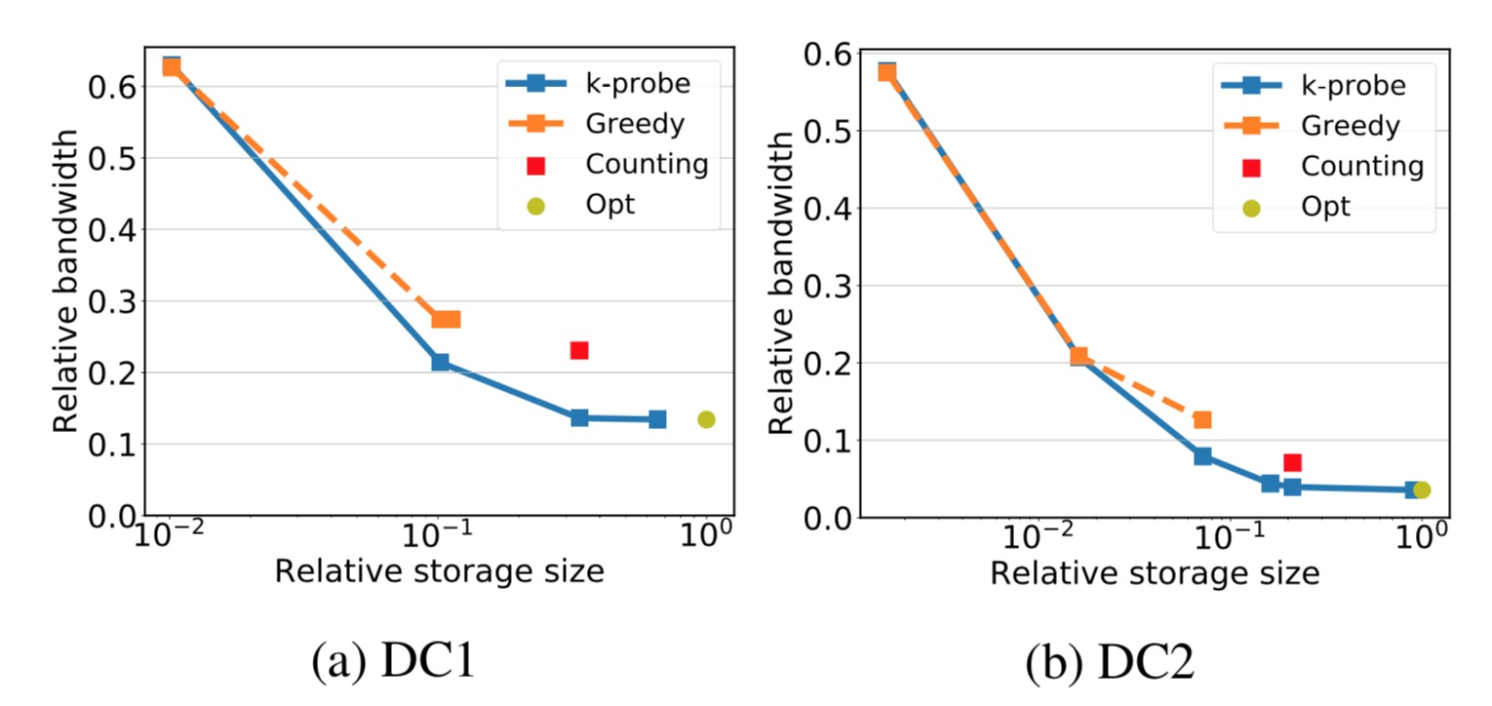

We researched multiple data caching algorithms and compared them. The following figure shows the benefits of different data caching algorithms. The horizontal axis indicates the redundant storage size, and the vertical axis indicates the consumed bandwidth. When the redundant storage increases, the bandwidth cost and benefit-cost ratio (BCR) both decrease. We finally chose the k-probe algorithm, which strikes an optimal balance between storage and bandwidth.
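The storage-versus-bandwidth trade-off described above can be illustrated with a small sketch. This is not the k-probe algorithm, whose details are not given here; it is a plain greedy heuristic that caches the remote tables saving the most bandwidth per gigabyte of redundant storage. The `RemoteTable` type, the `pick_cache` function, the table names, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RemoteTable:
    name: str
    size_gb: float          # redundant storage needed to keep one cached copy
    remote_read_gb: float   # expected cross-DC reads per day without a cache


def pick_cache(tables, budget_gb):
    """Greedy heuristic (an assumption, not k-probe): prefer tables that
    save the most cross-DC bandwidth per GB of redundant storage."""
    ranked = sorted(tables, key=lambda t: t.remote_read_gb / t.size_gb, reverse=True)
    chosen, used = [], 0.0
    for table in ranked:
        if used + table.size_gb <= budget_gb:
            chosen.append(table.name)
            used += table.size_gb
    return chosen, used


# Hypothetical workload derived from historical job statistics.
tables = [
    RemoteTable("orders_daily", size_gb=100, remote_read_gb=900),
    RemoteTable("user_profile", size_gb=50, remote_read_gb=100),
    RemoteTable("click_log", size_gb=400, remote_read_gb=800),
]
chosen, used = pick_cache(tables, budget_gb=200)
print(chosen, used)
```

With a 200 GB budget the sketch caches the two smaller tables and leaves the large, rarely re-read log remote. A production policy would also have to account for the bandwidth spent refreshing cached copies, which this sketch ignores.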

With the continuous development of upper-layer businesses, their requirements for underlying resources and data requirements also change. For example, when the cross-DC dependencies of a cluster increase rapidly, data caching cannot completely ensure local reading and writing and a large amount of cross-DC traffic will be generated. Therefore, we need to periodically analyze the business layout. Based on businesses' requirements for computing and data resources and cluster and DC planning, we can migrate businesses to reduce cross-DC dependencies and balance the loads of clusters.

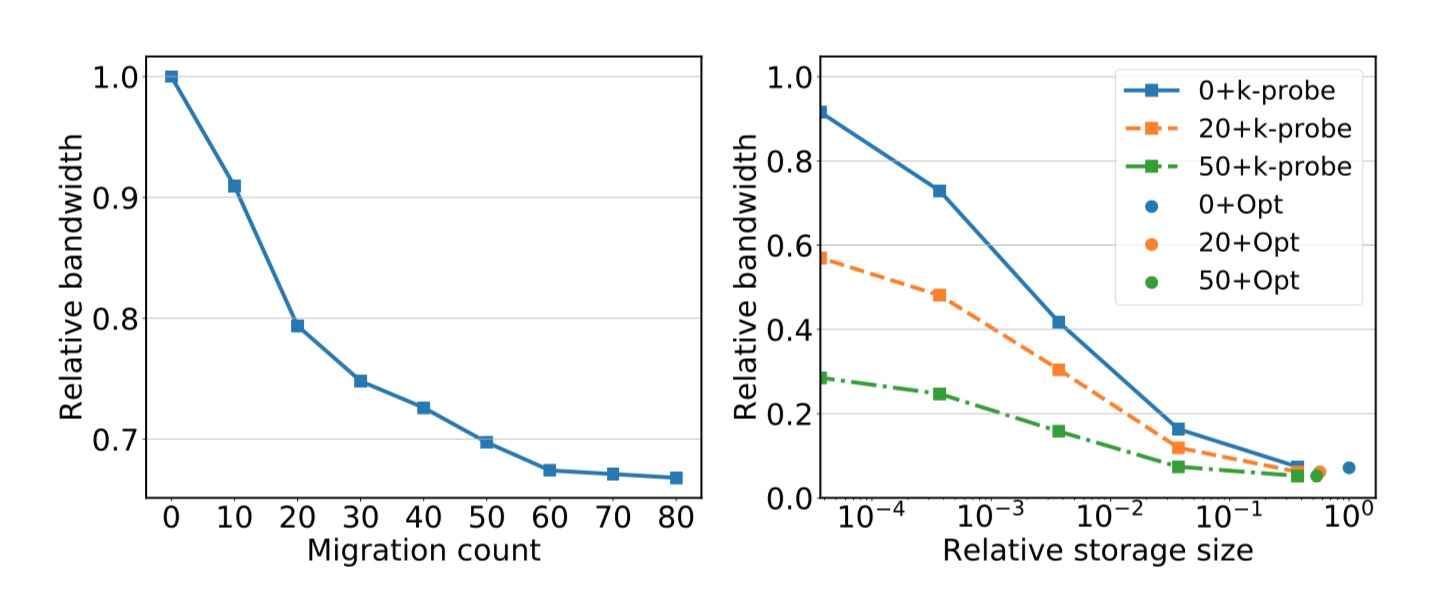

The following charts analyze the benefits of business migration at a given time point. In the chart on the left, the horizontal axis shows the number of migrated projects, and the vertical axis shows the reduced bandwidth ratio. When 60 projects are migrated, bandwidth consumption is reduced by 30%. The chart on the right shows the optimal bandwidth consumption with 0, 20, and 50 projects migrated. The horizontal axis shows redundant storage, and the vertical axis shows the bandwidth.

By breaking the limitations of DC-based planning of computing resources, we allow jobs to run on any DC, at least in theory. We can break down the scheduling granularity to the job-level and find the most suitable DC for each job based on its data and resource requirements. Before we schedule a job, we need to know the input and output of the job. Currently, we have two methods to obtain this information. For periodic jobs, we can analyze the historical running data of the jobs to estimate their input and output. For occasional jobs that generate high levels of cross-region traffic, we can dynamically schedule them to the DCs where the relevant data is stored. When calculating job scheduling, we also need to consider job requirements for computing resources to prevent all jobs being scheduled to DCs where hot data is stored, resulting in a backlog of tasks.
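As a rough illustration of the placement decision described above, the following sketch picks the DC that minimizes cross-DC traffic for one job while skipping DCs that lack free compute. It is a simplified model under stated assumptions: the function `place_job`, its parameters, the DC names, and the numbers are hypothetical, and the input and output sizes are assumed to have been estimated already from historical runs.

```python
def place_job(input_gb_by_dc, output_gb, home_dc, free_cu_by_dc, cu_needed):
    """Return (dc, cross_dc_traffic_gb) for the cheapest feasible DC.

    Hypothetical cost model: inputs stored in other DCs are read remotely,
    and the output is written back to the home DC if the job runs elsewhere.
    """
    best_dc, best_traffic = None, None
    for dc, free_cu in free_cu_by_dc.items():
        if free_cu < cu_needed:
            continue  # avoid piling jobs onto a DC that has no spare capacity
        traffic = sum(gb for src, gb in input_gb_by_dc.items() if src != dc)
        if dc != home_dc:
            traffic += output_gb
        if best_traffic is None or traffic < best_traffic:
            best_dc, best_traffic = dc, traffic
    return best_dc, best_traffic


# A job owned by a project in "beijing" whose input mostly lives in "shanghai".
inputs = {"shanghai": 500, "beijing": 20}
free = {"beijing": 1000, "shanghai": 1000, "shenzhen": 1000}
print(place_job(inputs, output_gb=5, home_dc="beijing",
                free_cu_by_dc=free, cu_needed=200))

# If the data-rich DC is busy, the job falls back to the next-cheapest DC.
busy = {"beijing": 1000, "shanghai": 100, "shenzhen": 1000}
print(place_job(inputs, output_gb=5, home_dc="beijing",
                free_cu_by_dc=busy, cu_needed=200))
```

The first call moves the job to the data (25 GB of cross-DC traffic instead of 500 GB), which matches the case where input is much larger than output. The second call shows the capacity check keeping the hot DC from being overloaded.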

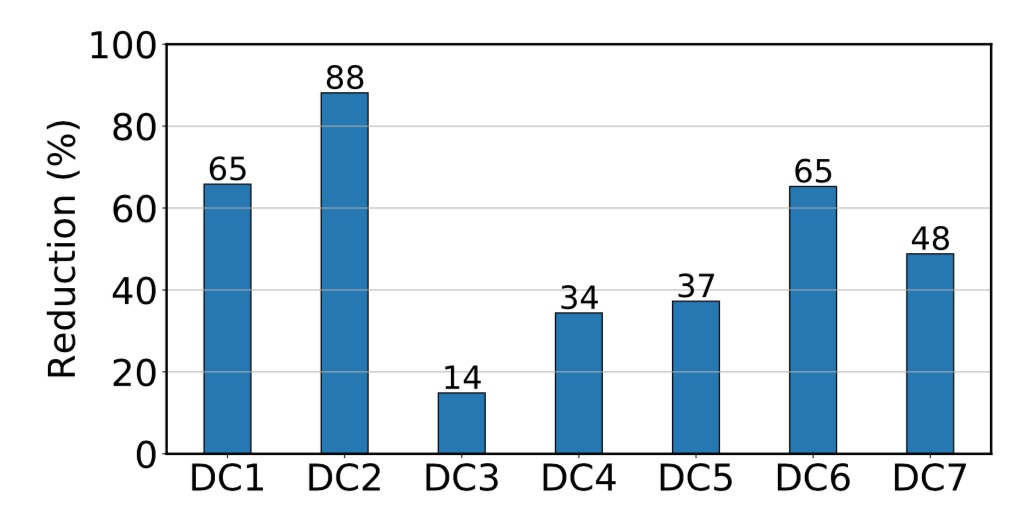

For online systems, these three policies are used together. Data caching is used for periodic jobs and hot data dependencies. Job-level scheduling is used for occasional jobs and historical data dependencies. The overall business layout is performed periodically for global optimization and cross-DC dependency reduction. With these three policies, cross-region data dependency is reduced by 90%, cross-DC bandwidth consumption is reduced by 80% through 3% redundant storage, and the ratio of converting cross-DC dependencies to local read and write operations is improved to 90%. The following figure shows the bandwidth benefits by DC.

In 2019, the MaxCompute platform generated data volumes in the exabytes during Double 11. It processed tens of millions of jobs, and billions of workers ran on millions of compute units (CUs). To quickly allocate resources to different computing tasks and ensure high-speed resource transfer in hyperscale (more than 10,000 servers in a single cluster) and high-concurrency scenarios, a smart "brain" is required. This role is played by the cluster resource management and scheduling system called Resource Manager.

The Resource Manager system connects thousands of compute nodes, abstracts massive heterogeneous resources in DCs, and provides them to upper-layer distributed applications, allowing you to use cluster resources. The core capabilities of the Resource Manager system include scale, performance, stability, scheduling performance, and multi-tenant fairness. A mature Resource Manager system needs to consider the five factors shown in the following figure, which is challenging.

In 2013, the 5K project first proved Job Scheduler's large scale capability. Later, the Resource Manager system evolved continuously and began to support Alibaba's big data computing resource requirements through the MaxCompute platform. In core scheduling metrics, the Resource Manager system surpassed open-source systems: (1) In clusters with 10,000 servers, the scheduling latency is within 10 ms and the worker startup latency is within 30 ms. (2) Dynamic resource scheduling for 100,000 tenants is supported. (3) The annual stability of the scheduling service is 99.99%, and service faults can be rectified in several seconds.

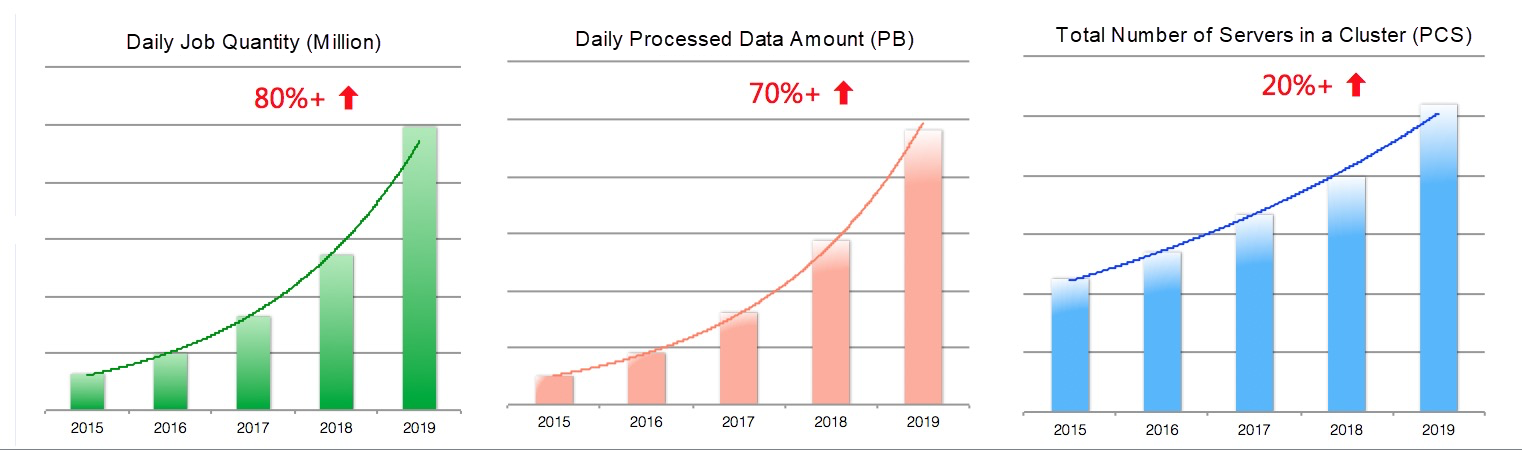

Big data computing scenarios and requirements are increasing rapidly. The following figure shows the upward trends of MaxCompute computing jobs and data over the past several years. A single cluster can now support tens of thousands of servers and soon will need to support hundreds of thousands of servers.

Figure Above: MaxCompute Online Jobs from 2015 to 2018

The increase in scale also results in greater complexity. When the server scale is doubled, the resource request concurrency is also doubled. To prevent core capabilities, such as performance, stability, and scheduling performance, from degrading, we can continuously optimize the scheduler performance so that clusters can scale out to more servers. This was the main effort of Resource Manager 1.0. However, due to the physical limitations of single servers, this optimization method has an upper limit. Therefore, we need to optimize the architecture to solve scale and performance scalability issues.

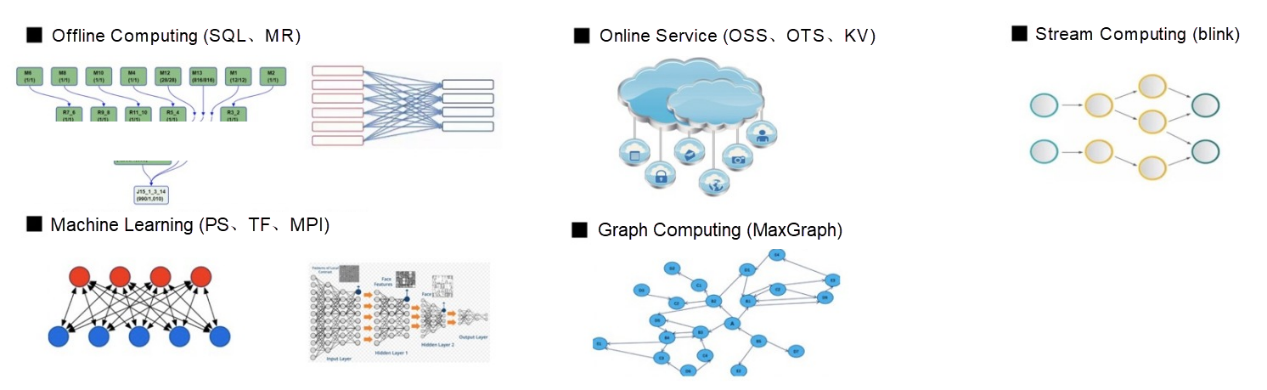

Job Scheduler supports various big data computing engines, including offline computing (SQL and MR), real-time computing, graph computing, and the AI-oriented machine learning engines that have developed rapidly in recent years.

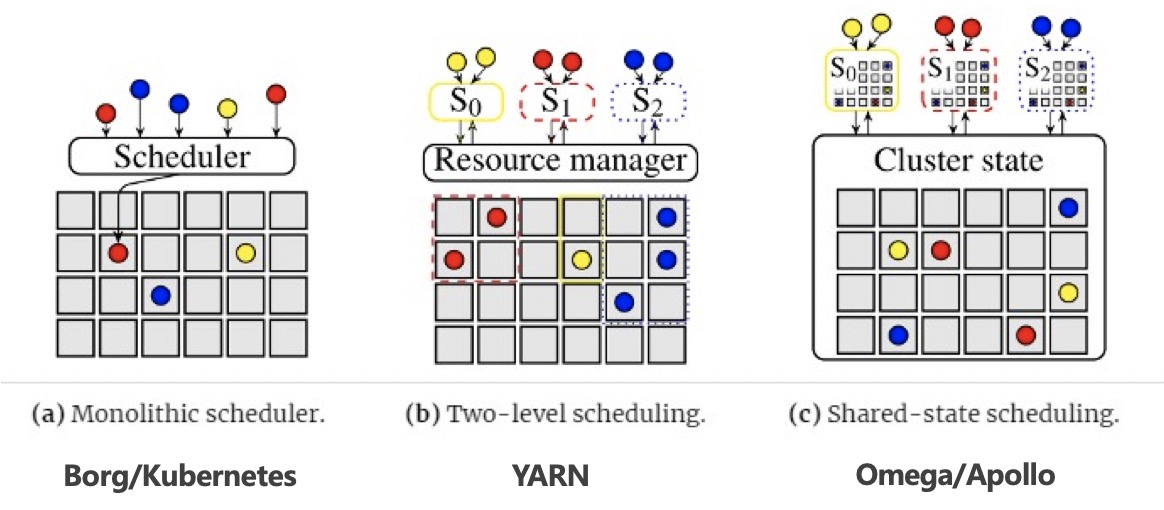

Figure Above: Types of Resource Scheduler Architectures

Resource scheduling requirements vary in different scenarios. For example, SQL jobs usually have small sizes, short running duration, low resource matching requirements, and high scheduling latency requirements. Machine learning jobs usually have large sizes and long running duration, and the scheduling result directly affects the running time. To ensure a better scheduling result, a higher scheduling latency can be accepted. A single scheduler cannot meet these diversified resource scheduling requirements because different scenarios require custom scheduling policies and independent optimization.

The Resource Manager system is one of the most complex and important modules in a distributed scheduling system. Strict production and release processes are required to ensure its stable online operation. Single schedulers place high requirements on developers. Faults have large impacts, and test and release periods are long, which significantly reduces the efficiency of scheduling policy iteration. These shortcomings are apparent when rapid scheduling performance optimization is needed for different scenarios. Therefore, we needed to optimize the architecture to ensure the smooth phased release of the Resource Manager system and significantly improve engineering efficiency.

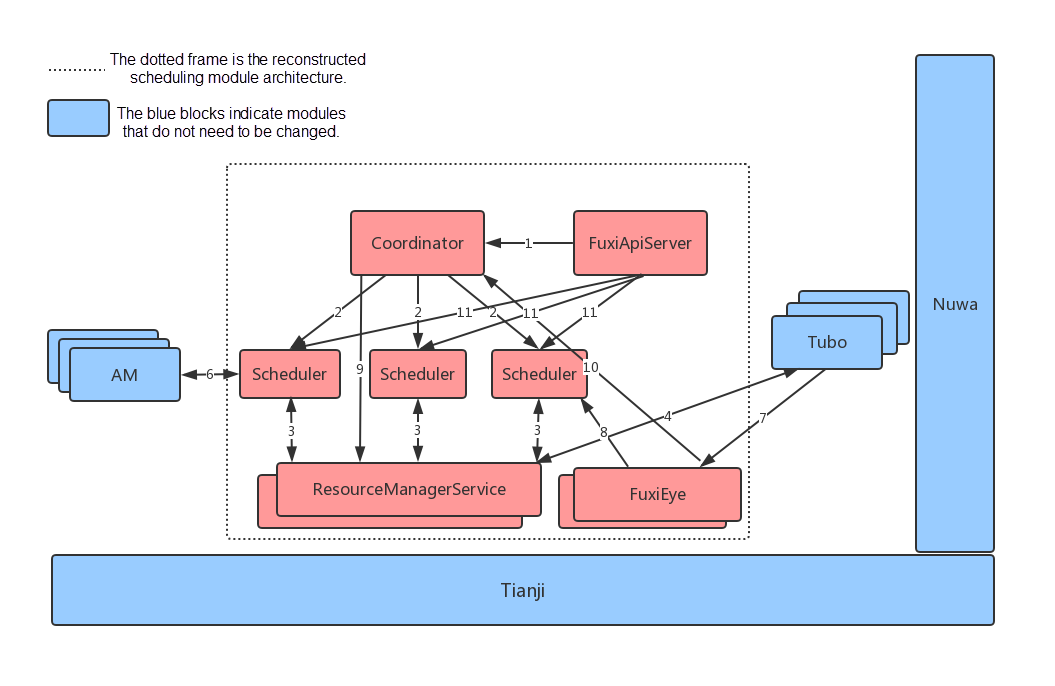

To solve the preceding size and scalability issues, meet scheduling requirements in different scenarios, and support phased release in the architecture, Resource Manager 2.0 dramatically reconstructed the scheduling architecture inherited from Resource Manager 1.0 and introduced a decentralized multi-scheduler architecture.

Figures Above: Types of Resource Scheduling Architectures

We decoupled the core resource management and resource scheduling logic in the system to ensure that both had multi-partition scalability, as shown in the following figure.

Figure Above: Job Scheduler Multi-Scheduler Architecture

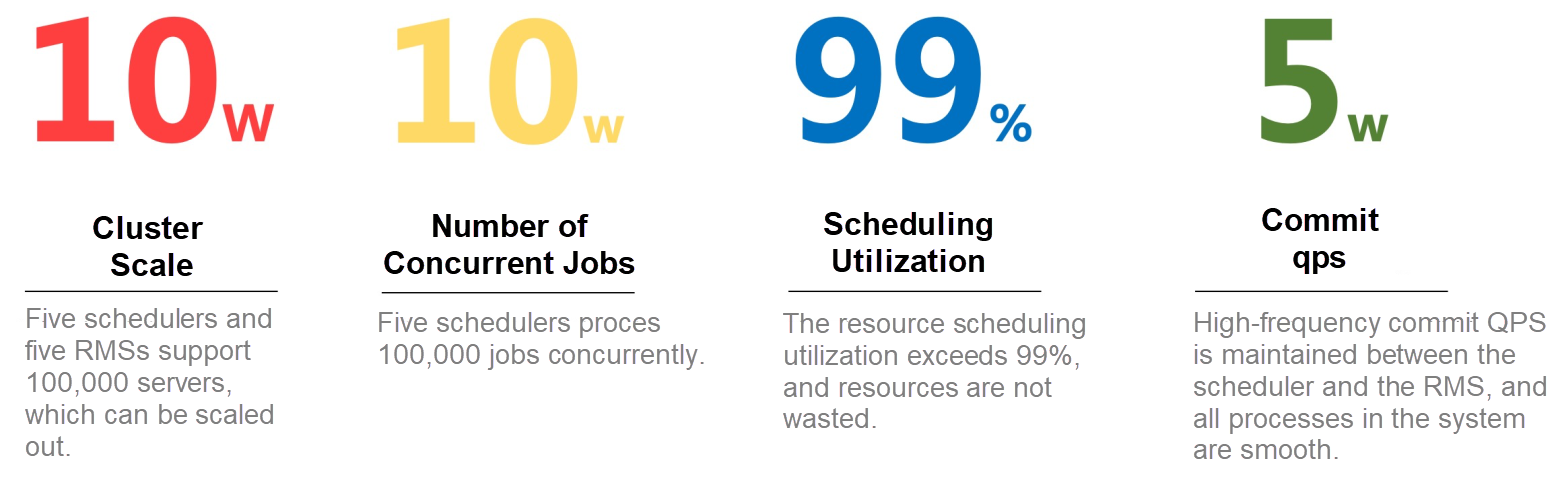

The following figure shows core scheduler metrics in scenarios with 100,000 servers in a single cluster and 100,000 concurrent jobs. There are five schedulers and five RMSs. Each RMS is responsible for 20,000 servers, and each scheduler processes 20,000 jobs concurrently. As shown in the figure, the scheduling utilization of the 100,000 servers exceeds 99%, and the average number of slots that a scheduler commits to the RMS reaches 10,000 slots per second.

While the core metrics of a single scheduler remain unchanged, the decentralized multi-scheduler architecture ensures a scalable server scale and application concurrency, solving the cluster scalability issue.

The new Resource Manager architecture has now been fully released, and its metrics have stabilized. Based on the multi-scheduler architecture, we use independent schedulers to continuously optimize scheduling in machine learning scenarios. By testing the dedicated scheduler, we ensured the smooth phased release of the Resource Manager system and shorter development and release periods for scheduling policies.

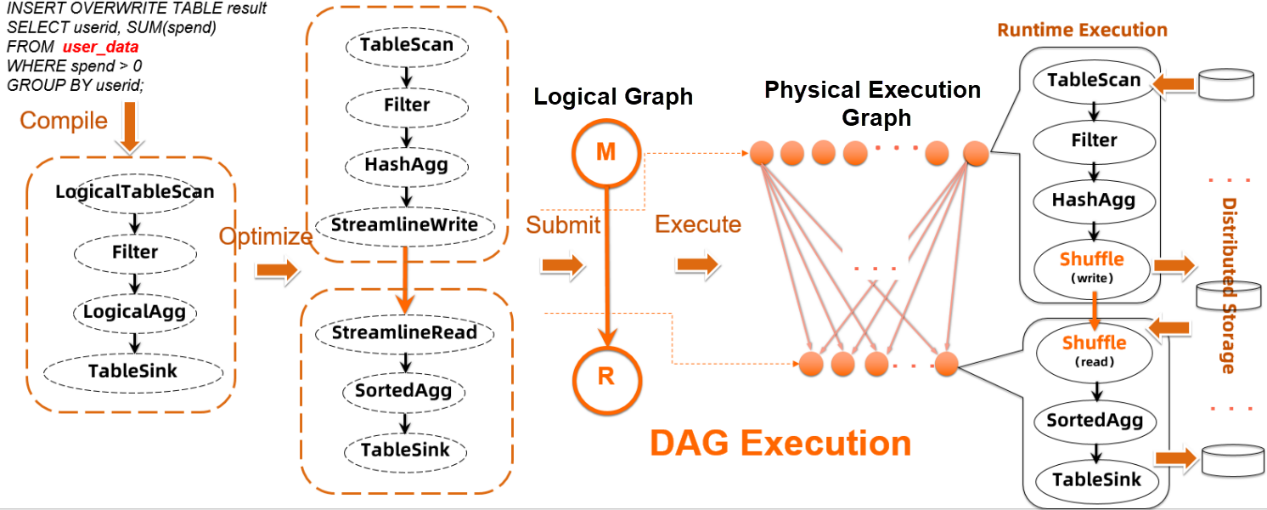

The biggest difference between distributed jobs and single server jobs is that data needs to be processed by different compute nodes. This means that data must be split and then aggregated, processed in distinct logical running phases, and shuffled between those phases. The central management node of each distributed job is the application master (AM). The AM is also called a DAG component because its most important responsibility is to coordinate job execution in the distributed system, including compute node scheduling and data shuffling.

The management of jobs' logical phases and compute nodes and the selection of shuffling policies are important prerequisites for the correct completion of distributed jobs, including traditional MR jobs, distributed SQL jobs, and distributed machine learning or deep learning jobs. The following figure shows an example of distributed SQL job execution in MaxCompute to help us better understand the position of Application Manager (DAG and shuffle) in the big data platform.

In this example, the user has an order table (order_data) that stores a large volume of transaction information. The user wants to know the total expenses of each user after transaction orders that exceed RMB 1000 are aggregated by userid. Therefore, the user submits the following SQL query.

    INSERT OVERWRITE TABLE result
    SELECT userid, SUM(spend)
    FROM order_data
    WHERE spend > 1000
    GROUP BY userid;

After the SQL query is compiled and optimized, an optimization plan is generated and submitted to Job Scheduler-managed distributed clusters for execution. This simple SQL query is converted to a DAG with M and R logical nodes, that is, a typical MR job. After the DAG is submitted to Job Scheduler, it is converted to the physical execution graph on the right based on the concurrency, shuffling method, and scheduling time of each logical node and other information. Each node in the physical execution graph represents an execution instance, which contains an operator for processing data. For a typical distributed job, the instance contains the shuffle operator, which shuffles data between external storage and network exchange nodes. A complete computing job scheduling process contains DAG execution and data shuffling in the preceding figure.
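To make the M and R logical nodes concrete, here is a minimal single-process sketch of how the query above decomposes into a map stage, a shuffle, and a reduce stage. It only illustrates the data flow; it is not MaxCompute's execution engine. The function names, the CRC-based partitioner, and the sample rows are assumptions made for the example.

```python
import zlib
from collections import defaultdict


def map_stage(rows, num_reducers):
    """M node: apply WHERE spend > 1000 and partition rows by userid."""
    partitions = [[] for _ in range(num_reducers)]
    for userid, spend in rows:
        if spend > 1000:
            # Hypothetical partitioner: a stable hash of the GROUP BY key.
            target = zlib.crc32(userid.encode()) % num_reducers
            partitions[target].append((userid, spend))
    return partitions


def shuffle(map_outputs, num_reducers):
    """Each reducer gathers its own partition from every mapper's output."""
    return [[rec for out in map_outputs for rec in out[r]]
            for r in range(num_reducers)]


def reduce_stage(records):
    """R node: SUM(spend) GROUP BY userid for one partition."""
    totals = defaultdict(int)
    for userid, spend in records:
        totals[userid] += spend
    return dict(totals)


# Two input splits of order_data, processed by two mapper instances.
order_data_splits = [
    [("u1", 1500), ("u2", 800), ("u3", 2000)],
    [("u1", 1200), ("u3", 500), ("u2", 3000)],
]
map_outputs = [map_stage(split, num_reducers=2) for split in order_data_splits]

result = {}
for records in shuffle(map_outputs, num_reducers=2):
    result.update(reduce_stage(records))
print(result)
```

Because every row with the same `userid` lands in the same partition, each reducer can compute its sums independently, which is what lets the physical graph run many M and R instances in parallel.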

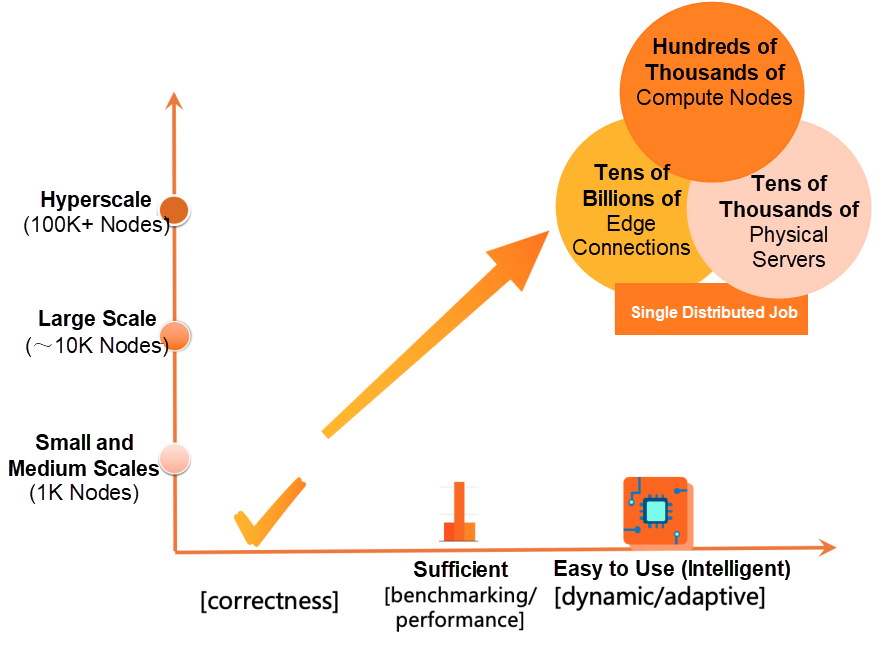

After 10 years of development and iteration, the Application Manager of Job Scheduler has become an important infrastructure for big data computing in the Alibaba Group and Alibaba Cloud. Application Manager now serves various computing engines, such as MaxCompute SQL and PAI. It processes tens of millions of distributed DAG jobs on about 100,000 servers and exabytes of data every day. With the abrupt increase in the business scale and amount of data to process, the Application Manager needs to serve more and more distributed jobs. In the face of diversified business logic and data sources, the Application Manager has already shown it can provide the availability and capabilities required by Alibaba in the past. With Application Manager 2.0, we started to explore the advanced intelligent execution capabilities.

From big data practices on the cloud and in the Alibaba Group, we have found that the Application Manager needs to meet both hyperscale and intelligence requirements. Based on these requirements, we developed the Job Scheduler Application Manager 2.0. In the following sections, I will introduce Application Manager 2.0 in terms of DAG scheduling and data shuffling.

Typically, the DAGs of traditional distributed jobs are statically specified before jobs are submitted. As a result, there is little room for dynamic adjustment of job operations. In DAG logical and physical graphs, the distributed system must understand the job logic and features of data to process in advance and be able to accurately answer questions related to the physical features of nodes and connection edges during job running. However, many questions related to data features in the running process can only be accurately answered during execution. Static DAG execution may cause a non-optimal execution plan to be selected, resulting in a low runtime efficiency or job failure. The following shows a common join example of distributed SQL jobs.

    SELECT a.spend, a.userid, b.age
    FROM (
        SELECT spend, userid
        FROM order_data
        WHERE spend > 1000
    ) a
    JOIN (
        SELECT userid, age
        FROM user
        WHERE age > 60
    ) b
    ON a.userid = b.userid;

This example describes how to obtain detailed information about users who are older than 60 and spend more than 1000. The age and expense information is stored in two tables, and therefore, a join is required. Typically, a join can be implemented in two ways.

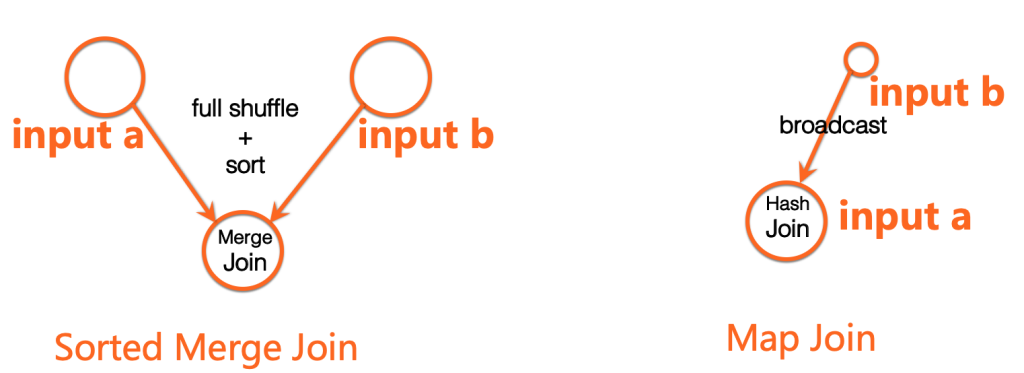

One is sort-merge join, as shown on the left in the following figure. After clauses a and b are executed, data is partitioned by join key (userid), and the downstream node performs merge join by key. To perform merge join, both tables must be shuffled. If data skew occurs, such as when one userid has many transaction records, a long tail will occur during merge join and the execution efficiency will be affected.

The other is map join (hash join), as shown on the right in the following figure. In the preceding SQL job, if the number of users who are older than 60 is small and the data fits in the memory of one compute node, shuffling does not need to be performed for this small table. Instead, all of its data is broadcast to each distributed compute node that processes the large table. Shuffling does not need to be performed for the large table either, and hash tables are built in memory to complete the join. Map join can dramatically reduce large table shuffling and prevent data skew, improving job performance. However, if map join is selected and the small table data amount exceeds the memory limit during execution (such as when the number of users who are older than 60 is large), the SQL query will fail due to OOM and must be re-executed.

During actual execution, the specific data amount can be perceived only after the upstream processing is completed. Therefore, it is difficult to determine whether map join can be used for optimization before jobs are submitted. As shown in the preceding figure, DAGs have different structures in map join and sort-merge join. DAG scheduling must be dynamic enough during execution so that DAGs can be dynamically modified to ensure optimal execution efficiency. In the Alibaba Group and cloud business practices, map join is widely used for optimization. With the increasingly in-depth optimization of the big data platform, the DAG system must become more and more dynamic.
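The choice between the two join methods can be sketched as a decision made only after the size of the small side is known. The code below is a single-process illustration, not the DAG 2.0 implementation: `conditional_join`, the memory limit, and the sample rows are hypothetical, and the merge join assumes that `userid` is unique in the user-side input.

```python
def map_join(big_rows, small_rows):
    """Hash join: build an in-memory table from the small side, stream the big side."""
    age_by_user = {userid: age for userid, age in small_rows}
    return [(spend, userid, age_by_user[userid])
            for spend, userid in big_rows if userid in age_by_user]


def sort_merge_join(big_rows, small_rows):
    """Merge join over both sides sorted by userid (unique on the small side)."""
    left = sorted(big_rows, key=lambda row: row[1])
    right = sorted(small_rows, key=lambda row: row[0])
    out, j = [], 0
    for spend, userid in left:
        while j < len(right) and right[j][0] < userid:
            j += 1
        if j < len(right) and right[j][0] == userid:
            out.append((spend, userid, right[j][1]))
    return out


def conditional_join(big_rows, small_rows, small_side_bytes, memory_limit_bytes):
    """Pick the plan at run time, once the upstream output size is observed."""
    if small_side_bytes <= memory_limit_bytes:
        return "map_join", map_join(big_rows, small_rows)
    return "sort_merge_join", sort_merge_join(big_rows, small_rows)


a = [(1500, "u1"), (2000, "u3"), (1200, "u1")]   # orders with spend > 1000
b = [("u1", 65), ("u2", 70)]                      # users with age > 60

plan_small, rows_small = conditional_join(a, b, small_side_bytes=64,
                                          memory_limit_bytes=1024)
plan_large, rows_large = conditional_join(a, b, small_side_bytes=4096,
                                          memory_limit_bytes=1024)
print(plan_small, rows_small)
print(plan_large, rows_large)
```

Both plans return the same rows; only the cost profile differs. Deferring the choice removes the risk of committing to map join before submission and then failing with OOM.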

Most DAG scheduling frameworks in the industry do not have clear stratification between logical and physical graphs, lack dynamic capabilities during execution, and cannot meet the requirements of various computing modes. For example, the Spark community previously proposed adjusting the join policy at runtime ("Join: Determine the join strategy (broadcast join or shuffle join) at runtime"). However, no solution has yet been found.

With MaxCompute update, optimizer capability enhancement, and new feature evolution for the PAI platform, the capabilities of upper-layer computing engines have continually increased. Therefore, we need the DAG component to be more dynamic and flexible in job management and DAG execution. In this context, the Job Scheduler team started the DAG 2.0 project to better support upper-layer computing requirements and computing platform development over the next 10 years.

Through foundational design, such as clear stratification of logical and physical graphs, extendable state machine management, plugin-based system management, and event-driven scheduling policies, DAG 2.0 centrally manages various computing modes of the computing platform and provides better dynamic adjustment capabilities at different layers during job running. Dynamic job running and a unified DAG execution framework are two features of DAG 2.0.

As mentioned before, many problems related to the physical features of distributed jobs are imperceptible before they run. For example, before a distributed job runs, only basic features of the original input, such as the data volume, can be obtained. For deep DAG execution, this means that only the physical plan of the root node, such as its concurrency, can be reasonably determined. In contrast, the physical features of downstream nodes and edges can only be guessed at using certain specific rules. This causes uncertainty during execution. A good distributed job execution system is required to dynamically adjust job running based on intermediate running results.

However, only the DAG or AM, as the unique central node and scheduling control node for distributed jobs, can collect and aggregate relevant data and dynamically adjust job running based on the data features. They can adjust simple physical execution graphs (such as dynamic concurrency) as well as the complex shuffling method and data orchestration method. The logical execution graph may also need to be adjusted based on data features. Dynamic adjustment of the logical execution graph is a new direction in distributed job processing and a new solution we are exploring in DAG 2.0.

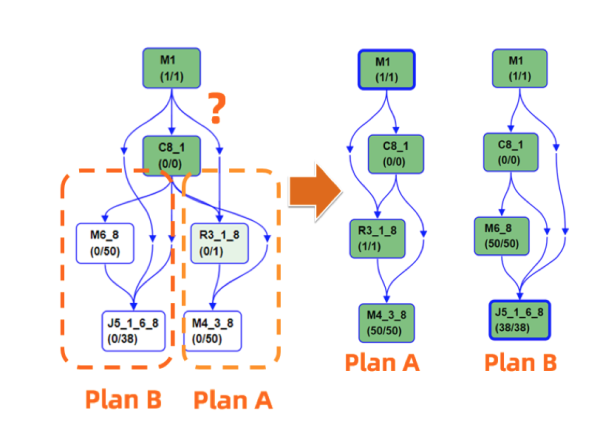

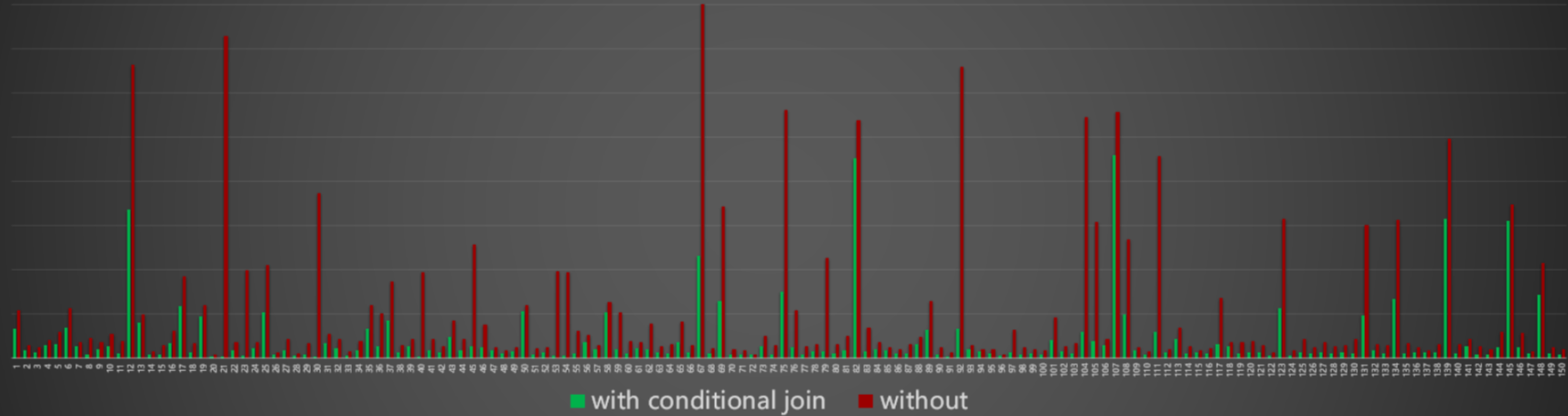

Take map join optimization as an example. Map join and default sort-merge join are actually two different optimizer execution plans. At the DAG layer, they correspond to two different logical graphs. The dynamic logical graph adjustment capability of DAG 2.0 properly supports dynamic optimization of job running based on intermediate data features. Through thorough cooperation with the upper-layer engine optimizer, DAG 2.0 provides the first conditional join solution in the industry. As shown in the following figure, when the join algorithm cannot be determined in advance, the distributed scheduling execution framework allows the optimizer to submit a conditional DAG. This DAG contains the execution plan branches of the two join methods. During execution, the AM dynamically selects a branch (plan A or plan B) to execute based on the upstream data amount. This dynamic logical graph execution process ensures that an optimal execution plan is selected based on generated intermediate data features when a job runs. In this example:

In addition to the typical map join scenario, MaxCompute is working on new solutions to other user pain points. It has already achieved better performance by using the dynamic adjustment capabilities of DAG 2.0, such as intelligent dynamic concurrency adjustment. During execution, MaxCompute dynamically adjusts the concurrency based on partition data statistics. It automatically combines small partitions to prevent unnecessary resource use and splits large partitions to prevent unnecessary long tails.
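The combine-and-split behavior described above can be sketched as a planning step that runs once upstream partition sizes are known. This is an illustrative heuristic only: `plan_tasks`, the target size, and the partition sizes are hypothetical, and the sketch plans byte ranges without modeling how a real engine keeps a split partition semantically correct.

```python
def plan_tasks(partition_sizes, target_size):
    """Group small partitions and split large ones around target_size.

    Returns a list of tasks; each task is a list of
    (partition_id, start_offset, end_offset) ranges.
    """
    tasks, current, current_size = [], [], 0
    for pid, size in enumerate(partition_sizes):
        if size > target_size:
            if current:  # flush the pending group of small partitions
                tasks.append(current)
                current, current_size = [], 0
            # Split the oversized partition to avoid a long-tail task.
            for start in range(0, size, target_size):
                tasks.append([(pid, start, min(start + target_size, size))])
            continue
        if current and current_size + size > target_size:
            tasks.append(current)
            current, current_size = [], 0
        current.append((pid, 0, size))  # merge small partitions into one task
        current_size += size
    if current:
        tasks.append(current)
    return tasks


# Sizes in MB reported by upstream nodes; one partition is heavily skewed.
sizes = [10, 20, 30, 500, 40, 5]
tasks = plan_tasks(sizes, target_size=100)
for task in tasks:
    print(task)
```

Here six partitions become seven tasks: the three small leading partitions share one task, the 500 MB partition is split into five, and the two trailing partitions share another.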

In addition to using dynamic adjustment to significantly improve the SQL job running performance, DAG 2.0 can describe different physical features of nodes and edges in the abstract and hierarchical node, edge, and graph architecture to support different computing modes. The distributed execution frameworks of various distributed data processing engines, including Spark, Flink, Hive, Scope, and TensorFlow, are derived from the DAG model proposed by Dryad. We think that an abstract and hierarchical description of graphs can better describe various models in a DAG system, such as offline, real-time, streaming, and progressive computing.

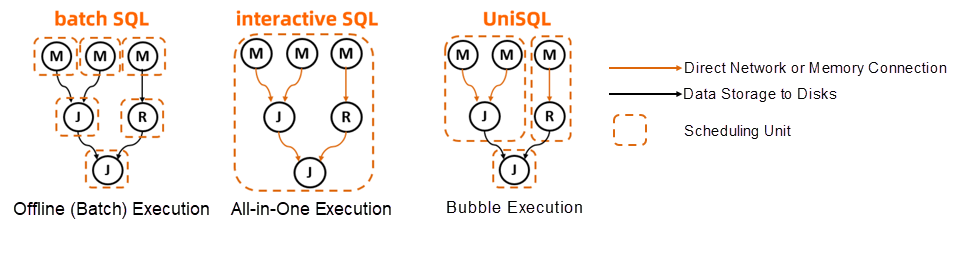

If we segment distributed SQL jobs, we will find that either the throughput (large scale and high latency) or latency (small or medium data volume and fast speed) is optimized. Hive is a typical example of throughput optimization, and Spark and various distributed MPP solutions are typical examples of latency optimization. When Alibaba was developing its distributed system, there were two noticeable execution modes: batch offline SQL jobs and interactive firm real-time SQL jobs. In the past, resource management and job running for these two modes were implemented based on two completely separated sets of code. These two sets of code and the features of the two modes could not be reused. As a result, we could not strike a balance between resource utilization and execution performance. In the DAG 2.0 model, these two modes are integrated and unified through physical feature mapping of nodes or edges. Offline jobs and firm real-time jobs can be accurately described after they map different physical features on logical nodes and edges.

Based on the unified architecture of offline jobs and firm real-time jobs, the unified description method strikes a balance between high resource utilization for offline jobs and high performance for firm real-time jobs. When the scheduling unit can be adjusted freely, a new hybrid computing mode is possible. We call it the bubble execution mode.

This bubble mode enables DAG users (the developers of upper-layer computing engines like the MaxCompute optimizer) to flexibly cut out bubble subgraphs in the execution plan based on the execution plan features and engine users' sensitivity to resource usage and performance. In the bubble, methods, such as direct network connection and compute node prefetch, are used to improve performance. Nodes that do not use the bubble execution mode still run in the traditional offline job mode. In the new unified model, the computing engine and execution framework can choose between different combinations of resource utilization and performance based on actual requirements.

The dynamic adjustment feature of DAG 2.0 ensures that many execution optimizations can be decided on during job running, which ensures better execution performance. For example, dynamic conditional join improves the performance of Alibaba jobs by 300% compared to static execution plans.

The bubble execution mode balances the high resource utilization of offline jobs and the high performance of firm real-time jobs, which is clearly demonstrated in 1 TB TPC-H test sets.

In big data computing jobs, data transfer between nodes is called data shuffling. Mainstream distributed computing systems all provide a data shuffle service subsystem. In the preceding DAG computing model, upstream and downstream data transfer between tasks is a typical shuffling process.

In data-intensive jobs, the shuffling phase takes a long time and occupies a large number of resources. The survey results of other big data companies show that resource usage of all jobs on their big data computing platforms exceeds 50% in the shuffling phase. According to statistics, shuffling accounts for 30% to 70% of the job running time and resource consumption in MaxCompute production. Therefore, optimizing the shuffling process can improve the job running efficiency, reduce the overall resource usage and costs, and improve the competitive advantages of MaxCompute in the cloud computing market.

In terms of the shuffling media, the most widely used shuffling method is based on the disk file system. This method is simple and direct and usually depends only on the underlying distributed file system. It can be used for all types of jobs. For typical real-time or firm real-time computing jobs in the memory, shuffling through a direct network connection is usually used to ensure the highest performance. In Job Scheduler Shuffle 1.0, these two shuffling modes are optimized to ensure efficient and stable job running in off-peak and peak hours.

First, we will use the shuffling of offline jobs based on the disk file system as an example due to its wider applicability.

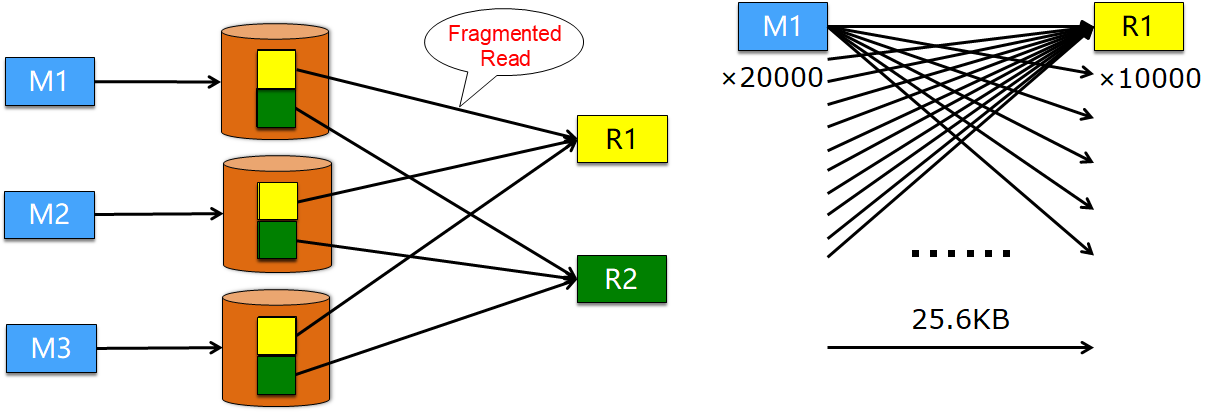

Usually, each mapper generates a disk file, which includes data that the mapper writes to all downstream reducers. Each reducer needs to read its own data from files written by all mappers. In the following figure, an MR job of a typical scale is shown on the right. When each mapper processes 256 MB of data and there are 10,000 downstream reducers, each reducer reads 25.6 KB of data from each mapper. This is a typical fragmented read in a storage system with HDD as the medium. If the disk IOPS can reach 1000, the throughput is 25 MB/s. This greatly influences the performance and disk pressure.

Shuffling based on the disk file system – Fragmented read of a 20,000 x 10,000 MR job
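
The arithmetic behind these figures can be checked with a short sketch. It uses decimal units (1 MB = 1,000 KB) to match the numbers in the text, and the function names are illustrative only. The mapper count of 20,000 comes from the figure caption.

```python
# Back-of-the-envelope model of fragmented shuffle reads on HDD.
# Decimal units (1 MB = 1,000 KB) are assumed, matching the text.

def per_read_size_kb(mapper_output_mb: float, num_reducers: int) -> float:
    """Size of the slice each reducer reads from one mapper file, in KB."""
    return mapper_output_mb * 1000 / num_reducers

def disk_throughput_mb_s(read_size_kb: float, iops: int) -> float:
    """Effective throughput when every I/O is one small random read."""
    return read_size_kb * iops / 1000

read_kb = per_read_size_kb(mapper_output_mb=256, num_reducers=10_000)
throughput = disk_throughput_mb_s(read_kb, iops=1000)
total_reads = 20_000 * 10_000  # mappers x reducers

print(f"{read_kb:.1f} KB per read, {throughput:.1f} MB/s, {total_reads:,} reads")
```

Under these assumptions, the job issues 200 million small reads, and each disk delivers roughly 25 MB/s, far below what an HDD can sustain for sequential access.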

Improving the concurrency is usually one of the most important ways to accelerate distributed jobs. However, a higher concurrency exacerbates the fragmented read phenomenon when the same amount of data is processed. Generally, improving the concurrency to the extent permitted by the cluster scale while tolerating a certain level of I/O fragmentation is better for the job performance. Fragmented I/Os are common online, and disks are under high pressure.

As an online example, assume that the size of a single read request of some mainstream clusters is 50 KB to 100 KB, and the Disk util metric is maintained at 90% (a warning line) for an extended period. This limits job scale expansion, but is there a way to ensure both high job concurrency and disk efficiency?

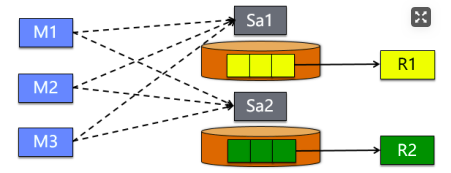

To solve the preceding read fragmentation problem and the resulting adverse effects, we created a shuffling mode based on the shuffle service. The basic method of the shuffle service is to deploy a shuffle agent node on each server in a cluster to collect shuffle data written to the same reducer, as shown in the following figure.

Each mapper transmits shuffle data to the shuffle agent of each reducer. The shuffle agent collects data from all mappers to the reducer and stores it to the shuffle disk file. These two processes are performed concurrently.

With the collection feature, the shuffle agent turns the fragmented input data of each reducer into continuous data files, which benefits the HDD. Read and write operations to the disk are continuous throughout the shuffling process. Standard TPC-H and other tests show that performance improves by anywhere from dozens of percentage points to several times over, disk pressure is dramatically reduced, and the utilization of CPU and other resources is improved.
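
The collection idea can be sketched in a few lines. This is a toy in-memory model under our own assumptions, not the Job Scheduler implementation: `ShuffleAgent`, `run_shuffle`, and `disk_file_shuffle_reads` are hypothetical names, and a Python list stands in for the append-only shuffle file.

```python
def disk_file_shuffle_reads(num_mappers: int, num_reducers: int) -> int:
    """Disk-file shuffling: every reducer reads one small slice per mapper file."""
    return num_mappers * num_reducers

class ShuffleAgent:
    """Hypothetical agent that collects all slices destined for one reducer."""

    def __init__(self, reducer_id: int):
        self.reducer_id = reducer_id
        self.chunks = []  # stands in for an append-only disk file

    def receive(self, mapper_id: int, data: bytes) -> None:
        self.chunks.append((mapper_id, data))

    def read_all(self) -> bytes:
        # One sequential scan instead of one seek per mapper.
        return b"".join(data for _, data in self.chunks)

def run_shuffle(mapper_outputs):
    """mapper_outputs: {mapper_id: {reducer_id: bytes}} -> {reducer_id: ShuffleAgent}."""
    agents = {}
    for mapper_id, partitions in mapper_outputs.items():
        for reducer_id, data in partitions.items():
            agent = agents.setdefault(reducer_id, ShuffleAgent(reducer_id))
            agent.receive(mapper_id, data)
    return agents

mapper_outputs = {
    0: {0: b"a0", 1: b"b0"},
    1: {0: b"a1", 1: b"b1"},
    2: {0: b"a2", 1: b"b2"},
}
agents = run_shuffle(mapper_outputs)
```

In this model, the three mappers and two reducers would need six fragmented reads with disk-file shuffling, whereas each reducer performs a single sequential read from its agent.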

Ideas similar to the shuffle service have been proposed by Alibaba staff and external experts. However, these ideas have only been implemented for benchmarking and on small scales. This is because it is difficult to handle the errors that occur in different processes in the shuffle service mode.

For example, shuffle agent file loss or damage is a common problem in big data jobs. To address this problem, shuffling based on the disk file system can locate the mapper from which the faulty data file comes and rectify the fault by running the mapper again. However, in the preceding shuffle service process, the shuffle file provided by the shuffle agent contains shuffle data from all mappers. To re-generate the damaged file, all mappers need to run again. This cost is unacceptable if the shuffle service mode is to be applied to all online jobs.

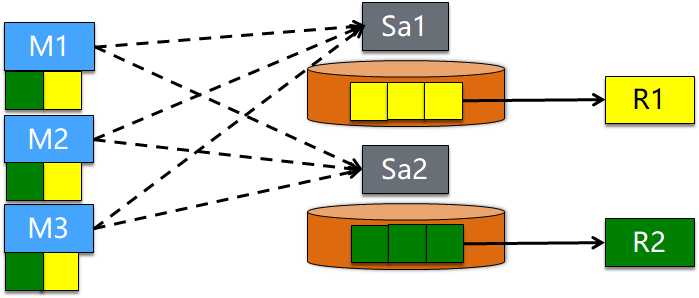

To solve this problem, we designed a mechanism that uses two data replicas. In most cases, reducers can read data generated by agents efficiently. When a few agents lose data, reducers can read backup data. Backup data regeneration depends only on a specific upstream mapper.

To be specific, shuffle data generated by mappers is not only sent to the corresponding shuffle agents but also backed up locally in a format similar to that of the shuffle data stored in the disk file system. The writing cost of this data replica is low, but its read performance is poor. Backup data is read only when the replica held by the shuffle agent is faulty. Therefore, this mechanism has only a minor impact on overall job performance and does not increase the cluster-level disk pressure.
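
A minimal sketch of the read path under this two-replica scheme follows. The data structures and the `read_reducer_input` and `rerun_mapper` names are assumptions made for illustration; the real system works with files and processes rather than dictionaries.

```python
def read_reducer_input(reducer_id, agent_files, mapper_backups, rerun_mapper):
    """Return (input bytes, list of mappers that had to be rerun).

    agent_files:    {reducer_id: bytes or None}; None models a lost agent file
    mapper_backups: {mapper_id: {reducer_id: bytes} or None}; None models a lost backup
    rerun_mapper:   callable(mapper_id) -> {reducer_id: bytes}
    """
    data = agent_files.get(reducer_id)
    if data is not None:
        return data, []  # fast path: one contiguous read from the agent replica

    # Slow path: stitch the input together from the per-mapper backups.
    pieces, rerun = [], []
    for mapper_id in sorted(mapper_backups):
        backup = mapper_backups[mapper_id]
        if backup is None:
            # Only the mapper whose backup is missing is regenerated.
            backup = rerun_mapper(mapper_id)
            mapper_backups[mapper_id] = backup
            rerun.append(mapper_id)
        pieces.append(backup[reducer_id])
    return b"".join(pieces), rerun

agent_files = {0: b"a0a1", 1: None}  # the agent file for reducer 1 is lost
mapper_backups = {0: {0: b"a0", 1: b"b0"}, 1: None}  # mapper 1's backup is lost too

def rerun_mapper(mapper_id):
    return {0: b"a1", 1: b"b1"}

fast = read_reducer_input(0, agent_files, mapper_backups, rerun_mapper)
slow = read_reducer_input(1, agent_files, mapper_backups, rerun_mapper)
```

Here reducer 0 reads its agent file directly, while reducer 1 falls back to the backups and triggers a rerun of mapper 1 alone, not of every mapper.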

Compared with shuffling based on the disk file system, shuffling based on the shuffle service with an effective fault tolerance mechanism not only provides better job performance but also lays a solid foundation for application to all online jobs. This is possible because the task retry ratio due to incorrect shuffle data is reduced by an order of magnitude.

In addition to the preceding basic features, the Job Scheduler online shuffle system applies more features and optimizations to further improve the performance and stability and reduce the cost. Some examples are provided below.

1. Throttling and Load Balancing

In the preceding data collection model, the shuffle agent receives mapper data and stores it to the disk. In distributed clusters, disk and network problems may affect data transmission in this process, and node pressure may also affect the shuffle agent status. When a shuffle agent is overloaded due to cluster hotspots or other reasons, we provide the necessary throttling measures to alleviate the pressure on the network and disk. Unlike the preceding model, in which each reducer has one shuffle agent to collect data, we use multiple shuffle agents to undertake the same work. When data skew occurs, this method effectively allocates the pressure to multiple nodes. The online performance shows that these measures mostly eliminate the need for throttling due to congestion and unbalanced cluster loads during shuffling.
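
One way to picture the combination of load balancing and throttling is the sketch below. The least-loaded routing rule, the pending-bytes limit, and the `AgentPool` class are our own simplifying assumptions; the article does not describe the actual policy.

```python
class AgentPool:
    """Hypothetical pool of shuffle agents that share one reducer's data."""

    def __init__(self, agent_ids, max_pending_bytes):
        self.pending = {agent_id: 0 for agent_id in agent_ids}
        self.max_pending_bytes = max_pending_bytes

    def send(self, size):
        """Route a chunk to the least-loaded agent.

        Returns the chosen agent id, or None to tell the mapper to back off.
        """
        agent_id = min(self.pending, key=self.pending.get)
        if self.pending[agent_id] + size > self.max_pending_bytes:
            return None  # even the least-loaded agent is saturated: throttle
        self.pending[agent_id] += size
        return agent_id

    def flushed(self, agent_id, size):
        """Record that an agent has written `size` bytes to disk."""
        self.pending[agent_id] = max(0, self.pending[agent_id] - size)

pool = AgentPool(["agent-a", "agent-b"], max_pending_bytes=100)
first = pool.send(60)    # agent-a: both are idle, the first one wins the tie
second = pool.send(60)   # agent-b: it is now the less loaded agent
third = pool.send(60)    # None: either agent would exceed the limit
pool.flushed("agent-a", 60)
fourth = pool.send(60)   # agent-a again, once it has drained
```

Spreading one reducer's data over several agents means that throttling is only triggered when all of them are saturated, which matches the intent described above.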

2. Faulty Shuffle Agent Switchover

When a shuffle agent does not properly process the data of a reducer due to a software or hardware fault, subsequent data can be promptly switched to another normal shuffle agent. In this way, more data can be read from the shuffle agent side, reducing inefficient access to backup replicas.

3. Shuffle Agent Data Retrieval

When a shuffle agent switchover occurs, such as because the server is offline, data generated by the original shuffle agent may be lost or inaccessible. When subsequent data is sent to a new shuffle agent, Job Scheduler will load the lost data from the backup replica and send it to the new shuffle agent to ensure that all subsequent reducer data can be read from the shuffle agent side. This significantly improves job performance in fault tolerance scenarios.
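
The switchover and retrieval steps can be sketched together. This is a simplified model under stated assumptions: the `switch_agent` function, the `sent_log` of which mapper sent data to which agent, and the rule of picking the first healthy agent are all hypothetical.

```python
def switch_agent(reducer_id, failed_agent, agents, mapper_backups, sent_log):
    """Pick a replacement agent and replay the data the failed agent held.

    agents:         {agent_id: {reducer_id: [bytes, ...]}}
    mapper_backups: {mapper_id: {reducer_id: bytes}}
    sent_log:       [(mapper_id, agent_id), ...] for this reducer, in send order
    """
    healthy = sorted(a for a in agents if a != failed_agent)
    if not healthy:
        raise RuntimeError("no healthy shuffle agent available")
    new_agent = healthy[0]
    store = agents[new_agent].setdefault(reducer_id, [])
    for mapper_id, agent_id in sent_log:
        if agent_id == failed_agent:
            # Reload the lost slice from the mapper-side backup replica.
            store.append(mapper_backups[mapper_id][reducer_id])
    return new_agent

agents = {"agent-a": {7: [b"m0", b"m1"]}, "agent-b": {}}
mapper_backups = {0: {7: b"m0"}, 1: {7: b"m1"}, 2: {7: b"m2"}}
sent_log = [(0, "agent-a"), (1, "agent-a")]

new_agent = switch_agent(7, "agent-a", agents, mapper_backups, sent_log)
agents[new_agent][7].append(mapper_backups[2][7])  # later data goes to the new agent
```

After the replay, reducer 7 can read all of its input from the new agent, so it never has to touch the slower backup replicas.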

4. Exploration of New Shuffling Modes

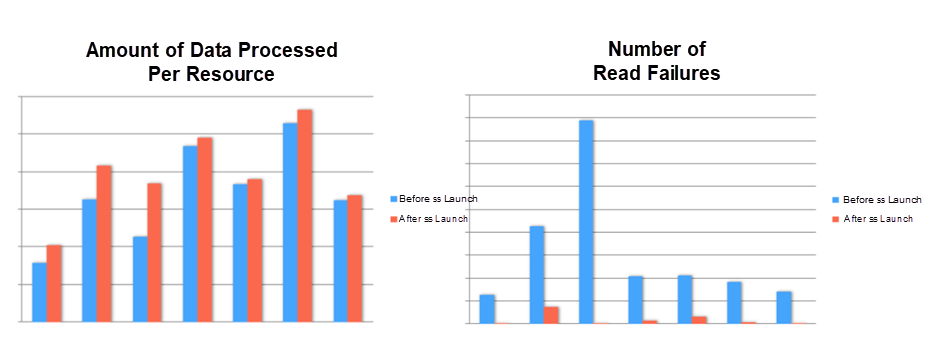

The preceding data collection model and comprehensive extended optimization improve the data volume processed per resource in online clusters by about 20% and reduce the task retry ratio due to errors by about 5% compared with shuffling based on the disk file system. Is this the most efficient shuffling method?

We applied a new shuffling model to some jobs in production environments. In this model, the sender of mapper data and the receiver of reducer data use an agent to transfer shuffling traffic. Some online jobs use this method to further improve performance.

Offline big data jobs may process the majority of computing data. However, in many scenarios, jobs are run in real-time or firm real-time mode in mainstream big data computing systems, such as Spark and Flink. The entire data flow of these jobs takes place in the network and memory to ensure excellent runtime performance with a limited job scale.

Job Scheduler DAG also provides real-time or firm real-time job runtime environments. The traditional shuffling method uses a direct network connection, which has better performance than offline shuffling. However, the traditional shuffling method requires all nodes of a job to be scheduled before the job can run. This limits the job scale. In most scenarios, the shuffle data generation speed of the computing logic cannot fully use up the shuffle bandwidth, so compute nodes have to wait for data. Therefore, performance is improved at the cost of wasted resources.

We apply the shuffle service to memory storage to replace shuffling through a direct network connection. On one hand, this mode decouples upstream and downstream scheduling so that all nodes of a job do not need to be scheduled at the same time. On the other hand, it can accurately predict the data read and write speeds and properly schedule downstream nodes to achieve the same job performance as shuffling through a direct network connection and reduce resource consumption by more than 50%. This shuffling method also enables multiple DAG adjustment capabilities at runtime in the DAG system to be applied to real-time or firm real-time jobs.
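
To see why decoupled scheduling can save resources without slowing the job down, consider the toy model below. It assumes constant produce and consume rates and a single upstream/downstream pair, which is far simpler than a real DAG; the function names and the numbers are illustrative only.

```python
def downstream_start_time(total_gb, produce_gb_s, consume_gb_s):
    """Latest start (in seconds) at which the consumer still finishes on time."""
    produce_done = total_gb / produce_gb_s
    consume_time = total_gb / consume_gb_s
    # A consumer slower than the producer should start immediately.
    return max(0.0, produce_done - consume_time)

def consumer_slot_seconds(total_gb, produce_gb_s, consume_gb_s, deferred):
    """Return (seconds the consumer holds its slot, job finish time)."""
    produce_done = total_gb / produce_gb_s
    consume_time = total_gb / consume_gb_s
    if deferred:
        start = downstream_start_time(total_gb, produce_gb_s, consume_gb_s)
    else:
        start = 0.0  # direct network connection: all nodes are scheduled together
    # The consumer cannot finish before the producer has emitted everything.
    finish = max(produce_done, start + consume_time)
    return finish - start, finish

gang = consumer_slot_seconds(100, produce_gb_s=1, consume_gb_s=4, deferred=False)
lazy = consumer_slot_seconds(100, produce_gb_s=1, consume_gb_s=4, deferred=True)
```

In this example both schedules finish at the same time, but the deferred consumer occupies its slot for a quarter of the time because it no longer idles while waiting for upstream data.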

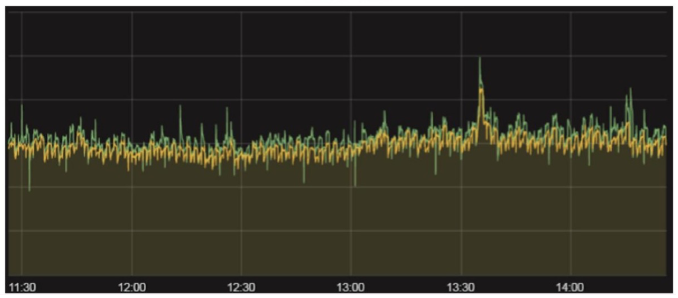

Job Scheduler Shuffle 2.0 is fully applied to production clusters. Given the same amount of data to process, job resources are reduced by 15%, the disk pressure is reduced by 23%, and the task retry ratio due to errors is reduced by 5%.

Performance and stability improvement of typical online clusters (different groups of data indicate different clusters)

In TPC-H and other standard test sets, firm real-time jobs that use shuffling based on memory data have the same performance as those that use shuffling through a direct network connection, with only 30% of the resource usage and a larger job scale. In addition, more dynamic scheduling features of DAG 2.0 are applied to firm real-time jobs.

Single server scheduling is used to properly allocate limited resources of different types on a single server to each job. This is done to ensure the job running quality, resource utilization, and job stability when a large number of distributed jobs are aggregated on a server.

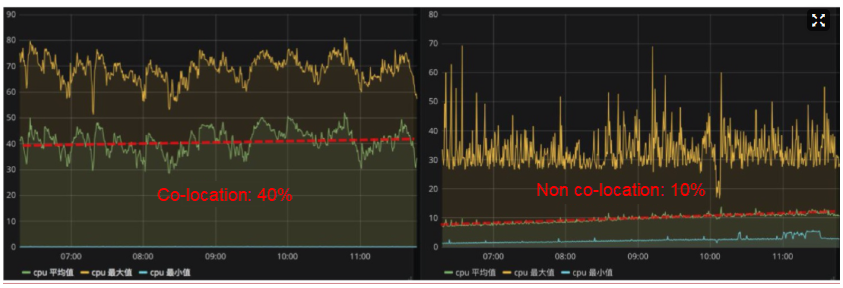

Businesses of an Internet company are typically classified into offline and online businesses. Alibaba's online businesses, such as Taobao, Tmall, DingTalk, and Blink, are sensitive to the response latency. When service jitter occurs, exceptions may result, such as the failure to add products to the cart, order failures, browsing lag, and failure to send DingTalk messages. These exceptions may significantly affect the user experience. In addition, to cope with various large promotional activities, such as 618 and Double 11, a large number of servers need to be prepared in advance. Due to the preceding reasons, the routine resource utilization of these servers is less than 10%, which results in wasted resources. Alibaba's offline businesses are totally different. The MaxCompute platform processes all offline big data computing businesses. Cluster resources are always overloaded, and the data and computing job volumes increase rapidly every year.

With insufficient online business resource utilization and overloaded offline computing, can we co-locate online businesses and offline computing jobs to improve resource utilization and dramatically reduce costs?

1. Online Service Quality

The average CPU utilization of online clusters is only about 10%. Co-location aims to provide the remaining resources for offline computing by MaxCompute to reduce costs. How can we improve resource utilization without affecting online services?

2. Offline Computing Stability

When resource conflicts occur, online services are given priority because a failure to log on to Taobao or Tmall or place an order is a critical fault. However, if there is no limit on the sacrifice of offline computing, the service quality will be greatly reduced. For example, it would be unacceptable if a 1-minute DataWorks job required 10 minutes or even 1 hour to run.

3. Resource Quality

E-commerce businesses use the rich container mode to integrate multiple container-level analysis methods. We need to accurately analyze the resource profile of offline job resource usage based on offline job features and evaluate the resource interference and stability of co-located clusters.

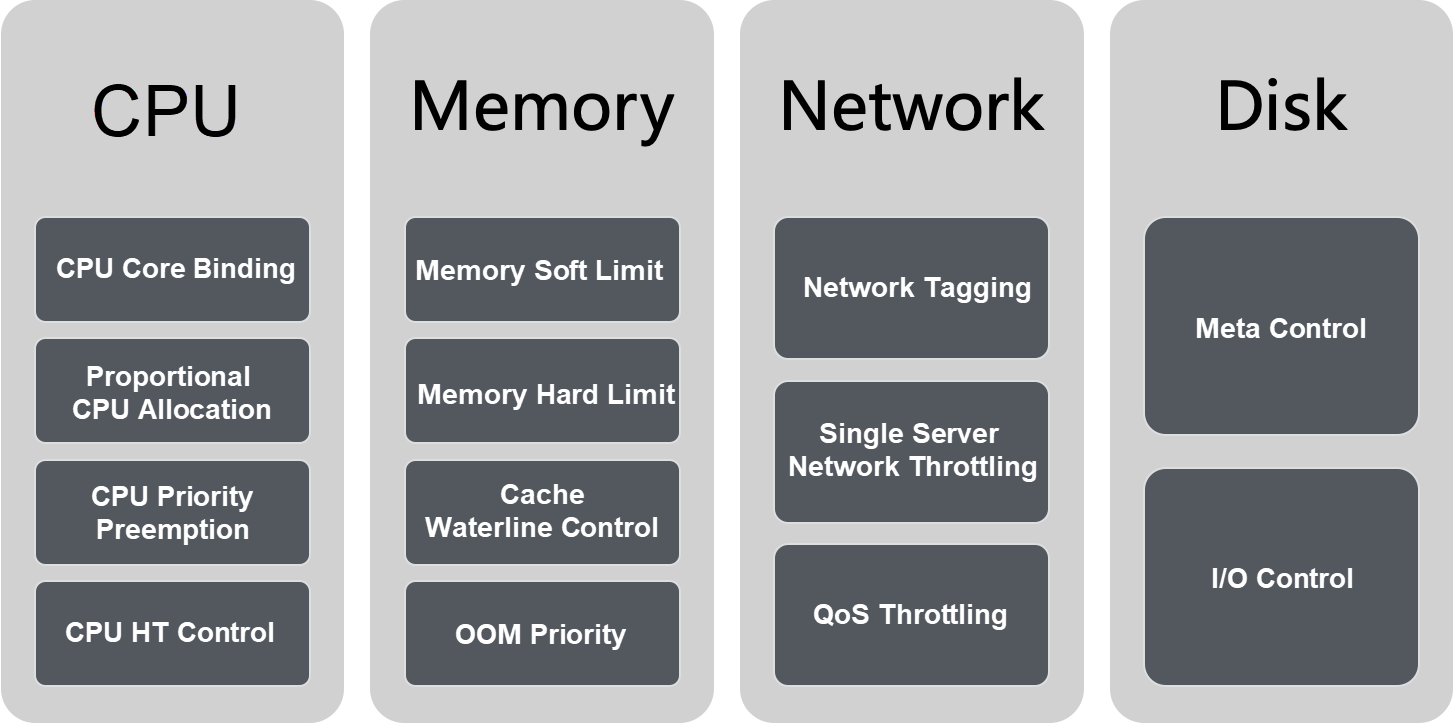

The physical resources of a single server are limited and can be divided into scalable resources and non-scalable resources based on their characteristics. CPU, network, and I/O resources are scalable, but memory resources are not. Different isolation solutions are applied to resources of different types. In addition, there are various job types in general-purpose clusters, and different types of jobs have different requirements for resources. We classify resource requirements into online and offline resource requirements. Each type includes different requirements of different priorities, introducing a great deal of complexity.

Job Scheduler 2.0 proposes a set of resource classification logic based on the resource priority, which finds a solution to the complex requirements of resource utilization and multi-level resource assurance.

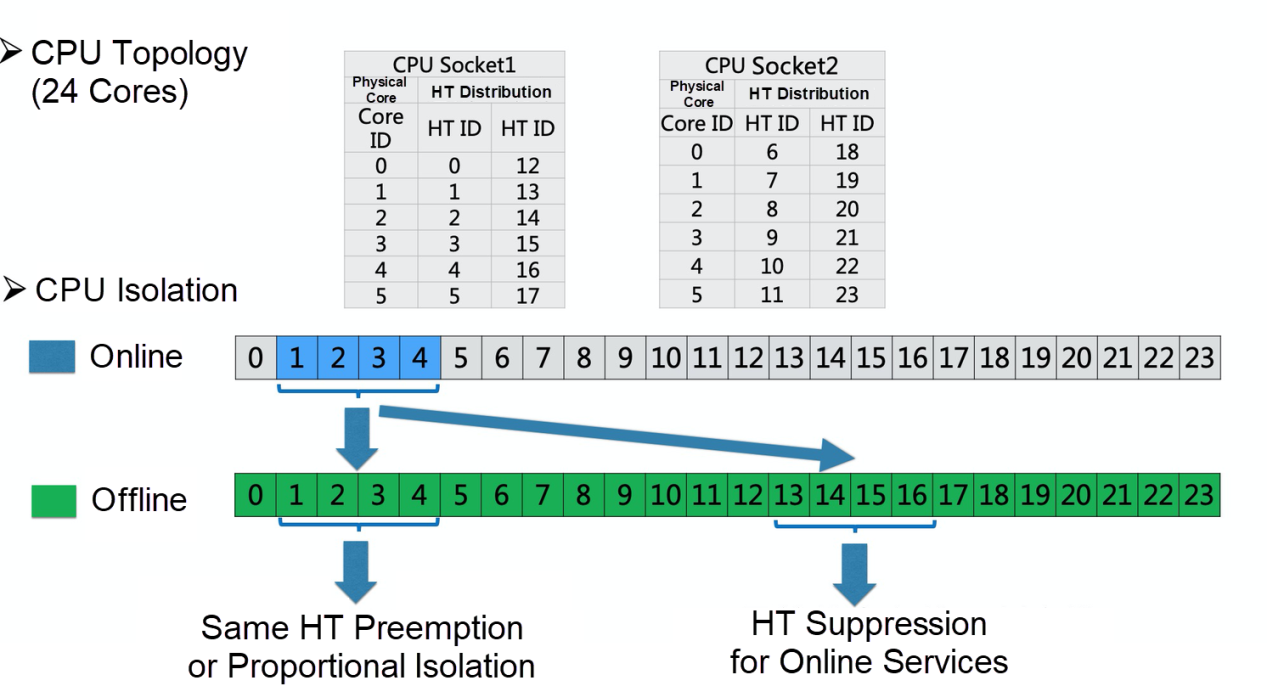

Next, we will describe layered CPU management. The management policies for other types of resources will be described in subsequent articles.

Through the precise combination of multiple kernel policies, we classify CPU resources into high, medium, and low priorities.

| CPU Level | Resource Isolation Policy |
|---|---|
| Gold | Gold services have exclusive CPU cores, which do not overlap with silver CPU cores, but do overlap with bronze CPU cores. When gold CPU cores overlapped with bronze CPU cores are required, they will be preempted using the resource isolation mechanism. |
| Silver | Silver services do not have exclusive CPU cores. Silver CPU cores do not overlap with gold CPU cores, but do overlap with bronze CPU cores. When silver CPU cores overlapped with bronze CPU cores are required, they will be preempted using the resource isolation mechanism. |
| Bronze | Bronze CPU cores overlap with gold and silver CPU cores for load shifting. Bronze CPU cores may be preempted at any time based on the resource isolation mechanism. |
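
The overlap rules in the table can be expressed as sets of core IDs. The sketch below is only a model of the policy: the real isolation relies on kernel mechanisms that the article does not detail, and the choice to give silver every non-gold core is our assumption.

```python
GOLD, SILVER, BRONZE = "gold", "silver", "bronze"

def build_cpu_sets(num_cores, gold_cores):
    """Assign core IDs to each level following the overlap rules in the table."""
    all_cores = set(range(num_cores))
    gold = set(gold_cores)          # exclusive with respect to silver
    silver = all_cores - gold       # never overlaps gold (assumed: all other cores)
    bronze = set(all_cores)         # overlaps both, used for load shifting
    return {GOLD: gold, SILVER: silver, BRONZE: bronze}

def bronze_usable_cores(cpu_sets, demand):
    """Cores bronze jobs may use right now.

    demand: {level: set of cores that level currently needs}.
    Bronze is preempted on any core that gold or silver requires.
    """
    wanted = demand.get(GOLD, set()) | demand.get(SILVER, set())
    return cpu_sets[BRONZE] - wanted

cpu_sets = build_cpu_sets(num_cores=8, gold_cores=[0, 1])
usable = bronze_usable_cores(cpu_sets, {GOLD: {0}, SILVER: {2, 3}})
```

With gold demanding core 0 and silver demanding cores 2 and 3, bronze jobs are left with cores 1, 4, 5, 6, and 7 until the higher levels release their cores.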

The following figure shows the isolation policy.

The following table describes the mapping between resources of different types and jobs of different priorities.

| Scheduling System | Gold | Silver | Bronze |
|---|---|---|---|
| Online | Services that are latency-sensitive | Most common online services | None |
| Offline | None | Offline CPU-sensitive jobs | Common offline jobs |

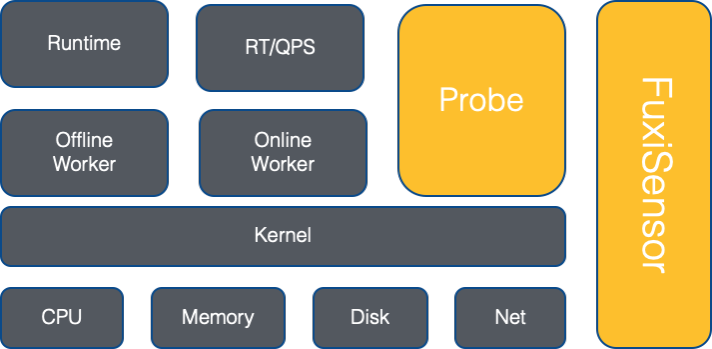

Accurate profiles of resource usage are important to Job Scheduler, allowing it to balance resource allocation and investigate, analyze, and solve resource problems. Alibaba and the industry have many solutions for online job resources. Common resource collection solutions have the following issues and cannot be used in the data collection phase for the resource profiles of offline jobs.

Therefore, we have proposed the resource profile solution FuxiSensor. The preceding figure shows the architecture. We also use SLS for data collection and analysis. Resource profiles are implemented at different levels, such as clusters, jobs, servers, and workers, ensuring data collection precise to the second. In co-location and MaxCompute practices, the resource profile solution has become the unified entry for resource problem monitoring and alerting, stability data analysis, abnormal job diagnosis, and resource monitoring as well as a key metric for successful co-location.
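
As an illustration of what multi-level, per-second profiles involve, the sketch below rolls worker-level CPU samples up to the server, job, and cluster levels. It is not FuxiSensor code: the sample schema, the `roll_up` function, and the assumption that worker IDs are globally unique are ours.

```python
from collections import defaultdict

LEVELS = ("cluster", "job", "server", "worker")

def roll_up(samples):
    """Sum per-second CPU usage at every level.

    samples: iterable of dicts with keys "ts" (epoch second), "cpu" (cores used),
             and one ID for each entry in LEVELS.
    Returns {(level, id, ts): total_cpu}.
    """
    totals = defaultdict(float)
    for sample in samples:
        for level in LEVELS:
            totals[(level, sample[level], sample["ts"])] += sample["cpu"]
    return dict(totals)

samples = [
    {"ts": 1, "cluster": "c1", "job": "j1", "server": "s1", "worker": "w1", "cpu": 1.5},
    {"ts": 1, "cluster": "c1", "job": "j1", "server": "s2", "worker": "w2", "cpu": 0.5},
    {"ts": 1, "cluster": "c1", "job": "j2", "server": "s1", "worker": "w3", "cpu": 2.0},
]
profile = roll_up(samples)
```

Keeping the same samples addressable by worker, server, job, and cluster is what lets one data source serve alerting, job diagnosis, and co-location analysis alike.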

The routine resource utilization is increased from 10% to more than 40%.

Online jitter is less than 5%.

To ensure stable online service quality, we upgraded online resources to a high priority based on comprehensive priority-based isolation for different types of resources. To ensure stable offline computing quality, we set priorities for offline jobs and used various management policies. With fine-grained resource profiles, we ensured resource usage evaluation and analysis. These measures promote the large-scale application of co-location in Alibaba, improving cluster resource utilization and reducing offline computing costs.

After 10 years of development from 2009 to 2019, Job Scheduler is still evolving to meet emerging business requirements and lead the development of distributed scheduling technologies. In the future, we will continue to innovate along the following lines:

Finally, we warmly welcome other teams from the Alibaba Group to participate in discussions and join in building a world-class distributed scheduling system.

For more information, visit the MaxCompute Official Website
