By Guo Hua

A data warehouse is a basic service that is required when a company's data grows to a certain size. Data warehouse construction is an essential part of "data intelligence." This article describes six aspects of a data warehouse: overview, development path, construction methods, architecture evolution, application, and real-time compared to offline data warehouses.

A data warehouse is a subject-oriented, integrated, non-volatile, and time-variant data collection. It is primarily used to support decision-making.

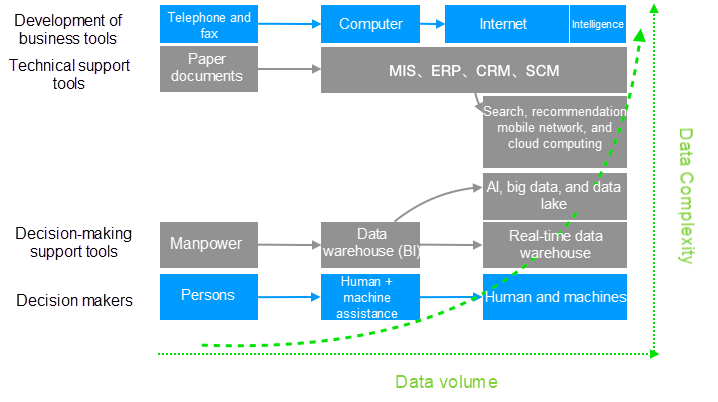

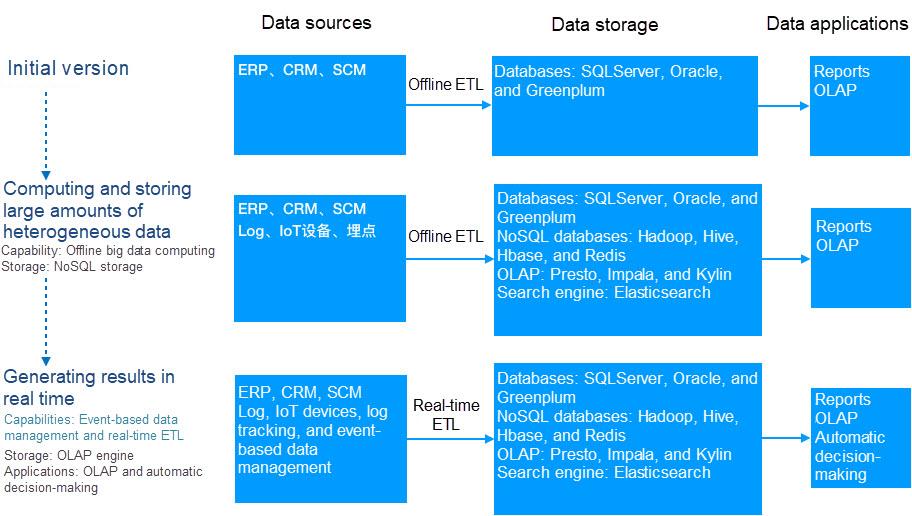

Data warehouses evolve along with the enterprise informatization process. In this process, existing information tools are quickly upgraded, new tools are put into use, data arrives in increasingly diverse formats, and decision-making requirements become more stringent. All of these factors have driven the development of data warehouse technologies.

Development Trends

Data warehouse development involves two parts: construction and application.

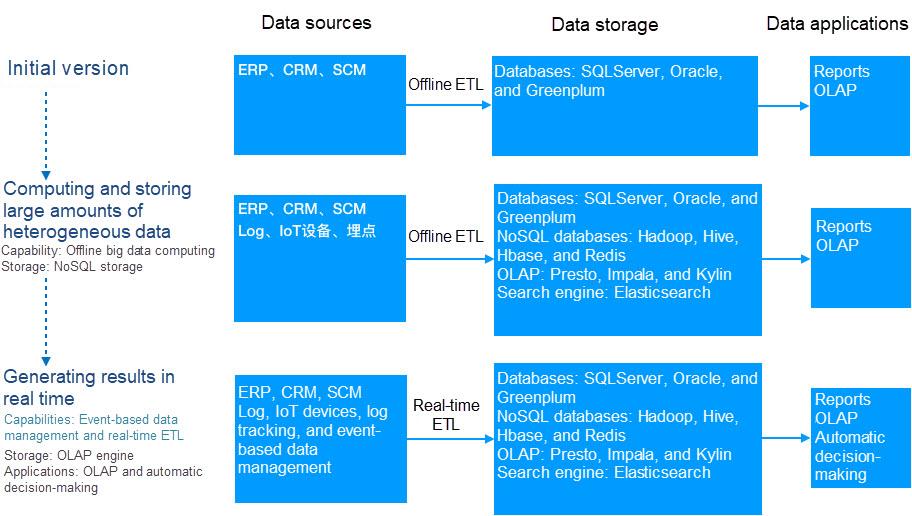

Simply put, we need data warehouses to:

A. Generate results.

B. Process and save large amounts of heterogeneous data in real time.

Note: Data lake technology is beyond the scope of this article.

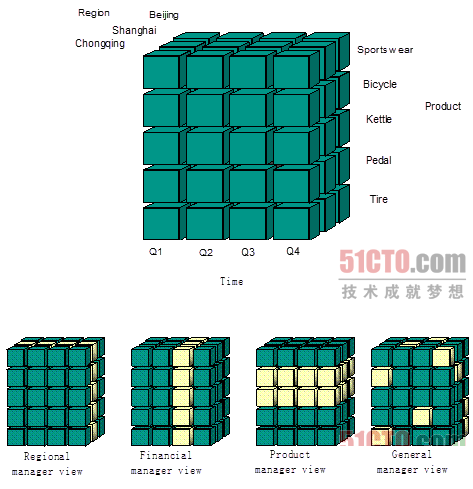

Data warehouses can be constructed based on a company's actual business to serve analytic requirements on various topics, such as suppliers, products, customers, and warehouses.

This type of warehouse mainly focuses on data reports and analytic functions, such as data cubes, data rollup, data drilling, data slicing, and data rotation.

This type of warehouse works with star data models made up of fact tables and dimension tables.
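As a rough illustration of a star model and of two of the analytic functions mentioned above (rollup and slicing), the sketch below uses plain Python dictionaries. The table names, columns, and sample rows are hypothetical and are not taken from any real warehouse.

```python
from collections import defaultdict

# Dimension tables: one row per product and per warehouse (hypothetical data).
dim_product = {
    1: {"name": "laptop", "category": "electronics"},
    2: {"name": "phone", "category": "electronics"},
    3: {"name": "desk", "category": "furniture"},
}
dim_warehouse = {
    10: {"city": "Hangzhou"},
    20: {"city": "Shanghai"},
}

# Fact table: each row references dimension keys and carries a measure.
fact_shipments = [
    {"product_id": 1, "warehouse_id": 10, "quantity": 5},
    {"product_id": 2, "warehouse_id": 10, "quantity": 3},
    {"product_id": 3, "warehouse_id": 20, "quantity": 2},
    {"product_id": 1, "warehouse_id": 20, "quantity": 4},
]


def rollup_by_category(facts):
    """Rollup: aggregate quantity from product level up to category level."""
    totals = defaultdict(int)
    for row in facts:
        category = dim_product[row["product_id"]]["category"]
        totals[category] += row["quantity"]
    return dict(totals)


def slice_by_city(facts, city):
    """Slice: keep only the facts whose warehouse is in the given city."""
    return [
        row for row in facts
        if dim_warehouse[row["warehouse_id"]]["city"] == city
    ]
```

The fact table stays narrow (keys plus measures), and every descriptive attribute is looked up in a dimension table, which is what gives the model its star shape.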

Note: The image is from 51 CTO.

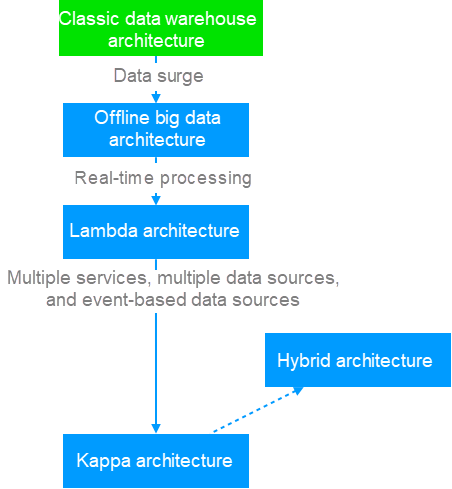

The concept of a data warehouse was proposed by W.H. Inmon in 1990, who also provided a complete construction approach. The advent of the Internet brought explosively increasing data volumes.

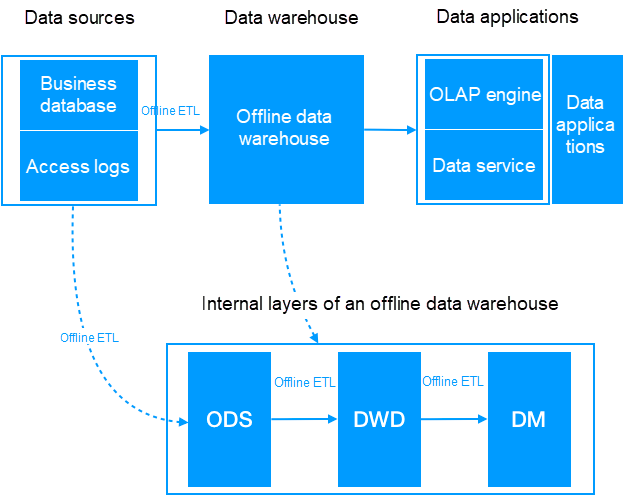

Data sources are imported into the offline data warehouse. Downstream applications can read the data mart directly or, depending on business needs, go through an added data service such as MySQL or Redis. A data warehouse is typically divided into three layers:

1 Operational Data Store (ODS): stores raw data.

2 Data Warehouse Detail (DWD): defines fact tables and dimension tables by topic and stores the most fine-grained fact data.

3 Data Mart (DM), also known as the data warehouse summary layer: summarizes the detail data of the DWD layer according to different business needs.

Typical storage for such a data warehouse is Hive on HDFS, and the ETL can be written as MapReduce scripts or Hive SQL.
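The three layers can be pictured with a small in-memory sketch. It is only an illustration of the ODS, DWD, and DM roles: the record format, field names, and cleaning rule are assumptions, and a real pipeline would run as Hive SQL or MapReduce jobs rather than Python functions.

```python
import json
from collections import defaultdict

# ODS: raw records kept exactly as they arrived (hypothetical JSON order logs).
ods_orders = [
    '{"order_id": "A1", "customer": "c1", "amount": "20.5", "day": "2020-06-01"}',
    '{"order_id": "A2", "customer": "c2", "amount": "10.0", "day": "2020-06-01"}',
    '{"order_id": "A3", "customer": "c1", "amount": "bad", "day": "2020-06-02"}',
    '{"order_id": "A4", "customer": "c1", "amount": "5.5", "day": "2020-06-02"}',
]


def build_dwd(raw_lines):
    """DWD: parse and clean raw records into typed, fine-grained fact rows."""
    facts = []
    for line in raw_lines:
        record = json.loads(line)
        try:
            amount = float(record["amount"])
        except ValueError:
            continue  # assumed cleaning rule: drop rows with an unparsable amount
        facts.append({
            "order_id": record["order_id"],
            "customer": record["customer"],
            "amount": amount,
            "day": record["day"],
        })
    return facts


def build_dm(facts):
    """DM: summarize the detail facts per day for one reporting need."""
    totals = defaultdict(float)
    for fact in facts:
        totals[fact["day"]] += fact["amount"]
    return dict(totals)


dwd_orders = build_dwd(ods_orders)
dm_daily_amount = build_dm(dwd_orders)
```

Keeping the raw ODS data untouched means the DWD and DM layers can be rebuilt whenever a cleaning rule or a summary definition changes.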

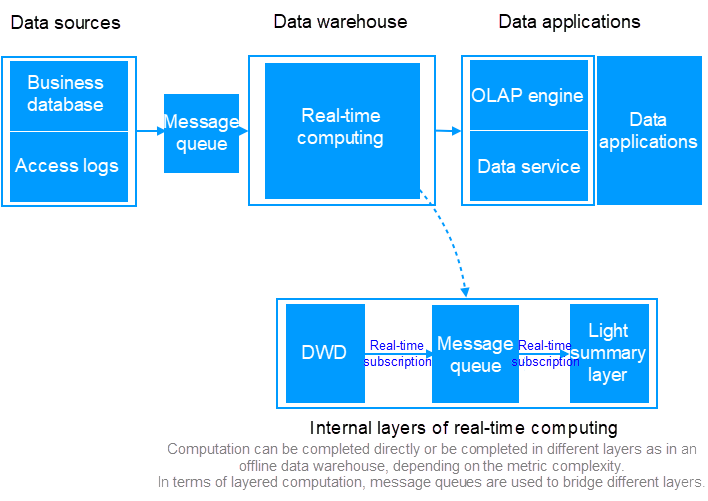

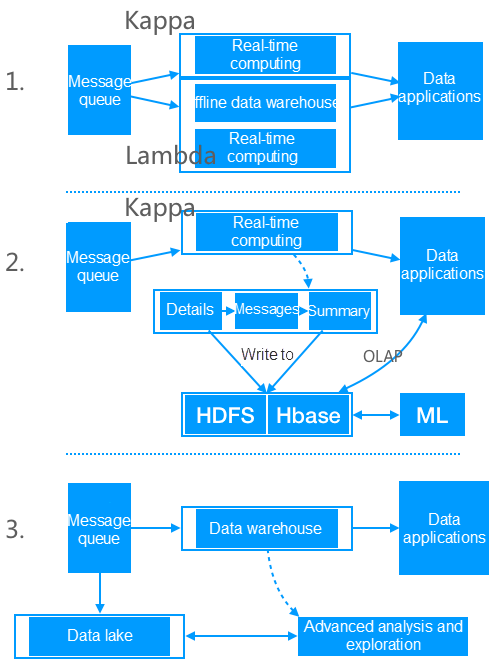

As big data applications developed, systems gradually had to meet real-time requirements as well. To compute real-time metrics, we added a real-time computing chain to the offline data warehouse and restructured the data sources to produce data streams, that is, to send data to message queues. A real-time computing service subscribes to the message queues, computes the incremental metric data directly, and pushes the results to a downstream data service, which merges the offline and real-time results.

Note: Metrics computed by stream computing are also computed through batch processing, and the batch processing results prevail. In other words, the batch processing results will eventually override stream processing results. This is a compromise when the stream processing engine is imperfect.
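The merge rule in the note can be sketched as follows. This is a simplified model, assuming one metric value per day; the function names and sample numbers are hypothetical.

```python
def apply_increment(speed_view, day, delta):
    """Real-time chain: fold an incremental metric value into the speed view."""
    speed_view[day] = speed_view.get(day, 0) + delta


def merge_views(batch_view, speed_view):
    """Data service: serve one value per day, batch result wins when present."""
    merged = dict(speed_view)   # start from the real-time results
    merged.update(batch_view)   # batch results override stream results
    return merged


# Batch has already recomputed June 1 and June 2; June 3 exists only in real time.
batch_view = {"2020-06-01": 1000, "2020-06-02": 1180}
speed_view = {"2020-06-02": 1175}
apply_increment(speed_view, "2020-06-03", 400)
apply_increment(speed_view, "2020-06-03", 20)

served = merge_views(batch_view, speed_view)
```

Here the stream result for June 2 (1175) is replaced by the batch result (1180) once the batch job has run, while June 3 is served from the real-time chain until batch catches up.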

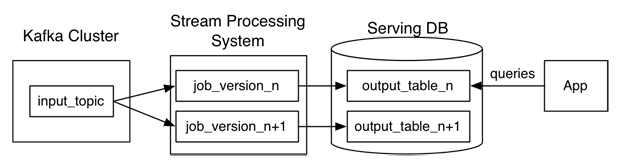

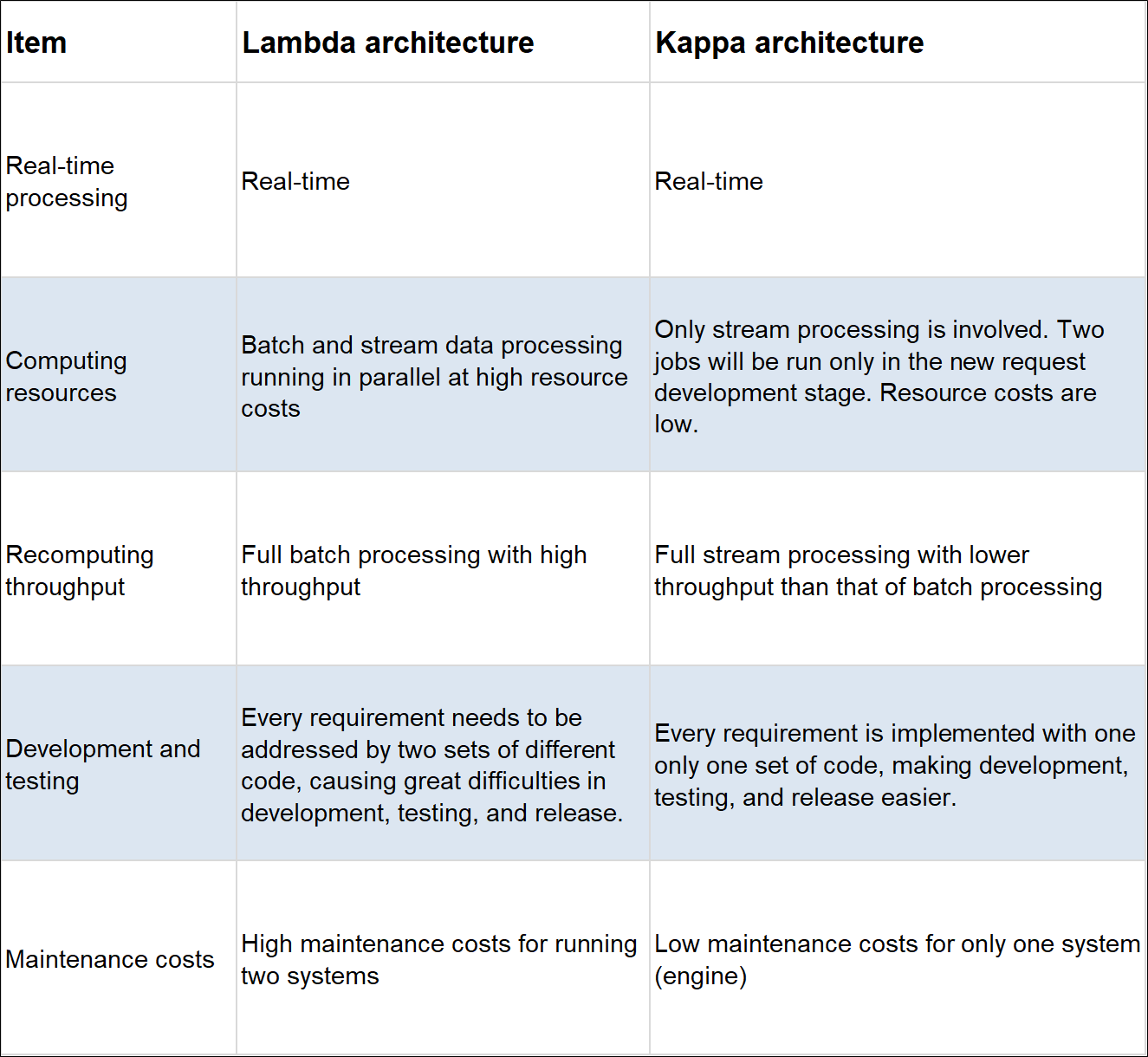

Although the Lambda architecture meets the real-time requirements, it requires more development and maintenance effort because the same logic is implemented twice. The Lambda architecture arose when stream processing engines were still immature: stream processing results were inaccurate and used for reference only. Later, stream processing engines such as Flink were developed and made stream processing results more reliable. To solve the problem of maintaining two sets of code, Jay Kreps of LinkedIn proposed the Kappa architecture.
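The core idea of Kappa, as it is commonly described, is that a single streaming code path reads from a replayable log, and that changed logic is rolled out by replaying the retained events through a new version of the same job. The sketch below models the log as a Python list; the job runner and both metric functions are hypothetical.

```python
def run_job(log, transform, start_offset=0):
    """Replay the log from start_offset and fold events into a result table."""
    table = {}
    for event in log[start_offset:]:
        key, value = transform(event)
        table[key] = table.get(key, 0) + value
    return table


# A retained, replayable event log (stands in for a message queue).
event_log = [
    {"user": "u1", "amount": 10},
    {"user": "u2", "amount": 7},
    {"user": "u1", "amount": 3},
]


def count_orders(event):
    """Version 1 of the metric: number of orders per user."""
    return event["user"], 1


def sum_amount(event):
    """Version 2 of the metric: total amount per user."""
    return event["user"], event["amount"]


# The live job and the reprocessing job share one code path (run_job);
# only the metric logic differs, and reprocessing starts from offset 0.
live_table = run_job(event_log, count_orders)
reprocessed_table = run_job(event_log, sum_amount)
```

Once the reprocessing job has caught up, readers can be switched to its output table and the old table dropped, so no separate batch codebase is needed.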

Reprocessing is the biggest concern with Kappa architecture. However, it is not complex:

1 In real-life scenarios, we rarely use a pure Lambda or Kappa architecture. In most cases we use a hybrid: for example, Kappa computes most real-time metrics, and Lambda recomputes a few key metrics (for example, money-related ones) to verify the stream computing results.

2 Many big data systems now use the intermediate results of the Kappa architecture for machine learning (offline training). Therefore, the intermediate real-time processing results need to be stored in a suitable storage engine. Sometimes we also need to query the DWD data, in which case the DWD data must be written to a suitable engine as well. For details, refer to the subsequent examples.

3 In addition, with diversified data development, it is increasingly difficult for data warehouses that define a schema in advance to meet flexible exploration and analytic requirements. Data lake technology attempts to solve that problem. In a data lake architecture, all raw data is cached in a big data storage system, which supports parsing raw data, as needed for future data analysis. Simply put, a data warehouse works in the schema-on-write mode, and a data lake works in the schema-on-read mode.
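The difference between schema-on-write and schema-on-read can be shown with a minimal sketch. The schema, field names, and storage lists are assumptions made for illustration only.

```python
import json

# Hypothetical warehouse schema, fixed in advance.
SCHEMA = {"order_id": str, "amount": float}


def write_to_warehouse(table, record):
    """Schema-on-write: validate and coerce against the schema before storing."""
    row = {}
    for field, caster in SCHEMA.items():
        if field not in record:
            raise ValueError(f"missing field: {field}")
        row[field] = caster(record[field])
    table.append(row)  # fields outside the schema are dropped here


def write_to_lake(lake, raw_line):
    """Schema-on-read: store the raw line untouched."""
    lake.append(raw_line)


def read_from_lake(lake, fields):
    """Apply a schema only at read time; absent fields come back as None."""
    for raw_line in lake:
        record = json.loads(raw_line)
        yield {field: record.get(field) for field in fields}


raw = '{"order_id": "A1", "amount": "9.5", "coupon": "X"}'

warehouse = []
write_to_warehouse(warehouse, json.loads(raw))

lake = []
write_to_lake(lake, raw)
coupon_view = list(read_from_lake(lake, ["order_id", "coupon"]))
```

The warehouse row has lost the `coupon` field because it was not in the predefined schema, whereas the lake can still answer a later question about coupons from the raw record.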

In this section, I describe the Cainiao real-time data warehouse, as shared by the Cainiao warehousing and distribution team. The section covers three aspects: overall design, data model, and data assurance.

Note: Thanks to Yuan Qiao for his generous contribution.

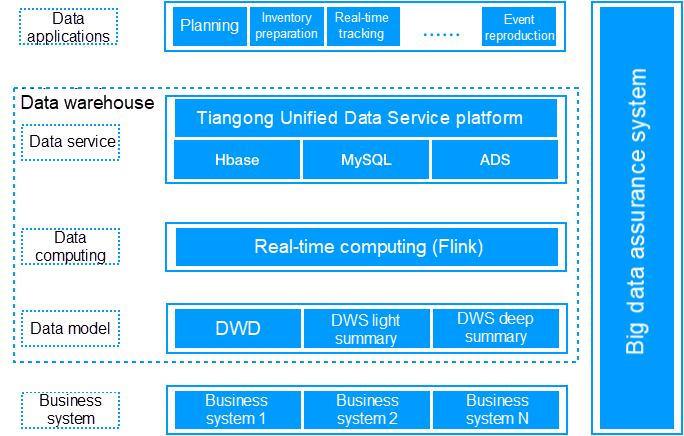

The following diagram shows the overall design of the Cainiao data warehouse. Based on business system data, Cainiao adopts the middle-layer concept in data model design to build a real-time data warehouse for product warehousing and distribution. Cainiao uses Flink, an easy-to-use real-time computing engine with excellent performance, as the primary computing engine. For data services, Cainiao uses the Tiangong data service middleware to avoid direct connections to the database. The Tiangong middleware also supports flexible configuration and can switch between primary and secondary databases in seconds. For data applications, Cainiao builds a comprehensive data system for product warehousing and distribution during big promotions, covering five dimensions: planning, inventory preparation, real-time tracking, after-sales service, and event reproduction.

To construct a real-time data warehouse for product warehousing and distribution, use the middle-layer method and avoid IT silos. This saves computation costs, simplifies usage, improves reusability, and ensures consistency. We can divide the real-time middle layer into two layers.

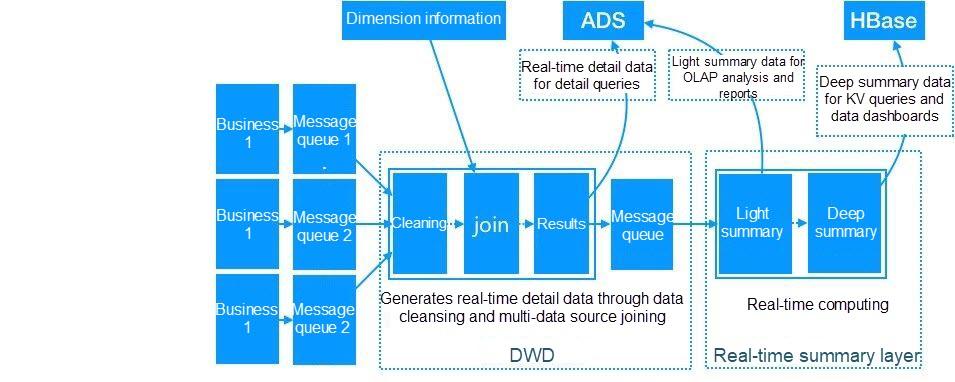

The real-time computing system subscribes to the message queues of business data. Through data cleansing, multi-source joins, streaming computation, and offline dimension processing, it joins the dimensional attributes from business systems and dimension tables at the same granularity, which simplifies data usage and improves data reusability. The result is the final real-time detail data, which is split into two branches. The first branch goes directly to ADS for real-time detail queries, and the second branch goes to message queues for downstream computing.
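The flow of the detail layer can be sketched in a few lines: clean each event, attach dimension attributes, and write the result to both branches. Plain lists stand in for ADS and the message queue, and the event fields and dimension table are hypothetical; in practice this logic would run as a Flink job.

```python
# Hypothetical dimension table keyed by warehouse id.
dim_warehouse = {
    "w1": {"city": "Hangzhou"},
    "w2": {"city": "Shanghai"},
}


def clean(event):
    """Data cleansing: drop events without an order id and normalize types."""
    if not event.get("order_id"):
        return None
    return {**event, "quantity": int(event["quantity"])}


def enrich(event):
    """Join the event with dimension attributes at the same granularity."""
    dim = dim_warehouse.get(event["warehouse_id"], {})
    return {**event, "warehouse_city": dim.get("city")}


def process(events, detail_store, downstream_queue):
    """Produce detail records and send each one to both branches."""
    for raw in events:
        event = clean(raw)
        if event is None:
            continue
        detail = enrich(event)
        detail_store.append(detail)      # branch 1: real-time detail queries
        downstream_queue.append(detail)  # branch 2: input for later computing


incoming = [
    {"order_id": "o1", "warehouse_id": "w1", "quantity": "3"},
    {"order_id": "", "warehouse_id": "w2", "quantity": "1"},
    {"order_id": "o2", "warehouse_id": "w2", "quantity": "2"},
]
detail_store, downstream_queue = [], []
process(incoming, detail_store, downstream_queue)
```

Because the dimension attributes are attached once in this layer, every downstream consumer reads the same widened record instead of repeating the join.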

The real-time summary layer is built on the concept of data domain + business domain. Unlike in the offline data warehouse, the summary layer is composed of a light summary layer and a deep summary layer, which generate output at the same time. Output of the light summary layer is written to ADS for complex OLAP queries by front-end products, such as self-service analytic tools and data report generation tools. Output of the deep summary layer is written to HBase for simple KV queries by front-end products that need high query performance, for example, the real-time dashboard.
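One way to picture the two summary layers is shown below. The interpretation is an assumption: the light summary keeps several dimensions per row so that it can still be filtered and grouped in OLAP style, while the deep summary collapses the data into ready-made values looked up by key. The row layout and key format are hypothetical.

```python
from collections import defaultdict

# Real-time detail rows (hypothetical).
detail_rows = [
    {"warehouse": "w1", "hour": 9, "quantity": 5},
    {"warehouse": "w1", "hour": 9, "quantity": 1},
    {"warehouse": "w1", "hour": 10, "quantity": 3},
    {"warehouse": "w2", "hour": 9, "quantity": 2},
]


def light_summary(rows):
    """Light summary: aggregate per (warehouse, hour), keep rows for OLAP."""
    totals = defaultdict(int)
    for row in rows:
        totals[(row["warehouse"], row["hour"])] += row["quantity"]
    return [
        {"warehouse": warehouse, "hour": hour, "quantity": quantity}
        for (warehouse, hour), quantity in sorted(totals.items())
    ]


def deep_summary(rows):
    """Deep summary: precompute final metrics, each addressed by one key."""
    kv = defaultdict(int)
    for row in rows:
        kv["quantity:total"] += row["quantity"]
        kv[f"quantity:{row['warehouse']}"] += row["quantity"]
    return dict(kv)


olap_rows = light_summary(detail_rows)  # would be written to an OLAP store
kv_store = deep_summary(detail_rows)    # would be written to a KV store
```

A dashboard tile then needs only a single key lookup such as `kv_store["quantity:total"]`, while an analyst can still group the light summary rows by hour or by warehouse.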

Note:

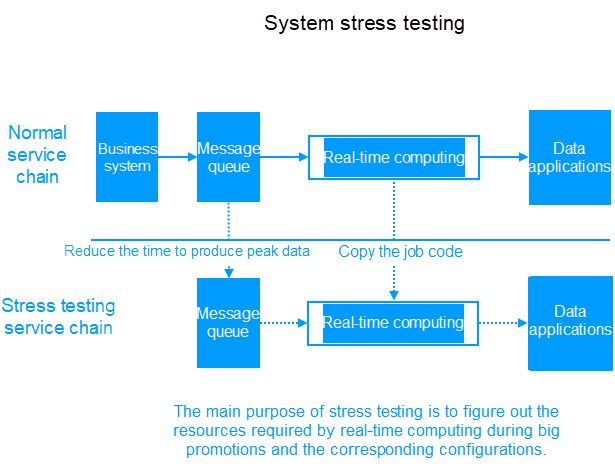

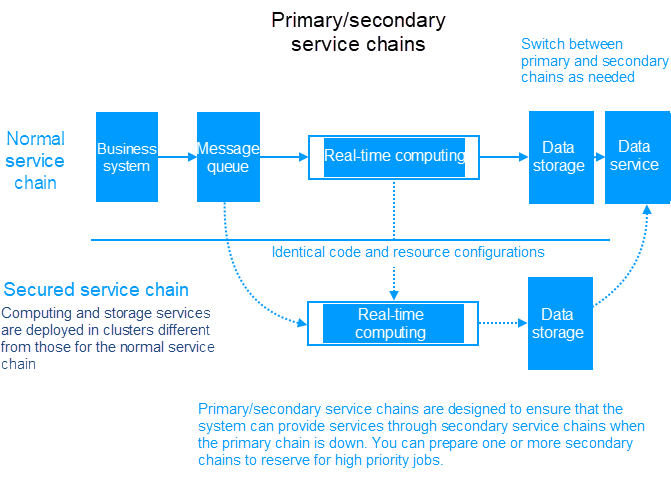

Alibaba organizes a variety of big promotions, such as the 11.11 Global Shopping Festival. Traffic and data volume increase dramatically during these promotions. Because it processes data in real time, a real-time data warehouse is more sensitive to data volume and has higher stability requirements than an offline system. To meet these requirements, two preparations are required:

After reading the previous sections and taking a closer look at the Cainiao data warehouse, you may want to know the differences and similarities between a real-time and offline data warehouse.

Note: JOIN of real-time stream data in real-time data warehouses has hidden time semantics.
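One reading of this note is that a stream join can only match events that are close enough in time, because the engine keeps join state for a limited period, whereas an offline join sees the complete tables. The sketch below imitates that with an interval condition; the function, event fields, and timestamps are hypothetical and do not use any real engine's API.

```python
def interval_join(left, right, max_gap):
    """Join events by key when their timestamps differ by at most max_gap."""
    results = []
    for left_event in left:
        for right_event in right:
            same_key = left_event["key"] == right_event["key"]
            close_in_time = abs(left_event["ts"] - right_event["ts"]) <= max_gap
            if same_key and close_in_time:
                results.append(
                    (left_event["key"], left_event["ts"], right_event["ts"])
                )
    return results


# Order o2 is paid long after it was created.
orders = [{"key": "o1", "ts": 100}, {"key": "o2", "ts": 105}]
payments = [{"key": "o1", "ts": 130}, {"key": "o2", "ts": 400}]

streaming_like = interval_join(orders, payments, max_gap=60)
offline_like = interval_join(orders, payments, max_gap=float("inf"))
```

With a 60-second bound the late payment for `o2` finds no partner, while the unbounded (offline-style) join matches both orders, so the same JOIN can return different results in the two kinds of warehouse.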
