By Fu Dian

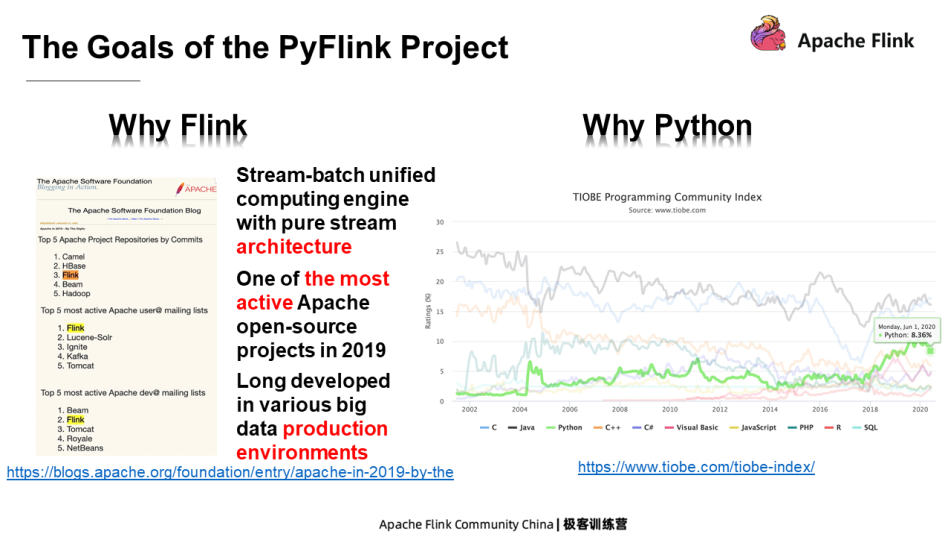

PyFlink is a sub-module of Flink and part of the overall Flink project. It provides Python language support for Flink. Python is one of the most important development languages in fields such as machine learning and data analysis, so the PyFlink project was launched to meet the needs of more users and broaden the Flink ecosystem.

The PyFlink project has two main goals. The first goal is to export the computing capabilities of Flink to Python users. That is, to provide a series of Python APIs in Flink for users who are familiar with the Python language to develop Flink jobs.

The second goal is to build a distributed Python ecosystem on top of Flink. Although Flink provides a series of Python APIs, users still need time to learn them and to understand how each one is used. The hope, therefore, is that users can keep using the APIs of the Python libraries they already know at the API layer, with Flink as the underlying computing engine, which reduces their learning costs. This is future work and is currently at an early stage.

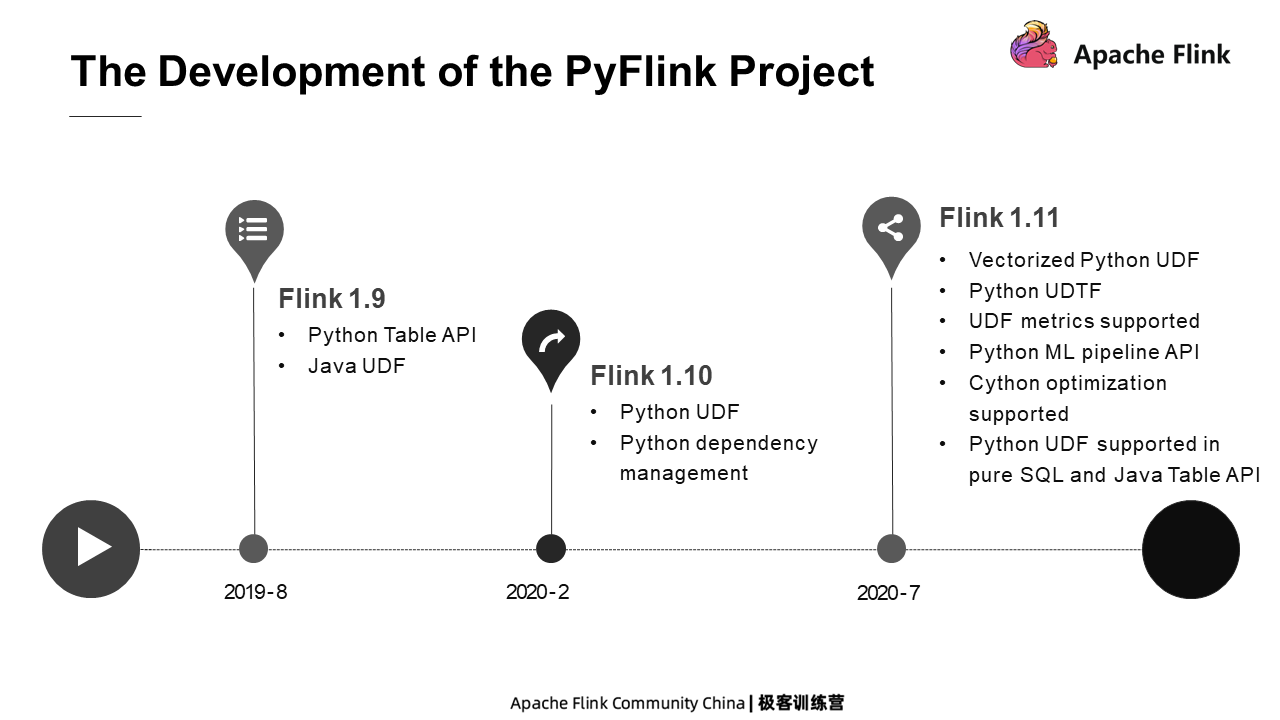

The following figure shows the development of the PyFlink project. Three versions have been released so far, and each one has added support for more features.

This section mainly introduces the following features, including Python Table API, Python UDF, vectorized Python UDF, Python UDF Metrics, PyFlink dependency management, and Python UDF execution optimization.

Python Table API allows users to develop Flink jobs in Python. Flink provides three types of APIs: ProcessFunction, DataStream API, and Table API. The first two are relatively low-level APIs. The logic of jobs developed with ProcessFunction and DataStream API is executed strictly according to the user-defined behavior. Table API, by contrast, is a higher-level API. For jobs developed with Table API, the logic is executed after a series of optimizations.

As its name implies, Python Table API provides Python language support for Table API.

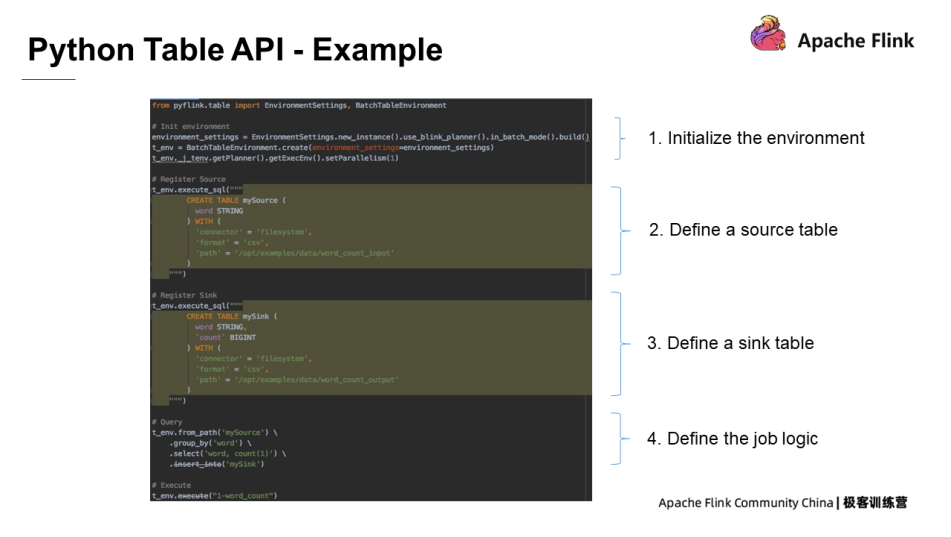

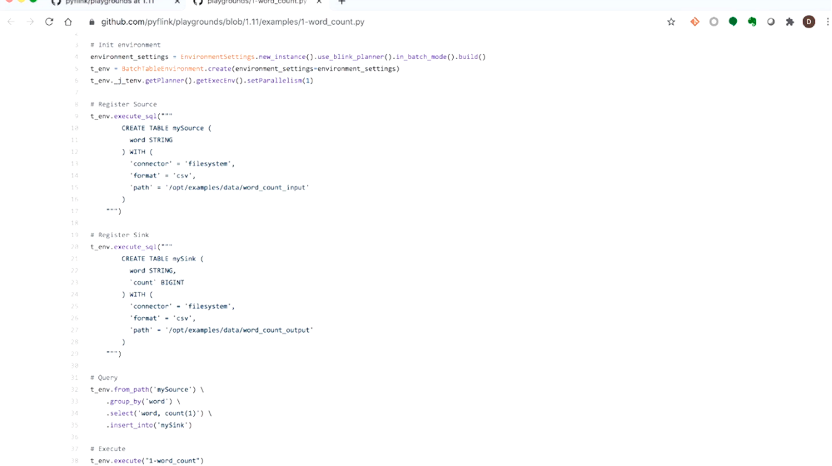

The following is a Flink job developed with Python Table API. The job reads a file, computes the word count, and writes the results to a file. It looks simple but includes all the basic steps for developing a Python Table API job.

The first step is to define the execution mode of the job (batch or stream mode), the parallelism, and the job configuration. The next step is to define the source table and the sink table. The source table defines where the job's input data comes from and what its format is. The sink table defines where the job's results are written and in what format. The final step is to define the execution logic of the job, which in this example is computing the word count.
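The three steps above can be sketched roughly as follows. This is a minimal sketch rather than the job shown in the figure: it assumes a recent PyFlink release (1.15 or later, where `TableEnvironment.create` and `TableConfig.set` are available), and the table names, column names, and `/tmp` paths are hypothetical. The PyFlink import is kept inside the function so that the table definitions can be read and checked without a Flink installation.

```python
# Step 2: source and sink tables, declared with SQL DDL.
# Table names, columns, and paths are hypothetical.
SOURCE_DDL = """
    CREATE TABLE source_table (
        word STRING
    ) WITH (
        'connector' = 'filesystem',
        'path' = '/tmp/input',
        'format' = 'csv'
    )
"""

SINK_DDL = """
    CREATE TABLE sink_table (
        word STRING,
        cnt BIGINT
    ) WITH (
        'connector' = 'filesystem',
        'path' = '/tmp/output',
        'format' = 'csv'
    )
"""

# Step 3: the job logic, a grouped count written to the sink.
WORD_COUNT_SQL = """
    INSERT INTO sink_table
    SELECT word, COUNT(*) AS cnt
    FROM source_table
    GROUP BY word
"""


def run_word_count():
    # Imported lazily so this module loads without PyFlink installed.
    from pyflink.table import EnvironmentSettings, TableEnvironment

    # Step 1: execution mode (batch) and job configuration (parallelism 1).
    t_env = TableEnvironment.create(EnvironmentSettings.in_batch_mode())
    t_env.get_config().set("parallelism.default", "1")

    t_env.execute_sql(SOURCE_DDL)
    t_env.execute_sql(SINK_DDL)
    # execute_sql submits the INSERT job; wait() blocks until it finishes.
    t_env.execute_sql(WORD_COUNT_SQL).wait()
```

Calling `run_word_count()` requires a working PyFlink installation and an input file at the assumed path.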

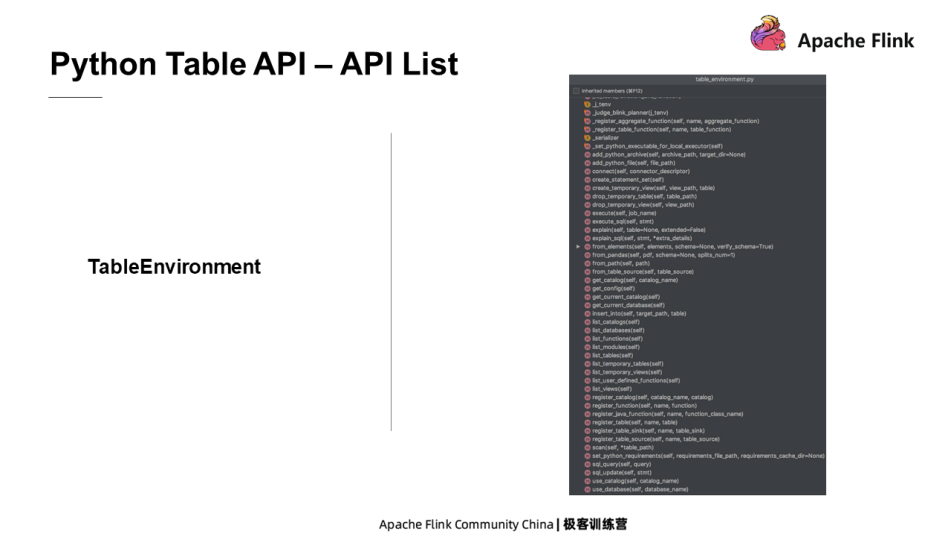

The following figure shows part of the Python Table API, which offers a large number of methods covering a wide range of functionality.

Python Table API is a relational API that is functionally similar to SQL. Custom functions are very important in SQL because they extend the range of scenarios in which SQL can be used. Likewise, Python UDFs let users write custom functions in Python to extend the scenarios in which Python Table API can be used. In addition to Python Table API jobs, Python UDFs can also be used in Java Table API jobs and SQL jobs.

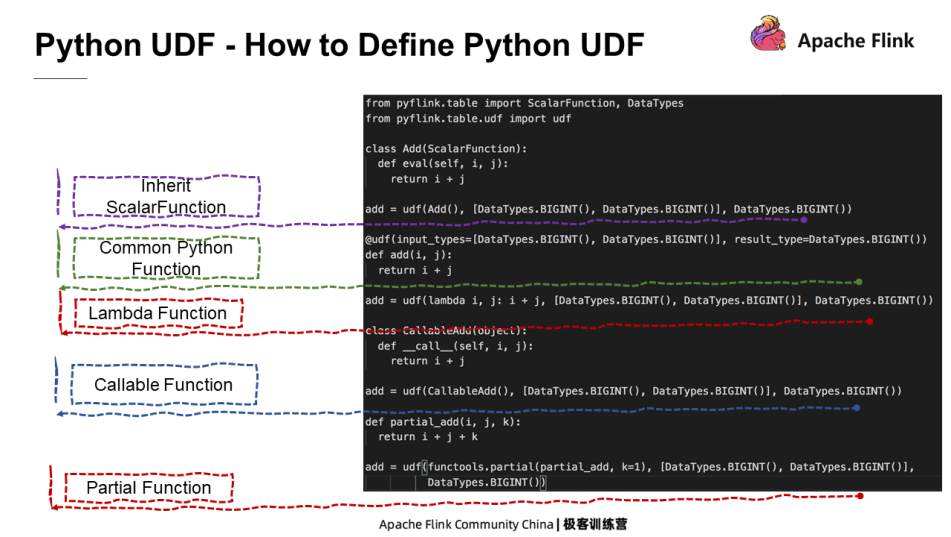

There are multiple ways to define Python UDFs in PyFlink. Users can define a Python class that inherits ScalarFunction, or use a common Python function or a lambda function to implement the logic of a UDF. A Python UDF can also be defined from a callable object or a partial function. Users can choose whichever way best fits their needs.

There are also multiple ways to use Python UDFs in PyFlink, including Python Table API, Java Table API, and SQL, which are introduced in detail in the following part.
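The five forms listed above might look like the following. This is an illustrative sketch, not the code from the figure: the names `Add`, `CallableAdd`, and `add_with_offset` are made up for the example, and the fallback `ScalarFunction` class is only a stand-in so that the definitions can be run without PyFlink installed. The `wrap_as_udfs` helper assumes PyFlink is available and uses its `udf` function with a BIGINT result type.

```python
import functools

try:
    from pyflink.table.udf import ScalarFunction
except ImportError:
    # Stand-in so the sketch runs without PyFlink; not the real base class.
    class ScalarFunction:
        pass


# 1. A class that inherits ScalarFunction and implements eval().
class Add(ScalarFunction):
    def eval(self, i, j):
        return i + j


# 2. A common Python function.
def add(i, j):
    return i + j


# 3. A lambda function.
add_lambda = lambda i, j: i + j


# 4. A callable object (an instance of a class that defines __call__).
class CallableAdd:
    def __call__(self, i, j):
        return i + j


# 5. A partial function: k is bound in advance, leaving two inputs.
def add_with_offset(i, j, k):
    return i + j + k


add_partial = functools.partial(add_with_offset, k=1)


def wrap_as_udfs():
    """Wrap each definition as a PyFlink UDF (requires PyFlink)."""
    from pyflink.table import DataTypes
    from pyflink.table.udf import udf

    candidates = [Add(), add, add_lambda, CallableAdd(), add_partial]
    return [udf(f, result_type=DataTypes.BIGINT()) for f in candidates]
```

The first four forms compute the same sum; the partial form adds the pre-bound `k` on top.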

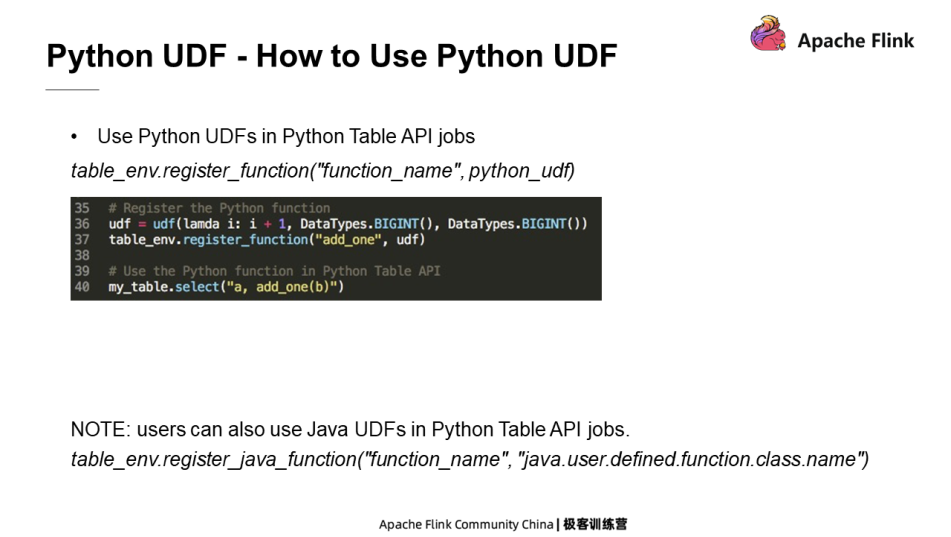

To use a Python UDF in Python Table API, define it first and then register it. Users call the registration method on the table environment and give the UDF a name. After that, the Python UDF can be referenced by this name in the job.
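A minimal sketch of this define, register, and use sequence is shown below. It assumes a PyFlink release that provides `create_temporary_function` on the table environment (earlier releases exposed a differently named registration method), and the function name `py_add`, the table `my_table`, and its columns `a` and `b` are hypothetical.

```python
def add_impl(i, j):
    # The UDF logic itself is ordinary Python.
    return i + j


def register_and_query(t_env):
    """Register add_impl under the name 'py_add' and use it by that name.

    t_env is an existing PyFlink TableEnvironment; my_table with BIGINT
    columns a and b is a hypothetical table assumed to be registered.
    """
    # Imported lazily so this module loads without PyFlink installed.
    from pyflink.table import DataTypes
    from pyflink.table.udf import udf

    py_add = udf(add_impl, result_type=DataTypes.BIGINT())
    t_env.create_temporary_function("py_add", py_add)
    # From here on, the UDF is referenced by its registered name.
    return t_env.sql_query("SELECT py_add(a, b) FROM my_table")
```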

Python UDFs are used in a similar way in the Java Table API but registered differently. In Java Table API jobs, DDL statements are needed for registration.

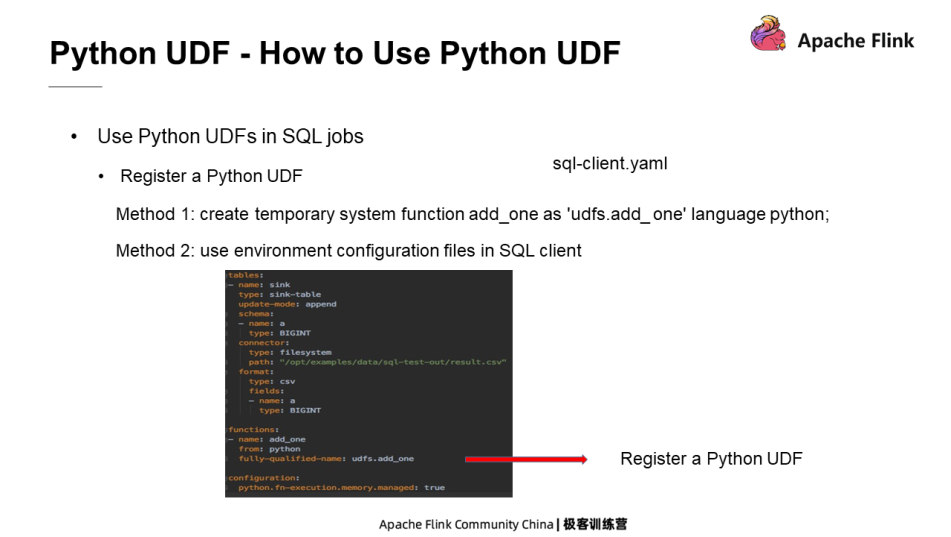

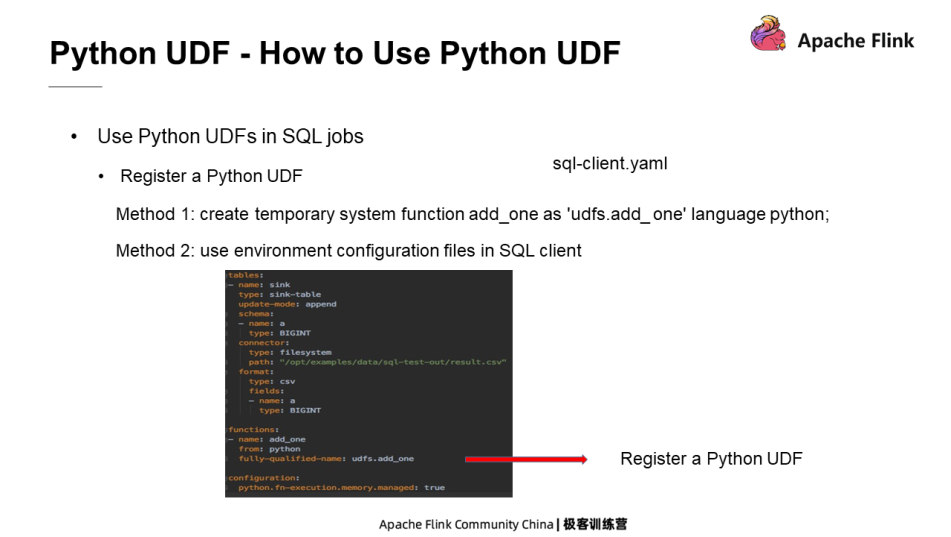

Users can also use Python UDFs in SQL jobs. As before, the Python UDF must be registered first. This can be done with DDL statements in an SQL script or in the environment configuration file of the SQL Client.

This section describes the execution architecture of the Python UDF. Flink is written in Java and runs in the Java virtual machine (JVM), while the Python UDF runs in the Python VM. Therefore, data must be exchanged between the Java process and the Python process. State, logs, and metrics also need to be transmitted between the two processes, so four types of transmission protocols need to be supported.
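For the Java Table API and SQL cases, the registration DDL has roughly the following shape. The helper below only builds the statement as a string; the module name `my_udfs` is hypothetical, and the Python file that contains the UDF still has to be made available to the job separately (for example through the job's Python file dependencies).

```python
def python_udf_ddl(sql_name, python_path):
    """Build the DDL that registers a Python UDF under sql_name.

    python_path has the form '<module>.<object>', for example
    'my_udfs.add1' (a hypothetical module used for illustration).
    """
    return (
        f"CREATE TEMPORARY SYSTEM FUNCTION {sql_name} "
        f"AS '{python_path}' LANGUAGE PYTHON"
    )


ddl = python_udf_ddl("add1", "my_udfs.add1")
print(ddl)
```

The resulting statement can be passed to the table environment's SQL execution method in a Java job or placed in an SQL script.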

Vectorized Python UDFs are designed to enable Python users to develop high-performance Python UDFs by using Python libraries commonly used in data analysis, such as Pandas and NumPy.

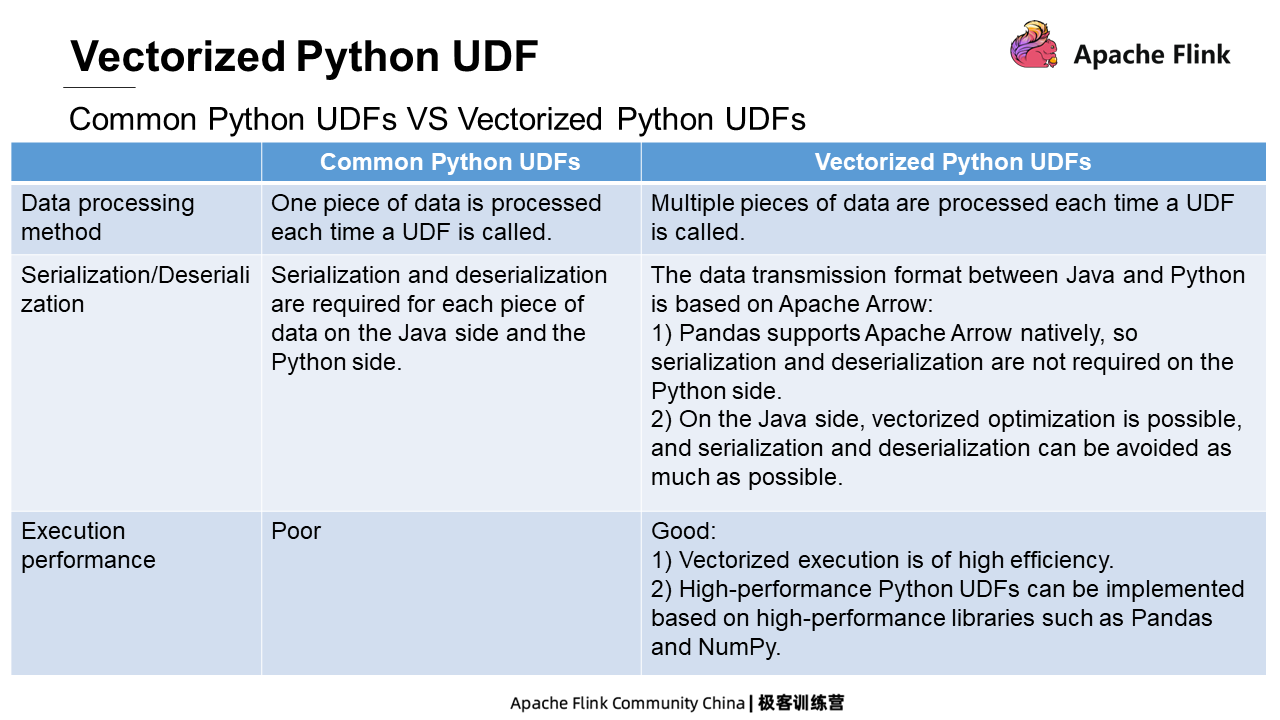

What "vectorized" means becomes clear when the vectorized Python UDF is compared with the common Python UDF. The differences between them are shown in the following figure.

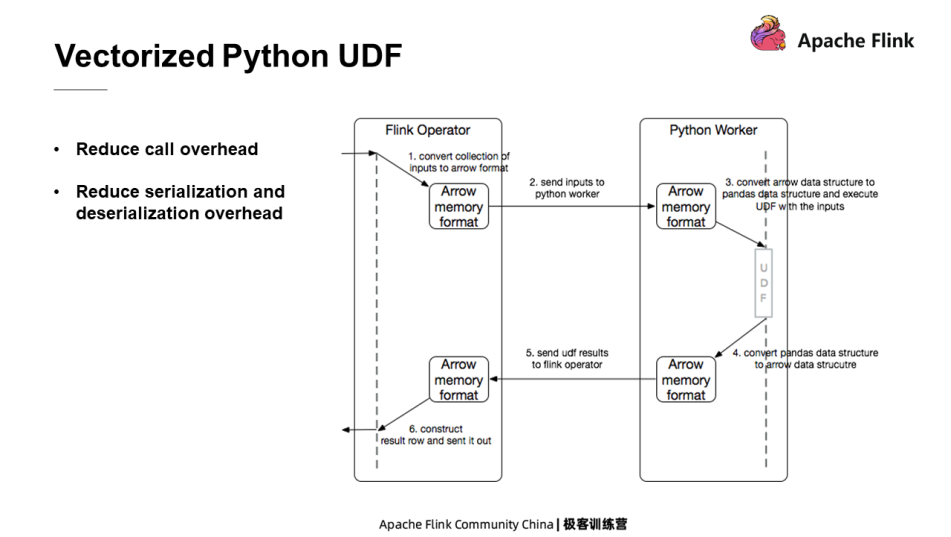

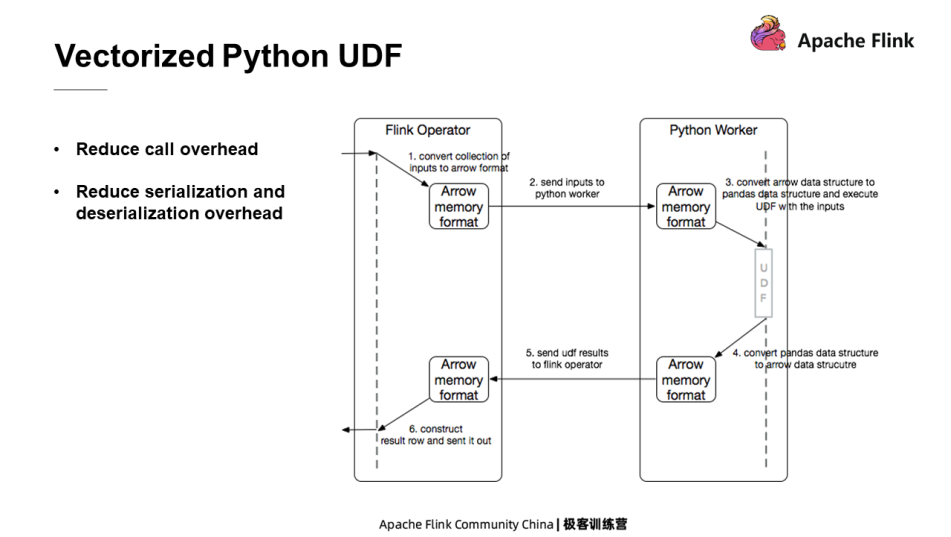

The following figure shows the execution of the vectorized Python UDF. The Java side buffers multiple records, converts them into the Arrow format, and sends them to the Python process. After receiving the data, the Python process converts it into the Pandas data structure and calls the custom vectorized Python UDF. The execution result of the vectorized Python UDF is then converted back into the Arrow format and sent to the Java process.

The vectorized Python UDF is used in much the same way as the common Python UDF, with two differences. First, a UDF type must be added to declare that the UDF is vectorized. Second, the UDF input and output types are Pandas Series.
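The difference between the two execution styles can be illustrated with a toy model in plain Python, where lists stand in for Pandas Series and a counter records how often each function is invoked. The `declare_vectorized_add` helper sketches the actual declaration; it assumes a PyFlink release in which the UDF type is passed as `func_type="pandas"` (older releases used a different parameter name for the same purpose).

```python
calls = {"row": 0, "batch": 0}


def add_row(i, j):
    # Common UDF: invoked once per record with scalar inputs.
    calls["row"] += 1
    return i + j


def add_batch(i_col, j_col):
    # Vectorized UDF: invoked once per batch with whole columns.
    # Lists stand in for pandas.Series in this toy model.
    calls["batch"] += 1
    return [i + j for i, j in zip(i_col, j_col)]


rows = [(1, 2), (3, 4), (5, 6)]

row_results = [add_row(i, j) for i, j in rows]

i_col, j_col = zip(*rows)  # transpose the rows into two columns
batch_results = add_batch(list(i_col), list(j_col))


def declare_vectorized_add():
    """Declare a vectorized Python UDF (requires PyFlink and pandas)."""
    from pyflink.table import DataTypes
    from pyflink.table.udf import udf

    @udf(result_type=DataTypes.BIGINT(), func_type="pandas")
    def add(i, j):
        # i and j arrive as pandas.Series; the result is a Series too.
        return i + j

    return add
```

Both styles produce the same results for the three rows, but the row-at-a-time function is invoked three times and the batch function only once.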

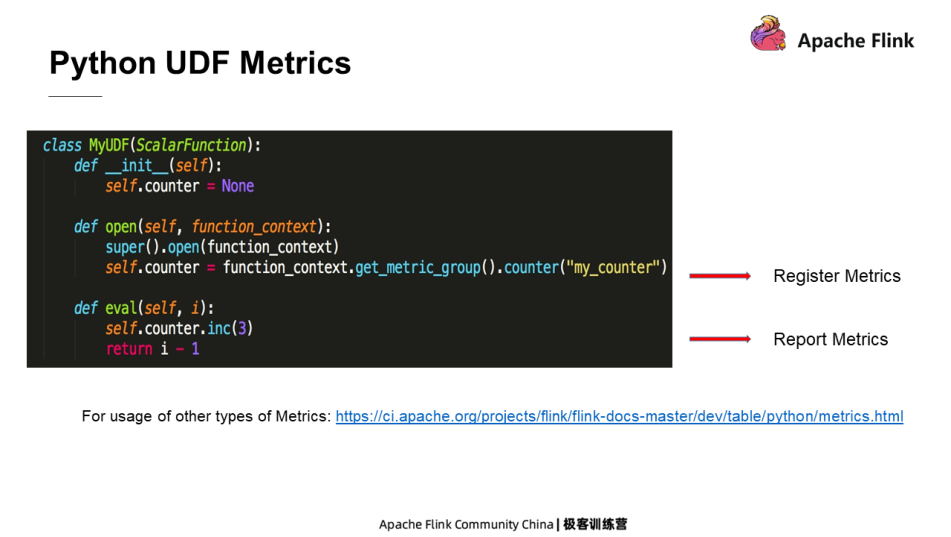

As mentioned earlier, a Python UDF can be defined in multiple ways. However, to use Metrics in a Python UDF, the UDF must be defined as a class that inherits ScalarFunction. The open method of the Python UDF receives a Function Context parameter, through which users can register Metrics. The registered Metrics object can then be used for reporting.
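A sketch of a UDF that registers and updates a counter is shown below. The class name `CountingAdd` and the metric name `rows_added` are made up for the example, and the fallback `ScalarFunction` is only a stand-in so that the class can be exercised without PyFlink installed.

```python
try:
    from pyflink.table.udf import ScalarFunction
except ImportError:
    # Stand-in so the sketch runs without PyFlink; not the real base class.
    class ScalarFunction:
        def open(self, function_context):
            pass


class CountingAdd(ScalarFunction):
    def __init__(self):
        self.counter = None

    def open(self, function_context):
        # Register a counter on the metric group exposed by the context.
        metric_group = function_context.get_metric_group()
        self.counter = metric_group.counter("rows_added")

    def eval(self, i, j):
        # Report through the registered metric on every invocation.
        self.counter.inc()
        return i + j
```

The counter is created once in `open` and reused in `eval`, so no registration work happens per record.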

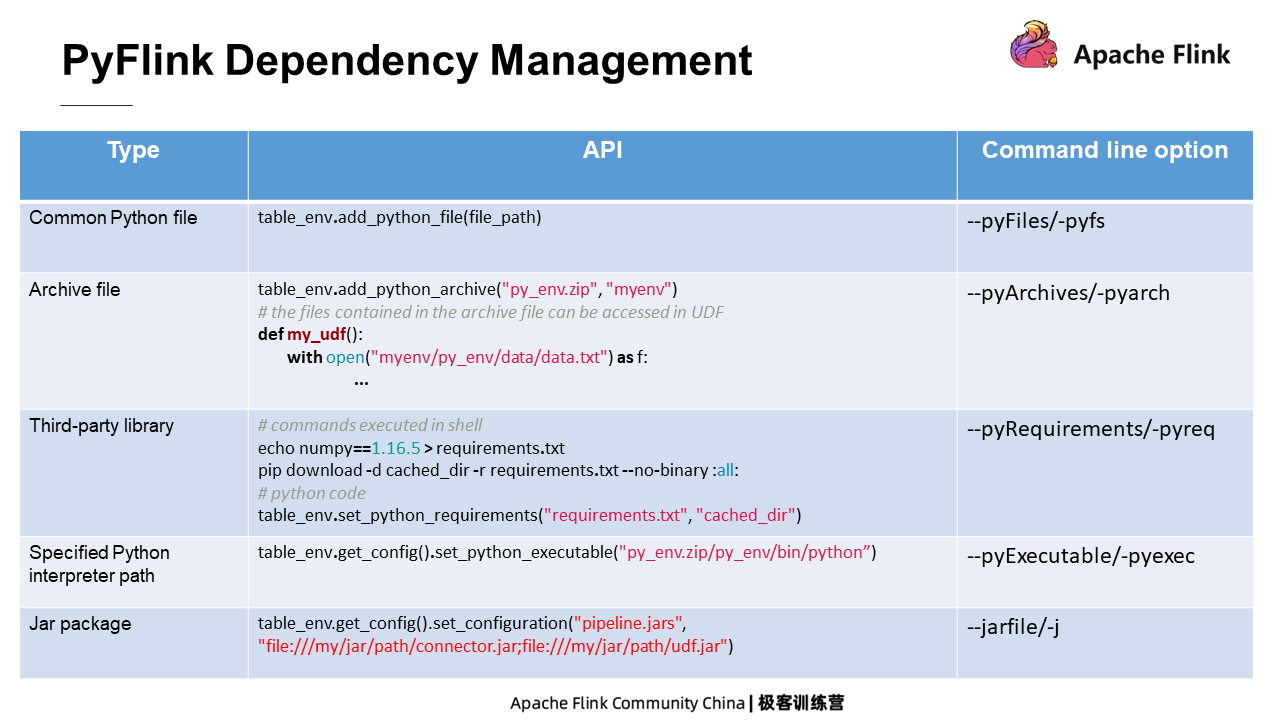

PyFlink dependencies fall mainly into the following types: common Python files, archive files, third-party libraries, the Python interpreter, and Java JAR packages. For each type of dependency, PyFlink provides two solutions: an API and a command line option. Users can choose either of them.

Execution optimization focuses mainly on the execution plan and the runtime. As with SQL jobs, for a job that contains a Python UDF, the optimizer first generates the optimal execution plan according to predefined rules. Once the execution plan is determined, other optimization methods can be applied to make execution as efficient as possible.
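Using the API route, the five dependency types might be configured as in the sketch below. All paths are placeholders, and the use of `TableConfig.set` assumes PyFlink 1.15 or later. The command line route exposes corresponding options when submitting a job, such as `-pyfs`, `-pyarch`, `-pyreq`, and `-pyexec`.

```python
def configure_dependencies(t_env):
    """Attach each kind of dependency to an existing TableEnvironment.

    Every path below is a placeholder chosen for illustration.
    """
    # Common Python file (for example, a module that defines UDFs).
    t_env.add_python_file("/path/to/my_udfs.py")

    # Archive file, extracted into a directory named "data" on the workers.
    t_env.add_python_archive("/path/to/data.zip", "data")

    # Third-party libraries, listed in a requirements file.
    t_env.set_python_requirements("/path/to/requirements.txt")

    # Python interpreter used to run the Python UDF workers.
    t_env.get_config().set_python_executable("/usr/bin/python3")

    # Java JAR package (for example, a connector).
    t_env.get_config().set("pipeline.jars", "file:///path/to/connector.jar")
```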

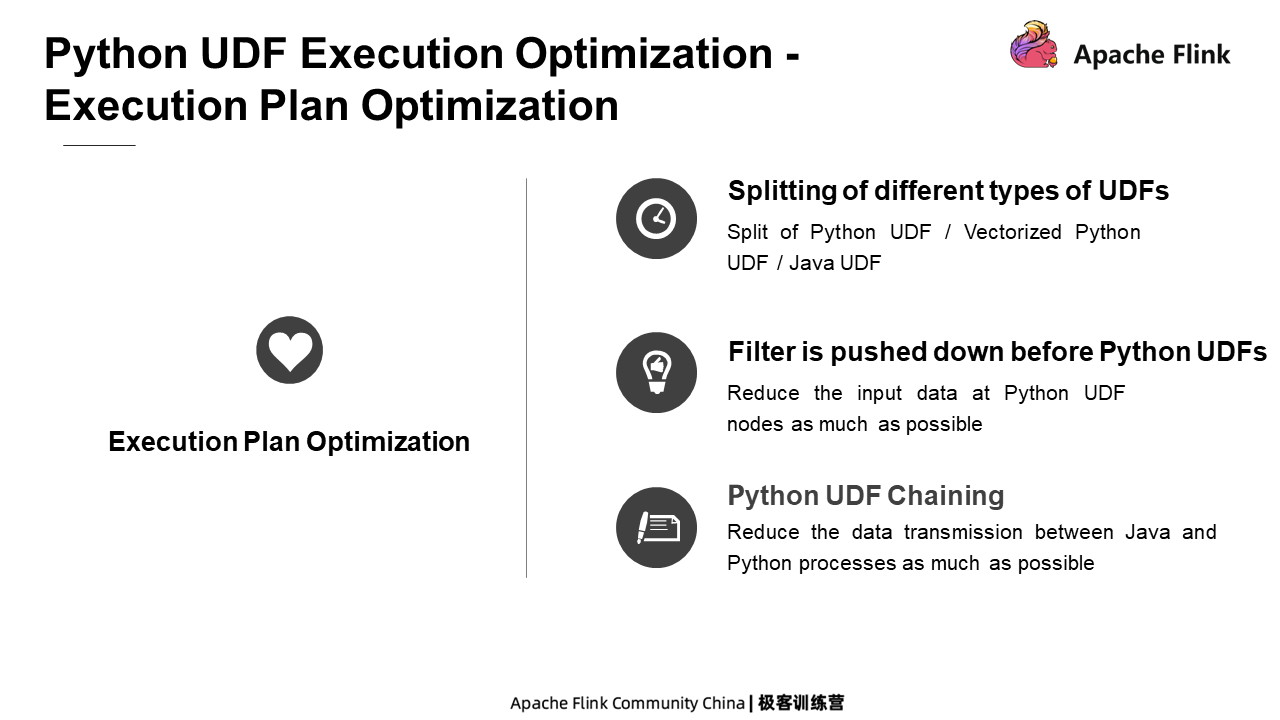

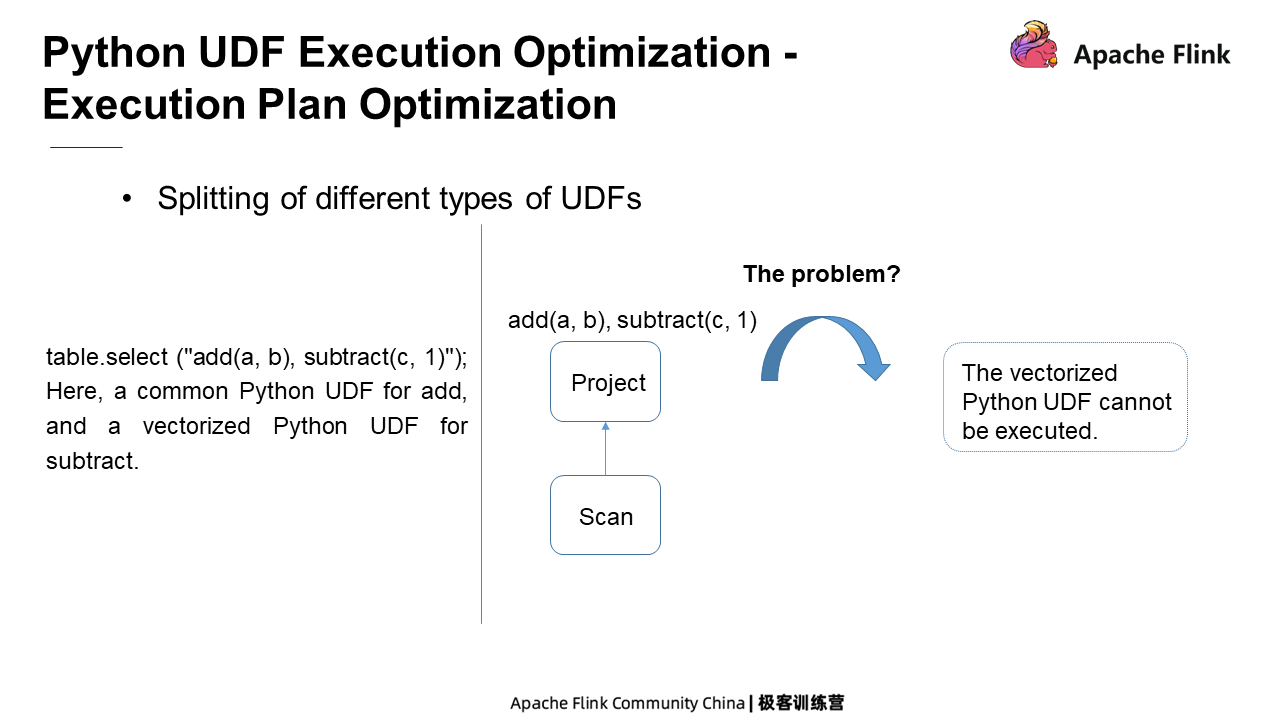

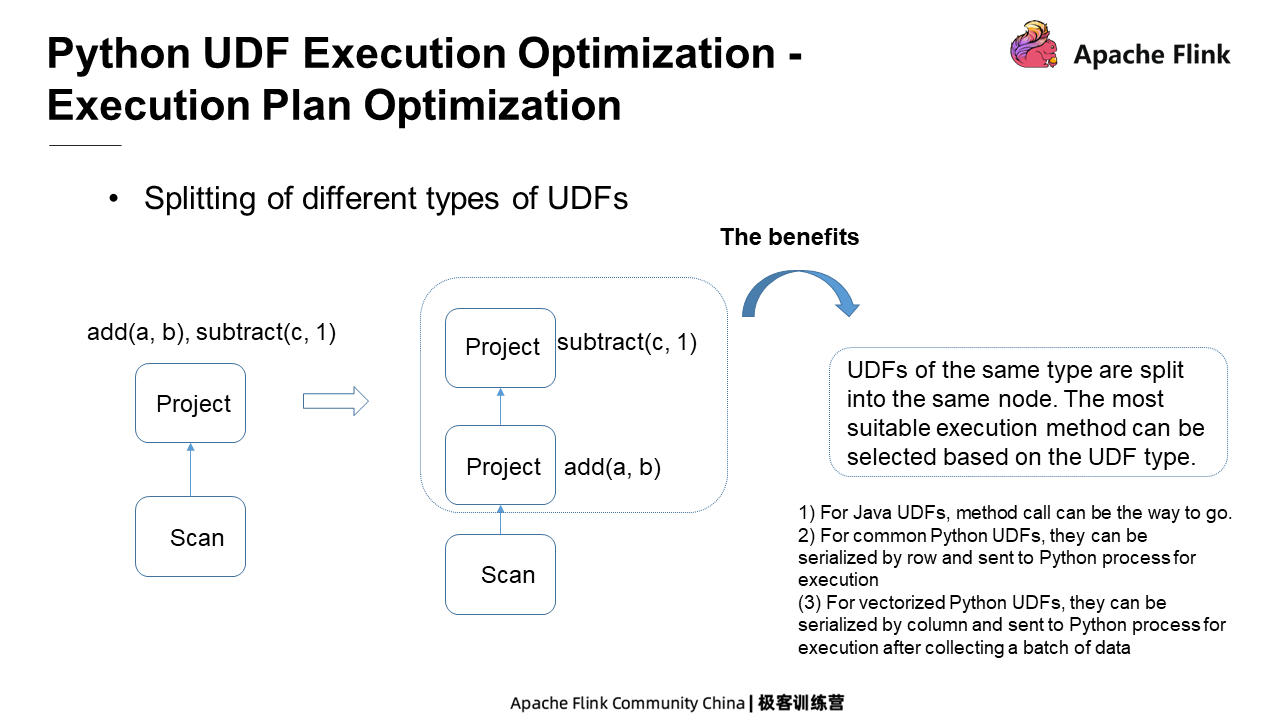

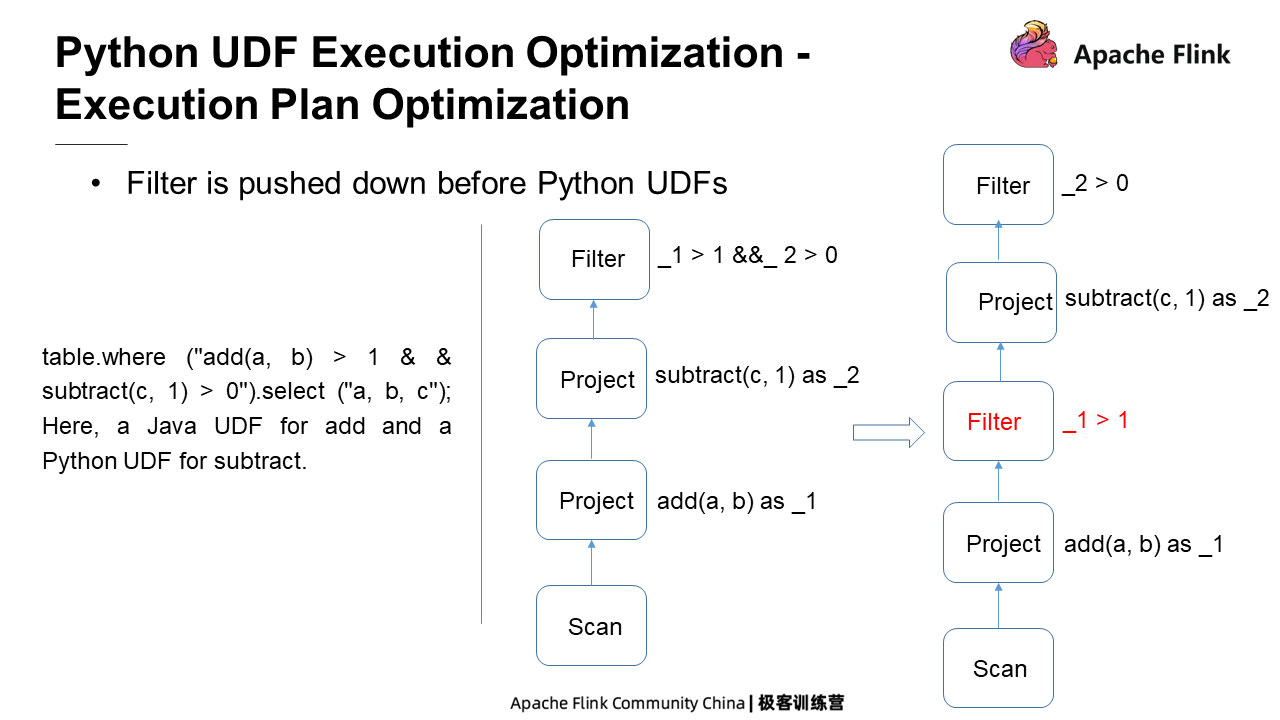

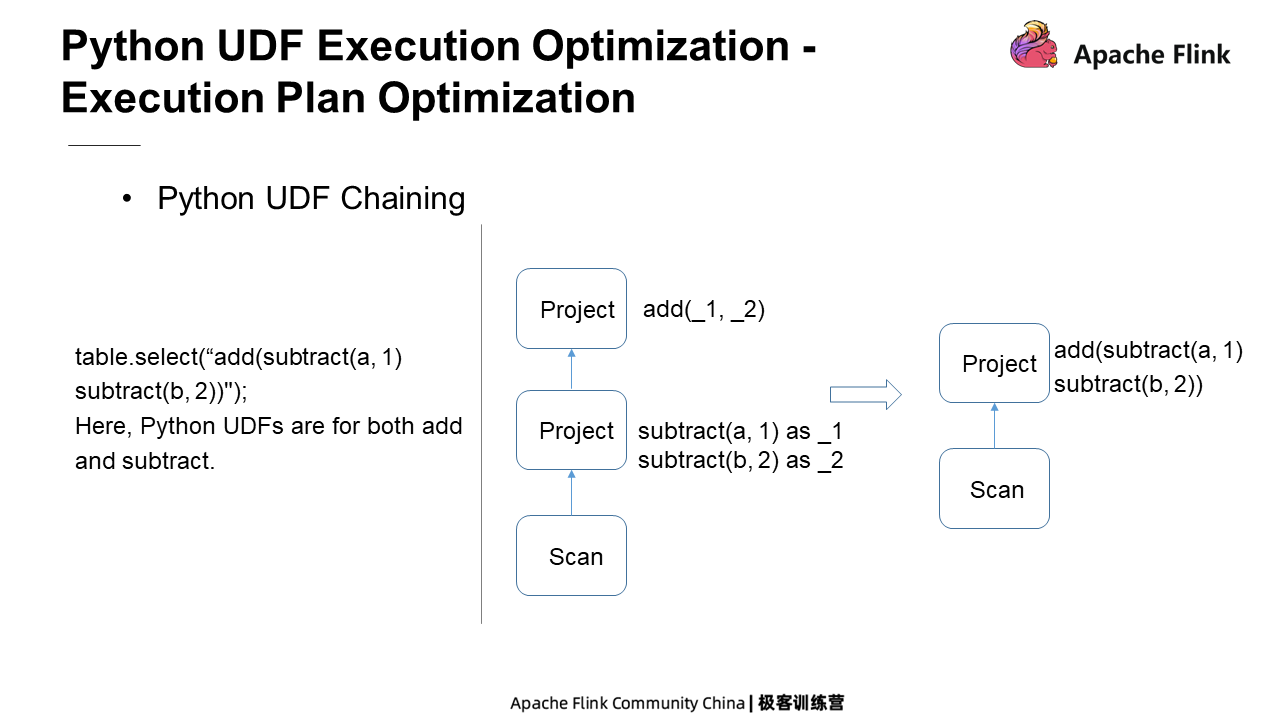

The execution plan is optimized mainly in three ways. The first is splitting UDFs of different types. A node may contain different types of UDFs at the same time, which cannot be executed together. The second is Filter pushdown. It aims to reduce the amount of input data in nodes that contain Python UDFs as much as possible, thereby improving the execution performance of the entire job. The third is Python UDF Chaining. Communication between the Java and Python processes, together with the associated serialization and deserialization, causes high overhead. Python UDF Chaining minimizes this communication overhead.

Suppose that a job contains two UDFs: add, a common Python UDF, and subtract, a vectorized Python UDF. By default, the execution plan of this job has a single project node that contains both UDFs. This execution plan is not viable because the common Python UDF processes one record at a time, while the vectorized Python UDF processes multiple records at a time.

However, the project node can be split into two project nodes. The first one contains only common Python UDFs, while the second contains only vectorized Python UDFs. Different types of Python UDFs are split into different nodes, so each node contains only one type of UDF. The operator can then choose the most suitable execution mode based on the type of UDF it contains.
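The idea of the split can be illustrated with a toy model in plain Python. This is not Flink's optimizer rule: the node representation and the kind labels `general` and `pandas` are assumptions made for the example, and data dependencies between UDFs are ignored.

```python
def split_project_node(udfs):
    """Split one project node into nodes that each hold one UDF kind.

    udfs is a list of (name, kind) pairs, where kind is 'general' for a
    common Python UDF or 'pandas' for a vectorized one.
    """
    by_kind = {}
    for name, kind in udfs:
        by_kind.setdefault(kind, []).append(name)
    # One node per kind, in order of first appearance.
    return [{"kind": kind, "udfs": names} for kind, names in by_kind.items()]


plan = split_project_node([("add", "general"), ("subtract", "pandas")])
print(plan)
```

Each resulting node holds a single kind of UDF, so an operator built from it can run entirely in row-at-a-time mode or entirely in batch mode.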

Filter pushdown aims to reduce the data volume of the Python UDF node as much as possible by pushing filtering operators down to before the Python UDF node. Suppose that the original execution plan of a job contains two project nodes, add and subtract, as well as a Filter node. This execution plan is runnable but needs optimization. Because the Python UDF node sits before the Filter node, the Python UDF is evaluated on every row, including rows that the filter later discards. If the Filter node is pushed down to before the Python UDF node, the input data of the Python UDF node can be greatly reduced.

Suppose that a job contains two UDFs, add and subtract, which are both common Python UDFs. Its execution plan contains two project nodes. The first project node computes subtract and passes the result to the second project node, which computes add.

The main problem here is that subtract and add are located in two different nodes, so the result of subtract has to be sent back from the Python process to the Java process and then sent to the Python process again for the second node. This extra transfer between the Java process and the Python process causes completely unnecessary overhead for communication, serialization, and deserialization. Therefore, the execution plan can be optimized as shown in the following figure, in which the add node and the subtract node run together. After the subtract node outputs the result, the add node can be called directly.
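The effect of pushdown can be shown with a toy model that counts UDF invocations. It is only a sketch: the filter condition `a > 1` is invented for the example, and it reads only an input column. Pushdown of this kind is valid only when the filter does not depend on the output of the Python UDF.

```python
def run_plan(rows, pushdown):
    """Run project(add) plus filter(a > 1) and count UDF invocations."""
    udf_calls = 0

    def add(a, b):
        nonlocal udf_calls
        udf_calls += 1
        return a + b

    def keep(a, b):
        # The filter reads only input columns, never the UDF result.
        return a > 1

    if pushdown:
        # Filter first, so the UDF only sees the surviving rows.
        out = [add(a, b) for a, b in rows if keep(a, b)]
    else:
        # UDF first: every row is computed, then some results are dropped.
        projected = [(a, b, add(a, b)) for a, b in rows]
        out = [result for a, b, result in projected if keep(a, b)]
    return out, udf_calls


rows = [(1, 2), (2, 3), (3, 4)]
without_pushdown = run_plan(rows, pushdown=False)
with_pushdown = run_plan(rows, pushdown=True)
```

Both plans return the same rows, but the pushed-down plan calls the UDF twice instead of three times.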
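The saving from chaining can be sketched with a toy worker that counts round trips between the two processes. The `PythonWorker` class is hypothetical, and the UDFs are simplified to single-argument functions to keep the example short.

```python
class PythonWorker:
    """Toy stand-in for the Python process that executes UDFs."""

    def __init__(self):
        self.round_trips = 0

    def execute(self, funcs, value):
        # One call models one Java -> Python -> Java round trip,
        # including serialization and deserialization on both sides.
        self.round_trips += 1
        for func in funcs:
            value = func(value)
        return value


def subtract_one(x):
    return x - 1


def add_ten(x):
    return x + 10


# Unchained: two project nodes, so the intermediate result crosses
# the process boundary before add_ten runs.
unchained = PythonWorker()
intermediate = unchained.execute([subtract_one], 5)
unchained_result = unchained.execute([add_ten], intermediate)

# Chained: both UDFs run inside a single visit to the Python process.
chained = PythonWorker()
chained_result = chained.execute([subtract_one, add_ten], 5)
```

Both plans compute the same value, while the chained plan needs one round trip instead of two.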

Currently, there are three methods to improve the runtime execution efficiency of Python UDFs. The first one is to improve the execution efficiency of Python code by Cython optimization. The second one is to customize the serializer and deserializer between Java and Python processes to improve serialization and deserialization efficiency. The third one is to implement vectorized Python UDFs.

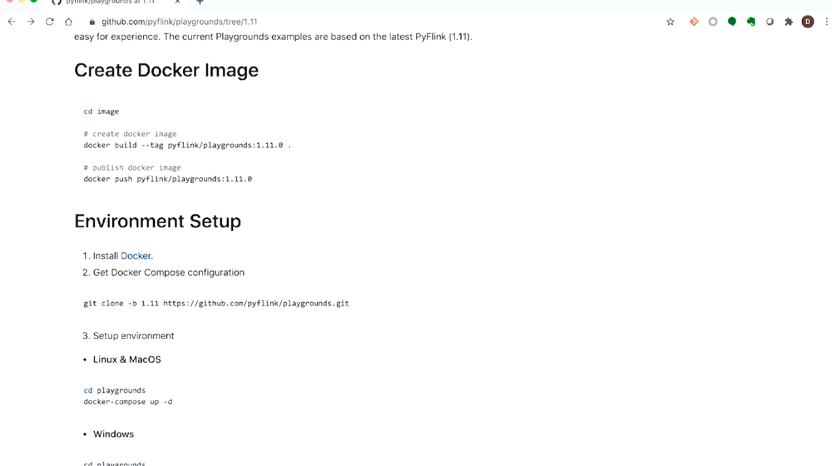

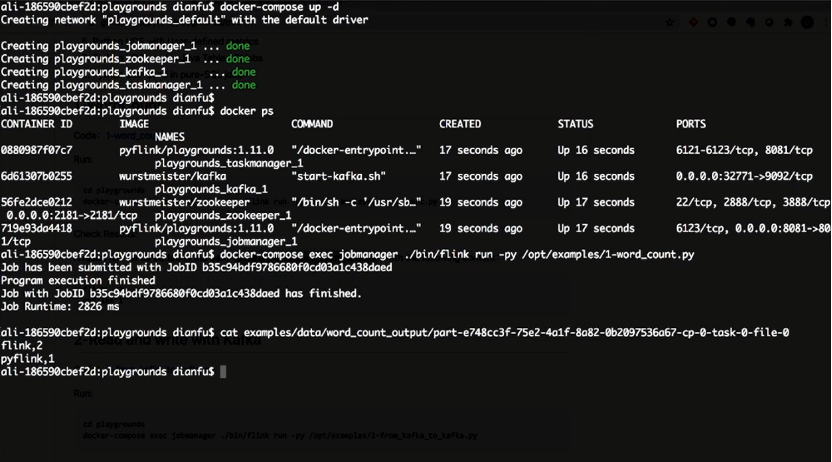

First, please go to this page, which provides some PyFlink demos. These demos run in Docker, so please install Docker before running them.

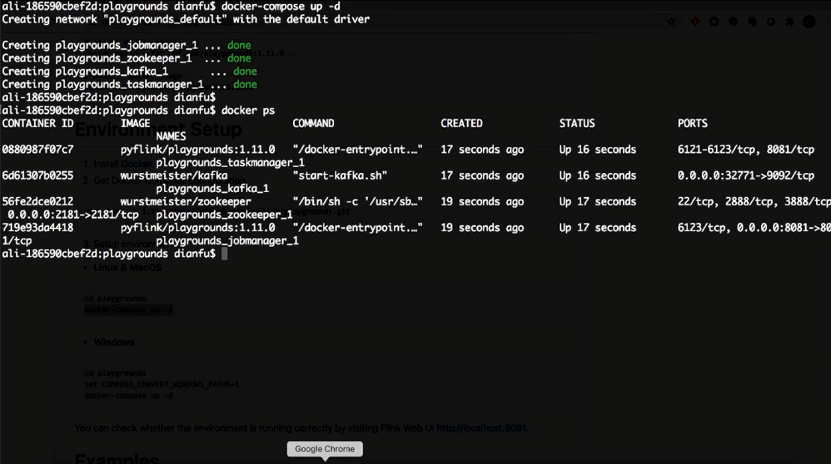

Then, run the command to start a PyFlink cluster. All the example PyFlink jobs run later will be submitted to the cluster for execution.

The first example job is the word count. First, define the environment, source, and sink in it. Then, run the job.

It can be seen from the following execution result that the word Flink appears twice and the word PyFlink appears once.

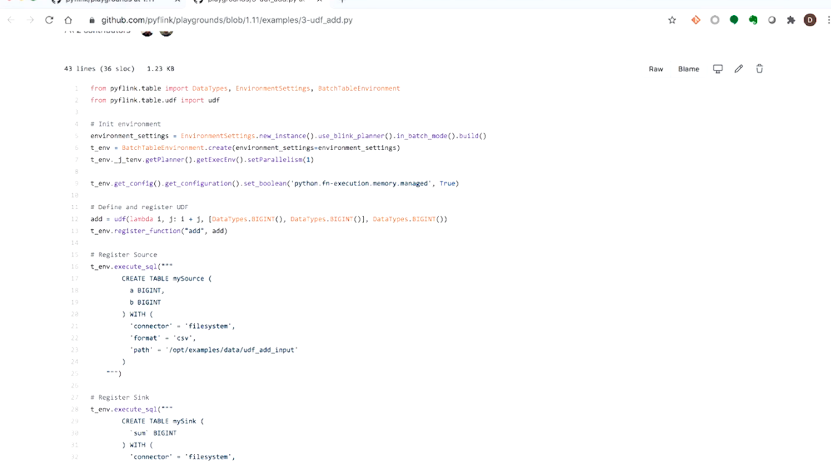

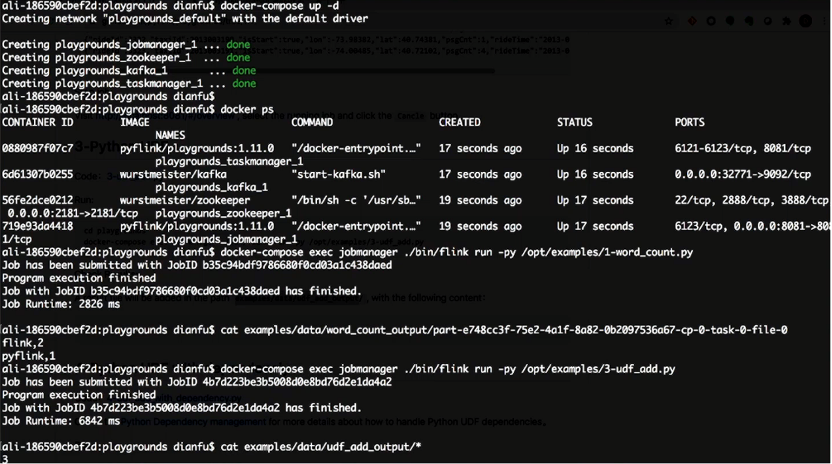

Next, run a Python UDF job. It is similar to the one mentioned above. First, define the job so that it runs in batch mode with a parallelism of 1. The difference is that a UDF is defined in the job. Its input consists of two columns, both of the BIGINT type, and its output type is declared as well. The logic of this UDF is to add the two columns together and output the result.

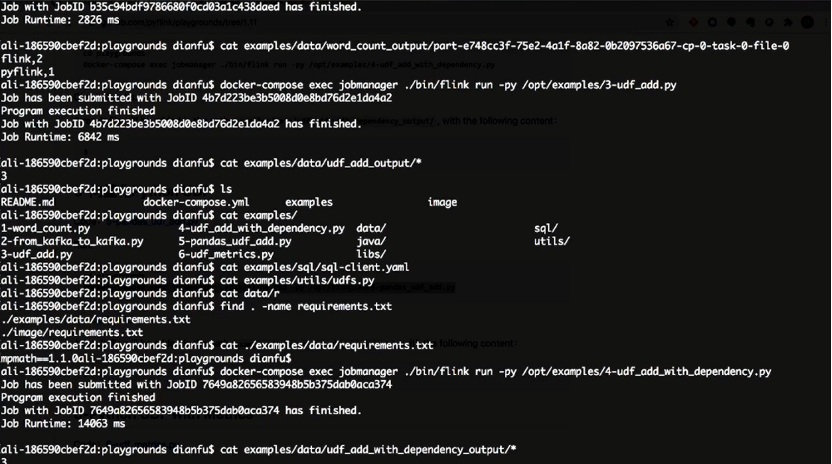

Execute the job, and the execution result is 3.

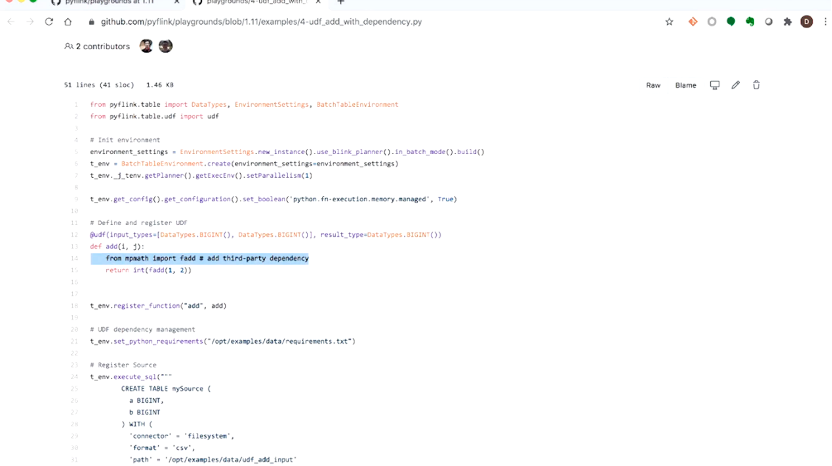

Then, run a Python UDF with dependencies. The UDF of the previous job does not have any dependencies and directly adds the two input columns together. In this example, the UDF uses a third-party dependency, which is declared through the set_python_requirements API.

Next, run the job. Its execution result is the same as above, because the logic of the two jobs is similar.

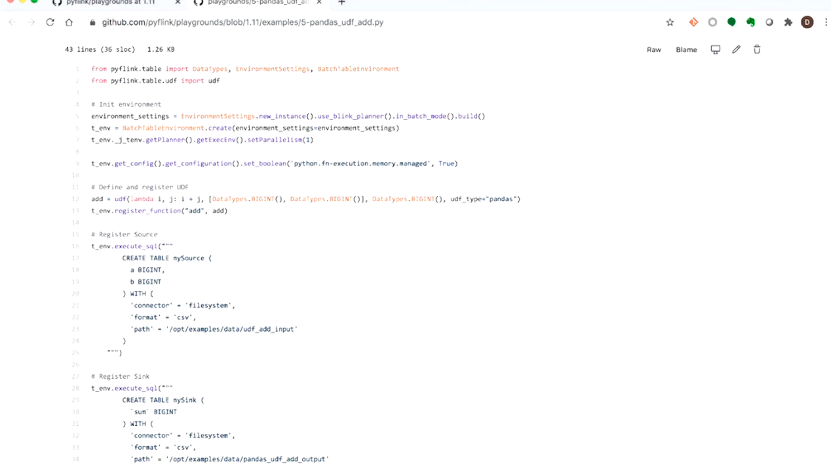

Now, let's look at the example job of the vectorized Python UDF. A UDF type field is added to the UDF definition to declare a vectorized Python UDF. The rest of the logic is similar to that of a common Python UDF. Its final execution result is also 3, because it has the same logic as above, which is to calculate the sum of two columns.

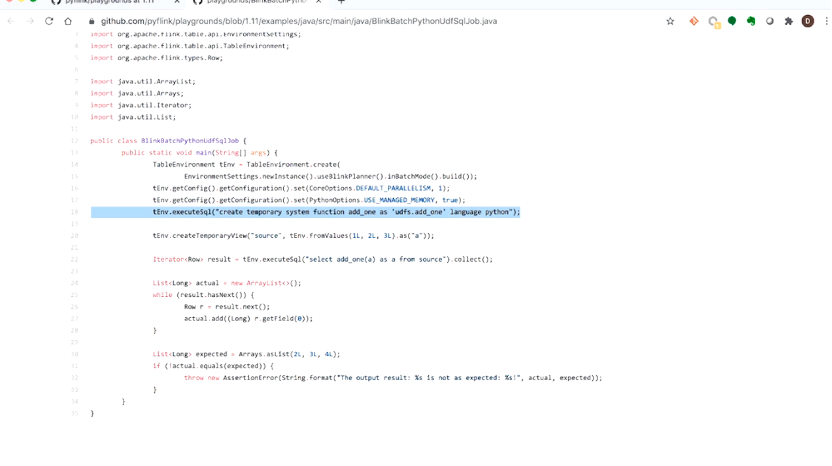

The following example shows how to use a Python UDF in a Java Table API job. Another Python UDF is used in this job. It is registered with a DDL statement and then used in the SQL statement that the job executes.

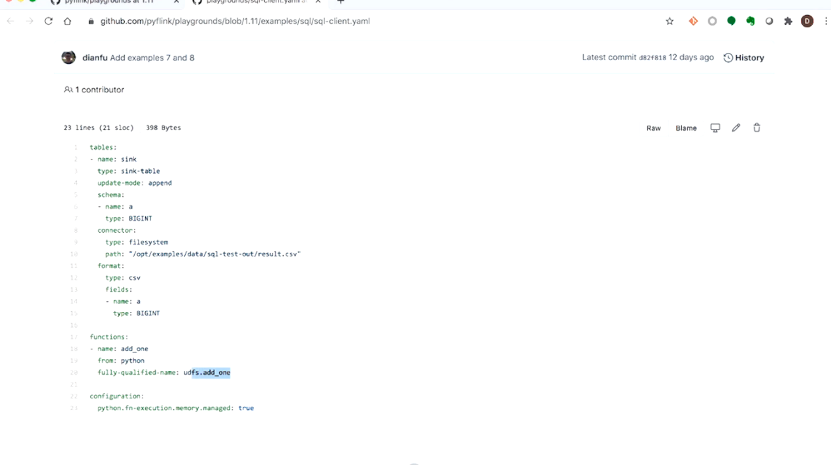

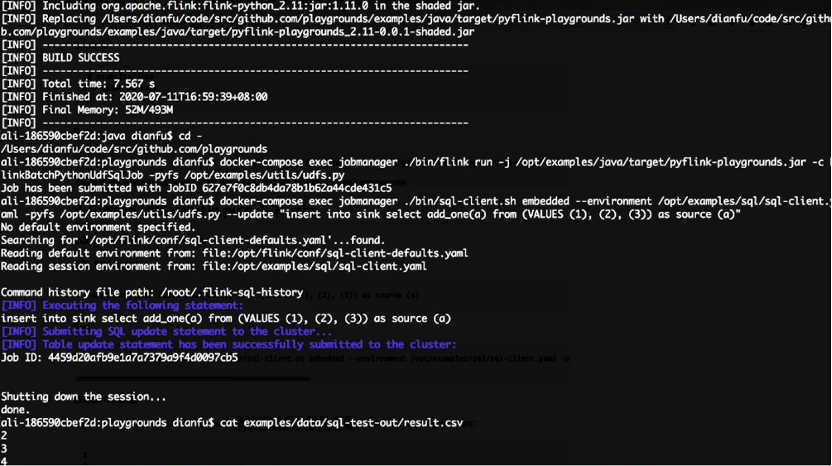

Then there is an example of using Python UDFs in SQL jobs. In the resource file, a Python UDF named add1 is declared, along with the location of the UDF.

Run it, and the execution result is 234.

Currently, PyFlink only supports the Python Table API. Support for the DataStream API, Python UDAF, and Pandas UDAF is planned for the next version. In addition, the execution efficiency of PyFlink will continue to be optimized at the execution layer.

Here are some resource links, including PyFlink documentation.

Well, that's all for today. Please stay tuned to our subsequent courses.
