By Li Rui

Before explaining how connectors work, let's first look at why Flink SQL is worth using. SQL is a standard data query language, and Flink SQL can integrate with various systems through Catalogs. It also provides a rich set of built-in operators and functions. Moreover, Flink SQL processes both batch data and streaming data with the same SQL, which significantly improves the efficiency of data analysis.

Why does Flink SQL need to connect to external systems? Flink itself is a stream computing engine that does not store any data. From Flink SQL's point of view, all data, and therefore all tables, live in external systems. Flink SQL can read and write data only by connecting to those systems.

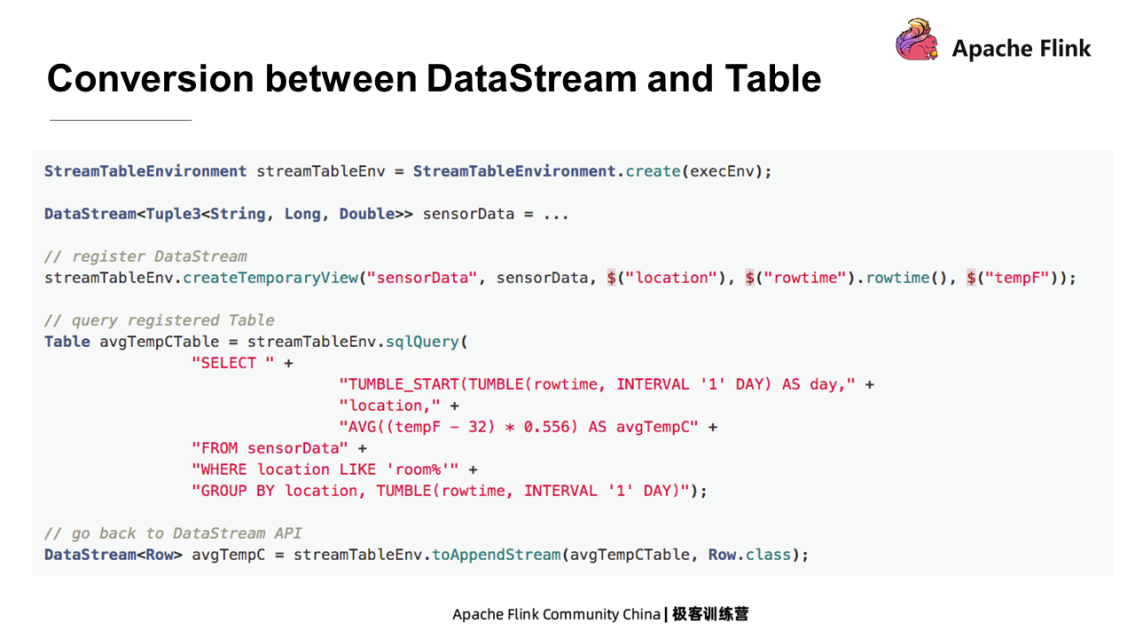

Before explaining how Flink SQL connects to external systems, let's look at how Flink converts between a DataStream and a Table. Suppose a DataStream program converts its stream into a Table so that it can be queried with Flink SQL, as shown in the following example. Conceptually, this is similar to what Flink SQL does internally when it connects to an external system.

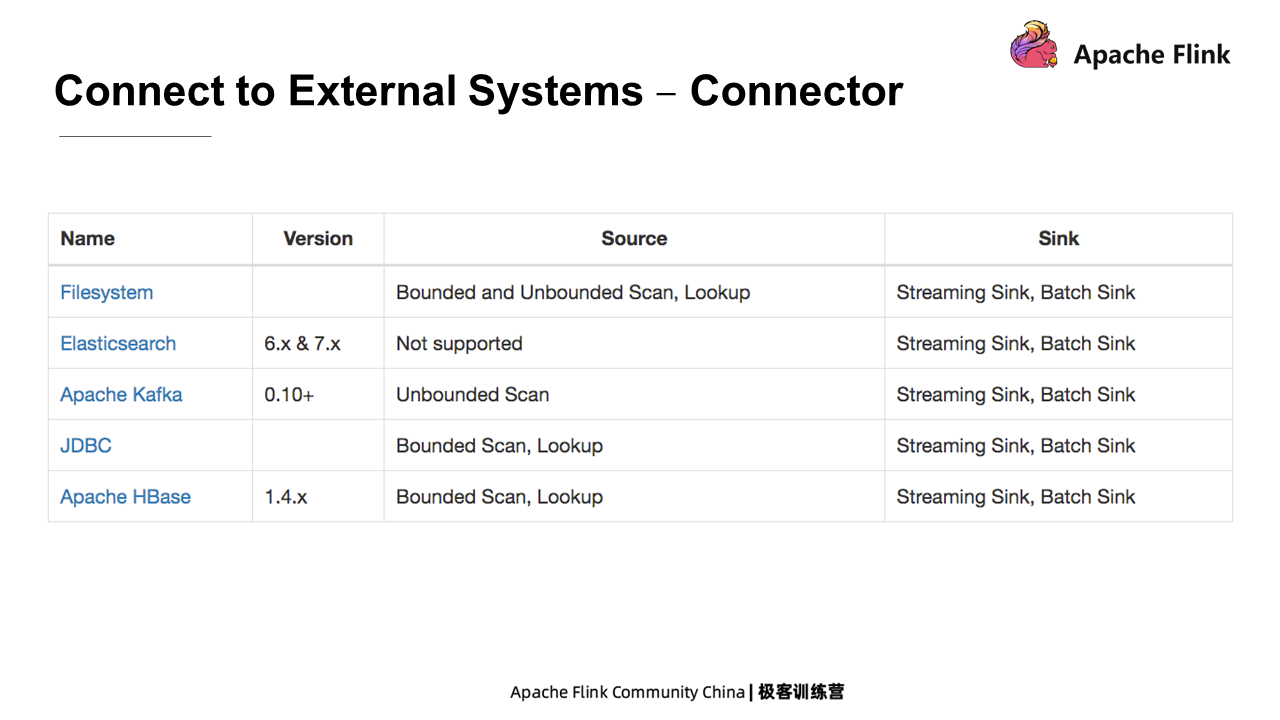

In Flink SQL, the components that connect to external systems are called Connectors. The following table lists several commonly used Connectors supported by Flink SQL. For example, the Filesystem connector connects to a file system, and the JDBC connector connects to an external relational database. Each Connector is mainly responsible for implementing a source and a sink: the source reads data from the external system, and the sink writes data to it.

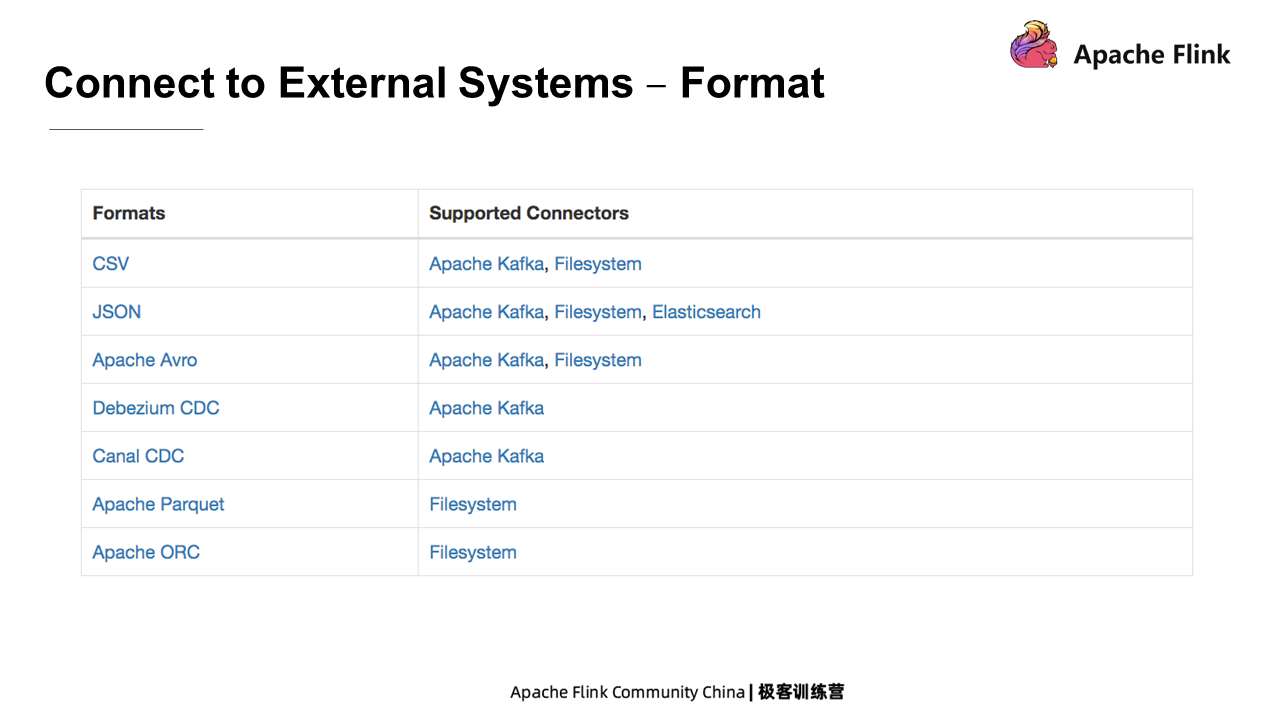

A Format specifies how data is encoded in an external system. For example, the data in a Kafka table may be stored in CSV or JSON format. Therefore, when you specify a Connector for an external table, you usually also specify a Format so that Flink can read and write the data correctly.

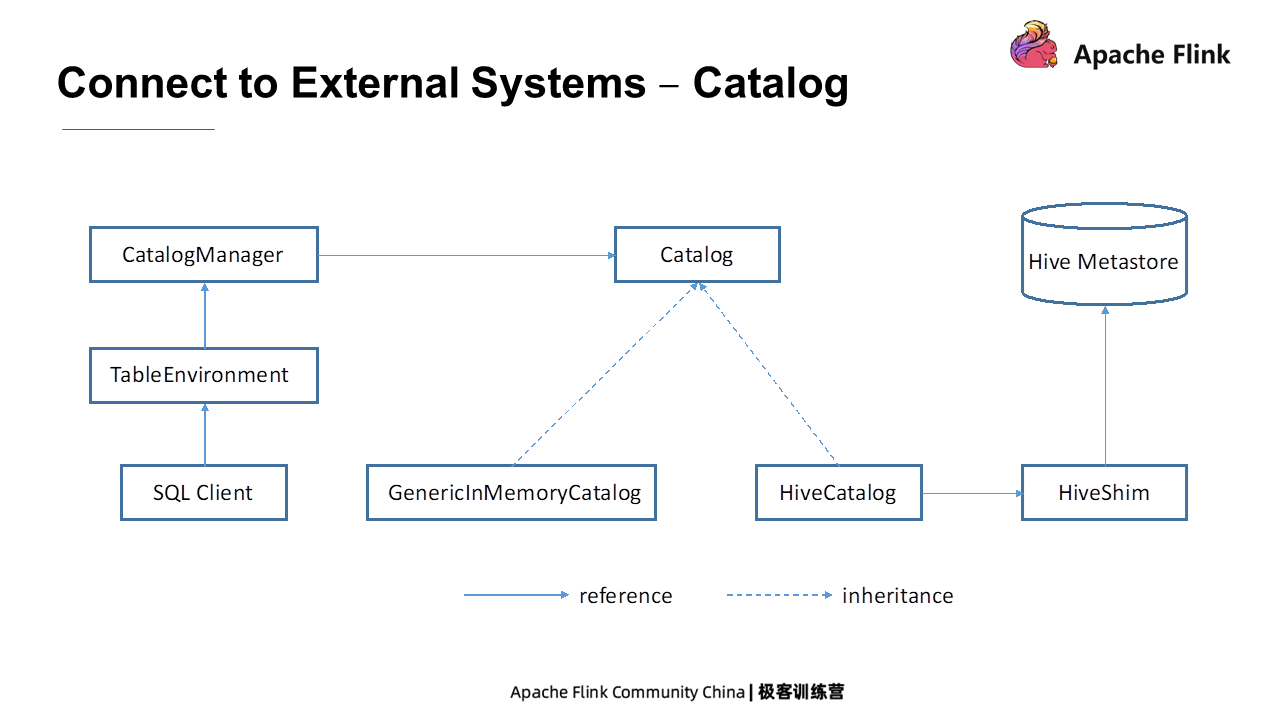
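To make the role of a Format concrete, here is a minimal sketch in plain Python (standard library only, not Flink code) that decodes the same logical row from a CSV-encoded and a JSON-encoded message. The column names `user_id`, `item`, and `amount` are hypothetical. In Flink, setting the `'format'` option in the DDL selects a deserializer that does this work, and it also converts values to the declared column types, which this sketch skips by keeping everything as strings.

```python
import csv
import io
import json
from typing import Dict, Optional

# Hypothetical table schema: column names in declaration order.
SCHEMA = ["user_id", "item", "amount"]


def decode_csv(raw: bytes) -> Dict[str, Optional[str]]:
    """Decode one CSV-encoded message into a row keyed by column name."""
    # CSV carries no field names, so values are matched to SCHEMA by position.
    values = next(csv.reader(io.StringIO(raw.decode("utf-8"))))
    return dict(zip(SCHEMA, values))


def decode_json(raw: bytes) -> Dict[str, Optional[str]]:
    """Decode one JSON-encoded message; fields are matched by name."""
    obj = json.loads(raw.decode("utf-8"))
    # Missing fields become None, roughly like a NULL column value.
    return {name: obj.get(name) for name in SCHEMA}


csv_row = decode_csv(b"42,book,19.9")
json_row = decode_json(b'{"amount": "19.9", "item": "book", "user_id": "42"}')
print(csv_row == json_row)  # True: same logical row, two encodings
```

The point of the comparison is that the table schema stays the same while only the Format changes, which is why the Format is configured separately from the Connector.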

A Catalog connects to the metadata of an external system and exposes it to Flink, so that Flink can directly access databases and tables that already exist there. For example, Hive stores its metadata in the Hive Metastore, so to access a Hive table, Flink needs a HiveCatalog connected to that metastore. HiveCatalog can also persist Flink's own metadata: besides giving Flink access to Hive tables, it can store the definitions of tables created in Flink. As a result, when users start a new session, they can read tables already registered in the Hive Metastore directly, without creating them again.

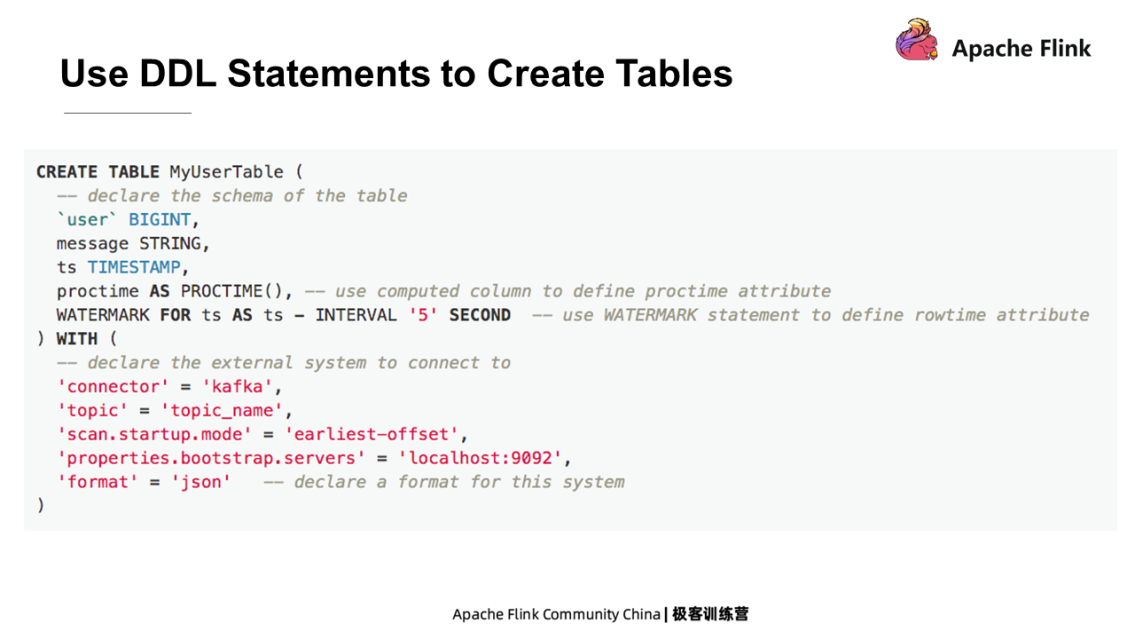

How do you create a table and specify its Connector? The following example creates a table with a DDL statement. It is a standard CREATE TABLE statement in which all Connector-related parameters are specified in the WITH clause, for example 'connector' = 'kafka'.

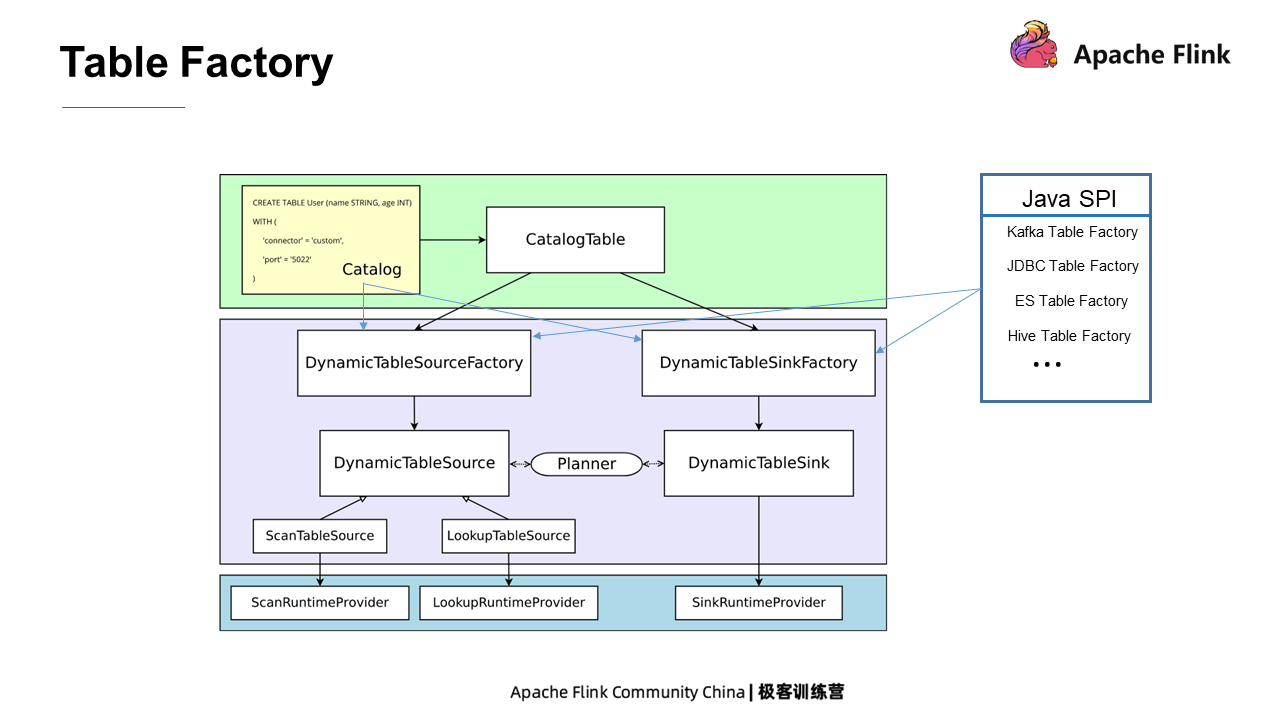
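Since every Connector setting is just a string key/value pair in the WITH clause, a DDL statement is easy to assemble programmatically. The following is a minimal Python sketch of such a helper; `create_table_ddl`, the table `orders`, and its topic are hypothetical names chosen for illustration. The resulting string could then be submitted with PyFlink's `TableEnvironment.execute_sql` (or `executeSql` in Java), which is not called here so the sketch runs without Flink installed.

```python
from typing import Dict, List, Tuple


def sql_literal(value: str) -> str:
    """Quote a value as a SQL string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def create_table_ddl(name: str, columns: List[Tuple[str, str]],
                     options: Dict[str, str]) -> str:
    """Render a CREATE TABLE statement whose connector settings go in WITH."""
    cols = ",\n".join(f"  `{col}` {col_type}" for col, col_type in columns)
    opts = ",\n".join(f"  {sql_literal(k)} = {sql_literal(v)}"
                      for k, v in options.items())
    return f"CREATE TABLE {name} (\n{cols}\n) WITH (\n{opts}\n)"


ddl = create_table_ddl(
    "orders",  # hypothetical table name
    [("order_id", "BIGINT"), ("price", "DECIMAL(10, 2)")],
    {
        "connector": "kafka",
        "topic": "orders",  # hypothetical topic
        "properties.bootstrap.servers": "localhost:9092",
        "format": "json",
    },
)
print(ddl)
```

Note that the connector identifier is written in lowercase ('kafka'), matching how Flink names its built-in factories.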

After a table is created with DDL, how does Flink use it? The key concept here is the Table Factory. As the yellow box in the following figure shows, a table is either created through DDL or obtained from an external system through a Catalog, and in both cases it is converted into a CatalogTable object. When the CatalogTable is referenced in an SQL statement, Flink creates the corresponding source or sink for it. The module that creates the source and sink is called the Table Factory.

There are two ways to obtain a Table Factory: either the Catalog itself is bound to a Table Factory, or Flink discovers the Table Factory through Java SPI. If no matching Table Factory is found, Flink reports an error.

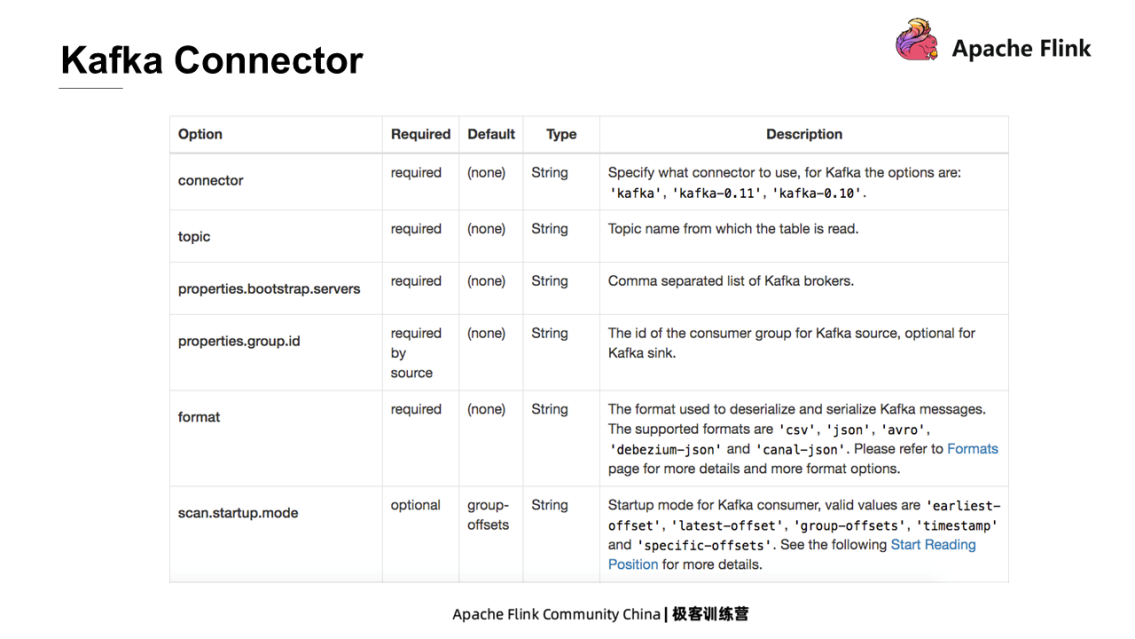
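The lookup logic described above can be modeled in a few lines. This is a toy Python sketch, not Flink's implementation: `TableFactory`, `DISCOVERED`, and `find_factory` are hypothetical names, the `DISCOVERED` list stands in for what Java SPI would find on the classpath, and checking the catalog-bound factory first is a simplification of how Flink actually combines the two paths.

```python
from typing import Dict, List, Optional


class TableFactory:
    """Toy stand-in for a factory that creates sources/sinks for one connector."""

    def __init__(self, identifier: str) -> None:
        self.identifier = identifier

    def create_source(self, options: Dict[str, str]) -> str:
        # A real factory would validate options and return a source object.
        return f"{self.identifier} source with {len(options)} options"


# Stand-in for the factories that Java SPI would find on the classpath.
DISCOVERED: List[TableFactory] = [TableFactory("kafka"), TableFactory("filesystem")]


def find_factory(options: Dict[str, str],
                 catalog_factory: Optional[TableFactory] = None) -> TableFactory:
    """Pick the factory for a table: catalog-bound first, then SPI-style lookup."""
    if catalog_factory is not None:
        return catalog_factory
    # Match the table's 'connector' option against the discovered identifiers.
    identifier = options.get("connector")
    matches = [f for f in DISCOVERED if f.identifier == identifier]
    if not matches:
        raise LookupError(f"no table factory found for connector '{identifier}'")
    if len(matches) > 1:
        raise LookupError(f"ambiguous: several factories for connector '{identifier}'")
    return matches[0]


print(find_factory({"connector": "kafka"}).create_source({"topic": "t"}))
```

Asking for a connector whose factory is not on the classpath (for example 'jdbc' here) raises an error, which mirrors the failure mode described above when the corresponding connector Jar is missing.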

The Kafka Connector is the most frequently used one. Flink is a stream computing engine and Kafka is the most popular message queue, so most Flink users work with Kafka. Creating a Kafka table requires some specific parameters: for example, set the connector option to 'kafka' and specify the Kafka topic the table corresponds to. These parameters and their definitions are shown in the following figure.

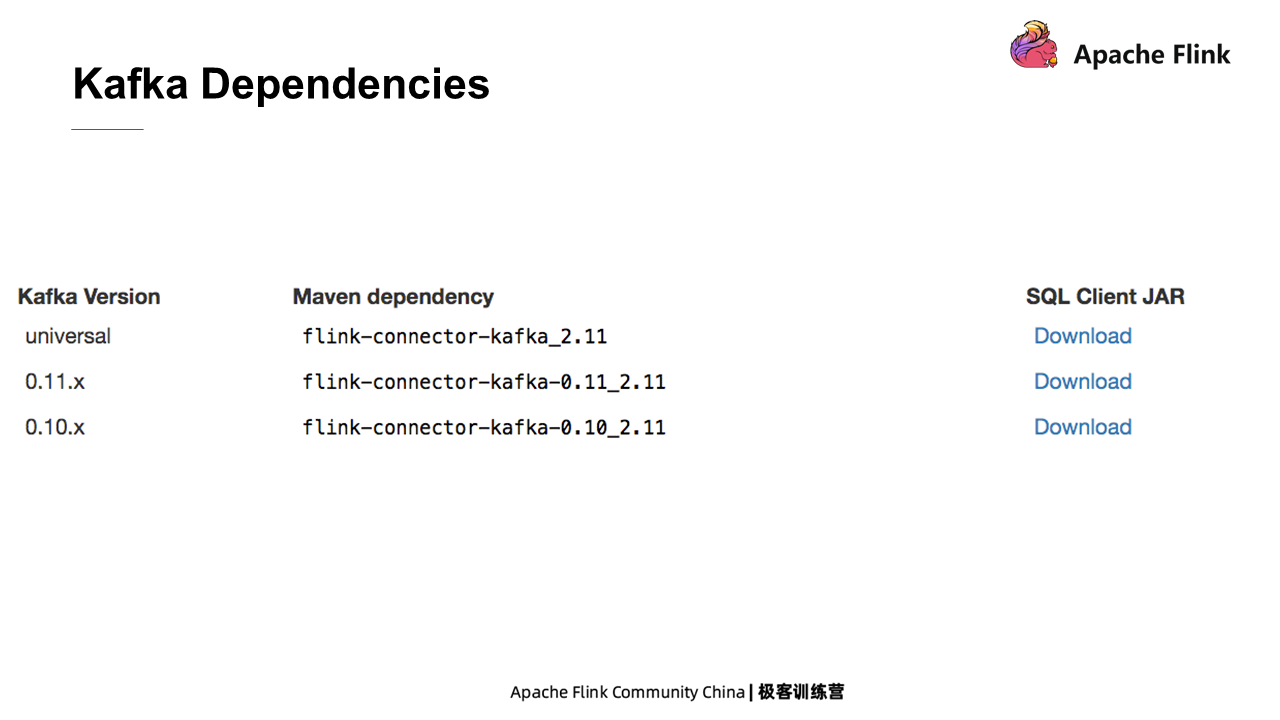
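As a quick sanity check before submitting a Kafka DDL, the options can be validated up front. The Python sketch below is hypothetical: the `REQUIRED` tuple is a subset chosen for illustration (a source may use 'topic-pattern' instead of 'topic', and the exact requirements differ between Flink versions), while the option keys and the `scan.startup.mode` values are the ones documented for Flink's Kafka SQL connector.

```python
from typing import Dict, List

# Options this sketch treats as mandatory for a Kafka source (a subset; check
# the Kafka connector docs for your Flink version for the full list).
REQUIRED = ("connector", "topic", "properties.bootstrap.servers", "format")
STARTUP_MODES = {"earliest-offset", "latest-offset", "group-offsets",
                 "timestamp", "specific-offsets"}


def check_kafka_options(options: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the options look usable."""
    problems = [f"missing '{key}'" for key in REQUIRED if key not in options]
    if options.get("connector", "kafka") != "kafka":
        problems.append("'connector' must be 'kafka'")
    mode = options.get("scan.startup.mode", "group-offsets")
    if mode not in STARTUP_MODES:
        problems.append(f"unknown scan.startup.mode '{mode}'")
    return problems


kafka_options = {
    "connector": "kafka",
    "topic": "user_behavior",  # hypothetical topic name
    "properties.bootstrap.servers": "localhost:9092",
    "properties.group.id": "demo-group",  # hypothetical consumer group
    "scan.startup.mode": "earliest-offset",
    "format": "json",
}
print(check_kafka_options(kafka_options))  # []
print(check_kafka_options({"connector": "Kafka", "topic": "t"}))
```

The second call reports the missing bootstrap servers and format, and flags 'Kafka' because connector identifiers are matched exactly.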

To use the Kafka Connector, you may need to add the Jar packages for the Kafka dependencies. The required Jar packages vary with the Kafka version, and all of them can be downloaded from the official website.

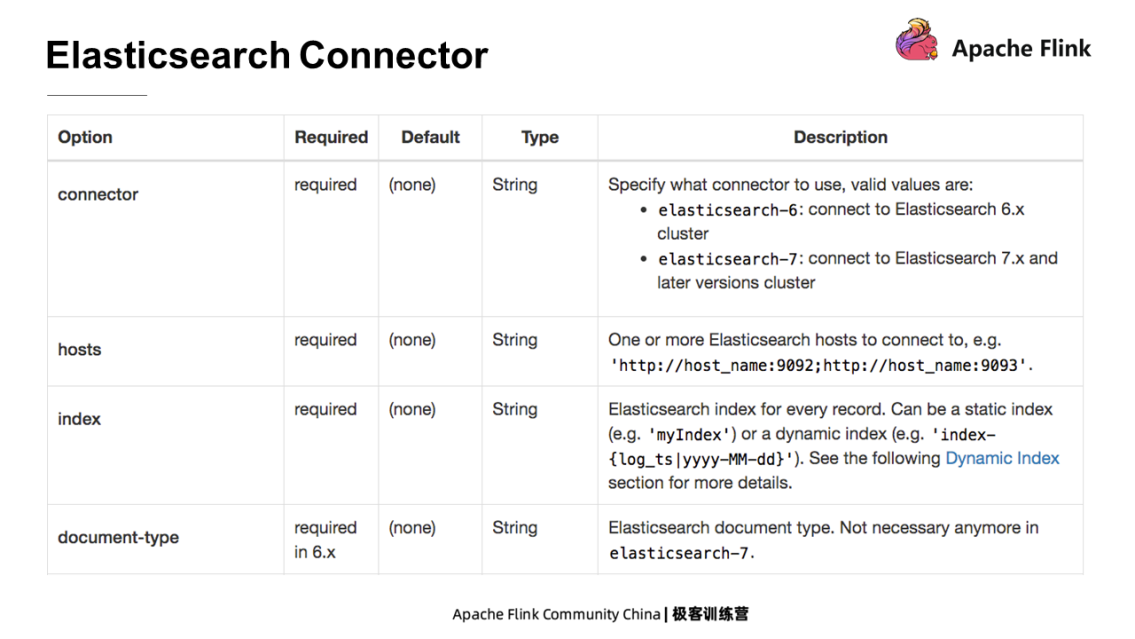

The Elasticsearch Connector only implements a sink, so data can be written into ES but not read from it. Its connector type can be 'elasticsearch-6' or 'elasticsearch-7' (ES6 or ES7). The hosts option specifies the ES nodes, each given as a domain name and port number. The index option specifies the ES index, which is similar to a database in a traditional relational database. The document type is similar to a table in a conventional database, but it does not need to be specified for ES7.

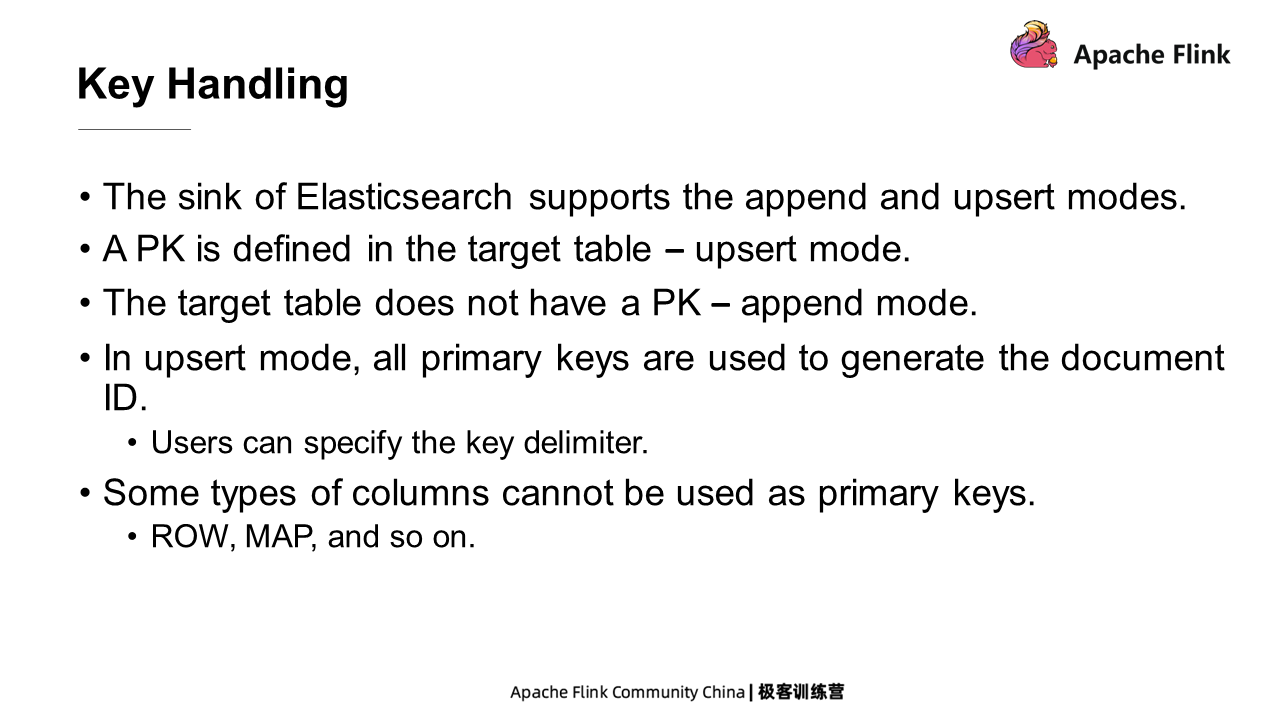
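The difference between the two ES versions shows up directly in the WITH options. The following Python sketch builds them; `es_sink_options` is a hypothetical helper, while the option keys ('connector', 'hosts', 'index', 'document-type') follow Flink's Elasticsearch SQL connector. The host and index values are placeholders.

```python
from typing import Dict, Optional


def es_sink_options(version: int, hosts: str, index: str,
                    document_type: Optional[str] = None) -> Dict[str, str]:
    """Build WITH-clause options for an Elasticsearch sink table."""
    if version not in (6, 7):
        raise ValueError("only Elasticsearch 6 and 7 are covered here")
    options = {
        "connector": f"elasticsearch-{version}",
        "hosts": hosts,  # e.g. http://localhost:9200; separate several with ';'
        "index": index,
    }
    if version == 6:
        # ES6 still has mapping types, so the document type is required.
        if document_type is None:
            raise ValueError("'document-type' is required for elasticsearch-6")
        options["document-type"] = document_type
    return options


print(es_sink_options(7, "http://localhost:9200", "users"))
print(es_sink_options(6, "http://localhost:9200", "users", "_doc"))
```

For ES7 the document type is simply omitted, matching the note above that it no longer needs to be specified.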

The ES sink supports both append and upsert modes. If a primary key (PK) is defined on the ES table, the sink works in upsert mode; otherwise, it works in append mode. Columns of types such as ROW and MAP cannot be used as the PK.

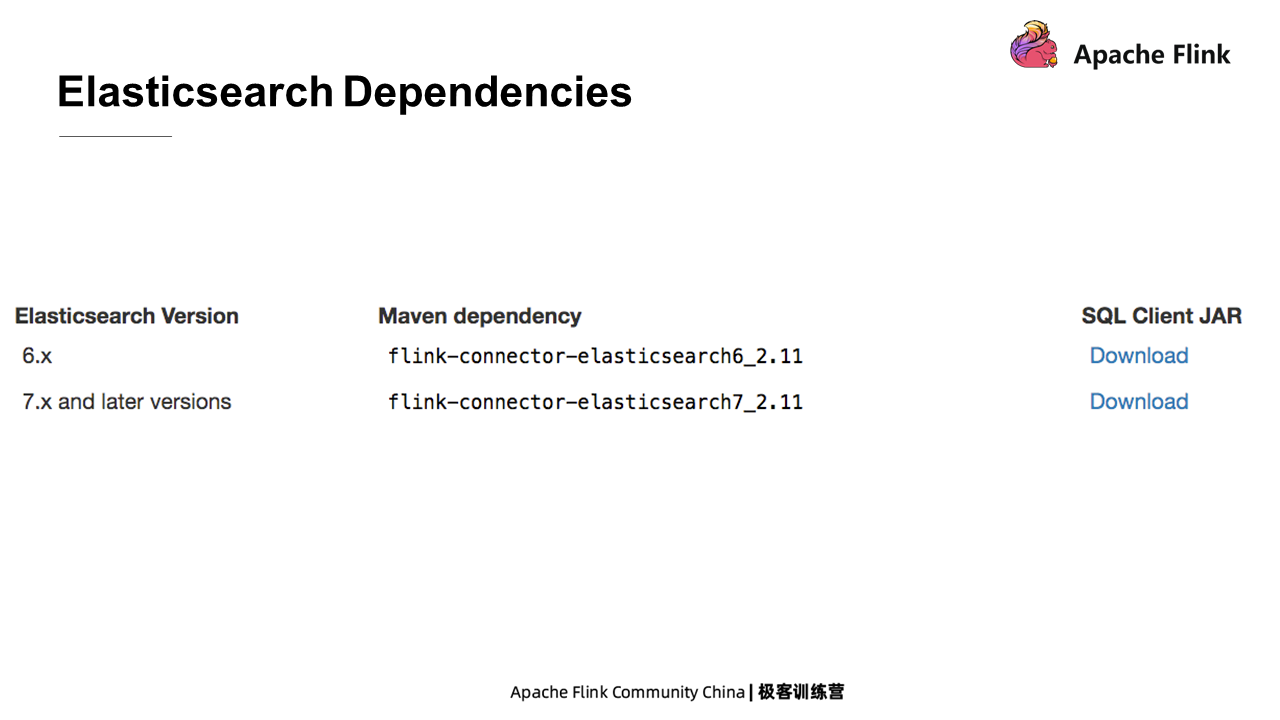
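Why the PK decides the mode becomes clear once you look at document IDs. In upsert mode, Flink derives each document ID by concatenating the PK values in DDL order with a key delimiter ('_' by default, configurable through 'document-id.key-delimiter'); in append mode, Elasticsearch assigns IDs itself. The Python sketch below models this with a dict standing in for the index; `document_id` is a hypothetical helper, and it uses Python's `str()` where Flink has its own string conversion for each type.

```python
from typing import Any, Dict, List, Optional


def document_id(row: Dict[str, Any], primary_key: List[str],
                delimiter: str = "_") -> Optional[str]:
    """Return the ES document ID for a row, or None in append mode."""
    if not primary_key:
        # Append mode: no PK, so Elasticsearch assigns a generated ID.
        return None
    # Upsert mode: join PK values in DDL order, so a later change to the same
    # key overwrites the same document instead of adding a new one.
    return delimiter.join(str(row[col]) for col in primary_key)


index: Dict[str, Dict[str, Any]] = {}  # stand-in for an ES index
updates = [
    {"user": "alice", "day": "2021-08-01", "pv": 1},
    {"user": "alice", "day": "2021-08-01", "pv": 2},
]
for r in updates:
    index[document_id(r, ["user", "day"])] = r
print(index)
```

Both updates map to the same ID, so the index ends up holding one document with the latest value. This is also why types such as ROW and MAP, which have no convenient string form, are not allowed as PK columns.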

Similarly, using the ES connector requires additional dependencies; add the ones that match your ES version.

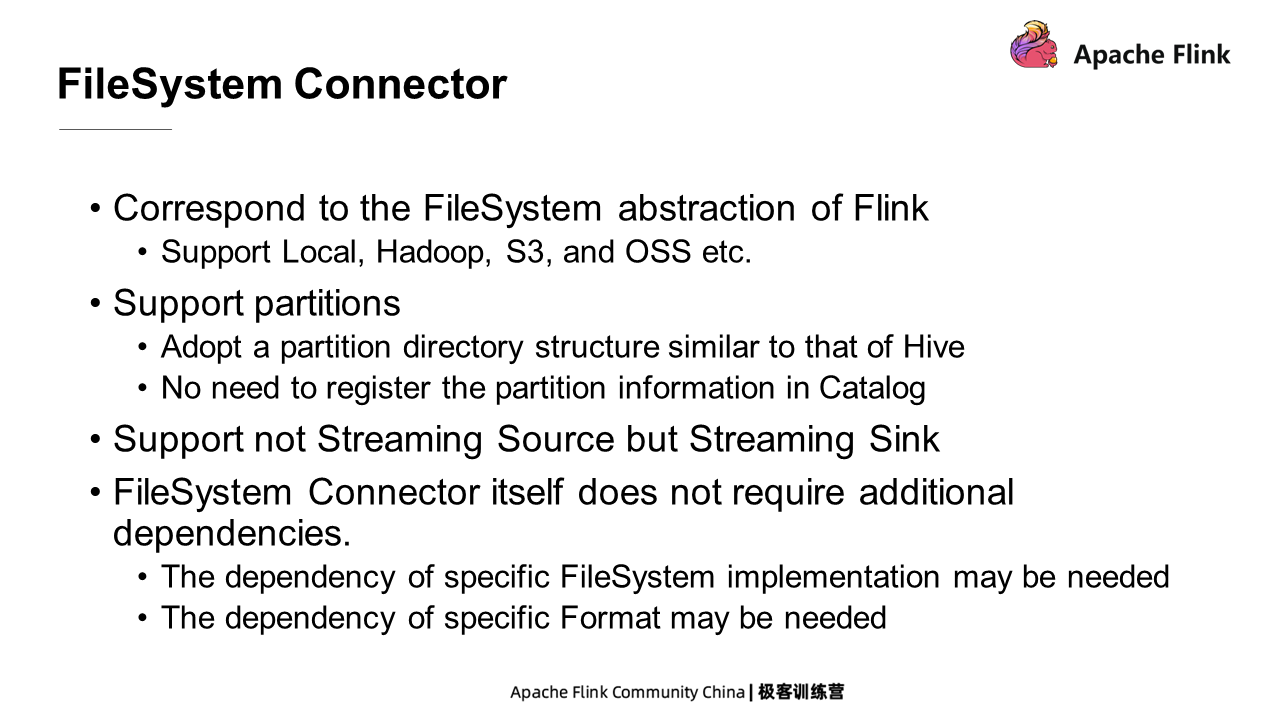

The FileSystem Connector connects to a file system and reads and writes the files on it. FileSystem here refers to Flink's FileSystem abstraction, which has multiple implementations, such as the local file system, Hadoop, S3, and OSS. The connector also supports partitions, using a partition directory structure similar to Hive's. However, the partition information does not need to be registered in a Catalog.

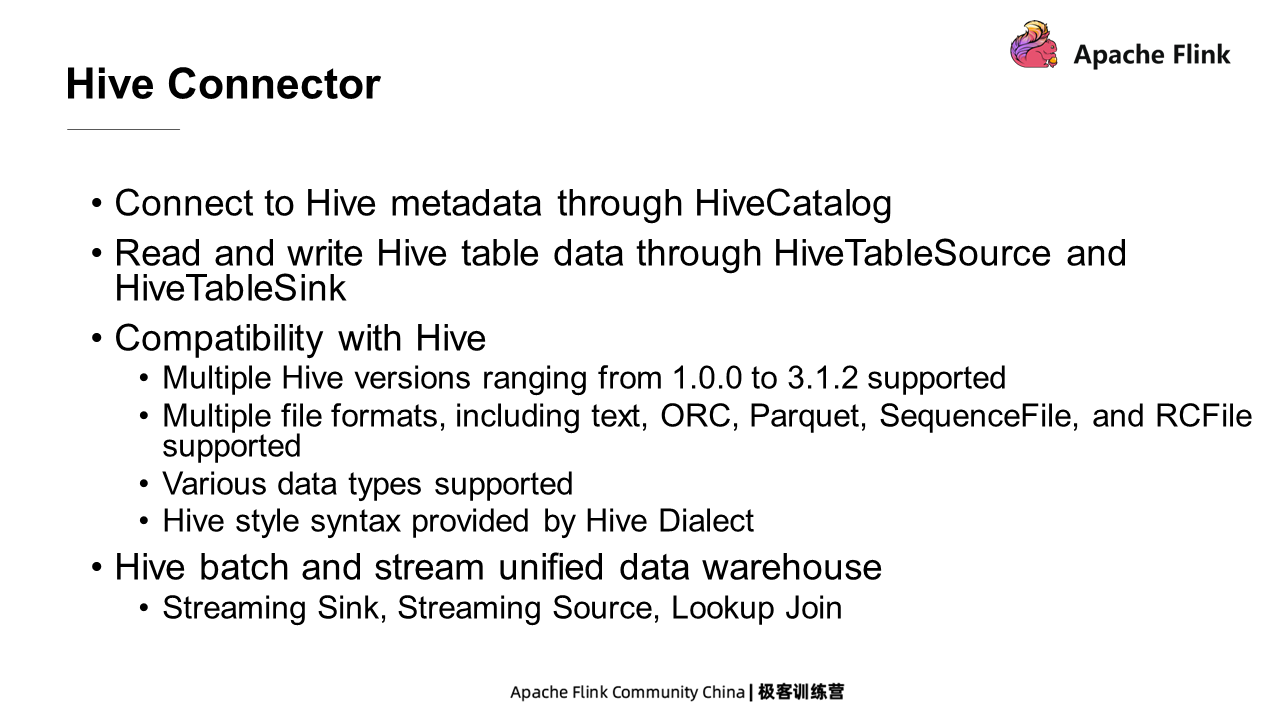
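The Hive-style layout is what lets partitions work without a Catalog: the partition values are encoded in the directory names, as in `path/dt=2020-08-25/hour=11/part-0.csv` for a table declared with `PARTITIONED BY (dt, hour)` and 'connector' = 'filesystem'. The Python sketch below builds and parses such paths; `partition_dir` and `parse_partition` are hypothetical helpers, and unlike Flink they do not escape special characters in partition values.

```python
from typing import Dict, List, Tuple


def partition_dir(base: str, partition: List[Tuple[str, str]]) -> str:
    """Build a Hive-style partition directory: base/k1=v1/k2=v2."""
    parts = [f"{key}={value}" for key, value in partition]
    return "/".join([base.rstrip("/")] + parts)


def parse_partition(relative_path: str) -> Dict[str, str]:
    """Recover partition values from directory names alone (no catalog needed)."""
    return dict(seg.split("=", 1) for seg in relative_path.split("/") if "=" in seg)


print(partition_dir("file:///tmp/orders", [("dt", "2020-08-25"), ("hour", "11")]))
print(parse_partition("dt=2020-08-25/hour=11/part-0.csv"))
```

Because the path alone carries the partition values, a reader can reconstruct them by scanning directories, which is why no Catalog registration is required.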

Hive is one of the earliest SQL engines and is used by most users in batch processing scenarios. The Hive Connector has two layers: HiveCatalog connects to the Hive metadata, and HiveTableSource and HiveTableSink read and write the Hive table data.

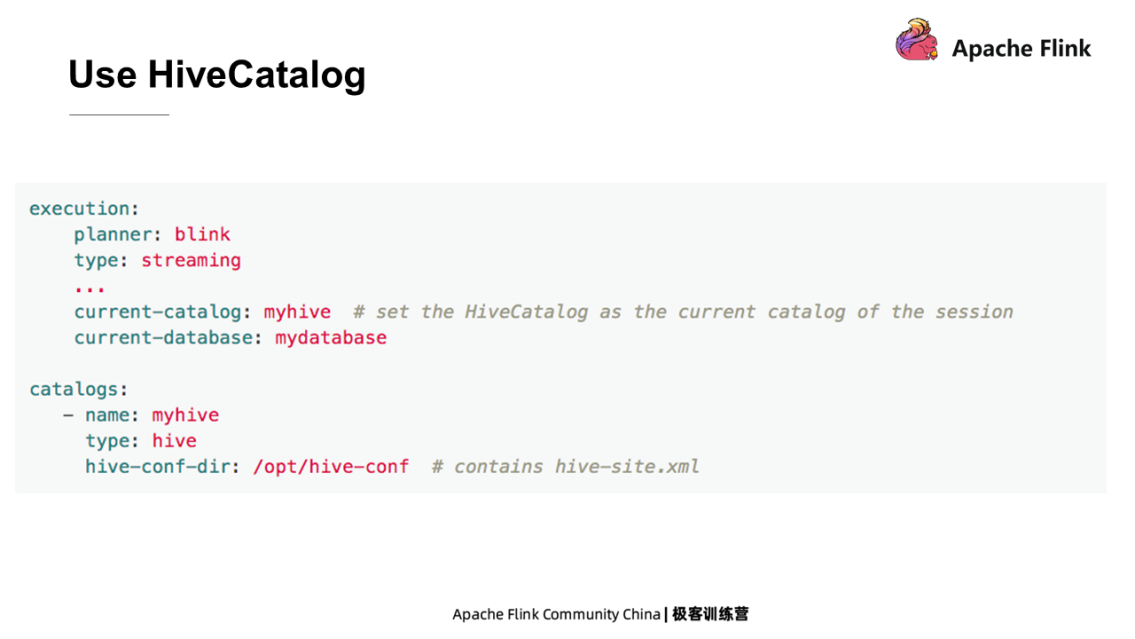

To use the Hive Connector, you must register a HiveCatalog. The following example shows how to specify the HiveCatalog.

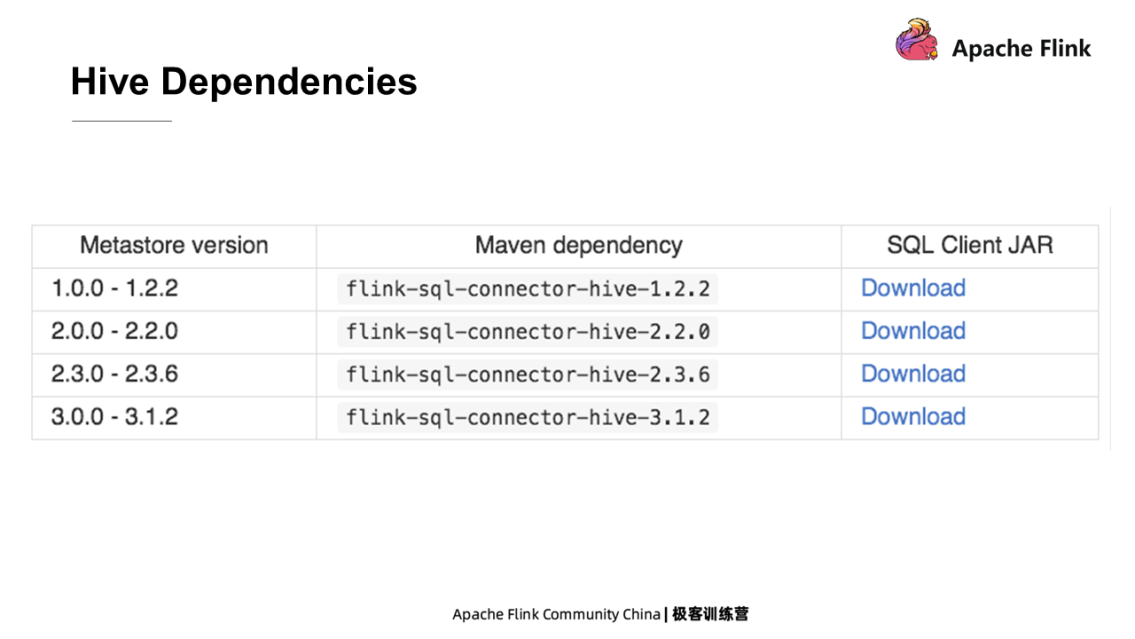
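Besides the approach in the figure, a HiveCatalog can also be registered in pure SQL with CREATE CATALOG and the 'type' = 'hive' option. The Python sketch below only assembles those statements; the catalog name `myhive`, the `default` database, and the `/opt/hive-conf` directory (which should contain `hive-site.xml`) are placeholders. Each statement could then be executed with PyFlink's `TableEnvironment.execute_sql`, which is omitted so the sketch runs without Flink.

```python
from typing import List


def hive_catalog_statements(name: str, hive_conf_dir: str,
                            default_database: str = "default") -> List[str]:
    """Return SQL statements that register a HiveCatalog and switch to it."""
    create = (
        f"CREATE CATALOG {name} WITH (\n"
        "  'type' = 'hive',\n"
        f"  'default-database' = '{default_database}',\n"
        # Directory containing hive-site.xml, which points at the Hive Metastore.
        f"  'hive-conf-dir' = '{hive_conf_dir}'\n"
        ")"
    )
    return [create, f"USE CATALOG {name}"]


for stmt in hive_catalog_statements("myhive", "/opt/hive-conf"):
    print(stmt)
```

After USE CATALOG, tables already defined in the Hive Metastore can be queried directly, without re-declaring them in the session.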

Using the Hive Connector also requires additional dependencies; select the Jar packages that match your Hive version.

In addition to the connectors for external systems, Flink provides several built-in connectors. They help new users get started with Flink SQL quickly and help Flink developers debug their code.

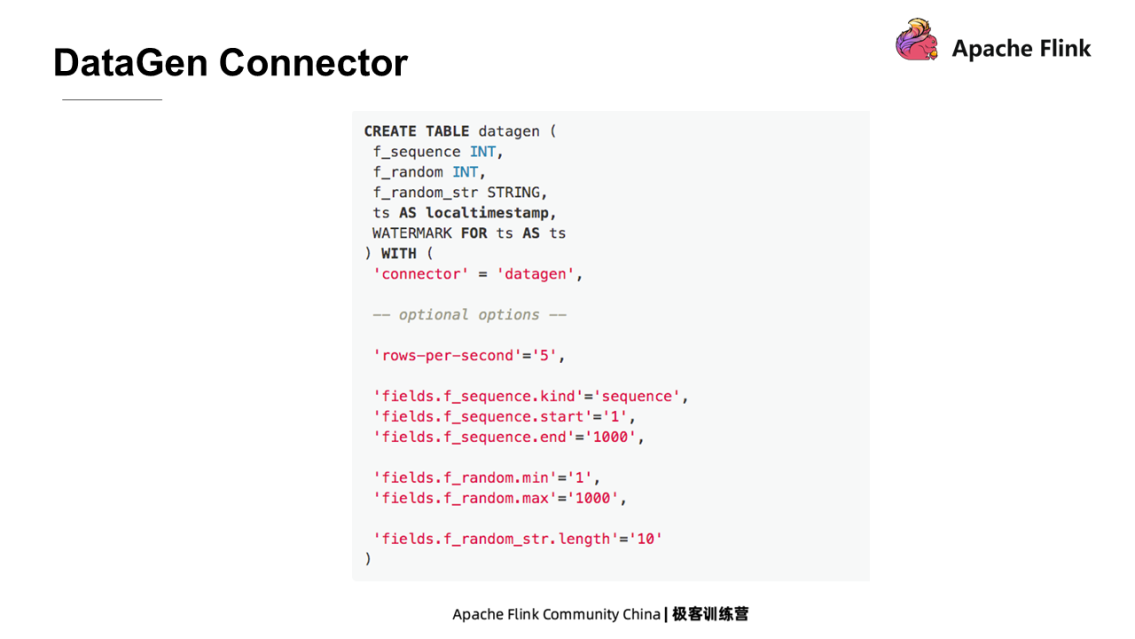

The DataGen Connector is a data generator. For example, after you create a table with several fields and set its connector type to datagen, reading the table makes the Connector generate data: the data is produced on the fly rather than stored somewhere in advance. Users can control the DataGen Connector in a fine-grained way. For example, they can specify how many rows to generate per second, and whether each field is generated as a sequence (ascending values within a range) or at random.

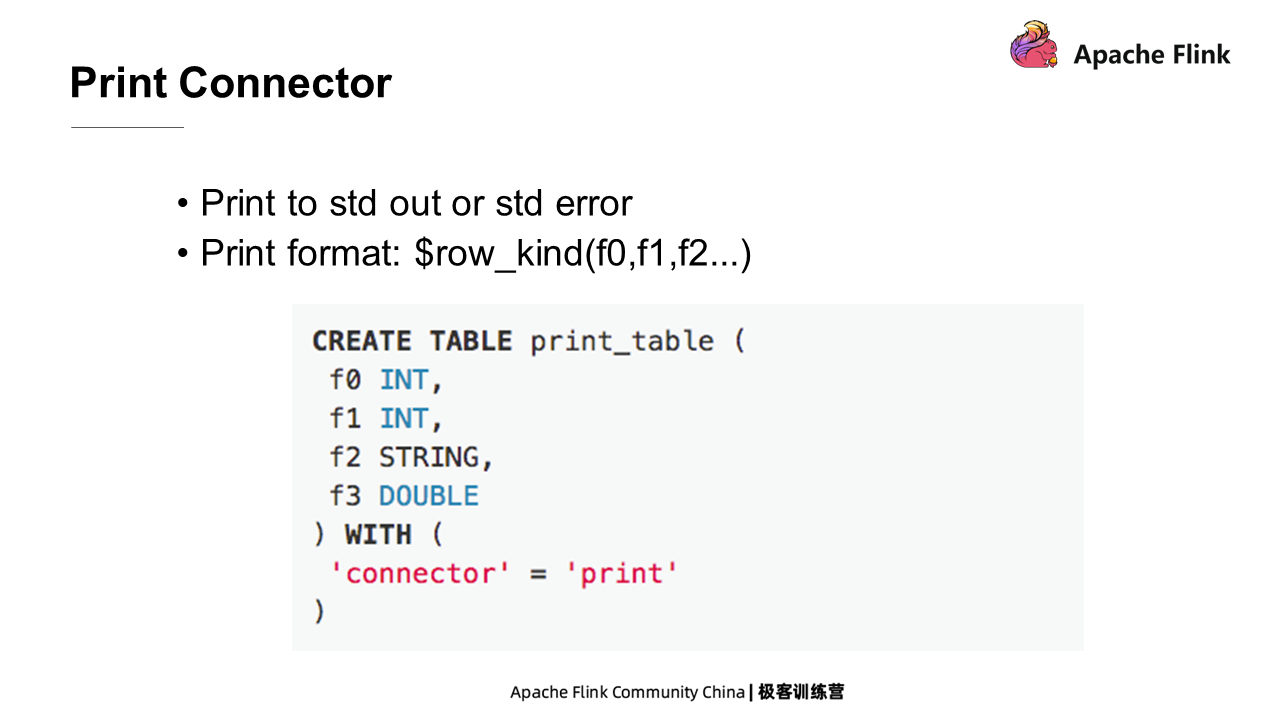
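The two generator kinds can be illustrated with a small simulation. In DDL they correspond to options such as 'connector' = 'datagen', 'rows-per-second', 'fields.id.kind' = 'sequence' with 'fields.id.start' / 'fields.id.end', and 'fields.score.min' / 'fields.score.max' for a random field. The Python sketch below is a hypothetical model, not the connector's code: the fields `id` and `score` and the `max_rows` parameter are illustrative, and rate limiting ('rows-per-second') is not modeled.

```python
import random
from typing import Dict, Iterator


def datagen(max_rows: int, seq_start: int, seq_end: int,
            rnd_min: int, rnd_max: int, seed: int = 0) -> Iterator[Dict[str, int]]:
    """Yield rows with a sequence field 'id' and a random field 'score'."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    current = seq_start
    for _ in range(max_rows):
        if current > seq_end:
            return  # a bounded sequence field ends the stream once exhausted
        yield {"id": current, "score": rng.randint(rnd_min, rnd_max)}
        current += 1


for row in datagen(max_rows=5, seq_start=1, seq_end=3, rnd_min=0, rnd_max=100):
    print(row)
```

Here the sequence range (1 to 3) is exhausted before `max_rows` is reached, so only three rows are produced, which mirrors how a sequence field with an end value makes the generated table bounded.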

The Print Connector provides a sink that prints all data to standard output or standard error. Each printed row is prefixed with its row kind, such as +I for an insert. To create a Print table, you only need to set the connector type to print.

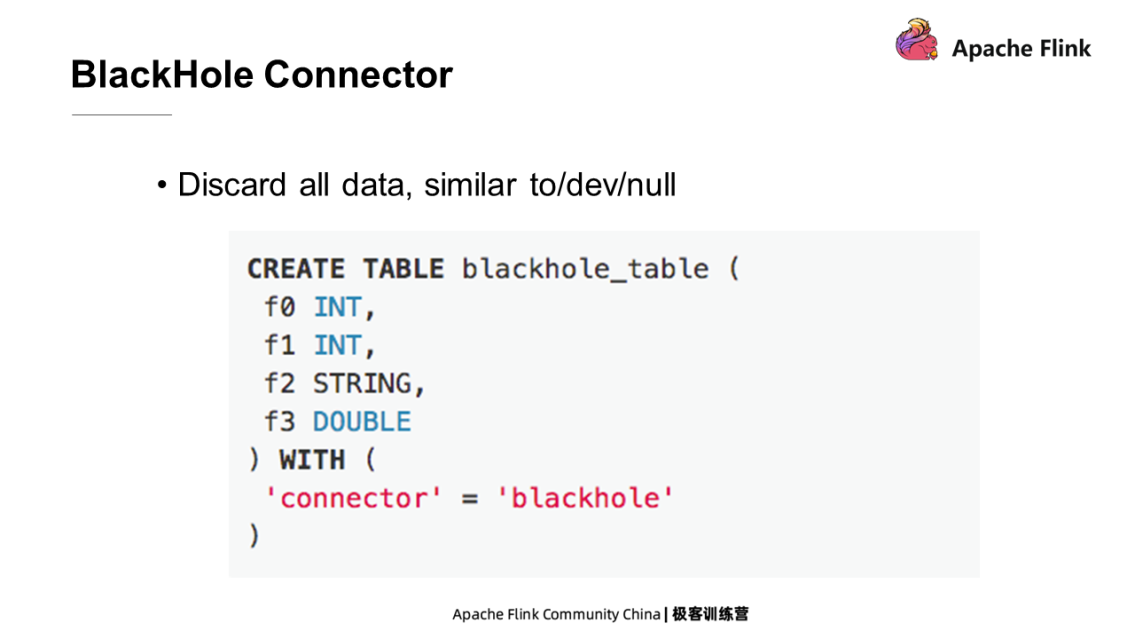
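The row-kind prefix matters most for updating results. The Python sketch below models it with Flink's four row kinds and their short strings (+I, -U, +U, -D). `format_row` and `print_sink` are hypothetical helpers; the optional identifier prefix loosely mirrors the connector's 'print-identifier' option and `standard_error` its 'standard-error' option, but the exact line layout varies across Flink versions and parallelism.

```python
import sys
from enum import Enum
from typing import Iterable, Sequence, Tuple


class RowKind(Enum):
    INSERT = "+I"
    UPDATE_BEFORE = "-U"
    UPDATE_AFTER = "+U"
    DELETE = "-D"


def format_row(kind: RowKind, fields: Sequence[object], identifier: str = "") -> str:
    """Render one row as '<kind>[f1, f2, ...]', optionally prefixed."""
    line = f"{kind.value}[{', '.join(map(str, fields))}]"
    return f"{identifier}> {line}" if identifier else line


def print_sink(rows: Iterable[Tuple[RowKind, Sequence[object]]],
               identifier: str = "", standard_error: bool = False) -> None:
    """Print every row to stdout (or stderr) like a print sink."""
    out = sys.stderr if standard_error else sys.stdout
    for kind, fields in rows:
        print(format_row(kind, fields, identifier), file=out)


# A running count per user: the old value is retracted before the new one.
print_sink([
    (RowKind.INSERT, ["alice", 1]),
    (RowKind.UPDATE_BEFORE, ["alice", 1]),
    (RowKind.UPDATE_AFTER, ["alice", 2]),
])
```

Without the prefix, the -U and +U lines for the same key would be indistinguishable from two separate inserts, which is why the Print sink shows the row kind.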

The BlackHole Connector is also a sink. It discards all data written to it and does nothing on each write, so it is mainly used for performance testing. To create a BlackHole table, set the connector type to blackhole.
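The reason a discarding sink is useful for performance testing is that it removes the sink's cost from the measurement, so throughput reflects only the upstream pipeline. The Python sketch below shows that idea; `BlackHoleSink` and `measure_throughput` are hypothetical stand-ins, not Flink APIs. In Flink SQL, a convenient pattern is to copy an existing table's schema, for example `CREATE TABLE blackhole_table WITH ('connector' = 'blackhole') LIKE source_table (EXCLUDING ALL)`.

```python
import time
from typing import Iterable, Tuple


class BlackHoleSink:
    """Accepts every row and keeps nothing, like the blackhole connector."""

    def write(self, row: object) -> None:
        pass  # intentionally discard the row


def measure_throughput(rows: Iterable[object], sink: BlackHoleSink) -> Tuple[int, float]:
    """Push all rows into the sink; return (row count, elapsed seconds)."""
    start = time.perf_counter()
    count = 0
    for row in rows:
        sink.write(row)
        count += 1
    return count, time.perf_counter() - start


count, elapsed = measure_throughput((i * i for i in range(100_000)), BlackHoleSink())
print(f"{count} rows in {elapsed:.4f}s")
```

Since `write` does no work, nearly all of the measured time is spent producing the rows, which is exactly the part a benchmark usually wants to isolate.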

Flink Course Series (8): Detailed Interpretation of Flink Connector
