By Li Yu (Jueding), Apache Flink PMC member and senior technical expert at Alibaba.

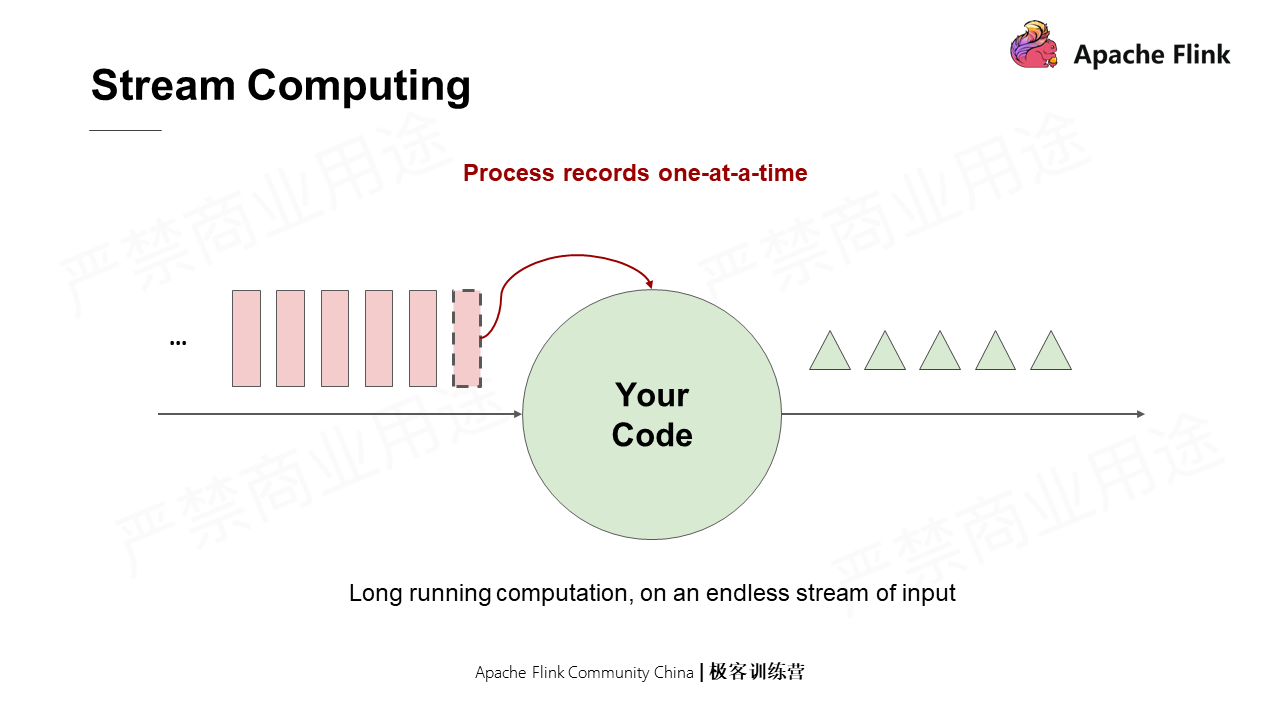

In stream computing, a data source continuously sends messages, while a long-running program processes each message as it arrives and outputs the results downstream.

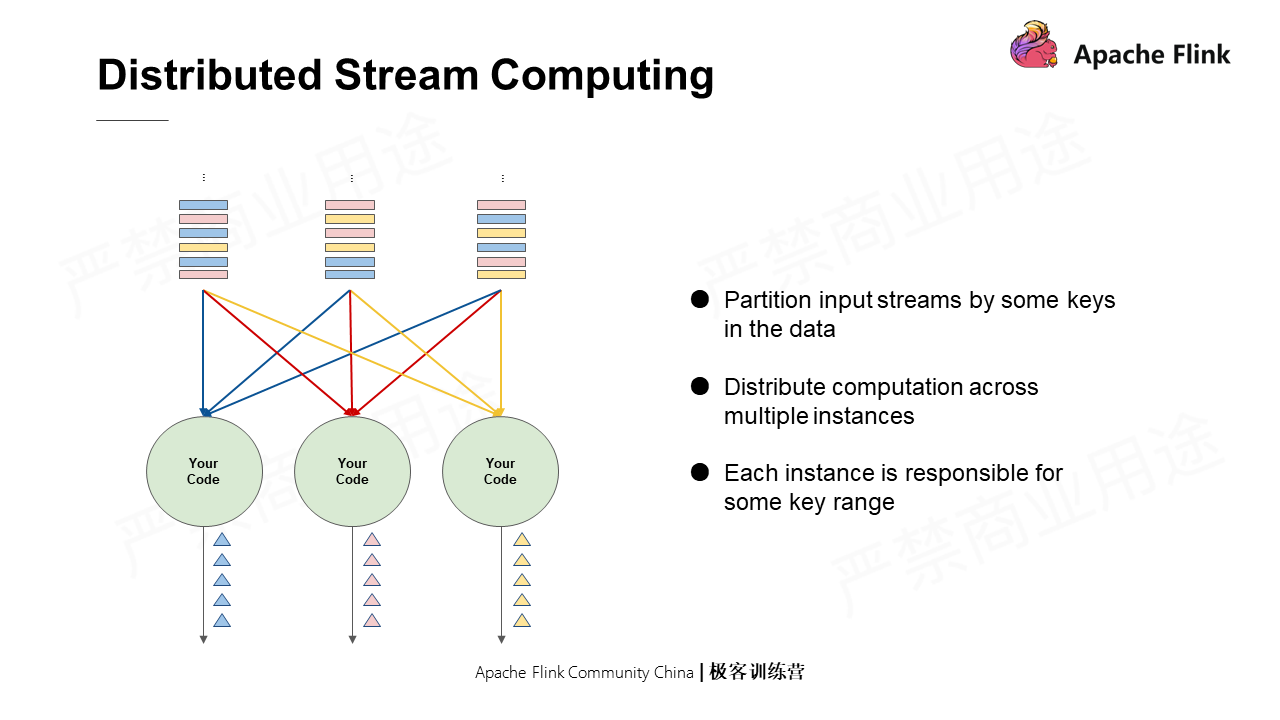

In distributed stream computing, the input stream is partitioned in some way and then processed by multiple distributed instances.

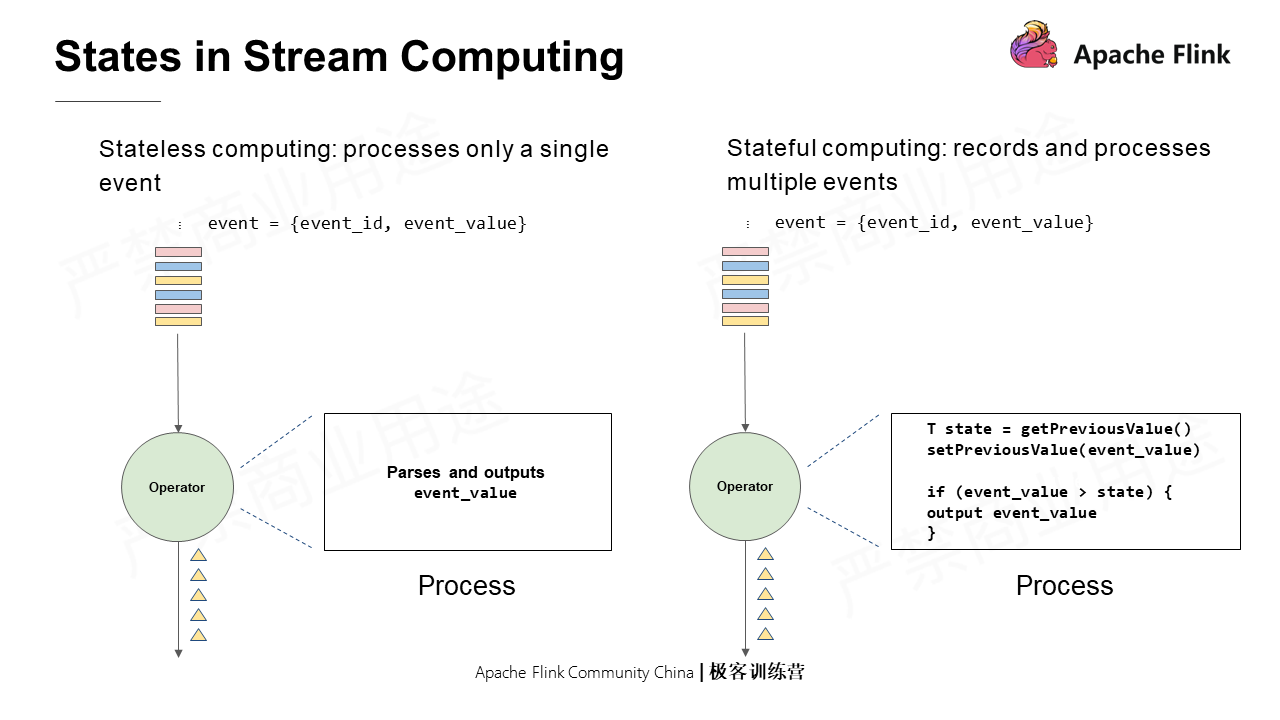

Computations can be classified as stateful or stateless. A stateless computation handles each event on its own, while a stateful computation must record information across multiple events.

Here is a simple example. Suppose an event is composed of an event ID and an event value. If the processing logic parses each received event and outputs its value, the computation is stateless. If instead it parses each received event and outputs the value only when that value is greater than the previous one, the computation is stateful, because the previous value must be remembered.
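The two cases can be sketched in a few lines of Python. This is only an illustration: the `(event ID, event value)` tuple shape and the function names are assumptions, and the first event is assumed to produce no output in the stateful case because it has no predecessor to compare against.

```python
from typing import Iterable, Iterator, Optional, Tuple

# Assumed event shape for illustration: (event ID, event value).
Event = Tuple[str, int]


def stateless_values(events: Iterable[Event]) -> Iterator[int]:
    """Emit every event value; nothing is remembered between events."""
    for _event_id, value in events:
        yield value


def stateful_increases(events: Iterable[Event]) -> Iterator[int]:
    """Emit a value only when it is greater than the previous event's value."""
    previous: Optional[int] = None  # the state: the last value seen
    for _event_id, value in events:
        if previous is not None and value > previous:
            yield value
        previous = value


events = [("e1", 3), ("e2", 5), ("e3", 4), ("e4", 9)]
print(list(stateless_values(events)))    # [3, 5, 4, 9]
print(list(stateful_increases(events)))  # [5, 9]
```

If the program crashes, the stateless version loses nothing, while the stateful version loses `previous`, which is why state has to be backed up.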

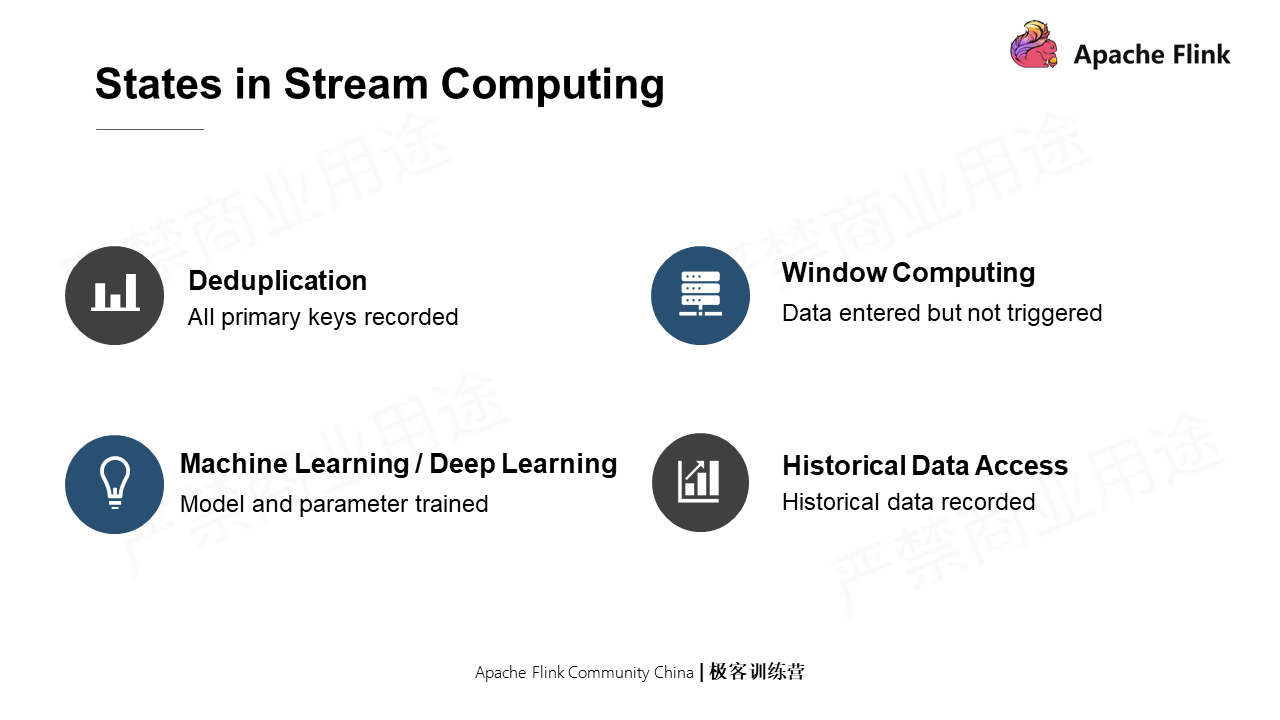

Stream computing involves many kinds of state. In deduplication, all primary keys seen so far are recorded. In window computing, data that has entered a window that has not yet been triggered is state. In machine learning or deep learning, the trained model and its parameters are state as well.
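As a small illustration of the deduplication case, the sketch below keeps the set of keys seen so far as its state. The function name and the use of an in-memory `set` are assumptions made for the example; a real job would keep this state in a state backend.

```python
from typing import Hashable, Iterable, Iterator


def deduplicate(keys: Iterable[Hashable]) -> Iterator[Hashable]:
    """Emit each primary key only the first time it appears."""
    seen = set()  # the state: every primary key observed so far
    for key in keys:
        if key not in seen:
            seen.add(key)
            yield key


print(list(deduplicate(["a", "b", "a", "c", "b"])))  # ['a', 'b', 'c']
```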

The global consistency snapshot serves as a mechanism for the backup and fault recovery of a distributed system.

Consider a distributed application. First, its processes are distributed across multiple servers. Second, each process has its own internal processing logic and state. Third, the processes can communicate with each other. Given such an application, with internal state in each process and communication channels between processes, its global state at a particular time is called a global snapshot.

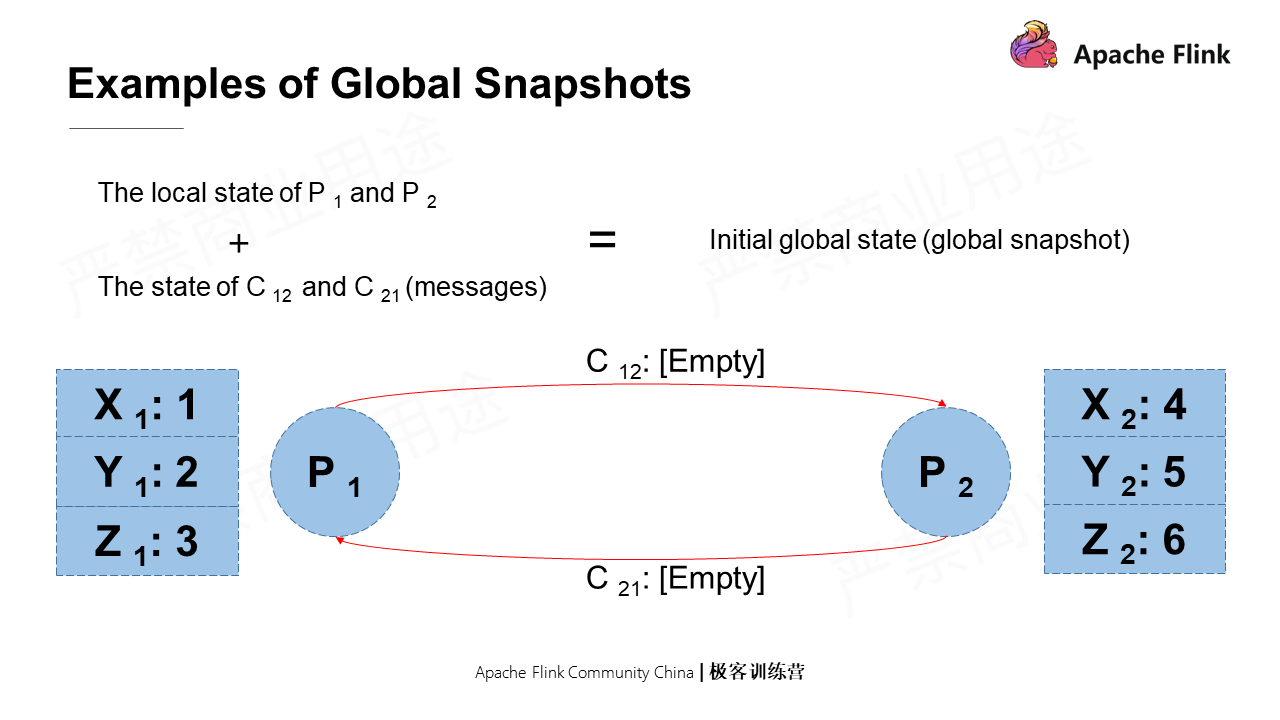

The following figure shows an example of a global snapshot in a distributed system.

P1 and P2 are two processes with message sending pipelines (C12 and C21) between them. For P1, C12 is the message sending pipeline called the output channel, while C21 is the message receiving pipeline called the input channel.

Apart from pipelines, each process has a local state. For example, P1 and P2 each hold three variables, X, Y, and Z, with their values in memory. The local states of P1 and P2, together with the states of the pipelines between them, form the initial global state, which is also a global snapshot.

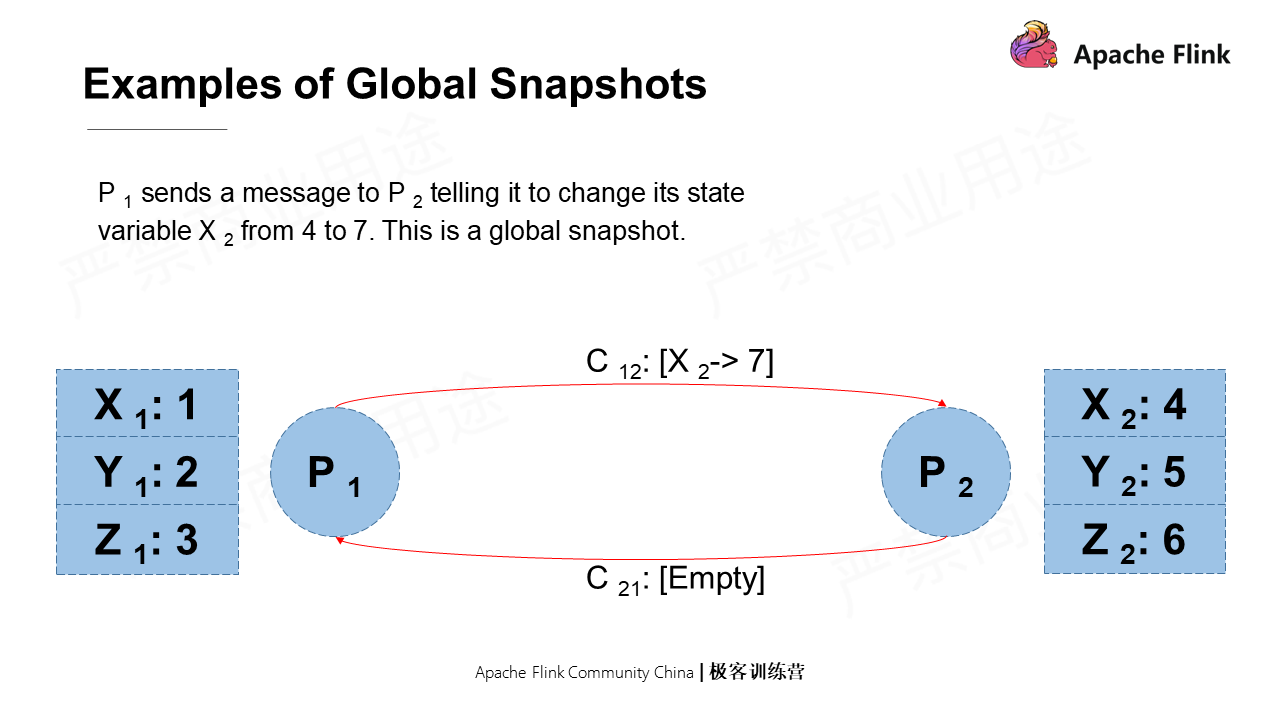

Assume that P1 sends a message to P2 telling it to change the value of X from 4 to 7. However, this message is still in the pipeline and has not yet reached P2. This intermediate state is also a global snapshot.

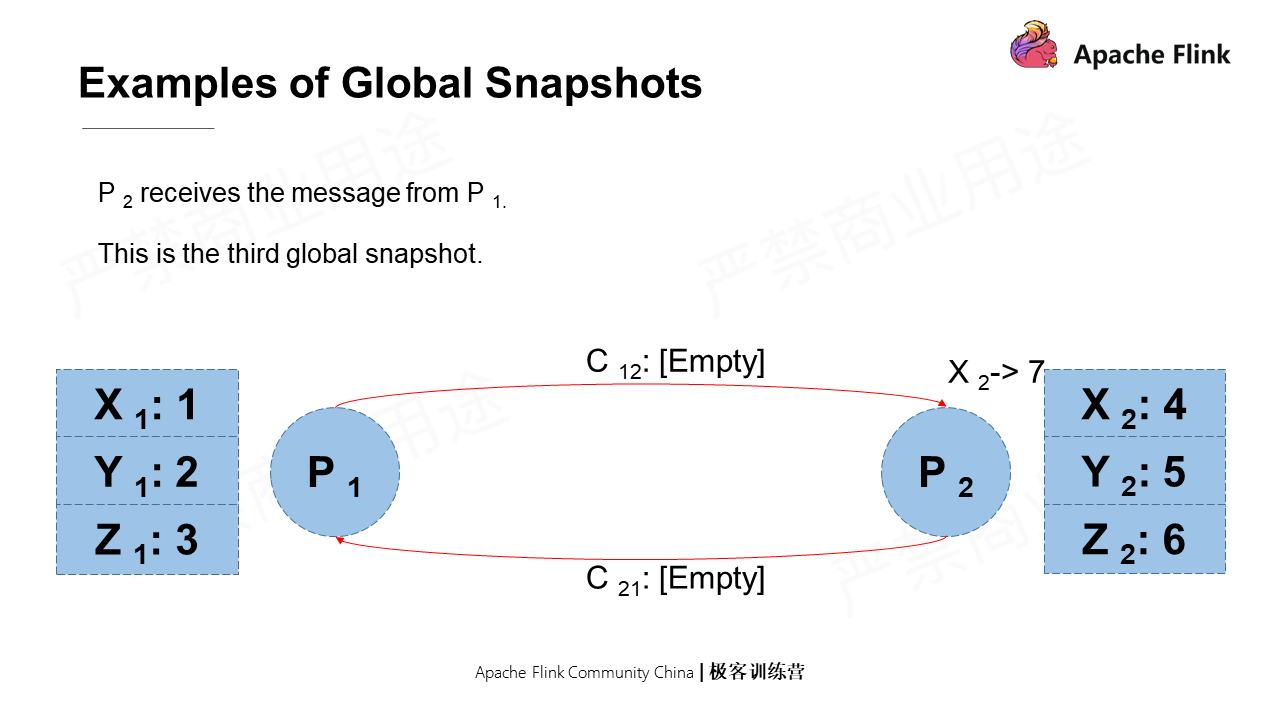

Assume next that P2 has received the message from P1 but has not yet processed it. This state is also a global snapshot.

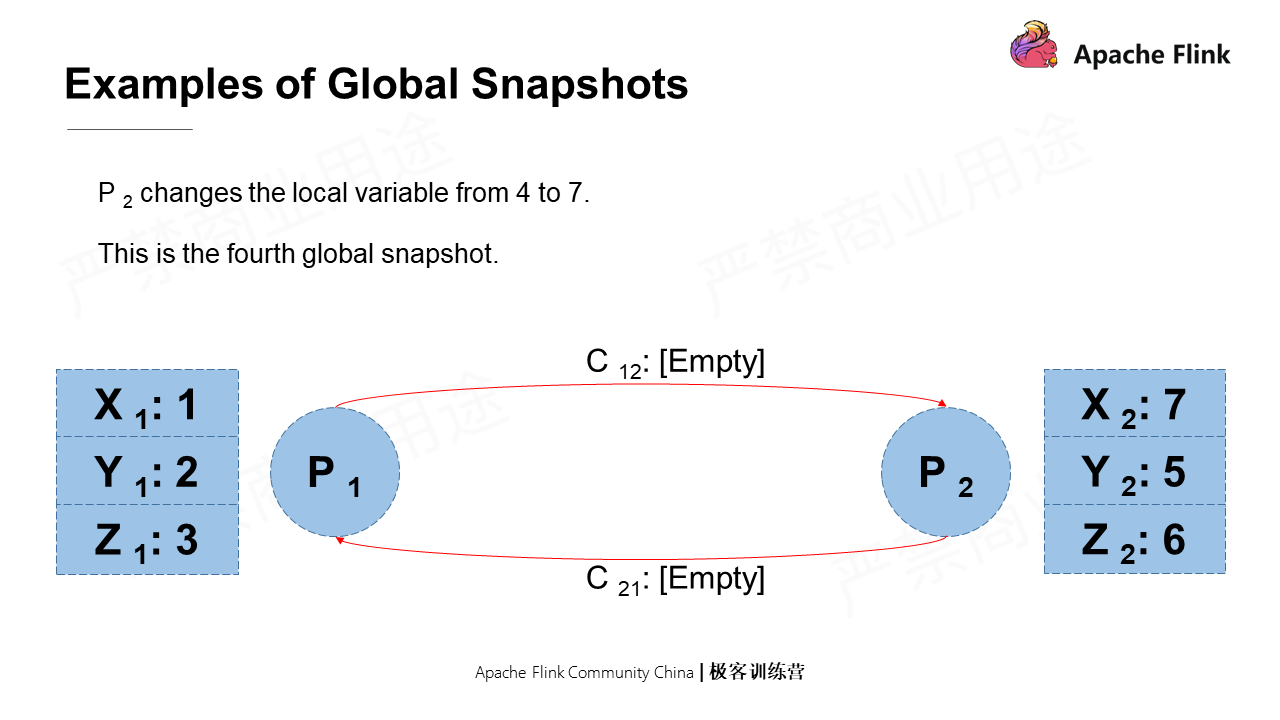

Lastly, P2 changes its local value of X from 4 to 7. The resulting state is a global snapshot as well.

As these examples show, the global state changes whenever an event occurs. Events include a process sending a message, a process receiving a message, and a process changing its own state.

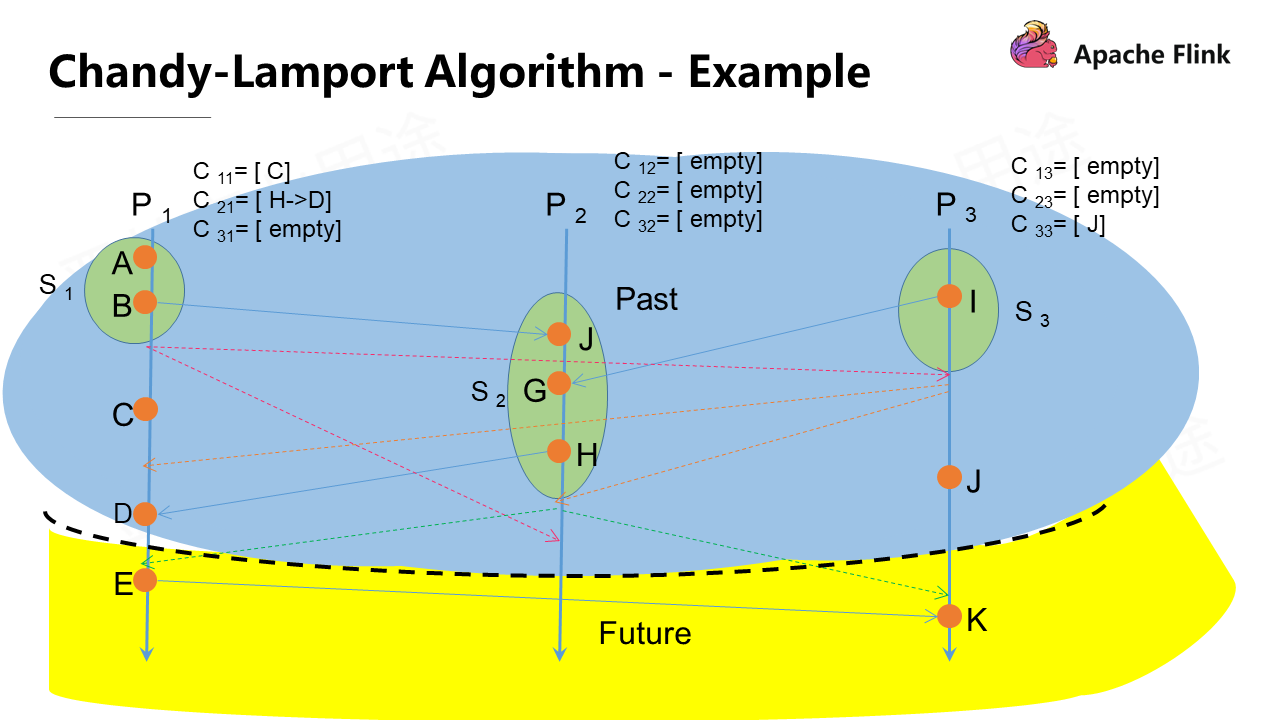

Suppose that there are two events, a and b. In absolute time, if a occurs before b and b is included in the snapshot, a is also included in the snapshot. Global snapshots that meet this condition are called global consistency snapshots.
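The condition can be checked mechanically. The sketch below is a minimal illustration, assuming a snapshot is represented as the set of events it includes and the ordering is given as `(a, b)` pairs meaning "a occurs before b"; the event names `send`, `receive`, and `apply` are hypothetical labels for the three steps of the X = 4 to 7 example.

```python
from typing import Iterable, Set, Tuple


def is_consistent(snapshot: Set[str], happened_before: Iterable[Tuple[str, str]]) -> bool:
    """True if, for every pair (a, b) with b in the snapshot, a is in it too."""
    return all(a in snapshot for a, b in happened_before if b in snapshot)


# P1 sends the message, P2 receives it, then P2 applies the change to X.
order = [("send", "receive"), ("receive", "apply")]

print(is_consistent({"send"}, order))             # True
print(is_consistent({"send", "receive"}, order))  # True
print(is_consistent({"receive"}, order))          # False: the send is missing
```

A snapshot that contains the receipt of a message but not its sending fails the check, which is exactly the situation a consistent snapshot rules out.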

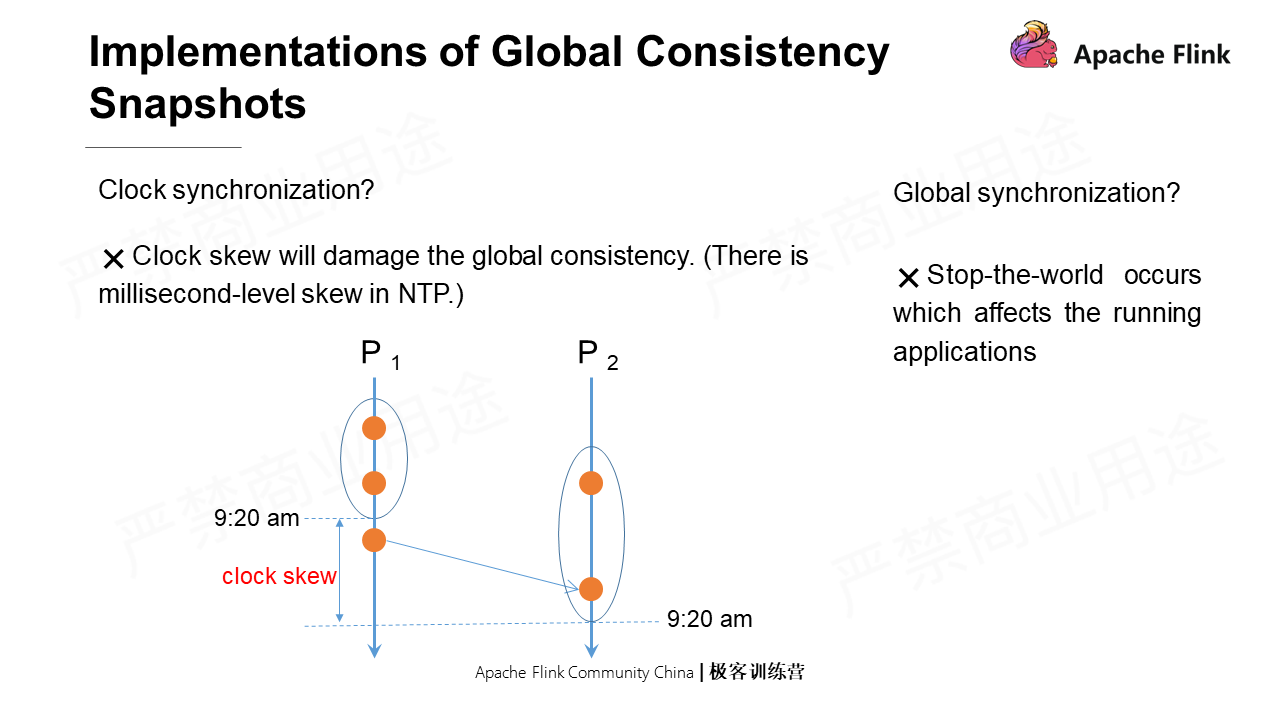

Clock synchronization cannot implement global consistency snapshots. Although global synchronization can do this, it has an obvious disadvantage: it will stop all applications, thus affecting the global performance.

The asynchronous Chandy-Lamport algorithm produces global consistency snapshots without stopping the running applications.

Several requirements for the Chandy-Lamport system are as follows:

The execution of the Chandy-Lamport algorithm also requires that messages be delivered reliably, in order, and without duplication.

The execution process of Chandy-Lamport is divided into three parts: initiate a snapshot, execute the snapshot in a distributed way, and terminate the snapshot.

Initiate a Snapshot

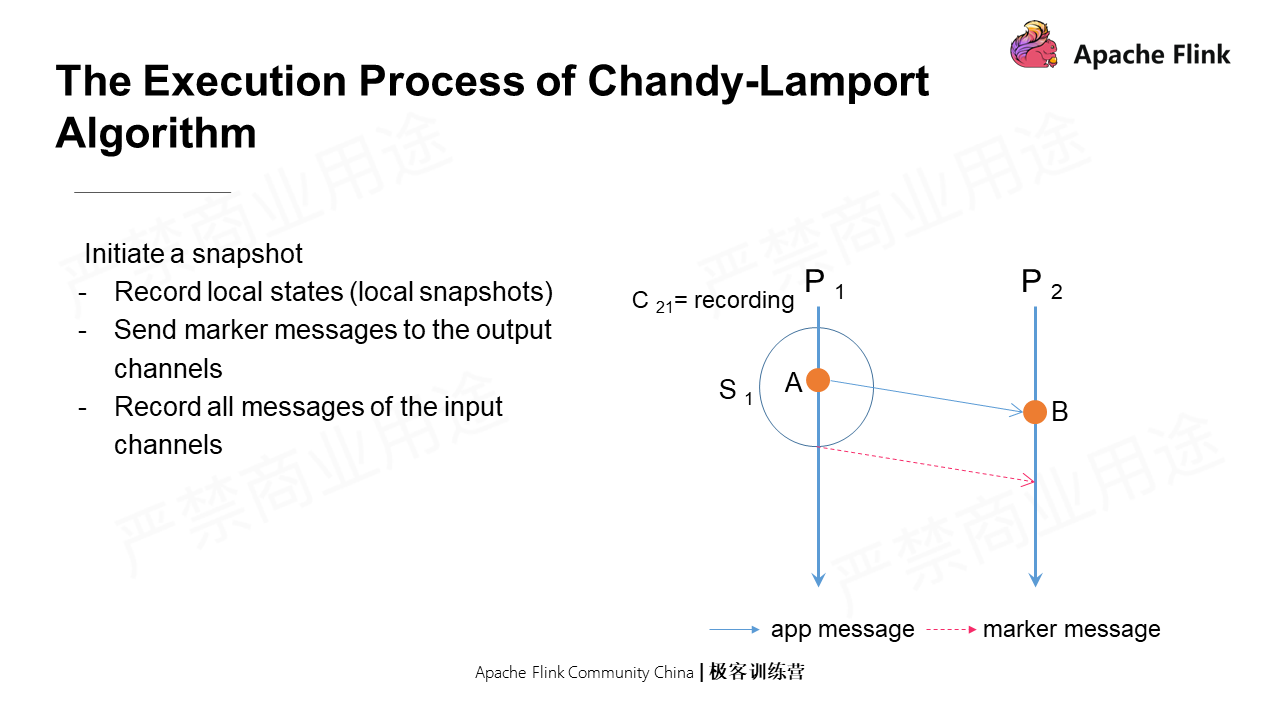

Any process can initiate a snapshot. As shown in the following figure, when P1 initiates a snapshot, the first step is to record the local states, that is, to take a local snapshot. Then, it immediately sends a marker message to all its output channels. There is no time gap in between. The marker message is a special message which is different from the messages transmitted between applications.

After sending a marker message, P1 starts to record all messages of its input channel, namely the messages in the C21 in the figure.

Execute the Snapshot in a Distributed Way

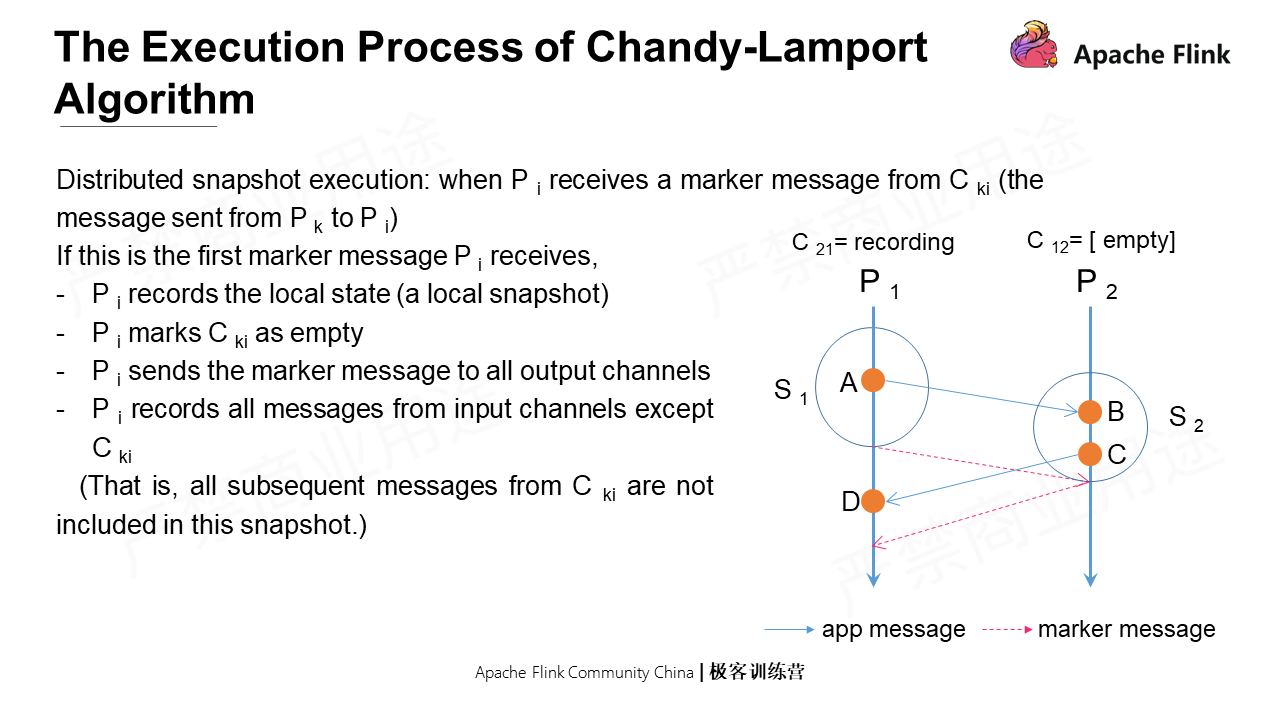

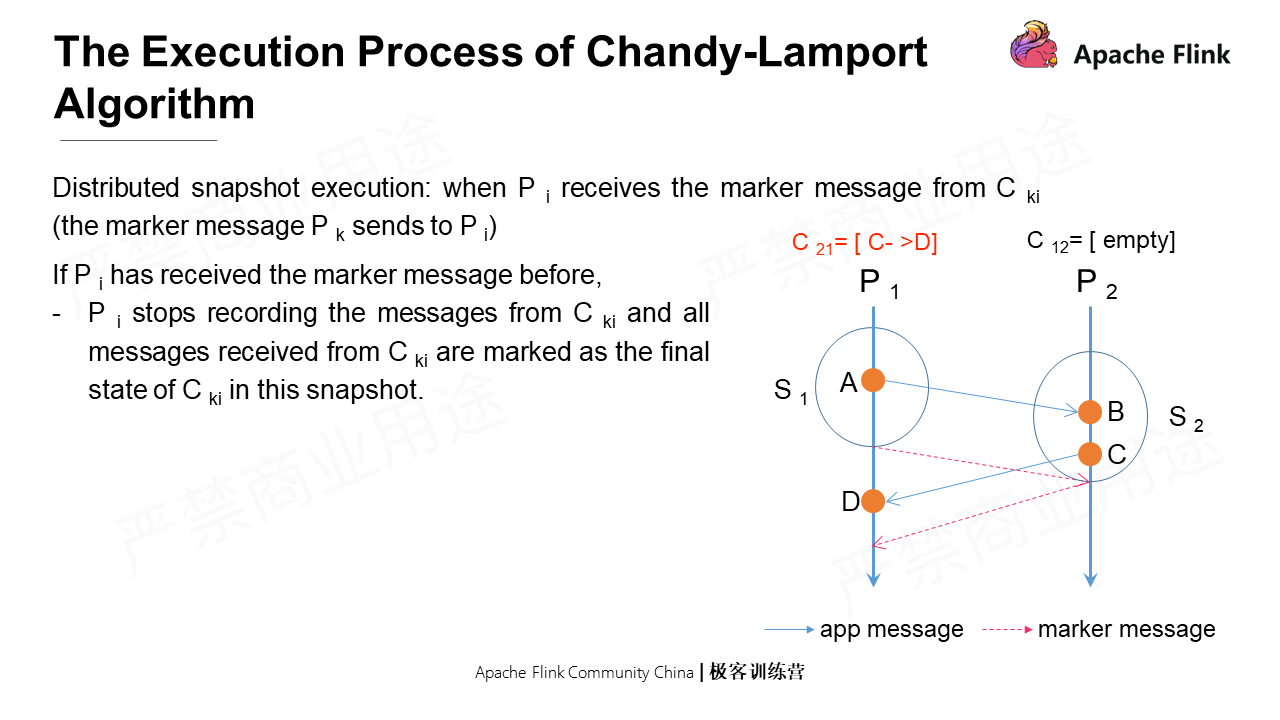

As shown in the following figure, assume that Pi receives a marker message on channel Cki, that is, a marker that Pk sent to Pi. There are two cases:

In the first case, this is the first marker message that Pi receives from any channel. Pi records its local state first and then marks Cki as empty, which means that subsequent messages sent from Pk are not included in this snapshot. At the same time, Pi immediately sends a marker message on all its output channels. Lastly, it starts recording the messages that arrive on all input channels except Cki.

From that point on, messages arriving on Cki are excluded from the snapshot, but they are still delivered to Pi and processed as usual. In the second case, Pi has already received a marker message before. It stops recording messages on Cki and saves all messages previously recorded on Cki as the final state of Cki in this snapshot.
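The initiation step and the two marker cases can be put together in a small simulation. This is a sketch under stated assumptions, not a reference implementation: the `Process` class, the `network` dictionary of FIFO queues, and the convention that an application message is a `(key, value)` pair that overwrites a state variable are all invented for illustration.

```python
from collections import deque

MARKER = "MARKER"  # special control message, distinct from application messages


class Process:
    def __init__(self, name, state, network):
        self.name = name
        self.state = state
        self.network = network      # (sender, receiver) -> deque, one FIFO per channel
        self.local_snapshot = None  # recorded local state
        self.channel_snapshot = {}  # sender -> messages recorded for that input channel
        self.recording = set()      # input channels still being recorded

    def inputs(self):
        return [src for (src, dst) in self.network if dst == self.name]

    def outputs(self):
        return [dst for (src, dst) in self.network if src == self.name]

    def _record_and_broadcast(self, closed_channel=None):
        self.local_snapshot = dict(self.state)  # take the local snapshot
        for src in self.inputs():
            self.channel_snapshot[src] = []     # every channel starts out empty
        self.recording = {s for s in self.inputs() if s != closed_channel}
        for dst in self.outputs():              # markers go out immediately
            self.network[(self.name, dst)].append(MARKER)

    def initiate(self):
        self._record_and_broadcast()

    def receive(self, src):
        message = self.network[(src, self.name)].popleft()
        if message == MARKER:
            if self.local_snapshot is None:     # case 1: first marker seen
                self._record_and_broadcast(closed_channel=src)
            else:                               # case 2: stop recording this channel
                self.recording.discard(src)
            return
        if src in self.recording:               # in-flight message: part of channel state
            self.channel_snapshot[src].append(message)
        key, value = message                    # normal processing continues either way
        self.state[key] = value

    def done(self):
        return self.local_snapshot is not None and not self.recording


network = {("P1", "P2"): deque(), ("P2", "P1"): deque()}
p1 = Process("P1", {"Y": 1}, network)
p2 = Process("P2", {"X": 4}, network)

network[("P2", "P1")].append(("Y", 9))  # already in flight when the snapshot starts
p1.initiate()
network[("P1", "P2")].append(("X", 7))  # sent by P1 after its marker
p1.receive("P2")  # ("Y", 9) is recorded as state of C21
p2.receive("P1")  # first marker at P2: snapshot {"X": 4}, C12 marked empty
p2.receive("P1")  # ("X", 7) is processed but not part of the snapshot
p1.receive("P2")  # marker from P2: P1 stops recording C21

print(p1.local_snapshot, p1.channel_snapshot)  # {'Y': 1} {'P2': [('Y', 9)]}
print(p2.local_snapshot, p2.channel_snapshot)  # {'X': 4} {'P1': []}
```

The recorded snapshot captures X = 4 and Y = 1 plus the in-flight message, even though both processes kept running and have since moved on to X = 7 and Y = 9.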

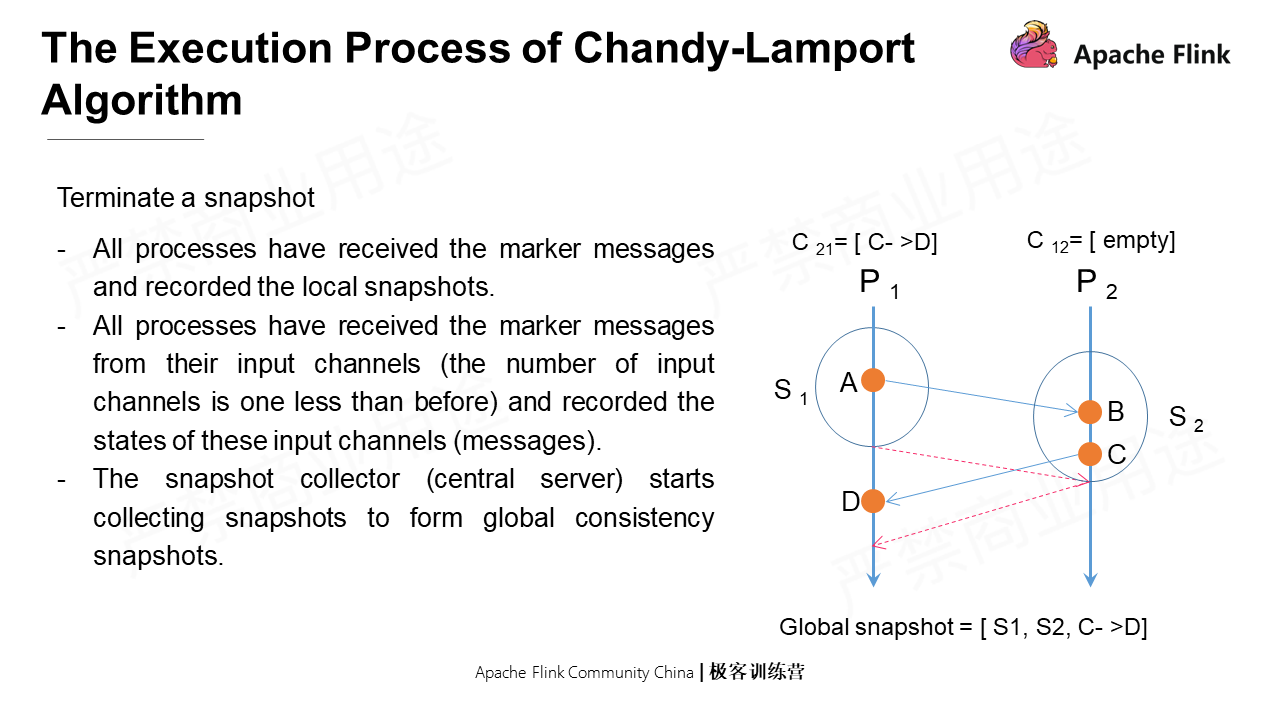

Terminate a Snapshot

There are two conditions to terminate a snapshot:

When the snapshot is terminated, the snapshot collector (Central Server) starts collecting snapshots to form global consistency snapshots.

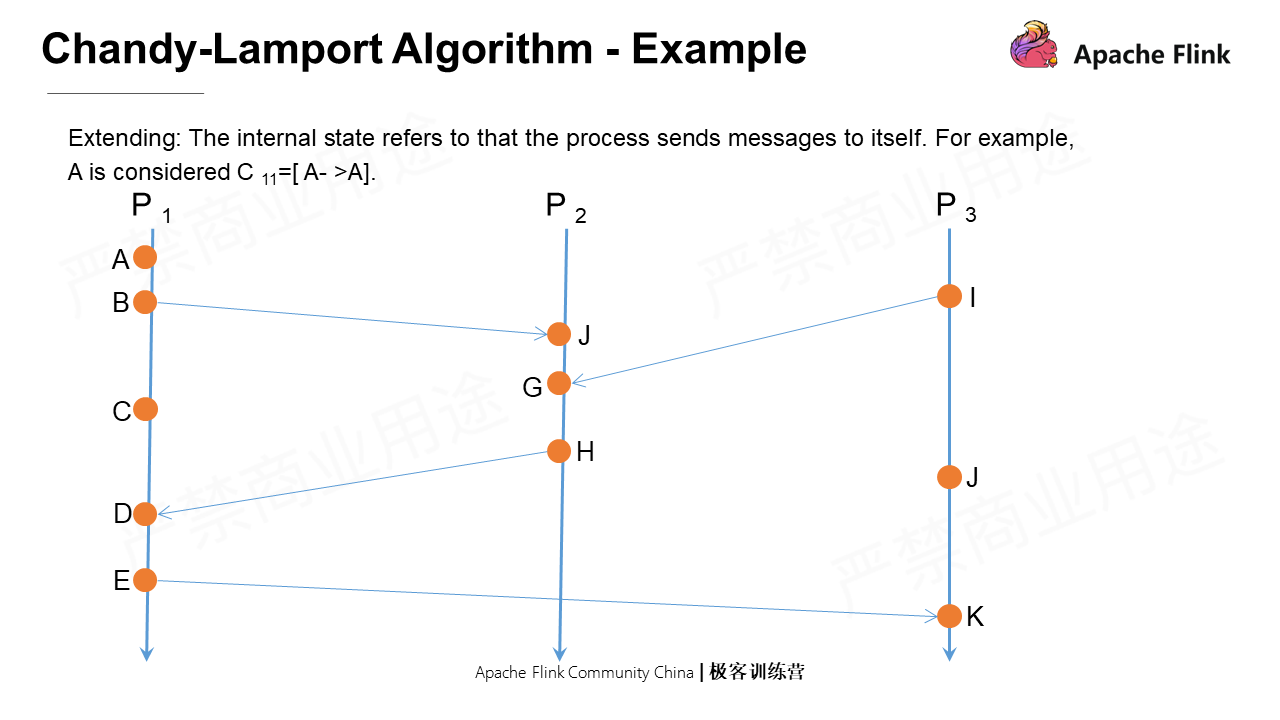

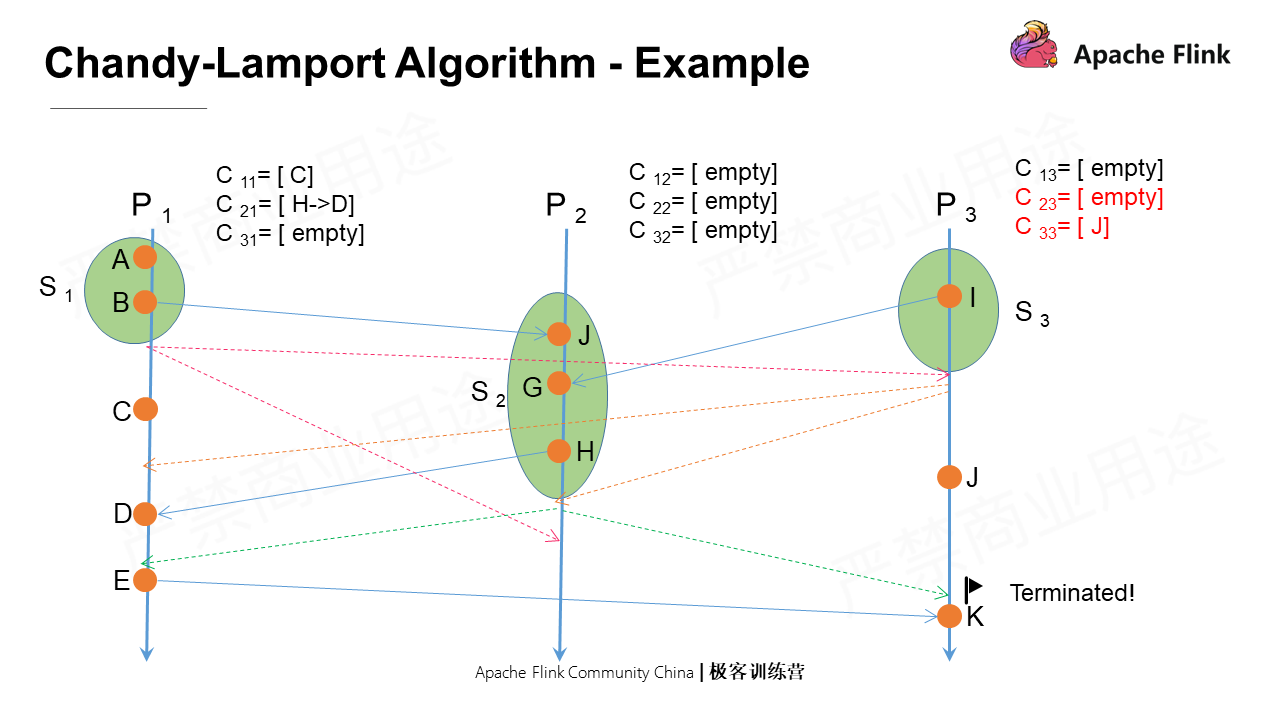

Example

In the following example, some events are internal to a process, such as A, which involves no interaction with other processes. An internal event can be treated as P1 sending a message to itself, so A can be regarded as C11=[A->].

How is Chandy-Lamport, the global consistency snapshot algorithm, executed?

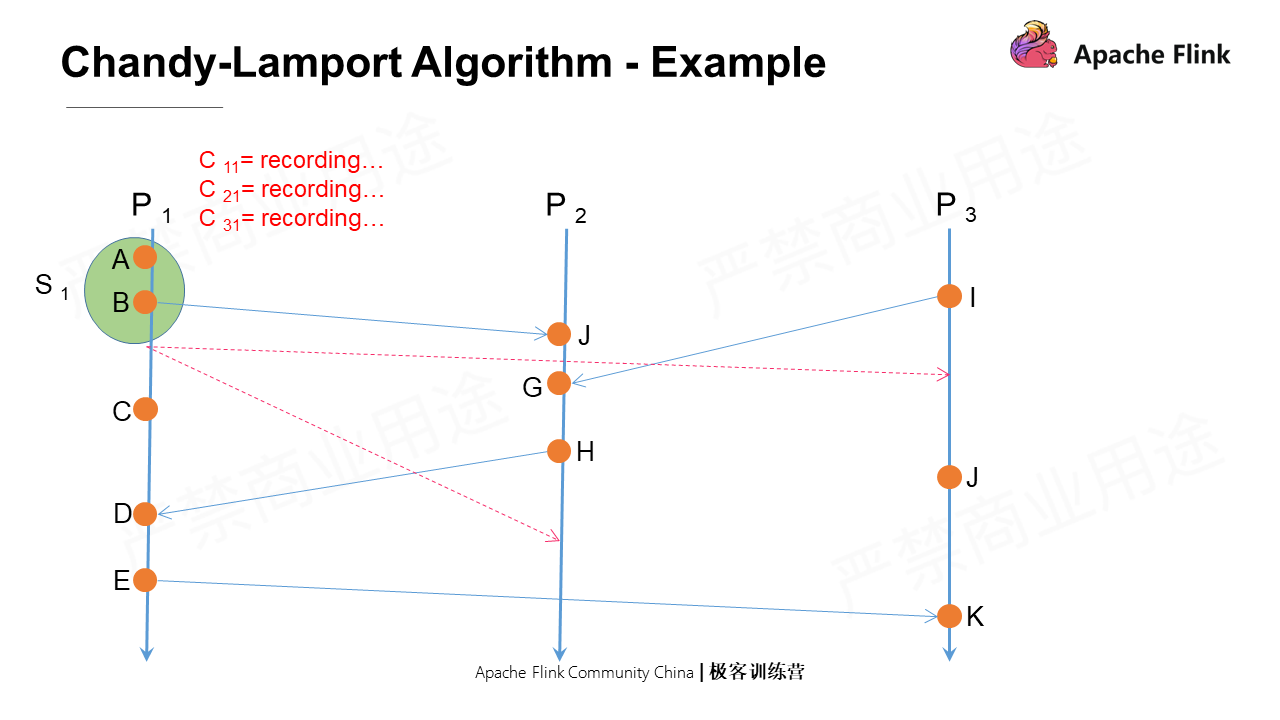

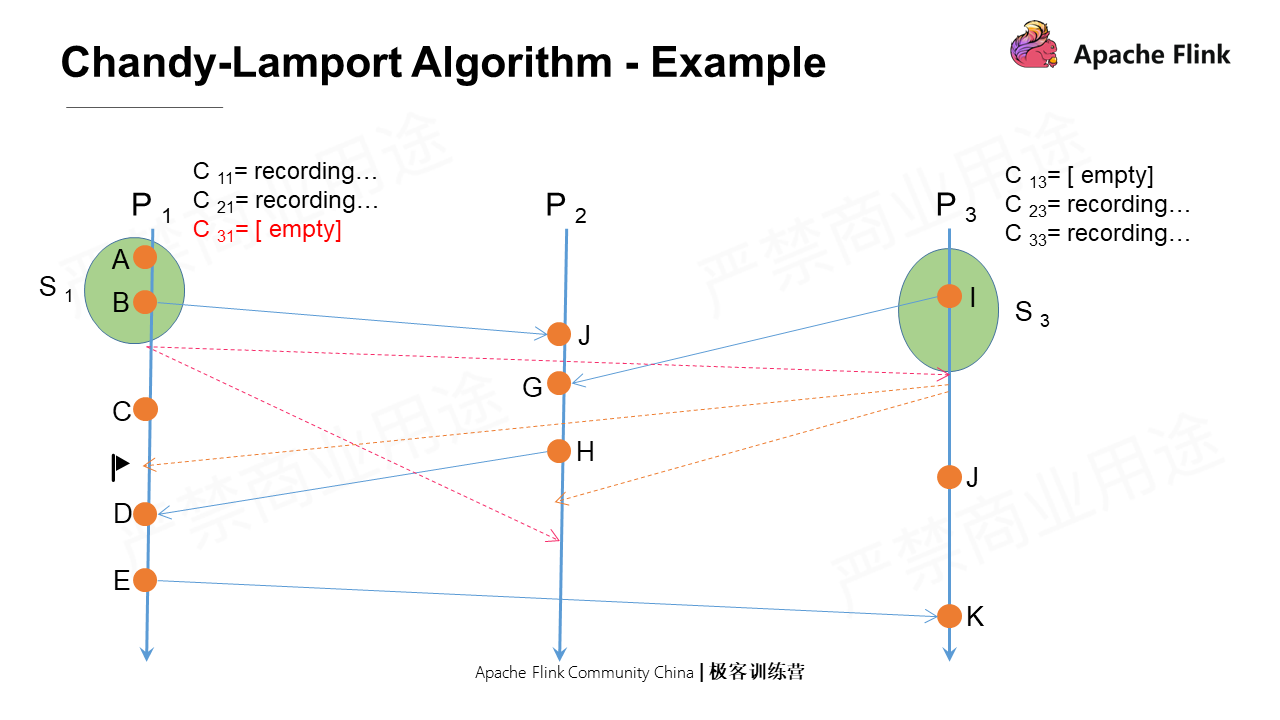

Assume that P1 initiates a snapshot. It first takes a snapshot of its local state, called S1. Then, it immediately sends marker messages on all its output channels, namely those to P2 and P3. After that, it records the messages arriving on all its input channels, that is, from P2, from P3, and from itself.

As shown in the figure, the vertical axis indicates absolute time. Why do P3 and P2 receive the marker message at different absolute times? In a real physical environment, network conditions differ between nodes, so message arrival times differ as well.

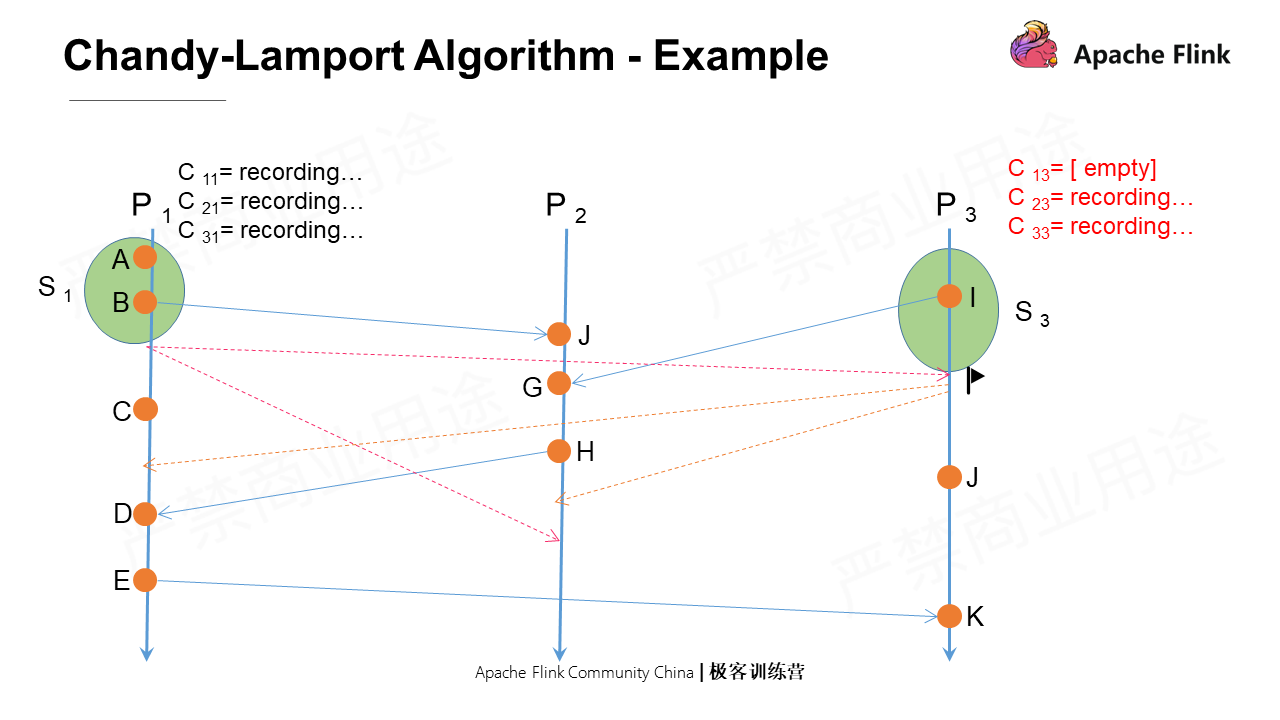

First, P3 receives a marker message from P1, which is the first marker message it receives. It takes a snapshot of its local state and then marks C13 as closed. At the same time, it sends marker messages on all its output channels. Lastly, it records messages from all input channels except C13.

P1 receives a marker message from P3, which is not the first marker message it receives. It immediately closes C31 and saves the messages recorded so far as the snapshot of this channel. Messages that arrive from P3 afterward are not added to this snapshot.

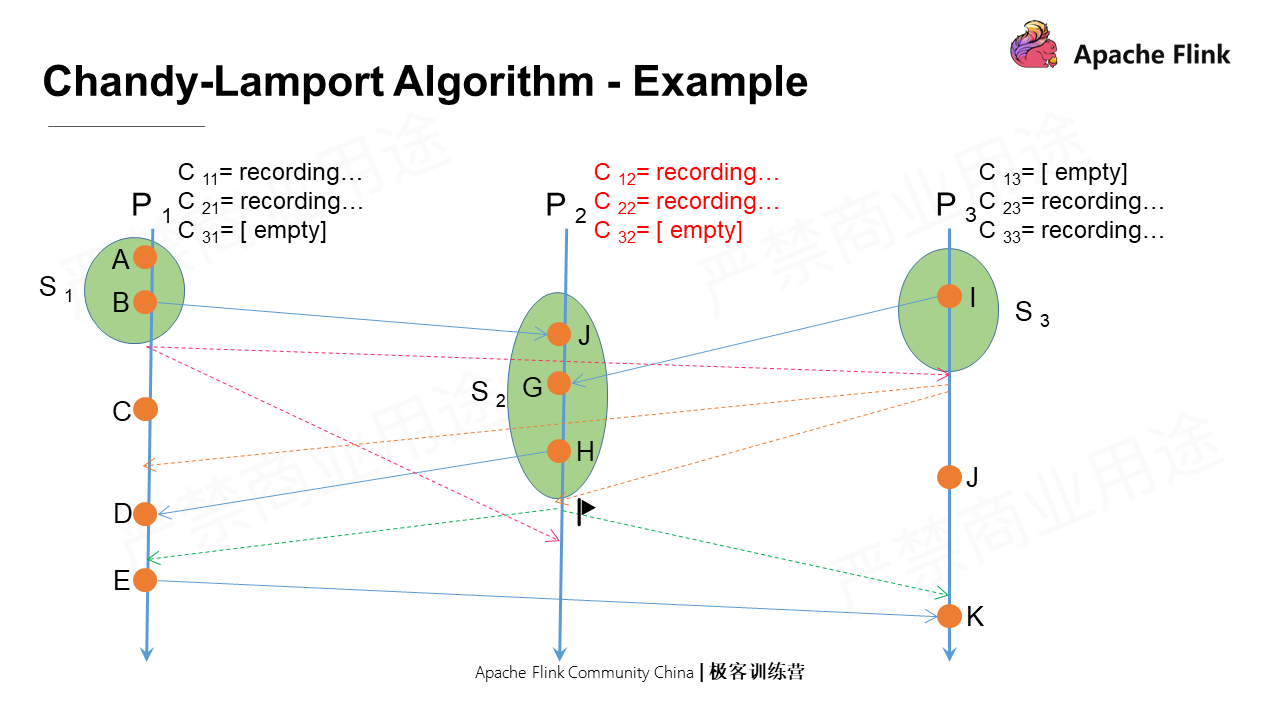

Next, P2 receives a marker message from P3, which is the first marker message it receives. It takes a snapshot of its local state and then marks C32 as closed. At the same time, it sends marker messages on all its output channels. Lastly, it records messages from all input channels except C32.

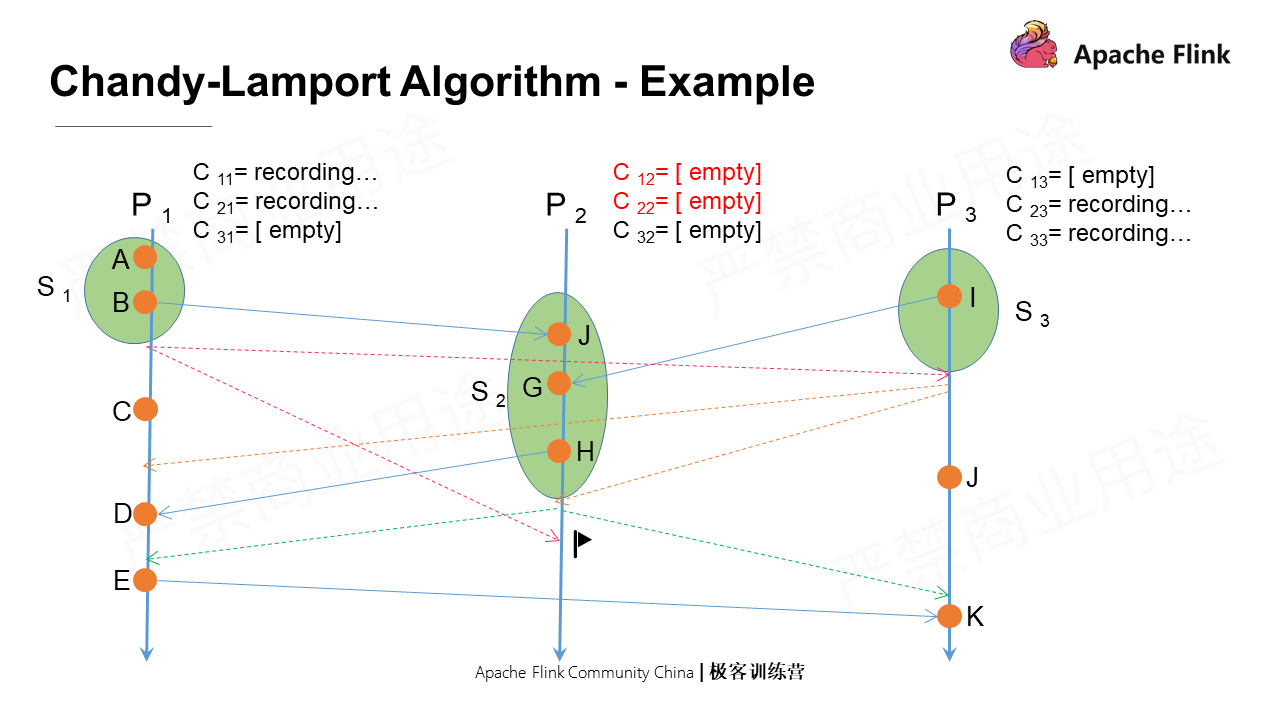

P2 receives a marker message from P1, which is not the first marker message it receives. Therefore, it closes all input channels and records the states of the channels.

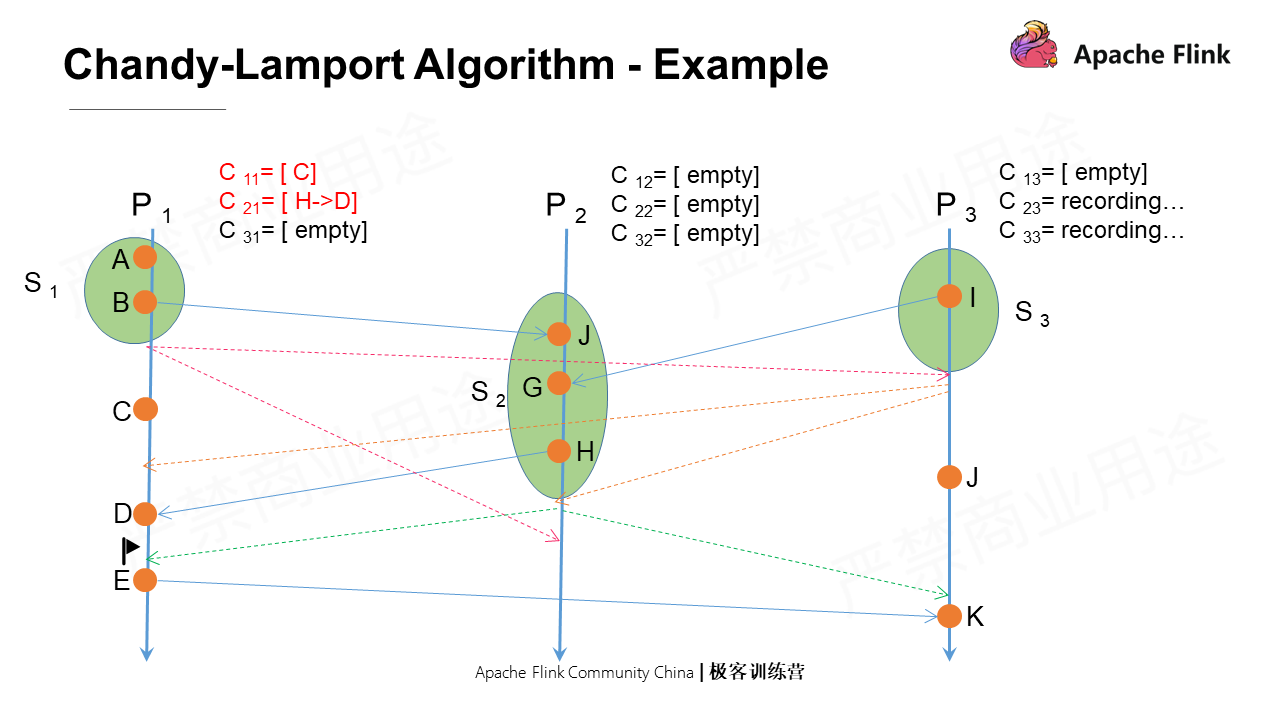

Next, P1 receives a marker message from P2, which is not the first marker message it receives. It closes all its remaining input channels and saves the recorded messages as channel states. There are two such states: C11, the messages that P1 sent to itself, and C21, the message sent from H in P2 to D in P1.

Finally, P3 receives a marker message from P2, which is not the first marker message it receives. The operation is the same as described above. In this process, P3 has a local event J, which is recorded as part of its state.

When all processes have finished recording local states and all input pipelines of each process have been closed, the global consistency snapshot ends. This means that the global state record of the past time point is completed.

Flink is a distributed system that uses global consistency snapshots to form checkpoints for fault recovery. The asynchronous global consistency snapshot algorithm of Flink differs from the Chandy-Lamport algorithm in the following aspects:

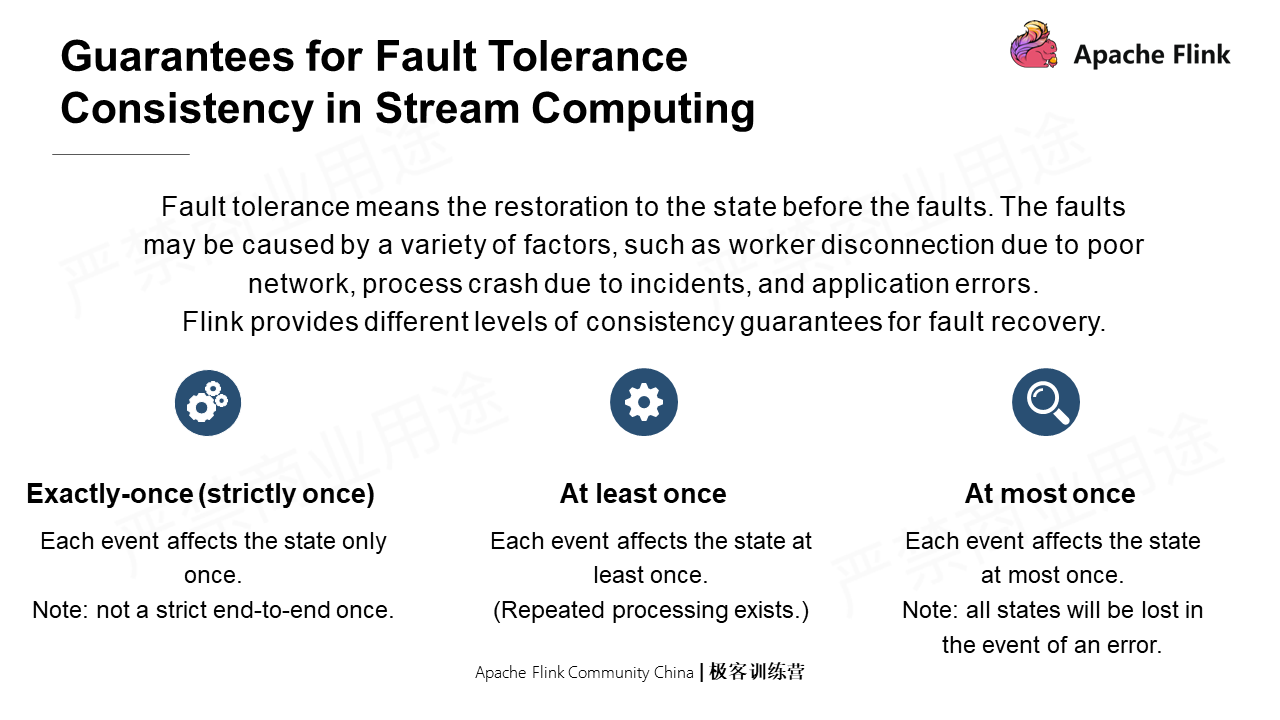

Fault tolerance means restoring the state that existed before the fault. Stream computing offers three consistency guarantees for fault tolerance: Exactly once, At least once, and At most once.

End-to-end Exactly-once

With Exactly once, the computed results are always correct, but they may be output more than once. This requires a rewindable source.

With end-to-end Exactly once, the results are correct and are output only once. In addition to a rewindable source, this requires a transactional sink, or a sink that accepts idempotent writes.
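The role of idempotent output can be seen in a toy comparison. The sketch assumes that, after a recovery, the job re-emits a result it had already written; `AppendSink` and `IdempotentSink` are hypothetical classes for illustration and do not correspond to any Flink connector.

```python
class AppendSink:
    """Appends every write, so a replayed result shows up twice."""

    def __init__(self):
        self.rows = []

    def write(self, key, value):
        self.rows.append((key, value))


class IdempotentSink:
    """Upserts by key, so writing the same result again changes nothing."""

    def __init__(self):
        self.rows = {}

    def write(self, key, value):
        self.rows[key] = value


results = [("k1", 10), ("k2", 20)]
replayed = results + results[1:]  # after recovery, ("k2", 20) is emitted again

append_sink, idempotent_sink = AppendSink(), IdempotentSink()
for key, value in replayed:
    append_sink.write(key, value)
    idempotent_sink.write(key, value)

print(append_sink.rows)      # [('k1', 10), ('k2', 20), ('k2', 20)]
print(idempotent_sink.rows)  # {'k1': 10, 'k2': 20}
```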

The State Fault Tolerance of Flink

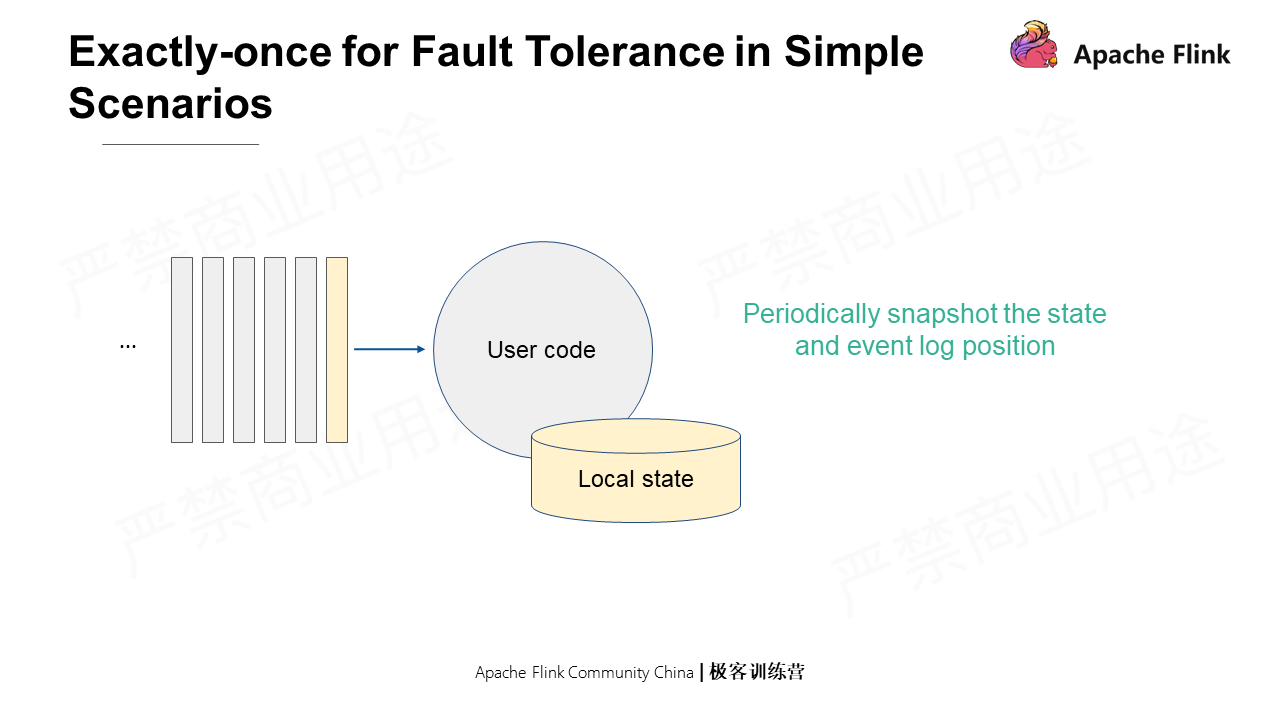

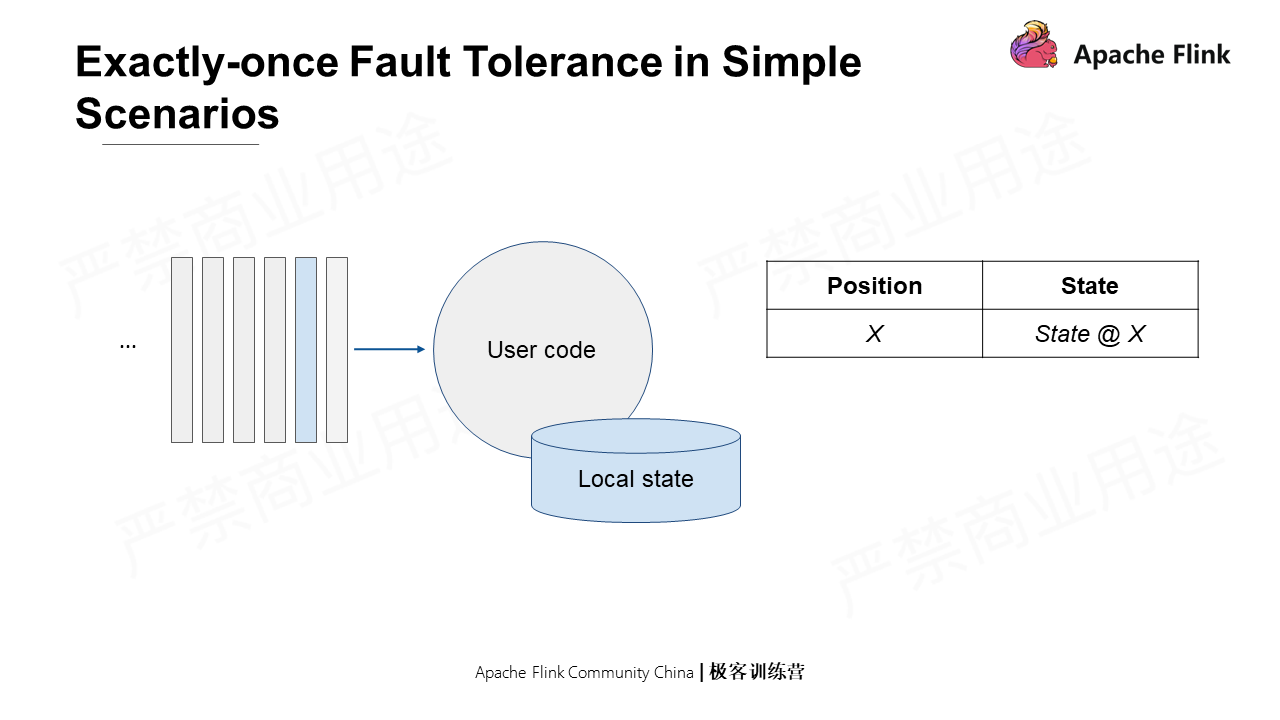

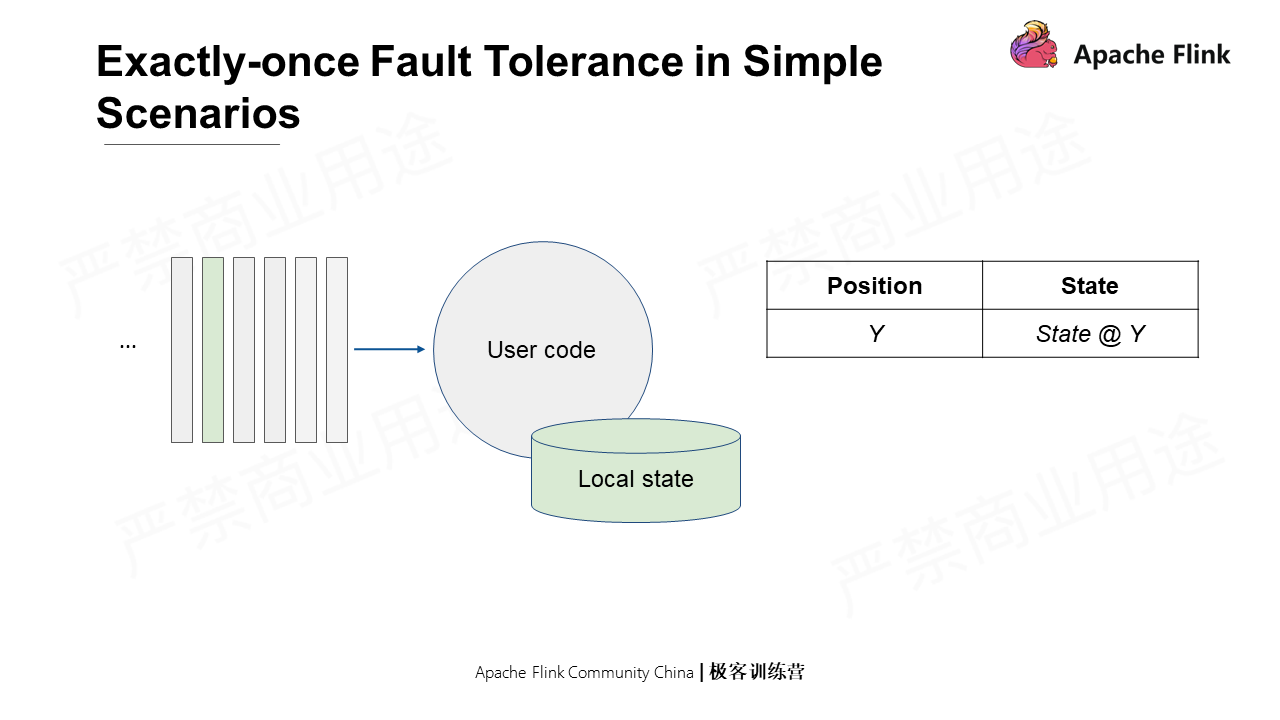

Many scenarios require Exactly-once semantics, that is, each request is processed only once. How can such semantics be ensured?

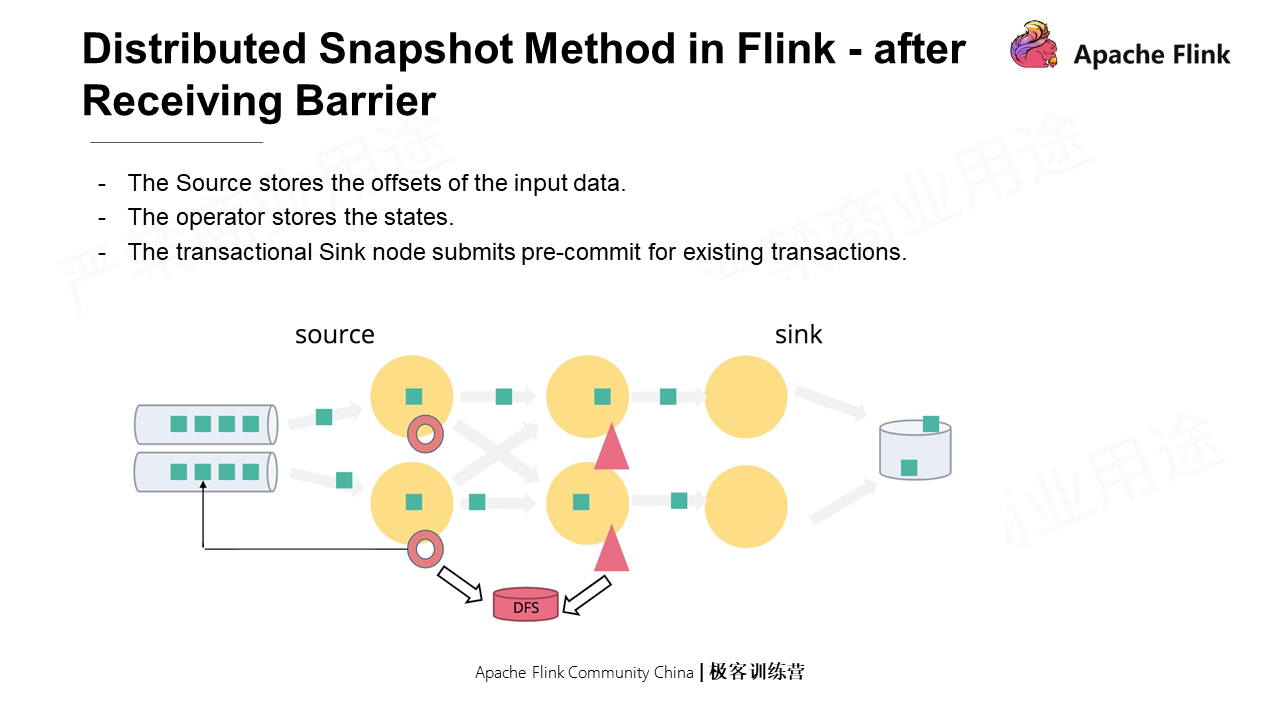

As shown in the following figure, record the local state together with the offset of the source, that is, the current position in the event log.

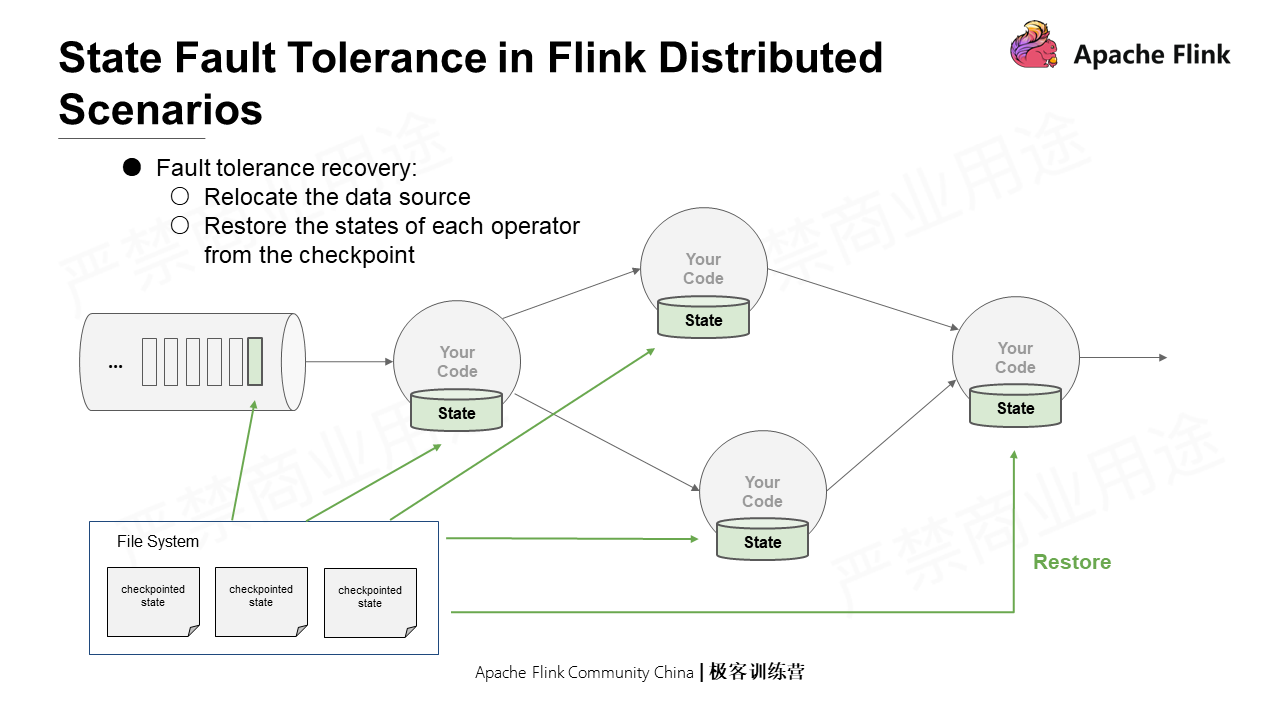

In a distributed scenario, a global consistency snapshot must be generated for multiple operators with local state, without interrupting the computation. The job topology in Flink is special: it is a directed, acyclic, weakly connected graph. This allows a tailored version of Chandy-Lamport that records only the input offsets and the state of each operator. With a rewindable source, earlier positions can be re-read by offset, so the state of the channels does not need to be stored. This saves considerable storage space when the job contains aggregation logic.

The final step is recovery: reset the position of the data source and restore the state of each operator from the checkpoint.
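For a single operator, recording the source offset together with the local state, and restoring both on recovery, can be sketched as follows. The `SummingJob` class and its running-sum logic are assumptions chosen to keep the example small; the list stands in for a rewindable source that can be re-read from any offset.

```python
class SummingJob:
    def __init__(self, log):
        self.log = log    # rewindable source: can be re-read from any offset
        self.offset = 0   # position in the event log
        self.total = 0    # operator state

    def process_next(self):
        self.total += self.log[self.offset]
        self.offset += 1

    def checkpoint(self):
        # The source offset and the operator state are recorded together.
        return {"offset": self.offset, "total": self.total}

    def restore(self, snapshot):
        self.offset = snapshot["offset"]  # reset the position of the source
        self.total = snapshot["total"]    # restore the operator state


job = SummingJob([1, 2, 3, 4, 5])
job.process_next()
job.process_next()
snapshot = job.checkpoint()  # {'offset': 2, 'total': 3}

job.process_next()           # progress made after the checkpoint is lost...
job.restore(snapshot)        # ...when the job fails and recovers

while job.offset < len(job.log):
    job.process_next()
print(job.total)             # 15
```

Because the offset and the state come from the same checkpoint, the event with value 3 is counted once in the final result even though it was processed twice.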

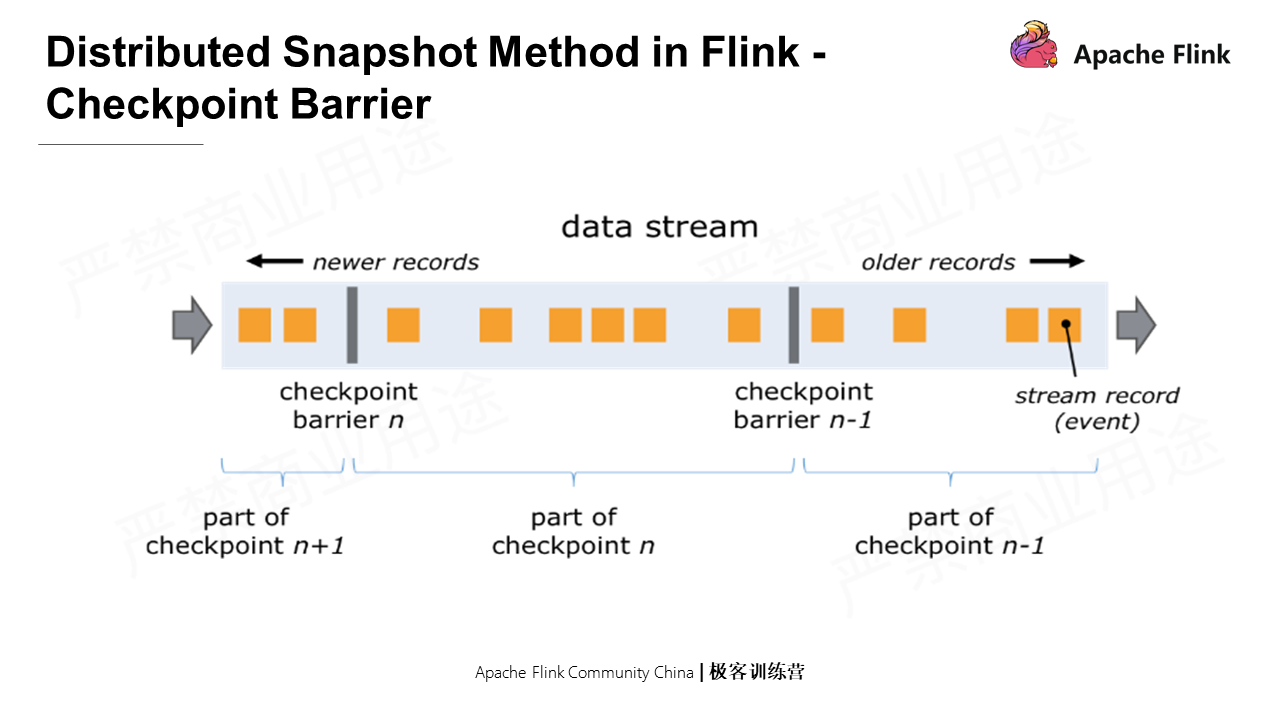

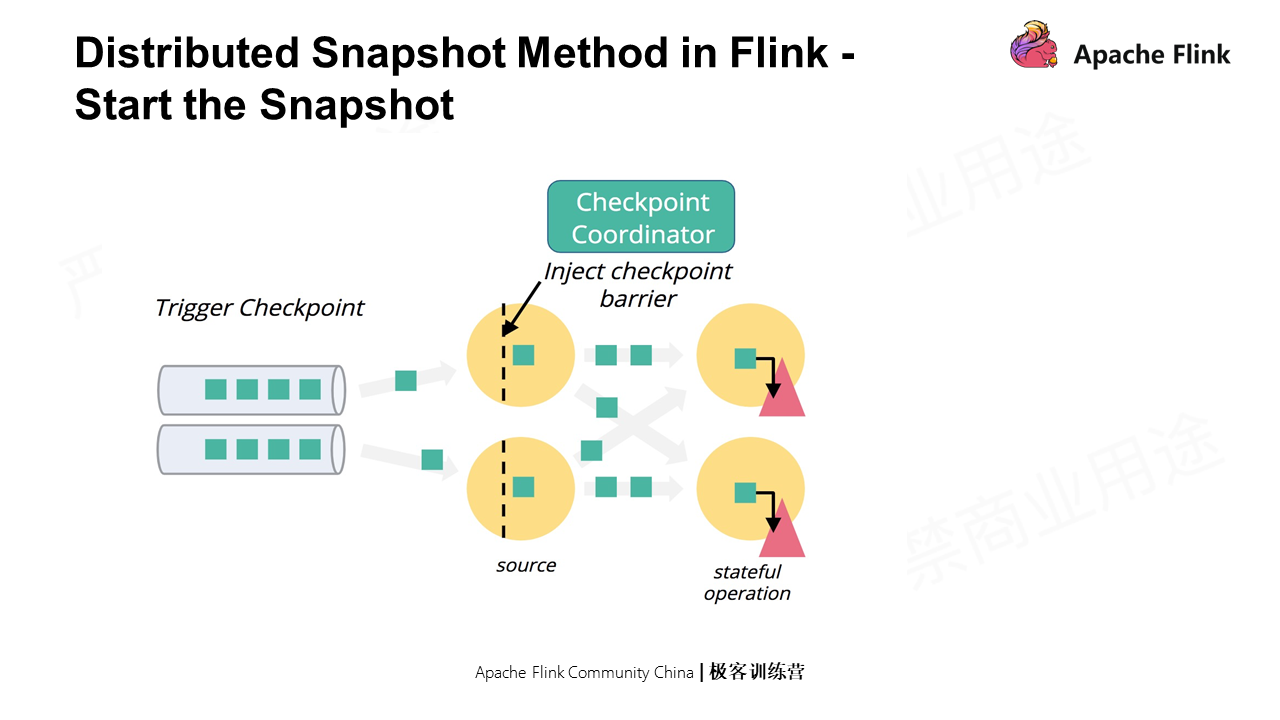

First, Checkpoint barriers, which play the role of the marker messages in the Chandy-Lamport algorithm, are inserted into the source data stream. The barriers naturally divide the stream into segments, and each segment contains the data that belongs to one Checkpoint.

Unlike Chandy-Lamport, where any process can initiate a snapshot, Flink has a global Coordinator that initiates all snapshots. This centralized Coordinator injects Checkpoint barriers into each source to start the snapshot. When a node receives the barrier, it stores only its local state, since Flink does not store channel state.

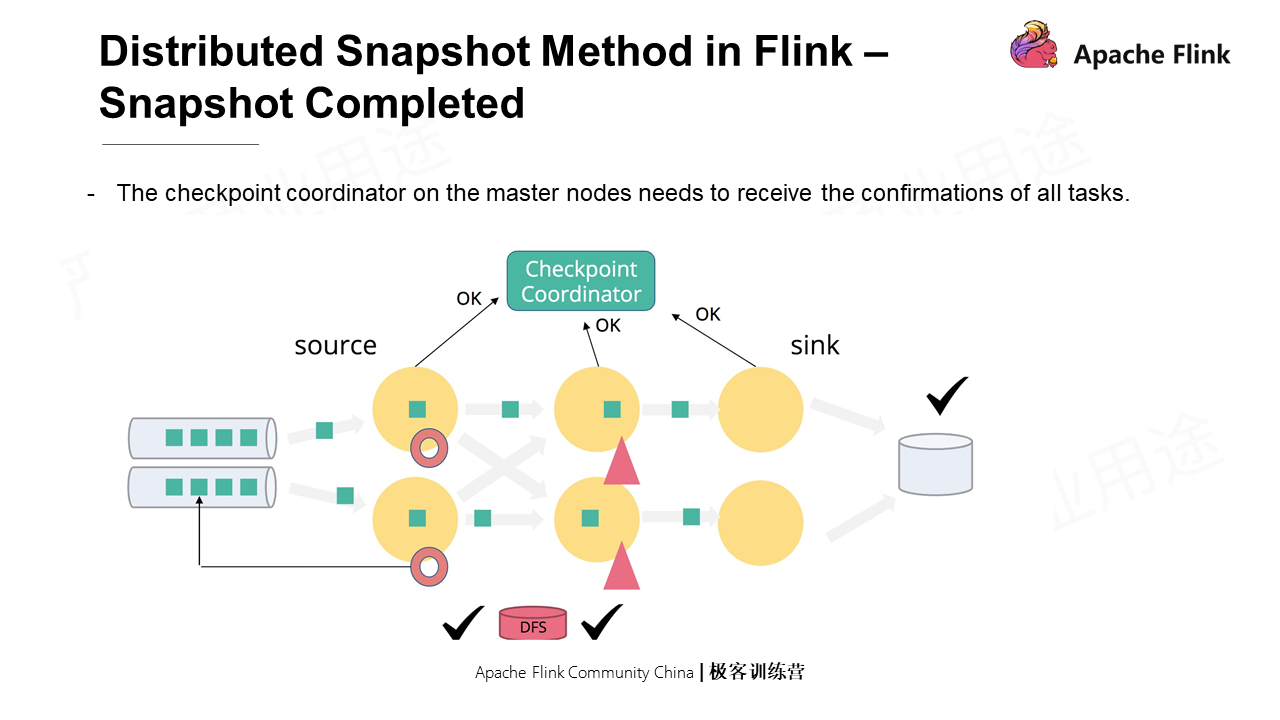

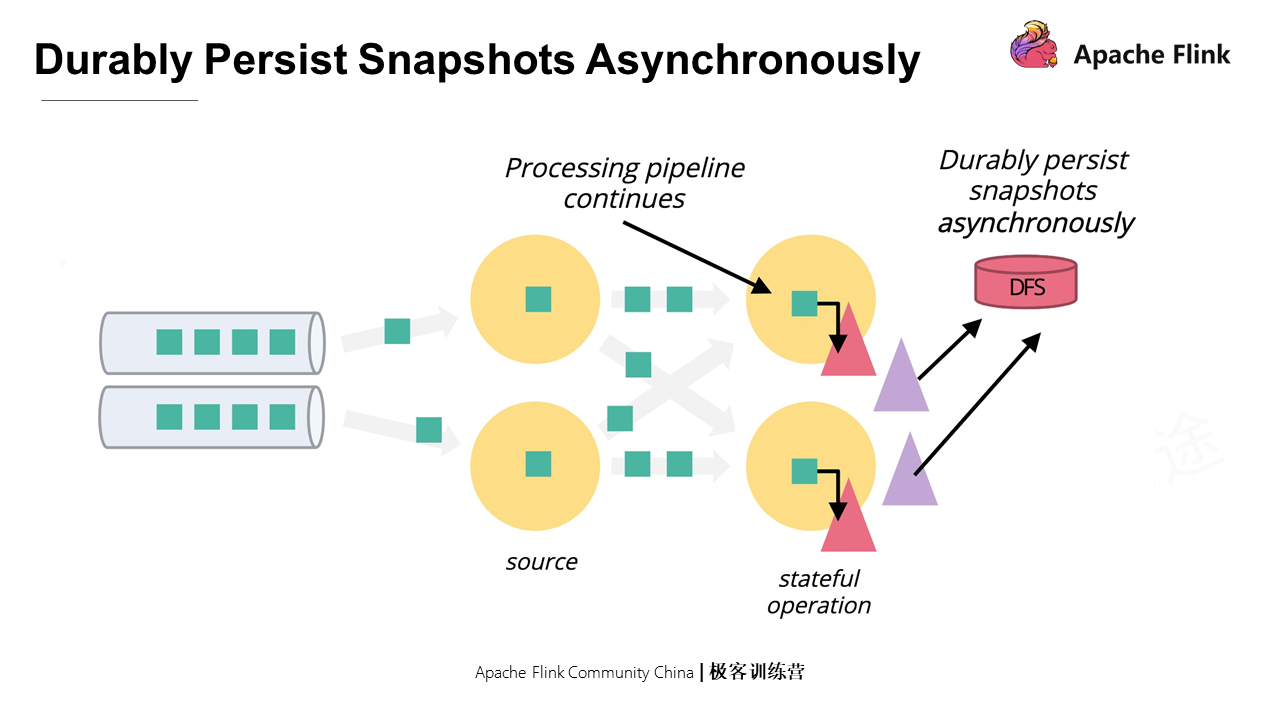

After a parallel subtask of an operator completes its part of the Checkpoint, it sends a confirmation message to the Coordinator. When the Checkpoint Coordinator has received the confirmation messages of all tasks, the snapshot is complete.
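The confirmation step amounts to waiting until every task has acknowledged a given checkpoint. The toy model below illustrates that bookkeeping only; `ToyCoordinator`, its method names, and the task IDs are hypothetical and are not Flink's actual classes or API.

```python
class ToyCoordinator:
    def __init__(self, task_ids):
        self.task_ids = set(task_ids)
        self.pending = {}    # checkpoint ID -> tasks that have not confirmed yet
        self.completed = []  # checkpoint IDs confirmed by every task

    def trigger(self, checkpoint_id):
        # In a real system this is where barriers would be injected into the sources.
        self.pending[checkpoint_id] = set(self.task_ids)

    def acknowledge(self, checkpoint_id, task_id):
        """Record one confirmation; return True once the checkpoint is complete."""
        waiting = self.pending[checkpoint_id]
        waiting.discard(task_id)
        if waiting:
            return False
        del self.pending[checkpoint_id]
        self.completed.append(checkpoint_id)
        return True


coordinator = ToyCoordinator(["source-0", "map-0", "map-1", "sink-0"])
coordinator.trigger(1)
acks = [coordinator.acknowledge(1, t) for t in ["source-0", "map-0", "map-1", "sink-0"]]
print(acks)                   # [False, False, False, True]
print(coordinator.completed)  # [1]
```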

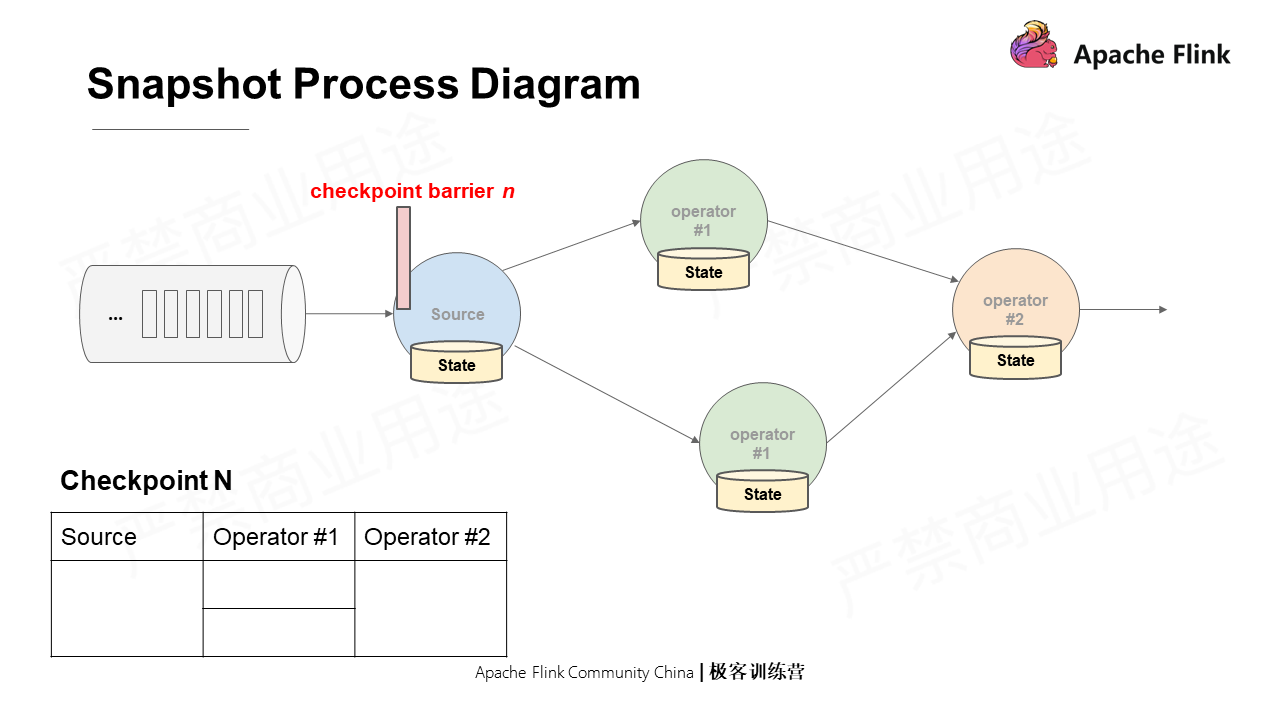

As shown in the following figure, assume that the barrier of Checkpoint N is injected into the source. The source first records the offsets of the partitions that it is processing.

As time goes by, the Checkpoint barrier is sent downstream to two parallel subtasks. When the barrier arrives, each of the two subtasks records its local state in the Checkpoint.

In the end, the barrier arrives at the final subtask, and the snapshot is complete.

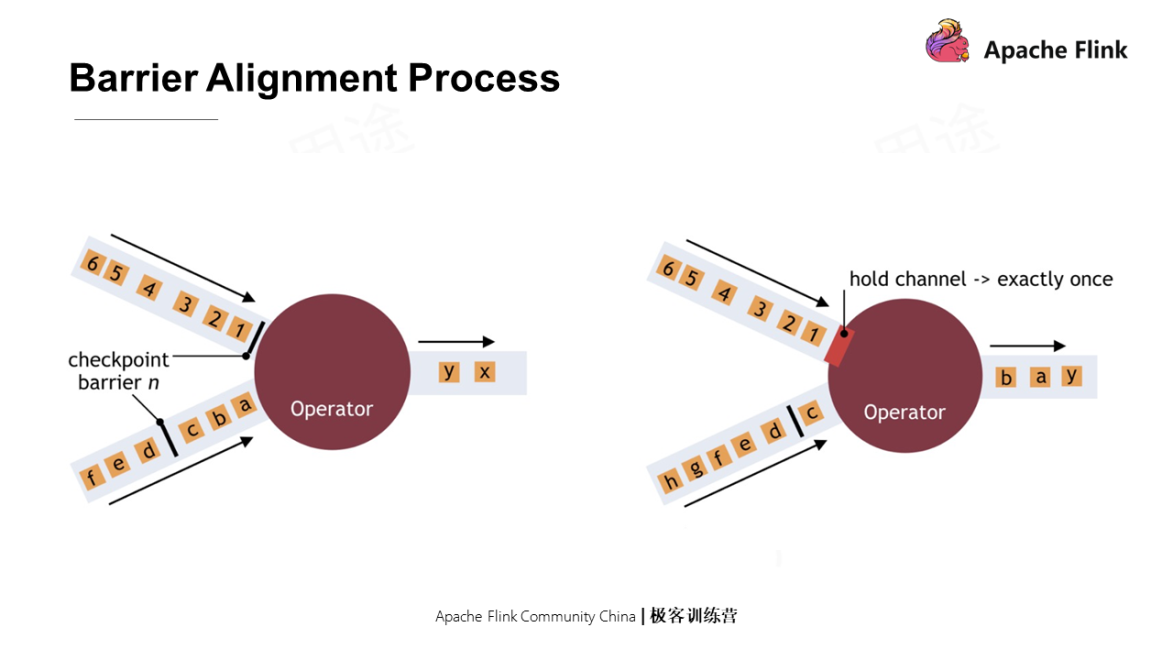

The above is a simple scenario in which each operator has a single input stream. The following figure shows a more complex scenario in which an operator has multiple input streams.

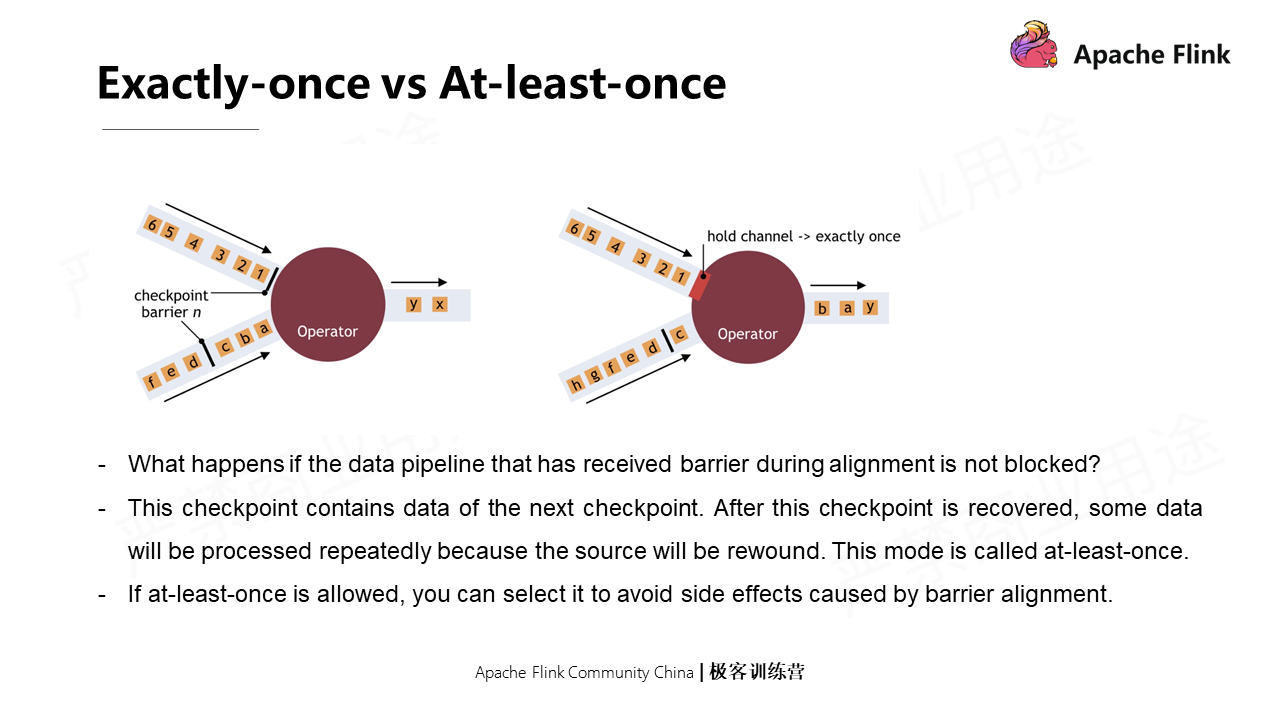

When an operator has multiple inputs, the barriers need to be aligned. As shown in the following figure, starting from the state on the left, when the barrier has arrived on one input but not on the others, the input on which it arrived is blocked while the operator keeps processing data from the other inputs. This is what guarantees Exactly once. When the barrier arrives on the remaining inputs, the first input is unblocked, and the operator sends the barrier downstream.

In this process, blocking one of the streams causes back pressure. Barrier alignment can therefore suspend the data processing of the operator.

If the inputs that have already received the barrier are not blocked during alignment, data keeps flowing in, and data that belongs to the next Checkpoint is included in the current Checkpoint. When a fault occurs and the job recovers, the source is rewound, so some data is processed again. This is At least once. If At least once is acceptable, other approaches can be chosen that avoid the side effects of barrier alignment. Asynchronous snapshots can also be used to minimize pauses in tasks and to allow multiple Checkpoints to run at the same time.
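The difference between aligned and unaligned handling can be seen on a two-input operator that keeps a running sum. This is a simplified sketch: the `AligningSum` class is hypothetical, only one Checkpoint is assumed to be in flight at a time, and "blocking" an input is modeled by buffering its elements until alignment finishes.

```python
BARRIER = "BARRIER"


class AligningSum:
    def __init__(self, num_inputs, align=True):
        self.num_inputs = num_inputs
        self.align = align
        self.total = 0       # operator state
        self.blocked = set() # inputs whose barrier has already arrived
        self.buffered = []   # data held back from blocked inputs
        self.snapshots = []  # state recorded at each Checkpoint

    def on_element(self, channel, element):
        if element == BARRIER:
            self.blocked.add(channel)
            if len(self.blocked) == self.num_inputs:
                self.snapshots.append(self.total)  # all barriers in: snapshot state
                self.blocked.clear()
                pending, self.buffered = self.buffered, []
                for value in pending:              # unblock: process held-back data
                    self.total += value
        elif self.align and channel in self.blocked:
            self.buffered.append(element)          # belongs to the next Checkpoint
        else:
            self.total += element


# Input 0 delivers 1, BARRIER, 10; input 1 delivers 2, BARRIER.
arrivals = [(0, 1), (0, BARRIER), (0, 10), (1, 2), (1, BARRIER)]

aligned = AligningSum(num_inputs=2, align=True)
unaligned = AligningSum(num_inputs=2, align=False)
for channel, element in arrivals:
    aligned.on_element(channel, element)
    unaligned.on_element(channel, element)

print(aligned.snapshots)    # [3]
print(unaligned.snapshots)  # [13]
```

With alignment, the snapshot holds 1 + 2, exactly the data before the barriers. Without it, the snapshot already contains the 10 that followed the barrier on input 0, so replaying that input from the barrier after a failure would count the 10 twice.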

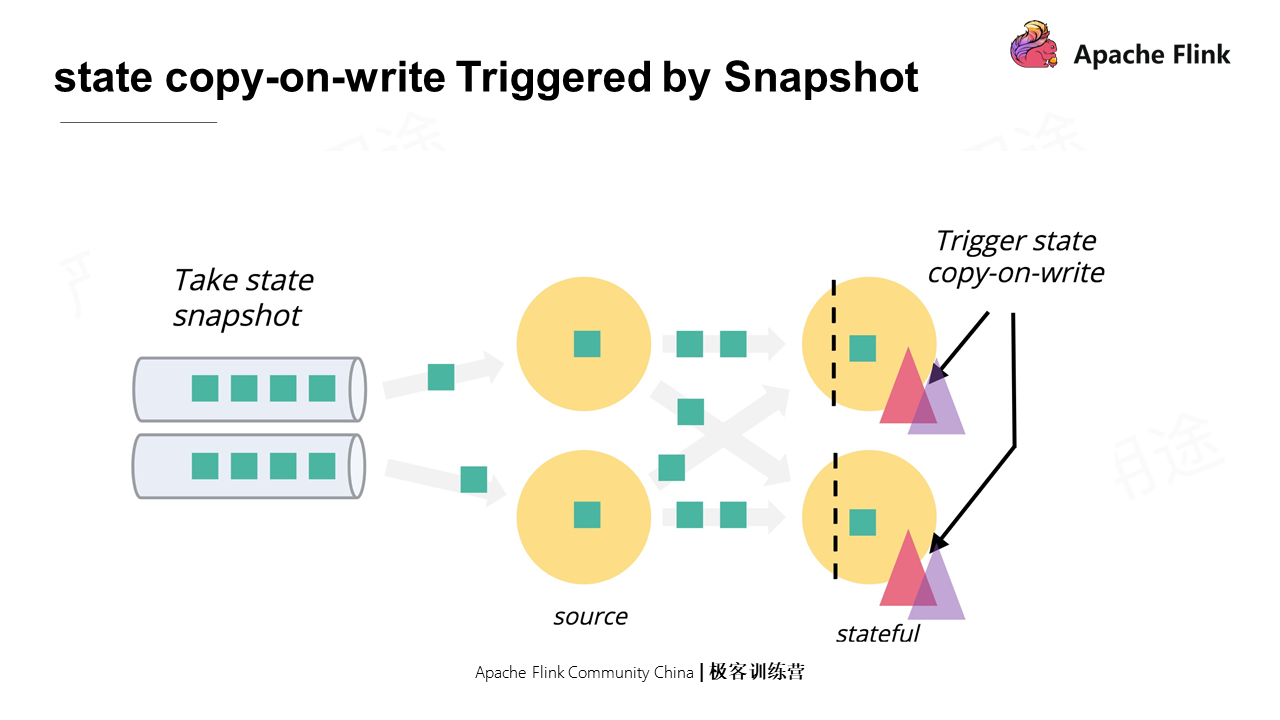

State copy-on-write is needed to synchronize local snapshots to the system.

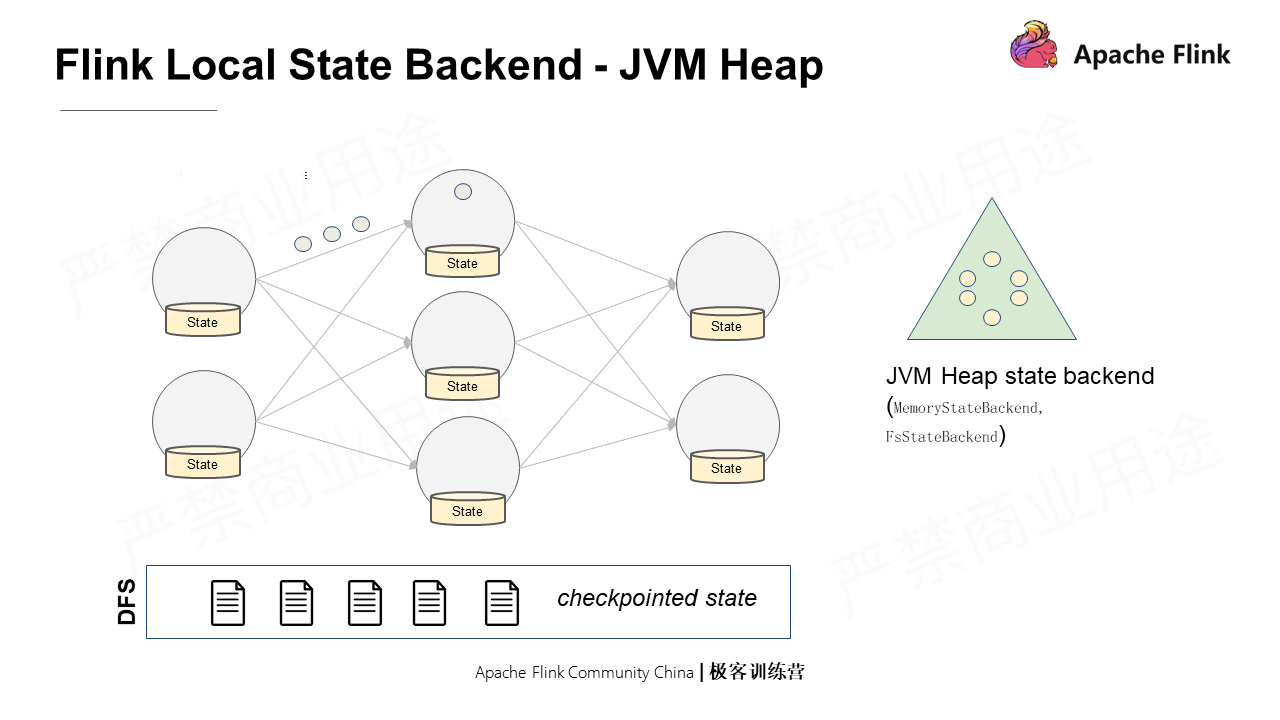

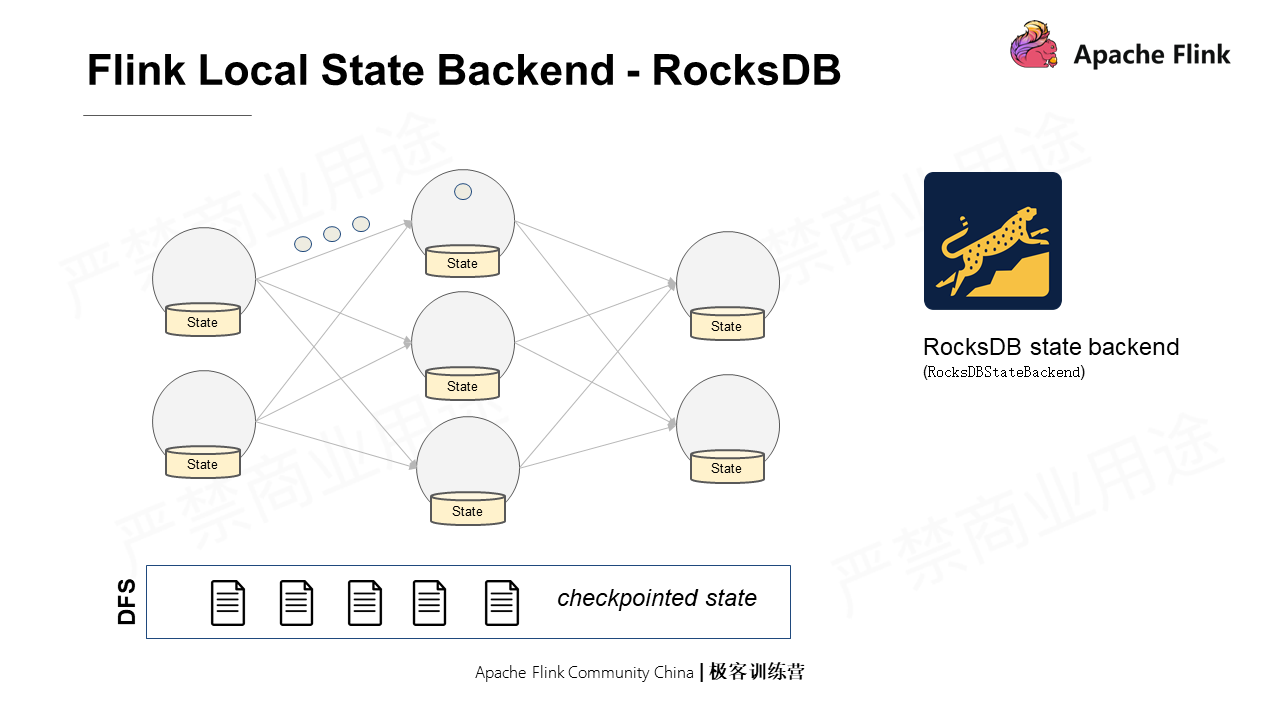

If data processing resumes once the metadata has been snapshotted, how can we ensure that the resumed application logic does not modify data that is still being uploaded? Different state backends handle this differently. The heap backend triggers copy-on-write for the data, while for the RocksDB backend, the nature of the LSM tree ensures that data already included in a snapshot is not modified.
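The copy-on-write idea can be illustrated with a small in-memory table. This is a deliberately coarse sketch, not how any Flink backend is implemented: the hypothetical `CopyOnWriteState` class copies the whole table on the first write after a snapshot, whereas a real backend can copy at a much finer granularity.

```python
class CopyOnWriteState:
    def __init__(self):
        self._table = {}
        self._shared = False  # True while a snapshot still references _table

    def snapshot(self):
        # Cheap and synchronous: hand out a reference, copy nothing yet.
        self._shared = True
        return self._table

    def put(self, key, value):
        if self._shared:
            # First write after a snapshot: copy, so the snapshot stays untouched.
            self._table = dict(self._table)
            self._shared = False
        self._table[key] = value

    def get(self, key):
        return self._table.get(key)


state = CopyOnWriteState()
state.put("a", 1)
snap = state.snapshot()  # could now be uploaded asynchronously
state.put("a", 2)        # processing resumes and modifies the live state

print(snap)              # {'a': 1}
print(state.get("a"))    # 2
```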

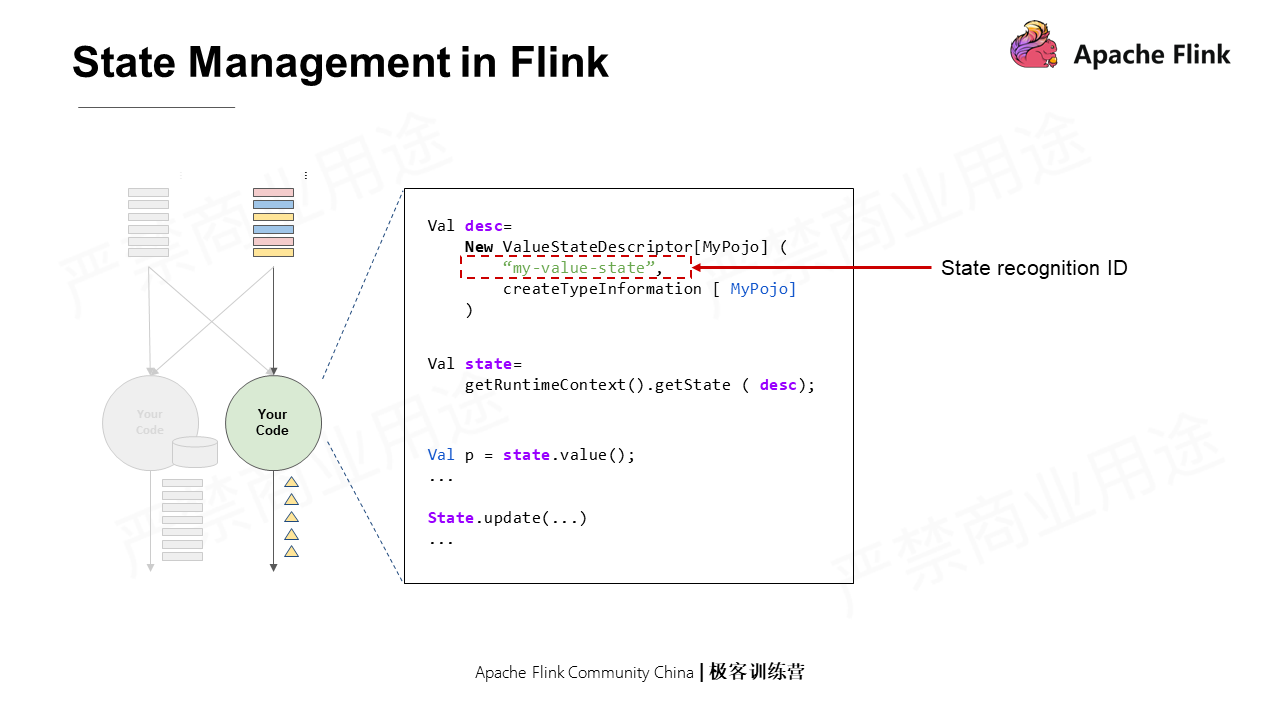

A state must be defined before it is used. In the example above, a Value state is defined first. Defining a state requires the following information:

There are two types of Flink state backends:
