This article is compiled from a speech by Mei Yuan (an Engine Architect of Apache Flink and Head of the Alibaba Storage Engine Team) at the Flink Forward Asia 2021 Core Technology Session. The speech focuses on the high availability of Flink and discusses the core issues and technical choices for the new generation of Flink stream computing.

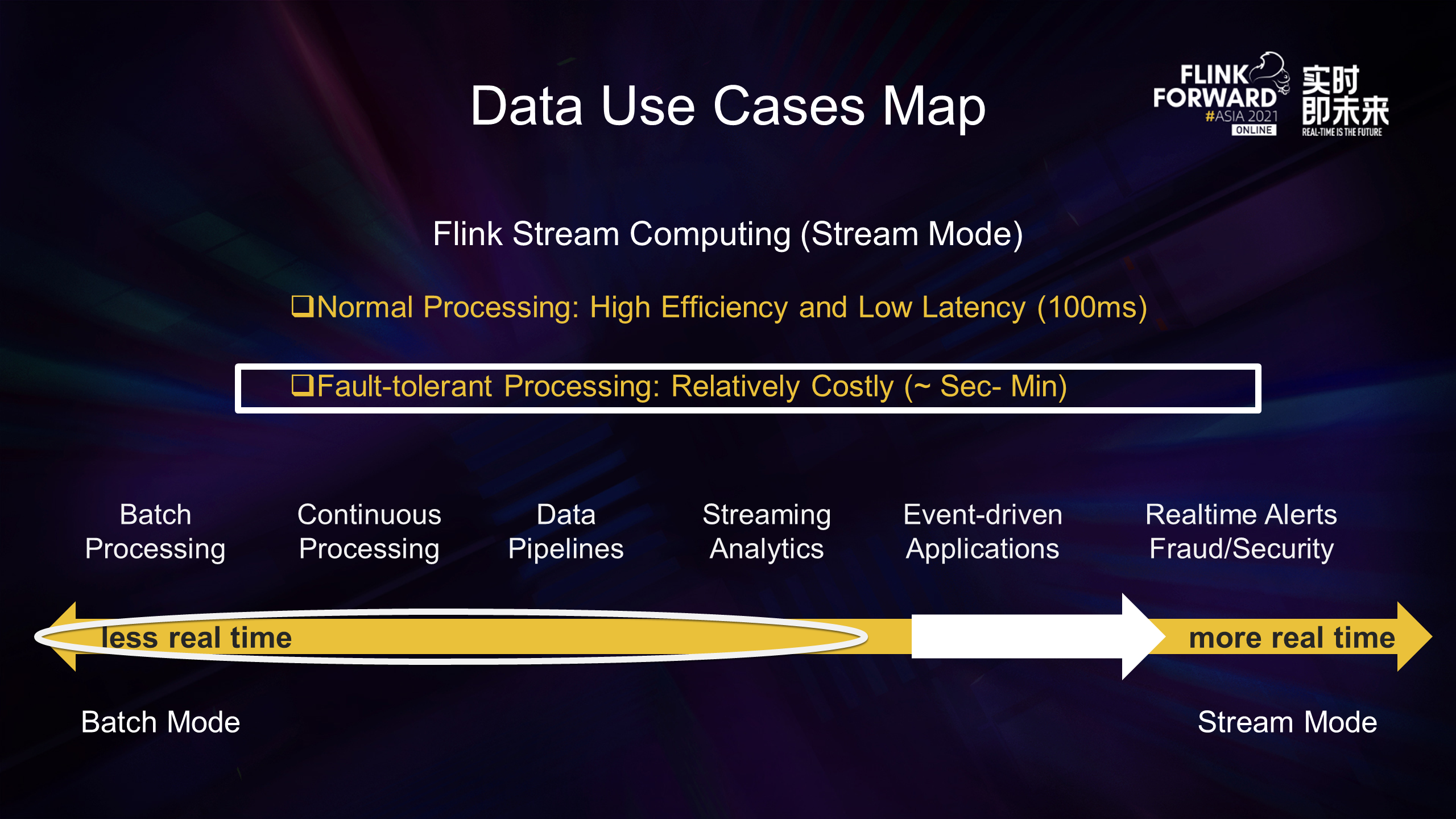

The double-ended arrow in the figure above plots big data applications by latency. The further to the right, the shorter the required latency. When Flink was released, it sat in the middle of this axis, with stream mode to the right and batch mode to the left. Over the past few years, Flink's application map has expanded significantly through streaming-batch unification. At the same time, we keep pushing the map toward more real-time processing.

Flink started with stream computing, so what does it mean to become more real-time? What is more real-time, extreme stream computing?

In normal processing, the Flink framework adds almost no overhead apart from periodic checkpoint snapshots. Moreover, a large part of each snapshot is taken asynchronously, so Flink is very efficient in normal processing, with an end-to-end latency of about 100 milliseconds. The price of this efficiency is that fault-tolerant recovery and rescaling are relatively expensive: the entire job must be stopped and restored from a previous checkpoint. This takes a few seconds, or a few minutes when the job state is relatively large. If the job needs to warm up or start other service processes, it takes even longer.

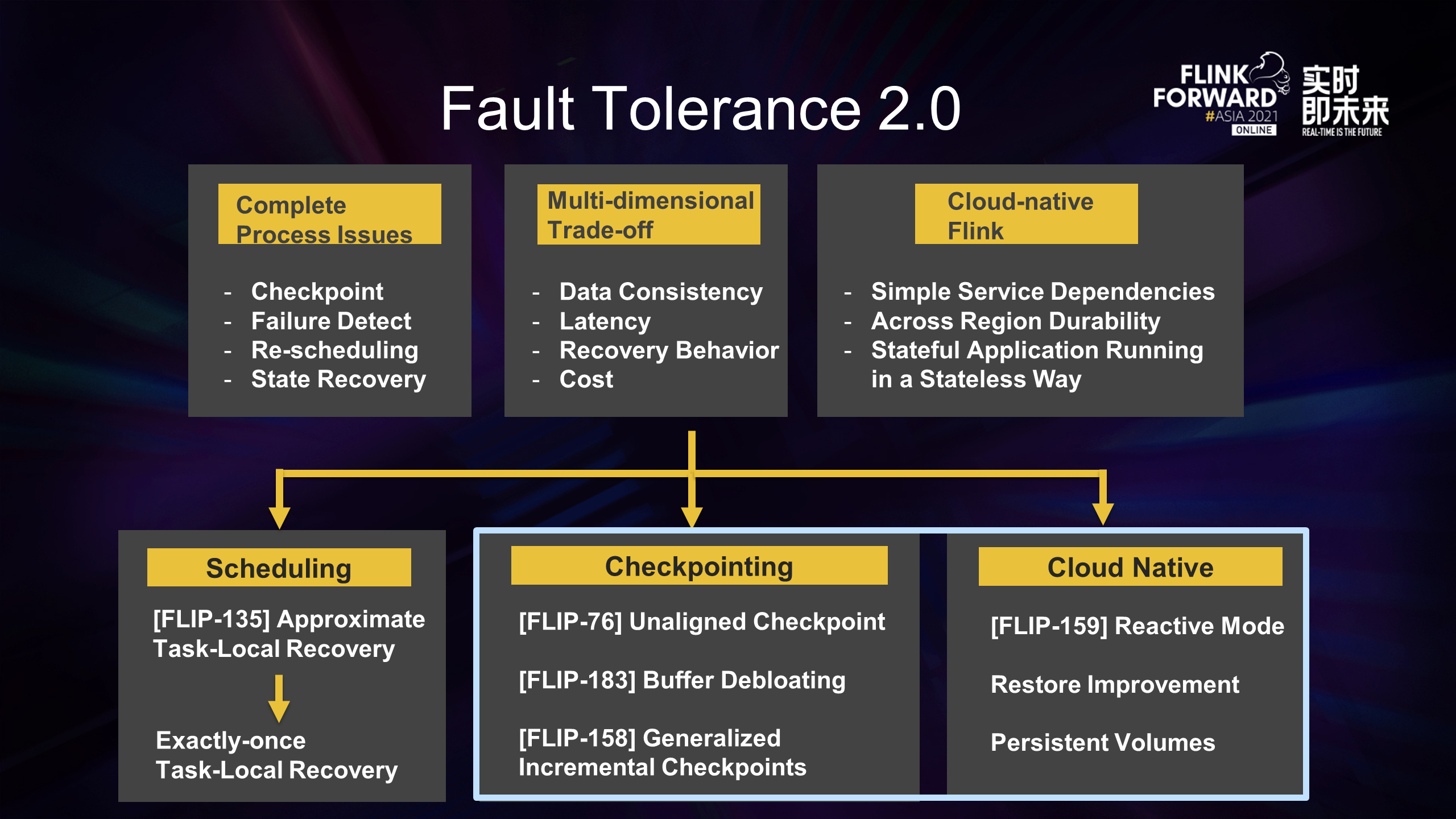

Therefore, the key to extreme stream computing in Flink is fault tolerance and recovery. Extreme stream computing here refers to scenarios with strict requirements on latency, stability, and consistency, such as risk control and security. This is the problem that Fault Tolerance 2.0 needs to solve.

Fault tolerance and recovery is an end-to-end problem that spans failure detection, job cancellation, requesting and scheduling new resources, and state recovery and reconstruction. At the same time, checkpoints must be taken during normal processing so that there is an existing state to recover from, and they must be lightweight enough not to affect normal processing.

Fault tolerance is also a multi-dimensional problem. Different users and different scenarios have different requirements for fault tolerance, mainly including the following aspects.

In addition, fault tolerance is not just a problem on the Flink engine. The combination of Flink and cloud-native is an important direction for Flink in the future. The way we rely on cloud-native also determines the design and trend of fault tolerance. We expect to take advantage of the convenience brought by cloud-native through simple weak dependencies, such as cross-region durability. Finally, we hope to be able to deploy stateful Flink applications as elastically as native stateless applications.

Based on the considerations above, we also have different emphases and directions in Flink fault tolerance 2.0.

First, from the perspective of scheduling, recovering from an error does not have to roll back all task nodes covered by the global snapshot. Only the single failed node, or a subset of nodes, is recovered. This is necessary for scenarios in which a single node needs warm-up or a long initialization time, such as online machine learning. Some related work (such as Approximate Task-local Recovery) has been launched in VVP. We have also made some progress on Exactly-once Task-local Recovery.

The next sections will cover Checkpoint and the parts related to cloud-native.

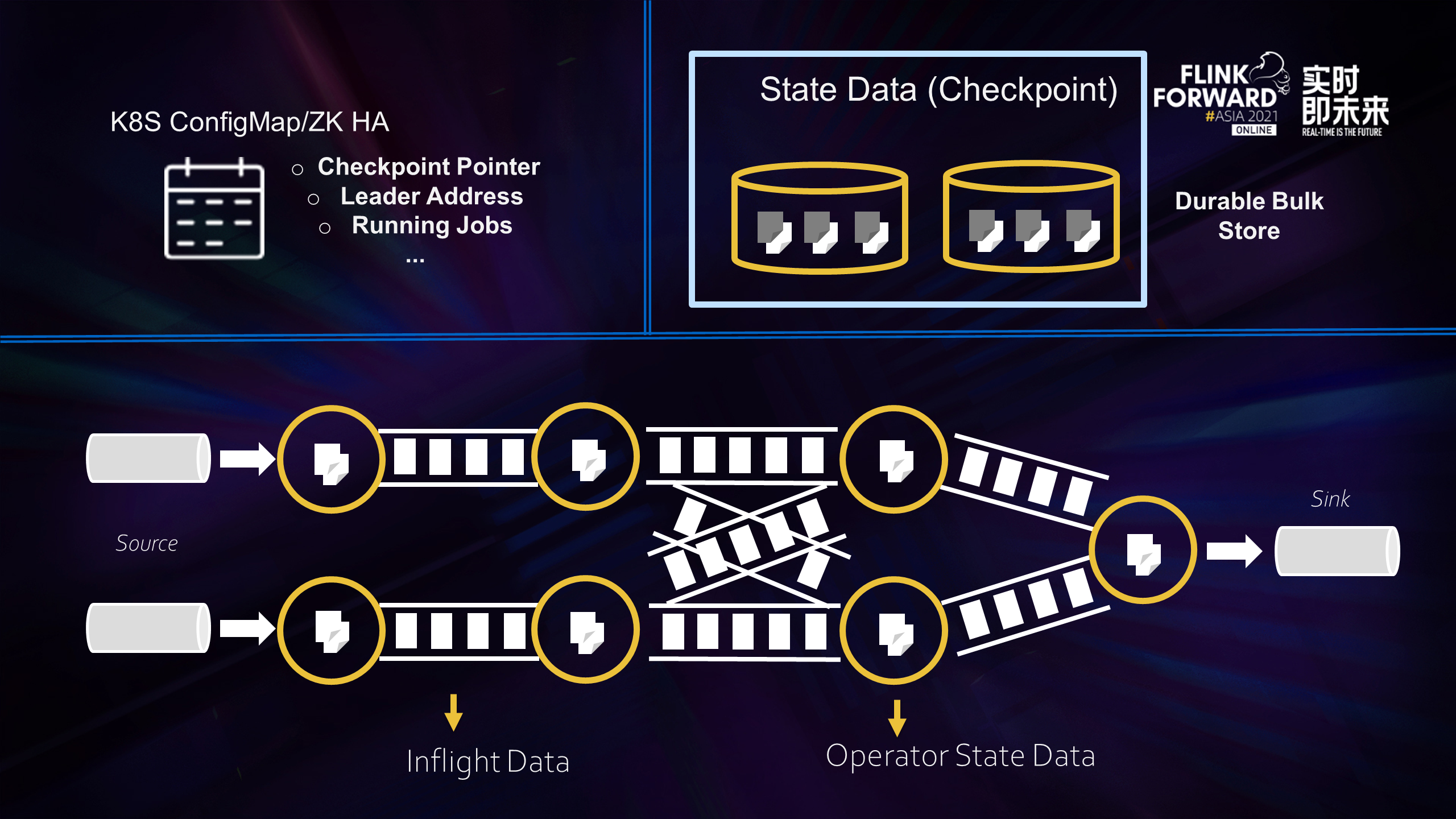

What does fault tolerance solve? In my opinion, its essence is to solve the problem of data recovery.

Flink data can be roughly divided into the following three categories. The first type is meta information, which is equivalent to the minimum information set required for Flink, including Checkpoint address, Job Manager, Dispatcher, and Resource Manager. The fault tolerance of this information is guaranteed by the high availability of Kubernetes/Zookeeper and other systems, which is beyond the scope of fault tolerance in our discussion. After the Flink job runs, it reads data from the data source and writes it to the sink. The data flowing in the middle is called Inflight Data (the second type). For stateful operators (such as aggregation operators), operator state data (the third type) is generated after processing the input data.

Flink periodically takes snapshots of the state data of all operators and uploads them to persistent, stable mass storage (Durable Bulk Store). This process is called Checkpointing. When an error occurs in the Flink job, the job is rolled back to a previous Checkpoint and restored from it.

Currently, much of the work goes into improving the efficiency of Checkpointing, because in practice most on-call or ticket problems at the engine layer are related to Checkpoints. Checkpointing can time out for many different reasons.

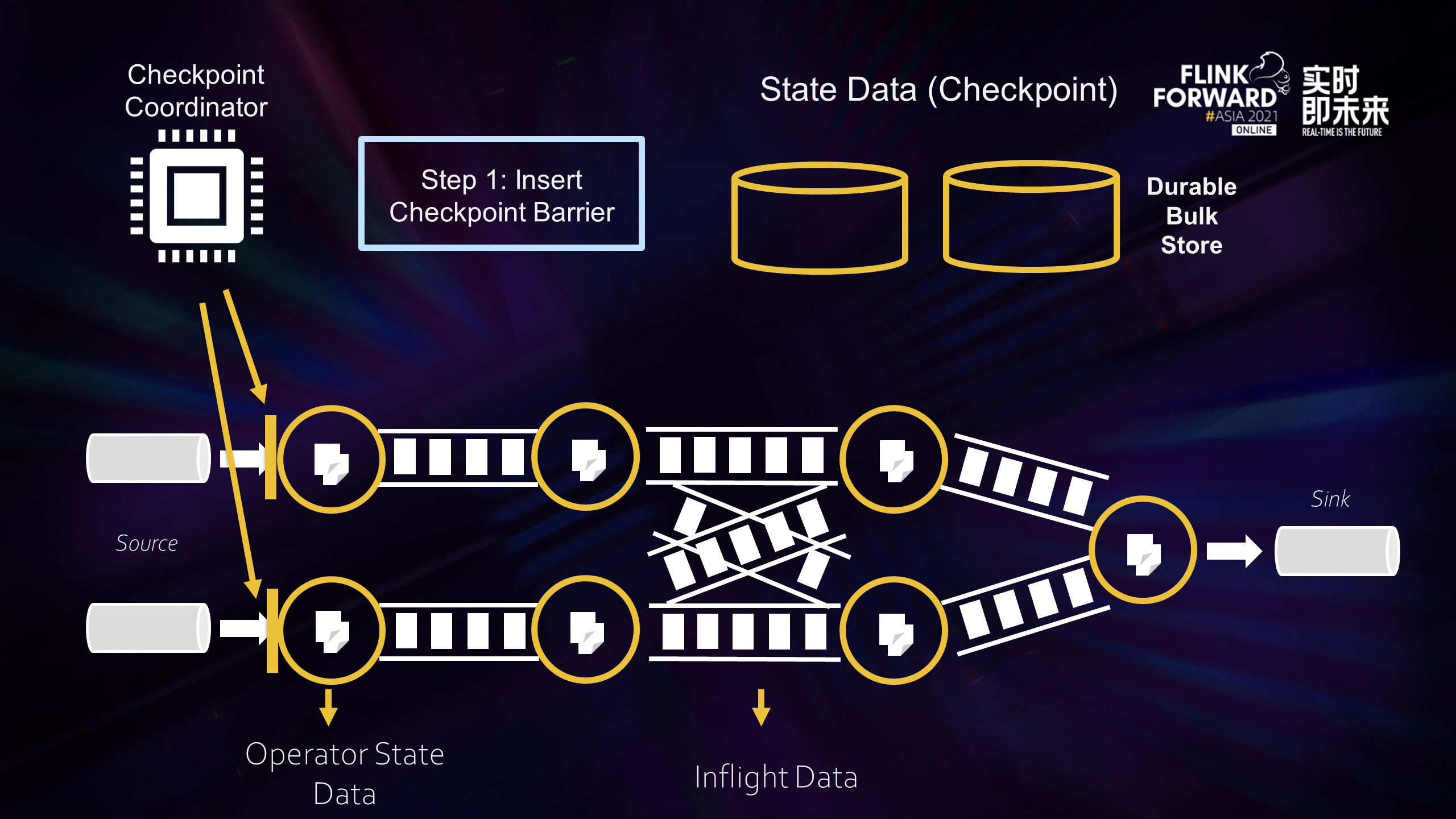

Let's briefly review the process of Checkpointing. If you are familiar with this part, you can skip it. The process of Checkpointing is divided into the following steps:

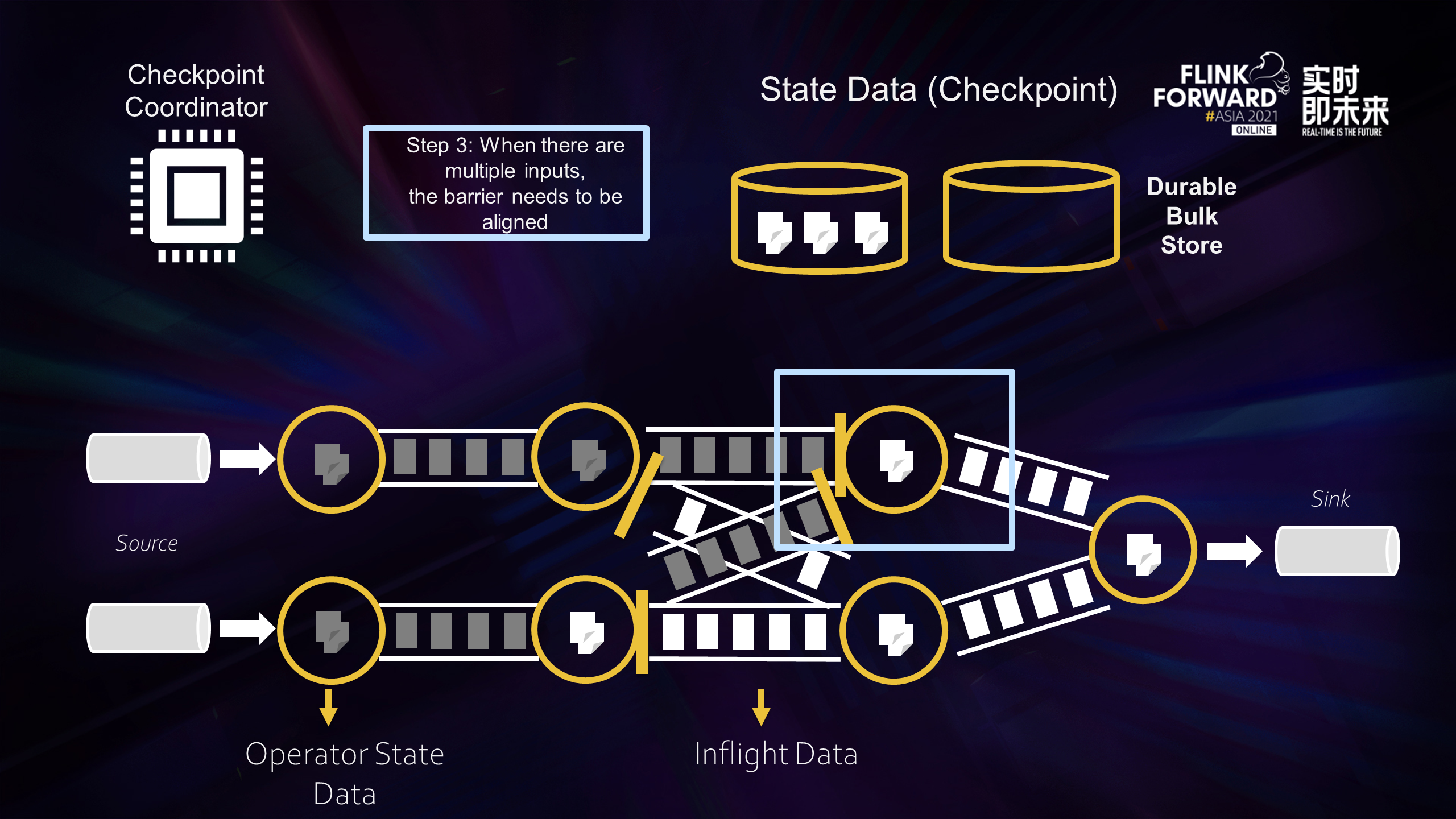

Step 1: The Checkpoint Coordinator inserts a Checkpoint Barrier at the Source (the yellow vertical bar in the preceding figure).

Step 2: Barriers flow downstream with inflight data. When they flow through operators, the system takes a synchronous snapshot of the current state and asynchronously uploads the snapshot data to the remote storage. As such, the influence of all input data on the operator before the barrier has been reflected in the state of the operator. If the operator state is large, it will affect the time to complete the Checkpointing.

Step 3: When an operator has multiple inputs, it must receive the barriers from all inputs before it starts the snapshot, which is the blue box in the figure above. Under back pressure during alignment, intermediate data flows slowly, and the channels without back pressure are blocked as well. The Checkpoint then completes very slowly or may not complete at all.

Step 4: After the state snapshots of all operators are successfully uploaded to the remote stable storage, a complete Checkpoint is truly completed.
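The alignment in Step 3 can be illustrated with a toy model. This is only a sketch under simplifying assumptions: `AlignedOperator`, its methods, and the summing state are hypothetical names made up for illustration, not Flink classes, and only one checkpoint is in flight at a time.

```python
from collections import deque


class AlignedOperator:
    """Toy operator (hypothetical, not a Flink class) that sums its inputs."""

    def __init__(self, num_channels):
        self.num_channels = num_channels
        self.state = 0            # operator state: a running sum
        self.blocked = set()      # channels whose barrier has already arrived
        self.buffered = deque()   # records held back while aligning
        self.snapshots = {}       # checkpoint id -> copy of the state

    def on_record(self, channel, value):
        if channel in self.blocked:
            # Post-barrier data must not leak into the snapshot, so hold it.
            self.buffered.append((channel, value))
        else:
            self.state += value

    def on_barrier(self, channel, checkpoint_id):
        self.blocked.add(channel)
        if len(self.blocked) == self.num_channels:
            # All barriers arrived: snapshot, then release the held records.
            self.snapshots[checkpoint_id] = self.state
            self.blocked.clear()
            while self.buffered:
                _, value = self.buffered.popleft()
                self.state += value


op = AlignedOperator(num_channels=2)
op.on_record(0, 1)
op.on_barrier(0, checkpoint_id=1)  # channel 0 is now blocked
op.on_record(0, 10)                # belongs to the next checkpoint, held back
op.on_record(1, 2)                 # channel 1 is slower, still pre-barrier
op.on_barrier(1, checkpoint_id=1)  # alignment completes here
```

The record held back on channel 0 shows why a slow channel delays the fast ones: nothing from channel 0 is processed until the barrier on channel 1 arrives.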

From these four steps, it can be seen that two main factors affect the fast and stable Checkpoint. One is the slow flow of inflight data, and the other is the slow upload of operator state data due to the large amount. Let's talk about how to solve these two factors.

For the slow flow of inflight data, you can:

Each of the solutions is described in detail below.

The principle of Unaligned Checkpoint is to push the barrier inserted at the source straight through to the sink, skipping over the intermediate data. The skipped data are stored in the snapshot as well. Therefore, the state data of an Unaligned Checkpoint includes both the operator state and the in-flight intermediate data. It can be understood as a complete instantaneous snapshot of the entire Flink pipeline, as shown in the yellow rectangle in the preceding figure. Although an Unaligned Checkpoint can complete very quickly, it needs to store the additional inflight data in the pipeline channels, so the state that needs to be stored is larger. Unaligned Checkpoint was released in Flink 1.11. Rescaling and dynamic switching from Aligned Checkpoint to Unaligned Checkpoint are supported in Flink 1.12 and 1.13.
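To make the idea concrete, here is a heavily simplified sketch of the unaligned case. `UnalignedOperator` and its fields are hypothetical illustration names, not Flink APIs. The model also ignores data already queued in buffers and only captures records that arrive on channels whose barrier is still pending.

```python
class UnalignedOperator:
    """Toy operator (hypothetical) that snapshots on the first barrier."""

    def __init__(self, num_channels):
        self.num_channels = num_channels
        self.state = 0        # operator state: a running sum
        self.pending = None   # channels whose barrier has not arrived yet
        self.snapshot = None  # snapshot being assembled

    def on_record(self, channel, value):
        if self.pending is not None and channel in self.pending:
            # Pre-barrier record that the barrier overtook: it is stored
            # in the snapshot as inflight data instead of blocking anyone.
            self.snapshot["inflight"].append((channel, value))
        self.state += value   # processing never stops

    def on_barrier(self, channel, checkpoint_id):
        if self.pending is None:
            # First barrier: snapshot the operator state immediately.
            self.snapshot = {"id": checkpoint_id,
                             "state": self.state,
                             "inflight": []}
            self.pending = set(range(self.num_channels))
        self.pending.discard(channel)
        if not self.pending:
            self.pending = None
            return self.snapshot  # checkpoint complete for this operator
        return None


op = UnalignedOperator(num_channels=2)
op.on_record(0, 1)
op.on_barrier(0, checkpoint_id=1)   # state is captured right away
op.on_record(0, 10)                 # post-barrier, processed without waiting
op.on_record(1, 2)                  # overtaken record, stored as inflight
done = op.on_barrier(1, checkpoint_id=1)
restored = done["state"] + sum(v for _, v in done["inflight"])
```

Replaying the stored inflight records on top of the captured state yields the same logical state an aligned snapshot would have produced, at the cost of a larger snapshot.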

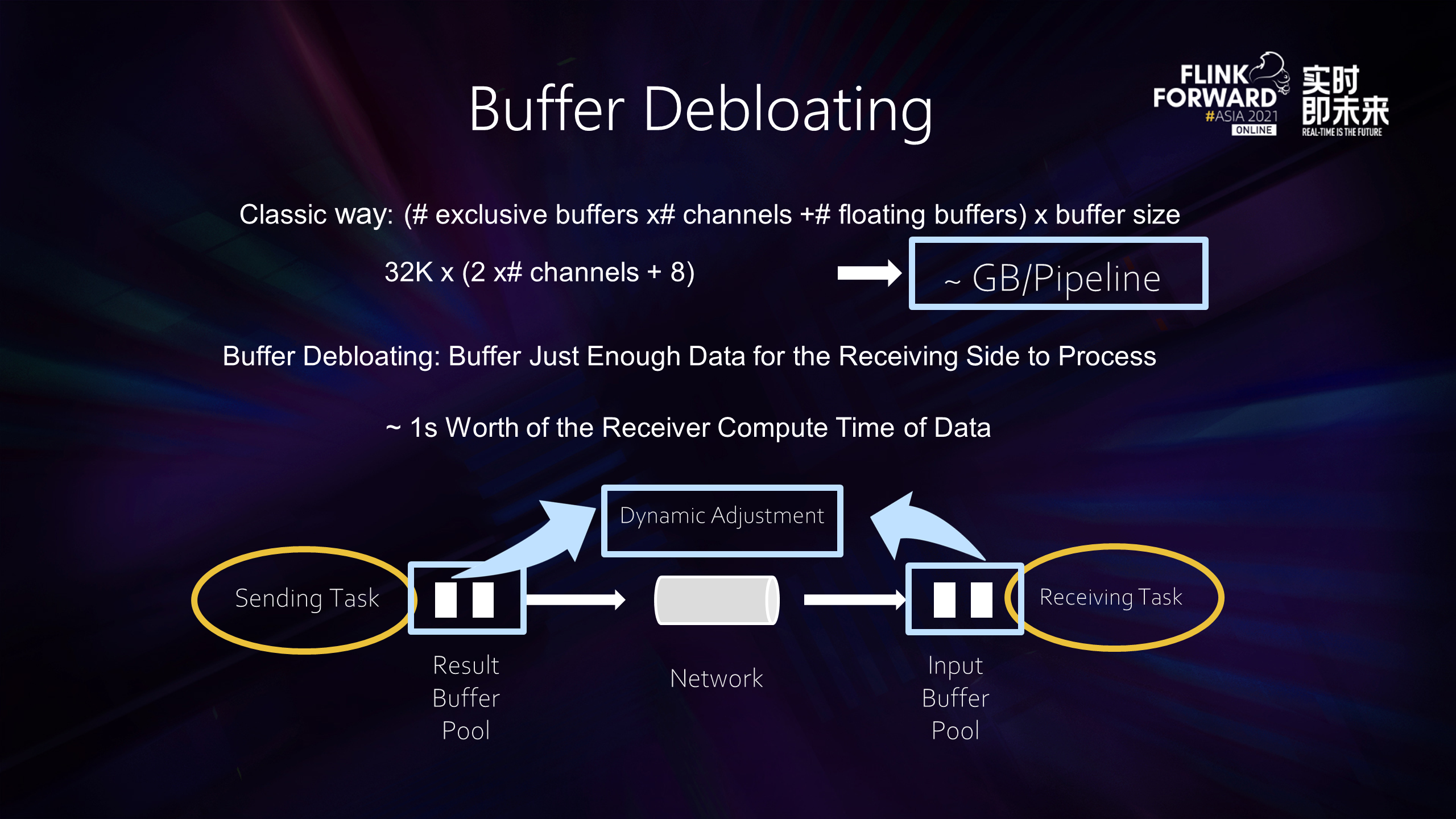

The principle of Buffer Debloating is to reduce the data cached between upstream and downstream tasks without affecting throughput and latency. Observation shows that operators do not need large input/output buffers. Caching too much data does little except let the job fill up the entire pipeline when data flows slowly and push the job's memory usage toward an OOM.

A simple estimation can be made here. For each task, whether on the output or the input side, the total number of buffers is roughly the number of exclusive buffers per channel multiplied by the number of channels, plus the number of shared floating buffers. Multiplying the total number of buffers by the size of each buffer gives the size of the total local buffer pool. We can then plug in the system default values. If the parallelism is somewhat higher and the job contains several data shuffles, the inflight data size easily reaches several gigabytes.
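The estimate can be worked through in a few lines. The defaults used below (2 exclusive buffers per channel, 8 floating buffers per gate, 32 KiB per buffer) are my understanding of Flink's default network settings and should be treated as assumptions. The job shape (parallelism 100, two all-to-all shuffles) is made up for illustration.

```python
def local_buffer_pool_bytes(num_channels,
                            exclusive_per_channel=2,
                            floating_per_gate=8,
                            buffer_size_bytes=32 * 1024):
    """Rough size of one local buffer pool, following the estimate above."""
    total_buffers = exclusive_per_channel * num_channels + floating_per_gate
    return total_buffers * buffer_size_bytes


# Hypothetical job: parallelism 100 with two all-to-all shuffles.
parallelism = 100
shuffles = 2

per_pool = local_buffer_pool_bytes(num_channels=parallelism)

# Each shuffle has 100 output sides and 100 input sides, each with a pool.
pools = 2 * parallelism * shuffles
job_total_bytes = per_pool * pools
job_total_gib = job_total_bytes / 2**30

print(f"per pool: {per_pool / 2**20:.1f} MiB, job: {job_total_gib:.2f} GiB")
```

Even this modest hypothetical job ends up with a few gigabytes of potential buffered data, which is the order of magnitude the text refers to.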

In practice, we do not need to cache that much data. It is enough to cache just the amount that keeps the operator from idling, and this is what Buffer Debloating does. Buffer Debloating dynamically resizes the total upstream and downstream buffers to minimize the buffer size the job requires without compromising performance. The current strategy is that the upstream dynamically caches about as much data as the downstream can process in one second. In addition, Buffer Debloating benefits Unaligned Checkpoint. Since Buffer Debloating reduces the amount of data in flight, it also reduces the additional inflight data that must be stored when an Unaligned Checkpoint takes a snapshot.
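The "about one second of data" strategy can be sketched as a small sizing rule. This is an illustrative approximation, not Flink's actual implementation: the function name, the clamping bounds, and the sample throughputs are all assumptions.

```python
def debloated_buffer_size(throughput_bytes_per_s,
                          buffers_in_use,
                          target_seconds=1.0,
                          min_size=256,
                          max_size=32 * 1024):
    """Pick a per-buffer size so the buffers together hold roughly
    `target_seconds` worth of data at the observed throughput."""
    desired_total = throughput_bytes_per_s * target_seconds
    per_buffer = int(desired_total / max(buffers_in_use, 1))
    # Clamp to a hypothetical allowed range for a single buffer.
    return max(min_size, min(max_size, per_buffer))


buffers = 208  # e.g. 2 exclusive buffers * 100 channels + 8 floating

slow = debloated_buffer_size(1 * 2**20, buffers)     # backpressured, 1 MiB/s
fast = debloated_buffer_size(100 * 2**20, buffers)   # healthy, 100 MiB/s
stalled = debloated_buffer_size(10 * 1024, buffers)  # nearly stalled
```

When the downstream is slow, the buffers shrink, so less data sits in front of a barrier. When the downstream keeps up, the buffers stay at full size and throughput is unaffected.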

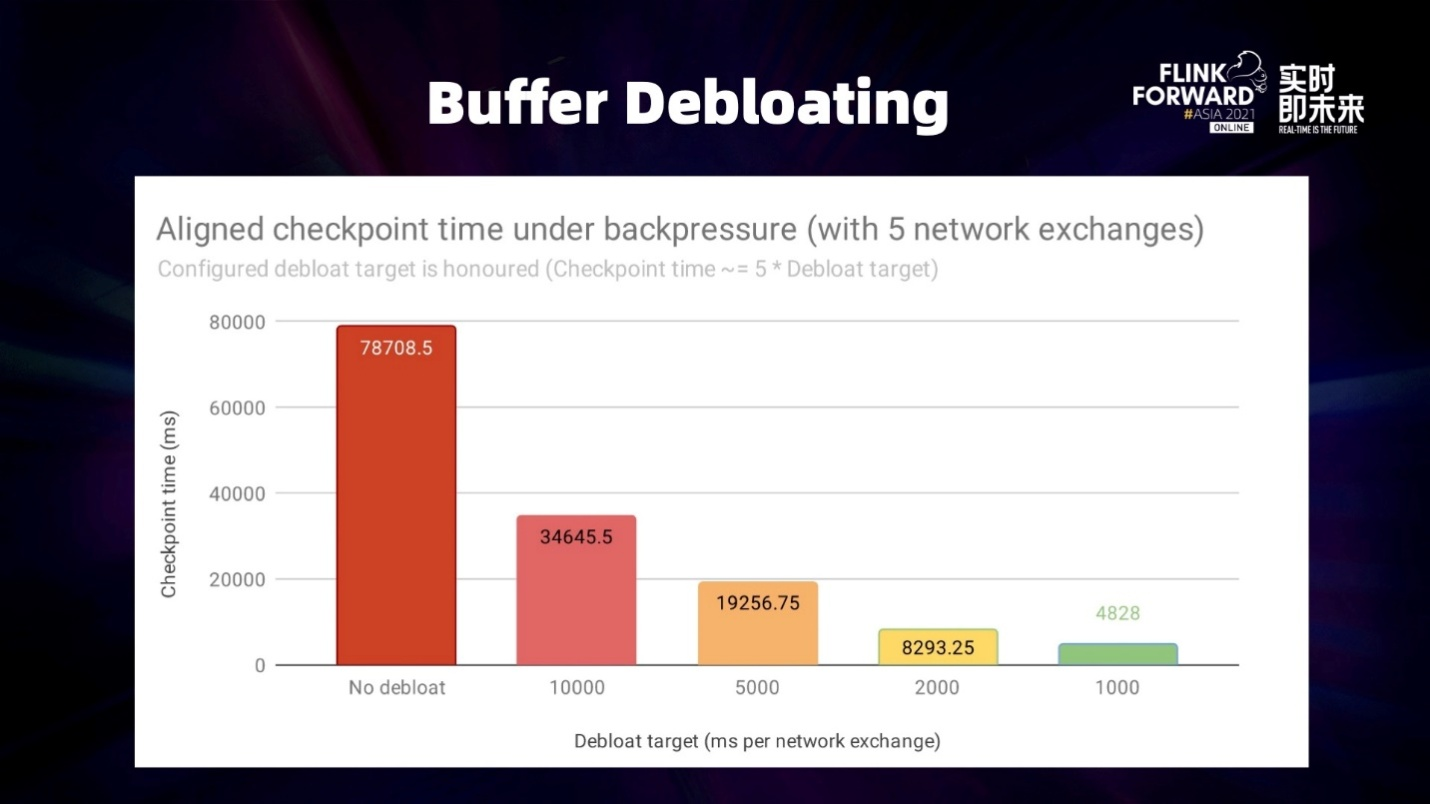

The preceding figure compares the Checkpointing time with Buffer Debloating against the Debloat Target under backpressure. The Debloat Target is the expected time in which the downstream can process the data cached upstream. In this experiment, the Flink job has five Network Exchanges, so the total time required for Checkpointing is about five times the Debloat Target, which is mostly consistent with the experimental results.

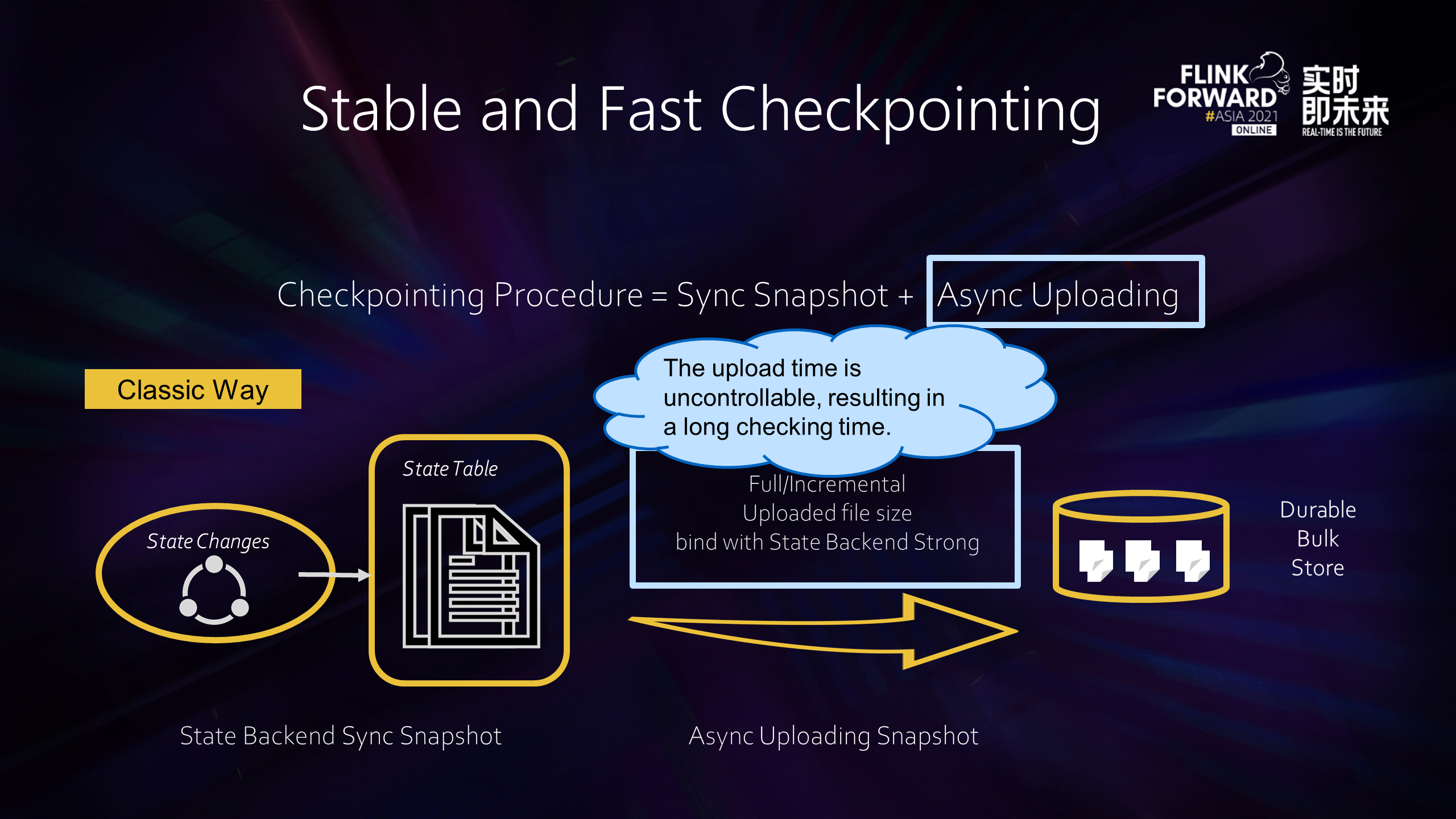

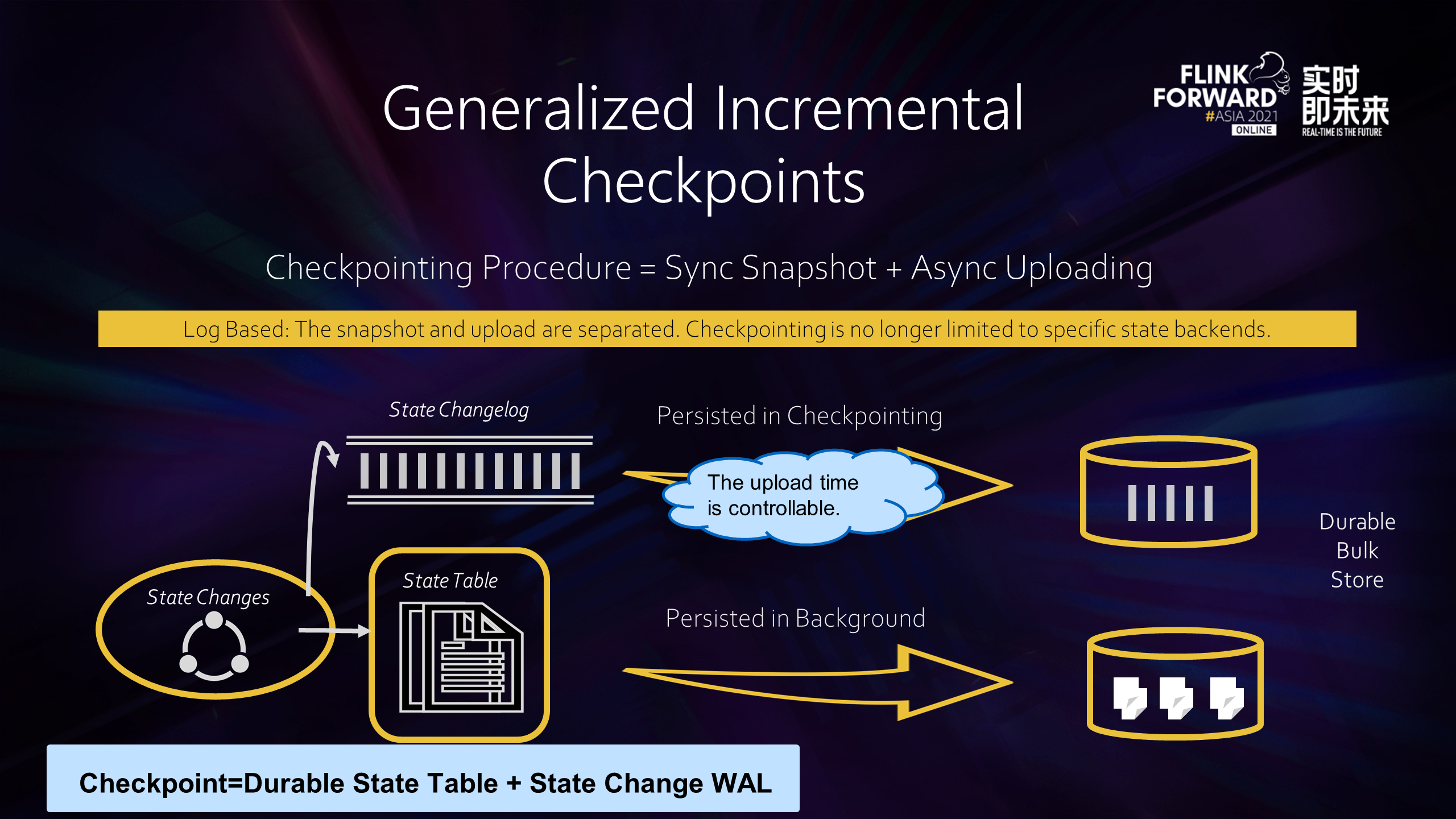

The state size mentioned earlier also affects the time to complete Checkpointing because the Checkpointing process of Flink consists of two parts: a synchronous snapshot and an asynchronous upload. The synchronous part is usually very fast, since it only flushes the state data from memory to disk. However, the time taken by the asynchronous upload depends on the amount of data uploaded. Therefore, we have introduced Generalized Log-Based Incremental Checkpoint to control the amount of data that needs to be uploaded each time a snapshot is taken.

For stateful operators, every change to their internal state is recorded in the State Table, as shown in the preceding figure. Take RocksDB as an example. When Checkpointing occurs, this state table is flushed to disk, and the disk files are asynchronously uploaded to the remote storage. Depending on the Checkpoint mode, the uploaded part can be a complete Checkpoint or the incremental part of a Checkpoint. However, regardless of the mode, the size of the uploaded files is tightly bound to the storage implementation of the State Backend. For example, RocksDB supports incremental Checkpoints, but many new files are generated once multi-level compaction is triggered. In this case, the incremental part can be even larger than a complete Checkpoint, so the upload time is still uncontrollable.

Since the upload causes the Checkpointing timeout, the problem can be solved by stripping the upload from the Checkpointing process. This is what Generalized Log-based Incremental Checkpoint wants to do. In essence, it aims to completely separate the Checkpointing process from the state backend storage compaction.

The specific implementation method is listed below:

For a stateful operator, in addition to recording each state update in the State Table, we also append the change to the State Changelog and flush the changelog asynchronously to the remote storage. As such, a Checkpoint consists of two parts. The first part is the State Table that has already been materialized on the remote storage. The second part is the incremental changes that have not been materialized yet. Therefore, when a Checkpoint is taken, the amount of data that needs to be uploaded is small and stable. Checkpoints can be taken more reliably and more frequently. The end-to-end latency can be reduced significantly, especially for an Exactly Once Sink, because the two-phase commit can only finish once a complete Checkpoint is done.
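The split between the materialized State Table and the not-yet-materialized changelog can be illustrated with a toy in-memory model. This is only a sketch: `ChangelogStateBackend` and its methods are hypothetical names, an in-memory list stands in for the remote changelog storage, and the real upload is continuous and asynchronous.

```python
class ChangelogStateBackend:
    """Toy model (hypothetical) of a changelog-based checkpoint."""

    def __init__(self):
        self.table = {}              # local State Table
        self.changelog = []          # stand-in for the durable remote log
        self.materialized = ({}, 0)  # (table copy, changelog offset it covers)

    def put(self, key, value):
        self.table[key] = value
        # Every update is also appended to the changelog.
        self.changelog.append((key, value))

    def materialize(self):
        # Runs periodically, independent of when checkpoints are taken.
        self.materialized = (dict(self.table), len(self.changelog))

    def checkpoint(self):
        # Only references are recorded: the last materialized table plus
        # the changelog range written since then.
        snapshot, offset = self.materialized
        return {"materialized": snapshot,
                "from": offset,
                "to": len(self.changelog)}

    def restore(self, cp):
        table = dict(cp["materialized"])
        for key, value in self.changelog[cp["from"]:cp["to"]]:
            table[key] = value       # replay the non-materialized changes
        return table


backend = ChangelogStateBackend()
backend.put("a", 1)
backend.put("b", 2)
backend.materialize()
backend.put("a", 3)
cp = backend.checkpoint()
backend.put("c", 9)                  # happens after the checkpoint
restored = backend.restore(cp)
```

The checkpoint itself covers only the single change written after the last materialization, which is why its size stays small regardless of any compaction in the state backend.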

In the context of cloud-native, rapid scaling is a major challenge for Flink. Flink jobs need to scale in and out frequently, especially after Reactive Mode was introduced in Flink 1.13. Rescaling has therefore become the main bottleneck of Reactive Mode. The problems to be solved by rescaling and failover are largely similar. For example, after a machine is removed, the system needs to detect it, reschedule, and restore the state quickly. Of course, there are also differences. In failover, you only need to restore the state and pull it back to the operator. In rescaling, however, the parallelism of the topology changes, so the state needs to be redistributed.

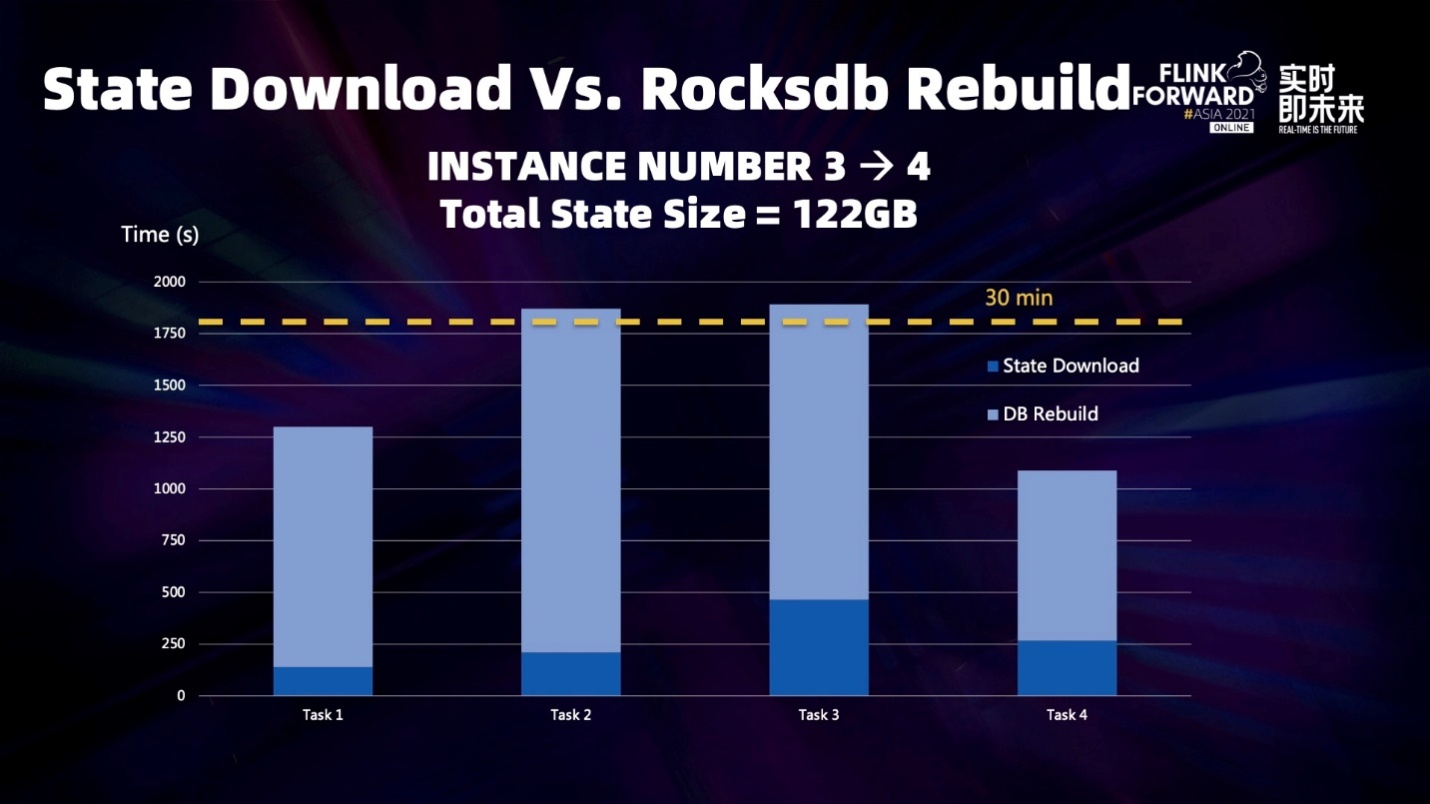

When the state is restored, we need to read the state data from the remote storage to the local disk and then redistribute the state according to the data. As shown in the preceding figure, if the state is somewhat large, restoring a single parallel task takes more than 30 minutes. In practice, we found that state redistribution takes much longer than reading the state data from remote storage.

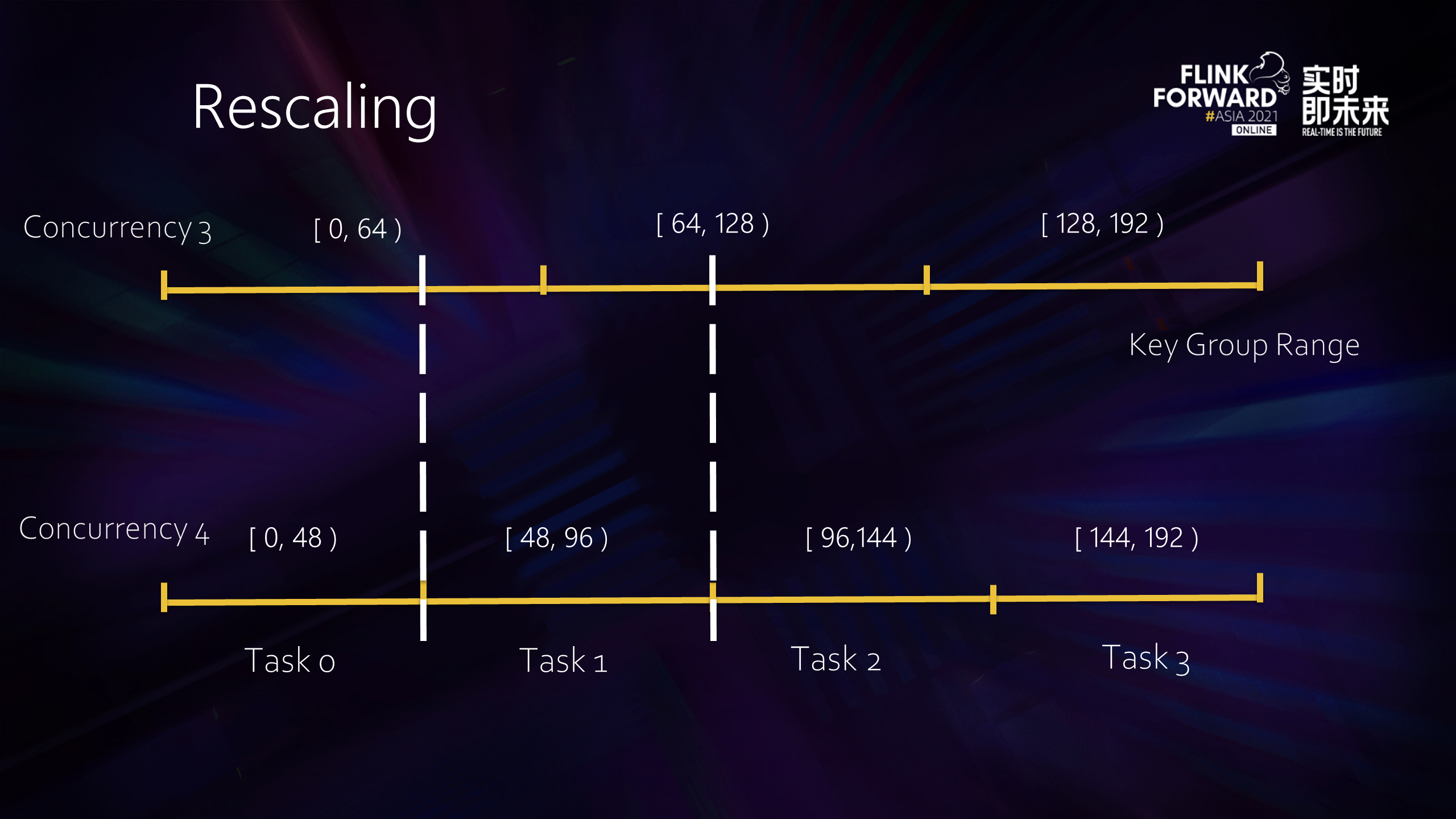

How is the state redistributed? Flink splits state using the key group as the smallest unit, which can be understood as mapping the key space of the state to a set of non-negative integers starting from 0. This integer set is the Key Group Range, and its size is determined by the maximum parallelism allowed for the operator. As shown in the figure above, when we change the operator parallelism from 3 to 4, the redistributed state of task 1 is spliced together from parts of two of the original task states. The spliced pieces are contiguous and do not overlap, so this property can be used for optimization.
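The splicing can be reproduced with a short calculation. The range formula below follows my understanding of how Flink assigns contiguous key group ranges to subtasks, and the maximum parallelism of 128 is an assumed value for illustration. The helper names are hypothetical.

```python
def key_group_range(max_parallelism, parallelism, operator_index):
    """Contiguous key groups owned by one subtask (inclusive bounds)."""
    start = (operator_index * max_parallelism + parallelism - 1) // parallelism
    end = ((operator_index + 1) * max_parallelism - 1) // parallelism
    return (start, end)


def sources_for(new_range, old_ranges):
    """Which old subtasks a new subtask must read from, and which slice."""
    result = {}
    for index, (old_start, old_end) in enumerate(old_ranges):
        lo = max(old_start, new_range[0])
        hi = min(old_end, new_range[1])
        if lo <= hi:
            result[index] = (lo, hi)
    return result


MAX_PARALLELISM = 128  # assumed value for illustration

old = [key_group_range(MAX_PARALLELISM, 3, i) for i in range(3)]
new = [key_group_range(MAX_PARALLELISM, 4, i) for i in range(4)]

# After rescaling from 3 to 4, new task 1 is spliced from old tasks 0 and 1.
task1_sources = sources_for(new[1], old)
```

Because each new range is a union of contiguous, non-overlapping slices of old ranges, a new task only has to touch the few old state handles that intersect its range.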

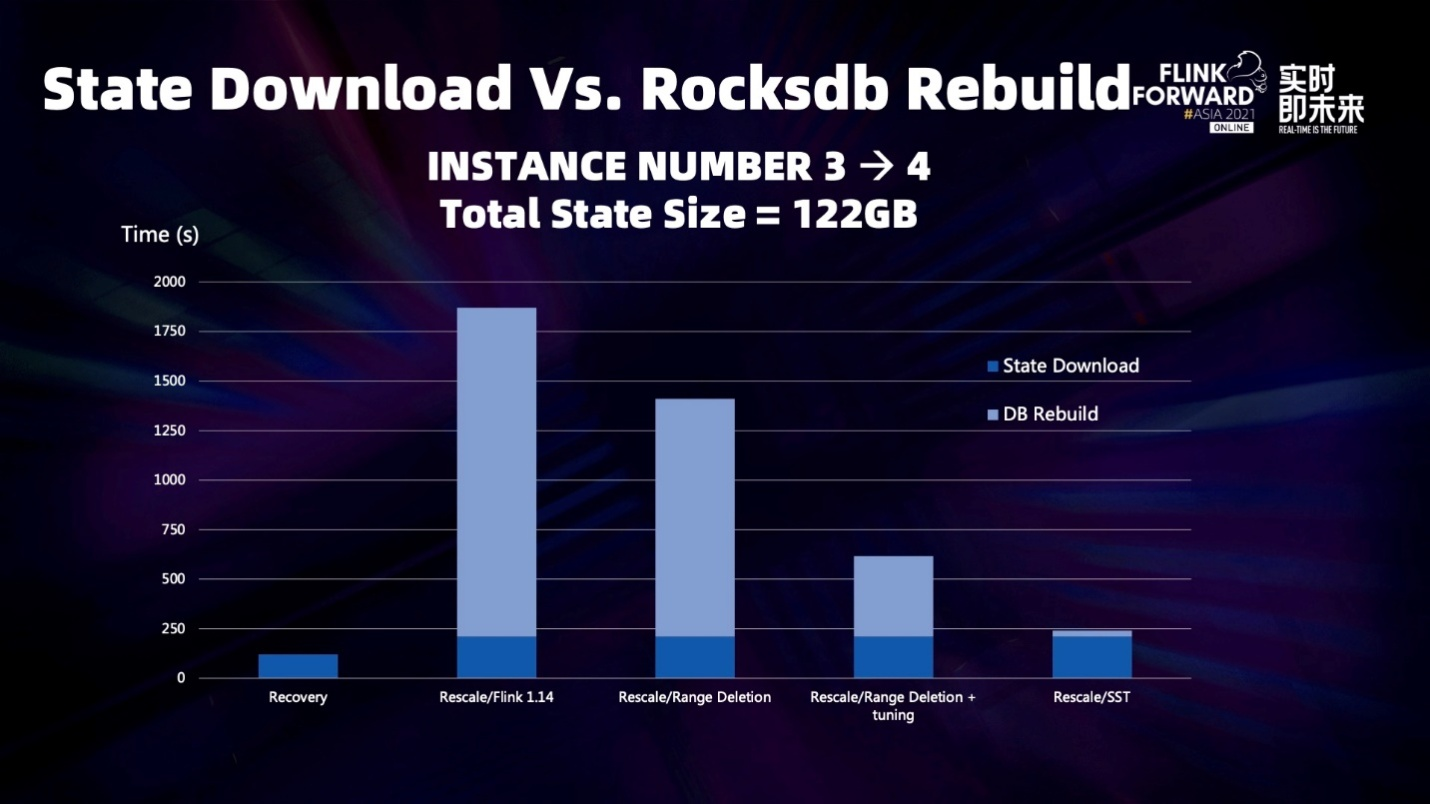

From the figure above, the optimization effect of DB Rebuild is still very clear. However, it is still in the exploratory stage, and many problems have not been solved, so there is no clear community plan for the time being.

First, we discussed why fault tolerance matters: it is on the critical path of Flink stream computing. Then, we analyzed the factors that affect fault tolerance. Fault tolerance is an end-to-end problem that includes failure detection, job cancellation, requesting and scheduling new resources, and state recovery and reconstruction. We need to weigh this problem across multiple dimensions. Currently, our focus is mainly on making Checkpoints stable and fast, since many practical problems today are related to taking Checkpoints. Finally, we discussed some exploratory work on placing fault tolerance in the context of cloud-native and combining it with elastic scaling.
