By Wu Chong (yunxie), Apache Flink PMC and Technical Expert

Edited by Chen Zhengyu, a Volunteer from the Flink Community

Flink 1.11 introduces Flink SQL CDC. What changes can CDC bring to data and business? This article covers traditional data synchronization solutions, synchronization solutions based on Flink CDC, more application scenarios, and future development plans for CDC.

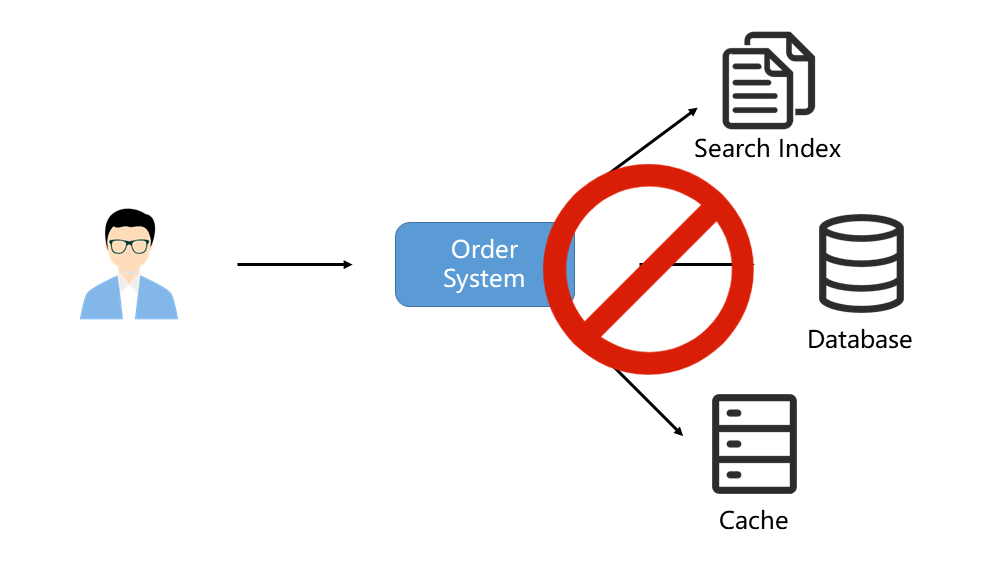

In business systems, data usually needs to be updated in multiple storage components. For example, an order system initially completes its business by writing data to a database. One day, the BI team wants full-text indexing on that data, so a second copy must be written to Elasticsearch. Some time later, the data also needs to be written to a Redis cache.

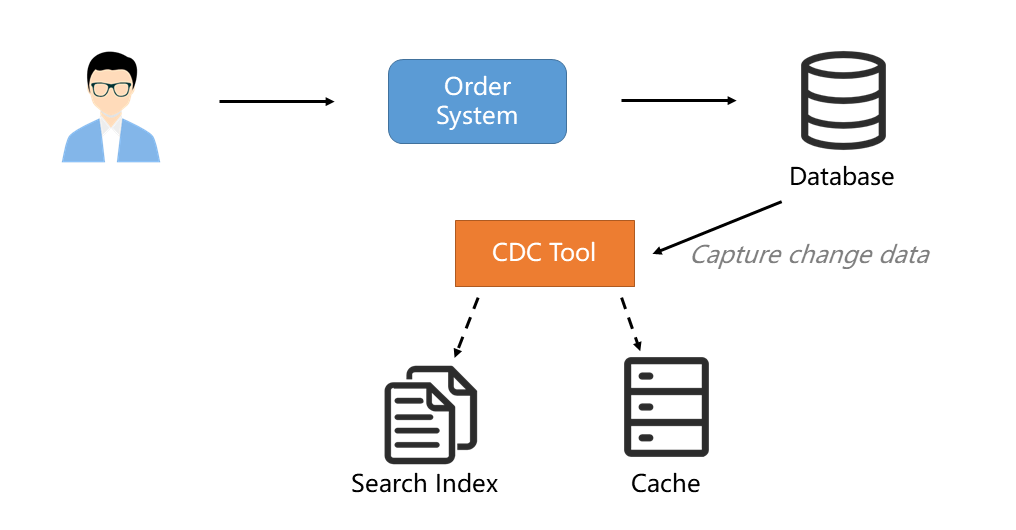

This mode is not sustainable. Writing the same data to several stores leads to maintenance, scalability, and data consistency problems, and keeping the copies consistent requires distributed transactions, which increases cost and complexity. A Change Data Capture (CDC) tool can decouple these writes by capturing changes from the database and synchronizing them to the downstream storage systems. This makes the systems more robust and easier to maintain.

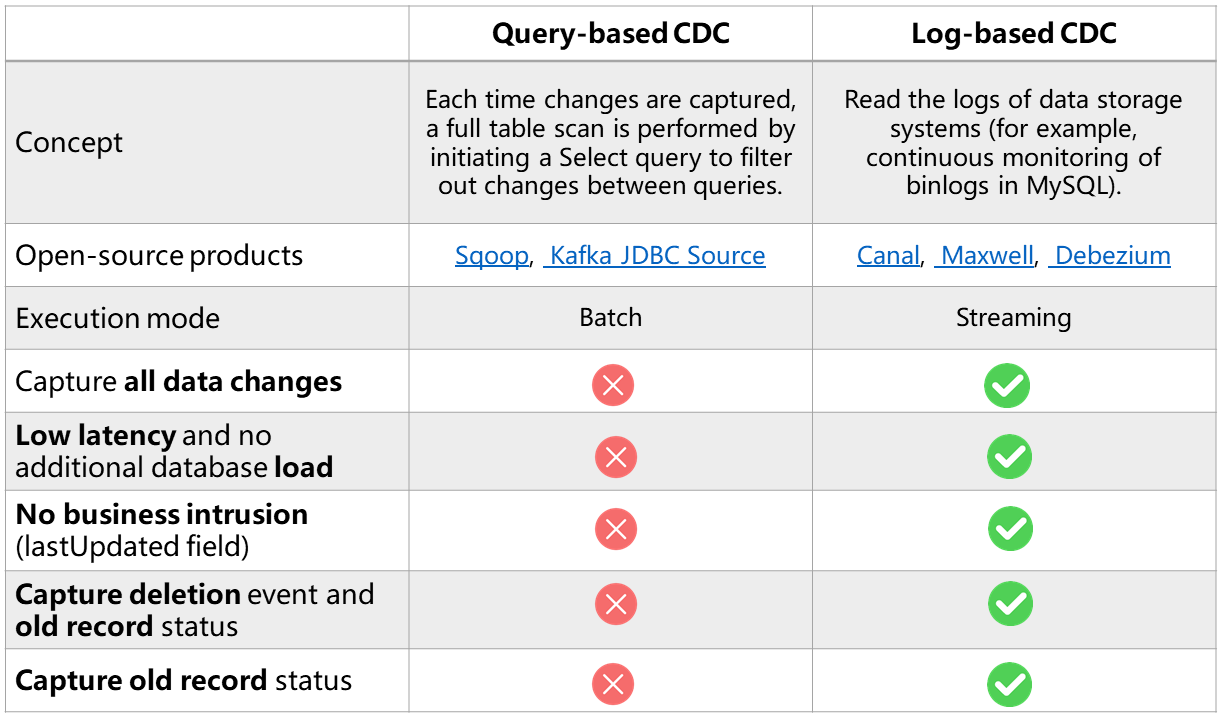

CDC is short for Change Data Capture. It is such a broad concept that as long as changed data can be captured, it can be called CDC. There are query-based CDC and log-based CDC in the industry. The differences in their features are listed below:

Based on the comparison above, the log-based CDC has the following advantages:

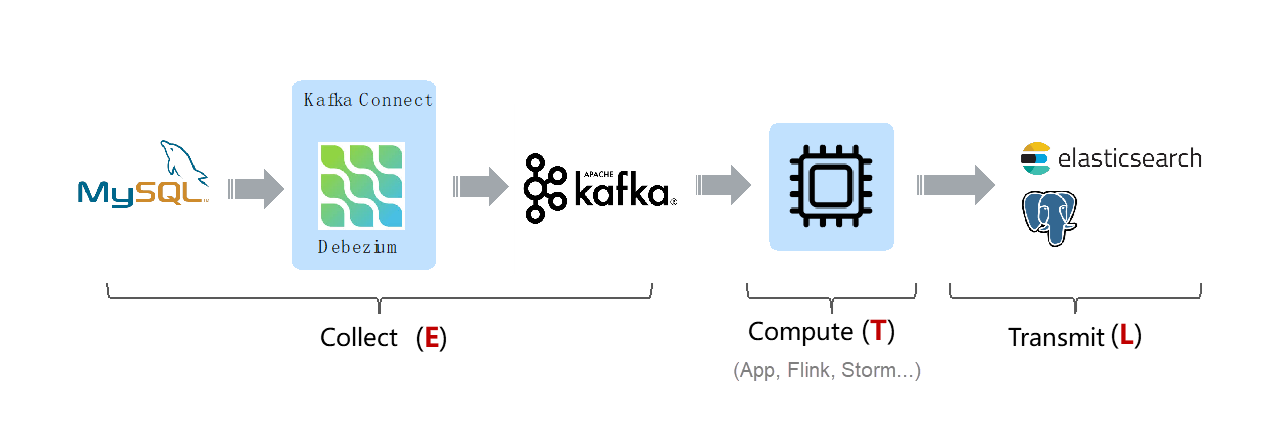

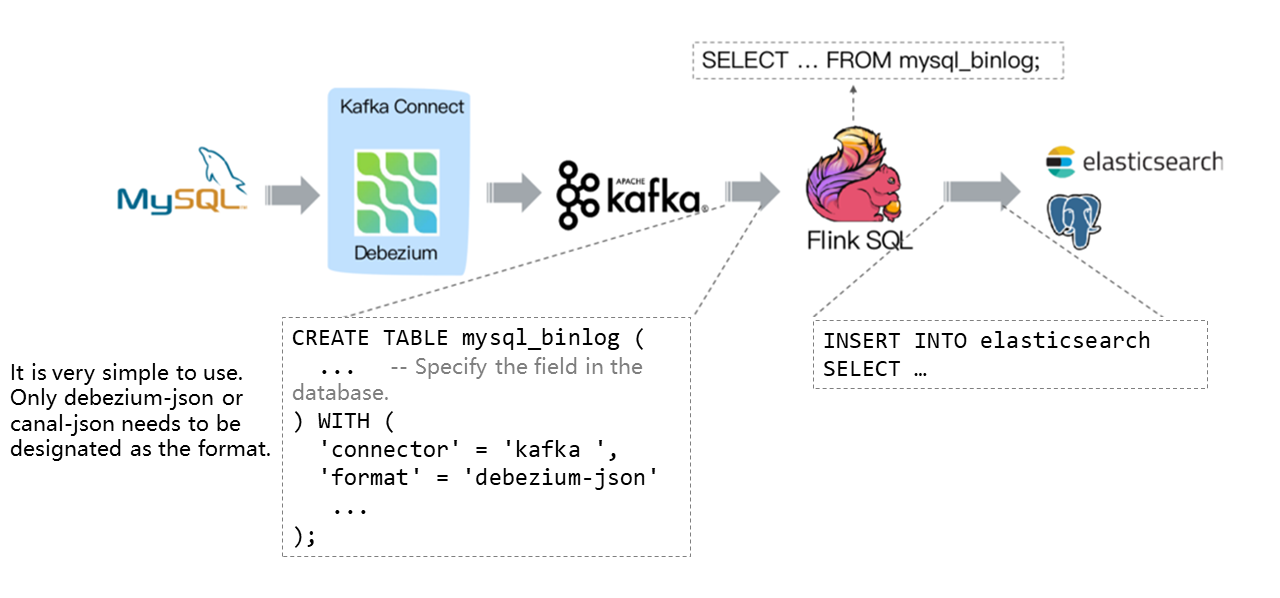

From an ETL perspective, the data collected usually comes from business databases. Take MySQL as an example: Debezium collects the MySQL Binlog and sends it to a Kafka message queue. Real-time computing engines or applications then consume the data from Kafka and write the results to an OLAP system or other storage media.

Flink aims to connect to more data sources and make full use of its computing capability. In production, the main sources are business logs and database logs. Flink has supported business logs well for a long time, but database logs were not supported before Flink 1.11. This is one of the reasons why CDC was integrated.

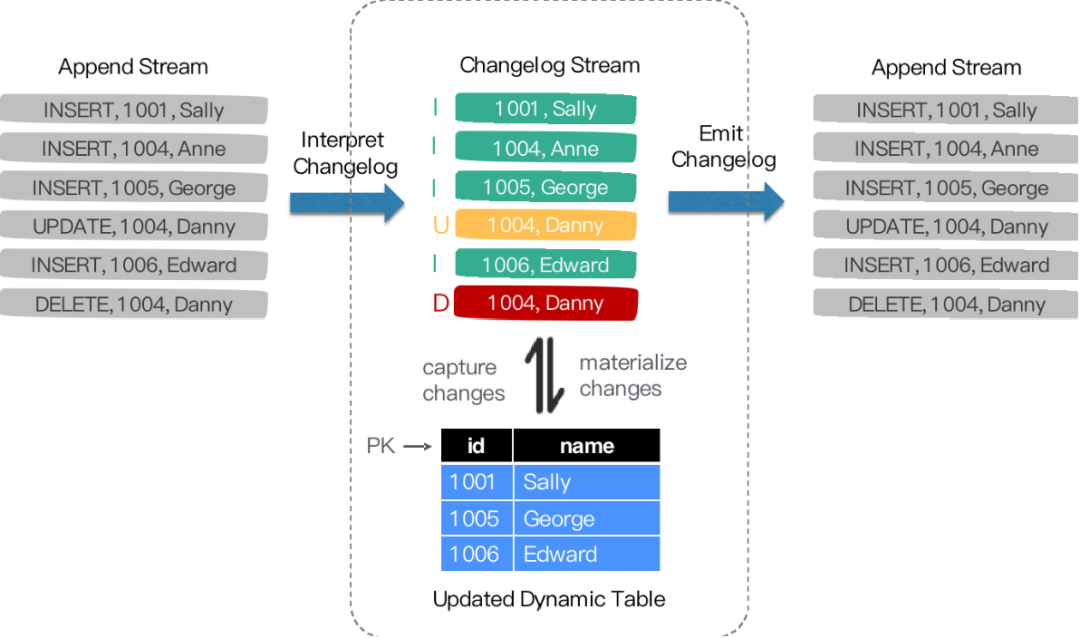

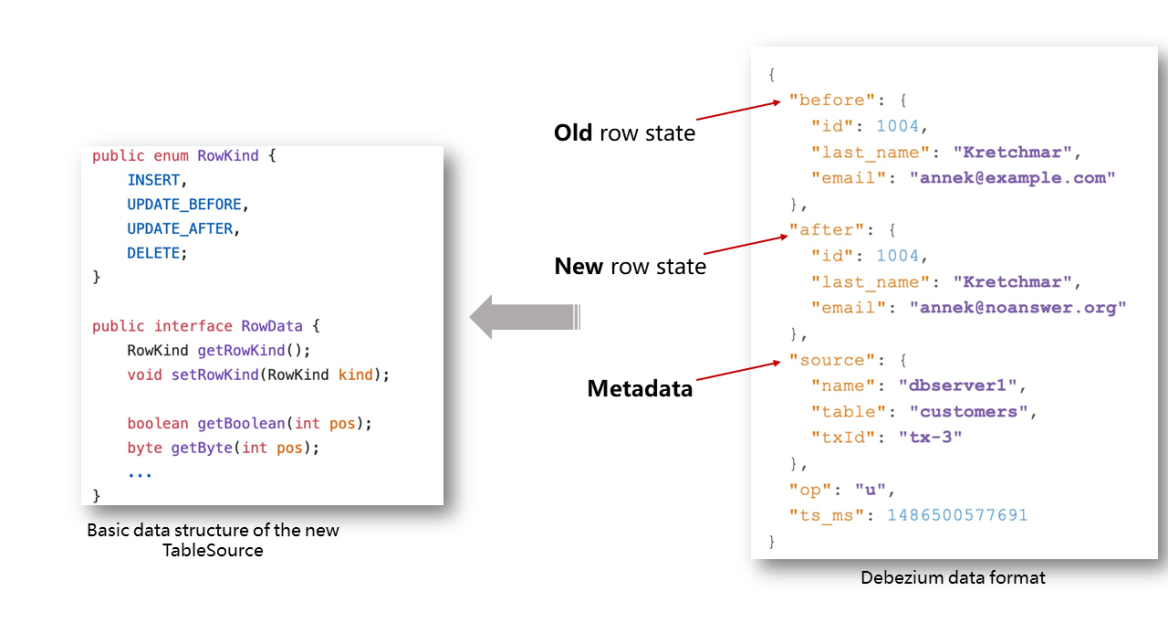

Flink SQL supports a complete changelog mechanism, so to ingest CDC data, Flink only needs to convert it into a form that Flink recognizes. For this reason, the TableSource API was restructured in Flink 1.11 to better support and integrate CDC.

The restructured TableSource outputs the RowData structure, which represents a row of data. Each RowData carries a piece of metadata called RowKind. RowKind describes the type of change: INSERT, UPDATE_BEFORE, UPDATE_AFTER, or DELETE, which is similar to the binlog in a database. The JSON records collected by Debezium contain the old and new data rows together with metadata about the source. In the "op" field, "u" identifies an update, and "ts_ms" is the synchronization timestamp. Connecting Debezium JSON data to Flink therefore means converting the original JSON records into RowData that Flink recognizes.

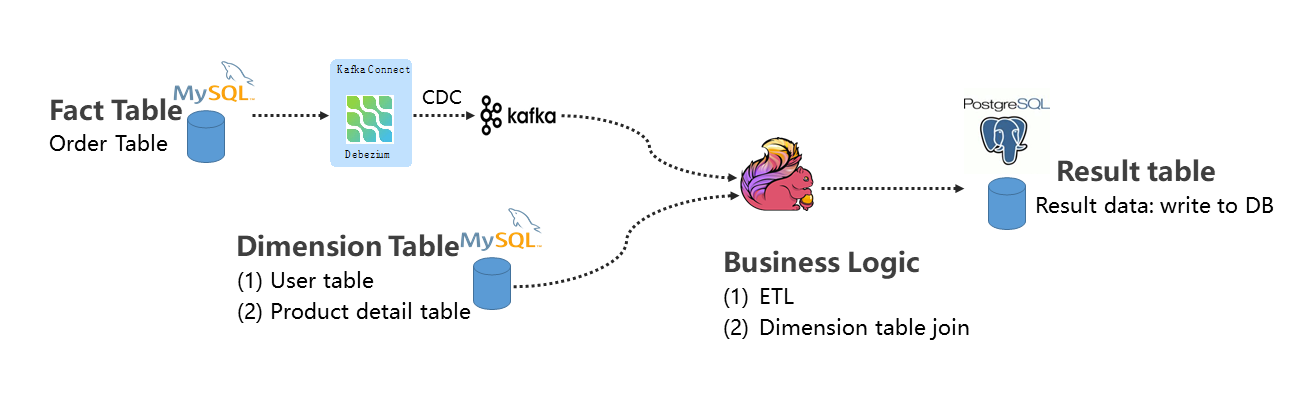

When Flink is selected as the ETL tool, the data synchronization process is shown in the following figure:

Debezium subscribes to the MySQL Binlog and sends the changes to Kafka. In Flink, a Kafka table is created with the format set to debezium-json. After Flink processes the data, the results are inserted directly into external storage systems, such as Elasticsearch and PostgreSQL, as shown in the following figure:

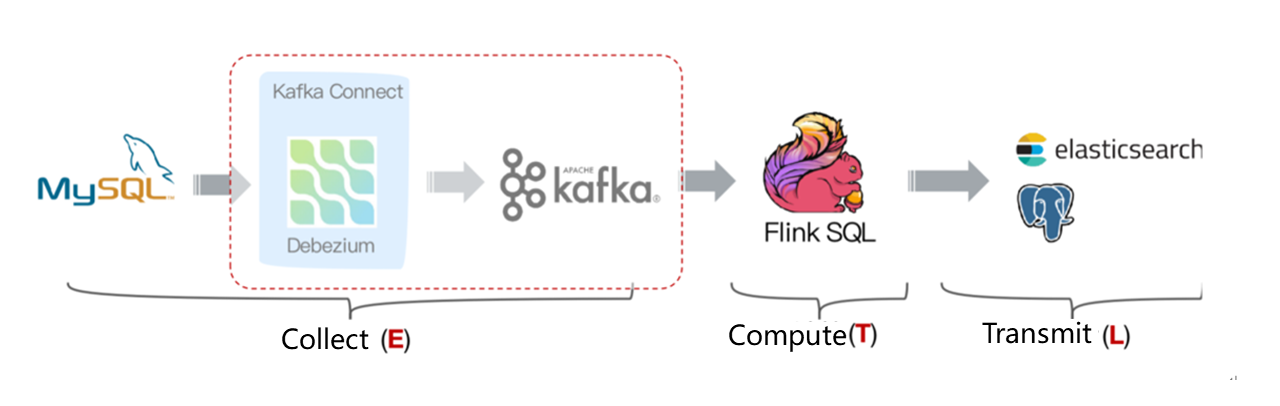

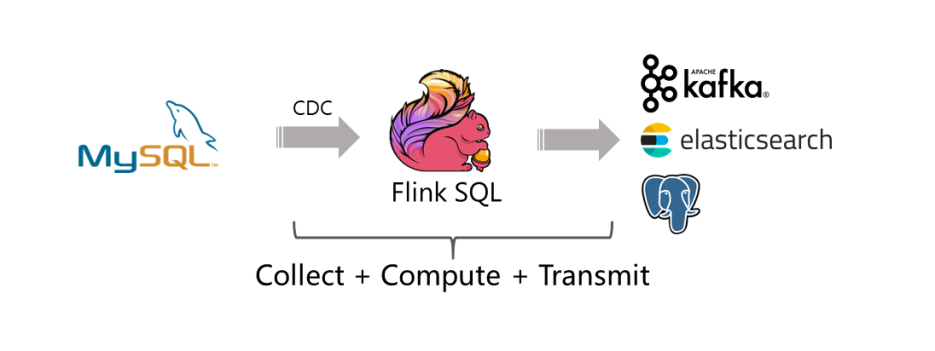
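The Debezium + Kafka pipeline described above can be sketched in Flink SQL roughly as follows. This is a minimal sketch, not the article's original code: the topic name, consumer group, table names, columns, and connection settings are all assumptions chosen for illustration, and it presumes the Kafka and JDBC connectors plus a PostgreSQL driver are on the classpath.

```sql
-- Source: a Kafka topic that Debezium fills with MySQL Binlog changes.
-- Topic name, group id, and columns are hypothetical.
CREATE TABLE orders_binlog (
  order_id INT,
  customer_name STRING,
  price DECIMAL(10, 5),
  order_status BOOLEAN
) WITH (
  'connector' = 'kafka',
  'topic' = 'orders_binlog',
  'properties.bootstrap.servers' = 'localhost:9092',
  'properties.group.id' = 'flink-cdc-demo',
  'scan.startup.mode' = 'earliest-offset',
  'format' = 'debezium-json'
);

-- Sink: a PostgreSQL table written through the JDBC connector.
-- The primary key lets the sink apply updates and deletes as upserts.
CREATE TABLE orders_pg (
  order_id INT,
  customer_name STRING,
  price DECIMAL(10, 5),
  order_status BOOLEAN,
  PRIMARY KEY (order_id) NOT ENFORCED
) WITH (
  'connector' = 'jdbc',
  'url' = 'jdbc:postgresql://localhost:5432/postgres',
  'table-name' = 'orders_copy',
  'username' = 'postgres',
  'password' = 'postgres'
);

-- Continuously replicate the changelog into PostgreSQL.
INSERT INTO orders_pg
SELECT order_id, customer_name, price, order_status
FROM orders_binlog;
```

Because the source table is declared with the debezium-json format, Flink interprets each Kafka message as an insert, update, or delete rather than as an append-only record.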

However, this architecture has a disadvantage: too many collection components make maintenance complicated. Can Flink SQL connect directly to the MySQL Binlog instead? The answer is yes. The improved structure is shown in the following figure:

The community has developed the flink-cdc-connectors component. It is a source component that can read full data and incremental change data directly from MySQL, PostgreSQL, and other databases. It has been open-sourced. Please see this link for more information.

flink-cdc-connectors can replace the Debezium + Kafka data collection module, so that collection, computing, and ETL are all unified in Flink SQL. This has the following advantages:

Here are three examples of Flink SQL + CDC that are often used in real-world scenarios. If you want to practice, you will need components, such as Docker, MySQL, and Elasticsearch. For more information about the three examples, please see the documents of each example.

In this example, changes to the order table (the fact table) are subscribed to: Debezium sends the MySQL Binlog to Kafka. Flink then joins the stream with the dimension table, applies ETL operations, and outputs the results to the downstream PostgreSQL database.

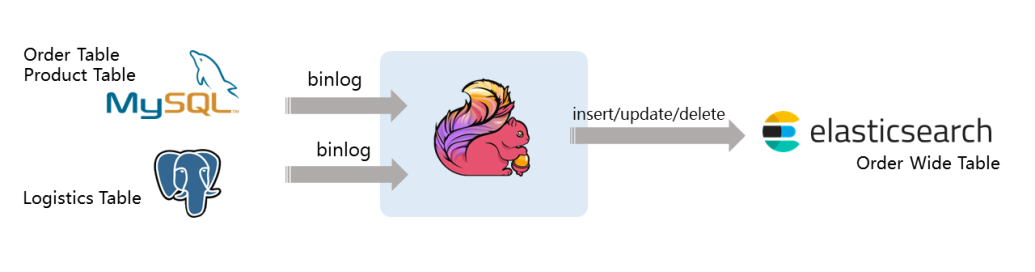

This example simulates the order table and logistics table of an e-commerce company. The order data needs to be analyzed statistically, so related information must be joined into a wide order table. The wide table is then delivered through Elasticsearch to the downstream business team for data analysis. This example demonstrates how Binlog data streams are joined and synchronized to Elasticsearch in real time using only Flink's computing capability, without relying on other components.

For example, the following Flink SQL code can complete the real-time synchronization of full and incremental data in the orders table in MySQL:

```sql
CREATE TABLE orders (
  order_id INT,
  order_date TIMESTAMP(0),
  customer_name STRING,
  price DECIMAL(10, 5),
  product_id INT,
  order_status BOOLEAN
) WITH (
  'connector' = 'mysql-cdc',
  'hostname' = 'localhost',
  'port' = '3306',
  'username' = 'root',
  'password' = '123456',
  'database-name' = 'mydb',
  'table-name' = 'orders'
);

SELECT * FROM orders;
```

A docker-compose test environment is also provided to help readers understand this example. For a more detailed tutorial, please refer to the following document: Reference Document
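To illustrate the wide-table scenario, the following sketch joins the orders table with a logistics table and writes the result to Elasticsearch. It is only a sketch under stated assumptions: the shipments table, its columns, its location in the same MySQL instance, and the enriched_orders index are hypothetical names introduced here, and the orders table is assumed to be the mysql-cdc table defined in this section.

```sql
-- Assumed logistics table, also captured through mysql-cdc.
-- Table and column names are hypothetical.
CREATE TABLE shipments (
  shipment_id INT,
  order_id INT,
  origin STRING,
  destination STRING,
  is_arrived BOOLEAN
) WITH (
  'connector' = 'mysql-cdc',
  'hostname' = 'localhost',
  'port' = '3306',
  'username' = 'root',
  'password' = '123456',
  'database-name' = 'mydb',
  'table-name' = 'shipments'
);

-- Wide table stored in Elasticsearch; the primary key becomes the
-- document id, so later changes overwrite the same document.
CREATE TABLE enriched_orders (
  order_id INT,
  order_date TIMESTAMP(0),
  customer_name STRING,
  price DECIMAL(10, 5),
  origin STRING,
  destination STRING,
  is_arrived BOOLEAN,
  PRIMARY KEY (order_id) NOT ENFORCED
) WITH (
  'connector' = 'elasticsearch-7',
  'hosts' = 'http://localhost:9200',
  'index' = 'enriched_orders'
);

-- Join the two changelog streams and keep the index up to date.
INSERT INTO enriched_orders
SELECT o.order_id, o.order_date, o.customer_name, o.price,
       s.origin, s.destination, s.is_arrived
FROM orders AS o
LEFT JOIN shipments AS s ON o.order_id = s.order_id;
```

A LEFT JOIN is used so that an order appears in the index even before its shipment record exists; the document is updated once the shipment arrives.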

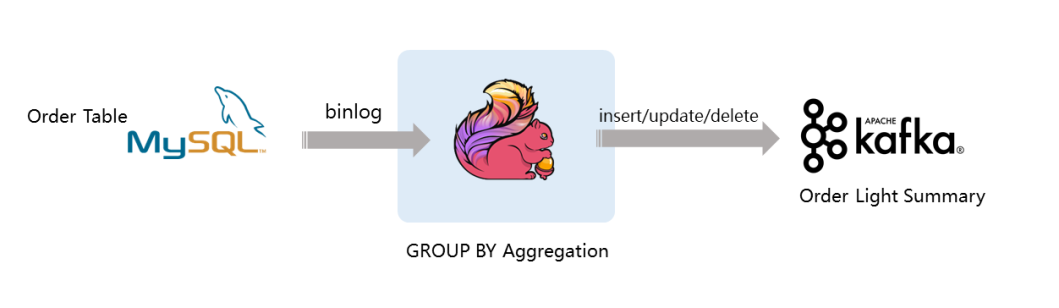

The following example shows how to calculate the daily GMV of the whole platform. The changes to the order table include INSERT, UPDATE, and DELETE statements. Only paid orders are counted in the GMV, so the GMV value changes as orders are created, updated, and deleted.
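A daily GMV query of this kind might look like the following sketch. It assumes the orders table defined earlier with the mysql-cdc connector, and it assumes that order_status = true marks a paid order, which the schema itself does not state.

```sql
-- Daily GMV over the CDC-backed orders table.
-- Assumption: order_status = true means the order has been paid.
SELECT
  DATE_FORMAT(order_date, 'yyyy-MM-dd') AS day_str,
  SUM(price) AS gmv
FROM orders
WHERE order_status = true
GROUP BY DATE_FORMAT(order_date, 'yyyy-MM-dd');
```

Because the source is a changelog, the result is a continuously updated view: an UPDATE that marks an order as paid adds its price to that day's GMV, and a DELETE of a paid order subtracts it.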

Flink SQL CDC can be applied flexibly in real-time data synchronization scenarios, and it is also useful in other scenarios.

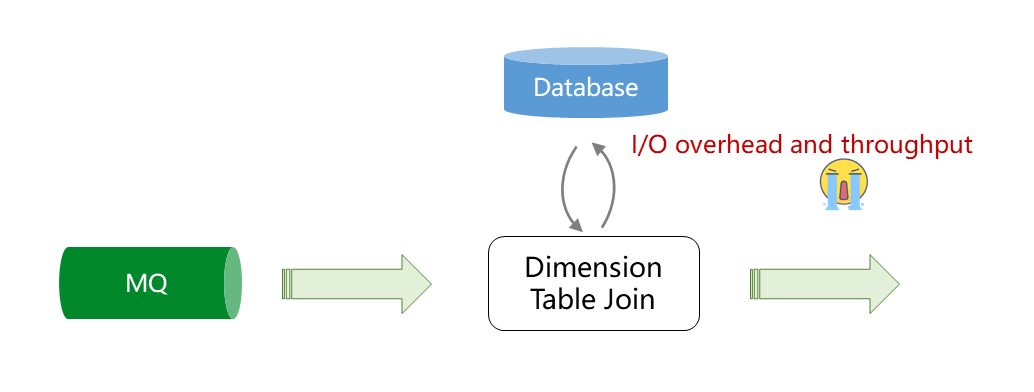

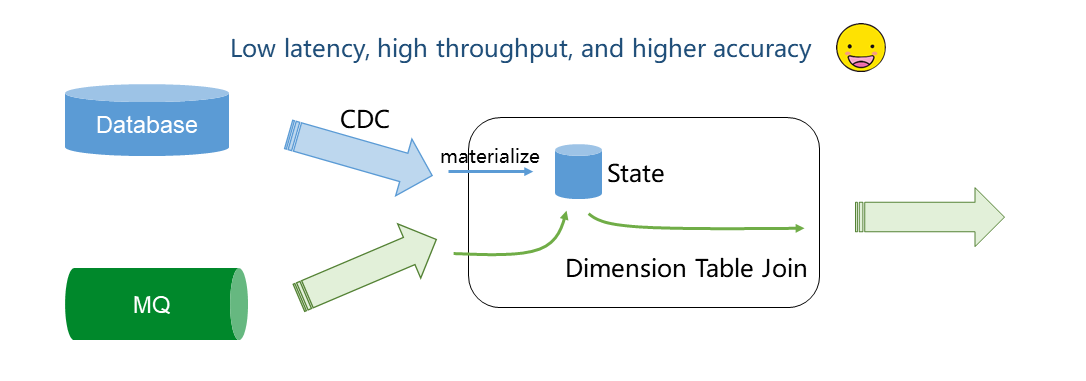

The following section explains why the CDC-based dimension table join is faster than the query-based dimension table join.

Currently, a dimension table is queried through a lookup join. When a record arrives at the join operator from the message queue, an I/O request is sent to the database. The database returns the matching rows, which are merged with the record and output downstream. This process inevitably incurs I/O and network overhead, which limits throughput. Even with a cache, the results may be inaccurate because the cache is not updated in time.
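For reference, a query-based dimension table join of the kind described above is usually written as a lookup join against a JDBC table. The sketch below is illustrative only: the order_events topic, the products_dim table, the column names, and the cache settings are assumptions, not values from the article.

```sql
-- Fact stream from a message queue; PROCTIME() supplies the
-- processing-time attribute that a lookup join requires.
-- Topic and column names are hypothetical.
CREATE TABLE order_events (
  order_id INT,
  product_id INT,
  price DECIMAL(10, 5),
  proctime AS PROCTIME()
) WITH (
  'connector' = 'kafka',
  'topic' = 'order_events',
  'properties.bootstrap.servers' = 'localhost:9092',
  'properties.group.id' = 'lookup-demo',
  'scan.startup.mode' = 'latest-offset',
  'format' = 'json'
);

-- Dimension table queried over JDBC. The optional lookup cache
-- reduces database round trips at the cost of possibly stale rows.
CREATE TABLE products_dim (
  id INT,
  name STRING,
  PRIMARY KEY (id) NOT ENFORCED
) WITH (
  'connector' = 'jdbc',
  'url' = 'jdbc:mysql://localhost:3306/mydb',
  'table-name' = 'products',
  'username' = 'root',
  'password' = '123456',
  'lookup.cache.max-rows' = '5000',
  'lookup.cache.ttl' = '10min'
);

-- Each incoming order triggers a lookup in the database (or cache).
SELECT o.order_id, o.price, p.name AS product_name
FROM order_events AS o
JOIN products_dim FOR SYSTEM_TIME AS OF o.proctime AS p
  ON o.product_id = p.id;
```

With the cache enabled, a changed product name may not be visible until the cached entry expires, which is the accuracy trade-off mentioned above.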

With CDC, the data of the dimension table can be imported into the State of the dimension table join. The State is distributed and holds a real-time mirror of the database dimension table. When data arrives from the message queue, there is no need to query the remote database again; querying the State on the local disk is enough. This saves I/O operations and achieves low latency, high throughput, and higher accuracy.

Tips: This feature is currently under development for version 1.12. Please pay attention to FLIP-132 for the specific progress.

Future plans include support for more CDC formats (debezium-avro, OGG, and Maxwell) and continued work on flink-cdc-connectors.

This article shares the advantages of Flink CDC by comparing the Flink SQL CDC and traditional data synchronization solutions. Meanwhile, it introduces the implementation principles of log-based and query-based CDC. There are also examples of MySQL Binlog subscription using Debezium and technology integration for replacing subscription components through flink-cdc-connectors. Additionally, it describes the application scenarios of Flink CDC, such as data synchronization, materialized view, and multi-data center backup, in detail. It also introduces the advantages of CDC-based dimension table join and the CDC component work.

After reading this article, we hope you will have a new understanding of Flink SQL CDC. We hope Flink SQL CDC will bring more convenience for development and be applied in more scenarios.

1. How do I write GROUP BY results to Kafka?

GROUP BY results are continuously updated, so currently they cannot be written to an append-only message queue. Writing updated results to Kafka will be supported natively in Flink 1.12. In version 1.11, the changelog-json format provided by flink-cdc-connectors can do this. For more information, please see this document.
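As a rough sketch of the changelog-json approach in Flink 1.11, the aggregation result can be written to a Kafka table declared with that format. The topic name, broker address, and sink table name are assumptions, the orders table is assumed to be the mysql-cdc table from the earlier example, and the changelog-json format jar from flink-cdc-connectors is assumed to be on the classpath.

```sql
-- Kafka sink that encodes every change (insert, update, delete)
-- as a changelog-json message. Topic name is hypothetical.
CREATE TABLE kafka_gmv (
  day_str STRING,
  gmv DECIMAL(38, 5)
) WITH (
  'connector' = 'kafka',
  'topic' = 'kafka_gmv',
  'properties.bootstrap.servers' = 'localhost:9092',
  'scan.startup.mode' = 'earliest-offset',
  'format' = 'changelog-json'
);

-- The GROUP BY result is an updating stream; changelog-json
-- preserves the updates instead of requiring append-only output.
INSERT INTO kafka_gmv
SELECT
  DATE_FORMAT(order_date, 'yyyy-MM-dd') AS day_str,
  SUM(price) AS gmv
FROM orders
WHERE order_status = true
GROUP BY DATE_FORMAT(order_date, 'yyyy-MM-dd');
```

A downstream Flink job can read the same table definition back and recover the updating result, because the format stores the change type with each message.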

2. Does CDC need to ensure sequential consumption?

Yes, it does. When change data is synchronized to Kafka, the order must be preserved within each partition, and changes with the same key must be sent to the same Kafka partition. This way, the order is guaranteed when Flink reads the data.
