By Yueyi Yang

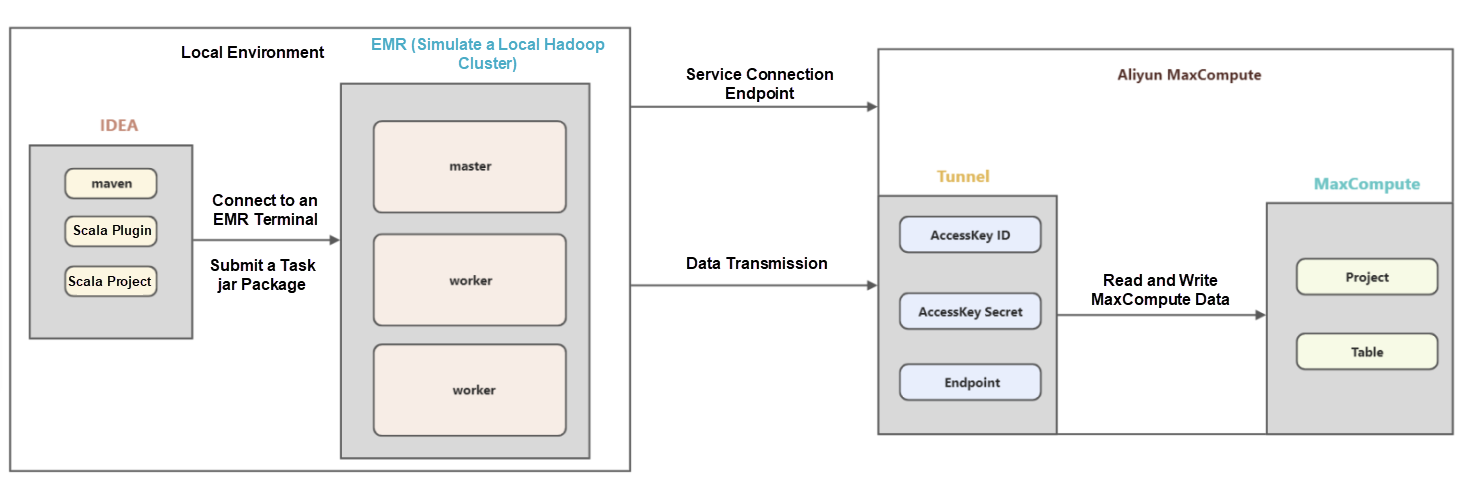

In the existing lakehouse architecture, MaxCompute sits at the center and reads and writes data in Hadoop clusters. In some offline IDC scenarios, however, customers do not want to expose internal cluster information to the public network, so the Hadoop cluster itself must initiate access to data in the cloud. This article uses E-MapReduce (EMR, Hadoop on the cloud) to simulate an on-premises Hadoop cluster that accesses MaxCompute data.

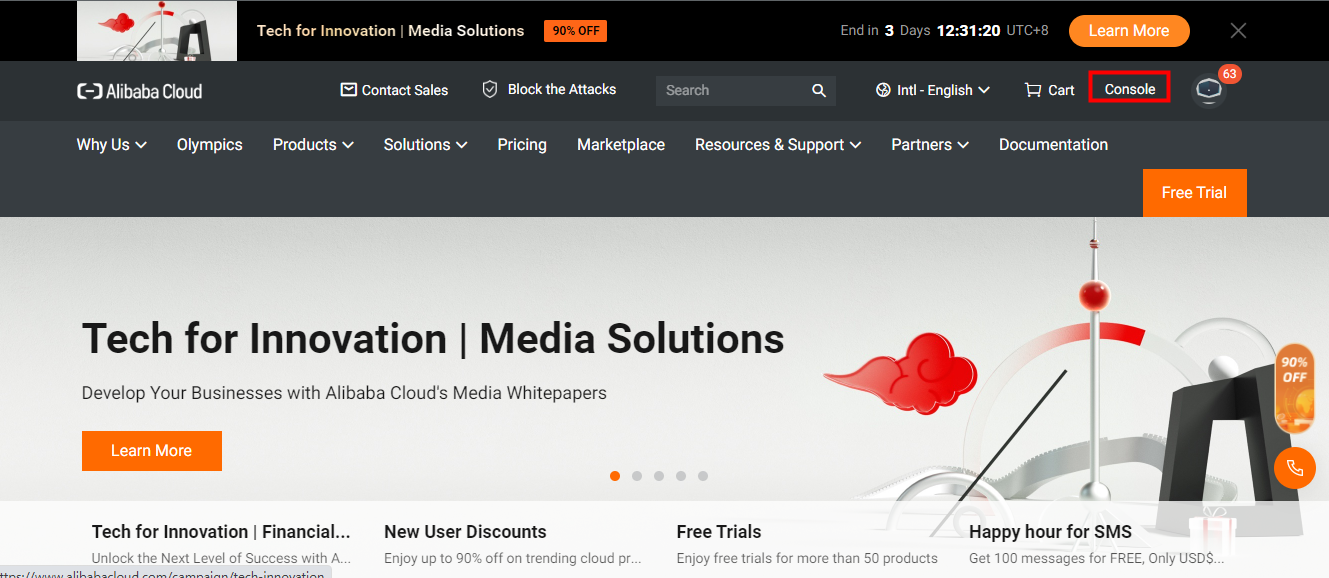

① Log on to Alibaba Cloud and click Console in the upper-right corner.

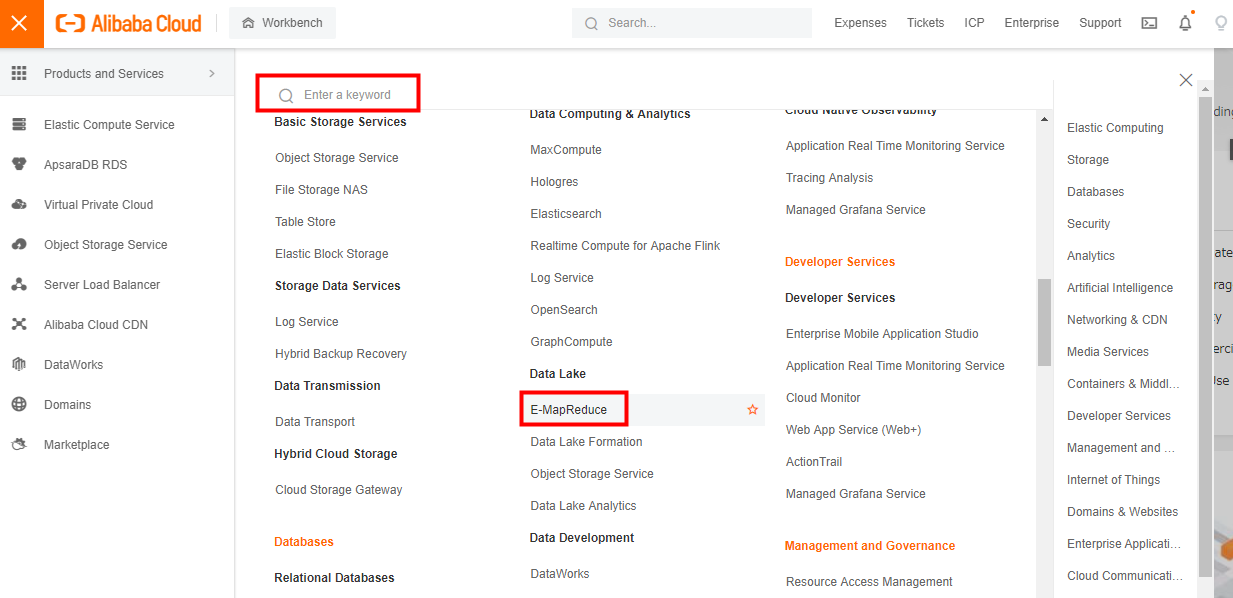

② In the navigation menu, choose Cloud Products > E-MapReduce, or search for E-MapReduce.

③ Go to the E-MapReduce homepage, click EMR on ECS, and create a cluster.

For details about purchasing a cluster, see the official documentation: https://www.alibabacloud.com/help/en/e-mapreduce/latest/getting-started#section-55q-jmm-3ts

④ Click the cluster ID to view the basic information, cluster services, node management, and other modules.

For more information about how to log on to the cluster, see the official documentation: https://www.alibabacloud.com/help/en/e-mapreduce/latest/log-on-to-a-cluster

This article uses logging on to an ECS instance as an example.

① In the Alibaba Cloud console, go to ECS.

② Click the instance name, then choose Remote Connection > Workbench Remote Connection.

For more information, see this article (in Chinese): https://blog.csdn.net/l32273/article/details/123684435

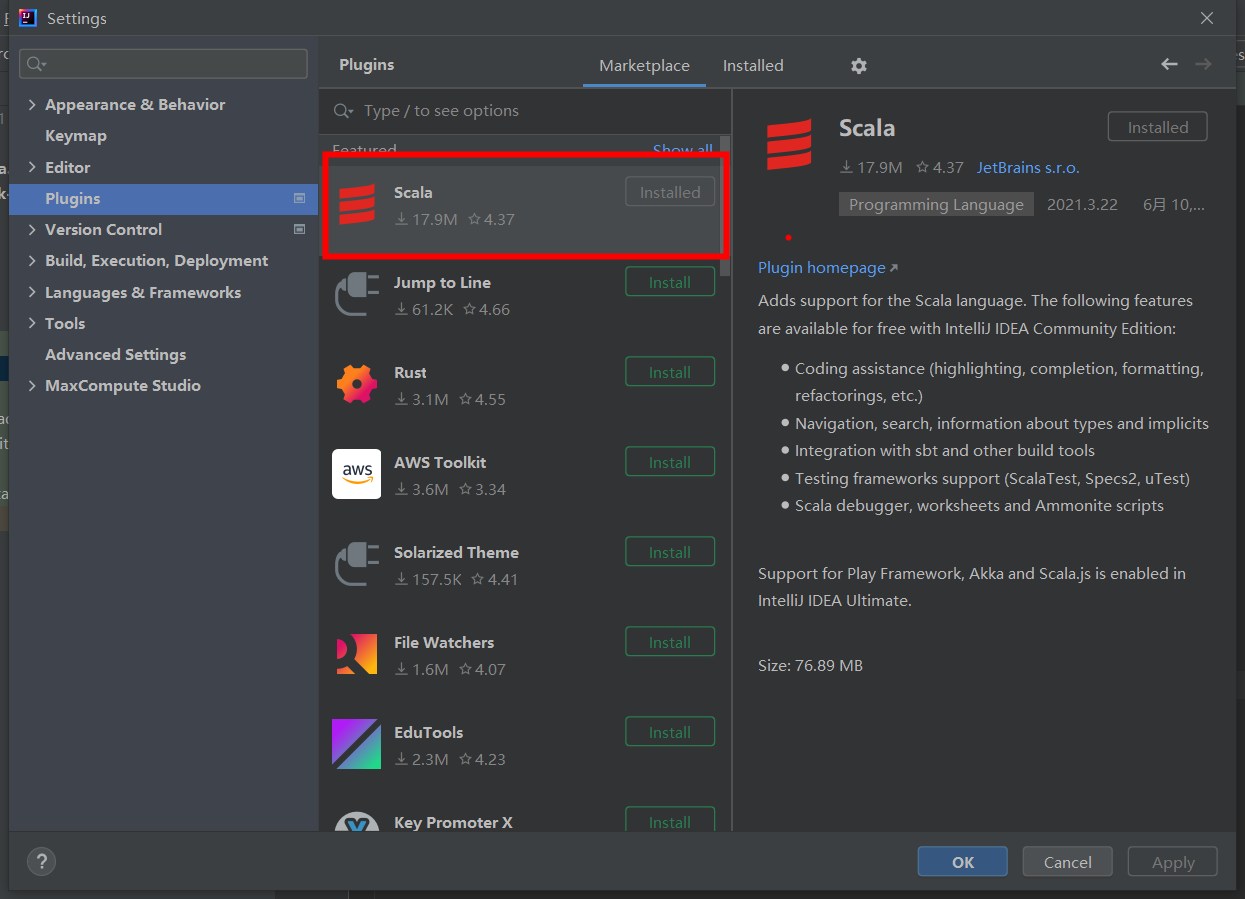

① Download the Scala plugin in IDEA.

② Install the Scala SDK.

③ Create a Scala project.

For more information about how to create a MaxCompute project, see the official documentation: https://www.alibabacloud.com/help/en/maxcompute/latest/create-a-maxcompute-project

An AccessKey (AK) pair, which consists of an AccessKey ID and an AccessKey secret, is used to access Alibaba Cloud APIs. After you create an Alibaba Cloud account on the official site (alibabacloud.com), you can create an AccessKey pair on the AccessKey Management page. AccessKey pairs identify users and are used to verify the signatures of requests to MaxCompute and other Alibaba Cloud services, including requests made through third-party tools. Keep your AccessKey secret confidential to prevent credential leaks. If it is leaked, disable or replace the AccessKey pair immediately.

To manage your AccessKey pairs, go to the AccessKey Management page: https://ram.console.aliyun.com/manage/ak
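The spark-submit commands later in this article pass the AccessKey ID and AccessKey secret as plain arguments. One way to keep the secret out of the commands you type (and out of shell history) is to load the pair into environment variables first and check them before submitting. The following is a minimal shell sketch; the variable names ALIBABA_CLOUD_ACCESS_KEY_ID and ALIBABA_CLOUD_ACCESS_KEY_SECRET and the helper require_ak are assumptions chosen for illustration, not names required by the connector.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that the AccessKey pair is available in the
# environment before running a spark-submit command. The variable names are
# an assumption; use whatever names your team standardizes on.
require_ak() {
  local status=0
  if [ -z "${ALIBABA_CLOUD_ACCESS_KEY_ID:-}" ]; then
    echo "missing environment variable: ALIBABA_CLOUD_ACCESS_KEY_ID" >&2
    status=1
  fi
  if [ -z "${ALIBABA_CLOUD_ACCESS_KEY_SECRET:-}" ]; then
    echo "missing environment variable: ALIBABA_CLOUD_ACCESS_KEY_SECRET" >&2
    status=1
  fi
  # 0 when both values are present, 1 otherwise.
  return "$status"
}
```

For example, set the two variables from a file that only you can read (`chmod 600`), run `require_ak`, and pass `"$ALIBABA_CLOUD_ACCESS_KEY_ID"` and `"$ALIBABA_CLOUD_ACCESS_KEY_SECRET"` in place of `${aliyun-access-key-id}` and `${aliyun-access-key-secret}`. The values are still handed to spark-submit as arguments, so other users on the node may be able to see them in the process list.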

The address used to connect to the MaxCompute service is called the endpoint. It varies based on the region and the network connection mode.

For the endpoint of each region, see the official documentation: https://www.alibabacloud.com/help/en/maxcompute/latest/prepare-endpoints
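The endpoint used in Yarn mode later in this article, http://service.cn-beijing.maxcompute.aliyun-inc.com/api, combines a region with a network-specific domain. The shell sketch below builds an endpoint from those two values. It assumes that internal endpoints use the maxcompute.aliyun-inc.com domain (as in that example) and that public endpoints use maxcompute.aliyun.com; confirm the exact address for your region on the endpoint page above. The function name mc_endpoint is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: mc_endpoint REGION NETWORK
# NETWORK is "internal" (aliyun-inc.com domain, typically reachable only from
# inside Alibaba Cloud) or "public" (aliyun.com domain). Both URL patterns are
# assumptions to check against the official endpoint list.
mc_endpoint() {
  local region="$1" network="$2" domain
  if [ -z "$region" ]; then
    echo "usage: mc_endpoint REGION internal|public" >&2
    return 1
  fi
  case "$network" in
    internal) domain="maxcompute.aliyun-inc.com" ;;
    public) domain="maxcompute.aliyun.com" ;;
    *)
      echo "unknown network type: $network (expected internal or public)" >&2
      return 1
      ;;
  esac
  echo "http://service.${region}.${domain}/api"
}
```

For example, `mc_endpoint cn-beijing internal` prints the same endpoint as the ODPS_ENDPOINT value used in Yarn mode.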

For more information about how to create a MaxCompute table, see the official documentation: https://www.alibabacloud.com/help/en/maxcompute/latest/ddl-sql-table-operations

For the tests in this article, prepare one partitioned table and one non-partitioned table.
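A minimal sketch of DDL for the two test tables, printed from a shell function so that it can be saved to a file and run with the MaxCompute client. The table prefix, the columns (id, name), and the partition column pt are hypothetical; adjust them to the schema that the test code expects.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print_test_ddl [PREFIX]
# Prints MaxCompute SQL that creates one non-partitioned and one partitioned
# table. Names, columns, and the partition column are illustrative only.
print_test_ddl() {
  local prefix="${1:-spark_mc_test}"
  cat <<EOF
CREATE TABLE IF NOT EXISTS ${prefix}_nonpart (id BIGINT, name STRING);
CREATE TABLE IF NOT EXISTS ${prefix}_part (id BIGINT, name STRING) PARTITIONED BY (pt STRING);
EOF
}
```

For example, run `print_test_ddl spark_mc_test > create_tables.sql`, then execute the file with the MaxCompute client (for example, `odpscmd -f create_tables.sql`; check the client documentation for the options your version supports).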

In summary, the preparations are:

(1) Prepare the project, AccessKey information, and table data in MaxCompute.

(2) Prepare the E-MapReduce cluster.

(3) Connect a terminal to the E-MapReduce node (the ECS instance).

(4) Configure the Scala and Maven environment variables in IDEA and download the Scala plugin.
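Before building, it can help to confirm that the tools the build relies on are on the PATH of the build machine. This is a minimal shell sketch; the helper name check_tools is hypothetical, and which tools you pass to it (for example java and mvn) is an assumption about your build setup.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helper: report which of the given commands are not
# on PATH, and return a non-zero status if any are missing.
check_tools() {
  local status=0 tool
  for tool in "$@"; do
    # command -v succeeds only if the shell can resolve the command.
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "not found on PATH: $tool"
      status=1
    fi
  done
  return "$status"
}
```

For example, `check_tools java mvn` prints nothing and returns 0 when both commands are available.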

① Enter the project directory:

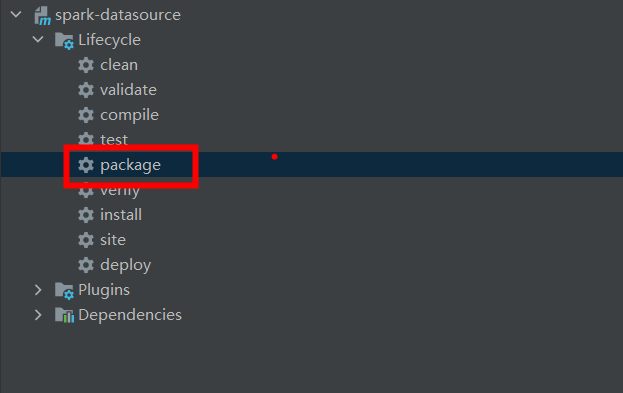

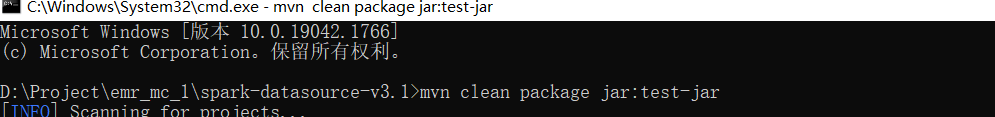

cd ${project.dir}/spark-datasource-v3.1

② Run the mvn command to build spark-datasource:

mvn clean package jar:test-jar

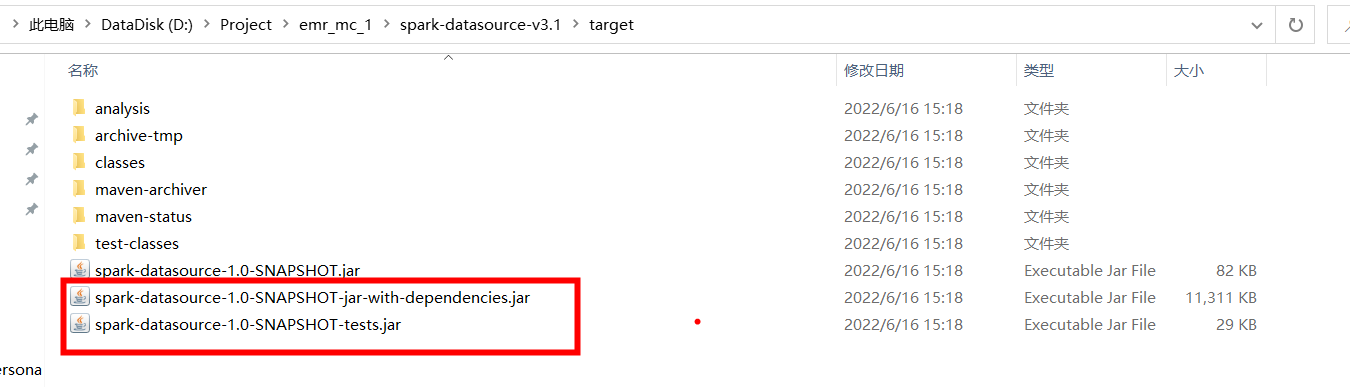

③ Check that the target directory contains spark-datasource-1.0-SNAPSHOT-jar-with-dependencies.jar and spark-datasource-1.0-SNAPSHOT-tests.jar:

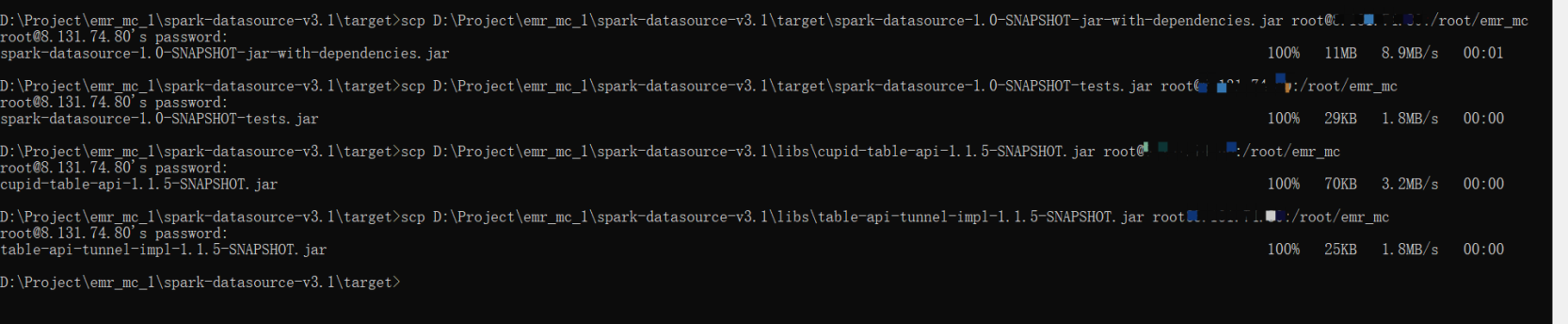

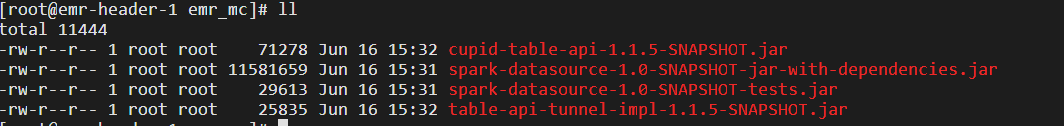
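The check in step ③ can also be scripted. This minimal shell sketch looks in a target directory for a file ending in -jar-with-dependencies.jar and one ending in -tests.jar, the two jars that the spark-submit commands in this article reference. The helper name check_build_artifacts is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check_build_artifacts TARGET_DIR
# Prints "found: <path>" or "missing: ..." for each expected jar and returns
# a non-zero status if either jar is missing.
check_build_artifacts() {
  local target_dir="$1" status=0 suffix match f
  for suffix in jar-with-dependencies.jar tests.jar; do
    match=""
    for f in "$target_dir"/*-"$suffix"; do
      # If nothing matches, the pattern stays literal, so check the file exists.
      if [ -e "$f" ]; then
        match="$f"
      fi
    done
    if [ -n "$match" ]; then
      echo "found: $match"
    else
      echo "missing: *-$suffix in $target_dir"
      status=1
    fi
  done
  return "$status"
}
```

For example, `check_build_artifacts "$PROJECT_DIR/spark-datasource-v3.1/target"`, where PROJECT_DIR is a hypothetical shell variable holding the project directory.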

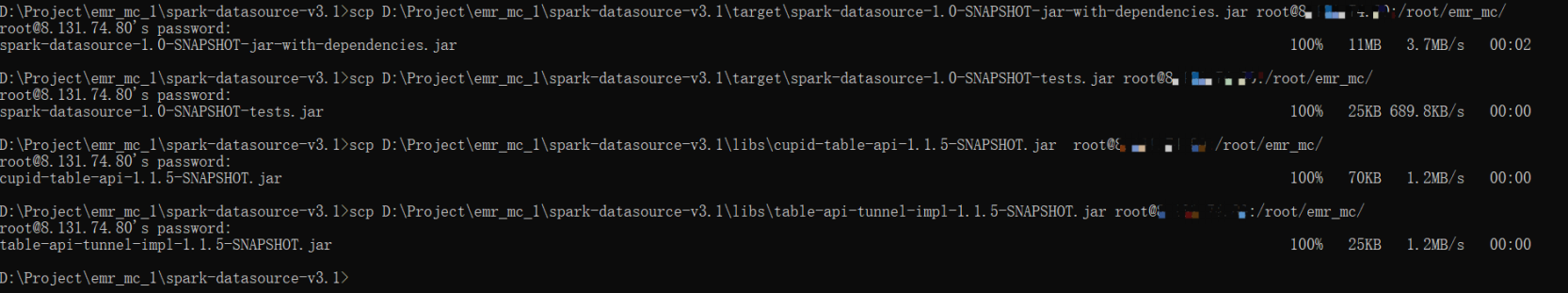

① Upload the jar packages with the scp command:

scp [local jar package path] root@[ECS instance public IP]:[jar package path on the server]

② Check the uploaded jar packages on the server.

③ Copy the jar packages between nodes:

scp -r [jar package path on this server] root@[ECS instance private IP]:[jar package path on the receiving server]
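When the cluster has several nodes, step ③ can be repeated in a loop. This minimal shell sketch runs the same `scp -r` command for each private IP you pass, or only prints the commands when `DRY_RUN=1` is set. The function name, the `DRY_RUN` switch, and the example paths and IPs are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: distribute_jars SRC_DIR DEST_DIR PRIVATE_IP...
# Copies SRC_DIR to DEST_DIR on each node with scp -r, as root.
# With DRY_RUN=1, prints the scp commands instead of running them.
distribute_jars() {
  if [ "$#" -lt 3 ]; then
    echo "usage: distribute_jars SRC_DIR DEST_DIR PRIVATE_IP..." >&2
    return 1
  fi
  local src_dir="$1" dest_dir="$2" ip
  shift 2
  for ip in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "scp -r $src_dir root@$ip:$dest_dir"
    else
      # Stop at the first node that fails so the error is easy to spot.
      scp -r "$src_dir" "root@$ip:$dest_dir" || return 1
    fi
  done
}
```

For example, `DRY_RUN=1 distribute_jars /root/jars /root/jars 192.168.0.2 192.168.0.3` shows the two commands; run it again without `DRY_RUN` to copy the files.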

① Local mode: set the master parameter to local:

./bin/spark-submit \
  --master local \
  --jars ${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-jar-with-dependencies.jar,${project.dir}/spark-datasource-v2.3/libs/cupid-table-api-1.1.5-SNAPSHOT.jar,${project.dir}/spark-datasource-v2.3/libs/table-api-tunnel-impl-1.1.5-SNAPSHOT.jar \
  --class DataReaderTest \
  ${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-tests.jar \
  ${maxcompute-project-name} \
  ${aliyun-access-key-id} \
  ${aliyun-access-key-secret} \
  ${maxcompute-table-name}

② Yarn mode: set the master parameter to yarn, and in the code use the internal endpoint, whose host name ends with aliyun-inc.com:

Code: val ODPS_ENDPOINT = "http://service.cn-beijing.maxcompute.aliyun-inc.com/api"

./bin/spark-submit \
  --master yarn \
  --jars ${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-jar-with-dependencies.jar,${project.dir}/spark-datasource-v2.3/libs/cupid-table-api-1.1.5-SNAPSHOT.jar,${project.dir}/spark-datasource-v2.3/libs/table-api-tunnel-impl-1.1.5-SNAPSHOT.jar \
  --class DataReaderTest \
  ${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-tests.jar \
  ${maxcompute-project-name} \
  ${aliyun-access-key-id} \
  ${aliyun-access-key-secret} \
  ${maxcompute-table-name}

① Command

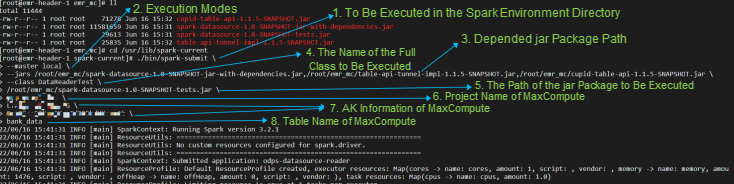

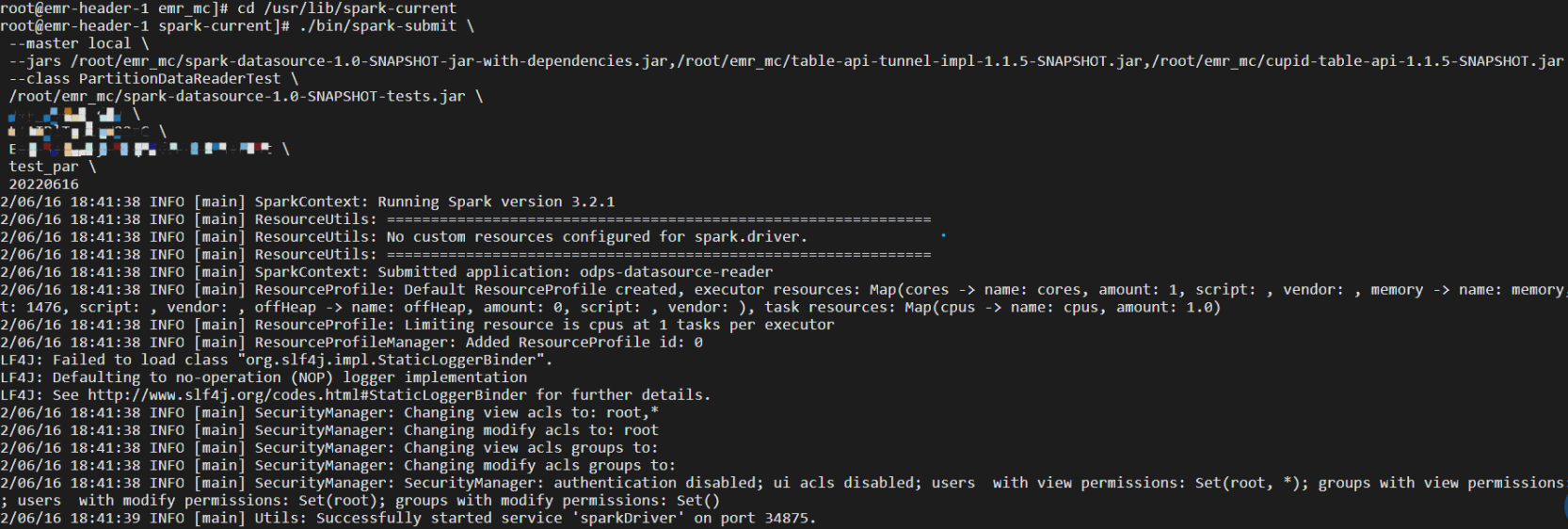

-- First, enter the spark execution environment.

cd /usr/lib/spark-current

-- Submit a task.

./bin/spark-submit \

--master local \

--jars ${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-jar-with-dependencies.jar,${project.dir}/spark-datasource-v2.3/libs/cupid-table-api-1.1.5-SNAPSHOT.jar,${project.dir}/spark-datasource-v2.3/libs/table-api-tunnel-impl-1.1.5-SNAPSHOT.jar \

--class DataReaderTest \

${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-tests.jar \

${maxcompute-project-name} \

${aliyun-access-key-id} \

${aliyun-access-key-secret} \

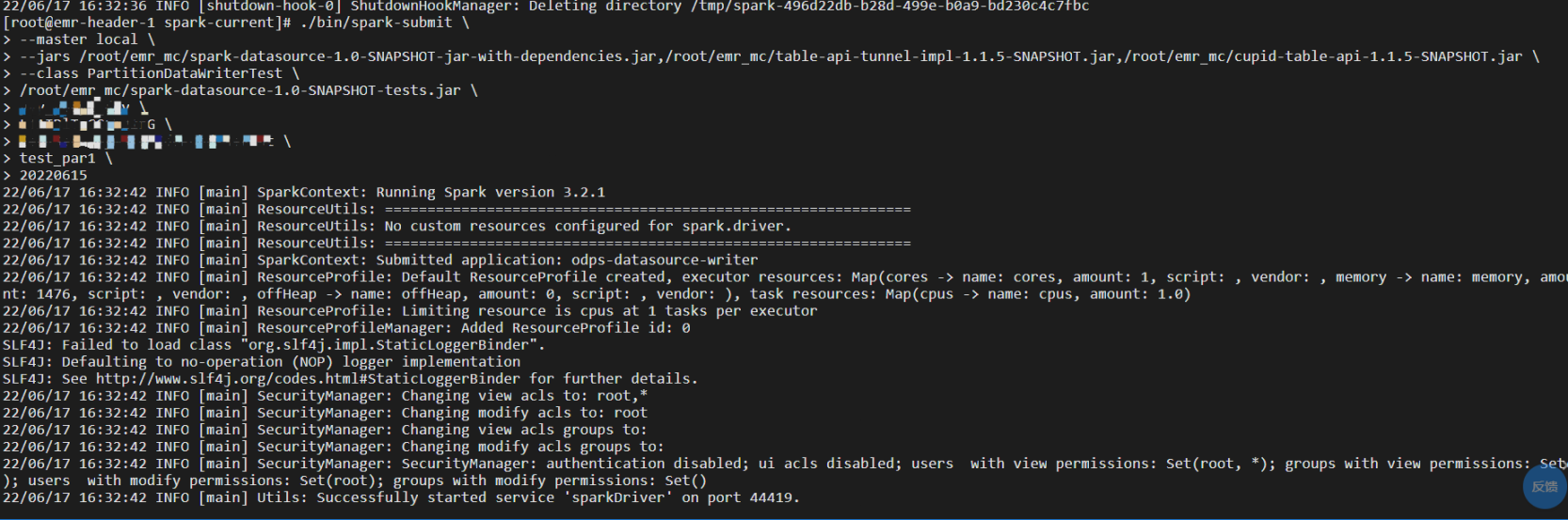

${maxcompute-table-name}② Execution Interface

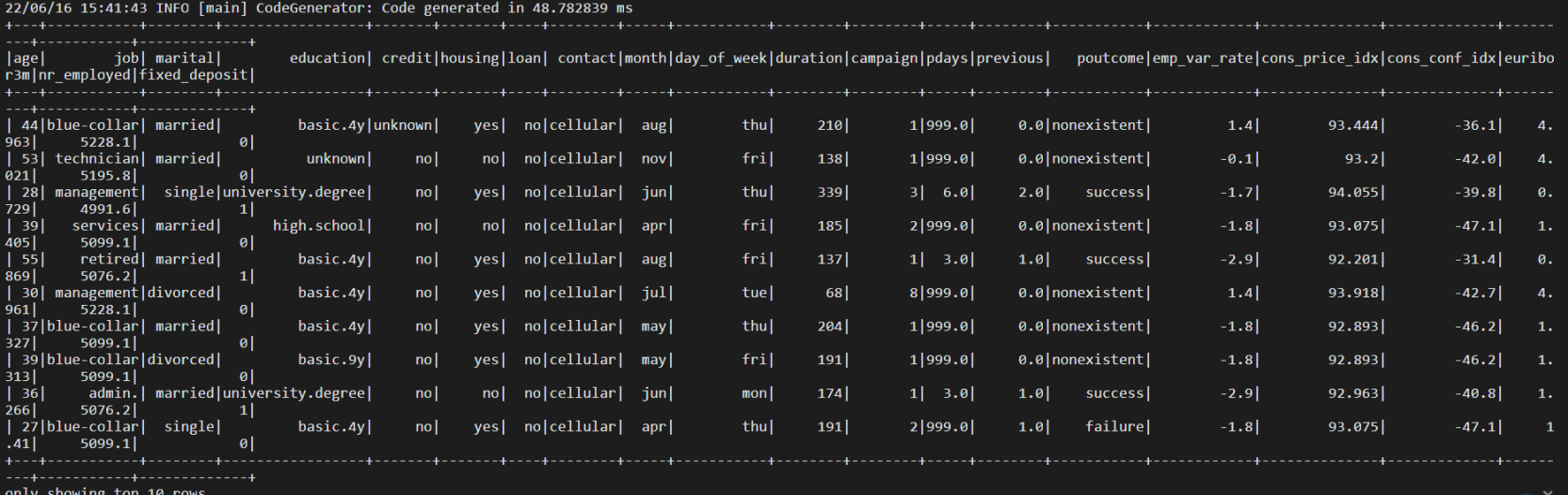

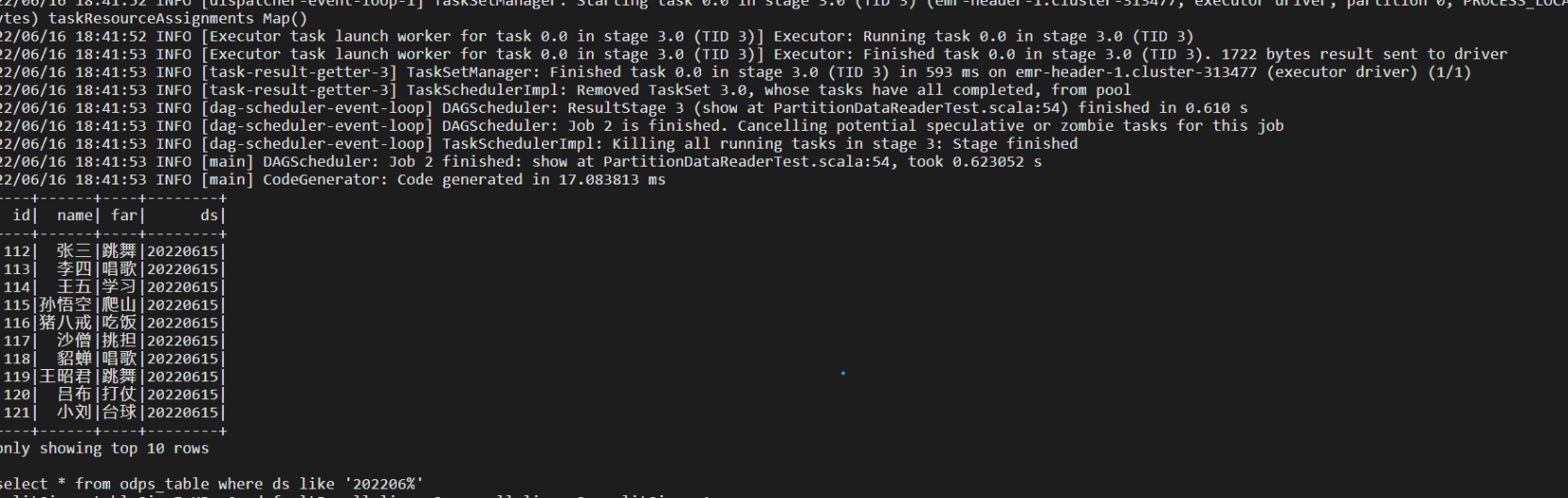

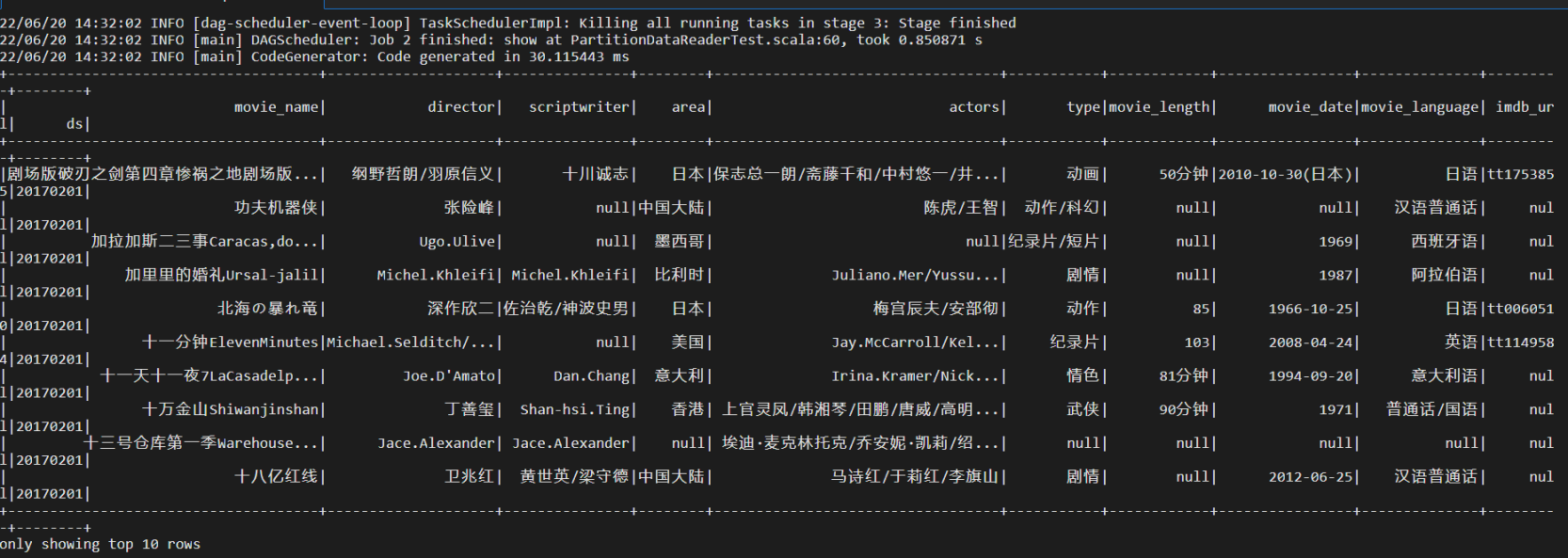

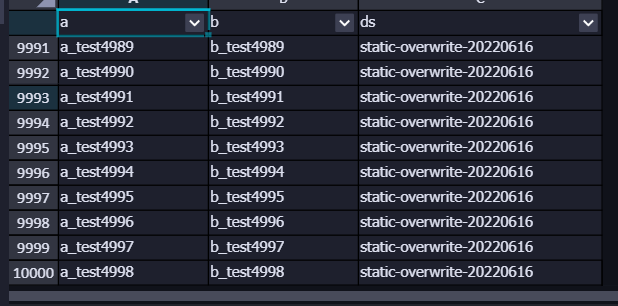

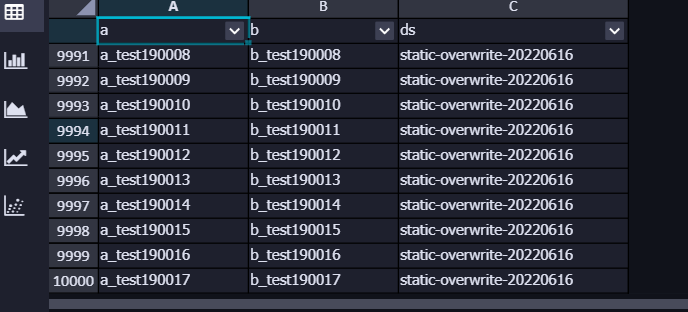

③ Execution Results

① Command

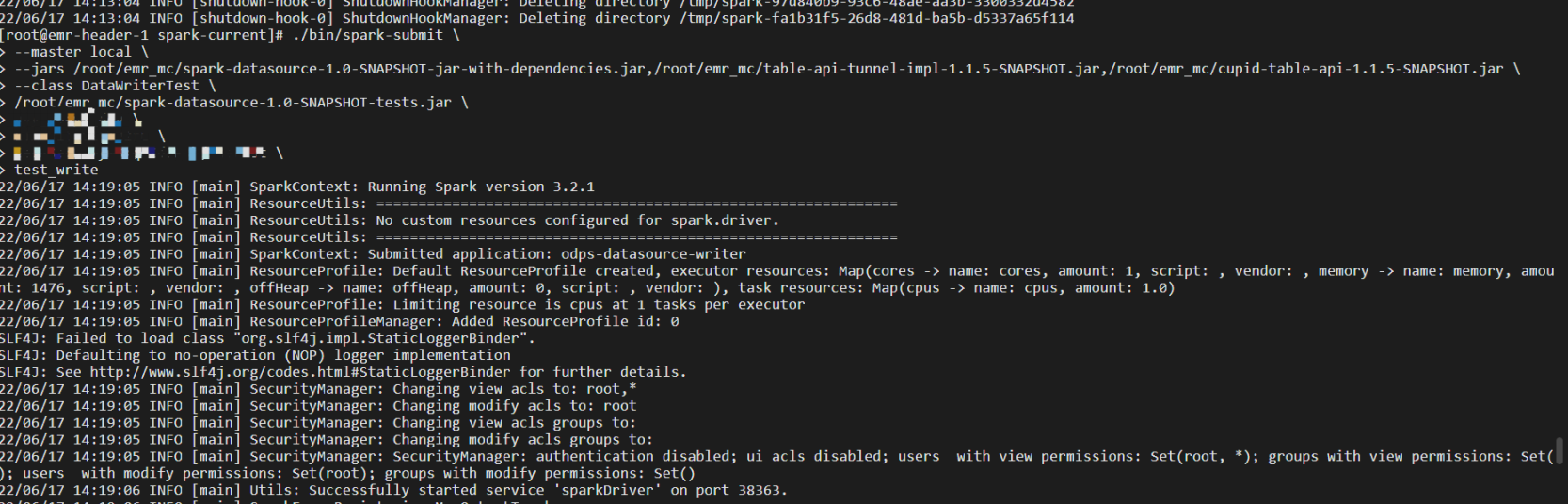

First, go to the Spark execution environment:

cd /usr/lib/spark-current

Then submit the task:

./bin/spark-submit \
  --master local \
  --jars ${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-jar-with-dependencies.jar,${project.dir}/spark-datasource-v2.3/libs/cupid-table-api-1.1.5-SNAPSHOT.jar,${project.dir}/spark-datasource-v2.3/libs/table-api-tunnel-impl-1.1.5-SNAPSHOT.jar \
  --class DataWriterTest \
  ${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-tests.jar \
  ${maxcompute-project-name} \
  ${aliyun-access-key-id} \
  ${aliyun-access-key-secret} \
  ${maxcompute-table-name} \
  ${partition-description}

② Execution Interface

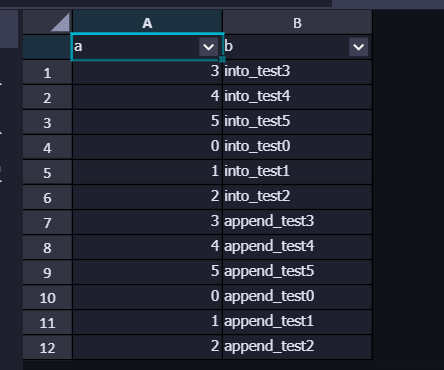

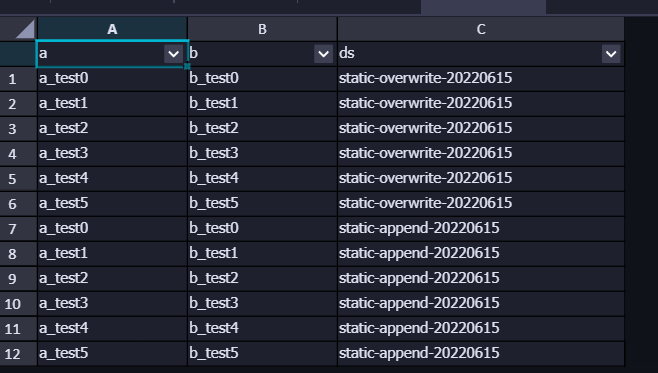

③ Execution Results

① Command

./bin/spark-submit \
  --master local \
  --jars ${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-jar-with-dependencies.jar,${project.dir}/spark-datasource-v2.3/libs/cupid-table-api-1.1.5-SNAPSHOT.jar,${project.dir}/spark-datasource-v2.3/libs/table-api-tunnel-impl-1.1.5-SNAPSHOT.jar \
  --class DataWriterTest \
  ${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-tests.jar \
  ${maxcompute-project-name} \
  ${aliyun-access-key-id} \
  ${aliyun-access-key-secret} \
  ${maxcompute-table-name}

② Execution Interface

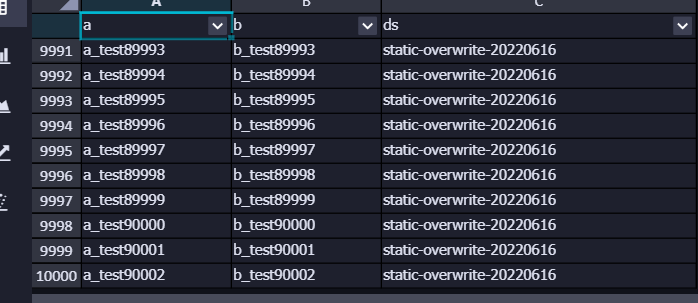

③ Execution Results

① Command

./bin/spark-submit \

--master local \

--jars ${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-jar-with-dependencies.jar,${project.dir}/spark-datasource-v2.3/libs/cupid-table-api-1.1.5-SNAPSHOT.jar,${project.dir}/spark-datasource-v2.3/libs/table-api-tunnel-impl-1.1.5-SNAPSHOT.jar \

--class DataWriterTest \

${project.dir}/spark-datasource-v3.1/target/spark-datasource-1.0-SNAPSHOT-tests.jar \

${maxcompute-project-name} \

${aliyun-access-key-id} \

${aliyun-access-key-secret} \

${maxcompute-table-name} \

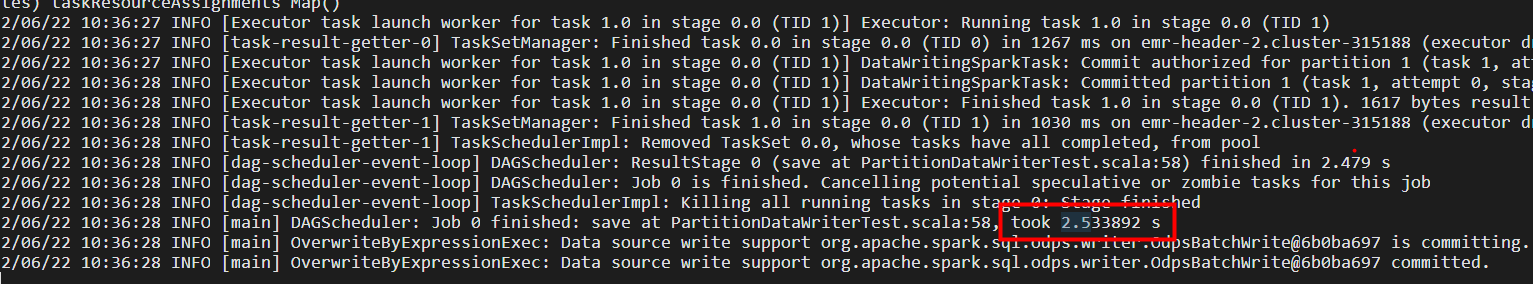

${partition-descripion}② Execution Interface

③ Execution Results
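The spark-submit commands above differ only in the --master value, the --class value, and the trailing arguments, while the three --jars entries and the application jar stay the same. A small wrapper can keep those shared paths in one place. This is a sketch under assumptions: PROJECT_DIR is a hypothetical shell variable standing in for ${project.dir}, the function name submit_test and the `DRY_RUN` switch are hypothetical, and it must be run from /usr/lib/spark-current because it calls ./bin/spark-submit, as the commands above do.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: submit_test MASTER CLASS [APP_ARGS...]
# Reuses the jar paths from the commands in this article. APP_ARGS are the
# project name, AccessKey ID, AccessKey secret, table name, and (for
# partitioned writes) the partition description.
submit_test() {
  if [ -z "${PROJECT_DIR:-}" ]; then
    echo "PROJECT_DIR is not set" >&2
    return 1
  fi
  if [ "$#" -lt 2 ]; then
    echo "usage: submit_test MASTER CLASS [APP_ARGS...]" >&2
    return 1
  fi
  local master="$1" main_class="$2"
  shift 2
  local target="$PROJECT_DIR/spark-datasource-v3.1/target"
  local libs="$PROJECT_DIR/spark-datasource-v2.3/libs"
  local jars="$target/spark-datasource-1.0-SNAPSHOT-jar-with-dependencies.jar,$libs/cupid-table-api-1.1.5-SNAPSHOT.jar,$libs/table-api-tunnel-impl-1.1.5-SNAPSHOT.jar"
  local app_jar="$target/spark-datasource-1.0-SNAPSHOT-tests.jar"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    # Print instead of running; this also prints the AccessKey arguments.
    echo ./bin/spark-submit --master "$master" --jars "$jars" --class "$main_class" "$app_jar" "$@"
  else
    ./bin/spark-submit --master "$master" --jars "$jars" --class "$main_class" "$app_jar" "$@"
  fi
}
```

For example, `DRY_RUN=1 submit_test yarn DataReaderTest my_project my_id my_secret my_table` prints the Yarn-mode read command with placeholder values. Avoid dry runs with a real AccessKey secret, because the printed command contains it.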

The test environment consists of EMR and MaxCompute (MC), both running in the cloud. If an IDC network connects to the cloud, the results also depend on the tunnel resources or the bandwidth of the leased line.

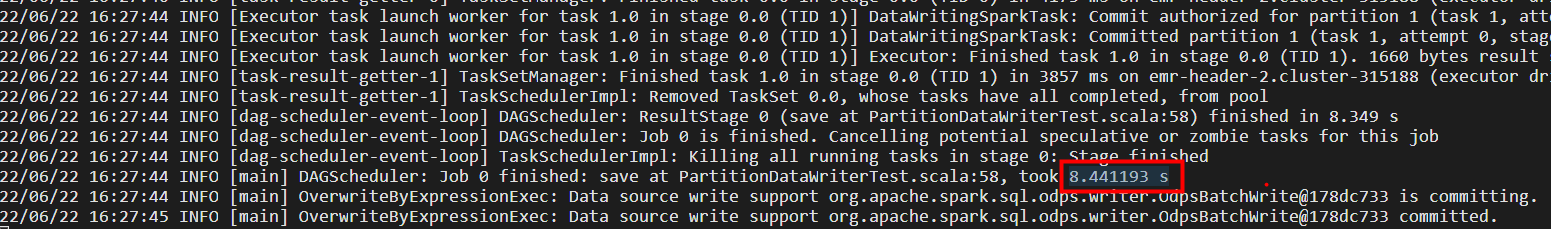

① Write tens of thousands of data in a partition

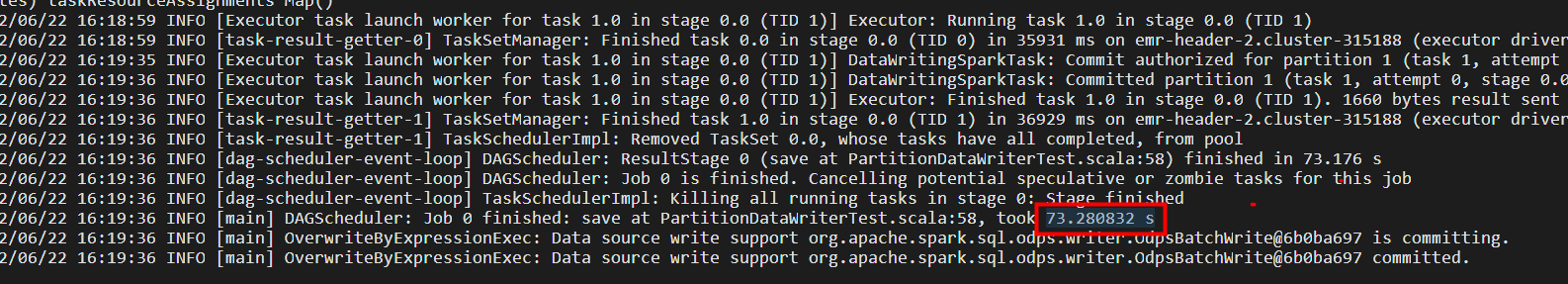

② Write 100,000 pieces of data in a partition

③ Write millions of pieces of data in a partition
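To compare the three write tests, one option is to time each spark-submit run (for example, with the shell's `time` keyword) and convert the record count and elapsed seconds into records per second. A minimal sketch of that arithmetic; the helper name throughput is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: throughput RECORDS SECONDS
# Prints records per second with one decimal place; fails if SECONDS <= 0.
throughput() {
  if [ "$#" -ne 2 ]; then
    echo "usage: throughput RECORDS SECONDS" >&2
    return 1
  fi
  awk -v n="$1" -v s="$2" 'BEGIN { if (s <= 0) exit 1; printf "%.1f\n", n / s }'
}
```

With illustrative numbers, 100,000 records written in 50 seconds gives `throughput 100000 50`, which prints `2000.0`.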
