This article is compiled from a speech given by Xiong Jiashu (Data Lake Formation and Analysis Developer) at the Alibaba Cloud Data Lake Technology Special Exchange Meeting on July 17, 2022. The article is divided into four parts.

An open-source big data system refers to a Hadoop-centric ecosystem. Currently, Hive is the de facto standard for open-source data warehouses.

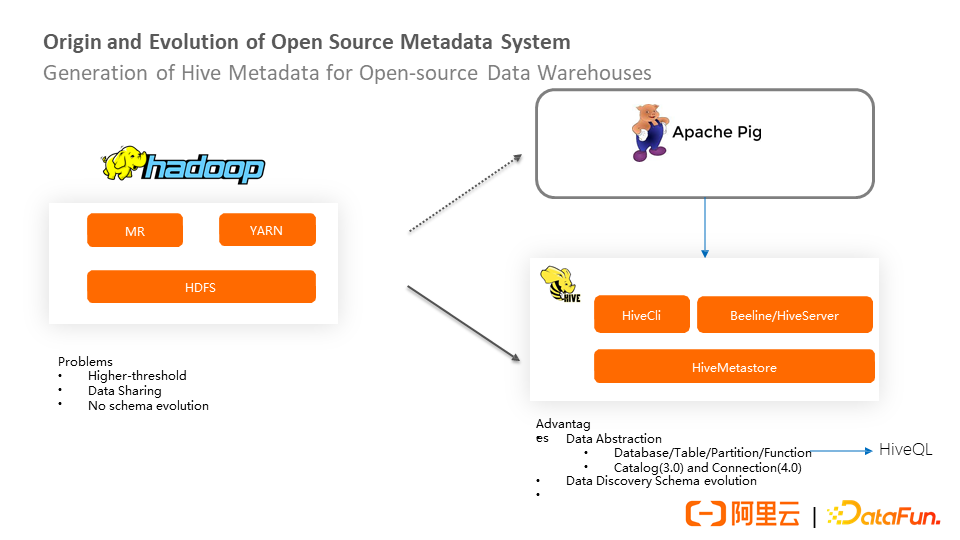

The origin and development of big data can be traced back to the papers Google published in 2003 and 2004, which described the Google File System (GFS) and MapReduce. HDFS and Hadoop MapReduce are their open-source implementations. HDFS was first used to solve the problem of storing web pages. It can be deployed on a large number of cheap machines and provides excellent hardware fault tolerance and storage scalability. MapReduce was originally designed to parallelize the processing of large-scale web page data in search engines. It is a general-purpose parallel processing framework that can handle many big data scenarios.

Although HDFS and MapReduce solve the problems of big data storage and large-scale data parallel processing, they still have several shortcomings.

First, programming is difficult. Traditional data warehouse engineers generally work with SQL, but the MapReduce framework requires real programming skills.

Second, HDFS stores only files and directories. It does not store metadata, and the data cannot be queried. Therefore, even if you know the structure of a file, you cannot manage changes to its content, which may make programs unstable.

To address these pain points, companies represented by Yahoo proposed Apache Pig, which provides a SQL-like language. Its compiler converts SQL-like data analysis requests into a series of optimized MapReduce operations, which hides MapReduce details and makes data processing easy and efficient. Companies represented by Facebook proposed Hive, which provides a layer of data abstraction on top of HDFS. It hides the details of the file system and the programming model, which lowers the barrier for data warehouse developers. By abstracting data and the file system into metadata, you can easily share and retrieve data and view its change history. This layer of metadata abstraction is what we now know as Database, Table, Partition, and Function. HiveQL, a language close to SQL, is built on top of this metadata. In addition, the catalog feature was added in Hive 3.0, and the Connectors feature was added in Hive 4.0. By relying on metadata, computing engines can trace history well. In addition to tracing historical schema changes, metadata has the important function of protecting data by preventing incompatible changes.

The catalog feature implemented in Hive 3.0 is based on the following two scenarios:

① Multiple systems may each need their own namespace for Metastore metadata, with a certain degree of isolation between them. For example, Spark has its own catalog, and Hive has its own catalog. Different catalogs can have different authentication and operation policies.

② A catalog can be connected to external systems.

Connectors in Hive 4.0 are used to define data sources. After other engines connect to Hive metadata, they can use this interface to identify data source information, which makes it easier for them to query the data.

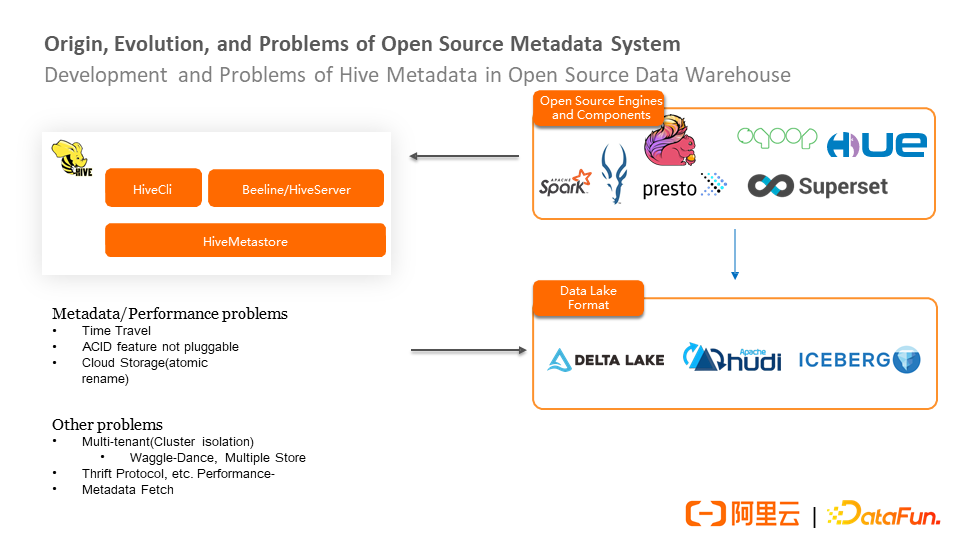

Currently, Hive is supported by almost all computing engines in the Hadoop ecosystem. Therefore, Hive has become the de facto standard for open-source data warehouses. However, it has some problems. For example, although it supports schema evolution, it cannot query historical snapshots of data changes through time travel. Although it has added ACID (atomicity, consistency, isolation, and durability) features, they cannot be ported to other engines because they are deeply bound to the Hive engine.

In addition, the ACID features mainly use rename operations for isolation, which is prone to problems in file compaction and storage-compute separation scenarios, such as the poor performance of rename on cloud storage. The open-source community has developed several data lake formats to address these points, and they solve the ACID problem to varying degrees.

A data lake format has its own metadata and registers that metadata with Hive Metastore. The metadata in the data lake format complements the Hive metadata and does not depart from the overall Hive metadata view. Other engines can still access data in the data lake format through the Hive Metastore metadata management system.

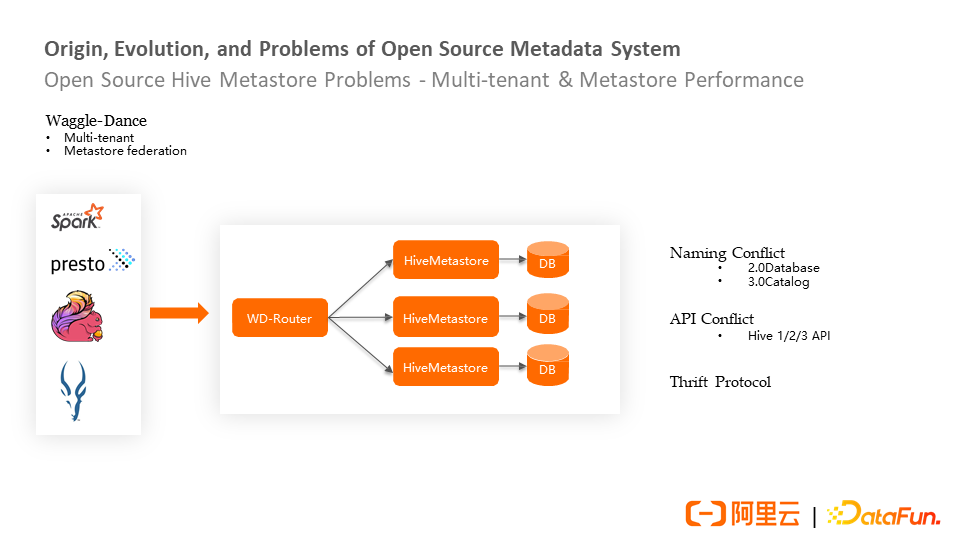

However, Hive Metastore does not support multi-tenancy and is limited by the capacity of a single metadata database. Some companies deploy multiple sets of Metastore, which easily creates data silos. Therefore, some companies have proposed solutions such as Waggle-Dance and Multiple Hive Metastore. In addition, since Hive Metastore uses the Thrift protocol, any engine that connects to it, including in-house engines, must implement the Metastore protocol and depend on the corresponding client. This dependency is relatively heavy.

In addition, the Metastore storage schema is highly normalized to remove redundancy, which causes performance problems when fetching metadata.

In the open-source Waggle-Dance solution, the backend consists of multiple Metastores, each with its own database storage. The Waggle-Dance module implements the complete Hive Metastore Thrift protocol, so it can connect seamlessly to other engines and relieve the existing Metastore bottlenecks (such as the storage capacity of a single database and the performance of a single Metastore).

However, Waggle-Dance has to deal with naming conflicts in multi-tenant scenarios. You need to plan database and Metastore naming in advance because the Waggle-Dance routing module maintains a routing table from databases to backend Metastores. With Hive 3.0, this can be solved by mapping routes at the catalog layer.

In addition, Waggle-Dance may run into Thrift interface protocol conflicts. For example, the backend may contain multiple versions of Hive Metastore, or clients may use different versions. The routing component in the middle layer then needs to be compatible with all of them, which may cause protocol compatibility issues.
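The routing-table constraint can be illustrated with a minimal sketch. The `DatabaseRouter` class, the Metastore names, and the prefix scheme are hypothetical and are not the Waggle-Dance API. The sketch only shows why two backend Metastores cannot expose the same database name unless the names are planned or prefixed.

```python
from typing import Dict, Optional


class DatabaseRouter:
    """Hypothetical database-to-Metastore routing table (illustration only)."""

    def __init__(self) -> None:
        # Maps the database name seen by engines to the backend Metastore.
        self._routes: Dict[str, str] = {}

    def register(self, metastore: str, database: str, prefix: str = "") -> str:
        """Expose `database` from `metastore`, optionally under a prefix."""
        exposed = f"{prefix}{database}"
        owner = self._routes.get(exposed)
        if owner is not None and owner != metastore:
            raise ValueError(
                f"database name conflict: {exposed!r} already routes to {owner!r}"
            )
        self._routes[exposed] = metastore
        return exposed

    def resolve(self, database: str) -> Optional[str]:
        """Return the backend Metastore that serves `database`, if any."""
        return self._routes.get(database)


router = DatabaseRouter()
router.register("metastore_a", "sales")

# A second Metastore with the same database name collides in the routing table.
try:
    router.register("metastore_b", "sales")
    conflict_detected = False
except ValueError:
    conflict_detected = True

# Planning names up front (here, with a per-Metastore prefix) avoids the collision.
exposed_name = router.register("metastore_b", "sales", prefix="b_")
```

With a catalog layer, the catalog name could play the role of the prefix, so database names would only need to be unique within one catalog.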

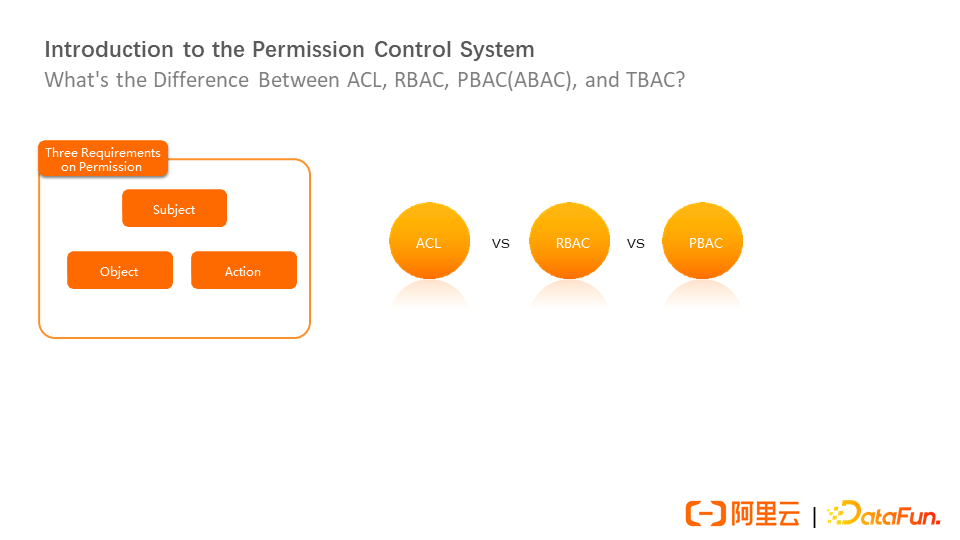

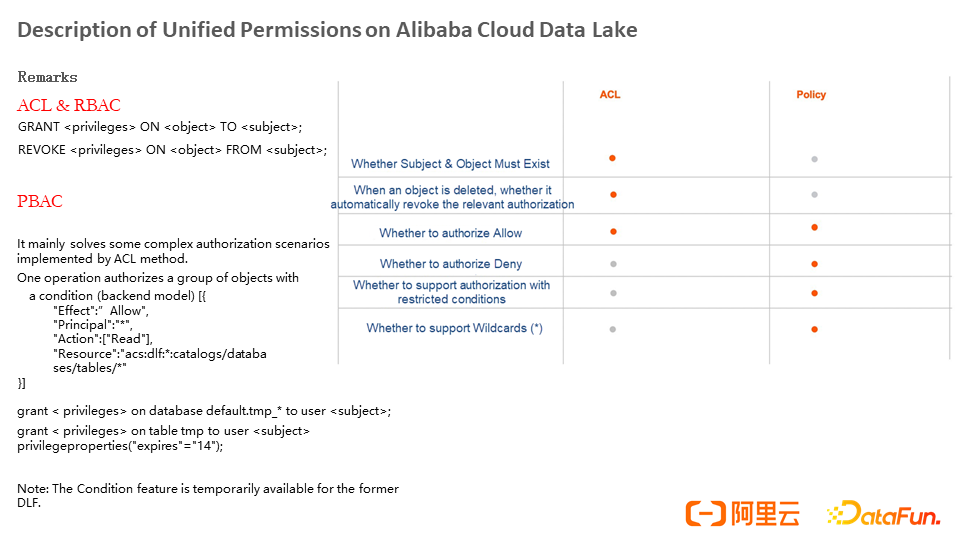

In addition to metadata, the security of metadata and data access is important. Enterprise data security depends on a complete permission system. The three basic elements of a permission are subject, object, and operation. An access control list (ACL) is built directly on these three elements. In metadata terms, it answers the question: what operations can a person perform on a table? ACL has certain defects. For example, as the number of permissions grows, they become difficult to manage, so role-based access control (RBAC) and policy-based access control (PBAC) were developed.

Let's take a hotel stay as an example to explain the difference between ACL, RBAC, and PBAC. After checking in, a guest gets a key that grants access to a specific room, which is equivalent to ACL. Each floor has a floor administrator who can manage all rooms on that floor, which is equivalent to RBAC: on top of ACL, a mapping between user roles and permissions is added, and roles reduce the cost of permission management. However, if there are special requirements (such as allowing the administrator of a specific floor to enter rooms only after 7 a.m.), PBAC is required. It is a policy-based model that adds conditions on top of the general permission model.
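The hotel analogy can be written down as a small sketch. All names here (`guest_li`, `admin_wang`, `floor3_admin`, the room identifiers) are made up for illustration, and the three functions are a simplified model of the three approaches, not any product's API.

```python
from datetime import time

# ACL: direct (subject, object, operation) grants, like a room key.
ACL = {("guest_li", "room_301", "enter")}

# RBAC: subjects map to roles, and roles map to (object, operation) grants.
USER_ROLES = {"admin_wang": {"floor3_admin"}}
ROLE_GRANTS = {"floor3_admin": {("room_301", "enter"), ("room_302", "enter")}}

# PBAC: a condition attached on top of the role grant.
EARLIEST_ENTRY = time(7, 0)


def acl_allows(subject: str, obj: str, operation: str) -> bool:
    return (subject, obj, operation) in ACL


def rbac_allows(subject: str, obj: str, operation: str) -> bool:
    roles = USER_ROLES.get(subject, set())
    return any((obj, operation) in ROLE_GRANTS.get(role, set()) for role in roles)


def pbac_allows(subject: str, obj: str, operation: str, now: time) -> bool:
    # The role grant must hold and the time condition must be satisfied.
    return rbac_allows(subject, obj, operation) and now >= EARLIEST_ENTRY
```

For example, `pbac_allows("admin_wang", "room_302", "enter", time(6, 30))` is rejected by the time condition even though the role grant alone would allow it.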

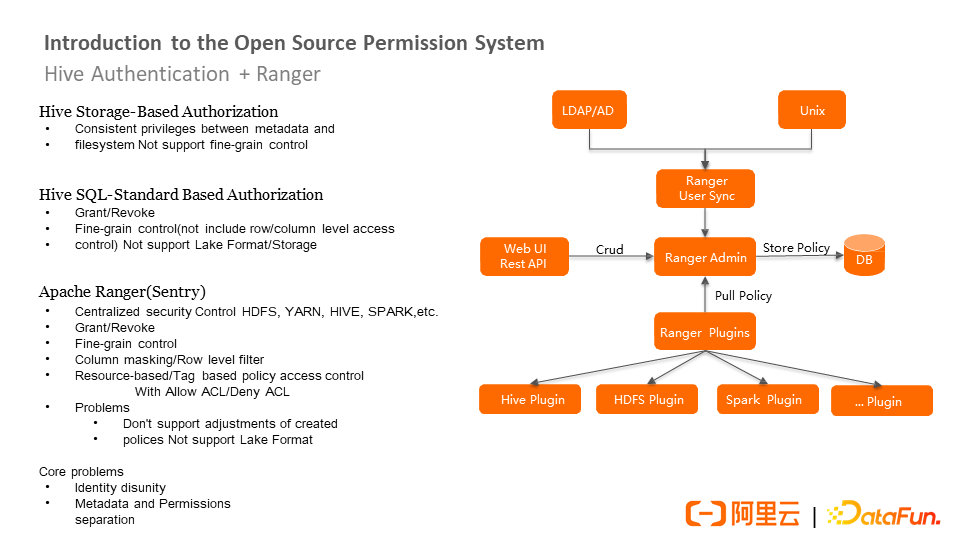

Hive Storage-Based Authorization was introduced in Hive 0.10 and relies on the permissions of the underlying storage. The advantage of this approach is that you get a unified permission view for both metadata operations and operations on the underlying files. However, it is a permission system based on directories and files, so it does not map well to SQL-level operations. Since permissions are granted on files, it cannot authorize at the data level or field level, so it does not support fine-grained control.

To address these problems, the community put forward Hive SQL-Standard Based Authorization, a standardized SQL-based authorization model that supports GRANT and REVOKE statements at the SQL level. It enables relatively fine-grained permission control. For example, many SQL operations can be mapped to the permission model. This model implements column-level access control but not row-level permissions.

In addition, although Hive SQL-Standard Based Authorization has SQL-level permissions, it does not cover the permissions of the storage system. Therefore, you cannot obtain a unified permission view across storage access and SQL operations. This model also does not support data lake formats.

Later, a commercial company launched Apache Ranger (Apache Sentry is a similar project), which provides a centralized permission control solution for big data open-source ecosystem components (such as YARN, Hive, Spark, and Kafka) and the underlying file system. It supports GRANT and REVOKE at the SQL level and adds finer-grained permissions, such as row-level filtering and column-level permission control, as well as capabilities such as exception policies.

However, Apache Ranger still has room for improvement. Since the permissions of the PBAC model are defined in advance, they do not depend on the existence of objects. For example, if a user has the SELECT permission on a table and the table is deleted and then recreated, the user still has the permission, which is unreasonable. In addition, although Ranger can be used with many big data components, for Hive it is limited to HiveServer, and there is no permission control on Hive Metastore. The underlying problem is that the metadata access interface provided by Hive Metastore is separated from the permission interface.

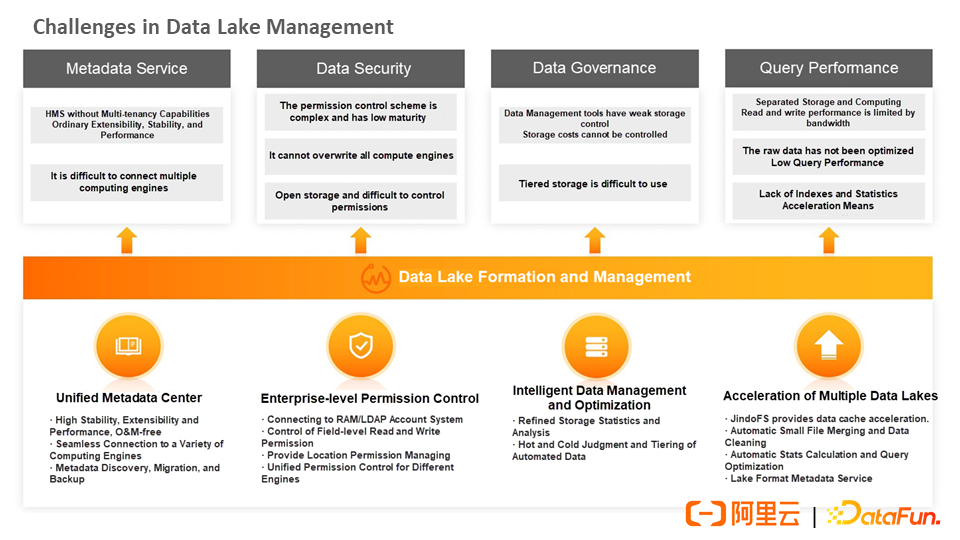

In summary, the challenges faced by data lake management include the following aspects:

At the metadata service level, the open-source versions lack multi-tenant capabilities. Scalability, stability, and performance need improvement, and integrating more computing engines is difficult.

At the data security level, permission control schemes are complex and immature, and they cannot cover all computing engines. Because storage is open, it is difficult to control permissions.

At the data governance level, the open-source community currently does not have good data management tools. It is not possible to analyze existing storage costs based on metadata or to manage the data lifecycle well. Although Hive 4.0 has added some features (such as automatic partition discovery and partition lifecycle management), there is still no good overall governance method.

At the query performance level, the read and write performance of the storage-compute separation architecture is limited by bandwidth. The raw data is not optimized, so query performance is low. Acceleration methods (such as indexes and statistics) are also lacking.

Alibaba Cloud has implemented a set of metadata, security, governance, and acceleration solutions for the data lake field to address the preceding issues and challenges.

The unified metadata center is derived from Alibaba Cloud's proven metadata database and connects seamlessly with a variety of open-source computing engines and in-house engines. It relies on Alibaba Cloud Data Lake Formation (DLF) to provide metadata discovery, migration, and backup capabilities.

In terms of data security, the Alibaba Cloud account system is supported, and the open-source LDAP account system is also compatible. It provides field-level read and write permission control and location permission hosting. Engines and applications can also connect to the metadata API and permission API to implement their own permission or metadata integration, so that all engines and applications are under unified permission control.

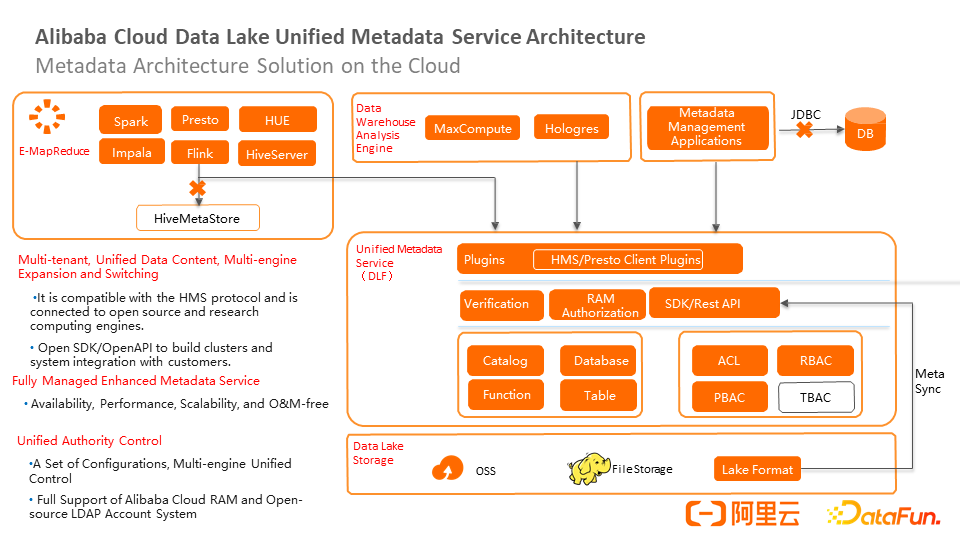

Alibaba Cloud Data Lake Unified Metadata Service provides a multi-tenant, unified data directory and supports the expansion and switchover of multiple engines. It is compatible with the Hive 2.X and Hive 3.X protocols and can seamlessly connect to all open-source and in-house computing engines. At the same time, all APIs, SDKs, and clients that connect to open-source engines have been open-sourced by Alibaba Cloud to support customer-developed engines, applications, and system integration.

In addition, the service relies on Tablestore as its underlying storage. Tablestore is built to serve large volumes of structured data and has excellent auto scaling capabilities, so you do not need to worry about expansion. It also provides high availability and is O&M-free.

In addition, it provides unified permission control. By configuring permissions, users can implement unified permission control across multiple engines, management platforms, and applications, whether they use EMR engines, Alibaba Cloud in-house engines, or data development platforms (such as DataWorks). It supports the Alibaba Cloud RAM and open-source LDAP account systems.

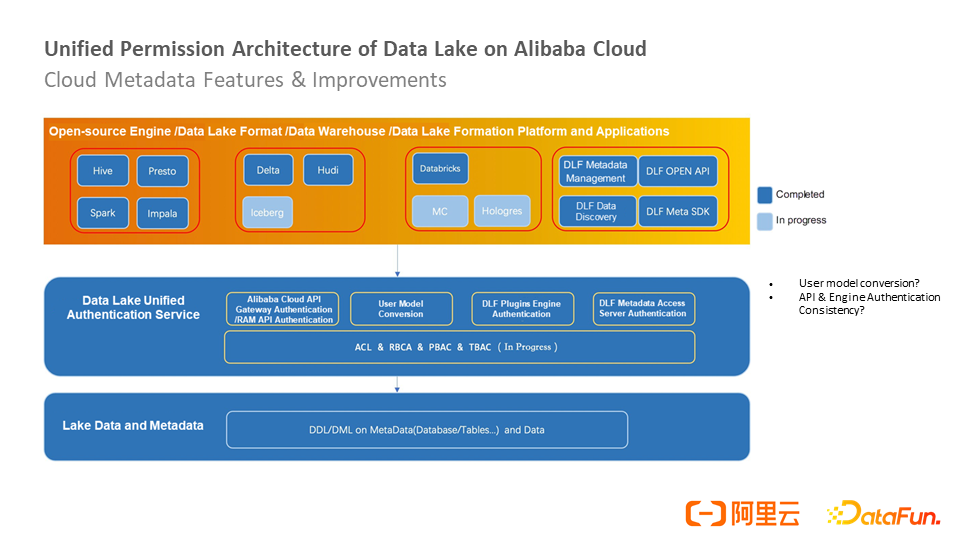

The bottom layer of the unified metadata service is data lake storage, which currently supports Object Storage and file storage. The data service layer provides two basic services. The first is the catalog service, which manages metadata, including Catalog, Database, Function, and Table. The second is the permission service, which includes ACL, RBAC, and PBAC. TBAC is being planned (mainly for the resource package).

In addition, the upper layer connects to the Alibaba Cloud gateway authentication system, supports RAM sub-account authentication and permission policies, implements the underlying metadata API and permission API, and provides the SDK plug-in system. Some cloud vendors retain all components and package them inside Hive Metastore to implement seamless connection with open source. After changing the Hive Metastore, other engines can automatically connect to the Hive Metastore. However, Alibaba Cloud did not choose the Hive Metastore solution because of the following considerations:

Retaining Hive Metastore increases cost. All requests need to be forwarded, which causes performance loss. The stability of Hive Metastore affects the stability of the overall metadata service. As traffic from user clusters increases, the Metastore also needs to be scaled out. Therefore, Alibaba Cloud chose to implement the client interface in a lightweight SDK and embed it directly into the engine to connect open-source engines to DLF services.

MaxCompute, Hologres, and the fully managed OLAP engines developed by Alibaba Cloud are also fully connected to DLF. In addition, users' metadata management applications or development platforms can connect to the API.

Many users' internal metadata management applications connect to the underlying MySQL database through JDBC, which can be problematic. For example, when the underlying metadata schema is upgraded, the application has to change as well. Moreover, querying the underlying data directly through JDBC bypasses permission control entirely. If you connect to the DLF API instead, every request passes through the permission control system, whether it comes from API access or EMR access, so permissions cannot be bypassed.

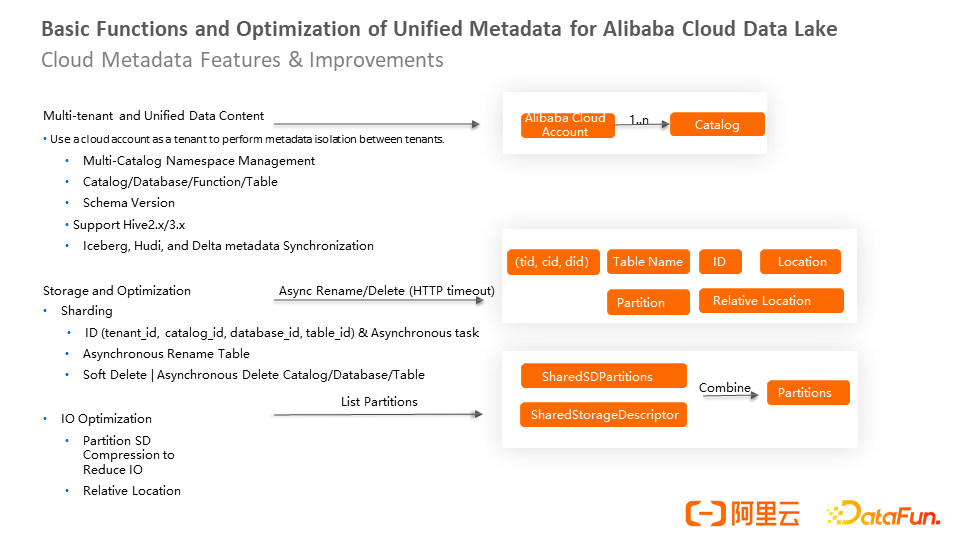

Alibaba Cloud provides multi-tenancy and a unified data directory, with tenants isolated by Alibaba Cloud account. You can create multiple catalogs under an Alibaba Cloud account. Multiple versions of each table schema are kept, so you can query how a table schema evolved based on version data. Alibaba Cloud's internal engines support DLF, and the Iceberg, Hudi, and Delta data lake formats are supported.

In addition, catalogs are partitioned by tenant metadata so that users' data does not land on the same hotspot partition in backend storage. We have also implemented ID-based identification of metadata objects, which facilitates subsequent optimizations (such as soft deletion and asynchronous deletion). Many requests are also processed asynchronously.

I/O has been partially optimized. For example, when you list partitions, the metadata of all partitions of the same table is returned. However, the storage descriptor (SD) of the partition metadata is the same in most scenarios. Partitions with the same SD can therefore share it, and this partition SD compression reduces network I/O.
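The idea of sharing identical SDs across partitions can be sketched as follows. This is an illustration under assumptions, not the DLF wire format: the dictionary layout, the `sd_ref` field, and the choice to keep the per-partition `location` outside the shared SD are all hypothetical.

```python
import json
from typing import Any, Dict, List


def compress_partitions(partitions: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Store each distinct SD once and let partitions reference it by index."""
    shared_sds: List[Dict[str, Any]] = []
    sd_index: Dict[str, int] = {}
    compact: List[Dict[str, Any]] = []
    for part in partitions:
        sd = dict(part["sd"])
        # Assumption: the location differs per partition, so keep it separate.
        location = sd.pop("location", None)
        key = json.dumps(sd, sort_keys=True)
        if key not in sd_index:
            sd_index[key] = len(shared_sds)
            shared_sds.append(sd)
        compact.append(
            {"values": part["values"], "location": location, "sd_ref": sd_index[key]}
        )
    return {"shared_sds": shared_sds, "partitions": compact}


def decompress_partitions(payload: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Rebuild the full per-partition SDs on the client side."""
    result: List[Dict[str, Any]] = []
    for part in payload["partitions"]:
        sd = dict(payload["shared_sds"][part["sd_ref"]])
        if part["location"] is not None:
            sd["location"] = part["location"]
        result.append({"values": part["values"], "sd": sd})
    return result
```

For a table with thousands of partitions that share the same columns and file format, the response carries one SD plus a small reference per partition instead of thousands of near-identical SDs.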

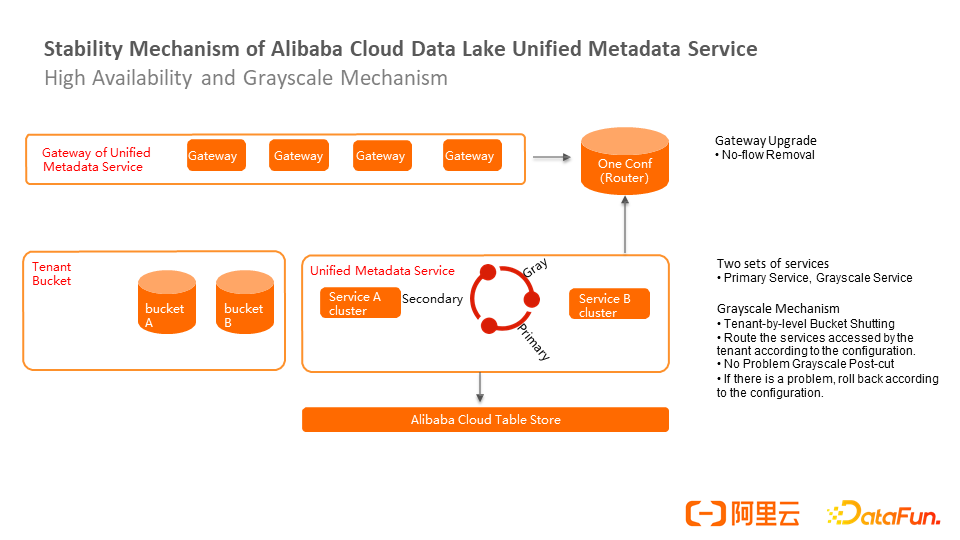

Stability is another key issue. The service on the cloud serves all tenants. Therefore, the upgrade, canary release, and automatic recovery capabilities of the metadata service are important. Automatic recovery and auto scaling are standard on the cloud and are implemented based on a resource scheduling system.

The underlying layer of the stability mechanism is Alibaba Cloud Tablestore, and the upper layer is the metadata service. The metadata service has two resident clusters (A and B) in a primary/secondary relationship. During an upgrade, the secondary service is put into the canary state. The frontend gateway splits traffic based on the service configuration policy and the tenant bucketing policy and sends the selected traffic to the canary service to check whether it behaves normally. If it does, the upgrade is rolled out to the primary service. If a problem occurs, the traffic is rolled back based on the configuration.

The upgrade is completely transparent to users and engines. When a service needs to go offline, it is not taken offline until the traffic of the primary service drops to zero. This layer depends on the gateway's own metadata, so it can be implemented in a lightweight way. For example, a service can be taken offline by cutting off its heartbeat.
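A tenant bucketing policy of this kind can be sketched in a few lines. The bucket count, the CRC32 hash, and the `canary_percent` setting are assumptions made for the example. The article does not describe how the gateway actually computes buckets.

```python
import zlib

NUM_BUCKETS = 100  # assumed bucket count, so one bucket is about 1% of tenants


def tenant_bucket(tenant_id: str) -> int:
    """Map a tenant to a stable bucket so it always hits the same cluster."""
    return zlib.crc32(tenant_id.encode("utf-8")) % NUM_BUCKETS


def route(tenant_id: str, canary_percent: int) -> str:
    """Send tenants in the first `canary_percent` buckets to the canary cluster."""
    if tenant_bucket(tenant_id) < canary_percent:
        return "canary"
    return "primary"
```

Raising `canary_percent` step by step widens the canary traffic without moving tenants that are already on the canary cluster, and setting it back to 0 rolls all traffic back to the primary cluster.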

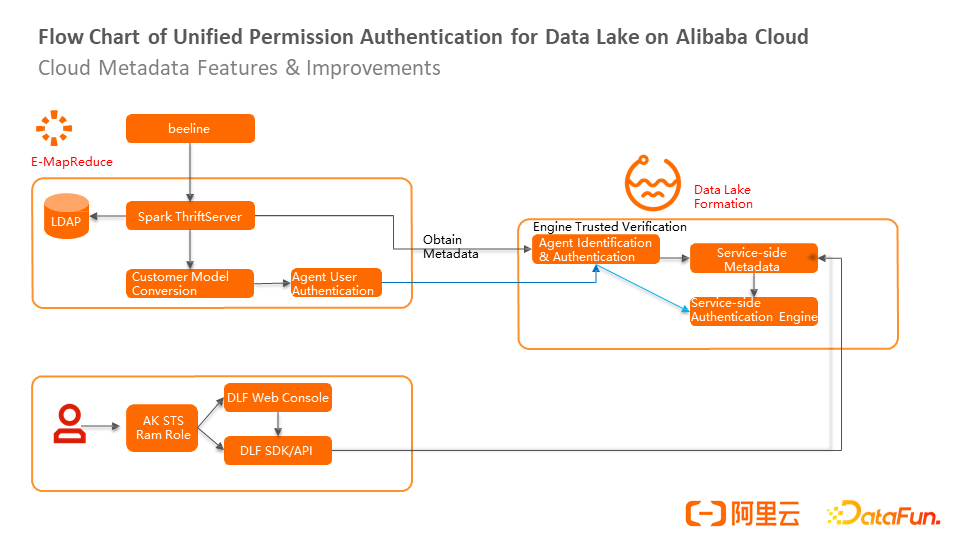

We must first solve two problems to implement the unified permission service for data lakes: identity issues and consistency of access permissions between API and engines.

The preceding figure shows the unified permission architecture of a data lake. The bottom layer is the data and metadata on the lake, and the upper layer is the unified authentication service for the data lake, including the gateway, user model conversion, engine authentication plug-in system, and DLF data access server authentication. The top layer is the implemented and ongoing data lake format, open-source engine, data warehouse, Data Lake Formation platform, and application.

Currently, we provide ACL, RBAC, and PBAC permission control methods. ACL and policies complement each other. ACL authorization depends on the subject and object of the authorization, so both must exist, and it supports whitelist authorization. Policies support both whitelists and blacklists.
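The way ACL grants and policies complement each other can be sketched as follows. The `Authorizer` class is hypothetical. Two behaviors in it are assumptions that the article does not state: a deny policy takes precedence over any allow, and ACL grants are removed when the table is dropped. Only object existence is modeled, not subject existence.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Policy:
    effect: str      # "allow" (whitelist) or "deny" (blacklist)
    principal: str
    table: str       # may name a table that does not exist yet
    action: str


class Authorizer:
    def __init__(self) -> None:
        self.tables: Set[str] = set()
        self.acl: Set[Tuple[str, str, str]] = set()
        self.policies: List[Policy] = []

    def create_table(self, table: str) -> None:
        self.tables.add(table)

    def drop_table(self, table: str) -> None:
        self.tables.discard(table)
        # Assumption: ACL grants are bound to the object and vanish with it.
        self.acl = {grant for grant in self.acl if grant[1] != table}

    def grant_acl(self, principal: str, table: str, action: str) -> None:
        # ACL is whitelist-only and requires the object to exist.
        if table not in self.tables:
            raise KeyError(f"table {table!r} does not exist")
        self.acl.add((principal, table, action))

    def add_policy(self, policy: Policy) -> None:
        self.policies.append(policy)

    def is_allowed(self, principal: str, table: str, action: str) -> bool:
        effects = {
            p.effect
            for p in self.policies
            if (p.principal, p.table, p.action) == (principal, table, action)
        }
        if "deny" in effects:   # assumption: blacklist wins
            return False
        if "allow" in effects:
            return True
        return (principal, table, action) in self.acl
```

In this model, a grant made through ACL does not survive dropping and recreating a table, while a policy can be written before the table exists and can also express an explicit deny.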

How can we implement unified authentication on the engine side and the API side?

If the user holds an AK or uses the management platform for external operations, the authentication engine is called when the server metadata is accessed through the DLF SDK/API.

For an open-source EMR cluster, you can use the beeline interface, pass LDAP authentication, and use Spark ThriftServer to obtain metadata. The engine holds a trusted identity. After the trusted identity is verified, the engine can obtain all metadata. The engine is then responsible for converting the user model: it obtains the user's identity and operation, authenticates on behalf of the user, and sends the corresponding authentication request to the server. The server runs the same authentication logic, so authentication is unified across the engine side and the API layer.

In the future, data lake metadata and permissions will continue to evolve in terms of unity, consistency, and performance.

(1) Unified Metadata, Permissions, and Ecosystem: The metadata directory and centralized permission management on the cloud will become standard. Engines, formats, ecosystem components, and development platforms will support metadata and permissions consistently. In addition, you will be able to customize metadata models for unstructured and Machine Learning scenarios. This way, other types of metadata can be connected to the unified metadata system in a custom manner.

(2) Metadata Acceleration: For example, a custom metadata API is introduced for the data lake format to provide performance-oriented solutions for the computing layer.

(3) Flexible Permission Control and Secure Data Access: Users can customize role permissions and operation permissions. In addition, data storage can be encrypted. All of this will be managed on the unified metadata service side.

Q: How can we solve the problem of metadata conflicts caused by decoupling connection tasks from business tasks?

A: The lake format generally provides an MVCC version control system. This problem can be solved through multi-version control and final commit.
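As a rough illustration of multi-version control with a final commit, the sketch below models a table as a list of immutable snapshots and accepts a commit only if it was prepared against the latest version. The `TableLog` class and file names are hypothetical and are not taken from Iceberg, Hudi, or Delta.

```python
import threading
from typing import FrozenSet, Iterable, List, Optional


class CommitConflict(Exception):
    """Raised when a commit was prepared against a stale version."""


class TableLog:
    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._snapshots: List[FrozenSet[str]] = [frozenset()]  # version 0 is empty

    def current_version(self) -> int:
        return len(self._snapshots) - 1

    def read(self, version: Optional[int] = None) -> FrozenSet[str]:
        """Readers see an immutable snapshot, so writers never block them."""
        return self._snapshots[self.current_version() if version is None else version]

    def commit(self, base_version: int, added_files: Iterable[str]) -> int:
        """Final commit: succeeds only if nobody committed since `base_version`."""
        with self._lock:
            if base_version != self.current_version():
                raise CommitConflict(f"version {base_version} is stale")
            self._snapshots.append(self._snapshots[-1] | frozenset(added_files))
            return self.current_version()


def commit_with_retry(table: TableLog, added_files: Iterable[str], attempts: int = 3) -> int:
    """Re-read the latest version and retry when another writer won the race."""
    files = list(added_files)
    for _ in range(attempts):
        try:
            return table.commit(table.current_version(), files)
        except CommitConflict:
            continue
    raise CommitConflict("gave up after retries")
```

Two tasks that write concurrently both prepare their files in advance. Only the commit step is serialized, and the loser retries against the new version instead of corrupting metadata.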

Q: Is there any practical experience in semi-structured and unstructured metadata management?

A: Semi-structured data mainly uses JSON or complex data structures to solve data access problems. Most of the unstructured data is related to Machine Learning scenarios. We plan to connect Machine Learning models to metadata later.

Q: What is the principle of Hive Warehouse Connector?

A: Hive Warehouse Connector is the definition of a layer of external data sources. The engine can use the connector to link external data sources.

Q: How do I enable Spark SQL to take advantage of Hive's permissions?

A: Currently, the open-source community has no solution. However, Hive and Spark SQL have the same authentication on Alibaba Cloud.

Q: Can the metadata of lakes and warehouses be viewed in one view?

A: Currently, this feature has not been developed on Alibaba Cloud, but it can be used on some data development platforms, such as DataWorks.

Q: Does the metadata service have a separate service instance for each tenant, or does everyone share the metadata service?

A: Multiple tenants share the metadata service (including the underlying storage, which uses the same Tablestore instance), but their data is stored on different Tablestore partitions. Within the same tenant, catalogs are used for namespace isolation. Each catalog can have its own permissions.

Q: How does DLF work with the data lake format? At which layer is the multi-version of data implemented?

A: The data lake format mostly synchronizes metadata to DLF metadata in the form of registration or meta synchronization. Multi-version data mainly depends on the capabilities of the underlying data lake format.

Q: If the calculation frequency of real-time metrics in Hologres is high, will it cause excessive pressure?

A: Hologres supports high-concurrency data service scenarios and data analysis scenarios. Row storage or column storage is used to solve problems in different scenarios.

Q: Why choose Structured Streaming instead of Flink for metadata consumption?

A: There is little difference in timeliness between the two. Structured Streaming was chosen mainly based on technology stack considerations.

Q: Are there benchmark metrics for metadata performance?

A: Benchmark metrics have not been published on the official website yet. Since storage scales well and computing is stateless, the overall QPS can theoretically scale out well. However, there is still an upper limit on the number of partitions in a single table.

Q: What factors trigger unfreezing in lifecycle management?

A: Currently, automatic triggering is not implemented. Only manual unfreezing is supported. An entry point is provided so that users can choose whether to unfreeze data before they use it.

