This article was compiled from a speech from Qingwei Yang (a Senior Development Engineer of Alibaba Cloud Open-Source Big Data) at the Alibaba Cloud Data Lake Technology Special Exchange Meeting on July 17, 2022. This article is mainly divided into two parts.

We have faced many challenges during the practice of data lakes.

First, data is difficult to identify and find. A large amount of unrecognized data exists in the data lake, which may be left over from history or unmanaged data. For example, data is written into the lake using file copy and upload or other tool engines. Secondly, there is a lack of effective retrieval services in traditional metadata services. When data grows to a certain scale, it is difficult to search and locate data in specific scenarios from a large number of metadata.

Second, data asset management capabilities are weak, and the lake lacks effective data asset analysis and optimization tools, making it difficult to grasp the data details at the database and table partition levels. It is difficult to implement a schema storage tiering solution and distinguish between hot and cold data. It is impossible to implement tiering in database and table partition dimensions.

Third, lake format optimization lacks a systematic solution. For example, the small file merge operation requires users to have an understanding of the lake format, can actively find unreasonable small files in some schemas, and use the computing engine to run small file merge tasks. There is a certain threshold. In addition, users are required to be able to identify invalid historical data and clean up.

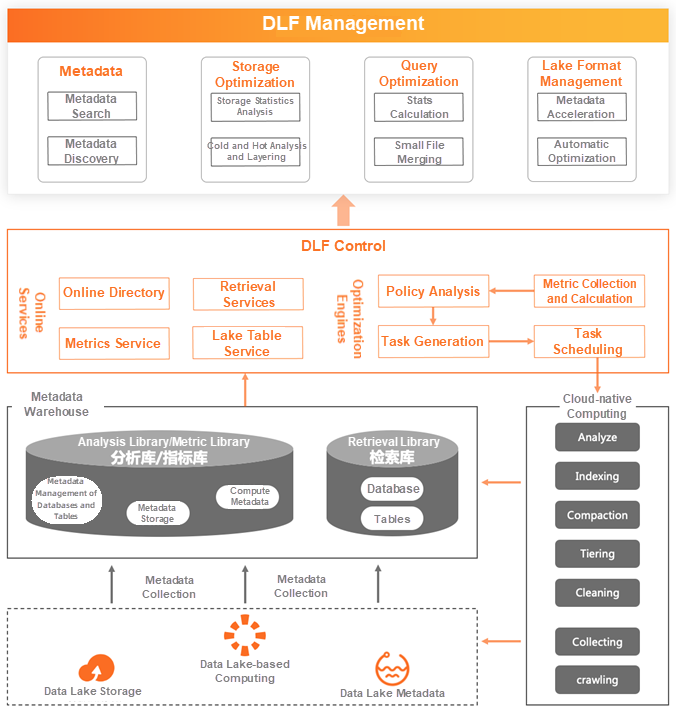

Alibaba Cloud proposes a fully managed lake management and optimized solution based on the massive computing capability of cloud-native resource pools and the online service capabilities of management and control to solve the challenges encountered in the process of data lake practice and integrate the characteristics of data on the lake and the characteristics of computing engines.

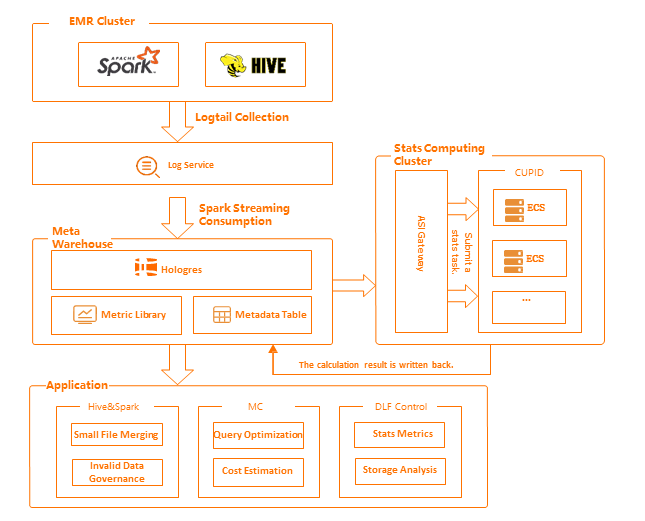

Meta-warehouses form the metadata on the lake and analyze and calculate data, providing reference indicators for lake management and optimization. Data distributed everywhere on the lake is integrated through the process of data collection, ETL, data analysis, calculation, etc. Meaningful indicators are extracted, and analysis libraries, indicator libraries, and index libraries are constructed to provide data support for upper-level applications.

The right side of the preceding figure shows the cloud-native computing pool. The Spark engine uses scalable resources on the cloud to run analysis and optimization tasks (such as Analyze, Indexing, Compaction, and Tiering) and writes the results back to the meta warehouse in real-time. It participates in the construction of the metric and index libraries, enriches and expands the metric assets of the meta warehouses (such as running stats tasks in the computing pool), obtains metrics (such as the number of table rows), and writes back to the meta warehouse metric library. You can view the basic information of the table for the first time and use this as a reference for data quality analysis and other operations.

The control layer provides online service capabilities (such as online content, retrieval service, and metric service). The optimization engine analyzes the metrics on the metadata warehouse, generates optimization tasks based on rules, and submits them to the cloud-native computing pool for computing. On top of this, many lake management optimization capabilities are extended.

(1) Metadata Capability: It solves the problem where data is difficult to identify and operate. For example, for metadata retrieval, you can quickly search for metadata and related schema details by establishing a retrieval library. Metadata discovery extracts metadata information through cloud-native pool computing and running tasks to identify unknown data in the lake.

(2) Storage Optimization Capability: It solves the pain point of weak data asset management capability. For example, for storage statistical analysis, you can use the metric library of the meta-warehouse to analyze databases, table partition-level data details, and hot and cold tiering. The table partitions are automatically tiered through the table partitions provided by the metric library, the latest access time, access frequency, and hot and cold metrics.

(3) Query Optimization Capability: This includes small file merging. The small file table and partition information are extracted from the indicator library, and the small file merging task is run on the cloud-native resource pool according to the rules. This is a fully managed process, and the user is unaware of it.

(4) Lake Format Management Optimization: Metadata acceleration and automatic optimization are realized.

The preceding solution solves some problems of data lake management and optimization and helps users use and manage data lakes better.

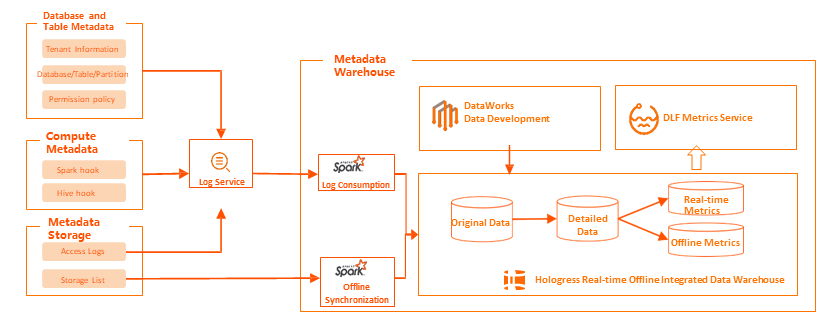

The original data of the metadata warehouse on the lake consists of three types of data.

(1) Storage Data: OSS stores data information at the file level (including size, storage path, storage type, and last update time), all from storage access logs and detailed lists. These basic data form the metadata of storage attributes and are the basis for the analysis and management of OSS.

(2) Metadata: It describes the data of directories, databases, tables, partitions, and functions (including statistics of indexes, attributes, storage, and stats). It mainly comes from the engine metadata service storage and the computing expansion of stats, which is the basic data for optimization operations (such as large table governance and small file merging).

(3) Engine Behavior Data: It includes lineage data and engine task execution data (such as file size, number of files, and upstream and downstream dependency information data of tasks). These data are generated during engine computing and are the basic data for building data maps and lifecycle management.

The preceding data is integrated into the metadata warehouse through real-time consumption methods (such as Log Service consumption, Spark batch tasks, offline synchronization, Spark Structured Streaming, and streaming tasks). The preceding data is used as the raw data of the metadata warehouse. Hologres is selected as the repository since it supports real-time writing, real-time updating, and real-time analysis of a large number of data.

MaxCompute can use offline data processing for scenarios with low real-time requirements but large data volume analysis (such as database and table storage analysis) to convert raw data into detailed data and provide offline metrics to the control layer. Hologres can use real-time analysis capabilities for scenarios with high real-time requirements (such as obtaining the number of rows in table partitions and executing update time) to calculate analysis metrics in real-time and provide it to DLF control.

Meta warehouses include real-time and offline scenarios, providing a stable and high-quality data foundation for data lake management and optimization.

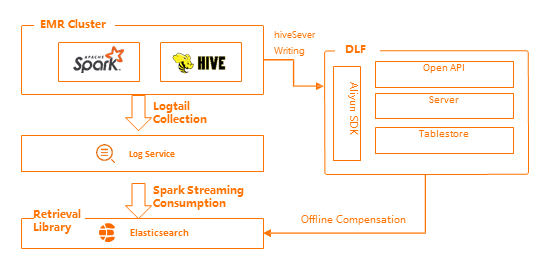

Metadata retrieval addresses the pain point that data on the data lake is difficult to find. Two search methods are mainly provided. First, full-text search. Indexing all column attributes of metadata allows the search for any word to respond within milliseconds. Second, provide an accurate query for multiple columns to meet the search in specific conditions. For example, the search is matched by special attributes (such as database names, table names, column names, Location addresses, creation time, and last modification time).

The Alibaba Cloud Elasticsearch scheme is selected for the index library. ES is a real-time distributed search and analysis engine. It can store, query, and analyze large data sets in near quasi-real time. It is suitable for real-time search scenarios of metadata. In order to achieve the effect of search results second latency, we choose the Spark Streaming technology to synchronize and parse DML logs produced by the engine in real-time and write them to the ES library.

However, there are two problems in consuming logs. First, the sequence of messages cannot guarantee that DML events generated in sequence can be consumed and written to ES libraries. The second problem is the reliability of messages. Log Service cannot guarantee that cluster logs can be captured and written to the hub.

There are two methods to solve the pain points above. On the one hand, the most recent update time in the message is used to make a judgment, which logically ensures the order of the message. On the other hand, the daily offline task synchronization metadatabase is used for index compensation, which ensures the reliability of the daily metadata information. The ability to quickly search and locate from a large number of metadata in the lake is realized through streaming technology, offline compensation technology, and ES retrieval capabilities.

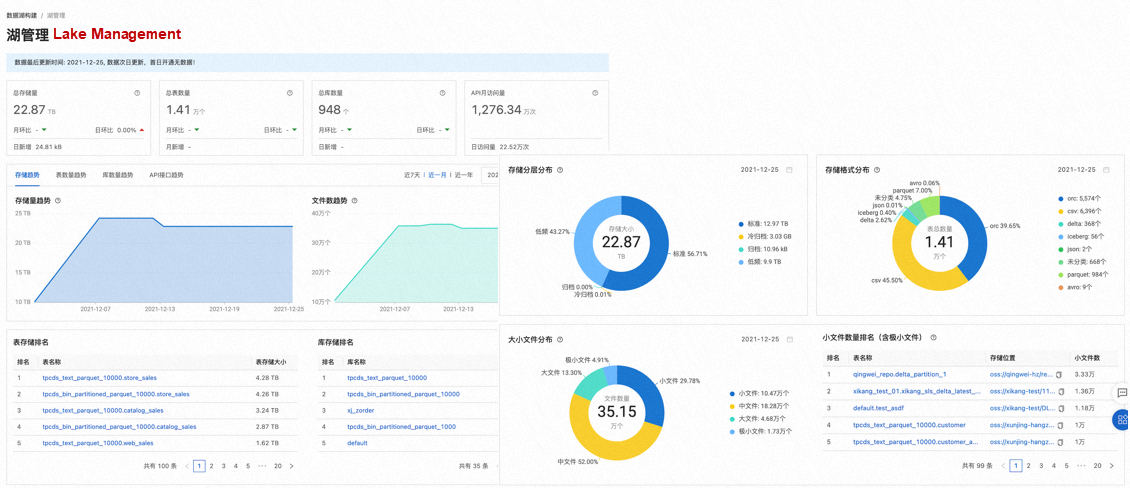

The lake asset analysis capability can help users analyze and manage lakeside assets efficiently and concisely, including resource statistics, trend changes, storage ranking, and storage tiering.

Resource statistics provides the total storage capacity, the total number of databases and tables, and the total amount of API access information. It provides users with an intuitive experience of data and a global view of lake assets.

The trend change reflects the increase and decrease of the statistical indicators in the past seven days, 30 days, and one year. Data fluctuations can discover the development status of a business. For example, you can determine the recent development trend of a business and adjust the resource input based on the situation.

The storage ranking reflects sorting databases and tables within a certain range. Users can find the data cost of tables based on this ranking. For example, 80% of storage is concentrated in 20% of tables.

Storage tiering describes the distribution of storage types, storage formats, and size files on an Object Storage. Determine whether the current data hierarchy is reasonable based on the distribution.

On the one hand, the analysis data can help users understand and master lake assets. On the other hand, it provides a factual basis for users to manage and optimize lake data.

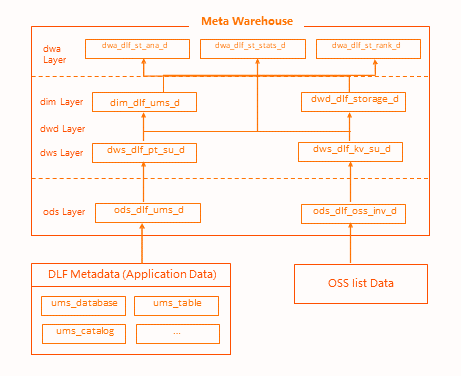

The model of asset analysis follows the hierarchical paradigm of data warehouses, with a source data layer, common data models, and application data services from bottom to top. The logs, detail data, metadata, and audit data stored in OSS are synchronized to the metadata warehouse ODS layer through offline tasks. Then, the common layer data is calculated through offline processing (including metadata, dimension tables, file storage, detailed tables, and database table summary). Then, the common data is added to the application data according to business requirements and output to the control. Finally, the report is displayed.

The DataProfile module adds an extended stats metric on top of the library table element. Stats is the engine's statistics on tables (including the number of records, table size, and the number of files). On this basis, stats are extended (including small file data, small file proportion, cold heat, and hierarchical information indicators). The data lake is connected to a variety of engines (such as Spark and Hive). The stats calculation results of each engine cannot be fully accurate. The trigger conditions are inconsistent, and the coverage of metrics is low. By default, the metadata stats metric cannot be directly used if the partition table number of records and size file metric coverage is less than 20%. Therefore, we actively submit stats analysis tasks to help users calculate the stats data of tables.

First, after the engine performs a DML task, an event is generated. The metadata warehouse records this event. The stats cluster consumes DML events in real-time and pulls up the corresponding stats analysis task. At the same time, the analyze command is extended to support analysis metrics such as the number of small files and the hierarchical proportion of data. The entire stats cluster runs in the cloud-native resource pool. Tasks are written to the metrics database in real-time after they are run to avoid conflicts between the metadata service and the business database.

The preceding solution supplements the problem that the stats data coverage accuracy of the cloud-native computing engine is not high and provides basic data for table analysis and optimization. Stats can provide detailed data at the database and table partition levels on the control page and can also provide data support for other optimization engines (such as analyzing the number of small files in tables to merge small files). It can also serve various engines to optimize CBO.

The lifecycle management module can store hierarchical data in schema dimensions, helping users reduce storage costs. OSS provides file-level tiering capabilities. The prices of OSS vary with storage types. They are standard, low frequency, archive, and cold archive from hot to cold. The costs decrease in sequence. Based on this capability, you can freeze infrequently used data and unfreeze it when it is used. This reduces storage costs.

Based on the tiering capability of OSS and engine metadata, the lifecycle management capability of database and table partitions is provided. Tables and partitions are frozen or unfrozen according to rules. This reduces the threshold for users to use hot and cold tiering in data lakes.

The rule center uses metrics provided by the meta-warehouse, including metrics such as the most recent modification time, creation time, partition value, and access frequency. The access frequency is generated by analyzing the task details of the engine. The task details are collected when Spark or Hive is executed by the hook. After calculation and processing, the hot and cold information about the access frequency and the most recent access time is extracted. Basic metrics are calculated from the meta warehouse (such as the most recent modification time and creation time partition value).

The decision center periodically triggers rule judgment. If the rule is met, archiving tasks are generated and implemented by the task center. The entire task center uses distributed scheduling to execute unfreezing or archiving tasks regularly or manually. JindoSDK uses high concurrency and high stability features to archive files at the directory level.

The lifecycle management process is convenient for users. Users do not need to operate OSS files, which improves users' hierarchical management capabilities for data on the lake.

The article above is about the practice of the Alibaba Cloud data Lake Team in the process of data lake management optimization. It helps customers optimize storage costs and improve the efficiency of data use in the application process.

[1] Data Lake Formation: https://www.alibabacloud.com/product/datalake-formation

[2] E-MapReduce (EMR): https://www.alibabacloud.com/product/emapreduce

[3] Data Lake Exploration - Delta Lake: https://www.alibabacloud.com/blog/599156

[4] Object Storage Service (OSS): https://www.alibabacloud.com/product/object-storage-service

[5] Data Lake on Alibaba Cloud: https://www.alibabacloud.com/solutions/data-lake

[6] Data Lake Storage: https://www.alibabacloud.com/solutions/data-lake-storage

62 posts | 7 followers

FollowAlibaba EMR - May 14, 2021

Alibaba EMR - November 18, 2020

Alibaba EMR - July 9, 2021

Alibaba EMR - August 22, 2022

Alibaba EMR - May 20, 2022

Apache Flink Community - August 21, 2025

62 posts | 7 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn More Personalized Content Recommendation Solution

Personalized Content Recommendation Solution

Help media companies build a discovery service for their customers to find the most appropriate content.

Learn MoreMore Posts by Alibaba EMR