By Wang Xiaolong, Technical Expert from Alibaba Cloud Open Source Big Data Platform

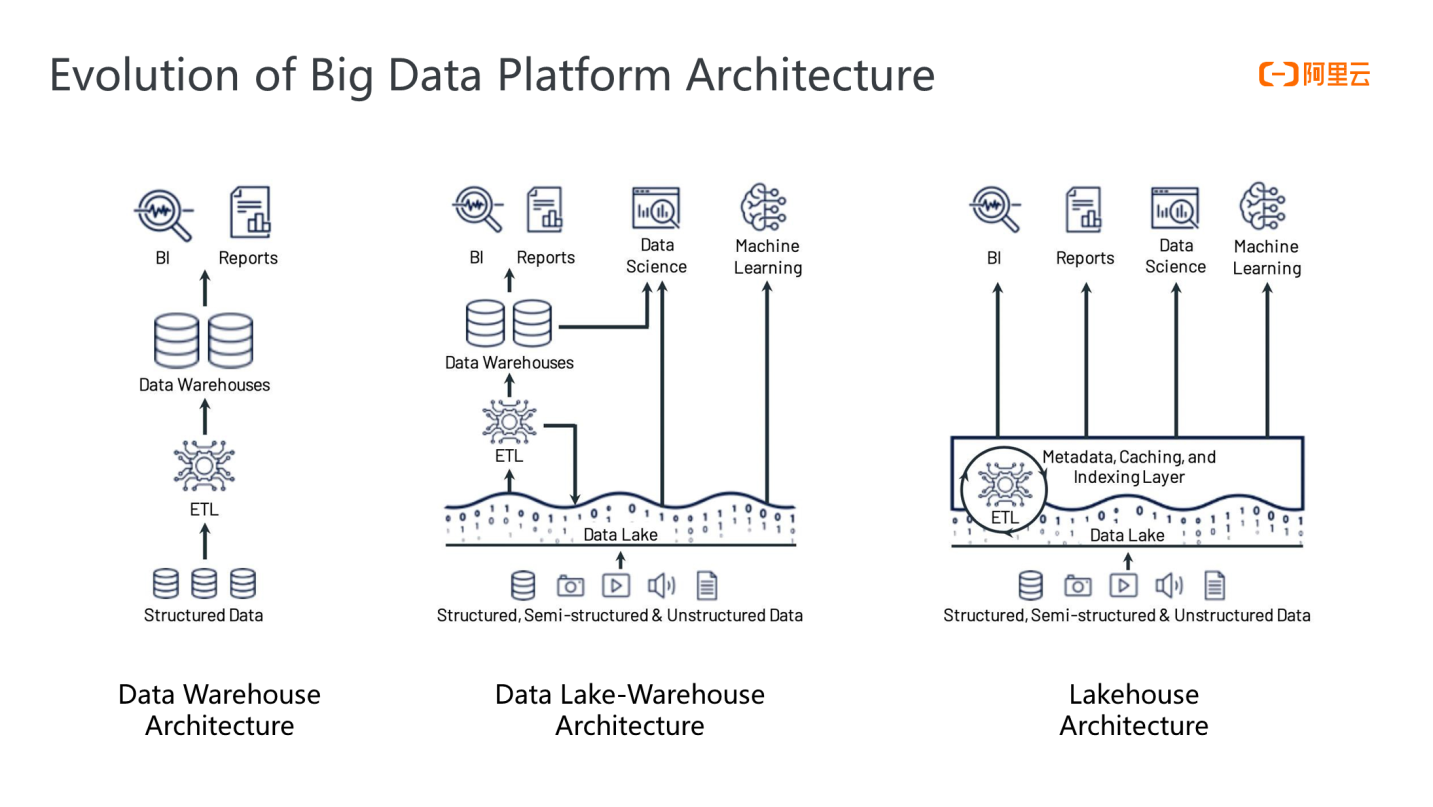

Big data platform architecture has gone through three stages of technological evolution: the earliest data warehouse architecture, the data lake-based architecture, and, over the last two years, the Lakehouse architecture.

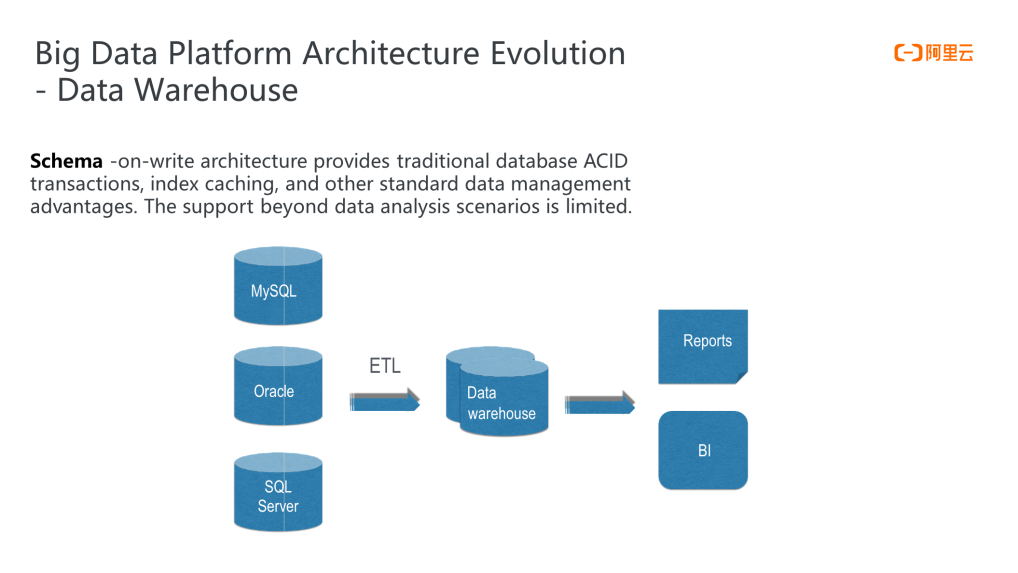

The earliest data warehouse architecture was a Schema-on-write design. As shown in the figure, data is first imported from RDS into the data warehouse through ETL, where it can be used for BI and report analysis. The underlying layer is database technology, so the data warehouse provides good data management capabilities, such as ACID transactions and strong schema constraints that are enforced when data is written (Schema-on-write) to ensure data quality.

At the same time, thanks to its optimization features, the data warehouse architecture supports analytical scenarios well. However, that support is limited to commonly used analysis scenarios. As data scale grows in the era of big data, enterprises need more kinds of data analysis, including advanced scenarios such as data science and machine learning. It is difficult for data warehouses to support such requirements.

In addition, the data warehouse cannot support semi-structured and unstructured data.

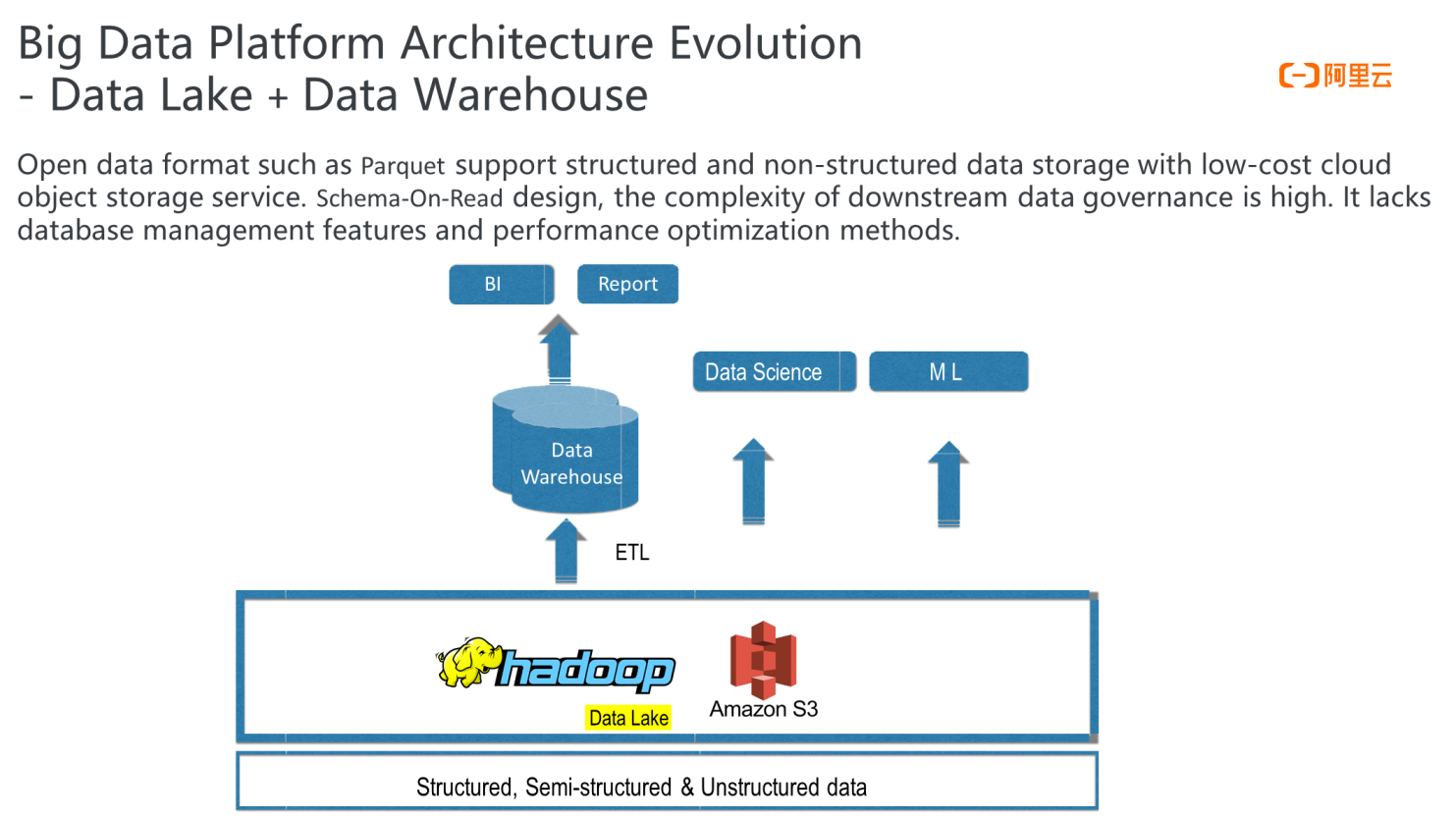

Since the launch of Hadoop, the explosive growth of data size has made the demand for low-cost storage urgent. As a result, the second generation of big data platform architecture emerged. It is based on the data lake and can store structured, semi-structured, and unstructured data. In contrast to the data warehouse, it is a Schema-on-read design. Data can be stored in the data lake efficiently, but this places a heavier burden on downstream analysis.

The data is not verified before it is written, so the data in the data lake becomes messier over time, and data governance becomes complex. At the same time, the bottom layer of the data lake is stored on a cloud object storage service in an open data format, so the data lake architecture lacks the data management features of a data warehouse. In addition, because cloud object storage services perform poorly in big data query scenarios, the advantages of data lakes are not fully realized in many scenarios.
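The contrast between Schema-on-write and Schema-on-read can be sketched in a few lines of plain Python. This is only a toy illustration, not how any warehouse or data lake engine is implemented; the schema, the column names, and both helper functions are hypothetical.

```python
from typing import Any, Dict, List

# Hypothetical schema used only for this illustration.
SCHEMA = {"user_id": int, "amount": float}


def write_with_schema(table: List[Dict[str, Any]], row: Dict[str, Any]) -> None:
    """Schema-on-write: validate the row before it is stored."""
    if set(row) != set(SCHEMA):
        raise ValueError(f"unexpected columns: {sorted(row)}")
    for name, expected in SCHEMA.items():
        if not isinstance(row[name], expected):
            raise TypeError(f"{name} must be {expected.__name__}")
    table.append(row)


def read_with_schema(raw_rows: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Schema-on-read: anything was stored, so interpret and filter at query time."""
    parsed = []
    for row in raw_rows:
        try:
            parsed.append({"user_id": int(row["user_id"]), "amount": float(row["amount"])})
        except (KeyError, TypeError, ValueError):
            continue  # bad rows only surface here, in the downstream reader
    return parsed


# Warehouse style: bad rows are rejected at write time.
warehouse: List[Dict[str, Any]] = []
write_with_schema(warehouse, {"user_id": 1, "amount": 9.5})

# Lake style: everything is accepted, and the reader has to cope with it.
lake = [{"user_id": "2", "amount": "3.25"}, {"user_id": "oops"}, {"amount": 1}]
clean = read_with_schema(lake)
```

In the second half, two of the three stored rows are unusable, which is the governance burden that Schema-on-read pushes onto downstream analysis.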

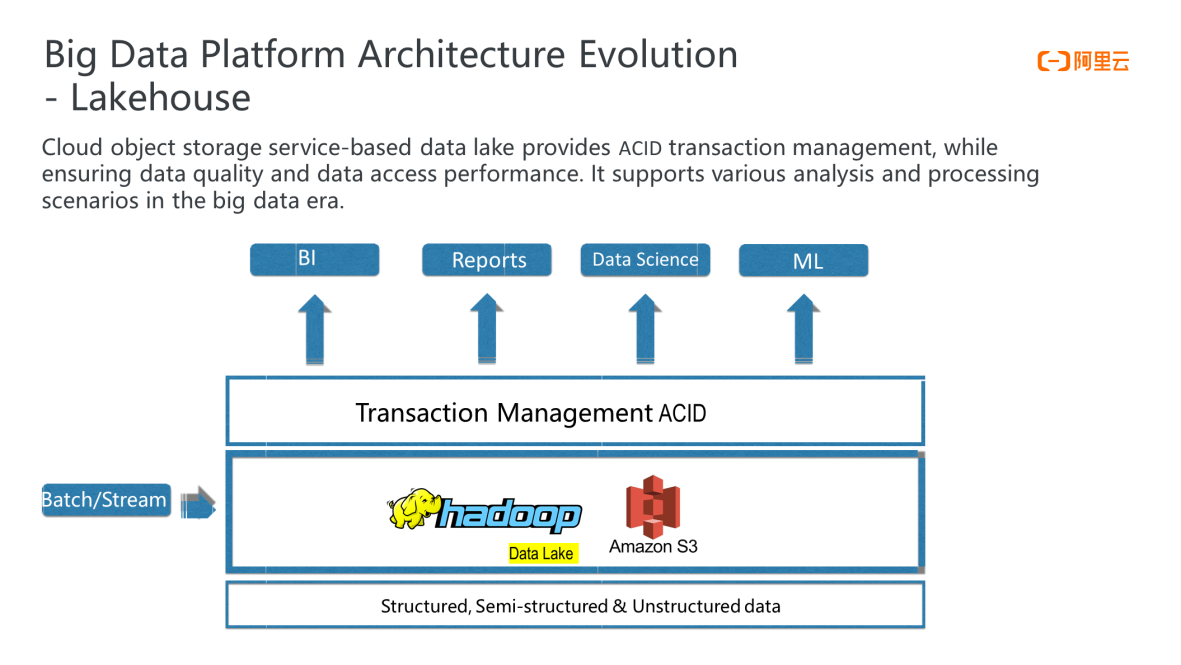

As a result, Lakehouse (the third generation of big data platform architecture) was created. It adds a transaction management layer on top of the data lake, provides some of the data management features of traditional data warehouses, and applies performance optimizations to data stored on the cloud object storage service. It supports the various complex analysis scenarios of the big data era and provides a unified processing method for both stream and batch scenarios.

Delta Lake was created to implement the Lakehouse architecture. It is an open-source storage solution from Databricks. Its architecture is clear and concise, and it provides reliability guarantees for the data in the lake.

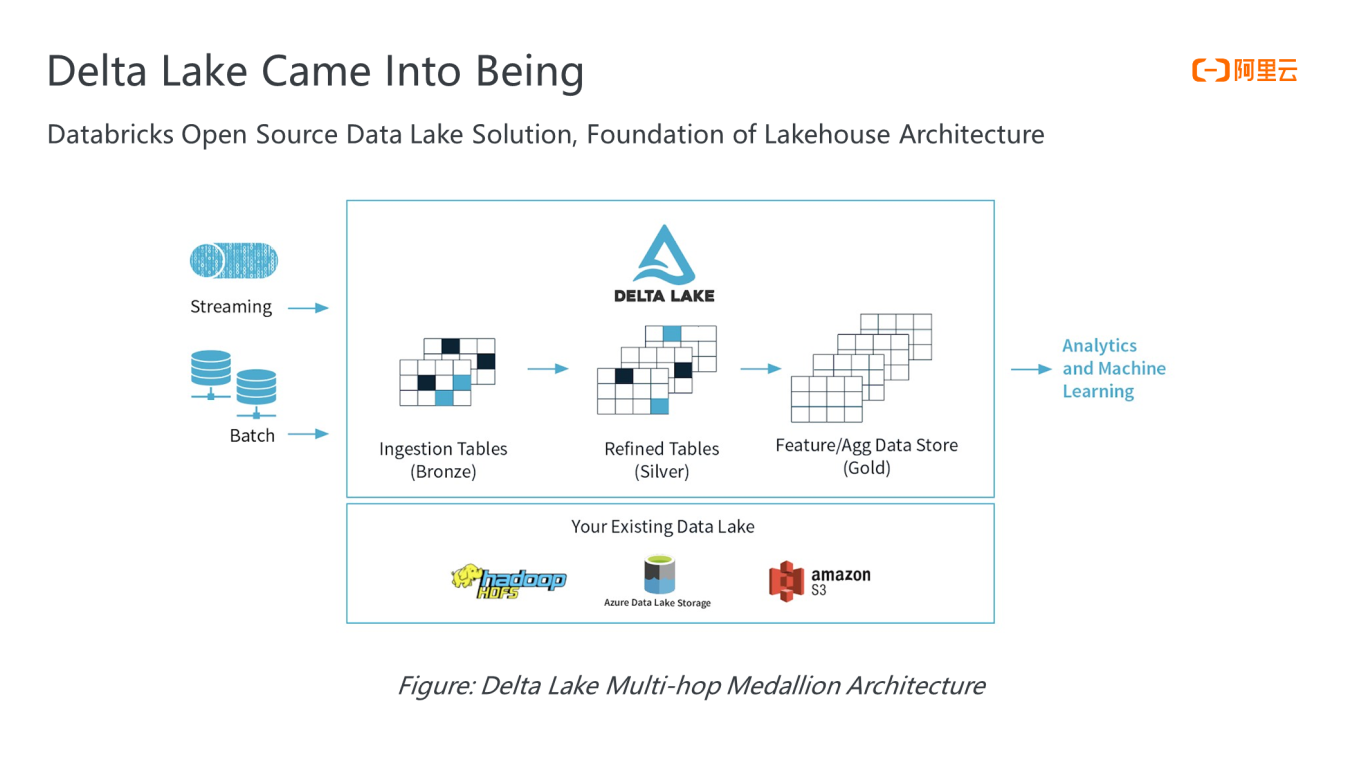

The preceding figure shows the Multi-Hop Medallion architecture of Delta Lake, which supports different analysis scenarios through multiple table structures. Data can be imported into Delta Lake by streaming or batch.

The tables in Delta Lake can be divided into three categories:

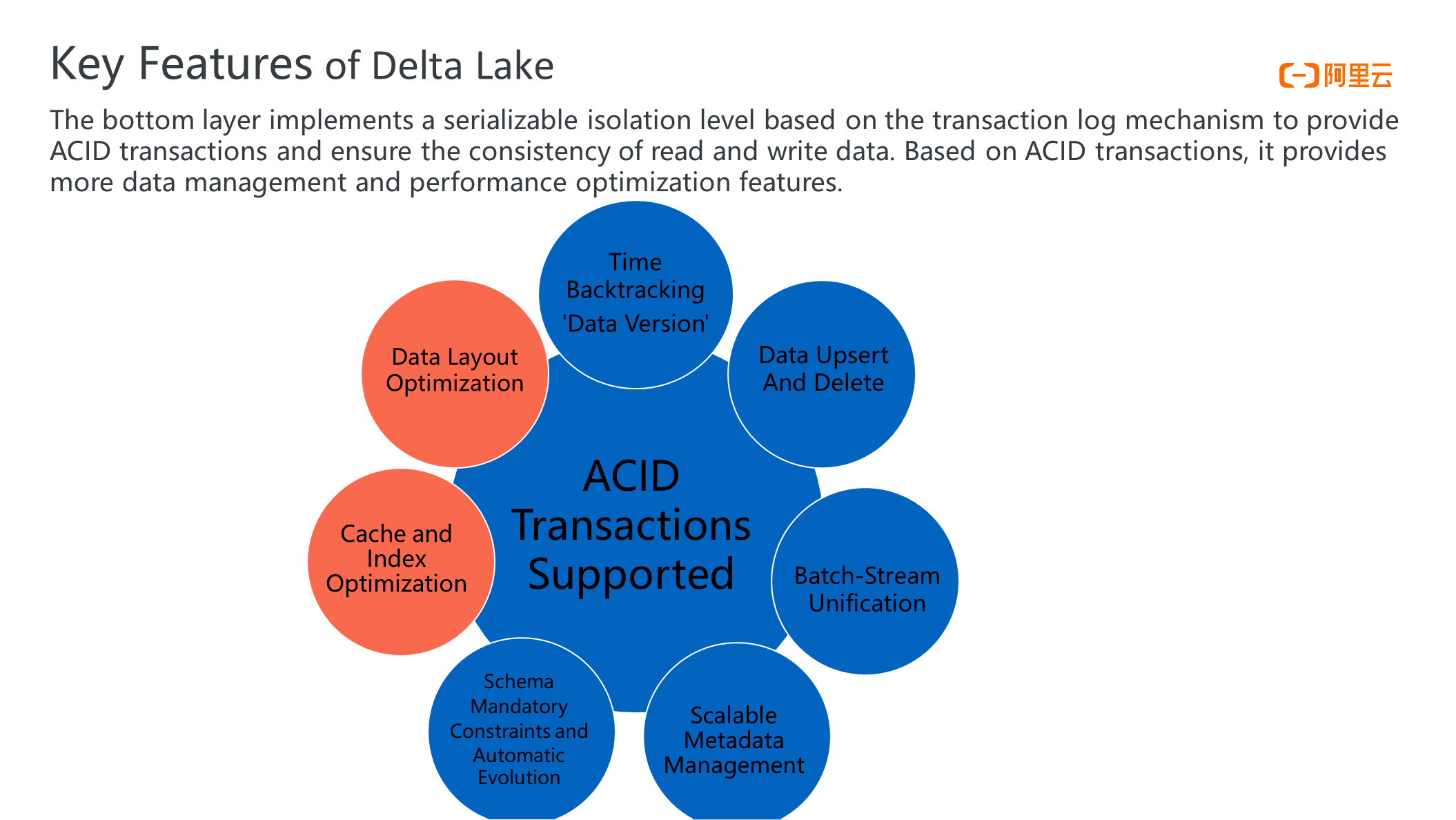

Delta Lake implements ACID transactions through a transaction log-based mechanism, which keeps reads and writes consistent and provides a guarantee of high data quality.

Building on ACID transactions, Delta Lake provides further data management and performance optimization features. Time travel and data versioning use the transaction log to go back to the data as of a given time or version. Delta Lake also supports efficient upserts and deletes and scalable metadata management.
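The idea of deriving table versions from a transaction log can be sketched with a small in-memory model. This is a simplified illustration under stated assumptions, not Delta Lake's implementation: the class name, its methods, and the file names are hypothetical, and a real log lives as files next to the data rather than in a Python list.

```python
import json
from typing import List, Optional, Sequence, Set


class ToyDeltaLog:
    """In-memory stand-in for an ordered, append-only commit log."""

    def __init__(self) -> None:
        self._commits: List[str] = []  # one JSON document per committed version

    def commit(self, add: Sequence[str] = (), remove: Sequence[str] = ()) -> int:
        """Record, as one unit, which data files a transaction adds and removes."""
        actions = [{"add": path} for path in add] + [{"remove": path} for path in remove]
        self._commits.append(json.dumps(actions))
        return len(self._commits) - 1  # the new table version

    def snapshot(self, version: Optional[int] = None) -> Set[str]:
        """Replay commits 0..version to find the data files visible at that version."""
        last = len(self._commits) - 1 if version is None else version
        files: Set[str] = set()
        for raw in self._commits[: last + 1]:
            for action in json.loads(raw):
                if "add" in action:
                    files.add(action["add"])
                else:
                    files.discard(action["remove"])
        return files


log = ToyDeltaLog()
v0 = log.commit(add=["part-0.parquet"])
v1 = log.commit(add=["part-1.parquet"])
# An update rewrites a file: the old copy is removed and the new one added in one commit.
v2 = log.commit(add=["part-0-new.parquet"], remove=["part-0.parquet"])

latest = log.snapshot()               # current state of the table
as_of_v1 = log.snapshot(version=1)    # "time travel" to an earlier version
```

Because old data files are only marked as removed in the log, an earlier version can be reconstructed by replaying fewer commits, which is the intuition behind going back to a given version.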

Metadata management can be a burden in big data scenarios. For large tables, the metadata itself can be big data. Supporting metadata management efficiently is therefore an architectural challenge as well.

In Delta Lake, metadata is stored as files in the transaction log, so the Spark big data engine can process it, which makes metadata management scalable. At the same time, Delta Lake provides a unified stream-batch method that supports data ingestion in a unified way. This is possible because Delta Lake supports the serializable isolation level, and it also lets Delta Lake meet typical streaming requirements, such as CDC.

At the same time, Delta Lake provides schema enforcement and automatic schema evolution to ensure the quality of the data in the data lake.
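The interplay between enforcing a schema and letting it evolve can be sketched as follows. This is a toy model in plain Python, not Delta Lake's API; the `ToyTable` class is hypothetical, and its `merge_schema` flag is only loosely modeled on the idea of opting in to schema evolution on a per-write basis.

```python
from typing import Any, Dict, List


class ToyTable:
    """A table that rejects mismatched rows unless evolution is requested."""

    def __init__(self, schema: Dict[str, type]) -> None:
        self.schema = dict(schema)
        self.rows: List[Dict[str, Any]] = []

    def append(self, row: Dict[str, Any], merge_schema: bool = False) -> None:
        new_columns = {k: type(v) for k, v in row.items() if k not in self.schema}
        # Enforcement: unknown columns are an error by default.
        if new_columns and not merge_schema:
            raise ValueError(f"schema mismatch, unknown columns: {sorted(new_columns)}")
        # Enforcement: known columns must keep their declared type.
        for name, value in row.items():
            expected = self.schema.get(name)
            if expected is not None and not isinstance(value, expected):
                raise TypeError(f"{name} must be {expected.__name__}")
        # Evolution: new columns are added to the schema only when asked for.
        self.schema.update(new_columns)
        self.rows.append(row)


table = ToyTable({"id": int})
table.append({"id": 1})

try:
    table.append({"id": 2, "city": "Hangzhou"})  # rejected: schema enforcement
    rejected = False
except ValueError:
    rejected = True

table.append({"id": 2, "city": "Hangzhou"}, merge_schema=True)  # accepted: evolution
```

The default is strict, so a malformed write fails loudly instead of silently polluting the table, while an intentional change to the schema stays a one-flag decision.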

In addition, the commercial version of Delta Lake can optimize the data layout automatically and offers a series of performance optimization features known from traditional data warehouse databases, such as caching and indexing.

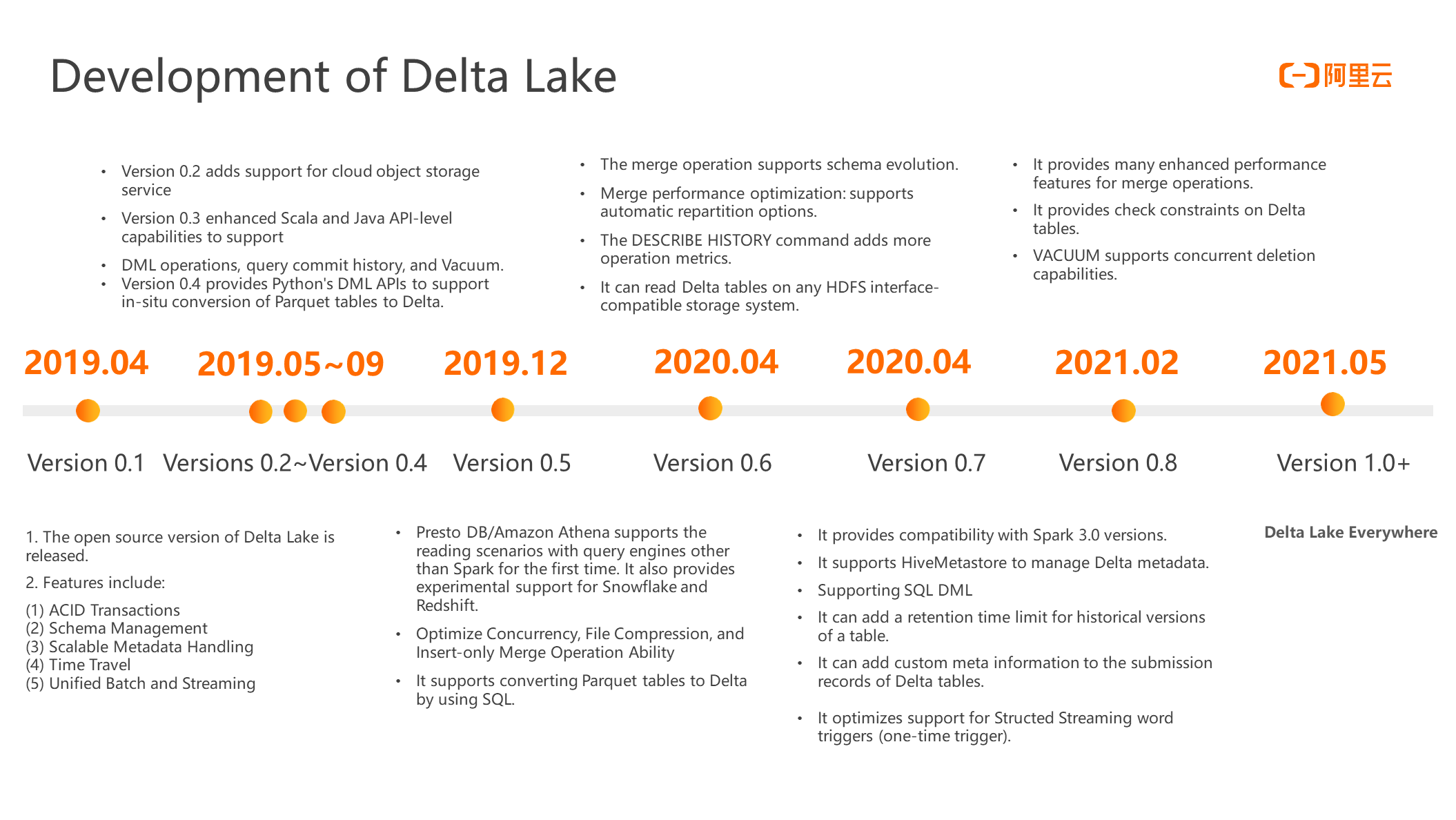

The Delta Lake project was open-sourced in April 2019. The core functions (such as transactions and batch-stream unification) were already implemented in version 0.1. Since then, Delta Lake has been working hard to improve usability and openness, and more Lakehouse technologies have been opened up to the open-source community.

The development of Delta Lake shows that it has been moving towards a more diversified and open ecosystem.

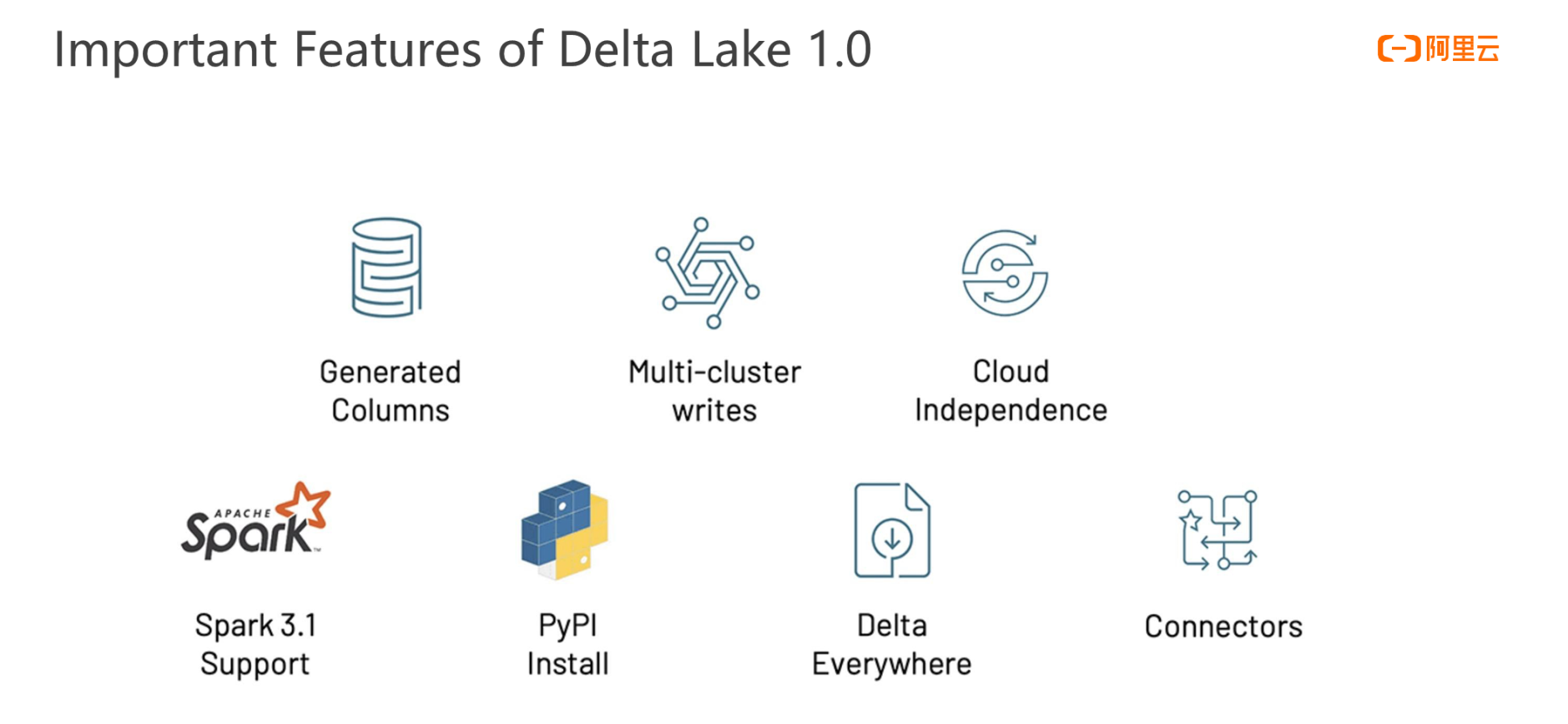

The figure shows some core features of Delta Lake 1.0:

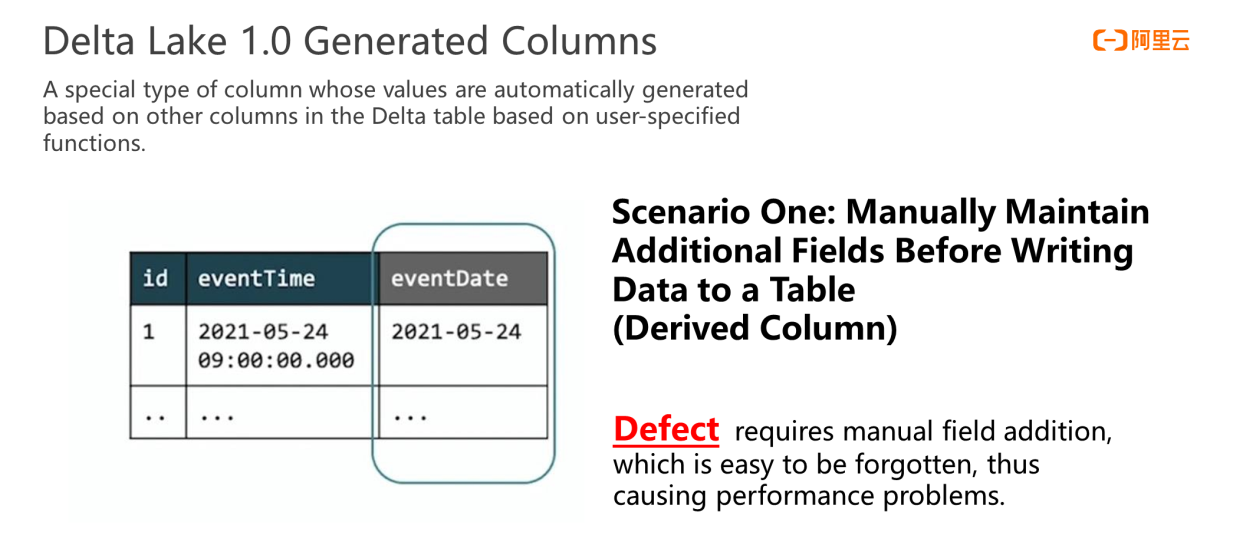

The first is the Generated Columns feature, which will be introduced here with an example.

A typical requirement in big data development scenarios is to partition data tables. For example, you may want to partition by a date field, but the original data written to the table may contain a timestamp field instead of a date field. As shown in the preceding figure, eventTime is a timestamp, and the data does not contain an eventDate field. How should partitioning be done?

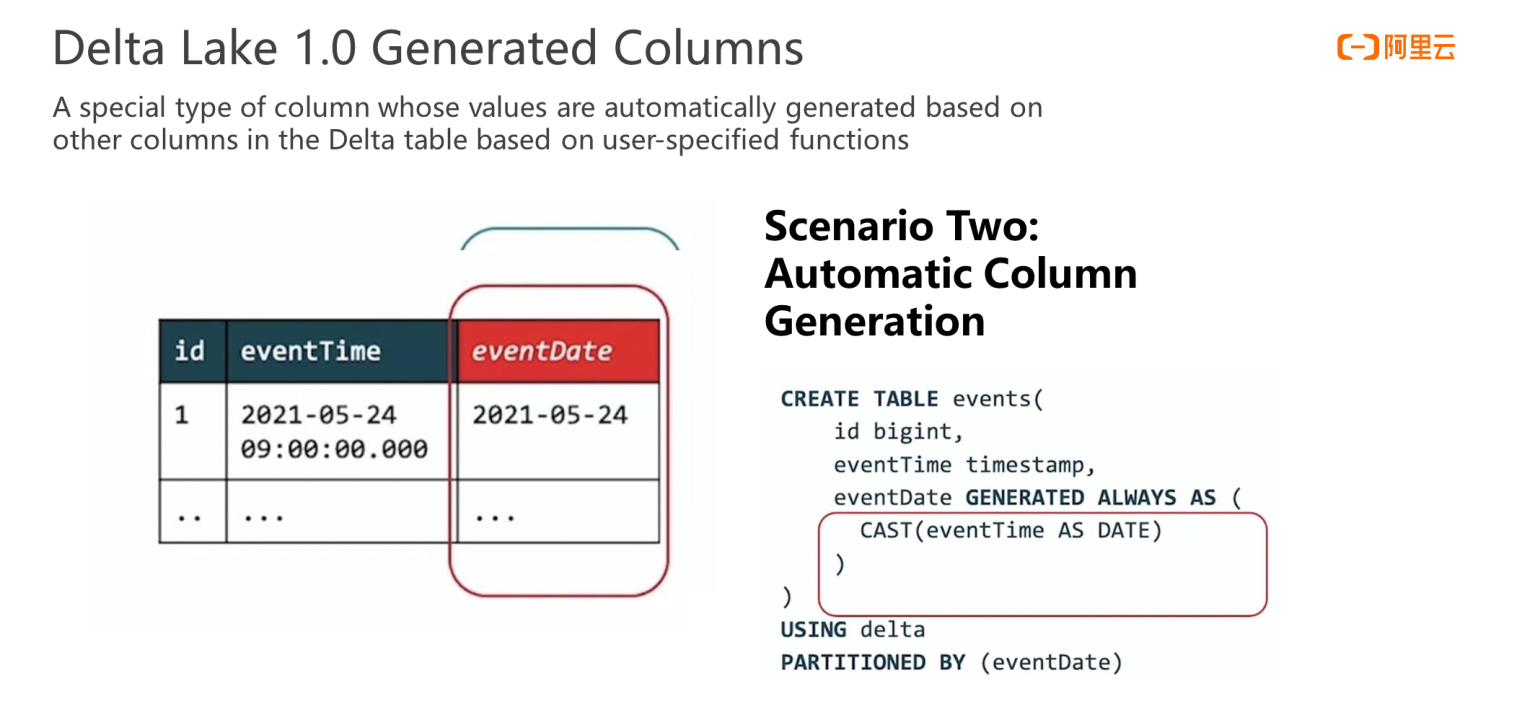

Therefore, Delta Lake 1.0 provides generated columns, a special type of column whose values can be automatically generated according to user-specified functions.

You can use the simple SQL syntax shown in the preceding figure to convert eventTime to date to generate the eventDate field. The whole process is automatic. Users only need to provide this syntax when creating tables at the beginning.
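Since the figure is not reproduced here, the following sketch shows what such a table definition might look like, together with a plain-Python imitation of the derivation. The DDL follows Delta Lake's documented generated-column syntax, but the table name and the eventId column are hypothetical, and whether the statement is accepted as SQL depends on the engine and version in use.

```python
from datetime import datetime
from typing import Any, Dict

# Assumed DDL for illustration only; "events" and "eventId" are hypothetical names.
CREATE_EVENTS_TABLE = """
CREATE TABLE events (
  eventId BIGINT,
  eventTime TIMESTAMP,
  eventDate DATE GENERATED ALWAYS AS (CAST(eventTime AS DATE))
)
USING DELTA
PARTITIONED BY (eventDate)
"""


def with_generated_columns(row: Dict[str, Any]) -> Dict[str, Any]:
    """Imitate what happens on write: eventDate is derived from eventTime."""
    return {**row, "eventDate": row["eventTime"].date()}


# The writer supplies only eventId and eventTime; eventDate is filled in.
row = with_generated_columns({"eventId": 1, "eventTime": datetime(2021, 5, 24, 9, 30)})
```

The writer never supplies eventDate; the value is derived on write, and the table can be partitioned by it.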

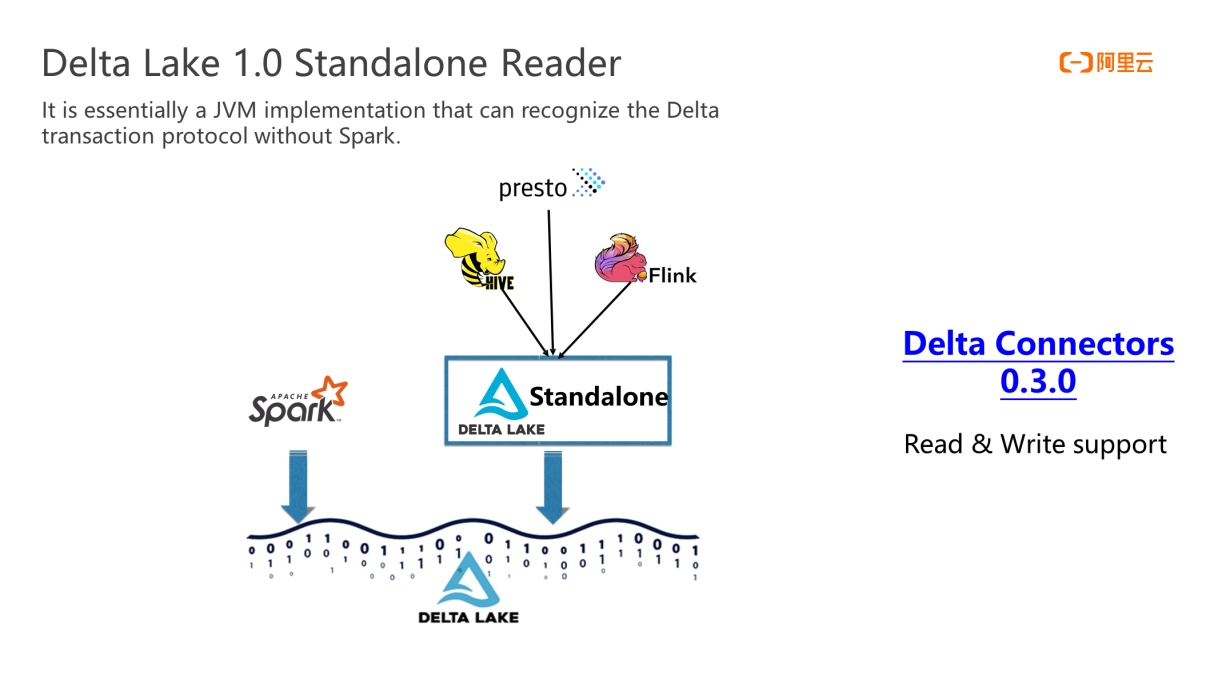

The second important feature provided by Delta Lake 1.0 is Standalone. Its goal is to connect engines other than Spark, such as Presto and Flink, without requiring them to depend on Spark. If Delta Lake were bound exclusively to Spark, it would violate Delta Lake's goal of openness.

As a result, the community launched Standalone, which implements the Delta Lake transaction protocol at the JVM level. With Standalone, more engines can be connected later. The earliest version of Standalone only provided a Reader. With the release of Delta Connector version 0.3.0 earlier this year, Standalone began to support write operations. In addition, community support for Flink Sink/Source, Hive, and Presto is under development.

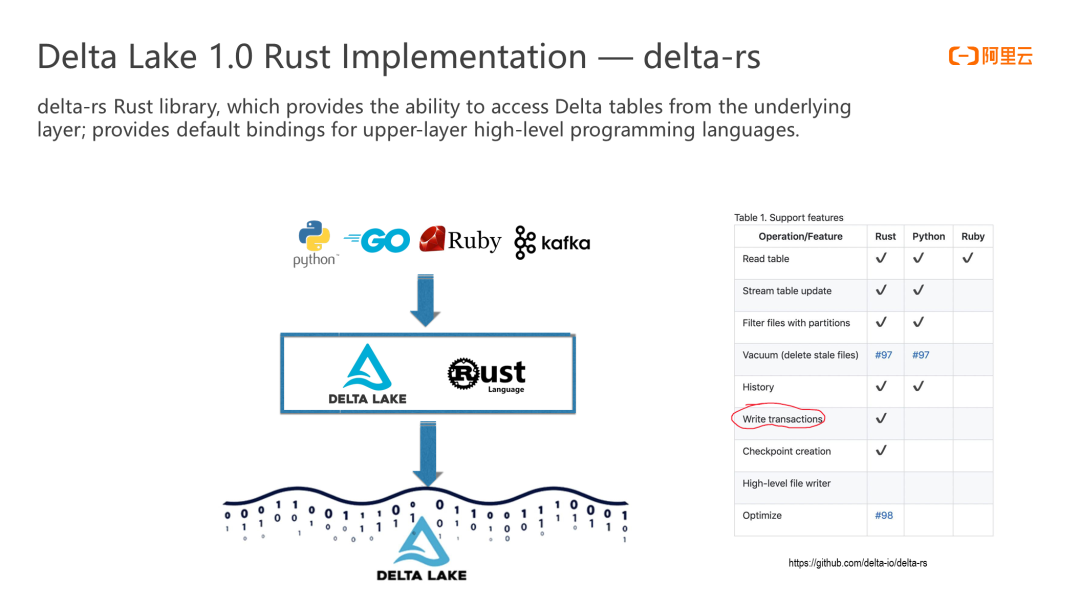

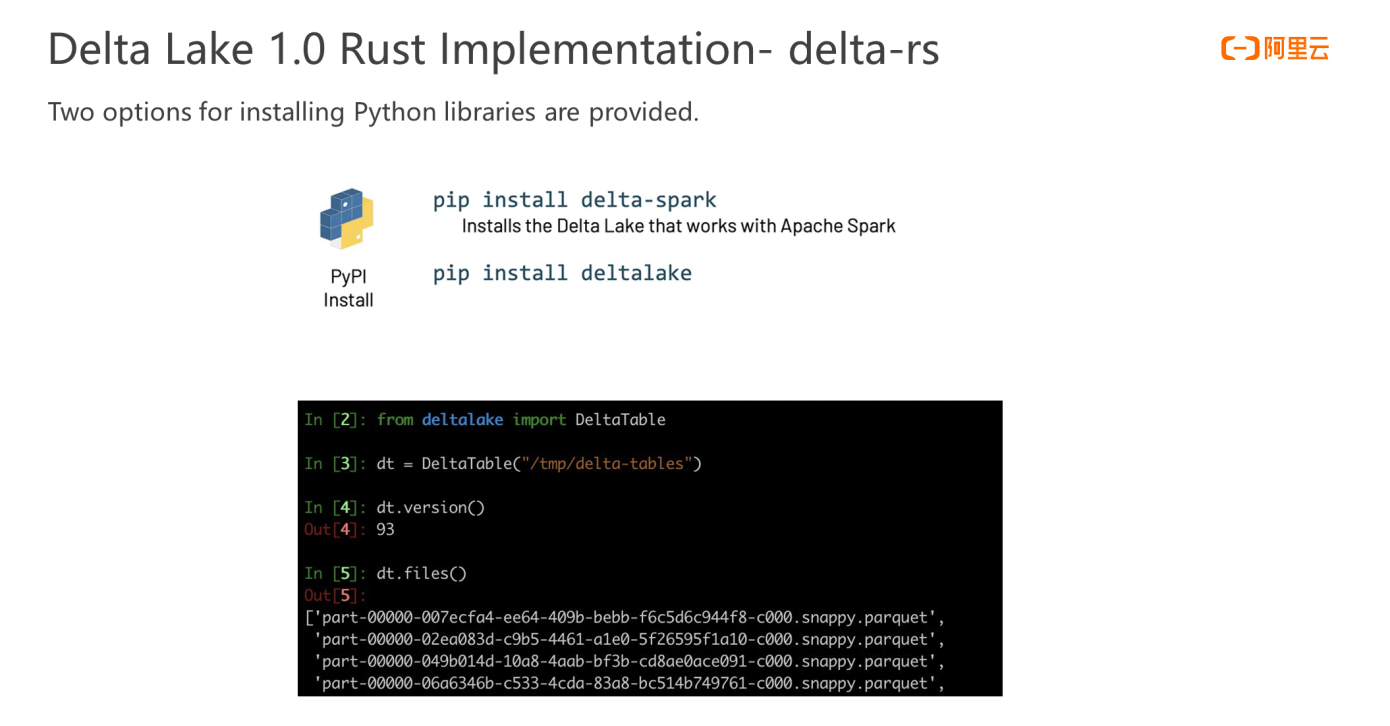

In addition, Delta Lake 1.0 provides Delta-rs Rust libraries. The goal is to support more high-level programming languages through Delta-rs libraries and make Delta Lake more open. With Delta-rs libraries, more programming languages (such as Python and Ruby) can access the Delta Lake table.

Building on the Delta-rs Rust library, Delta Lake 1.0 provides two options for installing Python libraries, both of which can be installed directly with pip install. If you do not want to rely on Spark, you can run the pip install deltalake command to install the Delta-rs-based library. After the installation, you can use Python to read data from a Delta table.
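A minimal sketch of reading a table this way is shown below, assuming the deltalake package (and pandas) has been installed with pip. The helper name `read_delta_table` is hypothetical, and the import is done inside the function so that the snippet still loads on a machine without the package.

```python
def read_delta_table(table_uri: str, version=None):
    """Load a Delta table into a pandas DataFrame without going through Spark."""
    # Imported lazily so this module can be loaded even if deltalake is missing.
    from deltalake import DeltaTable

    # Passing a version opens the table as of that version instead of the latest.
    table = DeltaTable(table_uri, version=version)
    print("table version:", table.version())
    return table.to_pandas()
```

A call might look like `read_delta_table("/tmp/delta/events")`, where the path is only a placeholder for the location of an existing Delta table.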

Delta Lake began as a sub-project of Spark, so it has always been highly compatible with the Spark engine. Because the Spark community develops rapidly, supporting each new Spark release as early as possible is a primary goal of the Delta Lake community. Accordingly, version 1.0 is compatible with Spark 3.1, so the features and optimizations of that release are available in Delta Lake right away.

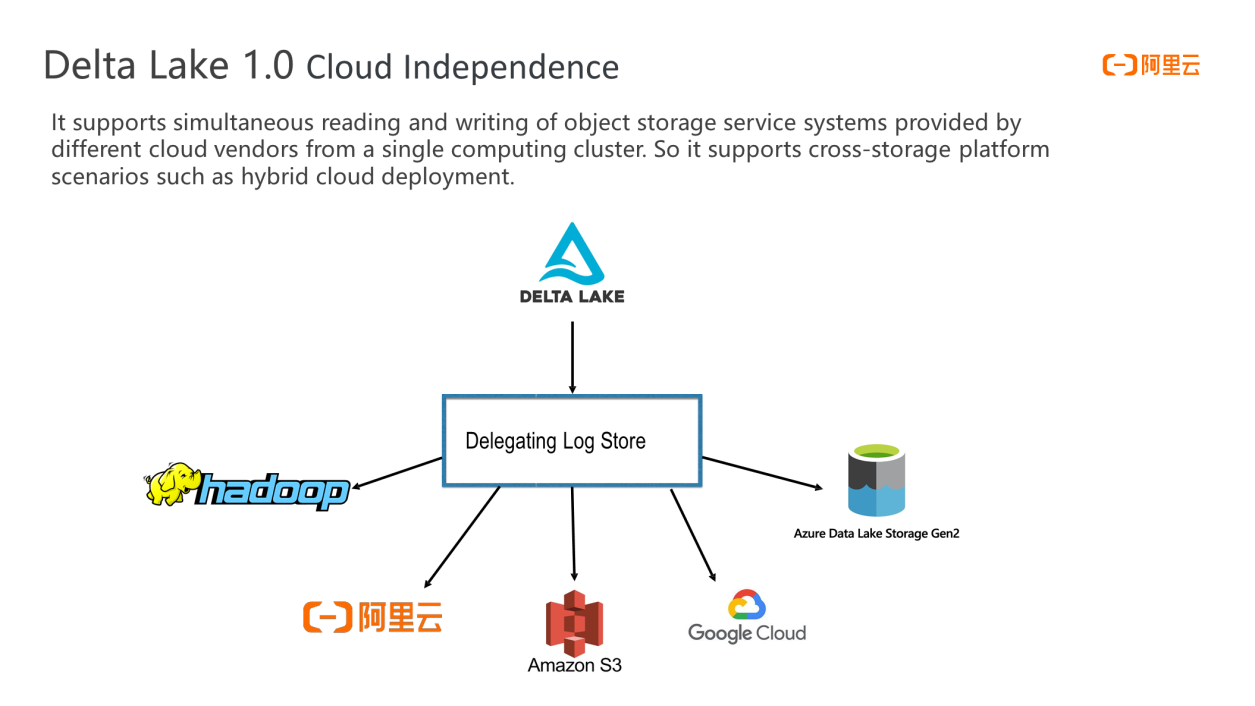

For many enterprises that use Delta Lake, a common scenario is to use a single cluster to access several storage systems. Delta Lake 1.0 provides a delegating log store, which selects a log store implementation for the object storage service of each cloud vendor. This supports hybrid cloud deployments and avoids lock-in to a single cloud service provider.
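The delegation idea can be sketched as a lookup from a table path to a log store implementation. This is a toy model, not Delta Lake's code: it assumes the choice is keyed on the URI scheme of the path, and the class, the registry, and the bucket names are hypothetical.

```python
from urllib.parse import urlparse


class ToyLogStore:
    """Placeholder for a storage-specific way of writing commit files."""

    def __init__(self, name: str) -> None:
        self.name = name


# Hypothetical registry: one log store per URI scheme, plus a default.
LOG_STORES = {
    "s3a": ToyLogStore("S3"),
    "abfss": ToyLogStore("Azure"),
    "oss": ToyLogStore("OSS"),
}
DEFAULT_LOG_STORE = ToyLogStore("HDFS")


def resolve_log_store(table_path: str) -> ToyLogStore:
    """Delegate to the log store registered for the path's scheme."""
    scheme = urlparse(table_path).scheme
    return LOG_STORES.get(scheme, DEFAULT_LOG_STORE)


# One cluster, three tables, three different storage systems.
stores = [
    resolve_log_store("s3a://bucket-a/events").name,
    resolve_log_store("oss://bucket-b/orders").name,
    resolve_log_store("/warehouse/local_table").name,
]
```

Each table is handled by the log store that matches its storage system, so tables on different clouds can be used side by side from the same cluster.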

In the future, the Delta Lake community will forge ahead in a more open way.

In addition to the core functions mentioned before, Delta Lake will connect to more engines other than Spark. The preceding figure shows the launch plan for related features.

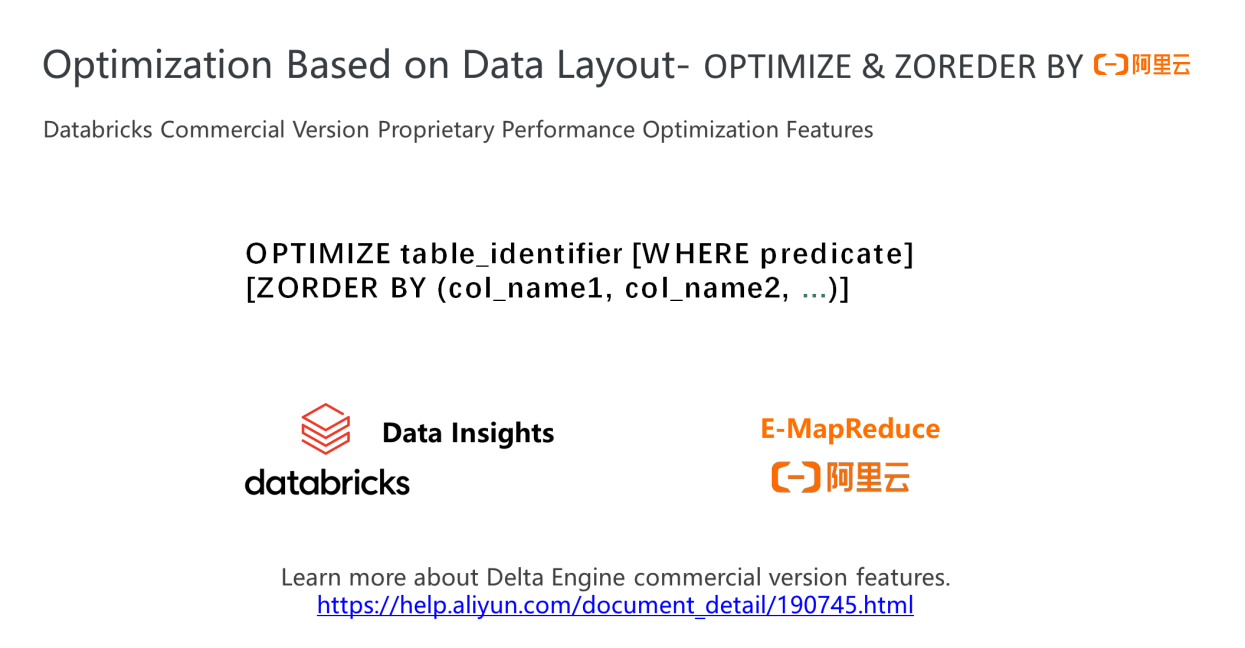

Two features are in high demand in the community version of Delta Lake: Optimize and Z-Ordering. They are not provided in the current open-source version of Delta Lake from Databricks, but they are well supported in the commercial version. Alibaba Cloud has launched Databricks DataInsight, a fully managed Spark product based on the commercial Databricks engine. Besides Optimize and Z-Ordering, it lets you experience more features of the commercial Spark and Delta engines. In addition, Alibaba Cloud E-MapReduce provides Alibaba Cloud's own implementations of Optimize and Z-Ordering.
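The intuition behind Z-Ordering can be shown with a small sketch: rows are sorted by a Z-order (Morton) value that interleaves the bits of several columns, so that each data file covers a narrow range of every column and more files can be skipped by their min/max statistics. This is a generic illustration of the technique, not the Databricks or E-MapReduce implementation, and all function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[int, int]


def z_value(x: int, y: int, bits: int = 16) -> int:
    """Interleave the bits of x and y into one Morton (Z-order) code."""
    code = 0
    for i in range(bits):
        code |= ((x >> i) & 1) << (2 * i)
        code |= ((y >> i) & 1) << (2 * i + 1)
    return code


def write_files(rows: List[Point], rows_per_file: int) -> List[List[Point]]:
    """Split already-sorted rows into fixed-size 'data files'."""
    return [rows[i:i + rows_per_file] for i in range(0, len(rows), rows_per_file)]


def files_to_scan(files: List[List[Point]], column: int, value: int) -> int:
    """Count files whose min/max statistics cannot rule out column == value."""
    return sum(
        1
        for rows in files
        if min(r[column] for r in rows) <= value <= max(r[column] for r in rows)
    )


points = [(x, y) for x in range(4) for y in range(4)]
linear = write_files(sorted(points), 4)                                # sorted by x, then y
zordered = write_files(sorted(points, key=lambda p: z_value(*p)), 4)   # sorted by Z-value

linear_scans = (files_to_scan(linear, 0, 3), files_to_scan(linear, 1, 3))
zorder_scans = (files_to_scan(zordered, 0, 3), files_to_scan(zordered, 1, 3))
```

With the linear sort, a filter on the first column touches one file but a filter on the second column touches all four. With the Z-order sort, a filter on either column touches two files, which is the trade-off that makes Z-Ordering attractive when queries filter on several columns.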

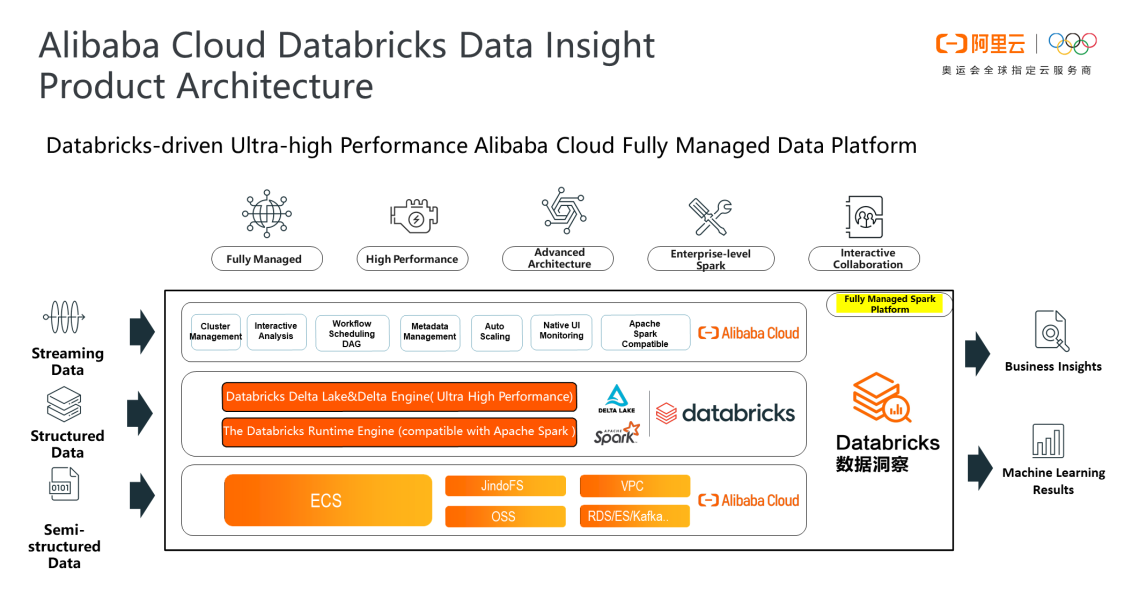

Databricks DataInsight is a fully managed data platform whose core is the Databricks engine. Databricks Runtime provides the commercial versions of the Delta engine and the Spark engine. Compared with open-source Spark and Delta, the commercial edition offers significantly better performance.

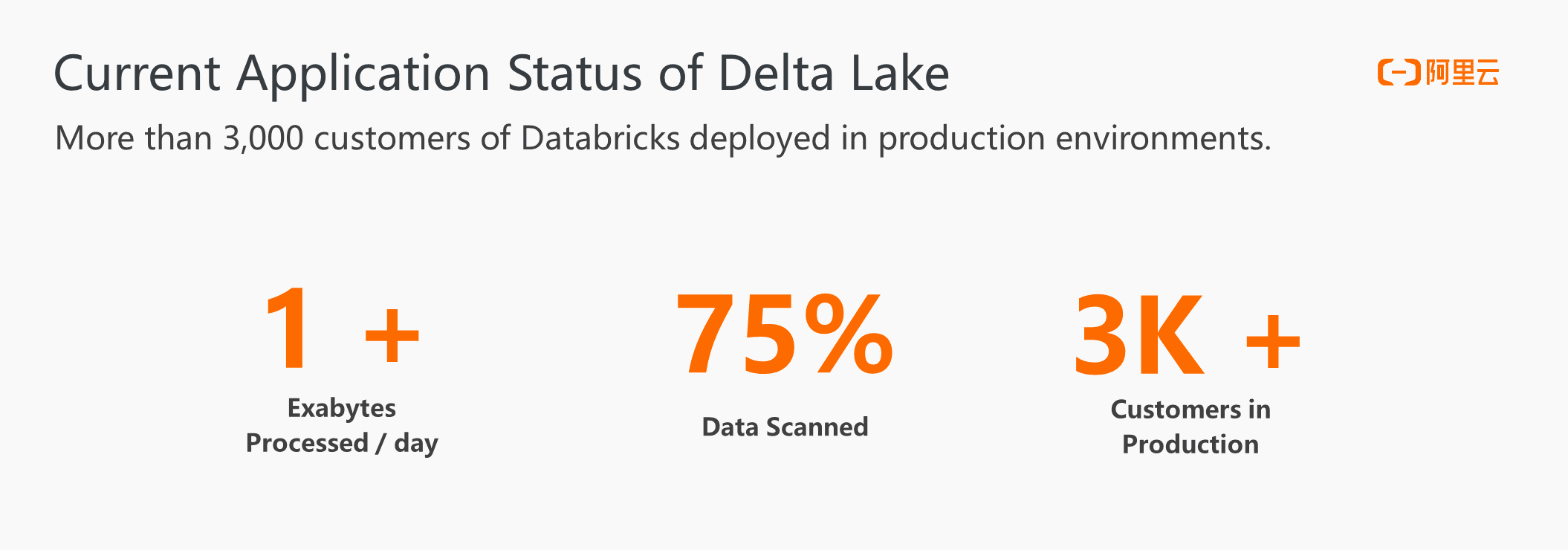

Finally, let's discuss the current global adoption of Delta Lake. More and more enterprises have begun to use Delta Lake to build a Lakehouse. According to data from Databricks, more than 3,000 customers have deployed Delta Lake in production to process exabytes of data every day, and more than 75% of the data is in Delta format.
