By Li Yuanjian, Databricks Software Engineer, and Feng Jialiang, Alibaba Cloud Open-source Big Data Platform Technical Engineer

Delta Lake is an open-source storage layer that brings reliability to data lakes. It provides ACID transactions and scalable metadata handling, and it unifies streaming and batch data processing. Delta Lake runs on top of an existing data lake and is fully compatible with Apache Spark APIs. I hope this article gives you a deeper understanding of Delta Lake and helps you put it into practice.
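To make "ACID transactions" a little more concrete, here is a toy, single-process sketch of the idea behind a transaction log. Delta Lake records each table version as a commit file under the table's `_delta_log` directory, each commit lists actions such as adding or removing data files, and writers use optimistic concurrency control. The `ToyDeltaLog` class, its simplified `{"add": path}` action format, and `ConcurrentModificationError` are hypothetical names for illustration, not Delta Lake's API. Unlike the real protocol, this sketch rejects every concurrent commit instead of checking whether the two commits actually conflict.

```python
import json
from dataclasses import dataclass, field


class ConcurrentModificationError(Exception):
    """Hypothetical: raised when a writer commits on top of a stale table version."""


@dataclass
class ToyDeltaLog:
    """Hypothetical, simplified model of an append-only transaction log."""

    # commits[v] holds the JSON-encoded actions of table version v.
    commits: list = field(default_factory=list)

    def latest_version(self) -> int:
        return len(self.commits) - 1  # -1 means the table is still empty

    def commit(self, read_version: int, actions: list) -> int:
        """Append one commit, but only if no one committed since read_version."""
        if read_version != self.latest_version():
            raise ConcurrentModificationError(
                f"read v{read_version}, but the log is already at v{self.latest_version()}"
            )
        # A version either appears with all of its actions or not at all.
        self.commits.append(json.dumps(actions))
        return self.latest_version()

    def snapshot(self, version=None) -> set:
        """Replay add/remove actions up to `version` to get the set of live files."""
        if version is None:
            version = self.latest_version()
        files = set()
        for raw in self.commits[: version + 1]:
            for action in json.loads(raw):
                if "add" in action:
                    files.add(action["add"])
                elif "remove" in action:
                    files.discard(action["remove"])
        return files


log = ToyDeltaLog()
v0 = log.commit(-1, [{"add": "part-0.parquet"}])
# Writers A and B both start from version 0.
log.commit(v0, [{"add": "part-1.parquet"}])  # A commits first and wins
try:
    log.commit(v0, [{"add": "part-2.parquet"}])  # B's view is stale
except ConcurrentModificationError as err:
    print("B must re-read and retry:", err)
print(sorted(log.snapshot()))  # ['part-0.parquet', 'part-1.parquet']
```

Because readers only ever replay complete commits, they never observe a half-written version; the losing writer re-reads the latest snapshot and tries again.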

This article will break down the features of Delta Lake into two parts:

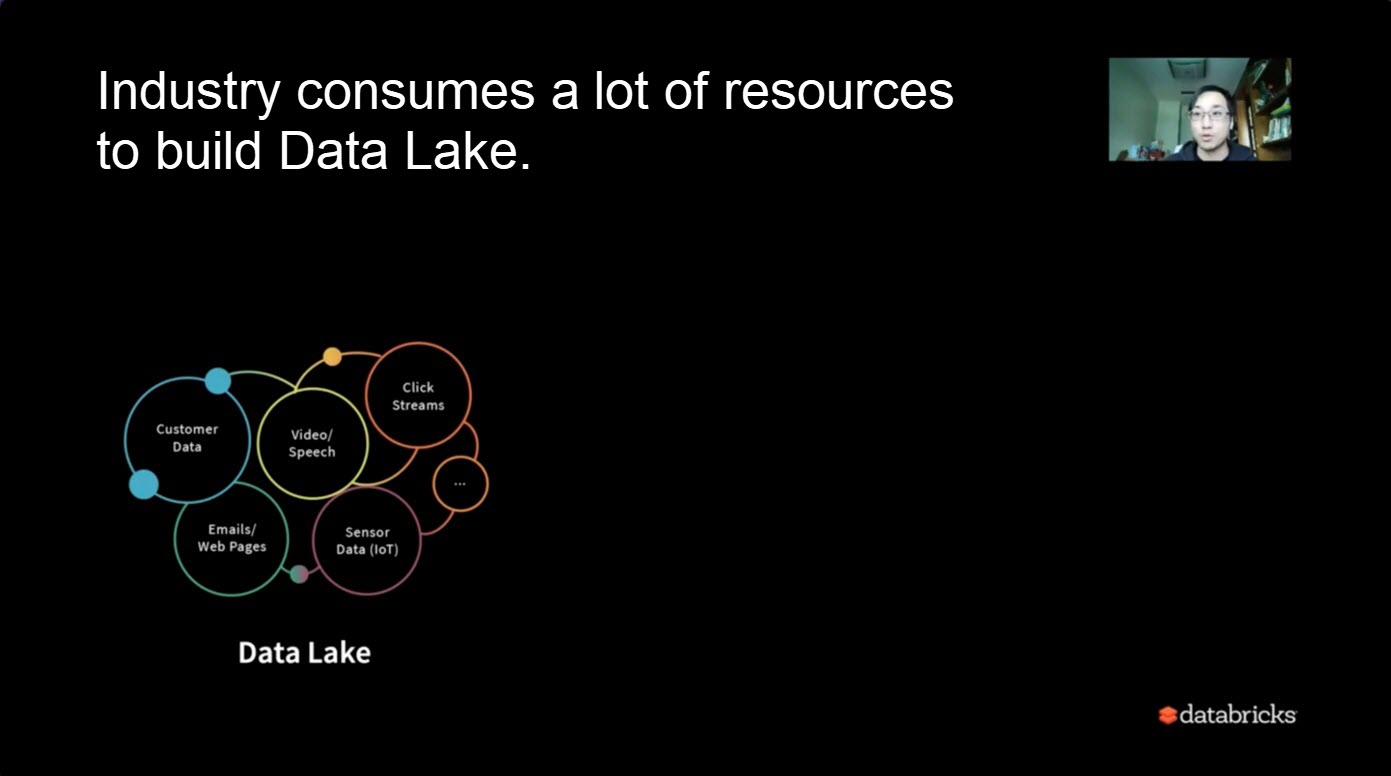

Some readers may have experience building data warehouses to process data. The industry invests heavily in building such systems.

In practice, many kinds of data (such as semi-structured data, real-time data, batch data, and user data) are stored in different places and serve users through different processing paths.

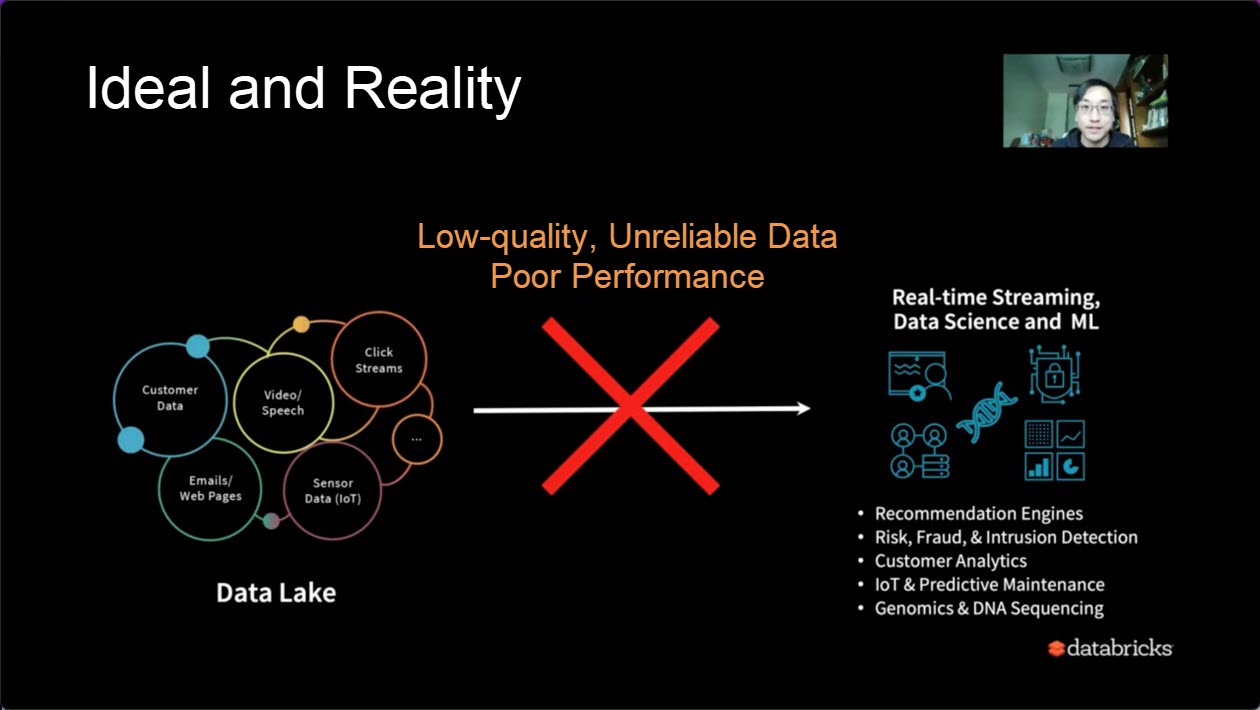

What kind of ideal system do we expect?

However, the reality is:

Delta Lake was created in this context.

Let's take a common user scenario as an example and see how such a problem would be solved without Delta Lake.

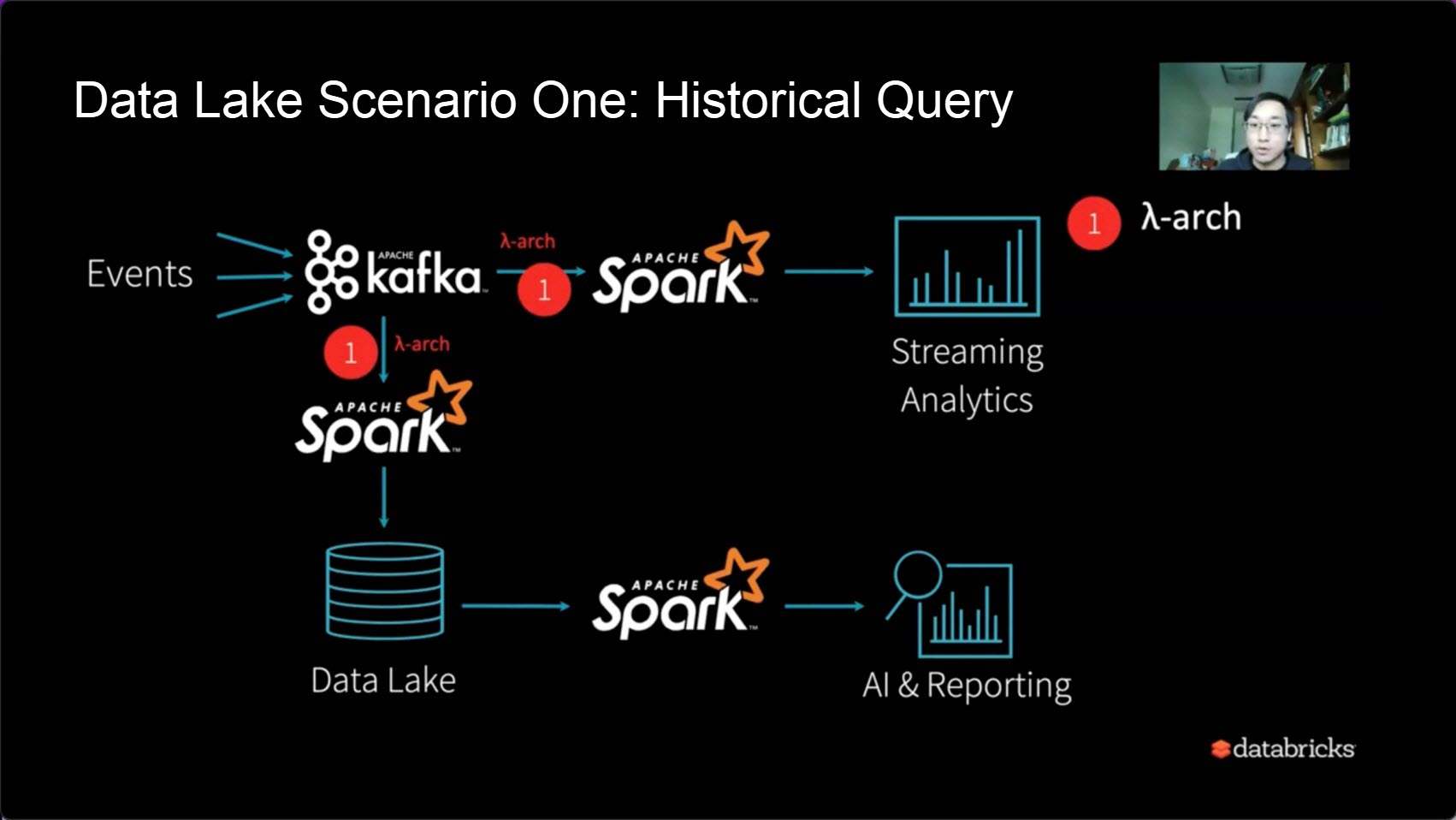

This is probably one of the most common Delta Lake scenarios. Suppose a stream of data flows in from Kafka. We want to process it in real time and, at the same time, periodically write it into the data lake. The output of the whole system also needs to support AI and reporting.

The first step, stream processing, is simple. For example, you can use Apache Spark to run streaming analytics on the real-time stream.

When offline (batch) processing is also required, historical queries can be served by the batch layer of a Lambda architecture. Apache Spark provides a good abstraction here: the same code or API can implement both the streaming and the batch sides of a Lambda architecture.
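As a rough illustration of "one piece of code for both paths", the sketch below applies a single `transform` function to the full history (batch) and to a sequence of micro-batches (streaming) and checks that the results match. In Spark, Structured Streaming plays this role: the same DataFrame operations can run on input from `spark.read` or `spark.readStream`. The plain-Python functions and sample events here are hypothetical stand-ins, not Spark code.

```python
from typing import Iterable, Iterator, List


def transform(events: Iterable[dict]) -> List[dict]:
    """Business logic written once: drop rows without a user, keep needed columns."""
    return [
        {"user": e["user"], "page": e["page"]}
        for e in events
        if e.get("user") is not None
    ]


def run_batch(history: List[dict]) -> List[dict]:
    # Batch path: apply the logic to the full history in one pass.
    return transform(history)


def micro_batches(events: List[dict], size: int) -> Iterator[List[dict]]:
    # Streaming path, modelled here as a sequence of small batches.
    for start in range(0, len(events), size):
        yield events[start : start + size]


def run_streaming(events: List[dict], size: int) -> List[dict]:
    sink: List[dict] = []
    for chunk in micro_batches(events, size):
        sink.extend(transform(chunk))  # the same function, applied incrementally
    return sink


events = [
    {"user": "u1", "page": "/home", "ts": 1},
    {"user": None, "page": "/home", "ts": 2},
    {"user": "u2", "page": "/cart", "ts": 3},
    {"user": "u1", "page": "/cart", "ts": 4},
]
# One piece of logic, two execution modes, identical results.
assert run_batch(events) == run_streaming(events, size=2)
```

The equality holds because `transform` works row by row. Stateful operations such as aggregations need state carried across micro-batches, which a streaming engine such as Structured Streaming manages.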

For historical data, we can also use Spark for SQL analysis and run Spark SQL jobs that feed AI and reporting.

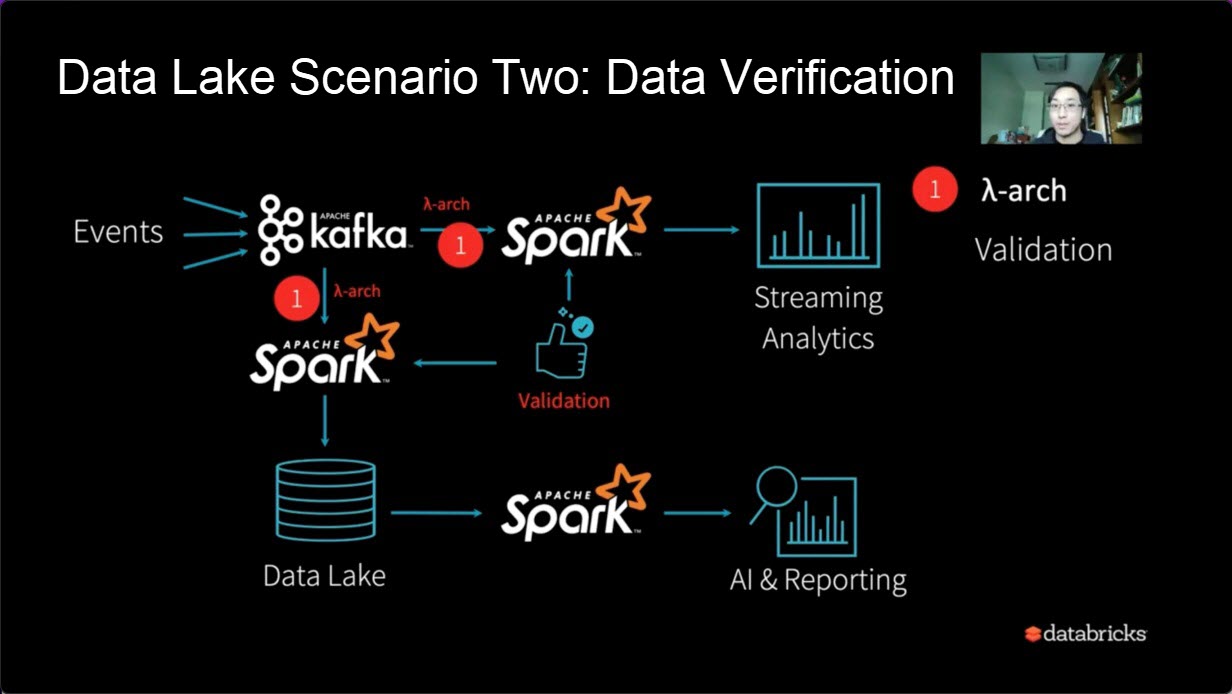

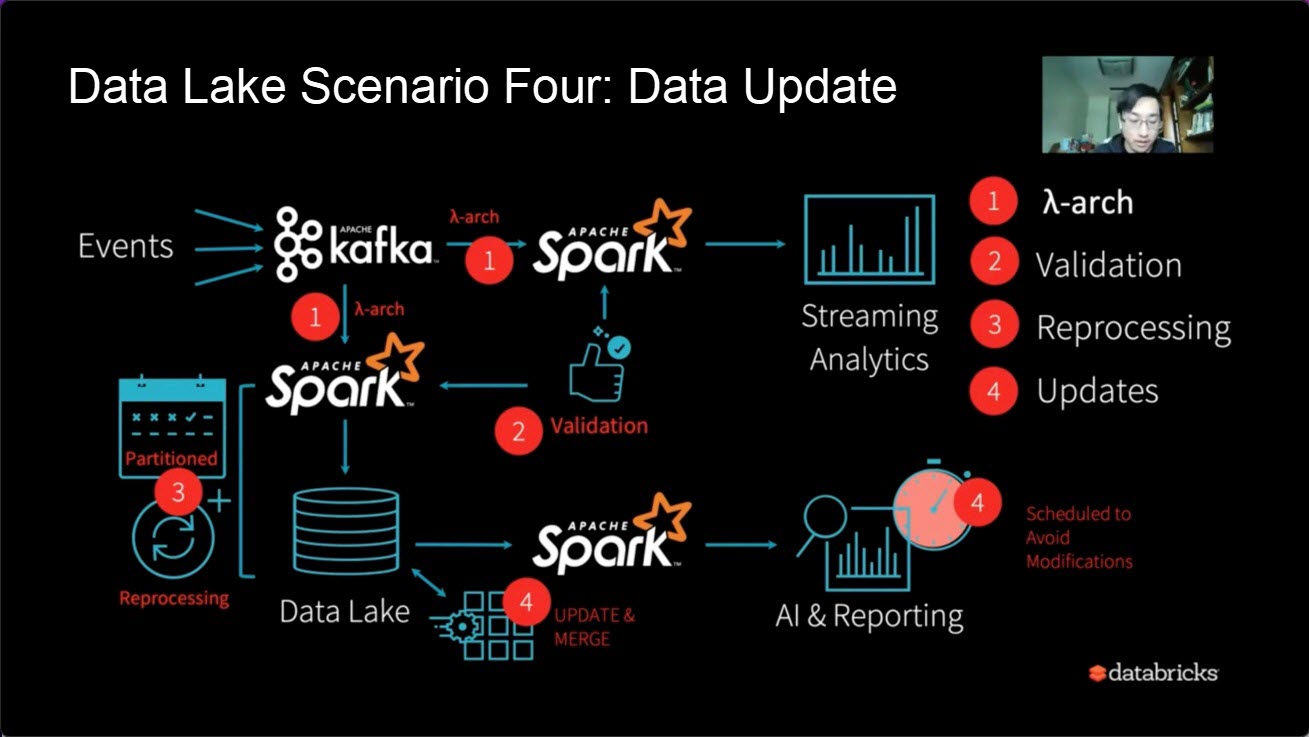

The first problem we need to face is data validation.

If our streaming and batch data live in a Lambda architecture, how can we confirm that the data queried at a given point in time is correct? How far apart are the streaming and batch results? When should the batch data be synchronized with the streaming data?

The Lambda architecture therefore also needs a validation step to confirm that the two paths agree. Validation is indispensable, especially for systems that require accurate analysis (such as user-facing reporting systems).
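One common form of validation is reconciliation: compute the same metric per partition on both paths and flag the partitions where they disagree. The sketch below assumes hypothetical rows with `date` and `amount` fields and a float tolerance; it shows the check itself, not how a particular system schedules it.

```python
from collections import defaultdict


def totals_by_partition(rows, key="date", value="amount"):
    """Sum `value` per partition key (here: per day)."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row[value]
    return dict(totals)


def reconcile(batch_rows, stream_rows, tolerance=1e-9):
    """Return {partition: (batch_total, stream_total)} for partitions that disagree."""
    batch = totals_by_partition(batch_rows)
    stream = totals_by_partition(stream_rows)
    mismatches = {}
    for part in sorted(set(batch) | set(stream)):
        b, s = batch.get(part, 0.0), stream.get(part, 0.0)
        if abs(b - s) > tolerance:
            mismatches[part] = (b, s)
    return mismatches


batch_rows = [
    {"date": "2021-06-01", "amount": 10.0},
    {"date": "2021-06-01", "amount": 5.0},
    {"date": "2021-06-02", "amount": 4.0},
    {"date": "2021-06-02", "amount": 3.0},
]
stream_rows = [
    {"date": "2021-06-01", "amount": 15.0},  # pre-aggregated in the speed layer
    {"date": "2021-06-02", "amount": 4.0},   # a late event (3.0) has not arrived yet
]
print(reconcile(batch_rows, stream_rows))  # {'2021-06-02': (7.0, 4.0)}
```

In a Lambda architecture this check has to run as its own job, which is exactly the extra side branch the next paragraph describes.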

We may need an extra side branch to handle synchronization between streaming and batch data and the corresponding validation.

Suppose that problem is solved. Now, what if one of our partitions contains bad data, for example one day's dirty data that is only discovered and corrected several days later? What do we need to do in that situation?

Generally, we need to stop online queries before repairing the data and resume them afterward. This adds yet another fix to the system architecture, along with the need to go back to past versions of the data. This is how reprocessing came about.
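The sketch below models the repair in a simplified way: every write produces a new immutable table version, a fix overwrites only the affected partition, and the dirty version stays readable. `VersionedTable` and its methods are hypothetical, and it copies the whole table on each write, which a real system avoids. In Delta Lake, the corresponding features are snapshot reads, partition-scoped overwrites (the `replaceWhere` write option), and time travel (for example the `versionAsOf` read option), so readers keep querying a consistent snapshot while the fix is committed.

```python
import copy


class VersionedTable:
    """Hypothetical toy table: every write produces a new immutable version."""

    def __init__(self):
        self._versions = [{}]  # version 0 is empty; each version maps partition -> rows

    @property
    def latest(self) -> int:
        return len(self._versions) - 1

    def read(self, version=None) -> dict:
        """Return a private copy of the requested (default: latest) version."""
        v = self.latest if version is None else version
        return copy.deepcopy(self._versions[v])

    def append(self, partition, rows) -> int:
        new = self.read()
        new.setdefault(partition, []).extend(copy.deepcopy(rows))
        self._versions.append(new)
        return self.latest

    def overwrite_partition(self, partition, rows) -> int:
        """Replace one partition only; all other partitions are carried over."""
        new = self.read()
        new[partition] = copy.deepcopy(list(rows))
        self._versions.append(new)
        return self.latest


t = VersionedTable()
t.append("2021-06-01", [{"id": 1, "amount": 10}])
bad = t.append("2021-06-02", [{"id": 2, "amount": -999}])  # dirty data lands
# Days later, rewrite only the bad partition; no other partition is touched.
fixed = t.overwrite_partition("2021-06-02", [{"id": 2, "amount": 99}])
print(t.read(bad)["2021-06-02"])    # [{'id': 2, 'amount': -999}]
print(t.read(fixed)["2021-06-02"])  # [{'id': 2, 'amount': 99}]
```

Since a reader holds a whole version, queries that started before the fix finish on the old data and new queries see the repaired partition, without stopping online queries.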

Suppose reprocessing is solved as well. A new set of requirements then emerges at the AI and reporting end. For example, one day the business department, management, and partner departments ask whether the schema can be changed: more people are using the data and want to add a UserID dimension. What should be done at this point? We have to add the column to the schema, pause the pipeline, and reprocess the corresponding data.
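Adding a column such as UserID is a schema-evolution problem. The sketch below merges the old and new schemas, refuses to silently change an existing column's type, and fills the new column with `None` for older rows. The function names and the type-name strings are hypothetical. Delta Lake handles the same case with the `mergeSchema` write option; it does not rewrite old files, and the new column simply reads as null for older data, whereas this toy materializes the `None` values.

```python
from typing import Dict, List, Tuple

Schema = Dict[str, str]  # column name -> type name (hypothetical representation)
Row = Dict[str, object]


def merge_schema(current: Schema, incoming: Schema) -> Schema:
    """Union of columns; an existing column may not silently change type."""
    merged = dict(current)
    for col, typ in incoming.items():
        if col in merged and merged[col] != typ:
            raise TypeError(f"column {col!r}: {merged[col]} vs {typ}")
        merged.setdefault(col, typ)
    return merged


def append_with_schema_evolution(
    schema: Schema, rows: List[Row], new_rows: List[Row], new_schema: Schema
) -> Tuple[Schema, List[Row]]:
    """Append new_rows under a widened schema; older rows get None for new columns."""
    merged = merge_schema(schema, new_schema)
    aligned = [{col: r.get(col) for col in merged} for r in rows + new_rows]
    return merged, aligned


schema = {"date": "string", "amount": "double"}
rows = [{"date": "2021-06-01", "amount": 10.0}]
schema, rows = append_with_schema_evolution(
    schema,
    rows,
    new_rows=[{"date": "2021-06-02", "amount": 7.0, "UserID": "u42"}],
    new_schema={"date": "string", "amount": "double", "UserID": "string"},
)
print(list(schema))  # ['date', 'amount', 'UserID']
print(rows[0])       # {'date': '2021-06-01', 'amount': 10.0, 'UserID': None}
```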

So every solution brings new problems. If they are solved case by case, the system keeps getting patched, and what should be a simple, integrated requirement becomes redundant and complex.

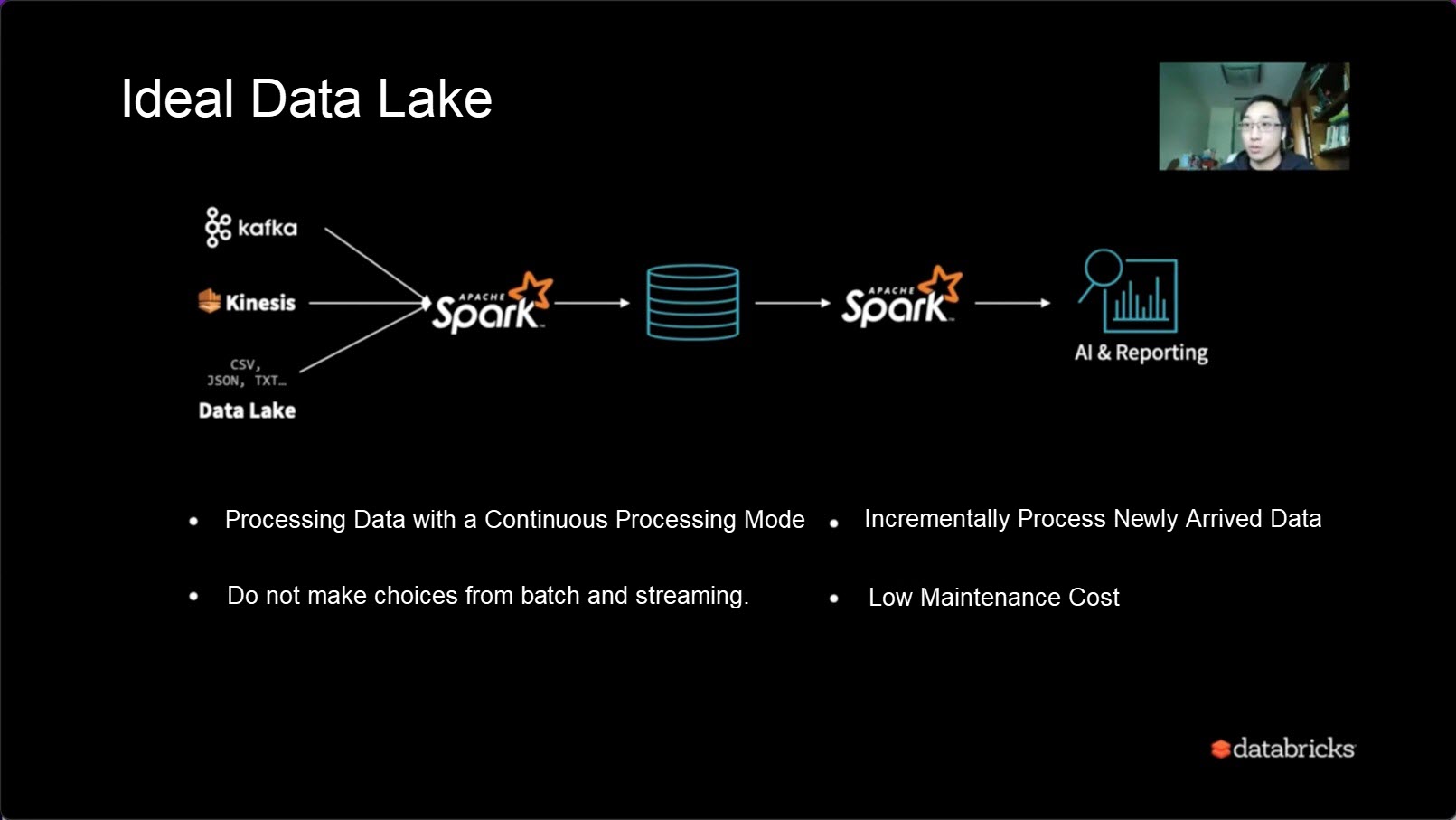

What should the ideal Delta Lake look like?

The systems at the entry and exit points each do their own job, and the only core is the Delta Lake layer, on which all the data processing and the entire data warehousing process can be built.

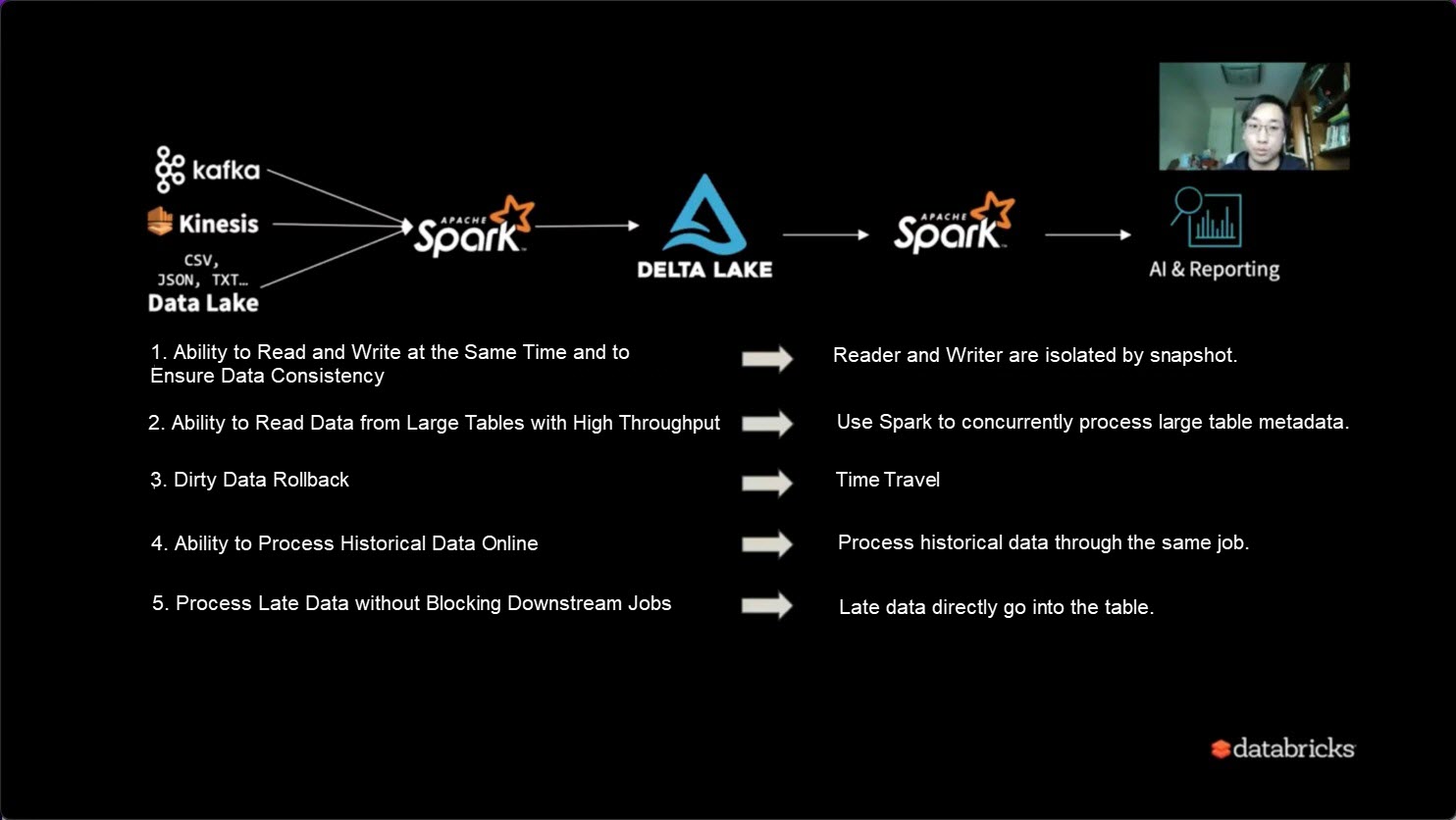

Let's take a look at how this series of problems is solved in Delta Lake:

With the preceding five points addressed, we can replace the Lambda architecture with Delta Lake, or use a Delta Lake architecture for a range of batch and streaming systems.

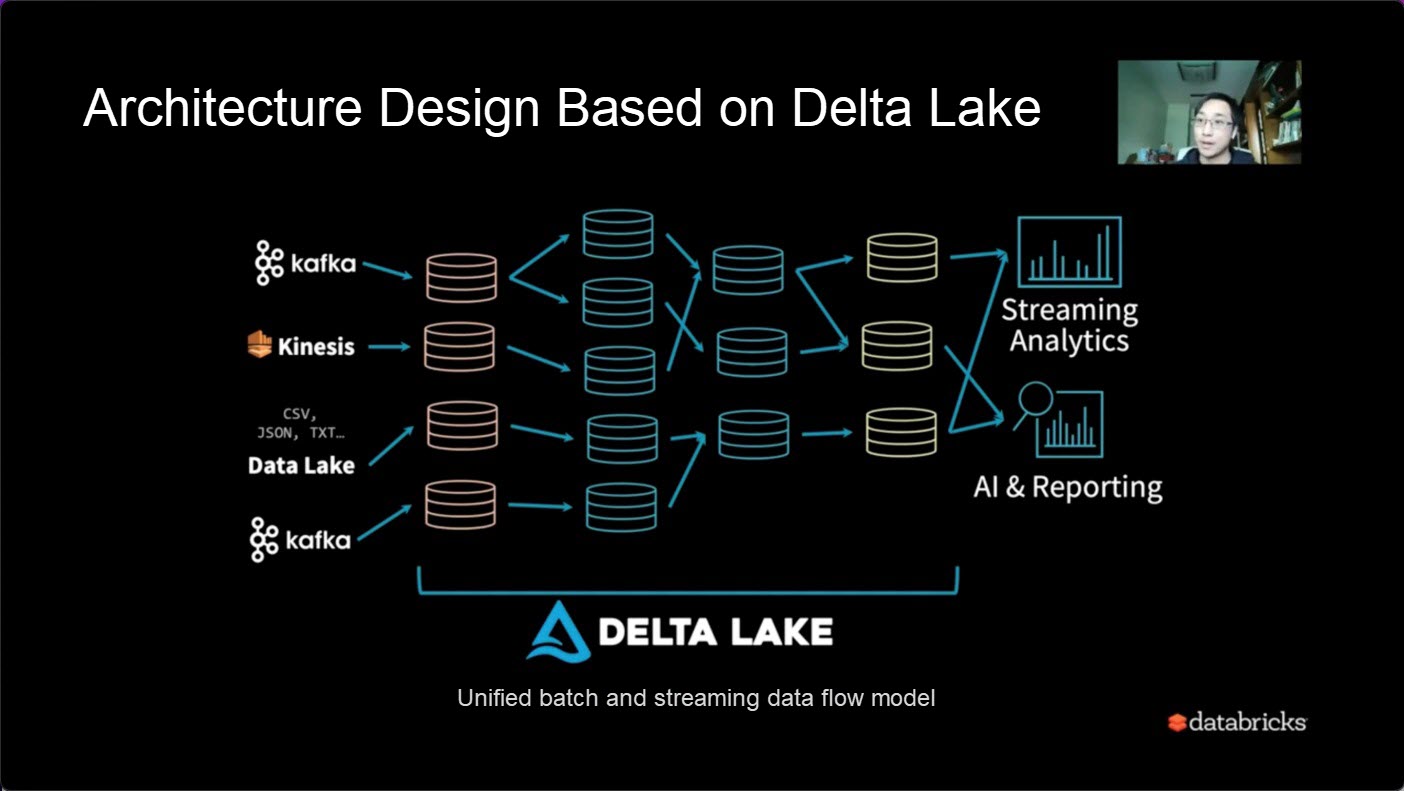

What does a Delta Lake-based architecture look like?

In a Delta Lake-based architecture, the basic unit at the lowest level, for both data and metadata, is the table. We can organize data layer by layer into basic data tables, intermediate data tables, and high-quality data tables. We only need to track each table's upstream and downstream tables, and the dependencies between them become simpler and cleaner, so we can focus on organizing data at the business level. In this sense, Delta Lake provides a unified model for continuous batch and streaming data flow.
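The layered flow can be sketched as three tables, with each downstream layer processing only the rows added upstream since its last run (a simplified stand-in for a streaming checkpoint). Databricks often calls these layers bronze, silver, and gold. `LayeredPipeline`, its offset fields, and the page-view aggregate are hypothetical choices for illustration, not a Delta Lake API.

```python
import json


class LayeredPipeline:
    """Hypothetical toy flow: raw (bronze) -> cleaned (silver) -> aggregated (gold)."""

    def __init__(self):
        self.bronze = []  # basic table: raw records as ingested (JSON strings)
        self.silver = []  # intermediate table: parsed, validated rows
        self.gold = {}    # high-quality table: page -> view count
        self._silver_offset = 0  # number of bronze rows silver has consumed
        self._gold_offset = 0    # number of silver rows gold has consumed

    def ingest(self, raw_lines):
        self.bronze.extend(raw_lines)

    def refresh_silver(self):
        # Process only bronze rows that arrived since the last refresh.
        for line in self.bronze[self._silver_offset:]:
            try:
                rec = json.loads(line)
            except json.JSONDecodeError:
                continue  # a real pipeline might route this to a quarantine table
            if isinstance(rec, dict) and "page" in rec:
                self.silver.append({"page": rec["page"], "user": rec.get("user")})
        self._silver_offset = len(self.bronze)

    def refresh_gold(self):
        # Fold only the new silver rows into the running aggregate.
        for row in self.silver[self._gold_offset:]:
            self.gold[row["page"]] = self.gold.get(row["page"], 0) + 1
        self._gold_offset = len(self.silver)


p = LayeredPipeline()
p.ingest(['{"page": "/home", "user": "u1"}', "not json", '{"page": "/cart"}'])
p.refresh_silver()
p.refresh_gold()
p.ingest(['{"page": "/home", "user": "u2"}'])
p.refresh_silver()  # only the new bronze row is processed
p.refresh_gold()
print(p.gold)  # {'/home': 2, '/cart': 1}
```

Each layer depends only on the table directly upstream of it, which is what keeps the dependencies simple: the same refresh logic works whether it runs once a day or continuously.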
