By Li Jiexing, Senior Software Engineer of Databrick

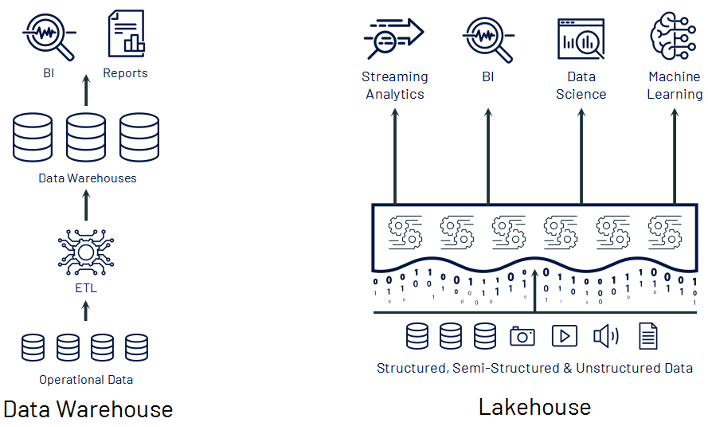

The Data Management system has evolved from the early Data Warehouse to Lakehouse. Lakehouse can store structured, semi-structured, and unstructured data at the same time. It supports scenarios of streaming analytics, BI, data science, and machine analysis.

The data warehouse architecture can control the storage and query of data, so the query system can be designed at the same time. The data storage structure of the query system can be adapted to achieve superior query performance.

Under the Lakehouse architecture, data is stored in an open storage structure (such as Parquet format), so more systems can access data easily. However, the open storage format is not necessarily suitable for query operations, and the query system does not have enough statistical data to achieve an efficient query.

How does Lakehouse achieve efficient query performance with an open storage format?

Databricks Lakehouse has designed a new search engine with excellent SQL performance in both the Data Lake storage system and file format to solve these problems.

Its SQL performance optimization is achieved through the following techniques:

A. High-Speed Cache: Putting hot data into the high-speed cache

B. Establishment of Auxiliary Data Structures such as the collection of statistical data and indexing

C. Data Layout Optimization: Minimize I/O

D. Dynamic File Pruning: Minimize I/O

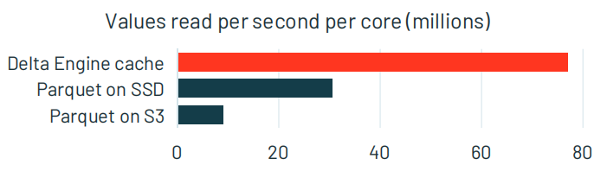

Most workloads focus on accessing some hot data. Data Warehouse often uses SSD and memory as caches to speed up queries of hot data.

Lakehouse can cache the hot data with the same optimized data structure as a data warehouse to improve query performance.

As shown in the figure, using SSD as a cache in Databricks can increase the data reading speed by more than three times and using Delta engine can increase it by more than seven times.

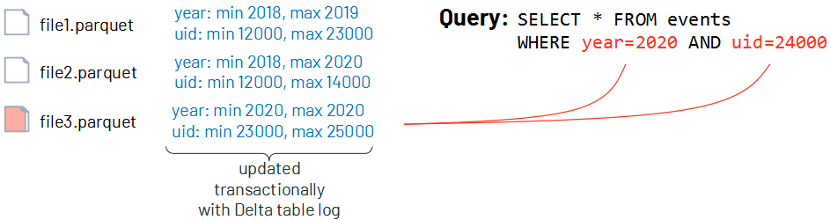

Even if the data is stored in Parquet format, many additional data structures can be established to speed up queries and maintain additional data.

In Parquet files, the minimum/maximum statistics for each data file in the table are maintained, which helps skip some irrelevant data when performing queries.

As shown in the following figure, the query conditions are year=2020 and uid=24000. With the minimum/maximum statistics, the query information only exists in file3, so the reading of file1 and file2 can be skipped.

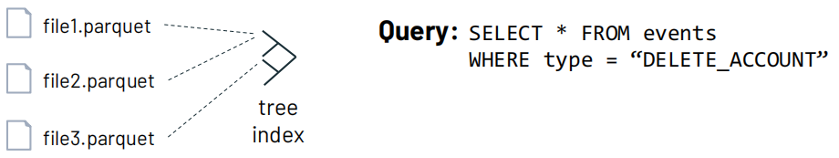

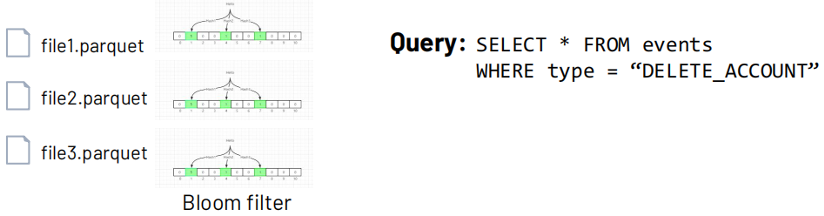

As shown in the following figure, if the query condition is type="DELETE_ACCOUNT" - you can use the index established on the type to go to the corresponding data and avoid reading irrelevant data.

Bloom Filter can be established for each file. Bloom Filter can quickly determine whether the table file contains the data to be queried. If not, the file can be skipped. It reduces the amount of scanned data and improves query performance.

Bloom Filter Principle:

Bloom Filter uses one or more hash tables to calculate the hash value of the data record in each file. Its starting value is 0, which is 1 when the hash value is mapped to the corresponding position, so the position with the value of 0 can be skipped when querying. It is also possible that all the corresponding positions are 1. At this time, the data may not be in this file (false positive). You can control the false positive rate by controlling the number of hash functions and the size of the Bloom Filter.

Frequent MERGE, UPDATE, and INSERT operations executed in Delta Lake may generate many small files. These small files will reduce the reading performance of the system and increase the overhead of metadata operations.

Different techniques are used in Lakehouse to reduce small files.

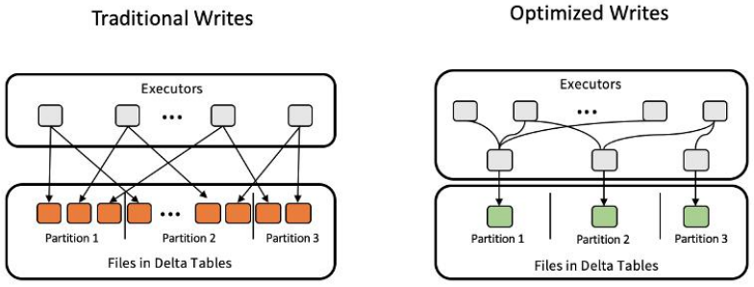

As shown in the following figure, in the open-source Spark, when each executor writes data to a partition, it creates a table file for writing. Then, many small files are generated in one partition.

Databricks optimizes the writing process of Delta tables. A special executor is used to merge writing to the partition by other executors for each partition. This avoids the generation of small files.

After data is written to the Delta table each time, the system checks the number of table files in the Delta table. If the number of small files (whose size is smaller than 128MB) in the Delta table reaches the threshold, the system merges the small files in the Delta table into a large file.

In addition to automatic merging, Databricks provides the Optimize command to enable users to merge small files manually and optimize the table structure to make the structure of table files more compact.

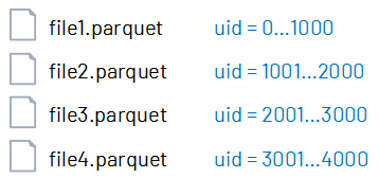

The query run time mainly depends on the amount of data accessed. If the Parquet format is used, you can reduce run time by optimizing the data layout within the table.

Sort the data stored in table files and store a certain amount of data in each table file. In the following figure, file1 stores uid=0...1000, and file2 stores uid=1001...2000. As such, irrelevant table files can be skipped as needed during queries to reduce the number of file scans.

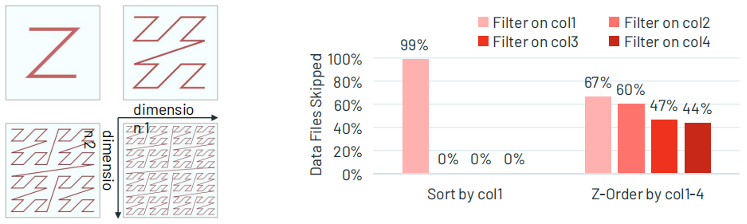

In actual queries, some queries need to look at the data of column1 in a certain range, and some queries need to look at the data of column2 in a certain range, or more. At this time, it is not enough to sort column1.

Z-Ordering can store the associated information in the same set of files in multiple dimensions (like col 1-4 in the following figure) to reduce unnecessary file reading.

Dynamic file pruning is abbreviated as DFP. Let's take the following query as an example.

SELECT sum(ss_quantity)

FROM store_sales

JOIN item ON ss_item_sk = i_item_sk

WHERE i_item_id ='AAAAAAAAICAAAAAA'Query Description: Connect the store_sales table with the item table if item_sk values are equal and item_id is equal to one fixed value.

DFP Disabled

As shown in the preceding figure, a full table scan is performed on store_sales and then the rows in the filtered item table are joined. Although only more than 46,000 data records are returned, over 8.6 billion data records in the store_sales table are scanned.

DFP Enabled

After DFP is enabled, the item table is scanned to query i_item_id='AAAAAAAAICAAAAAA 'data rows in the table items. Then, the i_item_sk values of these data rows are used as the query conditions for the ss_item_sk of the table store_sales and are filtered in the SCAN stage of the table store_sales to skip many irrelevant data. Only more than 6.6 million store_sales entries are scanned, which is nearly 99% less than when DFP is not enabled.

From the results, after enabling DFP, the query achieved a 10-fold performance improvement.

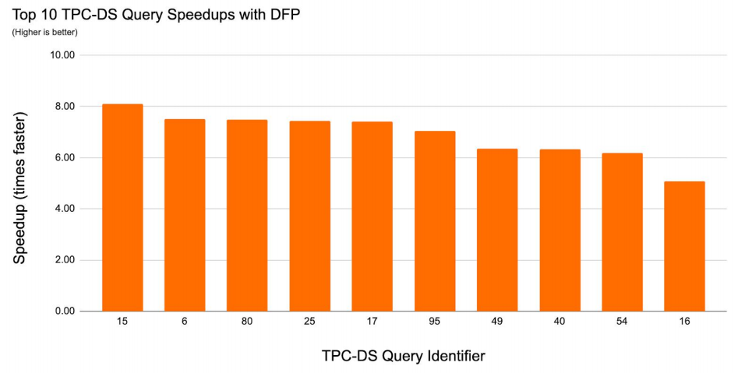

This feature was tested on the TPC-DS (please see the figure below). The test found that after DFP was enabled, the TPC-DS query speed increased 4.5 to 8 times.

The preceding optimization technologies are used to work together, so data reading in Lakehouse is performed in the high-speed cache. An auxiliary data structure is established to reduce I/O for non-cached data reading through data layout optimization, and the Lakehouse engine can provide query performance similar to a data warehouse.

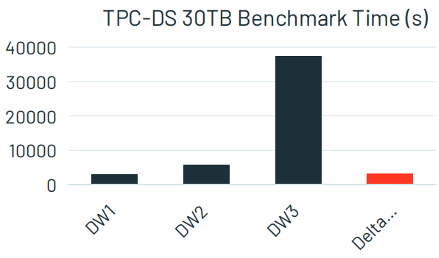

As shown in the following figure, the query performance of Delta Engine is similar to DW1 and exceeds DW2 and DW3.

Delta Clones is an important technology of Lakehouse, which can efficiently copy large data sets and support different needs of testing, sharing, and Machine Learning.

Clone equals to copy. It is a copy of the original data at a given point in time.

It has the same metadata as the source table: the same table structure, constraints, column descriptions, statistics, and partitions.

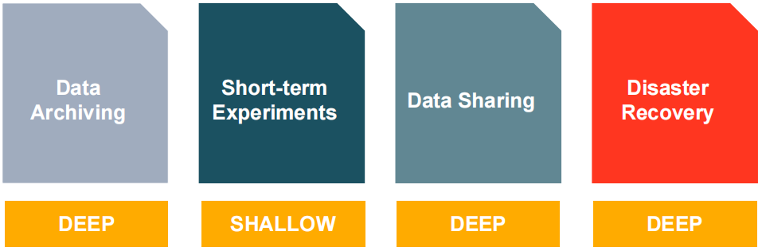

There are two cloning methods: shallow and deep.

Deep clone will completely copy the metadata and data files of the source table and generate a new and independent table.

Run the CREATE TABLE statement in SQL:

# SQL

CREATE TABLE delta.`path/to/copy` CLONE customersRun the DeltaTable statement in Python and Scala statements:

# Python and Scala

DeltaTable

.forName("park", "customers")

.clone("path/to/copy")Shallow cloning only copies the metadata of the table to be cloned. The data files of the table itself are not copied.

Similar to the deep clone statement, except that the SHALLOW CLONE statement is added in SQL. isShallow=true is added in Python and Scala.

# SQL

CREATE TABLE delta.`path/to/copy` SHALLOW CLONE customers# Python and Scala

DeltaTable

.forName("spark", "customers")

.clone("path/to/copy", isShallow=true)There are many applicable scenarios for cloning, such as data storage, short-term experiments, data sharing, and disaster recovery. Deep clone is required in other scenarios except for short-term experiments that use shallow clone.

Databricks Data Insight Open Course - An Introduction to Delta Lake (Open-Source Edition)

62 posts | 6 followers

FollowAlibaba EMR - September 23, 2022

Alibaba EMR - August 22, 2022

Alibaba Cloud Community - September 30, 2022

Alibaba EMR - September 2, 2022

Alibaba EMR - October 12, 2021

Alibaba EMR - May 16, 2022

62 posts | 6 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn More Personalized Content Recommendation Solution

Personalized Content Recommendation Solution

Help media companies build a discovery service for their customers to find the most appropriate content.

Learn MoreMore Posts by Alibaba EMR